Node.js crawler: how to fix frequent ECONNRESET or socket hang up errors from the server

I've recently been working on a very large crawler. It sends close to 10,000 HTTP requests in total, all to the same URL; only the request body differs.

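During testing, errors such as `request error: read ECONNRESET` and `request error: socket hang up` kept appearing. The logs showed that the failing requests had a different body each time, and accessing the same resource the normal way (through the mobile app) never failed. I had already replaced `forEach` with `for ... of` + `await` to limit the number of concurrent async requests, yet every run of these 10,000 requests still produced four or five hundred such errors. My guess was that the server has some protection mechanism that rejects too many requests from the same IP. After some searching, I found the solution at the following link: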

https://www.gregjs.com/javascript/2015/how-to-scrape-the-web-gently-with-node-js/
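The `forEach` versus `for ... of` + `await` change mentioned above can be illustrated with a minimal sketch. It assumes a hypothetical `createFakeClient` that simulates a request with a timer and records how many calls overlap, so no real network access is involved. `forEach` does not wait for the promise returned by an async callback, so every request starts at once, while `for ... of` + `await` starts the next request only after the previous one has finished.

```javascript
// Hypothetical stand-in for an HTTP client: each call resolves after `delayMs`
// and the client remembers the highest number of calls in flight at once.
function createFakeClient(delayMs = 10) {
  let inFlight = 0;
  let peak = 0;
  return {
    request(body) {
      inFlight++;
      peak = Math.max(peak, inFlight);
      return new Promise((resolve) => {
        setTimeout(() => {
          inFlight--;
          resolve(`response for ${body}`);
        }, delayMs);
      });
    },
    peak: () => peak,
  };
}

// forEach ignores the promise returned by the async callback,
// so every request is started immediately.
function sendWithForEach(client, bodies) {
  bodies.forEach(async (body) => {
    await client.request(body);
  });
}

// for...of + await waits for each request before starting the next one.
async function sendSequentially(client, bodies) {
  const results = [];
  for (const body of bodies) {
    results.push(await client.request(body));
  }
  return results;
}
```

With four bodies, `sendWithForEach` reaches a peak of 4 overlapping calls, while `sendSequentially` never exceeds 1.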

Limiting maximum concurrent sockets in Node

That is, limit the maximum number of concurrent sockets:

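```javascript
var http = require('http');
var https = require('https');
// Cap the number of concurrent sockets per host on Node's default agents;
// requests beyond the cap are queued until a socket becomes free.
http.globalAgent.maxSockets = 1;
https.globalAgent.maxSockets = 1;
```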
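As a rough check of what `maxSockets` does, the sketch below starts a throwaway local server that counts overlapping requests, then fires several requests at once through a dedicated `http.Agent` instead of the global one. The helper name `measurePeakConcurrency` and the 20 ms response delay are illustrative assumptions. Because the agent opens at most `maxSockets` sockets to one host and queues the rest, the server should never see more than `maxSockets` requests at the same time.

```javascript
const http = require('http');

// Sends `count` GET requests in parallel through an agent capped at `maxSockets`
// and resolves with the highest number of requests the server saw at once.
function measurePeakConcurrency(maxSockets, count) {
  return new Promise((resolve, reject) => {
    let active = 0;
    let peak = 0;
    const server = http.createServer((req, res) => {
      active++;
      peak = Math.max(peak, active);
      // Hold each response briefly so overlapping requests would be visible.
      setTimeout(() => {
        active--;
        res.end('ok');
      }, 20);
    });

    server.listen(0, '127.0.0.1', () => {
      const { port } = server.address();
      const agent = new http.Agent({ maxSockets });
      let done = 0;
      for (let i = 0; i < count; i++) {
        http.get({ host: '127.0.0.1', port, path: `/?i=${i}`, agent }, (res) => {
          res.resume(); // drain the body so the socket is released
          res.on('end', () => {
            done++;
            if (done === count) {
              agent.destroy();
              server.close(() => resolve(peak));
            }
          });
        }).on('error', reject);
      }
    });
  });
}
```

For example, `measurePeakConcurrency(1, 5)` should resolve to 1. Using a dedicated agent keeps the limit local to the crawler instead of changing the defaults for every other module in the same process.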

I was using the request package, though, so I checked its npm documentation (https://www.npmjs.com/package/request):

`pool` - an object describing which agents to use for the request. If this option is omitted the request will use the global agent (as long as your options allow for it). Otherwise, request will search the pool for your custom agent. If no custom agent is found, a new agent will be created and added to the pool. Note: `pool` is used only when the `agent` option is not specified.

- A `maxSockets` property can also be provided on the `pool` object to set the max number of sockets for all agents created (ex: `pool: {maxSockets: Infinity}`).
- Note that if you are sending multiple requests in a loop and creating multiple new `pool` objects, `maxSockets` will not work as intended. To work around this, either use `request.defaults` with your pool options or create the pool object with the `maxSockets` property outside of the loop.
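The pitfall in the last note can be reproduced with Node's built-in `http.Agent`, since, per the docs above, a request pool is a collection of agents. This is an analogy using the core `http` module, not the request package itself, and `peakWith`, `makeSharedAgentFactory`, and `makePerRequestAgentFactory` are hypothetical names. A `maxSockets` limit only applies within one agent, so creating a new agent on every loop iteration gives each request its own fresh limit.

```javascript
const http = require('http');

// Starts a local server that counts overlapping requests, sends `count` requests
// using the agent returned by `getAgent()`, and resolves with the peak overlap.
function peakWith(getAgent, count) {
  return new Promise((resolve, reject) => {
    let active = 0;
    let peak = 0;
    const used = new Set();
    const server = http.createServer((req, res) => {
      active++;
      peak = Math.max(peak, active);
      // Hold each response so that overlapping requests are observable.
      setTimeout(() => {
        active--;
        res.end('ok');
      }, 100);
    });
    server.listen(0, '127.0.0.1', () => {
      const { port } = server.address();
      let done = 0;
      for (let i = 0; i < count; i++) {
        const agent = getAgent(); // called once per loop iteration
        used.add(agent);
        http.get({ host: '127.0.0.1', port, path: '/', agent }, (res) => {
          res.resume();
          res.on('end', () => {
            done++;
            if (done === count) {
              used.forEach((a) => a.destroy());
              server.close(() => resolve(peak));
            }
          });
        }).on('error', reject);
      }
    });
  });
}

// Works as intended: one agent created outside the loop and reused.
function makeSharedAgentFactory(maxSockets) {
  const agent = new http.Agent({ maxSockets });
  return () => agent;
}

// Pitfall: a brand-new agent, with its own limit, on every iteration.
function makePerRequestAgentFactory(maxSockets) {
  return () => new http.Agent({ maxSockets });
}
```

With a shared agent and `maxSockets: 1`, the server sees one request at a time; with a new agent per iteration, the same limit of 1 no longer prevents the requests from overlapping.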

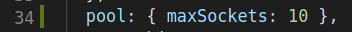

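So I added a `pool` object with a `maxSockets` limit (`pool: {maxSockets: ...}`) to the request options, and that solved the problem.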


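Of course, the right maximum has to be found through repeated testing: set it too low and the crawler takes too long; set it too high and the earlier errors may come back.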
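To experiment with different limits, the same idea can also be applied on the application side. The sketch below is a generic concurrency limiter, not something taken from the linked article or the request package. `mapWithLimit` and `timeWithLimit` are hypothetical helpers: the first keeps at most `limit` calls to `worker` in flight, and the second times one full run so a few candidate values can be compared.

```javascript
// Hypothetical helper: runs `worker` on every item with at most `limit`
// calls in flight at once, and returns the results in input order.
async function mapWithLimit(items, limit, worker) {
  const results = new Array(items.length);
  let next = 0;

  // Each lane repeatedly claims the next unprocessed index until none remain.
  // Claiming happens synchronously, so two lanes never take the same index.
  async function lane() {
    while (next < items.length) {
      const index = next++;
      results[index] = await worker(items[index], index);
    }
  }

  const laneCount = Math.max(1, Math.min(limit, items.length));
  await Promise.all(Array.from({ length: laneCount }, () => lane()));
  return results;
}

// Measures how long one full run takes with a given limit, in milliseconds.
async function timeWithLimit(items, limit, worker) {
  const start = Date.now();
  await mapWithLimit(items, limit, worker);
  return Date.now() - start;
}
```

Timing a run at a few limits against the real request function gives a rough picture of the speed side of the trade-off; the error rate still has to be checked separately for each value.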