PyTorch Hands-On Learning (1): Implementing Linear Regression with PyTorch

Course: 《PyTorch深度学习实践》 (PyTorch Deep Learning Practice), complete series, Bilibili

Episode P5: Implementing linear regression with PyTorch

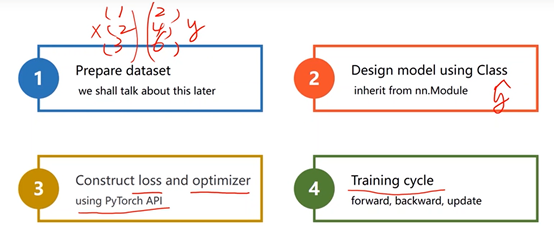

The four main steps of building a model

I. Prepare dataset

For mini-batch training, x and y must be matrices: 2-D tensors in which each row is one sample.

```python
import torch

## Prepare Dataset: mini-batch, X and Y are 3x1 tensors
x_data = torch.Tensor([[1.0], [2.0], [3.0]])
y_data = torch.Tensor([[2.0], [4.0], [6.0]])
```

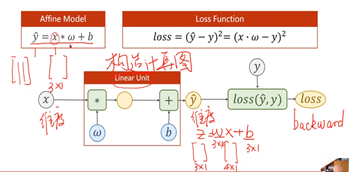
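The following minimal sketch shows what "x and y must be matrices" means in practice. It assumes the data start out as a flat Python list, and the name `xs` is illustrative only. `view(-1, 1)` turns three scalars into a 3×1 tensor with one sample per row. `torch.nn.Linear(1, 1)` then maps the whole mini-batch in a single call.

```python
import torch

# A flat list of 3 samples gives a 1-D tensor of shape (3,)
xs = torch.tensor([1.0, 2.0, 3.0])
# view(-1, 1) reshapes it into a 3x1 matrix: 3 samples (rows), 1 feature (column)
x_data = xs.view(-1, 1)
print(xs.shape, x_data.shape)  # torch.Size([3]) torch.Size([3, 1])

# Each row is one sample, so a 1 -> 1 linear layer handles the whole batch at once
linear = torch.nn.Linear(1, 1)
out = linear(x_data)
print(out.shape)  # torch.Size([3, 1])
```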

II. Design model

1. The key step is building the computational graph

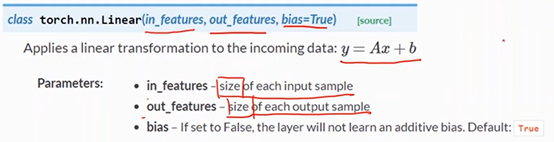

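```python
## Design Model
## Define a class that inherits from torch.nn.Module
class LinearModel(torch.nn.Module):
    ## Constructor: initialize the object
    def __init__(self):
        ## Call the parent-class constructor via super
        super(LinearModel, self).__init__()
        ## Build a Linear unit holding two tensors, weight and bias;
        ## (1, 1) = (in_features, out_features), which sets the shape of w
        self.linear = torch.nn.Linear(1, 1)

    ## Forward pass (feed-forward computation)
    def forward(self, x):
        ## w*x + b
        y_pred = self.linear(x)
        return y_pred

model = LinearModel()
```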
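This sketch checks that `forward` really computes `w*x + b`. It rebuilds the same `LinearModel` and compares `model(x)` with the product written out by hand. The fixed random seed is an assumption added only so that runs are repeatable. The sketch also shows that `nn.Linear(1, 1)` registers exactly two parameters. Finally, the output carries a `grad_fn`, meaning PyTorch recorded the computational graph that `backward()` will later traverse.

```python
import torch

class LinearModel(torch.nn.Module):
    """Same model as above: a single 1 -> 1 linear layer."""
    def __init__(self):
        super().__init__()
        self.linear = torch.nn.Linear(1, 1)

    def forward(self, x):
        return self.linear(x)

torch.manual_seed(0)  # only so the random initial w and b are repeatable
model = LinearModel()
x = torch.tensor([[1.0], [2.0], [3.0]])

# model(x) goes through nn.Module.__call__, which runs forward(x)
y_pred = model(x)

# The same computation by hand: x @ W^T + b
w, b = model.linear.weight, model.linear.bias
manual = x @ w.t() + b
same = torch.allclose(y_pred.detach(), manual.detach())
print(same)  # True

# nn.Linear(1, 1) registers exactly two parameters
param_names = [name for name, _ in model.named_parameters()]
print(param_names)  # ['linear.weight', 'linear.bias']

# y_pred records how it was computed: this is the graph backward() will traverse
print(y_pred.grad_fn is not None)  # True
```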

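2. Setting the shape of w: (number of neurons in the next layer) × (number of neurons in the previous layer). In `torch.nn.Linear(in_features, out_features)`, `weight` therefore has shape `(out_features, in_features)`.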

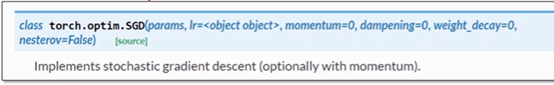
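The shape rule can be checked directly on a layer with more than one neuron. The layer sizes 3 and 2 and the batch size 4 below are arbitrary illustrative choices:

```python
import torch

# Illustrative layer: 3 neurons in the previous layer -> 2 neurons in the next layer
layer = torch.nn.Linear(in_features=3, out_features=2)
print(layer.weight.shape)  # torch.Size([2, 3]): (next layer) x (previous layer)
print(layer.bias.shape)    # torch.Size([2]): one bias per output neuron

x = torch.randn(4, 3)      # mini-batch: 4 samples, 3 features each
y = layer(x)               # computes x @ weight.T + bias
print(y.shape)             # torch.Size([4, 2])
```

For the article's `Linear(1, 1)` both numbers are 1, so w is a 1×1 matrix.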

III. Construct Loss and Optimizer

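```python
## Construct Loss and Optimizer
## Loss function, called with y_pred and y.
## reduction='sum' replaces the deprecated size_average=False (sum, not mean, of squared errors)
criterion = torch.nn.MSELoss(reduction='sum')
## Optimizer: model.parameters() collects all of the model's parameters; lr is the learning rate
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)
```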

1. Loss function

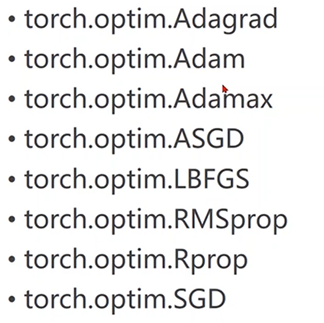
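`size_average=False` is written `reduction='sum'` in current PyTorch. With it, the loss is the sum of squared errors over the mini-batch, $\text{loss} = \sum_{n=1}^{N} (\hat{y}_n - y_n)^2$. The default `reduction='mean'` divides that sum by the number of elements N. The small made-up predictions below make the difference visible:

```python
import torch

y_pred = torch.tensor([[1.0], [3.0], [7.0]])  # made-up predictions
y = torch.tensor([[2.0], [4.0], [6.0]])

# Squared errors: (1-2)^2, (3-4)^2, (7-6)^2 = 1, 1, 1
sum_loss = torch.nn.MSELoss(reduction='sum')(y_pred, y)
mean_loss = torch.nn.MSELoss(reduction='mean')(y_pred, y)
manual_sum = ((y_pred - y) ** 2).sum()

print(sum_loss.item(), mean_loss.item(), manual_sum.item())  # 3.0 1.0 3.0
```

The mean version scales the gradient by 1/N. For plain SGD, switching from sum to mean therefore acts like dividing the learning rate by N.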

2. Optimizer

Different optimizers can be swapped in and compared on the same task.

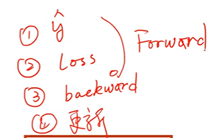
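One way to run such a comparison is sketched below, under several assumptions. Every optimizer gets the same fixed seed, the same 100 epochs and the same `lr=0.01`. That learning rate is an untuned choice, so the numbers say little about which optimizer is better in general. `LBFGS` is left out because its `step()` requires a closure that re-evaluates the loss. The helper name `final_loss` is hypothetical.

```python
import torch

# Same toy data as in the article: y = 2x
x_data = torch.tensor([[1.0], [2.0], [3.0]])
y_data = torch.tensor([[2.0], [4.0], [6.0]])

def final_loss(optimizer_cls, epochs=100, lr=0.01):
    """Train a fresh 1 -> 1 linear model with optimizer_cls; return the loss after training."""
    torch.manual_seed(0)  # identical initial w and b for every optimizer
    model = torch.nn.Linear(1, 1)
    criterion = torch.nn.MSELoss(reduction='sum')
    optimizer = optimizer_cls(model.parameters(), lr=lr)
    for _ in range(epochs):
        loss = criterion(model(x_data), y_data)
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
    with torch.no_grad():  # evaluate once more after the last update
        return criterion(model(x_data), y_data).item()

candidates = {
    'SGD': torch.optim.SGD,
    'Adam': torch.optim.Adam,
    'Adamax': torch.optim.Adamax,
    'Adagrad': torch.optim.Adagrad,
    'ASGD': torch.optim.ASGD,
    'RMSprop': torch.optim.RMSprop,
    'Rprop': torch.optim.Rprop,
}
results = {name: final_loss(cls) for name, cls in candidates.items()}
for name, loss in sorted(results.items(), key=lambda kv: kv[1]):
    print(f'{name:8s} loss after 100 epochs = {loss:.6f}')
```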

IV. Training cycle

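```python
## Training cycle
for epoch in range(100):
    y_pred = model(x_data)            # forward pass
    loss = criterion(y_pred, y_data)  # compute the loss
    print(epoch, loss.item())
    ## Zero the gradients (backward() accumulates into .grad)
    optimizer.zero_grad()
    ## Backward pass
    loss.backward()
    ## Update the parameters
    optimizer.step()
```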
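This sketch shows what `optimizer.step()` and `optimizer.zero_grad()` do in the loop. It first compares one `SGD` step against the plain update rule p ← p − lr · ∂loss/∂p applied by hand. It then shows why the gradients must be zeroed: `backward()` adds into `.grad` rather than overwriting it. The equivalence assumes `torch.optim.SGD` with its defaults (no momentum, no weight decay).

```python
import torch

torch.manual_seed(0)
x_data = torch.tensor([[1.0], [2.0], [3.0]])
y_data = torch.tensor([[2.0], [4.0], [6.0]])

model = torch.nn.Linear(1, 1)
criterion = torch.nn.MSELoss(reduction='sum')
lr = 0.01
optimizer = torch.optim.SGD(model.parameters(), lr=lr)

# One forward/backward pass fills weight.grad and bias.grad
optimizer.zero_grad()
criterion(model(x_data), y_data).backward()

# Plain SGD update done by hand on copies: p_new = p - lr * p.grad
expected = [p.detach() - lr * p.grad for p in model.parameters()]
optimizer.step()  # updates the real parameters in place
step_matches = all(torch.allclose(p.detach(), e) for p, e in zip(model.parameters(), expected))
print(step_matches)  # True

# backward() accumulates: without zero_grad, a second pass adds the same gradient again
optimizer.zero_grad()
criterion(model(x_data), y_data).backward()
g1 = model.weight.grad.clone()
criterion(model(x_data), y_data).backward()  # no zero_grad in between
accumulates = torch.allclose(model.weight.grad, 2 * g1)
print(accumulates)  # True
```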

Complete code

```python
import torch

## Prepare Dataset: mini-batch, X and Y are 3x1 tensors
x_data = torch.Tensor([[1.0], [2.0], [3.0]])
y_data = torch.Tensor([[2.0], [4.0], [6.0]])

## Design Model
## Define a class that inherits from torch.nn.Module
class LinearModel(torch.nn.Module):
    ## Constructor: initialize the object
    def __init__(self):
        ## Call the parent-class constructor via super
        super(LinearModel, self).__init__()
        ## Linear unit holding two tensors, weight and bias; (1, 1) = (in_features, out_features)
        self.linear = torch.nn.Linear(1, 1)

    ## Forward pass
    def forward(self, x):
        ## w*x + b
        y_pred = self.linear(x)
        return y_pred

model = LinearModel()

## Construct Loss and Optimizer
## Loss function, called with y_pred and y; reduction='sum' replaces the deprecated size_average=False
criterion = torch.nn.MSELoss(reduction='sum')
## Optimizer: model.parameters() collects all of the model's parameters; lr is the learning rate
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)

## Training cycle
for epoch in range(100):
    y_pred = model(x_data)            # forward pass
    loss = criterion(y_pred, y_data)  # compute the loss
    print(epoch, loss.item())
    optimizer.zero_grad()             # zero the gradients
    loss.backward()                   # backward pass
    optimizer.step()                  # update the parameters

## Output weight and bias
print('w = ', model.linear.weight.item())
print('b = ', model.linear.bias.item())

## Test Model
x_test = torch.Tensor([[4.0]])
y_test = model(x_test)
print('y_pred = ', y_test.data)
```

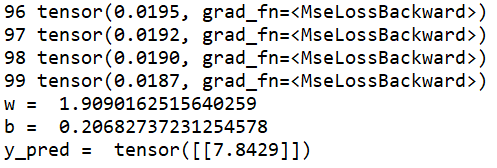

Output

After 100 training epochs, the program prints the learned weight and bias, along with the predicted y for x = 4.
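The training data follow y = 2x exactly, so the ideal parameters are w = 2 and b = 0. The prediction for x = 4 should therefore approach 8, although 100 epochs may not be enough to get there. The sketch below keeps the article's setup: sum-reduced MSE and SGD with lr = 0.01. It assumes 1000 epochs and a fixed seed, and uses a bare `torch.nn.Linear(1, 1)` in place of `LinearModel`, which wraps the same single layer.

```python
import torch

torch.manual_seed(0)
x_data = torch.tensor([[1.0], [2.0], [3.0]])
y_data = torch.tensor([[2.0], [4.0], [6.0]])

model = torch.nn.Linear(1, 1)  # same single layer that LinearModel wraps
criterion = torch.nn.MSELoss(reduction='sum')
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)

for epoch in range(1000):  # assumed longer schedule than the article's 100 epochs
    loss = criterion(model(x_data), y_data)
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()

w, b = model.weight.item(), model.bias.item()
with torch.no_grad():
    y_at_4 = model(torch.tensor([[4.0]])).item()
print(f'w = {w:.4f}, b = {b:.4f}, y_pred(4) = {y_at_4:.4f}')
```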