Spark on K8S - Operator

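Version requirements

The Spark project itself does not provide a Spark Operator; the one used here is developed by Google.

The Operator uses the same Spark on K8S mechanism as upstream Spark and only adds a wrapper layer on top. With it, a Spark job can be declared the same way as any other K8S resource (a Service, for example), and it gets the features other K8S applications have: automatic restart, retry on failure, updates, mounted configuration files, and so on.

The drawback is that you are constrained by the Operator's own version and implementation.

With the cluster mode of upstream Spark on K8S, you have to implement a pod of your own that submits the Spark job (it plays a role similar to the operator).

With the client mode of upstream Spark on K8S, no extra pod or operator is needed, but each Spark job has to configure, for its driver, the ports used for the connections between the driver and its executors.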
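
As a rough illustration of the client-mode point, the sketch below builds the `--conf` flags that pin the driver's address and ports so executors can connect back. The property names are standard Spark settings; the host name `spark-driver.spark.svc` and the port numbers are example values assumed for this sketch, not taken from the original setup.

```python
from typing import List


def client_mode_conf(driver_host: str, driver_port: int, block_manager_port: int) -> List[str]:
    """Build spark-submit --conf flags that fix the driver's address and ports."""
    conf = {
        "spark.submit.deployMode": "client",
        # Address that executors use to reach the driver (assumed example value).
        "spark.driver.host": driver_host,
        # RPC port of the driver.
        "spark.driver.port": str(driver_port),
        # Block manager port of the driver.
        "spark.driver.blockManager.port": str(block_manager_port),
    }
    flags: List[str] = []
    for key, value in conf.items():
        flags += ["--conf", f"{key}={value}"]
    return flags


flags = client_mode_conf("spark-driver.spark.svc", 7078, 7079)
print(" ".join(flags))
```

These ports also have to be reachable from the executor pods, which is the per-job setup work the operator approach avoids.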

For the required Helm and K8S versions, see the official chart page:

https://github.com/GoogleCloudPlatform/spark-on-k8s-operator/tree/master/charts/spark-operator-chart

Prerequisites

Helm >= 3

Kubernetes >= 1.13

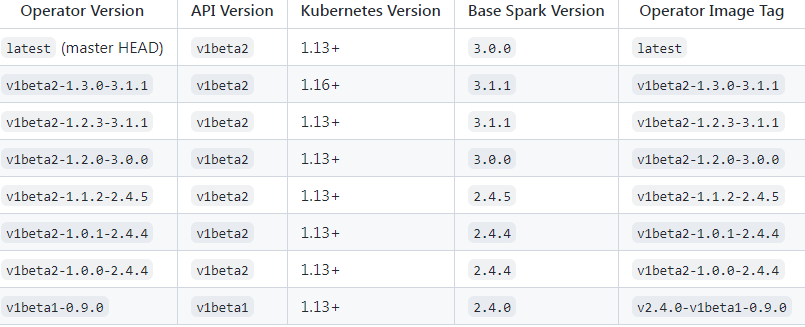

Operator and Spark versions

https://github.com/GoogleCloudPlatform/spark-on-k8s-operator/#version-matrix

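Once started, the Spark Operator itself runs as a Pod. When a manifest is submitted with kubectl apply -f test.yml, the operator reads the contents of that configuration and runs spark-submit to launch the Spark job. A given operator version is therefore built on a specific Spark version, unless the operator shipped several Spark versions and let test.yml choose one, which the current implementation does not appear to do.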
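
To make the "read the manifest, then call spark-submit" idea concrete, here is a minimal sketch of how fields of a SparkApplication could be mapped to a spark-submit command line. It is an illustration only, not the operator's actual code; the function name `build_spark_submit` and the in-cluster master URL are assumptions, while the flags and `spark.kubernetes.*` properties are standard Spark options.

```python
from typing import Dict, List


def build_spark_submit(app: Dict) -> List[str]:
    """Translate a SparkApplication-like dict into spark-submit arguments."""
    meta = app["metadata"]
    spec = app["spec"]
    cmd = [
        "spark-submit",
        # Assumed in-cluster API server address.
        "--master", "k8s://https://kubernetes.default.svc",
        "--deploy-mode", spec["mode"],
        "--name", meta["name"],
        "--class", spec["mainClass"],
        "--conf", f"spark.kubernetes.namespace={meta['namespace']}",
        "--conf", f"spark.kubernetes.container.image={spec['image']}",
        "--conf", f"spark.executor.instances={spec['executor']['instances']}",
    ]
    # Pass through any extra Spark properties from spec.sparkConf.
    for key, value in sorted(spec.get("sparkConf", {}).items()):
        cmd += ["--conf", f"{key}={value}"]
    # The application jar is the last positional argument.
    cmd.append(spec["mainApplicationFile"])
    return cmd


example_app = {
    "metadata": {"name": "spark-pi", "namespace": "spark"},
    "spec": {
        "mode": "cluster",
        "image": "apache/spark:v3.1.3",
        "mainClass": "org.apache.spark.examples.SparkPi",
        "mainApplicationFile": "local:///opt/spark/examples/jars/spark-examples_2.12-3.1.3.jar",
        "executor": {"instances": 1},
        "sparkConf": {"spark.kubernetes.executor.deleteOnTermination": "false"},
    },
}

cmd = build_spark_submit(example_app)
print(" ".join(cmd))
```

Because the spark-submit binary lives inside the operator image, the Spark version that performs the submission is whatever that image ships.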

Start minikube

sudo minikube start --driver=none \
    --image-repository=registry.cn-hangzhou.aliyuncs.com/google_containers \
    --kubernetes-version="v1.16.3"

minikube provides a lightweight K8S environment.

Install Helm

Download

wget https://get.helm.sh/helm-v3.0.2-linux-amd64.tar.gz

tar zxvf helm-v3.0.2-linux-amd64.tar.gz

sudo cp ./linux-amd64/helm /usr/local/bin/

Check the helm command

> helm version

version.BuildInfo{Version:"v3.0.2", GitCommit:"19e47ee3283ae98139d98460de796c1be1e3975f", GitTreeState:"clean", GoVersion:"go1.13.5"}

Add commonly used repositories

helm repo add stable https://kubernetes-charts.storage.googleapis.com

helm repo add incubator https://kubernetes-charts-incubator.storage.googleapis.com

helm repo add bitnami https://charts.bitnami.com/bitnami

helm repo add aliyuncs https://apphub.aliyuncs.com

Search for a chart

helm search repo tomcat

Output

NAME CHART VERSION APP VERSION DESCRIPTION

aliyuncs/tomcat 6.2.3 9.0.31 Chart for Apache Tomcat

bitnami/tomcat 9.5.3 10.0.12 Chart for Apache Tomcat

The Google repositories may not be reachable.

Install spark-operator with helm

Add the repository

~$ sudo helm repo add spark-operator https://googlecloudplatform.github.io/spark-on-k8s-operator

"spark-operator" has been added to your repositories

Search for the Spark operator

~$ sudo helm search repo spark-operator/spark-operator --versions

NAME CHART VERSION APP VERSION DESCRIPTION

spark-operator/spark-operator 1.1.27 v1beta2-1.3.8-3.1.1 A Helm chart for Spark on Kubernetes operator

spark-operator/spark-operator 1.1.26 v1beta2-1.3.8-3.1.1 A Helm chart for Spark on Kubernetes operator

spark-operator/spark-operator 1.1.25 v1beta2-1.3.7-3.1.1 A Helm chart for Spark on Kubernetes operator

spark-operator/spark-operator 1.1.24 v1beta2-1.3.7-3.1.1 A Helm chart for Spark on Kubernetes operator

spark-operator/spark-operator 1.1.23 v1beta2-1.3.6-3.1.1 A Helm chart for Spark on Kubernetes operator

spark-operator/spark-operator 1.1.22 v1beta2-1.3.6-3.1.1 A Helm chart for Spark on Kubernetes operator

spark-operator/spark-operator 1.1.21 v1beta2-1.3.5-3.1.1 A Helm chart for Spark on Kubernetes operator

spark-operator/spark-operator 1.1.20 v1beta2-1.3.4-3.1.1 A Helm chart for Spark on Kubernetes operator

spark-operator/spark-operator 1.1.19 v1beta2-1.3.3-3.1.1 A Helm chart for Spark on Kubernetes operator

spark-operator/spark-operator 1.1.18 v1beta2-1.3.3-3.1.1 A Helm chart for Spark on Kubernetes operator

spark-operator/spark-operator 1.1.15 v1beta2-1.3.1-3.1.1 A Helm chart for Spark on Kubernetes operator

spark-operator/spark-operator 1.1.14 v1beta2-1.3.0-3.1.1 A Helm chart for Spark on Kubernetes operator

spark-operator/spark-operator 1.1.13 v1beta2-1.2.3-3.1.1 A Helm chart for Spark on Kubernetes operator

spark-operator/spark-operator 1.1.12 v1beta2-1.2.3-3.1.1 A Helm chart for Spark on Kubernetes operator

spark-operator/spark-operator 1.1.11 v1beta2-1.2.3-3.1.1 A Helm chart for Spark on Kubernetes operator

spark-operator/spark-operator 1.1.10 v1beta2-1.2.3-3.1.1 A Helm chart for Spark on Kubernetes operator

Install the Spark operator

sudo helm install my-release spark-operator/spark-operator --version 1.1.20 --namespace spark

Output

NAME: my-release

LAST DEPLOYED: Mon Dec 18 16:42:28 2023

NAMESPACE: spark

STATUS: deployed

REVISION: 1

TEST SUITE: None

List all installed Helm charts

~$ sudo helm list -n spark

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

my-release spark 1 2023-12-18 16:42:28.007743692 +0800 CST deployed spark-operator-1.1.20 v1beta2-1.3.4-3.1.1

Check a specific Helm chart

~$ sudo helm status my-release -n spark

NAME: my-release

LAST DEPLOYED: Mon Dec 18 16:42:28 2023

NAMESPACE: spark

STATUS: deployed

REVISION: 1

TEST SUITE: None

To uninstall

sudo helm uninstall my-release -n spark

# Leftover resources may need to be deleted manually

kubectl delete serviceaccounts my-release-spark-operator -n spark

kubectl delete clusterrole my-release-spark-operator

kubectl delete clusterrolebindings my-release-spark-operator

After a successful start, a spark operator pod is running.

The spark operator image may fail to download for lack of access, in which case the operator pod reports an error and cannot start:

message: Back-off pulling image "gcr.io/spark-operator/spark-operator:latest"

reason: ImagePullBackOff

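You can obtain the image some other way and then retag it with the command docker tag 20144a306214 gcr.io/spark-operator/spark-operator:latest (20144a306214 being the ID of the downloaded image).

When everything is working, the running pods look like this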

~$ sudo kubectl get pods -n spark

NAME READY STATUS RESTARTS AGE

my-release-spark-operator-6f5bbf76f6-zcnln 1/1 Running 0 10m

~$

~$

~$ sudo kubectl get sa -n spark

NAME SECRETS AGE

default 1 27m

my-release-spark 1 21m

my-release-spark-operator 1 21m

~$

New CRDs (Custom Resource Definitions) also become available

$ sudo kubectl get crd -n spark

scheduledsparkapplications.sparkoperator.k8s.io 2023-12-18T16:42:28Z

sparkapplications.sparkoperator.k8s.io 2023-12-18T16:42:28Z

Installing the spark operator through helm automatically creates a service account for Spark, which is used to request and manage pods. You can also create a service account yourself:

apiVersion: v1
kind: ServiceAccount
metadata:
  name: spark
  namespace: spark
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  namespace: spark
  name: spark-role
rules:
- apiGroups: [""]
  resources: ["pods"]
  verbs: ["*"]
- apiGroups: [""]
  resources: ["services"]
  verbs: ["*"]
- apiGroups: [""]
  resources: ["configmaps"]
  verbs: ["*"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: spark-role-binding
  namespace: spark
subjects:
- kind: ServiceAccount
  name: spark
  namespace: spark
roleRef:
  kind: Role
  name: spark-role
  apiGroup: rbac.authorization.k8s.io

This service account has to be specified when submitting a Spark application.

Submit a Spark job

The configuration file looks like this

apiVersion: "sparkoperator.k8s.io/v1beta2"
kind: SparkApplication
metadata:
  name: spark-pi
  namespace: spark
spec:
  type: Scala
  mode: cluster
  #image: "gcr.io/spark-operator/spark:v3.1.1"
  image: "apache/spark:v3.1.3"
  imagePullPolicy: IfNotPresent
  mainClass: org.apache.spark.examples.SparkPi
  mainApplicationFile: "local:///opt/spark/examples/jars/spark-examples_2.12-3.1.3.jar"
  sparkVersion: "3.1.3"
  restartPolicy:
    type: Never
  volumes:
    - name: "test-volume"
      hostPath:
        path: "/tmp"
        type: Directory
  driver:
    cores: 1
    coreLimit: "1200m"
    memory: "512m"
    labels:
      version: 3.1.3
    serviceAccount: spark
    volumeMounts:
      - name: "test-volume"
        mountPath: "/tmp"
  executor:
    cores: 1
    instances: 1
    memory: "512m"
    labels:
      version: 3.1.3
    volumeMounts:
      - name: "test-volume"
        mountPath: "/tmp"

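The image here must contain the Spark code to be executed; the local:// scheme refers to a file inside the image.

Although sparkVersion can be set here, judging from the operator's code it does not appear to be used, so the operator presumably always runs its own fixed version of the spark-submit command.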
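
When several similar jobs are needed, the manifest can also be generated instead of written by hand. The sketch below builds a SparkApplication as a Python dict and prints it as JSON, which kubectl accepts in place of YAML. The helper names `spark_application` and `uses_image_local_file` are invented for this sketch, and the field values simply mirror the spark-pi example.

```python
import json
from typing import Dict


def uses_image_local_file(uri: str) -> bool:
    """True if the application file is expected to live inside the container image."""
    return uri.startswith("local://")


def spark_application(name: str, namespace: str, image: str, main_class: str,
                      jar: str, spark_version: str, service_account: str,
                      executors: int = 1) -> Dict:
    """Build a SparkApplication manifest (v1beta2) as a plain dict."""
    return {
        "apiVersion": "sparkoperator.k8s.io/v1beta2",
        "kind": "SparkApplication",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "type": "Scala",
            "mode": "cluster",
            "image": image,
            "imagePullPolicy": "IfNotPresent",
            "mainClass": main_class,
            "mainApplicationFile": jar,
            "sparkVersion": spark_version,
            "restartPolicy": {"type": "Never"},
            "driver": {
                "cores": 1,
                "coreLimit": "1200m",
                "memory": "512m",
                "serviceAccount": service_account,
            },
            "executor": {"cores": 1, "instances": executors, "memory": "512m"},
        },
    }


manifest = spark_application(
    name="spark-pi",
    namespace="spark",
    image="apache/spark:v3.1.3",
    main_class="org.apache.spark.examples.SparkPi",
    jar="local:///opt/spark/examples/jars/spark-examples_2.12-3.1.3.jar",
    spark_version="3.1.3",
    service_account="spark",
)
manifest_json = json.dumps(manifest, indent=2)
print(manifest_json)
```

Saving the output to a file (for example a hypothetical spark-pi.json) and passing it to kubectl apply -f would submit the same job as the YAML version.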

Start the Spark job

~$ sudo kubectl apply -f spark-test.yaml

sparkapplication.sparkoperator.k8s.io/spark-pi configured

If the job starts successfully, the corresponding driver pod and executor pod are running

~$ sudo kubectl get pods -n spark

NAME READY STATUS RESTARTS AGE

my-release-spark-operator-6f5bbf76f6-zcnln 1/1 Running 0 6h46m

spark-pi-driver 1/1 Running 0 2m45s

spark-pi-fd1d098c7d89e23d-exec-1 1/1 Running 0 47s

You can also query the sparkapplication custom resource directly

~$ sudo kubectl get sparkapplication -n spark

NAME STATUS ATTEMPTS START FINISH AGE

spark-pi RUNNING 1 2023-12-18T15:26:09Z <no value> 3m57s

Check again after the program finishes

~$ sudo kubectl get pods -n spark

NAME READY STATUS RESTARTS AGE

my-release-spark-operator-6f5bbf76f6-zcnln 1/1 Running 0 6h46m

spark-pi-driver 0/1 Completed 0 3m10s

~$

~$

~$ sudo kubectl get sparkapplication -n spark

NAME STATUS ATTEMPTS START FINISH AGE

spark-pi COMPLETED 1 2023-12-18T15:26:09Z 2023-12-18T15:29:02Z 4m6s

By default the executors are deleted and only the driver remains.

To keep the executors, add this configuration under spec

apiVersion: "sparkoperator.k8s.io/v1beta2"
kind: SparkApplication
metadata:
  name: spark-pi
  namespace: spark
spec:
  ......
  sparkConf:
    spark.kubernetes.executor.deleteOnTermination: "false"

Now both the driver and the executor are kept

$ sudo kubectl get pods -n spark

NAME READY STATUS RESTARTS AGE

my-release-spark-operator-6f5bbf76f6-b8sgn 1/1 Running 0 4d2h

spark-pi-driver 0/1 Completed 0 13s

spark-pi-f0f12e8cad19a99f-exec-1 0/1 Completed 0 9s

More configuration options are listed at

https://spark.incubator.apache.org/docs/3.1.3/running-on-kubernetes.html#configuration

Schedule mechanism

The spark operator supports cron-style scheduling; change the kind to ScheduledSparkApplication, set schedule, and move the application spec under template

apiVersion: "sparkoperator.k8s.io/v1beta2"
kind: ScheduledSparkApplication
metadata:
  name: spark-pi-scheduled
  namespace: spark
spec:
  schedule: "@every 5m"
  concurrencyPolicy: Allow
  template:
    type: Scala
    mode: cluster
    #image: "gcr.io/spark-operator/spark:v3.1.1"
    image: "apache/spark:v3.1.3"
    imagePullPolicy: IfNotPresent
    mainClass: org.apache.spark.examples.SparkPi
    mainApplicationFile: "local:///opt/spark/examples/jars/spark-examples_2.12-3.1.3.jar"
    sparkVersion: "3.1.3"
    restartPolicy:
      type: Never
    driver:
      cores: 1
      coreLimit: "1200m"
      memory: "512m"
      labels:
        version: 3.1.3
      serviceAccount: spark
    executor:
      cores: 1
      instances: 1
      memory: "512m"
      labels:
        version: 3.1.3

The schedule can also be a cron expression such as

"*/10 * * * *"

The smallest unit is one minute.
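
To show what a schedule like "*/10 * * * *" means in practice, the following sketch expands the minute field of a cron expression into the minutes of each hour at which a run would be triggered. It is a deliberately simplified parser written for illustration (only `*`, `*/N`, and comma-separated numbers are handled); real cron libraries support more syntax, and the function name `expand_minute_field` is made up here.

```python
from typing import List


def expand_minute_field(field: str) -> List[int]:
    """Expand a cron minute field into the sorted list of matching minutes."""
    minutes = set()
    for part in field.split(","):
        if part == "*":
            # Every minute of the hour.
            minutes.update(range(60))
        elif part.startswith("*/"):
            # Every N-th minute, starting from minute 0.
            step = int(part[2:])
            minutes.update(range(0, 60, step))
        else:
            # A single explicit minute.
            minutes.add(int(part))
    return sorted(minutes)


schedule = "*/10 * * * *"
minute_field = schedule.split()[0]
fire_minutes = expand_minute_field(minute_field)
print(fire_minutes)  # [0, 10, 20, 30, 40, 50]
```

Since the first field is the minute, a five-field expression cannot express anything finer than one run per minute.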

Metric

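The spark operator has a metrics endpoint that exposes a set of metrics (such as the number of successful, failed, and running applications) to Prometheus.

When the operator is installed with Helm, metrics are enabled by default. To turn them off: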

helm install my-release spark-operator/spark-operator --namespace spark-operator --set metrics.enable=false

The metrics path, port, and so on can be changed by configuring the spark-operator deployment

apiVersion: apps/v1
kind: Deployment
metadata:
  name: sparkoperator
  namespace: spark-operator
  labels:
    app.kubernetes.io/name: sparkoperator
    app.kubernetes.io/version: v1beta2-1.3.0-3.1.1
spec:
  replicas: 1
  selector:
    matchLabels:
      app.kubernetes.io/name: sparkoperator
      app.kubernetes.io/version: v1beta2-1.3.0-3.1.1
  strategy:
    type: Recreate
  template:
    metadata:
      annotations:
        prometheus.io/scrape: "true"
        prometheus.io/port: "10254"
        prometheus.io/path: "/metrics"
      labels:
        app.kubernetes.io/name: sparkoperator
        app.kubernetes.io/version: v1beta2-1.3.0-3.1.1
    spec:
      serviceAccountName: sparkoperator
      containers:
      - name: sparkoperator
        image: gcr.io/spark-operator/spark-operator:v1beta2-1.3.0-3.1.1
        imagePullPolicy: Always
        ports:
          - containerPort: 10254
        args:
        - -logtostderr
        - -enable-metrics=true
        - -metrics-labels=app_type

Whether a Spark app exposes metrics can be configured per application

apiVersion: "sparkoperator.k8s.io/v1beta2"
kind: SparkApplication
metadata:
  name: spark-pi
  namespace: spark
spec:
  type: Scala
  mode: cluster
  image: "gcr.io/spark-operator/spark:v3.1.1-gcs-prometheus"
  imagePullPolicy: IfNotPresent
  mainClass: org.apache.spark.examples.SparkPi
  mainApplicationFile: "local:///opt/spark/examples/jars/spark-examples_2.12-3.1.1.jar"
  arguments:
    - "100000"
  sparkVersion: "3.1.1"
  restartPolicy:
    type: Never
  driver:
    cores: 1
    coreLimit: "1200m"
    memory: "512m"
    labels:
      version: 3.1.1
    serviceAccount: spark
  executor:
    cores: 1
    instances: 1
    memory: "512m"
    labels:
      version: 3.1.1
  monitoring:
    exposeDriverMetrics: true
    exposeExecutorMetrics: true
    prometheus:
      jmxExporterJar: "/prometheus/jmx_prometheus_javaagent-0.11.0.jar"
      port: 8090

These metrics are reset if the spark operator restarts.

To view the metrics, you can log in to the operator pod and run

curl localhost:10254/metrics

The port and path can be changed through configuration.
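
The endpoint returns the Prometheus text format, which is easy to inspect with a few lines of code. The sketch below parses such text into a dict of metric name to value. The `SAMPLE` text, including its metric names and numbers, is an illustrative stand-in rather than output captured from a real operator, and the parser ignores optional timestamps.

```python
from typing import Dict


def parse_metrics(text: str) -> Dict[str, float]:
    """Parse Prometheus text-format lines into {metric_with_labels: value}."""
    metrics: Dict[str, float] = {}
    for line in text.splitlines():
        line = line.strip()
        # Skip blank lines and "# HELP" / "# TYPE" comment lines.
        if not line or line.startswith("#"):
            continue
        # The value is the last whitespace-separated token on the line.
        name, _, value = line.rpartition(" ")
        metrics[name] = float(value)
    return metrics


# Hypothetical sample in Prometheus text format (names and values are made up).
SAMPLE = """\
# HELP spark_app_success_count Illustrative counter of successful apps
# TYPE spark_app_success_count counter
spark_app_success_count{app_type="Unknown"} 3
spark_app_failure_count{app_type="Unknown"} 1
"""

metrics = parse_metrics(SAMPLE)
for name, value in metrics.items():
    print(name, value)
```

In a real check, the text passed to `parse_metrics` would be the body returned by the curl command above.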

