Kubernetes: Setting up a single-machine test environment with minikube

Official documentation

https://kubernetes.io/docs/tasks/tools/install-minikube/

https://kubernetes.io/docs/tasks/tools/install-kubectl/

https://kubernetes.io/docs/setup/learning-environment/minikube/

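Kubernetes components

- kubeadm: CLI tool that bootstraps a Kubernetes cluster and joins new nodes to it (minikube uses kubeadm)

- kubectl: CLI tool for operating a cluster: kubectl create/apply/scale/get/delete/exec/logs, etc.

- Master: the control-plane node, running the API Server, Scheduler, Controller Manager, etcd, etc.

- Node: a worker node, running the Kubelet, Kube-Proxy, Container Runtime, etc.

- Add-On: recommended optional components beyond the core Master and Node components, such as DNS, Dashboard and CNI

- API Server: the single entry point for all resource operations, exposed as a REST API. External clients such as kubectl and internal components such as the scheduler, controllers and kubelet all communicate through it, and it is the only component that talks to etcd directly. Supports authentication/authorization

- Scheduler: assigns Pods to suitable nodes according to the configured scheduling policies

- Controller Manager: runs the controllers for Namespace, ServiceAccount, ResourceQuota, Replication, Volume, NodeLifecycle, Service, Endpoint, DaemonSet, Job, CronJob, ReplicaSet, Deployment, etc.

- etcd: highly available distributed key-value store that holds the cluster state and lets clients watch for changes

- Kubelet: maintains the lifecycle of the containers on its node, reports their status to the Master through the API Server, and also manages volumes (CSI) and networking (CNI)

- Kube-Proxy: network proxy and load balancer, mainly used to implement the virtual IPs of Services

- Container Runtime: manages images and runs Pods and containers; at the time of writing Docker is the most commonly used one

- Registry: stores images

- DNS: provides DNS for the cluster (Kube-DNS, CoreDNS)

- Dashboard: provides a web UI

- Container Resource Monitoring: cAdvisor + Kubelet, Prometheus, Google Cloud Monitoring

- Cluster-level Logging: Fluentd

- CNI: Container Network Interface (Flannel, Calico)

- CSI: Container Storage Interface

- Ingress Controller: provides external access to Services

Kubernetes is written in Go.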

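Kubernetes concepts

- Pod: the smallest unit that can be created, scheduled, deployed and managed. A Pod contains one or more tightly coupled containers that share the hostname, IPC, network, storage, etc.

- ReplicaSet: ensures that a specified number of Pod replicas are running. Its only difference from ReplicationController is selector support: ReplicaSet also accepts set-based label selectors

- Deployment: manages Pods and ReplicaSets and supports deploy/scaling/upgrade/rollback

- Service: provides a virtual IP in front of a group of Pods that carry the same labels and load-balances across them, i.e. it forwards traffic to those Pods (types include ClusterIP, NodePort, LoadBalancer, etc.)

- Ingress: a single entry point in front of multiple Services that routes traffic to different Services based on the request path

- ConfigMap: decouples configuration from deployment. A Deployment, Pod, etc. does not embed its configuration; it only references a ConfigMap, and the ConfigMap supplies the actual content

- Secrets: a ConfigMap holds non-sensitive data, while a Secret holds sensitive data. By default a Secret is stored unencrypted in etcd (the base64 in its manifest is only an encoding); encryption at rest has to be enabled on the API server separately. A Secret can be mounted into a Pod as files, and such volumes are backed by memory (tmpfs) rather than disk

All of these are defined in YAML files (also called manifests) and created with kubectl create -f xxx.yaml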
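Secrets are written like any other manifest, with one twist: values under `data` must be base64-encoded, and base64 is an encoding, not encryption. A minimal sketch, assuming a hypothetical Secret named `demo-credentials` with a hypothetical `password` key and GNU coreutils `base64`; it writes the manifest to `demo-secret.yaml` and shows that the stored value decodes straight back:

```bash
#!/usr/bin/env bash
# Hypothetical example value; real secrets should not be hard-coded in scripts.
password='S3cr3t!'

# Secret data values are base64-encoded, NOT encrypted.
# -w0 disables line wrapping (GNU coreutils).
encoded=$(printf '%s' "$password" | base64 -w0)

# Write a Secret manifest (hypothetical name: demo-credentials).
cat > demo-secret.yaml <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: demo-credentials
type: Opaque
data:
  password: ${encoded}
EOF

# Anyone who can read the manifest (or the Secret object) can decode it.
decoded=$(printf '%s' "$encoded" | base64 --decode)
echo "encoded: $encoded"
echo "decoded: $decoded"
```

The result could then be created with `sudo kubectl create -f demo-secret.yaml`; `kubectl create secret generic demo-credentials --from-literal=password='S3cr3t!'` does the encoding itself.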

Minikube Features

Minikube runs a single-node Kubernetes cluster on one machine. It supports:

- DNS

- NodePorts

- ConfigMaps and Secrets

- Dashboards

- Container Runtime: Docker, CRI-O, and containerd

- Enabling CNI (Container Network Interface)

- Ingress

Install kubectl

curl -LO https://storage.googleapis.com/kubernetes-release/release/`curl -s https://storage.googleapis.com/kubernetes-release/release/stable.txt`/bin/linux/amd64/kubectl

chmod +x ./kubectl

sudo cp ./kubectl /usr/local/bin/kubectl

sudo kubectl version --client

sudo kubectl --help
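Minikube's own log later in this walkthrough shows it fetching a `kubectl.sha256` file alongside each binary, so the manually downloaded kubectl can be checked the same way before it is copied to /usr/local/bin. A minimal sketch, assuming the checksum is published as `<binary URL>.sha256`, that the file holds only the hex digest, and that GNU `sha256sum` is available; `verify_sha256` is a hypothetical helper name:

```bash
#!/usr/bin/env bash
# verify_sha256 FILE SUMFILE
# Succeeds (exit 0) only if the SHA-256 of FILE equals the digest stored in SUMFILE.
# Assumes SUMFILE contains just the hex digest, as kubectl.sha256 does.
verify_sha256() {
  local file=$1 sumfile=$2
  # sha256sum --check expects lines of the form "<digest>  <filename>"
  echo "$(cat "$sumfile")  $file" | sha256sum --check --status
}
```

For example, download the checksum from the same URL as kubectl with `.sha256` appended, then run `verify_sha256 kubectl kubectl.sha256 && echo OK` before `sudo cp`.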

Enable command completion for kubectl

# make sure bash-completion is installed

sudo apt-get install bash-completion

# make sure bash-completion is sourced in ~/.bashrc (for root and other users)

if ! shopt -oq posix; then
  if [ -f /usr/share/bash-completion/bash_completion ]; then
    . /usr/share/bash-completion/bash_completion
  elif [ -f /etc/bash_completion ]; then
    . /etc/bash_completion
  fi
fi

# or, the shorter form

if [ -f /etc/bash_completion ] && ! shopt -oq posix; then
  . /etc/bash_completion
fi

# make sure kubectl completion is sourced in ~/.bashrc (for root and other users)

echo 'source <(kubectl completion bash)' >>~/.bashrc

# alternatively, generate a system-wide kubectl completion file

sudo bash -c "kubectl completion bash >/etc/bash_completion.d/kubectl"

kubectl is written in Go.

Install a Hypervisor

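This step is optional. If a hypervisor such as KVM or VirtualBox is installed, Minikube creates a VM and runs the cluster inside it; without a hypervisor, Minikube runs the cluster directly on the host.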

Install Minikube

curl -Lo minikube https://storage.googleapis.com/minikube/releases/latest/minikube-linux-amd64

chmod +x minikube

sudo cp ./minikube /usr/local/bin/minikube

sudo minikube start --driver=<driver_name>

Without a hypervisor, set the driver to none:

sudo minikube start --driver=none

Starting with the none driver fails:

😄 minikube v1.11.0 on Ubuntu 16.04 (vbox/amd64)

✨ Using the none driver based on user configuration

💣 Sorry, Kubernetes 1.18.3 requires conntrack to be installed in root's path

The message says conntrack must be installed:

sudo apt-get install conntrack
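conntrack is only the first missing prerequisite: the kubeadm preflight warnings in the log below also report ebtables and socat missing and swap enabled. A minimal sketch that checks for all of them up front; the helper names are hypothetical, and `command -v` searches the current user's PATH whereas minikube asks for root's PATH, so run the script with sudo for an exact answer:

```bash
#!/usr/bin/env bash
# missing_cmds CMD...: print every command name that is not found in PATH.
missing_cmds() {
  local cmd
  for cmd in "$@"; do
    command -v "$cmd" >/dev/null 2>&1 || echo "$cmd"
  done
}

# swap_enabled: succeed if /proc/swaps lists at least one active swap area
# (the first line of /proc/swaps is a header).
swap_enabled() {
  [ -r /proc/swaps ] && [ "$(awk 'NR > 1' /proc/swaps | wc -l)" -gt 0 ]
}

# Tools named in this walkthrough's error message and preflight warnings.
missing=$(missing_cmds conntrack socat ebtables)
if [ -n "$missing" ]; then
  echo "missing: $(printf '%s' "$missing" | tr '\n' ' ')"
fi
if swap_enabled; then
  echo "swap is on; 'sudo swapoff -a' disables it until the next reboot"
fi
```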

Start Minikube again:

😄 minikube v1.11.0 on Ubuntu 16.04 (vbox/amd64)

✨ Using the none driver based on user configuration

👍 Starting control plane node minikube in cluster minikube

🤹 Running on localhost (CPUs=4, Memory=7976MB, Disk=18014MB) ...

ℹ️ OS release is Ubuntu 16.04.6 LTS

🐳 Preparing Kubernetes v1.18.3 on Docker 19.03.8 ...

❗ This bare metal machine is having trouble accessing https://k8s.gcr.io

💡 To pull new external images, you may need to configure a proxy: https://minikube.sigs.k8s.io/docs/reference/networking/proxy/

> kubeadm.sha256: 65 B / 65 B [--------------------------] 100.00% ? p/s 0s

> kubectl.sha256: 65 B / 65 B [--------------------------] 100.00% ? p/s 0s

> kubelet.sha256: 65 B / 65 B [--------------------------] 100.00% ? p/s 0s

> kubectl: 41.99 MiB / 41.99 MiB [----------------] 100.00% 6.79 MiB p/s 6s

> kubeadm: 37.97 MiB / 37.97 MiB [----------------] 100.00% 4.44 MiB p/s 9s

> kubelet: 108.04 MiB / 108.04 MiB [-------------] 100.00% 6.35 MiB p/s 18s

💥 initialization failed, will try again: run: /bin/bash -c "sudo env PATH=/var/lib/minikube/binaries/v1.18.3:$PATH kubeadm init --config /var/tmp/minikube/kubeadm.yaml --ignore-preflight-errors=DirAvailable--etc-kubernetes-manifests,DirAvailable--var-lib-minikube,DirAvailable--var-lib-minikube-etcd,FileAvailable--etc-kubernetes-manifests-kube-scheduler.yaml,FileAvailable--etc-kubernetes-manifests-kube-apiserver.yaml,FileAvailable--etc-kubernetes-manifests-kube-controller-manager.yaml,FileAvailable--etc-kubernetes-manifests-etcd.yaml,Port-10250,Swap": exit status 1

stdout:

[init] Using Kubernetes version: v1.18.3

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

stderr:

W0609 16:35:49.251770 29943 configset.go:202] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io]

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[WARNING Swap]: running with swap on is not supported. Please disable swap

[WARNING FileExisting-ebtables]: ebtables not found in system path

[WARNING FileExisting-socat]: socat not found in system path

[WARNING Service-Kubelet]: kubelet service is not enabled, please run 'systemctl enable kubelet.service'

error execution phase preflight: [preflight] Some fatal errors occurred:

[ERROR ImagePull]: failed to pull image k8s.gcr.io/kube-apiserver:v1.18.3: output: Error response from daemon: Get https://k8s.gcr.io/v2/: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)

, error: exit status 1

[ERROR ImagePull]: failed to pull image k8s.gcr.io/kube-controller-manager:v1.18.3: output: Error response from daemon: Get https://k8s.gcr.io/v2/: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)

, error: exit status 1

[ERROR ImagePull]: failed to pull image k8s.gcr.io/kube-scheduler:v1.18.3: output: Error response from daemon: Get https://k8s.gcr.io/v2/: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)

, error: exit status 1

[ERROR ImagePull]: failed to pull image k8s.gcr.io/kube-proxy:v1.18.3: output: Error response from daemon: Get https://k8s.gcr.io/v2/: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)

, error: exit status 1

[ERROR ImagePull]: failed to pull image k8s.gcr.io/pause:3.2: output: Error response from daemon: Get https://k8s.gcr.io/v2/: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)

, error: exit status 1

[ERROR ImagePull]: failed to pull image k8s.gcr.io/etcd:3.4.3-0: output: Error response from daemon: Get https://k8s.gcr.io/v2/: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)

, error: exit status 1

[ERROR ImagePull]: failed to pull image k8s.gcr.io/coredns:1.6.7: output: Error response from daemon: Get https://k8s.gcr.io/v2/: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)

, error: exit status 1

[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`

To see the stack trace of this error execute with --v=5 or higher

It still fails: https://k8s.gcr.io is unreachable from this network, so use a mirror registry hosted in China instead:

sudo minikube start --driver=none --image-repository=registry.cn-hangzhou.aliyuncs.com/google_containers

This time it succeeds:

😄 minikube v1.11.0 on Ubuntu 16.04 (vbox/amd64)

✨ Using the none driver based on existing profile

👍 Starting control plane node minikube in cluster minikube

🔄 Restarting existing none bare metal machine for "minikube" ...

ℹ️ OS release is Ubuntu 16.04.6 LTS

🐳 Preparing Kubernetes v1.18.3 on Docker 19.03.8 ...

🤹 Configuring local host environment ...

❗ The 'none' driver is designed for experts who need to integrate with an existing VM

💡 Most users should use the newer 'docker' driver instead, which does not require root!

📘 For more information, see: https://minikube.sigs.k8s.io/docs/reference/drivers/none/

❗ kubectl and minikube configuration will be stored in /home/lin

❗ To use kubectl or minikube commands as your own user, you may need to relocate them. For example, to overwrite your own settings, run:

▪ sudo mv /home/lin/.kube /home/lin/.minikube $HOME

▪ sudo chown -R $USER $HOME/.kube $HOME/.minikube

💡 This can also be done automatically by setting the env var CHANGE_MINIKUBE_NONE_USER=true

🔎 Verifying Kubernetes components...

🌟 Enabled addons: default-storageclass, storage-provisioner

🏄 Done! kubectl is now configured to use "minikube"

💡 For best results, install kubectl: https://kubernetes.io/docs/tasks/tools/install-kubectl/

By default Minikube requires at least 2 CPUs. On a single-core machine, relax the check explicitly:

sudo minikube start --driver=none \
  --extra-config=kubeadm.ignore-preflight-errors=NumCPU \
  --force --cpus 1 \
  --image-repository=registry.cn-hangzhou.aliyuncs.com/google_containers
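Rather than editing the command by hand on each machine, the single-core flags above can be chosen from the CPU count. A minimal sketch; `minikube_cpu_flags` is a hypothetical helper and `nproc` comes from GNU coreutils:

```bash
#!/usr/bin/env bash
# minikube_cpu_flags [CPUS]: print the extra `minikube start` flags a single-CPU
# host needs (the ones used in the single-core command); print nothing for 2+ CPUs.
minikube_cpu_flags() {
  local cpus=${1:-$(nproc)}
  if [ "$cpus" -lt 2 ]; then
    echo "--extra-config=kubeadm.ignore-preflight-errors=NumCPU --force --cpus 1"
  fi
}

echo "extra flags for this host: $(minikube_cpu_flags)"
```

Used as `sudo minikube start --driver=none $(minikube_cpu_flags) --image-repository=registry.cn-hangzhou.aliyuncs.com/google_containers`, with the substitution left unquoted on purpose so the flags split into separate arguments.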

Check the Minikube status

sudo minikube status

A healthy cluster returns:

minikube

type: Control Plane

host: Running

kubelet: Running

apiserver: Running

kubeconfig: Configured
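For scripts that need to verify the cluster before continuing, the status text can be checked mechanically. A minimal sketch, assuming the `key: value` layout printed by minikube v1.11.0 as shown here (other versions may print different fields); `minikube_healthy` is a hypothetical helper:

```bash
#!/usr/bin/env bash
# minikube_healthy: read `minikube status` text on stdin and succeed only if
# host, kubelet and apiserver are Running and kubeconfig is Configured.
minikube_healthy() {
  awk -F': *' '
    $1 == "host" || $1 == "kubelet" || $1 == "apiserver" {
      if ($2 != "Running") bad = 1
      seen++
    }
    $1 == "kubeconfig" {
      if ($2 != "Configured") bad = 1
      seen++
    }
    # Fail if any component is unhealthy or a field is missing entirely.
    END { exit (bad || seen < 4) }
  '
}
```

For example `sudo minikube status | minikube_healthy && echo "cluster is up"`.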

Stop the cluster

sudo minikube stop

Delete the cluster

sudo minikube delete

Verify kubectl

sudo kubectl version --client

sudo kubectl cluster-info

Output:

lin@lin-VirtualBox:~/K8S$ sudo kubectl version --client

Client Version: version.Info{Major:"1", Minor:"18", GitVersion:"v1.18.3", GitCommit:"2e7996e3e2712684bc73f0dec0200d64eec7fe40", GitTreeState:"clean", BuildDate:"2020-05-20T12:52:00Z", GoVersion:"go1.13.9", Compiler:"gc", Platform:"linux/amd64"}


lin@lin-VirtualBox:~/K8S$ sudo kubectl cluster-info

Kubernetes master is running at https://xxx.xxx.xxx.xxx:8443

KubeDNS is running at https://xxx.xxx.xxx.xxx:8443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

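Minikube is written in Go.

Example: echoserver

The echoserver image is a simple HTTP server that echoes back details of each request it receives, such as the method, path, headers and body.

No manifest is defined here; instead the image is deployed directly, which creates a Deployment and, through its ReplicaSet, the corresponding Pod:

sudo kubectl create deployment hello-minikube \
  --image=registry.cn-hangzhou.aliyuncs.com/google_containers/echoserver:1.10

Expose the port; this creates a Service: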

sudo kubectl expose deployment hello-minikube --type=NodePort --port=8080
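The two imperative commands above can also be expressed as manifests, the form the Flink example later in this post uses. A rough sketch, not the exact objects kubectl generates (those carry extra defaulted fields); the file name `hello-minikube.yaml` is hypothetical, while the `app: hello-minikube` label and the `echoserver` container name match what `describe pod` shows below:

```bash
#!/usr/bin/env bash
# Write a Deployment + NodePort Service roughly equivalent to
# `kubectl create deployment` + `kubectl expose --type=NodePort --port=8080`.
cat > hello-minikube.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: hello-minikube
  labels:
    app: hello-minikube
spec:
  replicas: 1
  selector:
    matchLabels:
      app: hello-minikube
  template:
    metadata:
      labels:
        app: hello-minikube
    spec:
      containers:
      - name: echoserver
        image: registry.cn-hangzhou.aliyuncs.com/google_containers/echoserver:1.10
---
apiVersion: v1
kind: Service
metadata:
  name: hello-minikube
spec:
  type: NodePort
  selector:
    app: hello-minikube
  ports:
  - port: 8080
    targetPort: 8080
EOF
echo "wrote hello-minikube.yaml"
```

`sudo kubectl apply -f hello-minikube.yaml` should then produce an equivalent Deployment and NodePort Service, and `sudo kubectl delete -f hello-minikube.yaml` removes both.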

Check the Pod status

sudo kubectl get pod

sudo kubectl get pods

sudo kubectl get pods -o wide

Output of get pod:

NAME READY STATUS RESTARTS AGE

hello-minikube-7df785b6bb-v2phl 1/1 Running 0 5m51s

Show the Pod details

sudo kubectl describe pod hello-minikube

Output of describe pod:

Name: hello-minikube-7df785b6bb-mw6kv

Namespace: default

Priority: 0

Node: lin-virtualbox/100.98.137.196

Start Time: Wed, 10 Jun 2020 16:30:18 +0800

Labels: app=hello-minikube

pod-template-hash=7df785b6bb

Annotations: <none>

Status: Running

IP: 172.17.0.6

IPs:

IP: 172.17.0.6

Controlled By: ReplicaSet/hello-minikube-7df785b6bb

Containers:

echoserver:

Container ID: docker://ca6c7070ef7afc260f6fe6538da49e91bc60ba914b623d6080b03bd2886343b3

Image: registry.cn-hangzhou.aliyuncs.com/google_containers/echoserver:1.10

Image ID: docker-pullable://registry.cn-hangzhou.aliyuncs.com/google_containers/echoserver@sha256:56bec57144bd3610abd4a1637465ff491dd78a5e2ae523161569fa02cfe679a8

Port: <none>

Host Port: <none>

State: Running

Started: Wed, 10 Jun 2020 16:30:21 +0800

Ready: True

Restart Count: 0

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from default-token-znf6q (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

default-token-znf6q:

Type: Secret (a volume populated by a Secret)

SecretName: default-token-znf6q

Optional: false

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute for 300s

node.kubernetes.io/unreachable:NoExecute for 300s

Events: <none>

Check the Deployment status

sudo kubectl get deployment

Output of get deployment:

NAME READY UP-TO-DATE AVAILABLE AGE

hello-minikube 1/1 1 1 80m

Get the Service URL

sudo minikube service hello-minikube --url

# or

sudo minikube service hello-minikube

Output:

http://100.98.137.196:31526

# or

|-----------|----------------|-------------|-----------------------------|

| NAMESPACE | NAME | TARGET PORT | URL |

|-----------|----------------|-------------|-----------------------------|

| default | hello-minikube | 8080 | http://100.98.137.196:31526 |

|-----------|----------------|-------------|-----------------------------|

Send a request to echoserver

curl -X POST -d '{"abc":123}' http://100.98.137.196:31526/api/v1/hello

Output:

Hostname: hello-minikube-7df785b6bb-v2phl

Pod Information:

-no pod information available-

Server values:

server_version=nginx: 1.13.3 - lua: 10008

Request Information:

client_address=172.17.0.1

method=POST

real path=/api/v1/hello

query=

request_version=1.1

request_scheme=http

request_uri=http://100.98.137.196:8080/api/v1/hello

Request Headers:

accept=*/*

content-length=11

content-type=application/x-www-form-urlencoded

host=100.98.137.196:31384

user-agent=curl/7.47.0

Request Body:

{"abc":123}

Delete the Service

sudo kubectl delete services hello-minikube

Deleting the Service does not affect the Pod, which keeps running.

The Pod is removed only when the Deployment is deleted:

sudo kubectl delete deployment hello-minikube

Start the Dashboard

sudo minikube dashboard

Output:

🔌 Enabling dashboard ...

🤔 Verifying dashboard health ...

🚀 Launching proxy ...

🤔 Verifying proxy health ...

http://127.0.0.1:42155/api/v1/namespaces/kubernetes-dashboard/services/http:kubernetes-dashboard:/proxy/

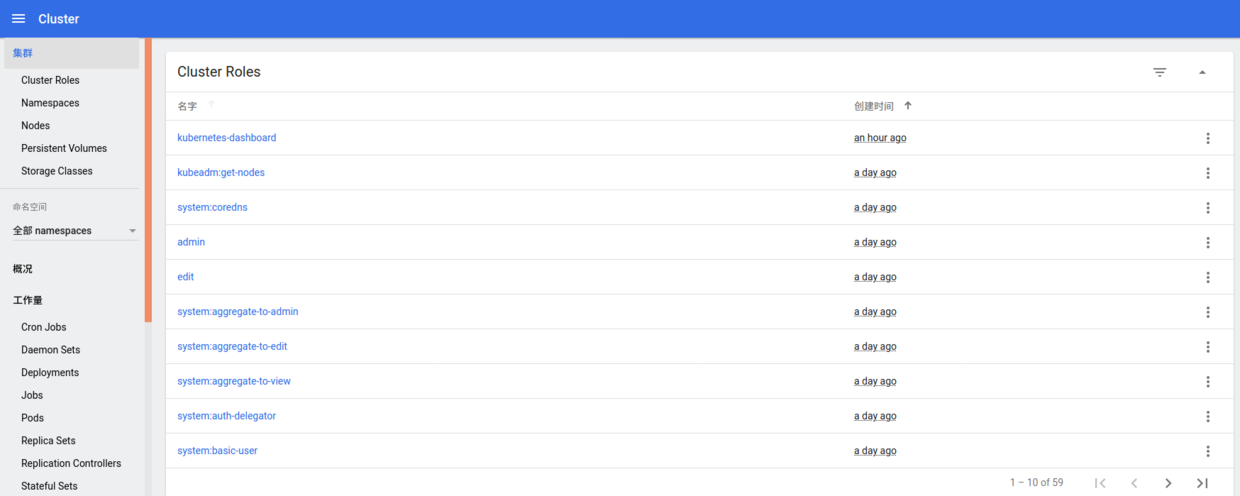

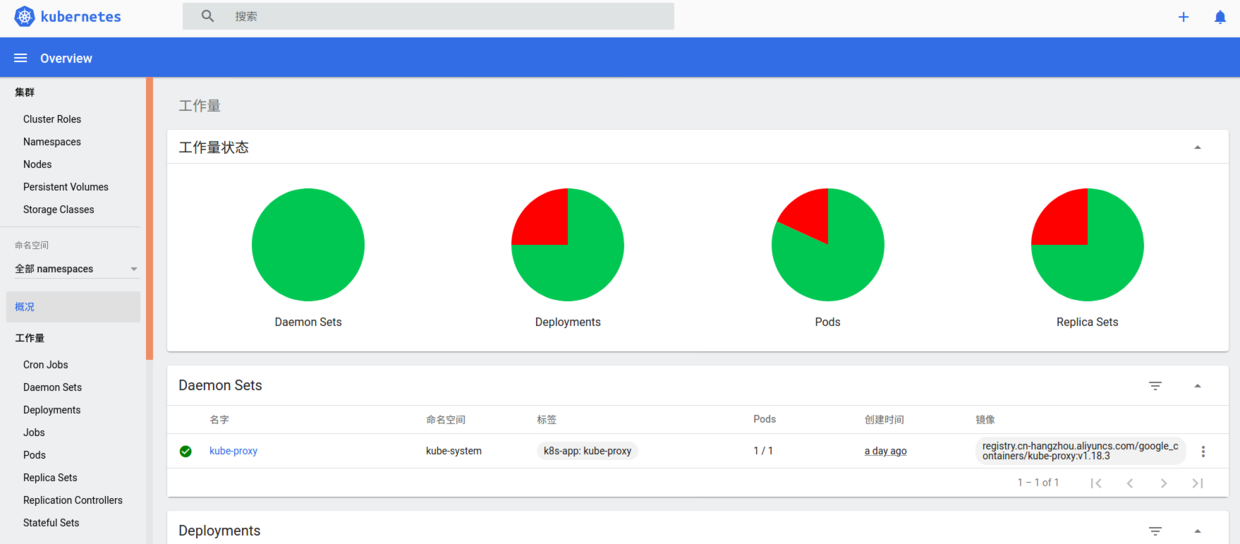

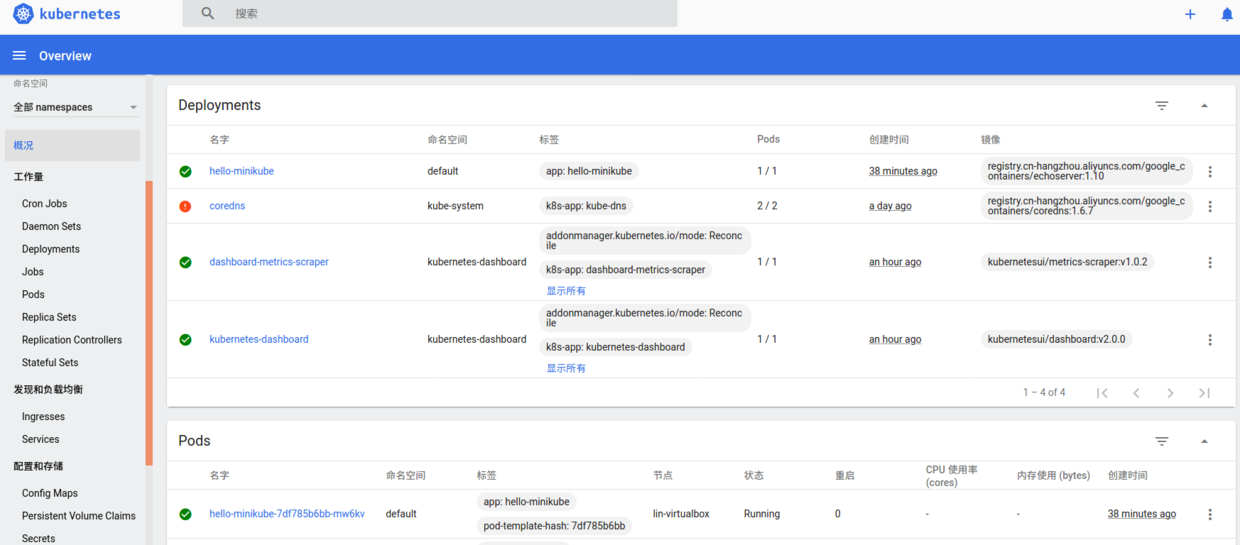

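Opening the URL shows the Dashboard.

Select "All namespaces" in the namespace selector: the Kubernetes components themselves, such as apiserver, controller-manager, etcd and scheduler, also run as containers.

These images and the containers started from them are also visible through docker: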

lin@lin-VirtualBox:~$ docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy v1.18.3 3439b7546f29 2 weeks ago 117MB

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler v1.18.3 76216c34ed0c 2 weeks ago 95.3MB

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver v1.18.3 7e28efa976bd 2 weeks ago 173MB

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager v1.18.3 da26705ccb4b 2 weeks ago 162MB

kubernetesui/dashboard v2.0.0 8b32422733b3 7 weeks ago 222MB

registry.cn-hangzhou.aliyuncs.com/google_containers/pause 3.2 80d28bedfe5d 3 months ago 683kB

registry.cn-hangzhou.aliyuncs.com/google_containers/coredns 1.6.7 67da37a9a360 4 months ago 43.8MB

registry.cn-hangzhou.aliyuncs.com/google_containers/etcd 3.4.3-0 303ce5db0e90 7 months ago 288MB

kubernetesui/metrics-scraper v1.0.2 3b08661dc379 7 months ago 40.1MB

registry.cn-hangzhou.aliyuncs.com/google_containers/echoserver 1.10 365ec60129c5 2 years ago 95.4MB

registry.cn-hangzhou.aliyuncs.com/google_containers/storage-provisioner v1.8.1 4689081edb10 2 years ago 80.8MB

docker ps -a

Containers started by Kubernetes can be operated with docker commands, for example

docker exec

docker logs

kubectl provides equivalent commands, for example

kubectl exec

kubectl logs

Conversely, containers started directly with docker, bypassing Kubernetes, cannot be managed by Kubernetes.
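To tell which `docker ps` entries belong to Kubernetes, the container names help. With the Docker runtime, the kubelet (through dockershim) names containers `k8s_<container>_<pod>_<namespace>_<pod-uid>_<attempt>`, and each Pod's pause container uses `POD` as the container name. A minimal sketch assuming that convention, which is worth confirming against your own `docker ps` output; `parse_k8s_name` is a hypothetical helper:

```bash
#!/usr/bin/env bash
# parse_k8s_name NAME: print "namespace/pod container" for a kubelet-managed
# Docker container name, or fail (exit 1) for containers Kubernetes did not create.
# Assumes the dockershim convention k8s_<container>_<pod>_<namespace>_<uid>_<attempt>.
parse_k8s_name() {
  local name=$1 prefix container pod ns uid attempt extra
  IFS=_ read -r prefix container pod ns uid attempt extra <<<"$name"
  # Kubernetes object names cannot contain "_", so a valid name has exactly six fields.
  [ "$prefix" = "k8s" ] && [ -n "$attempt" ] && [ -z "$extra" ] || return 1
  echo "$ns/$pod $container"
}
```

For example `docker ps --format '{{.Names}}' | while read -r n; do parse_k8s_name "$n" || echo "not managed by Kubernetes: $n"; done`.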

Workaround if CoreDNS fails

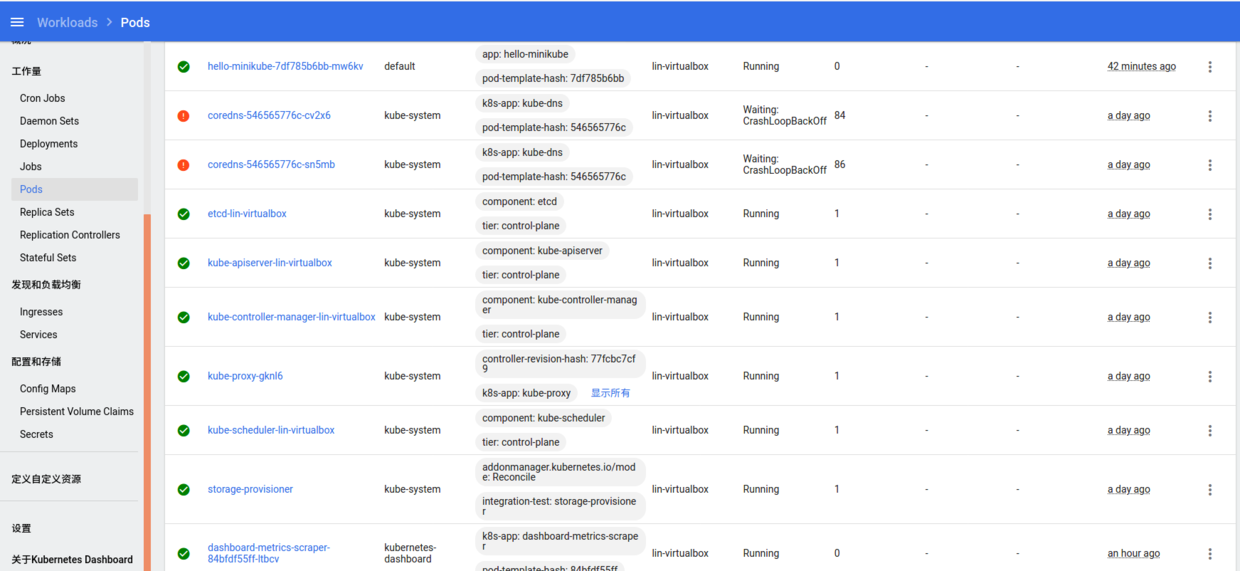

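The Dashboard shows that the coredns service has failed. Workloads that rely on cluster DNS are affected, for example the Flink on K8S example later in this post.

Check the status with kubectl get pod: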

lin@lin-VirtualBox:~/K8S$ sudo kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-546565776c-5fq7p 0/1 CrashLoopBackOff 3 7h21m

coredns-546565776c-zx72j 0/1 CrashLoopBackOff 3 7h21m

etcd-lin-virtualbox 1/1 Running 0 7h21m

kube-apiserver-lin-virtualbox 1/1 Running 0 7h21m

kube-controller-manager-lin-virtualbox 1/1 Running 0 7h21m

kube-proxy-rgsgg 1/1 Running 0 7h21m

kube-scheduler-lin-virtualbox 1/1 Running 0 7h21m

storage-provisioner 1/1 Running 0 7h21m

Check the logs with kubectl logs:

lin@lin-VirtualBox:~/K8S$ sudo kubectl logs -n kube-system coredns-546565776c-5fq7p

.:53

[INFO] plugin/reload: Running configuration MD5 = 4e235fcc3696966e76816bcd9034ebc7

CoreDNS-1.6.7

linux/amd64, go1.13.6, da7f65b

[FATAL] plugin/loop: Loop (127.0.0.1:58992 -> :53) detected for zone ".", see https://coredns.io/plugins/loop#troubleshooting. Query: "HINFO 5310754532638830744.3332451342029566297."

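A temporary workaround (it disables CoreDNS's loop detection rather than removing the loop itself):

# edit the coredns ConfigMap and delete the line that contains only loop

sudo kubectl edit cm coredns -n kube-system

# restart CoreDNS by deleting its Pods; the Deployment recreates them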

sudo kubectl delete pod coredns-546565776c-5fq7p -n kube-system

sudo kubectl delete pod coredns-546565776c-zx72j -n kube-system
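The interactive edit can also be scripted, since the `loop` plugin sits on a line of its own in the Corefile stored in the coredns ConfigMap. A minimal sketch; `strip_loop_plugin` is a hypothetical helper. Per the CoreDNS loop troubleshooting page linked in the log, deleting `loop` only turns off the detection: the loop typically comes from the host's /etc/resolv.conf pointing at a local resolver, and the longer-term fix is to give the kubelet or CoreDNS a real upstream DNS server.

```bash
#!/usr/bin/env bash
# strip_loop_plugin: copy a Corefile (or the coredns ConfigMap YAML) from stdin
# to stdout, dropping every line that consists only of the "loop" directive.
strip_loop_plugin() {
  sed '/^[[:space:]]*loop[[:space:]]*$/d'
}
```

Assuming the kubeadm default label `k8s-app=kube-dns` on the CoreDNS Pods: `sudo kubectl get configmap coredns -n kube-system -o yaml | strip_loop_plugin | sudo kubectl replace -f -`, then `sudo kubectl delete pod -n kube-system -l k8s-app=kube-dns` restarts them without looking up the Pod names.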

After the restart, the coredns Pods show Running.

Example: Flink on K8S

Define the manifest files

https://ci.apache.org/projects/flink/flink-docs-release-1.10/ops/deployment/kubernetes.html

flink-configuration-configmap.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: flink-config
  labels:
    app: flink
data:
  flink-conf.yaml: |+
    jobmanager.rpc.address: flink-jobmanager
    taskmanager.numberOfTaskSlots: 1
    blob.server.port: 6124
    jobmanager.rpc.port: 6123
    taskmanager.rpc.port: 6122
    jobmanager.heap.size: 1024m
    taskmanager.memory.process.size: 1024m
  log4j.properties: |+
    log4j.rootLogger=INFO, file
    log4j.logger.akka=INFO
    log4j.logger.org.apache.kafka=INFO
    log4j.logger.org.apache.hadoop=INFO
    log4j.logger.org.apache.zookeeper=INFO
    log4j.appender.file=org.apache.log4j.FileAppender
    log4j.appender.file.file=${log.file}
    log4j.appender.file.layout=org.apache.log4j.PatternLayout
    log4j.appender.file.layout.ConversionPattern=%d{yyyy-MM-dd HH:mm:ss,SSS} %-5p %-60c %x - %m%n
    log4j.logger.org.apache.flink.shaded.akka.org.jboss.netty.channel.DefaultChannelPipeline=ERROR, file

jobmanager-deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: flink-jobmanager
spec:
  replicas: 1
  selector:
    matchLabels:
      app: flink
      component: jobmanager
  template:
    metadata:
      labels:
        app: flink
        component: jobmanager
    spec:
      containers:
      - name: jobmanager
        image: flink:latest
        workingDir: /opt/flink
        command: ["/bin/bash", "-c", "$FLINK_HOME/bin/jobmanager.sh start;\
          while :;
          do
            if [[ -f $(find log -name '*jobmanager*.log' -print -quit) ]];
              then tail -f -n +1 log/*jobmanager*.log;
            fi;
          done"]
        ports:
        - containerPort: 6123
          name: rpc
        - containerPort: 6124
          name: blob
        - containerPort: 8081
          name: ui
        livenessProbe:
          tcpSocket:
            port: 6123
          initialDelaySeconds: 30
          periodSeconds: 60
        volumeMounts:
        - name: flink-config-volume
          mountPath: /opt/flink/conf
        securityContext:
          runAsUser: 9999  # refers to user _flink_ from official flink image, change if necessary
      volumes:
      - name: flink-config-volume
        configMap:
          name: flink-config
          items:
          - key: flink-conf.yaml
            path: flink-conf.yaml
          - key: log4j.properties
            path: log4j.properties

taskmanager-deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: flink-taskmanager
spec:
  replicas: 2
  selector:
    matchLabels:
      app: flink
      component: taskmanager
  template:
    metadata:
      labels:
        app: flink
        component: taskmanager
    spec:
      containers:
      - name: taskmanager
        image: flink:latest
        workingDir: /opt/flink
        command: ["/bin/bash", "-c", "$FLINK_HOME/bin/taskmanager.sh start; \
          while :;
          do
            if [[ -f $(find log -name '*taskmanager*.log' -print -quit) ]];
              then tail -f -n +1 log/*taskmanager*.log;
            fi;
          done"]
        ports:
        - containerPort: 6122
          name: rpc
        livenessProbe:
          tcpSocket:
            port: 6122
          initialDelaySeconds: 30
          periodSeconds: 60
        volumeMounts:
        - name: flink-config-volume
          mountPath: /opt/flink/conf/
        securityContext:
          runAsUser: 9999  # refers to user _flink_ from official flink image, change if necessary
      volumes:
      - name: flink-config-volume
        configMap:
          name: flink-config
          items:
          - key: flink-conf.yaml
            path: flink-conf.yaml
          - key: log4j.properties
            path: log4j.properties

jobmanager-service.yaml

apiVersion: v1
kind: Service
metadata:
  name: flink-jobmanager
spec:
  type: ClusterIP
  ports:
  - name: rpc
    port: 6123
  - name: blob
    port: 6124
  - name: ui
    port: 8081
  selector:
    app: flink
    component: jobmanager

jobmanager-rest-service.yaml (optional): a Service that exposes the JobManager REST port as a public port on the Kubernetes node.

apiVersion: v1
kind: Service
metadata:
  name: flink-jobmanager-rest
spec:
  type: NodePort
  ports:
  - name: rest
    port: 8081
    targetPort: 8081
  selector:
    app: flink
    component: jobmanager

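Create the resources from the manifest files. Mind the order: the ConfigMap should exist before the Deployments that mount it (otherwise their Pods wait in ContainerCreating), and the flink-jobmanager Service provides the name that jobmanager.rpc.address refers to.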

sudo kubectl create -f flink-configuration-configmap.yaml

sudo kubectl create -f jobmanager-service.yaml

sudo kubectl create -f jobmanager-deployment.yaml

sudo kubectl create -f taskmanager-deployment.yaml
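To keep the creation order above in one place and tear down in the reverse order, the steps can be wrapped in two functions. A minimal sketch; `flink_up`, `flink_down`, `FLINK_MANIFESTS` and the `KUBECTL` override (default `kubectl`, e.g. `KUBECTL="sudo kubectl"` to match this post) are all hypothetical names:

```bash
#!/usr/bin/env bash
# Manifests in creation order: config first, then the Service, then the Deployments.
FLINK_MANIFESTS=(
  flink-configuration-configmap.yaml
  jobmanager-service.yaml
  jobmanager-deployment.yaml
  taskmanager-deployment.yaml
)

# flink_up: create the resources in order, stopping at the first failure.
# ${KUBECTL:-kubectl} is left unquoted so "sudo kubectl" splits into two words.
flink_up() {
  local f
  for f in "${FLINK_MANIFESTS[@]}"; do
    ${KUBECTL:-kubectl} create -f "$f" || return 1
  done
}

# flink_down: delete the resources in reverse creation order.
flink_down() {
  local i
  for (( i = ${#FLINK_MANIFESTS[@]} - 1; i >= 0; i-- )); do
    ${KUBECTL:-kubectl} delete -f "${FLINK_MANIFESTS[i]}"
  done
}
```

For example `KUBECTL="sudo kubectl" flink_up`; `flink_down` is the counterpart of the delete commands at the end of this example, running them in reverse creation order.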

Check the ConfigMap

lin@lin-VirtualBox:~/K8S$ sudo kubectl get configmap

NAME DATA AGE

flink-config 2 4h28m

Check the Pods

lin@lin-VirtualBox:~/K8S$ sudo kubectl get pods

NAME READY STATUS RESTARTS AGE

flink-jobmanager-574676d5d5-g75gh 1/1 Running 0 5m24s

flink-taskmanager-5bdb4857bc-vvn2j 1/1 Running 0 5m23s

flink-taskmanager-5bdb4857bc-wn5c2 1/1 Running 0 5m23s

hello-minikube-7df785b6bb-j9g6g 1/1 Running 0 55m

Check the Deployments

lin@lin-VirtualBox:~/K8S$ sudo kubectl get deployment

NAME READY UP-TO-DATE AVAILABLE AGE

flink-jobmanager 1/1 1 1 4h28m

flink-taskmanager 1/2 2 1 4h28m

hello-minikube 1/1 1 1 5h18m

Check the Services

lin@lin-VirtualBox:~/K8S$ sudo kubectl get service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

flink-jobmanager ClusterIP 10.96.132.16 <none> 6123/TCP,6124/TCP,8081/TCP 4h28m

hello-minikube NodePort 10.104.137.240 <none> 8080:30041/TCP 5h18m

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 5h25m
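In the PORT(S) column a NodePort Service appears as `port:nodePort/protocol` (`8080:30041/TCP` for hello-minikube above), while a ClusterIP Service shows only `port/protocol`. The node port is what clients outside the cluster combine with the node's IP. A minimal sketch that extracts node ports from that column; `node_ports` is a hypothetical helper, and `kubectl get svc hello-minikube -o jsonpath='{.spec.ports[0].nodePort}'` reads the same value directly from the API:

```bash
#!/usr/bin/env bash
# node_ports PORTS: given a PORT(S) value such as "8080:30041/TCP" or
# "6123/TCP,6124/TCP", print one node port per line (nothing for ClusterIP-only ports).
node_ports() {
  local entry
  local -a entries
  IFS=, read -ra entries <<<"$1"
  for entry in "${entries[@]}"; do
    entry=${entry%/*}                        # drop the "/TCP" suffix
    [[ $entry == *:* ]] && echo "${entry#*:}"  # keep what follows "port:"
  done
  return 0
}
```

For example `curl http://$(sudo minikube ip):$(node_ports '8080:30041/TCP')/` reaches the echoserver through its NodePort.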

Ways to access the Flink UI and submit Flink jobs

https://ci.apache.org/projects/flink/flink-docs-release-1.10/ops/deployment/kubernetes.html#deploy-flink-session-cluster-on-kubernetes

- Via kubectl proxy

Command

kubectl proxy

UI URL

http://localhost:8001/api/v1/namespaces/default/services/flink-jobmanager:ui/proxy

(The Flink documentation does not describe how to submit a job this way.)

- Via a NodePort Service

Command

sudo kubectl create -f jobmanager-rest-service.yaml

sudo kubectl get svc flink-jobmanager-rest

lin@lin-VirtualBox:~/K8S$ sudo kubectl get svc flink-jobmanager-rest

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

flink-jobmanager-rest NodePort 10.96.150.145 <none> 8081:32598/TCP 12s

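UI URL (this is the Service's ClusterIP, reachable from the host itself with the none driver; from other machines use the node IP with the NodePort, 32598 here)

http://10.96.150.145:8081

Submit a job (from a local Flink distribution directory)

./bin/flink run -m 10.96.150.145:8081 ./examples/streaming/WordCount.jar

The submitted job appears in the UI.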

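- Via kubectl port-forward

Install socat on the host (the kubelet's Docker integration uses it for port forwarding)

sudo apt-get install socat

Command (replace the Pod name with the JobManager Pod shown by kubectl get pods)

sudo kubectl port-forward flink-jobmanager-574676d5d5-xd9kx 8081:8081

UI URL

http://localhost:8081

Submit a job

./bin/flink run -m localhost:8081 ./examples/streaming/WordCount.jar

The submitted job appears in the UI.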

Delete Flink

sudo kubectl delete -f jobmanager-deployment.yaml

sudo kubectl delete -f taskmanager-deployment.yaml

sudo kubectl delete -f jobmanager-service.yaml

sudo kubectl delete -f flink-configuration-configmap.yaml
