[Artificial Intelligence] Convolutional Neural Networks

Classifying discrete data with CNNs (using image classification as the example)

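Receptive field

Receptive field: for each pixel of an output feature map in a convolutional neural network, the size of the region of the original input image that it maps to.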

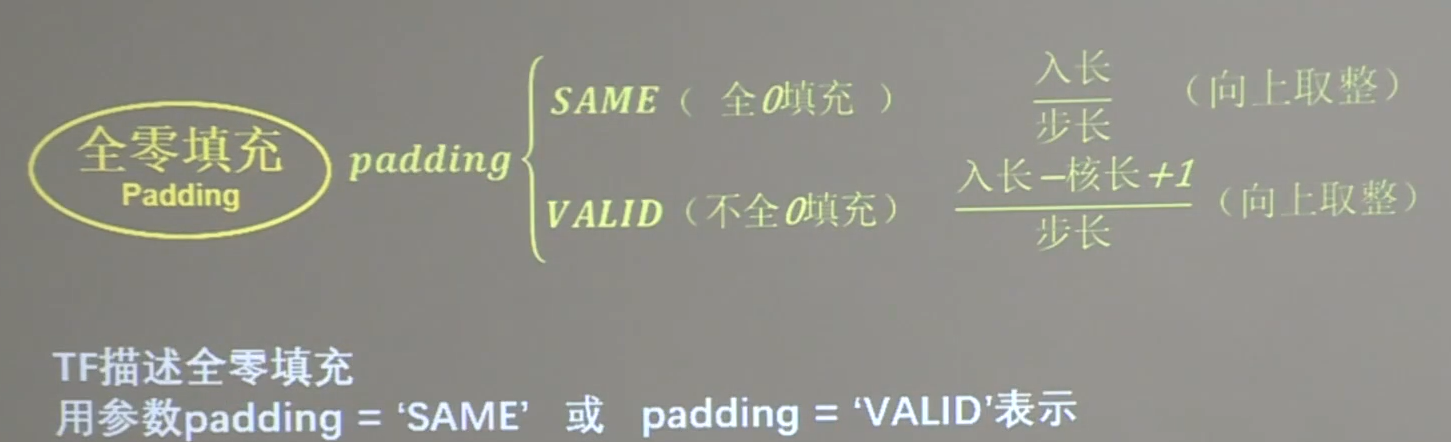
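The receptive field of stacked layers can be computed layer by layer: each layer widens it by (kernel size − 1) times the product of the strides of the layers before it. Below is a minimal sketch with a hypothetical helper `receptive_field`. It shows that two stacked 3×3 convolutions with stride 1 see the same 5×5 input region as a single 5×5 convolution.

```python
def receptive_field(layers):
    """layers: list of (kernel_size, stride) pairs, ordered from input to output."""
    rf, jump = 1, 1
    for kernel, stride in layers:
        rf += (kernel - 1) * jump  # this layer widens the field by (k - 1) input-pixel steps
        jump *= stride             # distance, in input pixels, between neighbouring outputs
    return rf


print(receptive_field([(5, 1)]))          # 5: one 5x5 convolution
print(receptive_field([(3, 1), (3, 1)]))  # 5: two stacked 3x3 convolutions
print(receptive_field([(3, 1)] * 3))      # 7: three stacked 3x3 convolutions
```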

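Zero padding

Purpose: make the convolution keep the spatial size of the input feature map unchanged (with stride 1).

Formulas for the output feature-map size (per spatial dimension; n = input size, k = kernel size, s = stride):

With zero padding, padding = "SAME": $n_{out} = \left\lceil \dfrac{n}{s} \right\rceil$

Without zero padding, padding = "VALID": $n_{out} = \left\lceil \dfrac{n - k + 1}{s} \right\rceil$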
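TensorFlow computes each spatial output size as ceil(n / s) for "same" padding and ceil((n − k + 1) / s) for "valid" padding, where n is the input size, k the kernel size and s the stride. Here is a small sketch of that arithmetic with a hypothetical helper `conv_output_size`. The same formulas apply to pooling layers.

```python
import math


def conv_output_size(n, kernel, stride=1, padding="valid"):
    """Output length along one spatial dimension of a convolution or pooling layer."""
    if padding == "same":
        # zeros are padded as needed, so only the stride shrinks the feature map
        return math.ceil(n / stride)
    if padding == "valid":
        # no padding: the kernel must fit entirely inside the input
        return math.ceil((n - kernel + 1) / stride)
    raise ValueError("padding must be 'same' or 'valid'")


print(conv_output_size(32, 5, 1, "same"))   # 32
print(conv_output_size(32, 5, 1, "valid"))  # 28
print(conv_output_size(5, 3, 2, "same"))    # 3
print(conv_output_size(5, 3, 2, "valid"))   # 2
```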

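Describing a convolutional layer in TF

```python
tf.keras.layers.Conv2D(
    filters=...,        # number of convolution kernels (= number of output channels)
    kernel_size=...,    # an int for a square kernel, or (kernel_h, kernel_w)
    strides=...,        # an int if equal in both directions, or (stride_h, stride_w); default 1
    padding="same",     # "same" or "valid"; default "valid" (no zero padding)
    activation="relu",  # "relu", "sigmoid", "tanh", "softmax", etc.; omit if a BN layer follows
    input_shape=...,    # (height, width, channels) of the input feature map; may be omitted
)
```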

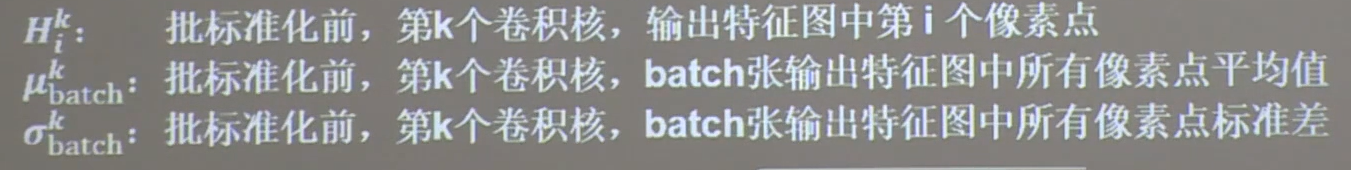
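Here is a quick shape check in TensorFlow. It is a sketch that assumes TensorFlow 2.x is installed, and the 32×32×3 input mirrors one CIFAR-10 image. It applies the same 5×5 kernel with "same" and "valid" padding, then with stride 2, and counts the layer's parameters.

```python
import tensorflow as tf

x = tf.random.normal((1, 32, 32, 3))  # one 32x32 RGB image: (batch, height, width, channels)

conv_same = tf.keras.layers.Conv2D(filters=6, kernel_size=5, strides=1, padding="same")
conv_valid = tf.keras.layers.Conv2D(filters=6, kernel_size=5, strides=1, padding="valid")
conv_stride2 = tf.keras.layers.Conv2D(filters=6, kernel_size=(5, 5), strides=2, padding="valid")

y_same = conv_same(x)
y_valid = conv_valid(x)
y_stride2 = conv_stride2(x)

print(y_same.shape)     # (1, 32, 32, 6): ceil(32 / 1) = 32
print(y_valid.shape)    # (1, 28, 28, 6): ceil((32 - 5 + 1) / 1) = 28
print(y_stride2.shape)  # (1, 14, 14, 6): ceil((32 - 5 + 1) / 2) = 14
print(conv_same.count_params())  # 456 = 5*5*3*6 weights + 6 biases
```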

Batch normalization (BN)

- Standardization: transform the data so that it has zero mean and unit standard deviation

- Batch normalization: apply standardization to a small batch of data

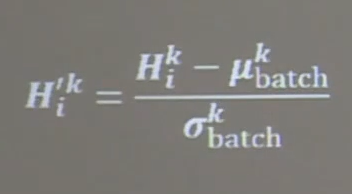

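After batch normalization, the i-th pixel of the output feature map of the k-th convolution kernel is

$$H_i'^{k} = \frac{H_i^{k} - \mu_{batch}^{k}}{\sigma_{batch}^{k}}$$

where $H_i'^{k}$ is the normalized i-th pixel of the k-th kernel's output feature map and $H_i^{k}$ is the same pixel before normalization. $\mu_{batch}^{k}$ and $\sigma_{batch}^{k}$ are the mean and standard deviation of all values in the k-th kernel's output feature maps across the whole batch:

$$\mu_{batch}^{k} = \frac{1}{m}\sum_{i=1}^{m} H_i^{k}, \qquad \sigma_{batch}^{k} = \sqrt{\delta + \frac{1}{m}\sum_{i=1}^{m}\left(H_i^{k} - \mu_{batch}^{k}\right)^2}$$

Here m is the number of such values and δ is a small constant that prevents division by zero. In other words, every pixel has the mean subtracted and is then divided by the standard deviation.

BN introduces two trainable parameters, γ and β, for each convolution kernel. They rescale and shift the normalized values and so control how strongly the normalization is applied:

$$X_i^{k} = \gamma_k H_i'^{k} + \beta_k$$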
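Training-mode batch normalization can be sketched in NumPy as below (a hypothetical helper `batch_norm`, not TensorFlow's implementation). Statistics are taken per kernel (channel) over the batch, height and width axes. A small eps is added to the variance to avoid division by zero; 1e-3 is the default epsilon of `tf.keras.layers.BatchNormalization`.

```python
import numpy as np


def batch_norm(h, gamma, beta, eps=1e-3):
    """Training-mode BN for feature maps shaped (batch, height, width, channels)."""
    # mean and variance of every value produced by kernel k across the whole batch
    mu = h.mean(axis=(0, 1, 2))
    var = h.var(axis=(0, 1, 2))
    h_hat = (h - mu) / np.sqrt(var + eps)  # subtract the mean, divide by the standard deviation
    return gamma * h_hat + beta            # trainable scale (gamma) and shift (beta) per kernel


rng = np.random.default_rng(0)
h = rng.normal(loc=5.0, scale=3.0, size=(32, 8, 8, 6))  # 32 feature maps from 6 kernels

out = batch_norm(h, gamma=np.ones(6), beta=np.zeros(6))
print(out.mean(axis=(0, 1, 2)).round(4))  # about 0 for every kernel
print(out.std(axis=(0, 1, 2)).round(4))   # about 1 for every kernel

scaled = batch_norm(h, gamma=np.full(6, 2.0), beta=np.full(6, 1.0))
print(scaled.mean(axis=(0, 1, 2)).round(4))  # about 1 (= beta) for every kernel
```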

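Pooling

Pooling reduces the amount of feature data.

Pooling methods

- Max pooling keeps the strongest response in each window, which helps extract image texture

- Average pooling keeps the mean of each window, which preserves background features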
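Here are the two pooling methods sketched in NumPy for a single 2-D feature map. The hypothetical helper `pool2d` uses a 2×2 window, stride 2 and no zero padding. Max pooling keeps each window's largest value, while average pooling keeps its mean.

```python
import numpy as np


def pool2d(x, size=2, stride=2, mode="max"):
    """Pool a 2-D feature map with 'valid' padding (no zero padding)."""
    out_h = (x.shape[0] - size) // stride + 1
    out_w = (x.shape[1] - size) // stride + 1
    reduce = np.max if mode == "max" else np.mean
    out = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            window = x[i * stride:i * stride + size, j * stride:j * stride + size]
            out[i, j] = reduce(window)
    return out


x = np.arange(16, dtype=float).reshape(4, 4)  # values 0..15
print(pool2d(x, mode="max"))      # values [[5, 7], [13, 15]]
print(pool2d(x, mode="average"))  # values [[2.5, 4.5], [10.5, 12.5]]
```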

Describing pooling in TF:

```python
tf.keras.layers.MaxPool2D(
    pool_size=...,    # an int for a square window, or (pool_h, pool_w)
    strides=...,      # an int, or (stride_h, stride_w); defaults to pool_size
    padding="valid",  # "valid" or "same"; default "valid" (no zero padding)
)

tf.keras.layers.AveragePooling2D(
    pool_size=...,    # an int for a square window, or (pool_h, pool_w)
    strides=...,      # an int, or (stride_h, stride_w); defaults to pool_size
    padding="valid",  # "valid" or "same"; default "valid" (no zero padding)
)
```
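Here is a short TensorFlow check of the pooling defaults, assuming TensorFlow 2.x. With `strides` omitted, the stride equals `pool_size`. "valid" drops the incomplete window at the border of a 5×5 map, while "same" pads it. Pooling never changes the number of channels.

```python
import tensorflow as tf

x = tf.random.normal((1, 5, 5, 3))  # one 5x5 feature map with 3 channels

# strides is omitted, so it defaults to pool_size (2 here)
max_valid = tf.keras.layers.MaxPool2D(pool_size=2, padding="valid")
avg_same = tf.keras.layers.AveragePooling2D(pool_size=2, padding="same")

y_valid = max_valid(x)
y_same = avg_same(x)
print(y_valid.shape)  # (1, 2, 2, 3): ceil((5 - 2 + 1) / 2) = 2
print(y_same.shape)   # (1, 3, 3, 3): ceil(5 / 2) = 3
```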

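Dropout

Purpose: mitigate overfitting in neural networks.

During training, a portion of the neurons is temporarily dropped from the network with a given probability. When the network is used for inference, the dropped neurons are reconnected.

Dropout in TF: tf.keras.layers.Dropout(rate), where rate is the probability of dropping a neuron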
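The training/inference behaviour can be sketched in NumPy with a hypothetical helper `dropout`. During training, Keras's `Dropout` layer also scales the surviving values by 1/(1 − rate) ("inverted" dropout), so the expected activation stays the same and nothing has to change at inference time.

```python
import numpy as np


def dropout(x, rate, training, rng):
    """Inverted dropout: drop units with probability `rate`, but only while training."""
    if not training:
        return x                                  # inference: every neuron is used
    keep = rng.random(x.shape) >= rate            # keep each unit with probability 1 - rate
    return np.where(keep, x / (1.0 - rate), 0.0)  # rescale survivors to preserve the expected value


rng = np.random.default_rng(0)
x = np.ones(10000)
train_out = dropout(x, 0.2, training=True, rng=rng)
infer_out = dropout(x, 0.2, training=False, rng=rng)

print((train_out == 0).mean())  # close to 0.2: about 20% of the neurons are dropped
print(train_out.mean())         # close to 1.0: the expected value is preserved
print(np.array_equal(infer_out, x))  # True
```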

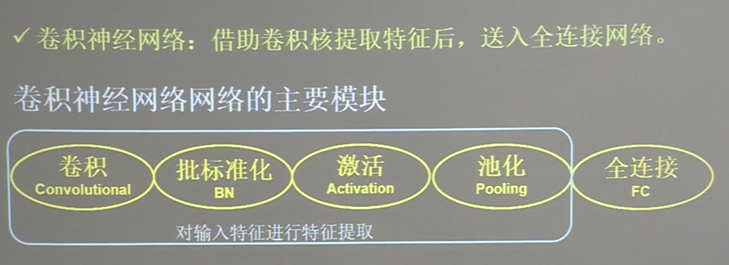

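Convolutional neural networks

Main modules of a convolutional neural network:

[Convolution -> Batch normalization (BN) -> Activation -> Pooling ->] -> Fully connected (FC)

The bracketed stage can be repeated several times before the fully connected layers.

What the convolutional part does: it is a feature extractor, summarized as CBAPD (Convolution, Batch normalization, Activation, Pooling, Dropout).

```python
import tensorflow as tf
from tensorflow.keras.layers import Activation, BatchNormalization, Conv2D, Dropout, MaxPool2D

model = tf.keras.models.Sequential([
    Conv2D(filters=6, kernel_size=(5, 5), padding='same'),   # convolution layer C
    BatchNormalization(),                                     # batch normalization layer B
    Activation('relu'),                                       # activation layer A
    MaxPool2D(pool_size=(2, 2), strides=2, padding='same'),  # pooling layer P
    Dropout(0.2),                                             # dropout layer D
])
```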

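CIFAR-10 dataset

Provides 50,000 labeled 32 × 32 color images in ten classes for training.

Provides 10,000 labeled 32 × 32 color images in ten classes for testing.

(Each image holds red, green, and blue channel data for 32 rows × 32 columns of pixels.)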

Importing the CIFAR-10 dataset:

```python
import tensorflow as tf

cifar10 = tf.keras.datasets.cifar10
(x_train, y_train), (x_test, y_test) = cifar10.load_data()
```

Full code for loading and inspecting the data:

```python
import tensorflow as tf
from matplotlib import pyplot as plt
import numpy as np

np.set_printoptions(threshold=np.inf)

cifar10 = tf.keras.datasets.cifar10
(x_train, y_train), (x_test, y_test) = cifar10.load_data()

# Visualize the first input feature of the training set
plt.imshow(x_train[0])  # draw the image
plt.show()

# # Print the first input feature of the training set
# print("x_train[0]:\n", x_train[0])
# # Print the first label of the training set
# print("y_train[0]:\n", y_train[0])
# # Print the shape of the training-set input features
# print("x_train.shape:\n", x_train.shape)
# # Print the shape of the training-set labels
# print("y_train.shape:\n", y_train.shape)
# # Print the shape of the test-set input features
# print("x_test.shape:\n", x_test.shape)
# # Print the shape of the test-set labels
# print("y_test.shape:\n", y_test.shape)

## To see these results, uncomment the block above
```

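Building the convolutional neural network

Build a network with one convolutional layer and two fully connected layers.

The input passes through 6 convolution kernels of size 5×5 (5*5 conv, filters=6), then a 2×2 pooling kernel with stride 2 (2*2 pool, strides=2), then a fully connected layer of 128 neurons (Dense 128).

Because CIFAR-10 has ten classes, a final fully connected layer of ten neurons follows.

Layer-by-layer analysis using the CBAPD scheme:

C (kernels: 6 kernels of 5 × 5; stride: 1; padding: same)

B (Yes) # batch normalization is used

A (relu)

P (max; kernel: 2 * 2; stride: 2; padding: same)

D (0.2) # randomly deactivate 20% of the neurons during training

Flatten

Dense (neurons: 128; activation: relu; Dropout: 0.2)

Dense (neurons: 10; activation: softmax) # softmax makes the outputs a probability distribution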
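To see what the flatten layer receives, we can trace the feature-map shape through the CBAPD analysis in plain Python for one 32×32×3 CIFAR-10 image. With "same" padding, each output size is ceil(n / stride). BN, ReLU and Dropout act element-wise, so they leave the shape unchanged.

```python
import math

# Input: one 32x32 RGB CIFAR-10 image
h, w, c = 32, 32, 3

# C: 6 kernels of 5x5, stride 1, padding="same" -> out = ceil(n / stride)
h, w, c = math.ceil(h / 1), math.ceil(w / 1), 6
# B (BN) and A (ReLU) are element-wise, so the shape is unchanged

# P: 2x2 max pooling, stride 2, padding="same" -> out = ceil(n / stride)
h, w = math.ceil(h / 2), math.ceil(w / 2)
# D (Dropout) only zeroes values, so the shape is unchanged

flat = h * w * c  # Flatten
shapes = [(h, w, c), flat, 128, 10]  # after P, after Flatten, Dense(128), Dense(10)
print(shapes)  # [(16, 16, 6), 1536, 128, 10]
```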

Implementation:

```python
from tensorflow.keras import Model
from tensorflow.keras.layers import Conv2D, BatchNormalization, Activation, MaxPool2D, Dropout, Flatten, Dense


class Baseline(Model):
    def __init__(self):
        super(Baseline, self).__init__()
        self.c1 = Conv2D(filters=6, kernel_size=(5, 5), padding='same')  # convolution layer
        self.b1 = BatchNormalization()  # BN layer
        self.a1 = Activation('relu')  # activation layer
        self.p1 = MaxPool2D(pool_size=(2, 2), strides=2, padding='same')  # pooling layer
        self.d1 = Dropout(0.2)  # dropout layer

        self.flatten = Flatten()  # flatten
        self.f1 = Dense(128, activation='relu')
        self.d2 = Dropout(0.2)  # deactivate 20% of the neurons
        self.f2 = Dense(10, activation='softmax')

    def call(self, x):
        x = self.c1(x)
        x = self.b1(x)
        x = self.a1(x)
        x = self.p1(x)
        x = self.d1(x)

        x = self.flatten(x)
        x = self.f1(x)
        x = self.d2(x)
        y = self.f2(x)
        return y
```

Full code:

```python
import tensorflow as tf
import os
import numpy as np
from matplotlib import pyplot as plt
from tensorflow.keras.layers import Conv2D, BatchNormalization, Activation, MaxPool2D, Dropout, Flatten, Dense
from tensorflow.keras import Model

np.set_printoptions(threshold=np.inf)

cifar10 = tf.keras.datasets.cifar10
(x_train, y_train), (x_test, y_test) = cifar10.load_data()
x_train, x_test = x_train / 255.0, x_test / 255.0


class Baseline(Model):
    def __init__(self):
        super(Baseline, self).__init__()
        self.c1 = Conv2D(filters=6, kernel_size=(5, 5), padding='same')  # convolution layer
        self.b1 = BatchNormalization()  # BN layer
        self.a1 = Activation('relu')  # activation layer
        self.p1 = MaxPool2D(pool_size=(2, 2), strides=2, padding='same')  # pooling layer
        self.d1 = Dropout(0.2)  # dropout layer

        self.flatten = Flatten()
        self.f1 = Dense(128, activation='relu')
        self.d2 = Dropout(0.2)
        self.f2 = Dense(10, activation='softmax')

    def call(self, x):
        x = self.c1(x)
        x = self.b1(x)
        x = self.a1(x)
        x = self.p1(x)
        x = self.d1(x)

        x = self.flatten(x)
        x = self.f1(x)
        x = self.d2(x)
        y = self.f2(x)
        return y


model = Baseline()

model.compile(optimizer='adam',
              loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False),
              metrics=['sparse_categorical_accuracy'])

checkpoint_save_path = "./checkpoint/Baseline.ckpt"
if os.path.exists(checkpoint_save_path + '.index'):
    print('-------------load the model-----------------')
    model.load_weights(checkpoint_save_path)

cp_callback = tf.keras.callbacks.ModelCheckpoint(filepath=checkpoint_save_path,
                                                 save_weights_only=True,
                                                 save_best_only=True)

history = model.fit(x_train, y_train, batch_size=32, epochs=5, validation_data=(x_test, y_test), validation_freq=1,
                    callbacks=[cp_callback])
model.summary()

# print(model.trainable_variables)
with open('./weights.txt', 'w') as file:  # save the name, shape and values of every trainable variable
    for v in model.trainable_variables:
        file.write(str(v.name) + '\n')
        file.write(str(v.shape) + '\n')
        file.write(str(v.numpy()) + '\n')

###############################################    show   ###############################################

# Plot the accuracy and loss curves of the training and validation sets
acc = history.history['sparse_categorical_accuracy']
val_acc = history.history['val_sparse_categorical_accuracy']
loss = history.history['loss']
val_loss = history.history['val_loss']

plt.subplot(1, 2, 1)
plt.plot(acc, label='Training Accuracy')
plt.plot(val_acc, label='Validation Accuracy')
plt.title('Training and Validation Accuracy')
plt.legend()

plt.subplot(1, 2, 2)
plt.plot(loss, label='Training Loss')
plt.plot(val_loss, label='Validation Loss')
plt.title('Training and Validation Loss')
plt.legend()
plt.show()
```

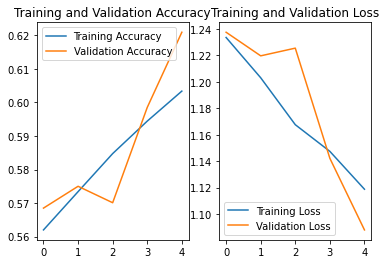

```
-------------load the model-----------------
Epoch 1/5
1563/1563 [==============================] - 26s 16ms/step - loss: 1.2335 - sparse_categorical_accuracy: 0.5620 - val_loss: 1.2375 - val_sparse_categorical_accuracy: 0.5685
Epoch 2/5
1563/1563 [==============================] - 24s 15ms/step - loss: 1.2030 - sparse_categorical_accuracy: 0.5734 - val_loss: 1.2196 - val_sparse_categorical_accuracy: 0.5750
Epoch 3/5
1563/1563 [==============================] - 24s 15ms/step - loss: 1.1676 - sparse_categorical_accuracy: 0.5847 - val_loss: 1.2254 - val_sparse_categorical_accuracy: 0.5701
Epoch 4/5
1563/1563 [==============================] - 23s 15ms/step - loss: 1.1474 - sparse_categorical_accuracy: 0.5944 - val_loss: 1.1421 - val_sparse_categorical_accuracy: 0.5985
Epoch 5/5
1563/1563 [==============================] - 24s 15ms/step - loss: 1.1188 - sparse_categorical_accuracy: 0.6034 - val_loss: 1.0880 - val_sparse_categorical_accuracy: 0.6209
Model: "baseline_1"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
conv2d_8 (Conv2D)            multiple                  456
_________________________________________________________________
batch_normalization_3 (Batch multiple                  24
_________________________________________________________________
activation_3 (Activation)    multiple                  0
_________________________________________________________________
max_pooling2d_6 (MaxPooling2 multiple                  0
_________________________________________________________________
dropout_4 (Dropout)          multiple                  0
_________________________________________________________________
flatten_3 (Flatten)          multiple                  0
_________________________________________________________________
dense_8 (Dense)              multiple                  196736
_________________________________________________________________
dropout_5 (Dropout)          multiple                  0
_________________________________________________________________
dense_9 (Dense)              multiple                  1290
=================================================================
Total params: 198,506
Trainable params: 198,494
Non-trainable params: 12
_________________________________________________________________
```
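The parameter counts in the Baseline summary can be reproduced by hand. The arithmetic below assumes the 16×16×6 feature map that reaches the flatten layer. BN stores four values per channel: γ and β are trainable, while the moving mean and moving variance are the non-trainable parameters.

```python
# Parameter counts for the Baseline model (weights + biases)
conv = 5 * 5 * 3 * 6 + 6          # Conv2D: kernel_h * kernel_w * in_channels * filters + filters
bn_trainable = 2 * 6              # BatchNormalization: gamma and beta per channel
bn_non_trainable = 2 * 6          # moving mean and moving variance per channel
dense1 = 16 * 16 * 6 * 128 + 128  # Flatten (16*16*6 = 1536 values) -> Dense(128)
dense2 = 128 * 10 + 10            # Dense(128) -> Dense(10)

total = conv + bn_trainable + bn_non_trainable + dense1 + dense2
trainable = total - bn_non_trainable
print(conv, bn_trainable + bn_non_trainable, dense1, dense2)  # 456 24 196736 1290
print(total, trainable, bn_non_trainable)                     # 198506 198494 12
```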

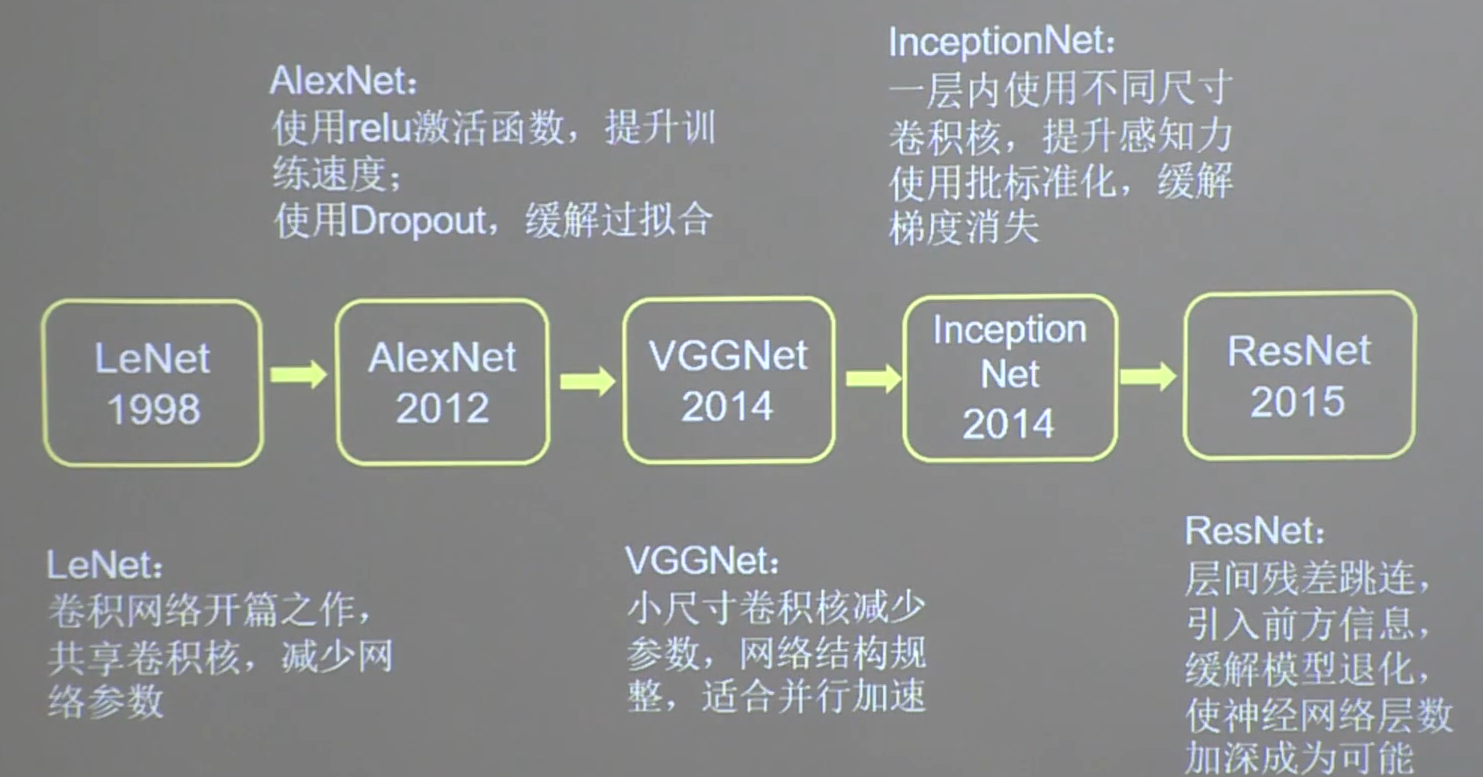

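Classic convolutional networks

The following sections use the six-step method (import, load the training and test data, build the model, compile, fit, summary) to implement the LeNet, AlexNet, VGGNet, InceptionNet and ResNet convolutional neural networks.

LeNet

Proposed by Yann LeCun in 1998, it was the pioneering work on convolutional networks.

Key idea: sharing convolution kernels reduces the number of network parameters.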
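To see how much weight sharing saves, compare LeNet's first convolution (6 kernels of 5×5, "valid" padding, 32×32×3 input → 28×28×6 output) with a hypothetical fully connected layer that produces the same 28×28×6 outputs from the flattened image. The arithmetic is in plain Python.

```python
# Conv2D(6 kernels of 5x5, padding="valid") on a 32x32x3 input gives a 28x28x6 output.
# Every output position reuses the same 6 kernels, so the count does not depend on image size.
conv_params = 5 * 5 * 3 * 6 + 6

# A fully connected layer producing the same 28x28x6 outputs from the flattened input
# needs a separate weight for every (input value, output value) pair.
inputs = 32 * 32 * 3
outputs = 28 * 28 * 6
dense_params = inputs * outputs + outputs

print(conv_params)                  # 456
print(dense_params)                 # 14455392
print(dense_params // conv_params)  # 31700
```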

```python
import tensorflow as tf
import os
import numpy as np
from matplotlib import pyplot as plt
from tensorflow.keras.layers import Conv2D, BatchNormalization, Activation, MaxPool2D, Dropout, Flatten, Dense
from tensorflow.keras import Model

np.set_printoptions(threshold=np.inf)

cifar10 = tf.keras.datasets.cifar10
(x_train, y_train), (x_test, y_test) = cifar10.load_data()
x_train, x_test = x_train / 255.0, x_test / 255.0


class LeNet5(Model):
    def __init__(self):
        super(LeNet5, self).__init__()
        self.c1 = Conv2D(filters=6, kernel_size=(5, 5),
                         activation='sigmoid')
        self.p1 = MaxPool2D(pool_size=(2, 2), strides=2)

        self.c2 = Conv2D(filters=16, kernel_size=(5, 5),
                         activation='sigmoid')
        self.p2 = MaxPool2D(pool_size=(2, 2), strides=2)

        self.flatten = Flatten()
        self.f1 = Dense(120, activation='sigmoid')
        self.f2 = Dense(84, activation='sigmoid')
        self.f3 = Dense(10, activation='softmax')

    def call(self, x):
        x = self.c1(x)
        x = self.p1(x)

        x = self.c2(x)
        x = self.p2(x)

        x = self.flatten(x)
        x = self.f1(x)
        x = self.f2(x)
        y = self.f3(x)
        return y


model = LeNet5()

model.compile(optimizer='adam',
              loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False),
              metrics=['sparse_categorical_accuracy'])

checkpoint_save_path = "./checkpoint/LeNet5.ckpt"
if os.path.exists(checkpoint_save_path + '.index'):
    print('-------------load the model-----------------')
    model.load_weights(checkpoint_save_path)

cp_callback = tf.keras.callbacks.ModelCheckpoint(filepath=checkpoint_save_path,
                                                 save_weights_only=True,
                                                 save_best_only=True)

history = model.fit(x_train, y_train, batch_size=32, epochs=5, validation_data=(x_test, y_test), validation_freq=1,
                    callbacks=[cp_callback])
model.summary()

# print(model.trainable_variables)
with open('./weights.txt', 'w') as file:  # save the name, shape and values of every trainable variable
    for v in model.trainable_variables:
        file.write(str(v.name) + '\n')
        file.write(str(v.shape) + '\n')
        file.write(str(v.numpy()) + '\n')

###############################################    show   ###############################################

# Plot the accuracy and loss curves of the training and validation sets
acc = history.history['sparse_categorical_accuracy']
val_acc = history.history['val_sparse_categorical_accuracy']
loss = history.history['loss']
val_loss = history.history['val_loss']

plt.subplot(1, 2, 1)
plt.plot(acc, label='Training Accuracy')
plt.plot(val_acc, label='Validation Accuracy')
plt.title('Training and Validation Accuracy')
plt.legend()

plt.subplot(1, 2, 2)
plt.plot(loss, label='Training Loss')
plt.plot(val_loss, label='Validation Loss')
plt.title('Training and Validation Loss')
plt.legend()
plt.show()
```

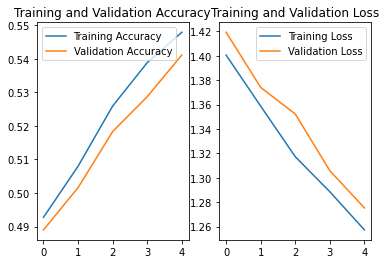

```
-------------load the model-----------------
Epoch 1/5
1563/1563 [==============================] - 14s 9ms/step - loss: 1.4006 - sparse_categorical_accuracy: 0.4928 - val_loss: 1.4194 - val_sparse_categorical_accuracy: 0.4890
Epoch 2/5
1563/1563 [==============================] - 14s 9ms/step - loss: 1.3587 - sparse_categorical_accuracy: 0.5080 - val_loss: 1.3741 - val_sparse_categorical_accuracy: 0.5016
Epoch 3/5
1563/1563 [==============================] - 14s 9ms/step - loss: 1.3173 - sparse_categorical_accuracy: 0.5259 - val_loss: 1.3523 - val_sparse_categorical_accuracy: 0.5183
Epoch 4/5
1563/1563 [==============================] - 14s 9ms/step - loss: 1.2885 - sparse_categorical_accuracy: 0.5388 - val_loss: 1.3057 - val_sparse_categorical_accuracy: 0.5287
Epoch 5/5
1563/1563 [==============================] - 15s 10ms/step - loss: 1.2573 - sparse_categorical_accuracy: 0.5479 - val_loss: 1.2753 - val_sparse_categorical_accuracy: 0.5411
Model: "le_net5_1"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
conv2d_9 (Conv2D)            multiple                  456
_________________________________________________________________
max_pooling2d_7 (MaxPooling2 multiple                  0
_________________________________________________________________
conv2d_10 (Conv2D)           multiple                  2416
_________________________________________________________________
max_pooling2d_8 (MaxPooling2 multiple                  0
_________________________________________________________________
flatten_4 (Flatten)          multiple                  0
_________________________________________________________________
dense_10 (Dense)             multiple                  48120
_________________________________________________________________
dense_11 (Dense)             multiple                  10164
_________________________________________________________________
dense_12 (Dense)             multiple                  850
=================================================================
Total params: 62,006
Trainable params: 62,006
Non-trainable params: 0
_________________________________________________________________
```

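AlexNet

AlexNet appeared in 2012 and won that year's ImageNet competition with a top-5 error rate of 16.4%.

AlexNet used the ReLU activation function, which sped up training, and used Dropout to mitigate overfitting. The code below is an 8-layer AlexNet-style network (AlexNet8) sized for 32×32 CIFAR-10 images.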
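One reason ReLU speeds up training compared with sigmoid is visible in their derivatives. This small NumPy illustration is not from the original post. The sigmoid's derivative never exceeds 0.25, so backpropagating through many sigmoid layers multiplies the gradient by at most 0.25 per layer. ReLU's derivative is exactly 1 for every active (positive) unit.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def sigmoid_grad(x):
    s = sigmoid(x)
    return s * (1.0 - s)          # largest at x = 0, where it equals 0.25


def relu_grad(x):
    return (x > 0).astype(float)  # 1 for every positive input, 0 otherwise


x = np.linspace(-6.0, 6.0, 1201)
print("max sigmoid'(x):", sigmoid_grad(x).max())              # about 0.25, reached at x = 0
print("relu'(x) for x > 0:", np.unique(relu_grad(x[x > 0])))  # [1.]

# Backpropagation multiplies one activation derivative per layer,
# so across 10 layers the sigmoid factor is at most 0.25 ** 10
depth = 10
print("sigmoid bound:", 0.25 ** depth, "relu (active units):", 1.0 ** depth)
```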

```python
import tensorflow as tf
import os
import numpy as np
from matplotlib import pyplot as plt
from tensorflow.keras.layers import Conv2D, BatchNormalization, Activation, MaxPool2D, Dropout, Flatten, Dense
from tensorflow.keras import Model

np.set_printoptions(threshold=np.inf)

cifar10 = tf.keras.datasets.cifar10
(x_train, y_train), (x_test, y_test) = cifar10.load_data()
x_train, x_test = x_train / 255.0, x_test / 255.0


class AlexNet8(Model):
    def __init__(self):
        super(AlexNet8, self).__init__()
        self.c1 = Conv2D(filters=96, kernel_size=(3, 3))
        self.b1 = BatchNormalization()
        self.a1 = Activation('relu')
        self.p1 = MaxPool2D(pool_size=(3, 3), strides=2)

        self.c2 = Conv2D(filters=256, kernel_size=(3, 3))
        self.b2 = BatchNormalization()
        self.a2 = Activation('relu')
        self.p2 = MaxPool2D(pool_size=(3, 3), strides=2)

        self.c3 = Conv2D(filters=384, kernel_size=(3, 3), padding='same',
                         activation='relu')

        self.c4 = Conv2D(filters=384, kernel_size=(3, 3), padding='same',
                         activation='relu')

        self.c5 = Conv2D(filters=256, kernel_size=(3, 3), padding='same',
                         activation='relu')
        self.p3 = MaxPool2D(pool_size=(3, 3), strides=2)

        self.flatten = Flatten()
        self.f1 = Dense(2048, activation='relu')
        self.d1 = Dropout(0.5)
        self.f2 = Dense(2048, activation='relu')
        self.d2 = Dropout(0.5)
        self.f3 = Dense(10, activation='softmax')

    def call(self, x):
        x = self.c1(x)
        x = self.b1(x)
        x = self.a1(x)
        x = self.p1(x)

        x = self.c2(x)
        x = self.b2(x)
        x = self.a2(x)
        x = self.p2(x)

        x = self.c3(x)

        x = self.c4(x)

        x = self.c5(x)
        x = self.p3(x)

        x = self.flatten(x)
        x = self.f1(x)
        x = self.d1(x)
        x = self.f2(x)
        x = self.d2(x)
        y = self.f3(x)
        return y


model = AlexNet8()

model.compile(optimizer='adam',
              loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False),
              metrics=['sparse_categorical_accuracy'])

checkpoint_save_path = "./checkpoint/AlexNet8.ckpt"
if os.path.exists(checkpoint_save_path + '.index'):
    print('-------------load the model-----------------')
    model.load_weights(checkpoint_save_path)

cp_callback = tf.keras.callbacks.ModelCheckpoint(filepath=checkpoint_save_path,
                                                 save_weights_only=True,
                                                 save_best_only=True)

history = model.fit(x_train, y_train, batch_size=32, epochs=5, validation_data=(x_test, y_test), validation_freq=1,
                    callbacks=[cp_callback])
model.summary()

# print(model.trainable_variables)
with open('./weights.txt', 'w') as file:  # save the name, shape and values of every trainable variable
    for v in model.trainable_variables:
        file.write(str(v.name) + '\n')
        file.write(str(v.shape) + '\n')
        file.write(str(v.numpy()) + '\n')

###############################################    show   ###############################################

# Plot the accuracy and loss curves of the training and validation sets
acc = history.history['sparse_categorical_accuracy']
val_acc = history.history['val_sparse_categorical_accuracy']
loss = history.history['loss']
val_loss = history.history['val_loss']

plt.subplot(1, 2, 1)
plt.plot(acc, label='Training Accuracy')
plt.plot(val_acc, label='Validation Accuracy')
plt.title('Training and Validation Accuracy')
plt.legend()

plt.subplot(1, 2, 2)
plt.plot(loss, label='Training Loss')
plt.plot(val_loss, label='Validation Loss')
plt.title('Training and Validation Loss')
plt.legend()
plt.show()
```

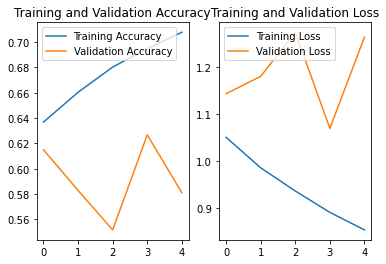

```
-------------load the model-----------------
Epoch 1/5
1563/1563 [==============================] - 356s 228ms/step - loss: 1.0504 - sparse_categorical_accuracy: 0.6369 - val_loss: 1.1432 - val_sparse_categorical_accuracy: 0.6150
Epoch 2/5
1563/1563 [==============================] - 389s 249ms/step - loss: 0.9855 - sparse_categorical_accuracy: 0.6604 - val_loss: 1.1803 - val_sparse_categorical_accuracy: 0.5829
Epoch 3/5
1563/1563 [==============================] - 337s 215ms/step - loss: 0.9361 - sparse_categorical_accuracy: 0.6803 - val_loss: 1.2742 - val_sparse_categorical_accuracy: 0.5517
Epoch 4/5
1563/1563 [==============================] - 325s 208ms/step - loss: 0.8906 - sparse_categorical_accuracy: 0.6949 - val_loss: 1.0693 - val_sparse_categorical_accuracy: 0.6268
Epoch 5/5
1563/1563 [==============================] - 332s 212ms/step - loss: 0.8532 - sparse_categorical_accuracy: 0.7078 - val_loss: 1.2640 - val_sparse_categorical_accuracy: 0.5811
Model: "alex_net8_1"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
conv2d_11 (Conv2D)           multiple                  2688
_________________________________________________________________
batch_normalization_4 (Batch multiple                  384
_________________________________________________________________
activation_4 (Activation)    multiple                  0
_________________________________________________________________
max_pooling2d_9 (MaxPooling2 multiple                  0
_________________________________________________________________
conv2d_12 (Conv2D)           multiple                  221440
_________________________________________________________________
batch_normalization_5 (Batch multiple                  1024
_________________________________________________________________
activation_5 (Activation)    multiple                  0
_________________________________________________________________
max_pooling2d_10 (MaxPooling multiple                  0
_________________________________________________________________
conv2d_13 (Conv2D)           multiple                  885120
_________________________________________________________________
conv2d_14 (Conv2D)           multiple                  1327488
_________________________________________________________________
conv2d_15 (Conv2D)           multiple                  884992
_________________________________________________________________
max_pooling2d_11 (MaxPooling multiple                  0
_________________________________________________________________
flatten_5 (Flatten)          multiple                  0
_________________________________________________________________
dense_13 (Dense)             multiple                  2099200
_________________________________________________________________
dropout_6 (Dropout)          multiple                  0
_________________________________________________________________
dense_14 (Dense)             multiple                  4196352
_________________________________________________________________
dropout_7 (Dropout)          multiple                  0
_________________________________________________________________
dense_15 (Dense)             multiple                  20490
=================================================================
Total params: 9,639,178
Trainable params: 9,638,474
Non-trainable params: 704
_________________________________________________________________
```

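VGGNet

Introduced in 2014, it reduced the top-5 error rate in that year's ImageNet competition to 7.3%, finishing second in the classification task.

It uses small 3×3 convolution kernels throughout, which reduces the number of parameters while improving recognition accuracy.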
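For reference, here is a sketch of a VGG16-style network for 32×32 CIFAR-10 inputs, in the same subclassing style as the other models in this post. It is illustrative rather than the paper's exact configuration. It assumes a BN layer after every convolution, Dropout(0.2) after every pooling stage, and 512-unit fully connected layers; the original ImageNet model had no BN and used 4096-unit layers. The helper `conv_bn_relu` is a hypothetical name introduced here.

```python
import tensorflow as tf
from tensorflow.keras import Model
from tensorflow.keras.layers import (Activation, BatchNormalization, Conv2D,
                                     Dense, Dropout, Flatten, MaxPool2D)


def conv_bn_relu(filters):
    # one 3x3 "same" convolution followed by BN and ReLU (the C, B, A of CBAPD)
    return [Conv2D(filters, kernel_size=(3, 3), padding='same'),
            BatchNormalization(),
            Activation('relu')]


class VGG16(Model):
    def __init__(self, num_classes=10):
        super(VGG16, self).__init__()
        # (filters, number of conv layers) for the five stages: 13 conv layers in total
        stages = [(64, 2), (128, 2), (256, 3), (512, 3), (512, 3)]
        layers = []
        for filters, n_convs in stages:
            for _ in range(n_convs):
                layers += conv_bn_relu(filters)
            # P and D of CBAPD: each stage halves the height and width
            layers += [MaxPool2D(pool_size=(2, 2), strides=2, padding='same'), Dropout(0.2)]
        self.features = tf.keras.Sequential(layers)
        self.flatten = Flatten()
        # three fully connected layers: 13 + 3 = 16 weight layers
        self.f1 = Dense(512, activation='relu')
        self.d1 = Dropout(0.2)
        self.f2 = Dense(512, activation='relu')
        self.d2 = Dropout(0.2)
        self.f3 = Dense(num_classes, activation='softmax')

    def call(self, x, training=False):
        x = self.features(x, training=training)
        x = self.flatten(x)
        x = self.d1(self.f1(x), training=training)
        x = self.d2(self.f2(x), training=training)
        return self.f3(x)


model = VGG16()
probs = model(tf.zeros((2, 32, 32, 3)))  # 32 -> 16 -> 8 -> 4 -> 2 -> 1 after the five stages
print(probs.shape)  # (2, 10)
```

The 13 convolutional and 3 fully connected layers account for the "16" in the name. Two stacked 3×3 convolutions cover a 5×5 receptive field with 2·(3·3·C·C) = 18C² weights, compared with 25C² for a single 5×5 kernel (C input and output channels, biases ignored). The model can be trained with the same compile/fit code as the other networks.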


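InceptionNet

Introduced in 2014, it won that year's ImageNet competition with a top-5 error rate of 6.67%.

It introduced the Inception block, which applies convolution kernels of different sizes in parallel within the same layer and so improves the model's ability to perceive features at different scales.

It uses batch normalization to mitigate vanishing gradients. BN was added in later Inception versions; the original 2014 GoogLeNet did not use it.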

```python
import tensorflow as tf
import os
import numpy as np
from matplotlib import pyplot as plt
from tensorflow.keras.layers import Conv2D, BatchNormalization, Activation, MaxPool2D, Dropout, Flatten, Dense, \
    GlobalAveragePooling2D
from tensorflow.keras import Model

np.set_printoptions(threshold=np.inf)

cifar10 = tf.keras.datasets.cifar10
(x_train, y_train), (x_test, y_test) = cifar10.load_data()
x_train, x_test = x_train / 255.0, x_test / 255.0


class ConvBNRelu(Model):
    def __init__(self, ch, kernelsz=3, strides=1, padding='same'):
        super(ConvBNRelu, self).__init__()
        self.model = tf.keras.models.Sequential([
            Conv2D(ch, kernelsz, strides=strides, padding=padding),
            BatchNormalization(),
            Activation('relu')
        ])

    def call(self, x, training=None):
        # training=True: BN normalizes with the current batch's mean and variance and updates its moving averages.
        # training=False: BN uses the moving averages accumulated during training (inference mode).
        # Keras sets this flag itself (True in fit, False in evaluate/predict), so pass it through; hard-coding
        # training=False would stop the moving averages from ever being updated during training.
        x = self.model(x, training=training)
        return x


class InceptionBlk(Model):
    def __init__(self, ch, strides=1):
        super(InceptionBlk, self).__init__()
        self.ch = ch
        self.strides = strides
        self.c1 = ConvBNRelu(ch, kernelsz=1, strides=strides)
        self.c2_1 = ConvBNRelu(ch, kernelsz=1, strides=strides)
        self.c2_2 = ConvBNRelu(ch, kernelsz=3, strides=1)
        self.c3_1 = ConvBNRelu(ch, kernelsz=1, strides=strides)
        self.c3_2 = ConvBNRelu(ch, kernelsz=5, strides=1)
        self.p4_1 = MaxPool2D(3, strides=1, padding='same')
        self.c4_2 = ConvBNRelu(ch, kernelsz=1, strides=strides)

    def call(self, x):
        x1 = self.c1(x)
        x2_1 = self.c2_1(x)
        x2_2 = self.c2_2(x2_1)
        x3_1 = self.c3_1(x)
        x3_2 = self.c3_2(x3_1)
        x4_1 = self.p4_1(x)
        x4_2 = self.c4_2(x4_1)
        # concat along axis=channel
        x = tf.concat([x1, x2_2, x3_2, x4_2], axis=3)
        return x


class Inception10(Model):
    def __init__(self, num_blocks, num_classes, init_ch=16, **kwargs):
        super(Inception10, self).__init__(**kwargs)
        self.in_channels = init_ch
        self.out_channels = init_ch
        self.num_blocks = num_blocks
        self.init_ch = init_ch
        self.c1 = ConvBNRelu(init_ch)
        self.blocks = tf.keras.models.Sequential()
        for block_id in range(num_blocks):
            for layer_id in range(2):
                if layer_id == 0:
                    block = InceptionBlk(self.out_channels, strides=2)
                else:
                    block = InceptionBlk(self.out_channels, strides=1)
                self.blocks.add(block)
            # enlarge out_channels per block
            self.out_channels *= 2
        self.p1 = GlobalAveragePooling2D()
        self.f1 = Dense(num_classes, activation='softmax')

    def call(self, x):
        x = self.c1(x)
        x = self.blocks(x)
        x = self.p1(x)
        y = self.f1(x)
        return y


model = Inception10(num_blocks=2, num_classes=10)

model.compile(optimizer='adam',
              loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False),
              metrics=['sparse_categorical_accuracy'])

checkpoint_save_path = "./checkpoint/Inception10.ckpt"
if os.path.exists(checkpoint_save_path + '.index'):
    print('-------------load the model-----------------')
    model.load_weights(checkpoint_save_path)

cp_callback = tf.keras.callbacks.ModelCheckpoint(filepath=checkpoint_save_path,
                                                 save_weights_only=True,
                                                 save_best_only=True)

history = model.fit(x_train, y_train, batch_size=32, epochs=5, validation_data=(x_test, y_test), validation_freq=1,
                    callbacks=[cp_callback])
model.summary()

# print(model.trainable_variables)
with open('./weights.txt', 'w') as file:  # save the name, shape and values of every trainable variable
    for v in model.trainable_variables:
        file.write(str(v.name) + '\n')
        file.write(str(v.shape) + '\n')
        file.write(str(v.numpy()) + '\n')

###############################################    show   ###############################################

# Plot the accuracy and loss curves of the training and validation sets
acc = history.history['sparse_categorical_accuracy']
val_acc = history.history['val_sparse_categorical_accuracy']
loss = history.history['loss']
val_loss = history.history['val_loss']

plt.subplot(1, 2, 1)
plt.plot(acc, label='Training Accuracy')
plt.plot(val_acc, label='Validation Accuracy')
plt.title('Training and Validation Accuracy')
plt.legend()

plt.subplot(1, 2, 2)
plt.plot(loss, label='Training Loss')
plt.plot(val_loss, label='Validation Loss')
plt.title('Training and Validation Loss')
plt.legend()
plt.show()
```

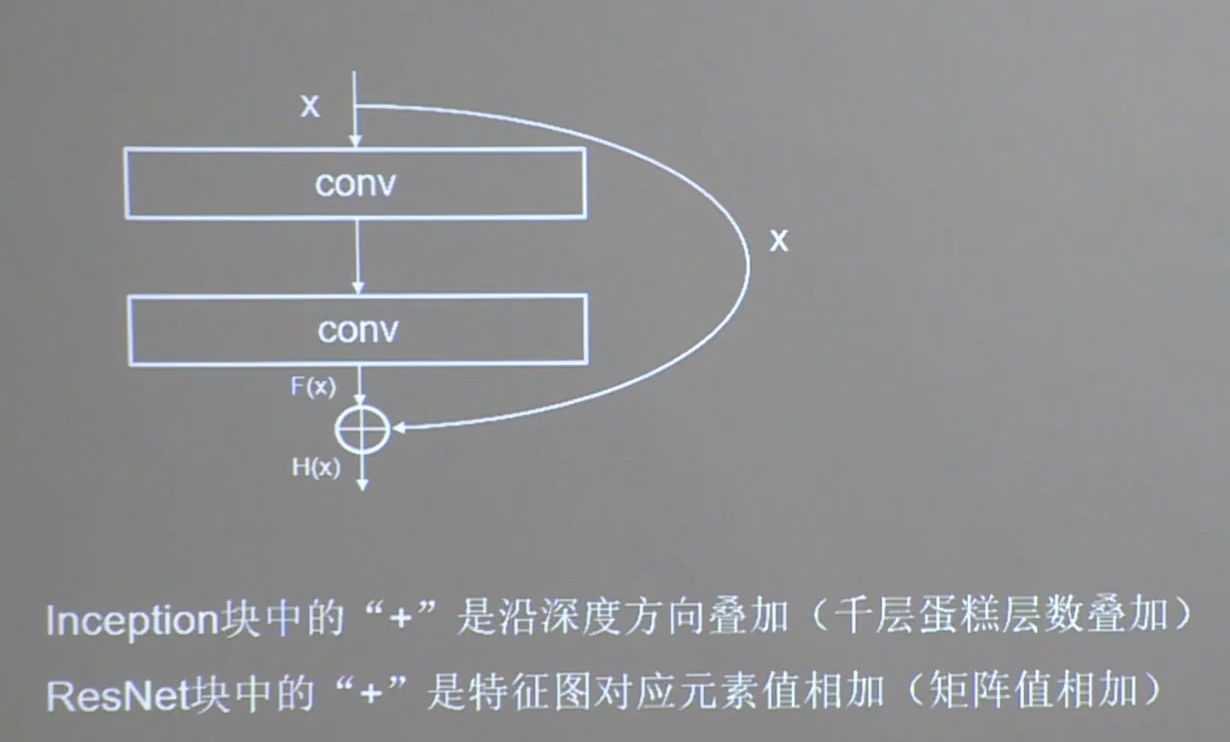

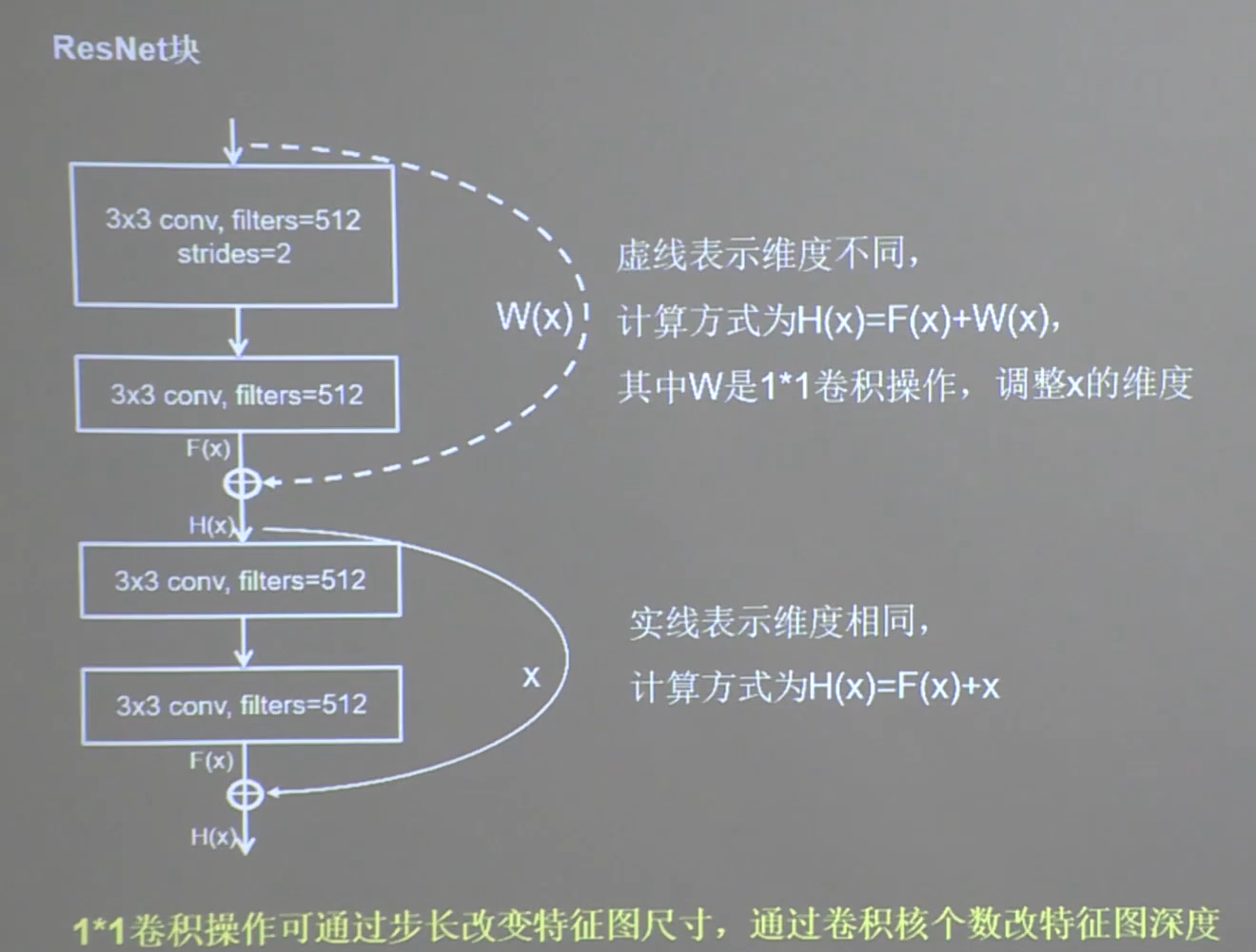
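As a sanity check on the Inception10(num_blocks=2, num_classes=10) model above, this plain-Python sketch traces the feature-map shape for one 32×32 CIFAR-10 image. `inception10_shapes` is a hypothetical helper. The trace relies on two facts visible in the code: every branch uses padding='same', so only the stride changes height and width, and each of the four branches outputs `ch` channels, which `tf.concat` stacks along the channel axis.

```python
import math


def inception10_shapes(num_blocks=2, init_ch=16, hw=32, num_classes=10):
    """Trace (height, width, channels) through Inception10 for an hw x hw input."""
    shapes = [("c1", (hw, hw, init_ch))]  # stem ConvBNRelu: 3x3, stride 1, 'same'
    ch = init_ch
    for block_id in range(num_blocks):
        for layer_id in range(2):
            stride = 2 if layer_id == 0 else 1  # the first InceptionBlk of each block downsamples
            hw = math.ceil(hw / stride)         # all branches use padding='same'
            # four branches with ch channels each, concatenated along the channel axis
            shapes.append((f"block{block_id}_blk{layer_id}", (hw, hw, 4 * ch)))
        ch *= 2                                 # out_channels doubles after each block
    channels = shapes[-1][1][2]
    shapes.append(("GlobalAveragePooling2D", (channels,)))
    shapes.append(("Dense", (num_classes,)))
    return shapes


for name, shape in inception10_shapes():
    print(name, shape)
# c1 (32, 32, 16) -> block0: (16, 16, 64) -> block1: (8, 8, 128) -> GAP (128,) -> Dense (10,)
```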

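ResNet

Introduced in 2015, it won that year's ImageNet competition with a top-5 error rate of 3.57%.

ResNet introduced residual skip connections between layers. They feed information from earlier layers forward and mitigate vanishing gradients, which makes much deeper networks trainable.

This effectively mitigates the degradation problem, in which simply stacking more layers makes accuracy worse even on the training set, and allows neural networks to grow much deeper.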
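The skip connection can be sketched in NumPy. This is a simplification: plain matrix multiplications (`x @ w`) stand in for the block's convolutions, and BN is omitted. The block outputs relu(F(x) + x), or relu(F(x) + Wx) when a projection W is needed to match shapes. If the block learns F(x) = 0, it passes its input through unchanged, so adding blocks should not make the network worse.

```python
import numpy as np


def relu(x):
    return np.maximum(x, 0.0)


def residual_block(x, w1, w2, w_proj=None):
    f = relu(x @ w1) @ w2                           # F(x): two weight layers
    shortcut = x if w_proj is None else x @ w_proj  # x, or Wx when the widths differ
    return relu(f + shortcut)                       # output = relu(F(x) + shortcut)


rng = np.random.default_rng(0)
x = np.abs(rng.normal(size=(4, 8)))  # non-negative, like the output of an earlier ReLU

# If the block learns F(x) = 0, it simply passes x through
identity_out = residual_block(x, np.zeros((8, 8)), np.zeros((8, 8)))
print(np.allclose(identity_out, x))  # True

# When the output width differs (8 -> 16), a projection W gives x the same shape as F(x)
y = residual_block(x, rng.normal(size=(8, 16)), rng.normal(size=(16, 16)),
                   w_proj=rng.normal(size=(8, 16)))
print(y.shape)  # (4, 16)
```

In the ResNet18 code below, the projection role is played by the 1×1 `down_c1` convolution plus BN, used when `residual_path=True`.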

```python
import tensorflow as tf
import os
import numpy as np
from matplotlib import pyplot as plt
from tensorflow.keras.layers import Conv2D, BatchNormalization, Activation, MaxPool2D, Dropout, Flatten, Dense
from tensorflow.keras import Model

np.set_printoptions(threshold=np.inf)

cifar10 = tf.keras.datasets.cifar10
(x_train, y_train), (x_test, y_test) = cifar10.load_data()
x_train, x_test = x_train / 255.0, x_test / 255.0


class ResnetBlock(Model):
    def __init__(self, filters, strides=1, residual_path=False):
        super(ResnetBlock, self).__init__()
        self.filters = filters
        self.strides = strides
        self.residual_path = residual_path

        self.c1 = Conv2D(filters, (3, 3), strides=strides, padding='same', use_bias=False)
        self.b1 = BatchNormalization()
        self.a1 = Activation('relu')

        self.c2 = Conv2D(filters, (3, 3), strides=1, padding='same', use_bias=False)
        self.b2 = BatchNormalization()

        # When residual_path is True, downsample the input with a 1x1 convolution so that x has
        # the same dimensions as F(x) and the two can be added
        if residual_path:
            self.down_c1 = Conv2D(filters, (1, 1), strides=strides, padding='same', use_bias=False)
            self.down_b1 = BatchNormalization()

        self.a2 = Activation('relu')

    def call(self, inputs):
        residual = inputs  # residual is the input itself, i.e. residual = x
        # Pass the input through convolution, BN and activation layers to compute F(x)
        x = self.c1(inputs)
        x = self.b1(x)
        x = self.a1(x)

        x = self.c2(x)
        y = self.b2(x)

        if self.residual_path:
            residual = self.down_c1(inputs)
            residual = self.down_b1(residual)

        out = self.a2(y + residual)  # output is the sum F(x) + x or F(x) + Wx, passed through the activation
        return out


class ResNet18(Model):
    def __init__(self, block_list, initial_filters=64):  # block_list: number of ResnetBlocks in each stage
        super(ResNet18, self).__init__()
        self.num_blocks = len(block_list)  # number of stages
        self.block_list = block_list
        self.out_filters = initial_filters
        self.c1 = Conv2D(self.out_filters, (3, 3), strides=1, padding='same', use_bias=False)
        self.b1 = BatchNormalization()
        self.a1 = Activation('relu')
        self.blocks = tf.keras.models.Sequential()
        # Build the ResNet structure
        for block_id in range(len(block_list)):  # index of the stage
            for layer_id in range(block_list[block_id]):  # index of the ResnetBlock within the stage

                if block_id != 0 and layer_id == 0:  # downsample the input of every stage except the first
                    block = ResnetBlock(self.out_filters, strides=2, residual_path=True)
                else:
                    block = ResnetBlock(self.out_filters, residual_path=False)
                self.blocks.add(block)  # add the finished block to the network
            self.out_filters *= 2  # each stage has twice as many kernels as the previous one
        self.p1 = tf.keras.layers.GlobalAveragePooling2D()
        self.f1 = tf.keras.layers.Dense(10, activation='softmax', kernel_regularizer=tf.keras.regularizers.l2())

    def call(self, inputs):
        x = self.c1(inputs)
        x = self.b1(x)
        x = self.a1(x)
        x = self.blocks(x)
        x = self.p1(x)
        y = self.f1(x)
        return y


model = ResNet18([2, 2, 2, 2])

model.compile(optimizer='adam',
              loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False),
              metrics=['sparse_categorical_accuracy'])

checkpoint_save_path = "./checkpoint/ResNet18.ckpt"
if os.path.exists(checkpoint_save_path + '.index'):
    print('-------------load the model-----------------')
    model.load_weights(checkpoint_save_path)

cp_callback = tf.keras.callbacks.ModelCheckpoint(filepath=checkpoint_save_path,
                                                 save_weights_only=True,
                                                 save_best_only=True)

history = model.fit(x_train, y_train, batch_size=32, epochs=5, validation_data=(x_test, y_test), validation_freq=1,
                    callbacks=[cp_callback])
model.summary()

# print(model.trainable_variables)
with open('./weights.txt', 'w') as file:  # save the name, shape and values of every trainable variable
    for v in model.trainable_variables:
        file.write(str(v.name) + '\n')
        file.write(str(v.shape) + '\n')
        file.write(str(v.numpy()) + '\n')

###############################################    show   ###############################################

# Plot the accuracy and loss curves of the training and validation sets
acc = history.history['sparse_categorical_accuracy']
val_acc = history.history['val_sparse_categorical_accuracy']
loss = history.history['loss']
val_loss = history.history['val_loss']

plt.subplot(1, 2, 1)
plt.plot(acc, label='Training Accuracy')
plt.plot(val_acc, label='Validation Accuracy')
plt.title('Training and Validation Accuracy')
plt.legend()

plt.subplot(1, 2, 2)
plt.plot(loss, label='Training Loss')
plt.plot(val_loss, label='Validation Loss')
plt.title('Training and Validation Loss')
plt.legend()
plt.show()
```

Summary of classic convolutional networks

浙公网安备 33010602011771号
