Python heap

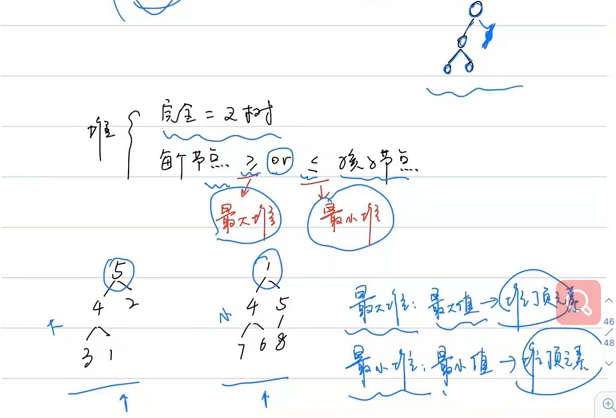

Heap

A heap is a binary-tree structure, specifically a complete binary tree.
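
A complete binary tree has no gaps, so it can be stored level by level in a flat Python list with no pointers. Below is a minimal sketch of the 0-based index arithmetic that the heapq source relies on. The helper names `parent`, `children` and `levels` are hypothetical and exist only for illustration.

```python
from typing import List


def parent(k: int) -> int:
    """Index of the parent of node k (k >= 1) in a 0-based array heap."""
    return (k - 1) // 2


def children(k: int, n: int) -> List[int]:
    """Indices of the children of node k that exist in a list of length n."""
    return [c for c in (2 * k + 1, 2 * k + 2) if c < n]


def levels(n: int) -> List[List[int]]:
    """Group indices 0..n-1 by tree level; level d holds up to 2**d nodes."""
    rows, start, width = [], 0, 1
    while start < n:
        rows.append(list(range(start, min(start + width, n))))
        start += width
        width *= 2
    return rows


for row in levels(15):
    print(row)
# [0]
# [1, 2]
# [3, 4, 5, 6]
# [7, 8, 9, 10, 11, 12, 13, 14]
print(children(3, 15), parent(8))  # [7, 8] 3
```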

https://www.jianshu.com/p/801318c77ab5

https://www.cnblogs.com/namejr/p/9942411.html

"""Heap queue algorithm (a.k.a. priority queue).

Heaps are arrays for which a[k] <= a[2*k+1] and a[k] <= a[2*k+2] for
all k, counting elements from 0. For the sake of comparison,
non-existing elements are considered to be infinite. The interesting
property of a heap is that a[0] is always its smallest element.

Usage:

heap = []            # creates an empty heap
heappush(heap, item) # pushes a new item on the heap
item = heappop(heap) # pops the smallest item from the heap
item = heap[0]       # smallest item on the heap without popping it
heapify(x)           # transforms list into a heap, in-place, in linear time
item = heapreplace(heap, item) # pops and returns smallest item, and adds
                               # new item; the heap size is unchanged

Our API differs from textbook heap algorithms as follows:

- We use 0-based indexing. This makes the relationship between the
  index for a node and the indexes for its children slightly less
  obvious, but is more suitable since Python uses 0-based indexing.

- Our heappop() method returns the smallest item, not the largest.

These two make it possible to view the heap as a regular Python list
without surprises: heap[0] is the smallest item, and heap.sort()
maintains the heap invariant!
"""
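
The usage lines above correspond to real functions in Python's standard-library `heapq` module. The short runnable sketch below exercises them. It also defines a hypothetical `is_heap` helper (not part of `heapq`) that checks the invariant a[k] <= a[2*k+1] and a[k] <= a[2*k+2] directly. That helper is used to confirm the docstring's remark that a sorted list is already a heap.

```python
import heapq
import random


def is_heap(a) -> bool:
    """Check a[k] <= a[2*k+1] and a[k] <= a[2*k+2] for every k that has children."""
    n = len(a)
    return all(a[k] <= a[c]
               for k in range(n)
               for c in (2 * k + 1, 2 * k + 2) if c < n)


heap = []                            # creates an empty heap
for item in [5, 1, 8, 3, 9, 2]:
    heapq.heappush(heap, item)       # each push keeps the invariant
assert is_heap(heap)
print(heap[0])                       # 1: smallest item, still on the heap
print(heapq.heappop(heap))           # 1: smallest item, now removed
print(heapq.heapreplace(heap, 7))    # 2: pops the smallest, then adds 7

x = [random.randint(0, 99) for _ in range(20)]
heapq.heapify(x)                     # in place, linear time
assert is_heap(x)

x.sort()                             # a sorted list is always a valid heap
assert is_heap(x)
```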

# Original code by Kevin O'Connor, augmented by Tim Peters and Raymond Hettinger

__about__ = """Heap queues

[explanation by François Pinard]

Heaps are arrays for which a[k] <= a[2*k+1] and a[k] <= a[2*k+2] for
all k, counting elements from 0. For the sake of comparison,
non-existing elements are considered to be infinite. The interesting
property of a heap is that a[0] is always its smallest element.

The strange invariant above is meant to be an efficient memory
representation for a tournament. The numbers below are `k', not a[k]:

                                   0

                  1                                 2

          3               4                5               6

      7       8       9       10      11      12      13      14

    15 16   17 18   19 20   21 22   23 24   25 26   27 28   29 30

In the tree above, each cell `k' is topping `2*k+1' and `2*k+2'. In
a usual binary tournament we see in sports, each cell is the winner
over the two cells it tops, and we can trace the winner down the tree
to see all opponents s/he had. However, in many computer applications
of such tournaments, we do not need to trace the history of a winner.
To be more memory efficient, when a winner is promoted, we try to
replace it by something else at a lower level, and the rule becomes
that a cell and the two cells it tops contain three different items,
but the top cell "wins" over the two topped cells.

If this heap invariant is protected at all times, index 0 is clearly
the overall winner. The simplest algorithmic way to remove it and
find the "next" winner is to move some loser (let's say cell 30 in the
diagram above) into the 0 position, and then percolate this new 0 down
the tree, exchanging values, until the invariant is re-established.
This is clearly logarithmic on the total number of items in the tree.
By iterating over all items, you get an O(n ln n) sort.

A nice feature of this sort is that you can efficiently insert new
items while the sort is going on, provided that the inserted items are
not "better" than the last 0'th element you extracted. This is
especially useful in simulation contexts, where the tree holds all
incoming events, and the "win" condition means the smallest scheduled
time. When an event schedules other events for execution, they are
scheduled into the future, so they can easily go into the heap. So, a
heap is a good structure for implementing schedulers (this is what I
used for my MIDI sequencer :-).

Various structures for implementing schedulers have been extensively
studied, and heaps are good for this, as they are reasonably speedy,
the speed is almost constant, and the worst case is not much different
from the average case. However, there are other representations which
are more efficient overall, yet the worst cases might be terrible.

Heaps are also very useful in big disk sorts. You most probably all
know that a big sort implies producing "runs" (which are pre-sorted
sequences, whose size is usually related to the amount of CPU memory),
followed by merging passes for these runs, which merging is often
very cleverly organised[1]. It is very important that the initial
sort produces the longest runs possible. Tournaments are a good way
to achieve that. If, using all the memory available to hold a
tournament, you replace and percolate items that happen to fit the
current run, you'll produce runs which are twice the size of the
memory for random input, and much better for input fuzzily ordered.
"""
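
The run-generation idea in the last paragraph is usually called replacement selection. Below is a minimal sketch of it. It assumes everything fits in Python lists, with no real disk I/O. The names `replacement_selection` and its `memory` parameter (the maximum number of items held in the heap) are hypothetical. Each heap entry is tagged with a run number, so an item too small for the current run simply sorts behind every item still in that run.

```python
import heapq
from typing import Iterable, List

_DONE = object()  # sentinel marking the end of the input stream


def replacement_selection(items: Iterable, memory: int) -> List[list]:
    """Split items into sorted runs using a heap of at most `memory` entries.

    Heap entries are (run_number, value). An incoming value that is not
    smaller than the value just written can still join the current run;
    otherwise it is tagged for the next run.
    """
    if memory < 1:
        raise ValueError("memory must be at least 1")
    it = iter(items)
    heap = []
    for value in it:                  # fill the available "memory"
        heap.append((0, value))
        if len(heap) == memory:
            break
    heapq.heapify(heap)

    runs: List[list] = []
    while heap:
        run, smallest = heap[0]
        if run == len(runs):          # first value of a new run
            runs.append([])
        runs[run].append(smallest)    # "write" the winner to its run
        nxt = next(it, _DONE)
        if nxt is _DONE:
            heapq.heappop(heap)       # input exhausted: let the heap drain
        else:
            tag = run if nxt >= smallest else run + 1
            heapq.heapreplace(heap, (tag, nxt))
    return runs


runs = replacement_selection([5, 1, 8, 3, 9, 2, 7], memory=3)
print(runs)                       # [[1, 3, 5, 8, 9], [2, 7]]
print(list(heapq.merge(*runs)))   # [1, 2, 3, 5, 7, 8, 9]
```

In the small example, the first run holds 5 items even though `memory=3`. The merging pass is done here with the standard-library `heapq.merge`. The paragraph above says runs average about twice the memory size on random input; this sketch does not try to demonstrate that figure.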

def heappush(heap, item):
    """Push item onto heap, maintaining the heap invariant."""
    heap.append(item)
    _siftdown(heap, 0, len(heap)-1)
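
The scheduler use case described in `__about__` maps directly onto `heappush` and `heappop`. Below is a minimal discrete-event sketch; `Scheduler` and `ping` are hypothetical names. Entries are `(time, seq, action)` tuples. The running counter `seq` breaks ties between equal times, so the action callables themselves are never compared (Python functions do not support `<`).

```python
import heapq
import itertools


class Scheduler:
    """Tiny discrete-event scheduler; the heap holds (time, seq, action) entries."""

    def __init__(self):
        self._queue = []
        self._seq = itertools.count()  # tie-breaker, so actions are never compared
        self.now = 0.0

    def schedule(self, delay, action):
        """Arrange for action(self) to run at time now + delay."""
        if delay < 0:
            raise ValueError("events can only be scheduled into the future")
        heapq.heappush(self._queue, (self.now + delay, next(self._seq), action))

    def run(self):
        """Pop and run events in time order until none are left."""
        while self._queue:
            self.now, _, action = heapq.heappop(self._queue)
            action(self)


log = []


def ping(s):
    log.append(("ping", s.now))
    if s.now < 3:
        s.schedule(1.5, ping)  # an event scheduling a later event


sched = Scheduler()
sched.schedule(1.0, ping)
sched.schedule(2.0, lambda s: log.append(("pong", s.now)))
sched.run()
print(log)  # [('ping', 1.0), ('pong', 2.0), ('ping', 2.5), ('ping', 4.0)]
```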

def heappop(heap):
    """Pop the smallest item off the heap, maintaining the heap invariant."""
    lastelt = heap.pop()    # raises appropriate IndexError if heap is empty
    if heap:
        returnitem = heap[0]
        heap[0] = lastelt
        _siftup(heap, 0)
        return returnitem
    return lastelt
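
Repeating `heappop` until the heap is empty yields the items in ascending order; this is the O(n log n) sort mentioned in `__about__`. Below is a minimal sketch built on the standard-library `heapq` module. `heapsort` is a hypothetical name, and it returns a new list rather than sorting in place.

```python
import heapq


def heapsort(iterable):
    """Push everything onto a heap, then pop the current winner n times."""
    heap = []
    for value in iterable:
        heapq.heappush(heap, value)
    # range(len(heap)) is evaluated once, before any pops shrink the heap
    return [heapq.heappop(heap) for _ in range(len(heap))]


print(heapsort([5, 1, 8, 3, 9, 2]))  # [1, 2, 3, 5, 8, 9]
```

Building the heap with `heapq.heapify(list(iterable))` instead of repeated pushes would make the build phase linear. The n pops still cost O(n log n) overall.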

def heapreplace(heap, item):
    """Pop and return the current smallest value, and add the new item.

    This is more efficient than heappop() followed by heappush(), and can be
    more appropriate when using a fixed-size heap. Note that the value
    returned may be larger than item! That constrains reasonable uses of
    this routine unless written as part of a conditional replacement:

        if item > heap[0]:
            item = heapreplace(heap, item)
    """
    returnitem = heap[0]    # raises appropriate IndexError if heap is empty
    heap[0] = item
    _siftup(heap, 0)
    return returnitem
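
The docstring's conditional-replacement pattern is how a fixed-size heap keeps the k largest items seen so far. `heap[0]` is the weakest member of the current top k, so a new item replaces it only when it is larger. Below is a sketch of this; `k_largest` is a hypothetical name. In real code the standard library already provides `heapq.nlargest`, which should give the same result.

```python
import heapq


def k_largest(iterable, k):
    """Return the k largest items, largest first, using a size-k min-heap."""
    if k <= 0:
        return []
    it = iter(iterable)
    # range comes first in zip, so zip stops without over-consuming `it`
    heap = [x for _, x in zip(range(k), it)]
    heapq.heapify(heap)
    for item in it:
        if item > heap[0]:            # only beat the weakest of the top k
            heapq.heapreplace(heap, item)
    return sorted(heap, reverse=True)


print(k_largest([5, 1, 8, 3, 9, 2, 7], 3))  # [9, 8, 7]
```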

def heappushpop(heap, item):
    """Fast version of a heappush followed by a heappop."""
    if heap and heap[0] < item:
        item, heap[0] = heap[0], item
        _siftup(heap, 0)
    return item
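
`heappushpop` should behave exactly like `heappush` followed by `heappop`. It skips all heap work when the heap is empty or `item` is not larger than `heap[0]`, because in that case `item` itself would be popped straight back. Below is a small randomized check of that equivalence using the real `heapq` functions; `check_pushpop` is a hypothetical helper.

```python
import heapq
import random


def check_pushpop(trials: int = 500, seed: int = 0) -> int:
    """Compare heappushpop with heappush + heappop on random heaps."""
    rng = random.Random(seed)
    for _ in range(trials):
        base = [rng.randint(0, 20) for _ in range(rng.randint(0, 8))]
        heapq.heapify(base)
        item = rng.randint(0, 20)

        fast, slow = base[:], base[:]
        got_fast = heapq.heappushpop(fast, item)
        heapq.heappush(slow, item)
        got_slow = heapq.heappop(slow)

        assert got_fast == got_slow           # same value comes out
        assert sorted(fast) == sorted(slow)   # same items stay in
    return trials


# Shortcut case: item <= heap[0], so the heap is left untouched.
h = [1, 2, 3]
print(heapq.heappushpop(h, 0), h)  # 0 [1, 2, 3]
print(check_pushpop())             # 500
```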

def heapify(x):
    """Transform list into a heap, in-place, in O(len(x)) time."""
    n = len(x)
    # Transform bottom-up. The largest index there's any point to looking at
    # is the largest with a child index in-range, so must have 2*i + 1 < n,
    # or i < (n-1)/2. If n is even = 2*j, this is (2*j-1)/2 = j-1/2 so
    # j-1 is the largest, which is n//2 - 1. If n is odd = 2*j+1, this is
    # (2*j+1-1)/2 = j so j-1 is the largest, and that's again n//2-1.
    for i in reversed(range(n//2)):
        _siftup(x, i)
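
The comment's derivation says the last index with at least one child is n//2 - 1. So `range(n//2)` visits exactly the internal nodes, and every index from n//2 on is a leaf, which is already a one-element heap. Below is a brute-force check of that claim, where `last_parent` is a hypothetical helper. It also shows that `heapify` works in place on the same list object.

```python
import heapq


def last_parent(n: int) -> int:
    """Largest i with a child in range (2*i + 1 < n); -1 when there is none."""
    return max((i for i in range(n) if 2 * i + 1 < n), default=-1)


for n in range(100):
    assert last_parent(n) == n // 2 - 1

data = [9, 7, 5, 3, 1]
alias = data
heapq.heapify(data)             # rearranges the same list object
print(alias is data, data[0])   # True 1
```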

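# The max-heap helpers below call _siftup_max, which heapq.py defines
# further down in the file; this excerpt does not include it.
def _heappop_max(heap):
    """Maxheap version of a heappop."""
    lastelt = heap.pop()    # raises appropriate IndexError if heap is empty
    if heap:
        returnitem = heap[0]
        heap[0] = lastelt
        _siftup_max(heap, 0)
        return returnitem
    return lastelt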

def _heapreplace_max(heap, item):
    """Maxheap version of a heappop followed by a heappush."""
    returnitem = heap[0]    # raises appropriate IndexError if heap is empty
    heap[0] = item
    _siftup_max(heap, 0)
    return returnitem

def _heapify_max(x):
    """Transform list into a maxheap, in-place, in O(len(x)) time."""
    n = len(x)
    for i in reversed(range(n//2)):
        _siftup_max(x, i)

# 'heap' is a heap at all indices >= startpos, except possibly for pos. pos
# is the index of a leaf with a possibly out-of-order value. Restore the
# heap invariant.
def _siftdown(heap, startpos, pos):
    newitem = heap[pos]
    # Follow the path to the root, moving parents down until finding a place
    # newitem fits.
    while pos > startpos:
        parentpos = (pos - 1) >> 1
        parent = heap[parentpos]
        if newitem < parent:
            heap[pos] = parent
            pos = parentpos
            continue
        break
    heap[pos] = newitem
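
Despite its name, `_siftdown` moves the new item toward the root; it is the parents that get moved down. A copy of it that records the path makes this visible. `siftdown_trace` is a hypothetical name, and its logic is the same as `_siftdown` above apart from the recorded path.

```python
def siftdown_trace(heap, startpos, pos):
    """Same logic as _siftdown, but returns the indices newitem passed through."""
    newitem = heap[pos]
    path = [pos]
    while pos > startpos:
        parentpos = (pos - 1) >> 1      # same as (pos - 1) // 2 for pos >= 1
        parent = heap[parentpos]
        if newitem < parent:
            heap[pos] = parent          # move the parent down one level
            pos = parentpos
            path.append(pos)
            continue
        break
    heap[pos] = newitem                 # drop newitem into the hole
    return path


h = [1, 3, 2, 5, 9, 8]
h.append(0)                              # new leaf at index 6, smaller than all
print(siftdown_trace(h, 0, len(h) - 1))  # [6, 2, 0]
print(h)                                 # [0, 3, 1, 5, 9, 8, 2]
```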

# The child indices of heap index pos are already heaps, and we want to make
# a heap at index pos too. We do this by bubbling the smaller child of
# pos up (and so on with that child's children, etc) until hitting a leaf,
# then using _siftdown to move the oddball originally at index pos into place.
#
# We *could* break out of the loop as soon as we find a pos where newitem <=
# both its children, but it turns out that's not a good idea, and despite that
# many books write the algorithm that way. During a heap pop, the last array
# element is sifted in, and that tends to be large, so that comparing it
# against values starting from the root usually doesn't pay (= usually doesn't
# get us out of the loop early). See Knuth, Volume 3, where this is
# explained and quantified in an exercise.
#
# Cutting the # of comparisons is important, since these routines have no
# way to extract "the priority" from an array element, so that intelligence
# is likely to be hiding in custom comparison methods, or in array elements
# storing (priority, record) tuples. Comparisons are thus potentially
# expensive.
#
# On random arrays of length 1000, making this change cut the number of
# comparisons made by heapify() a little, and those made by exhaustive
# heappop() a lot, in accord with theory. Here are typical results from 3
# runs (3 just to demonstrate how small the variance is):

def _siftup(heap, pos):
    endpos = len(heap)
    startpos = pos
    newitem = heap[pos]
    # Bubble up the smaller child until hitting a leaf.
    childpos = 2*pos + 1    # leftmost child position
    while childpos < endpos:
        # Set childpos to index of smaller child.
        rightpos = childpos + 1
        if rightpos < endpos and not heap[childpos] < heap[rightpos]:
            childpos = rightpos
        # Move the smaller child up.
        heap[pos] = heap[childpos]
        pos = childpos
        childpos = 2*pos + 1
    # The leaf at pos is empty now. Put newitem there, and bubble it up
    # to its final resting place (by sifting its parents down).
    heap[pos] = newitem
    _siftdown(heap, startpos, pos)
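
The long comment before `_siftup` argues for a bottom-up approach: always descend to a leaf and then sift back up. It claims this costs fewer comparisons than the textbook loop that stops as soon as `newitem` fits. The sketch below lets you measure that yourself. `Counted` counts every `<` call. `siftup_textbook`, `siftup_bottom_up` and `exhaustive_pop` are hypothetical helpers written for this comparison and are not part of `heapq`. The exact counts depend on the data. The comment's claim is that the gap is small for heapify and large for exhaustive popping.

```python
import random


class Counted:
    """Wraps a value and counts every < comparison (class-level tally)."""
    comparisons = 0

    def __init__(self, v):
        self.v = v

    def __lt__(self, other):
        Counted.comparisons += 1
        return self.v < other.v


def siftup_textbook(heap, pos):
    """Early-exit sift: stop as soon as newitem is <= both children."""
    endpos = len(heap)
    newitem = heap[pos]
    childpos = 2 * pos + 1
    while childpos < endpos:
        rightpos = childpos + 1
        if rightpos < endpos and heap[rightpos] < heap[childpos]:
            childpos = rightpos
        if not heap[childpos] < newitem:    # newitem already fits here
            break
        heap[pos] = heap[childpos]
        pos = childpos
        childpos = 2 * pos + 1
    heap[pos] = newitem


def siftup_bottom_up(heap, pos):
    """Bottom-up sift in the style of _siftup: go to a leaf, then sift back up."""
    endpos = len(heap)
    startpos = pos
    newitem = heap[pos]
    childpos = 2 * pos + 1
    while childpos < endpos:
        rightpos = childpos + 1
        if rightpos < endpos and not heap[childpos] < heap[rightpos]:
            childpos = rightpos
        heap[pos] = heap[childpos]
        pos = childpos
        childpos = 2 * pos + 1
    while pos > startpos:                   # the _siftdown step, inlined
        parentpos = (pos - 1) >> 1
        if newitem < heap[parentpos]:
            heap[pos] = heap[parentpos]
            pos = parentpos
            continue
        break
    heap[pos] = newitem


def exhaustive_pop(values, siftup):
    """Heapify, then pop everything; return (sorted output, comparisons made)."""
    heap = [Counted(v) for v in values]
    Counted.comparisons = 0
    for i in reversed(range(len(heap) // 2)):
        siftup(heap, i)
    out = []
    while heap:
        last = heap.pop()
        if heap:
            out.append(heap[0].v)
            heap[0] = last
            siftup(heap, 0)
        else:
            out.append(last.v)
    return out, Counted.comparisons


rng = random.Random(0)
data = [rng.random() for _ in range(1000)]
for name, fn in [("textbook", siftup_textbook), ("bottom-up", siftup_bottom_up)]:
    out, n = exhaustive_pop(data, fn)
    assert out == sorted(data)
    print(f"{name:>9}: {n} comparisons")
```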