笔记6:多分类问题(练习篇)

导入相关包

import torch

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

from torch import nn

import torch.nn.functional as F

from torch.utils.data import TensorDataset

from torch.utils.data import DataLoader

from sklearn.model_selection import train_test_split

%matplotlib inline

数据加载及预处理

数据加载

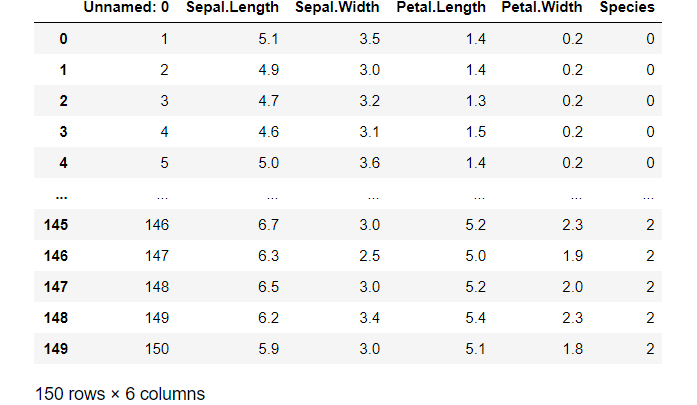

先把数据加载出来,观察数据的特点,为进一步预处理做准备

data = pd.read_csv('E:/datasets2/1-18/dataset/daatset/iris.csv')

把data输出一下

数据预处理

观察发现,最后一列(Species为文本),我们可以先输出一下看看有哪些类

data.Species.unique()

发现有三类 setosa, versicolor, virginica

然后我们可以对这三类进行编码,setosa为0, versicolor为1, virginica为2

data['Species'] = pd.factorize(data.Species)[0]

这里的pd.factorize()方法,可以将Species编码,然后再将data的Species列替换成这些编码即可

这时,我们再输出一下data

获得训练集和测试集

X = data.iloc[:, 1:-1].values

Y = data.iloc[:, -1].values

train_x, test_x, train_y, test_y = train_test_split(X, Y)

train_x = torch.from_numpy(train_x).type(torch.float32)

test_x = torch.from_numpy(test_x).type(torch.float32)

train_y = torch.from_numpy(train_y).type(torch.int64)

test_y = torch.from_numpy(test_y).type(torch.int64)

batch_size = 8

train_ds = TensorDataset(train_x, train_y)

train_dl = DataLoader(train_ds, batch_size = batch_size, shuffle = True)

test_ds = TensorDataset(test_x, test_y)

test_dl = DataLoader(test_ds, batch_size = batch_size)

这里有一个需要注意的地方,就是Y没有进行shape的变换。这是因为后面的损失函数要求标签是一维的,因此不需要变换。

当然,如果变换了,后面会给出报错,再进行相应的修改,也是没问题的

模型定义以及初始化

模型定义

class Model(nn.Module):

def __init__(self):

super().__init__()

self.linear_1 = nn.Linear(4, 32)

self.linear_2 = nn.Linear(32, 32)

self.linear_3 = nn.Linear(32, 3)

def forward(self, input):

x = F.relu(self.linear_1(input))

x = F.relu(self.linear_2(x))

x = self.linear_3(x)

return x

这里需要注意一点,就是理论上最后需要再激活,但是因为损失函数的设定,因此不用再激活

初始化

model = Model()

loss_func = nn.CrossEntropyLoss()

optimizer = torch.optim.Adam(model.parameters(), lr = 0.0001)

epochs = 100

准确率定义

最后得到的是三个值,也就是每一类都有一个值,因此最大的那个才是预测结果。可以采用torch.argmax(y_pred, dim = 1)实现

def accuracy(y_pred, y_true):

y_pred = torch.argmax(y_pred, dim = 1)

acc = (y_pred == y_true).float().mean()

return acc

存储列表初始化

这里我们想最后输出损失值以及准确率,因此我们用列表存储一下

train_loss = []

train_acc = []

test_loss = []

test_acc = []

模型训练

for epoch in range(epochs):

for x, y in train_dl:

y_pred = model(x)

loss = loss_func(y_pred, y)

optimizer.zero_grad()

loss.backward()

optimizer.step()

with torch.no_grad():

epoch_acc = accuracy(model(train_x), train_y)

epoch_loss = loss_func(model(train_x), train_y).data

epoch_test_acc = accuracy(model(test_x), test_y)

epoch_test_loss = loss_func(model(test_x), test_y).data

print('epoch: ', epoch,

'acc: ', round(epoch_acc.item(), 3),

'loss: ', round(epoch_loss.item(), 3),

'test_acc: ', round(epoch_test_acc.item(), 3),

'test_loss: ', round(epoch_test_loss.item(), 3))

train_loss.append(epoch_loss)

train_acc.append(epoch_acc)

test_loss.append(epoch_test_loss)

test_acc.append(epoch_test_acc)

结果展示

训练过程展示

损失值可视化

plt.plot(range(1, epochs + 1), train_loss, label = 'train_loss')

plt.plot(range(1, epochs + 1), test_loss, label = 'test_loss')

plt.legend()

准确率可视化

plt.plot(range(1, epochs + 1), train_acc, label = 'train_acc')

plt.plot(range(1, epochs + 1), test_acc, label = 'test_acc')

plt.legend()