Neural Networks: The Perceptron

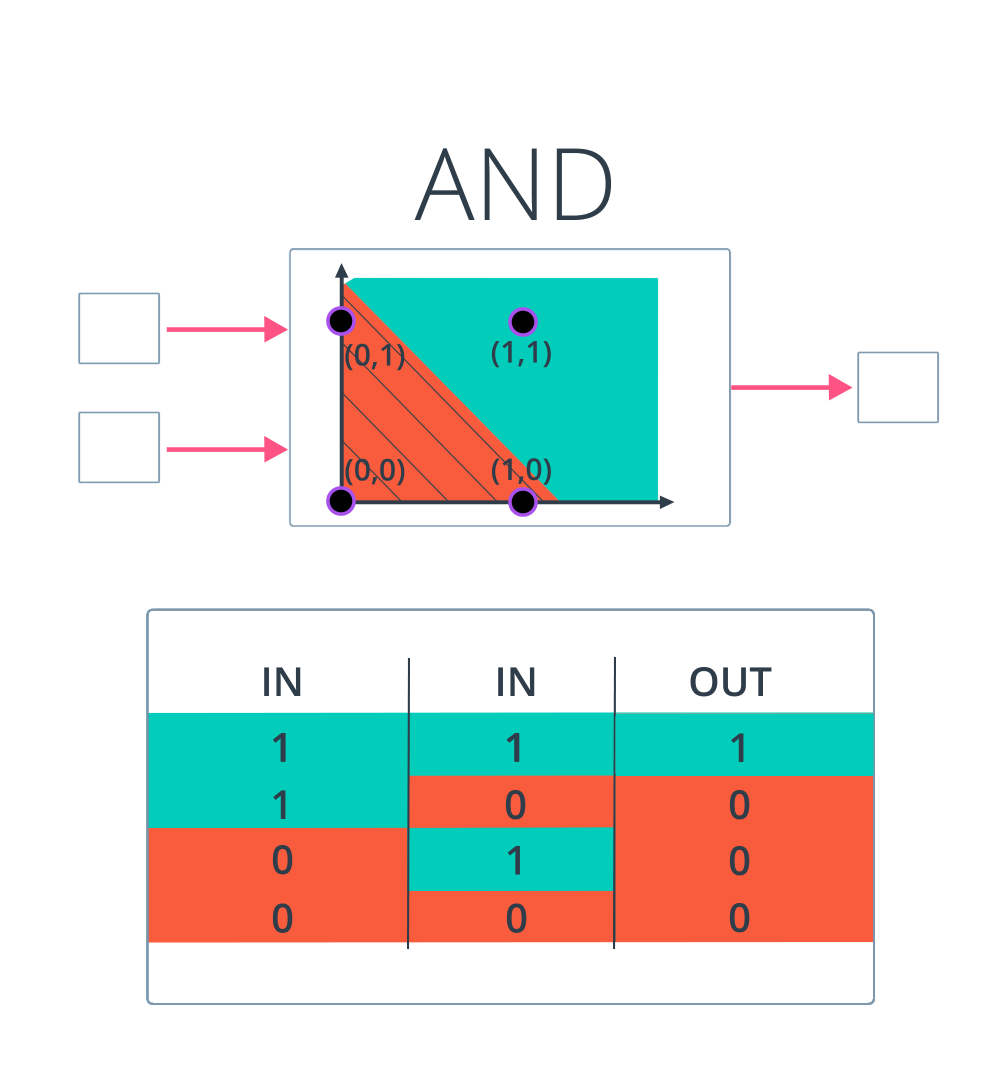

1. Logic operations

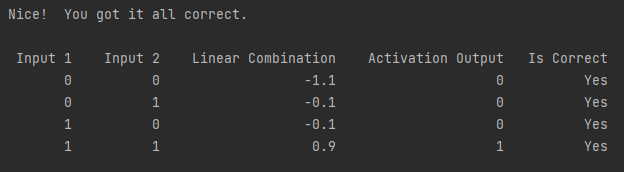

- AND Perceptron

```python
import pandas as pd

# TODO: Set weight1, weight2, and bias
weight1 = 1
weight2 = 1
bias = -1.1

# DON'T CHANGE ANYTHING BELOW
# Inputs and outputs
test_inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
correct_outputs = [False, False, False, True]
outputs = []

# Generate and check output
for test_input, correct_output in zip(test_inputs, correct_outputs):
    linear_combination = weight1 * test_input[0] + weight2 * test_input[1] + bias
    output = int(linear_combination >= 0)
    is_correct_string = 'Yes' if output == correct_output else 'No'
    outputs.append([test_input[0], test_input[1], linear_combination, output, is_correct_string])

# Print output
num_wrong = len([output[4] for output in outputs if output[4] == 'No'])
output_frame = pd.DataFrame(outputs, columns=['Input 1', ' Input 2', ' Linear Combination', ' Activation Output', ' Is Correct'])
if not num_wrong:
    print('Nice! You got it all correct.\n')
else:
    print('You got {} wrong. Keep trying!\n'.format(num_wrong))
print(output_frame.to_string(index=False))
```
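The loop above evaluates one input pair at a time. As a minimal sketch (the helper name `perceptron` is hypothetical, not part of the exercise), the same step-activated perceptron can be applied to all four input pairs at once with a NumPy matrix product:

```python
import numpy as np


def perceptron(X, w, b):
    """Hypothetical helper: step-activated perceptron applied to every row of X.

    Uses the same rule as the loop above: output 1 when w.x + b >= 0, else 0.
    """
    return (X @ w + b >= 0).astype(int)


# The four possible input pairs, one per row
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]])

# Same weights and bias as the AND perceptron above
and_out = perceptron(X, np.array([1, 1]), -1.1)
print(and_out)
```

Passing `np.array([1, 1])` and `-0.9` instead gives the OR gate from the next exercise.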

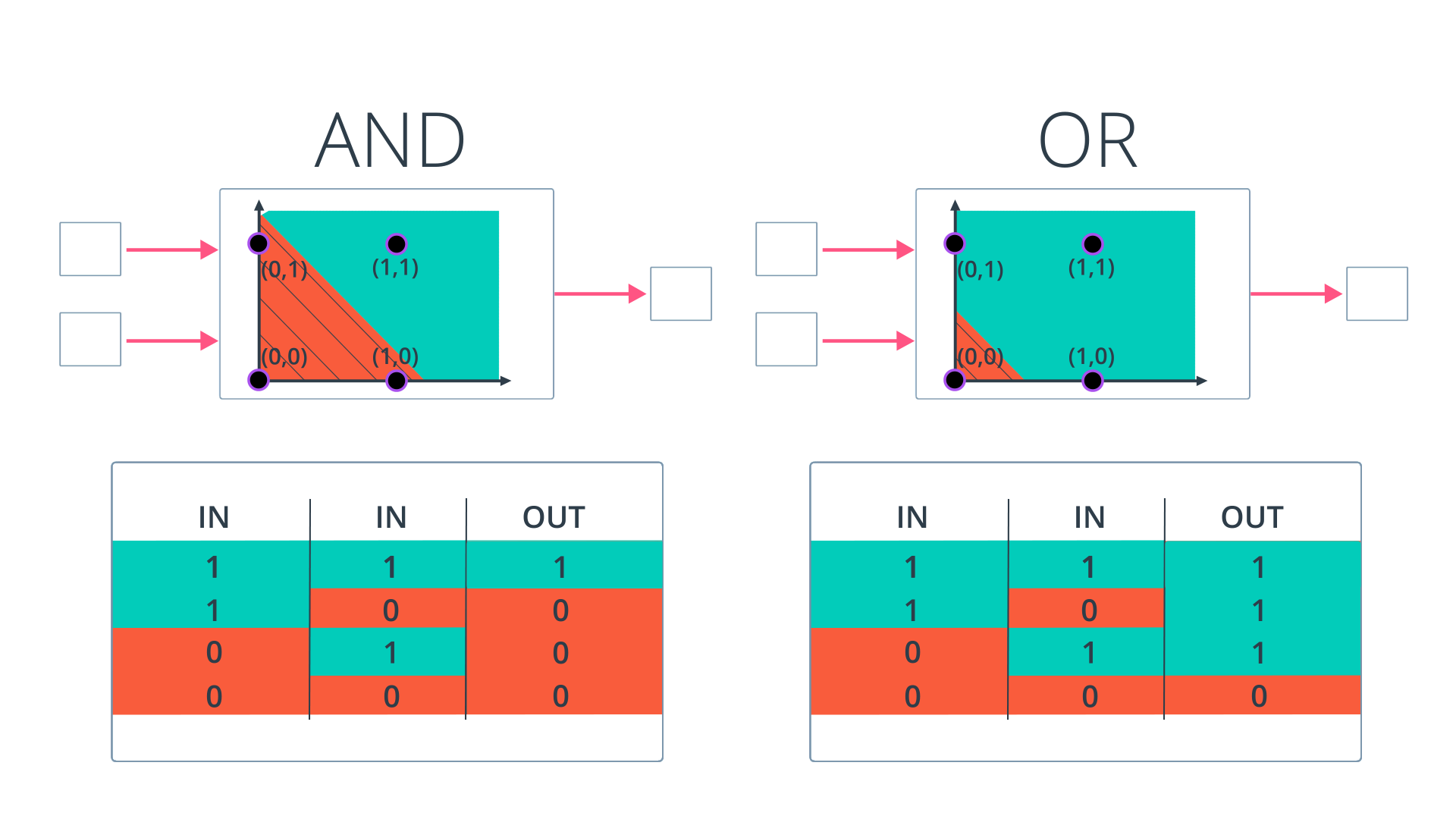

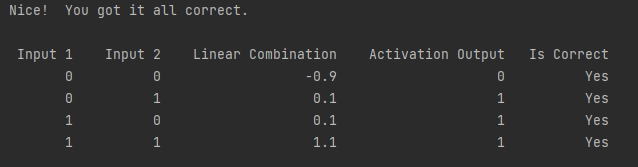

- OR Perceptron

```python
import pandas as pd

# TODO: Set weight1, weight2, and bias
weight1 = 1
weight2 = 1
bias = -0.9

# DON'T CHANGE ANYTHING BELOW
# Inputs and outputs
test_inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
correct_outputs = [False, True, True, True]
outputs = []

# Generate and check output
for test_input, correct_output in zip(test_inputs, correct_outputs):
    linear_combination = weight1 * test_input[0] + weight2 * test_input[1] + bias
    output = int(linear_combination >= 0)
    is_correct_string = 'Yes' if output == correct_output else 'No'
    outputs.append([test_input[0], test_input[1], linear_combination, output, is_correct_string])

# Print output
num_wrong = len([output[4] for output in outputs if output[4] == 'No'])
output_frame = pd.DataFrame(outputs, columns=['Input 1', ' Input 2', ' Linear Combination', ' Activation Output', ' Is Correct'])
if not num_wrong:
    print('Nice! You got it all correct.\n')
else:
    print('You got {} wrong. Keep trying!\n'.format(num_wrong))
print(output_frame.to_string(index=False))
```

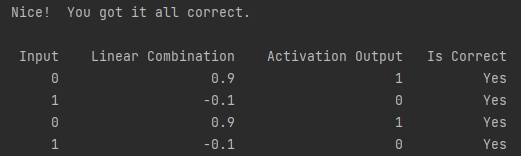

- NOT Perceptron

```python
import pandas as pd

# TODO: Set weight and bias (NOT has a single input)
weight = -1
bias = 0.9

# DON'T CHANGE ANYTHING BELOW
# Inputs and outputs
test_inputs = [0, 1, 0, 1]
correct_outputs = [True, False, True, False]
outputs = []

# Generate and check output
for test_input, correct_output in zip(test_inputs, correct_outputs):
    linear_combination = weight * test_input + bias
    output = int(linear_combination >= 0)
    is_correct_string = 'Yes' if output == correct_output else 'No'
    outputs.append([test_input, linear_combination, output, is_correct_string])

# Print output
num_wrong = len([output[3] for output in outputs if output[3] == 'No'])
output_frame = pd.DataFrame(outputs, columns=['Input', ' Linear Combination', ' Activation Output', ' Is Correct'])
if not num_wrong:
    print('Nice! You got it all correct.\n')
else:
    print('You got {} wrong. Keep trying!\n'.format(num_wrong))
print(output_frame.to_string(index=False))
```
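The biases -1.1, -0.9 and 0.9 are not the only values that work. Solving the inequalities by hand with the weights fixed as above, AND needs $-2 \le b < -1$, OR needs $-1 \le b < 0$, and NOT needs $0 \le b < 1$ (each interval is closed on the side where the `>= 0` rule still fires). The sketch below checks this numerically by scanning biases on a 0.01 grid; the helper `gate_ok` and the grid spacing are illustrative choices, not part of the exercises.

```python
import numpy as np


def gate_ok(weights, bias, inputs, targets):
    """True if a step perceptron reproduces every target for the given inputs."""
    outputs = (inputs @ weights + bias >= 0).astype(int)
    return bool(np.all(outputs == targets))


X2 = np.array([[0, 0], [0, 1], [1, 0], [1, 1]])  # two-input gates
X1 = np.array([[0], [1]])                         # single-input NOT

gates = {
    "AND": (np.array([1, 1]), X2, np.array([0, 0, 0, 1])),
    "OR": (np.array([1, 1]), X2, np.array([0, 1, 1, 1])),
    "NOT": (np.array([-1]), X1, np.array([1, 0])),
}

# Candidate biases from -3.00 to 2.99 in steps of 0.01
biases = [k / 100 for k in range(-300, 300)]

ranges = {}
for name, (w, X, y) in gates.items():
    good = [b for b in biases if gate_ok(w, b, X, y)]
    ranges[name] = (min(good), max(good))  # smallest and largest working bias on the grid
    print(name, ranges[name])
```

Picking a bias away from the interval edges (as -1.1, -0.9 and 0.9 do) leaves a margin, so small changes to the weights do not flip any output.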

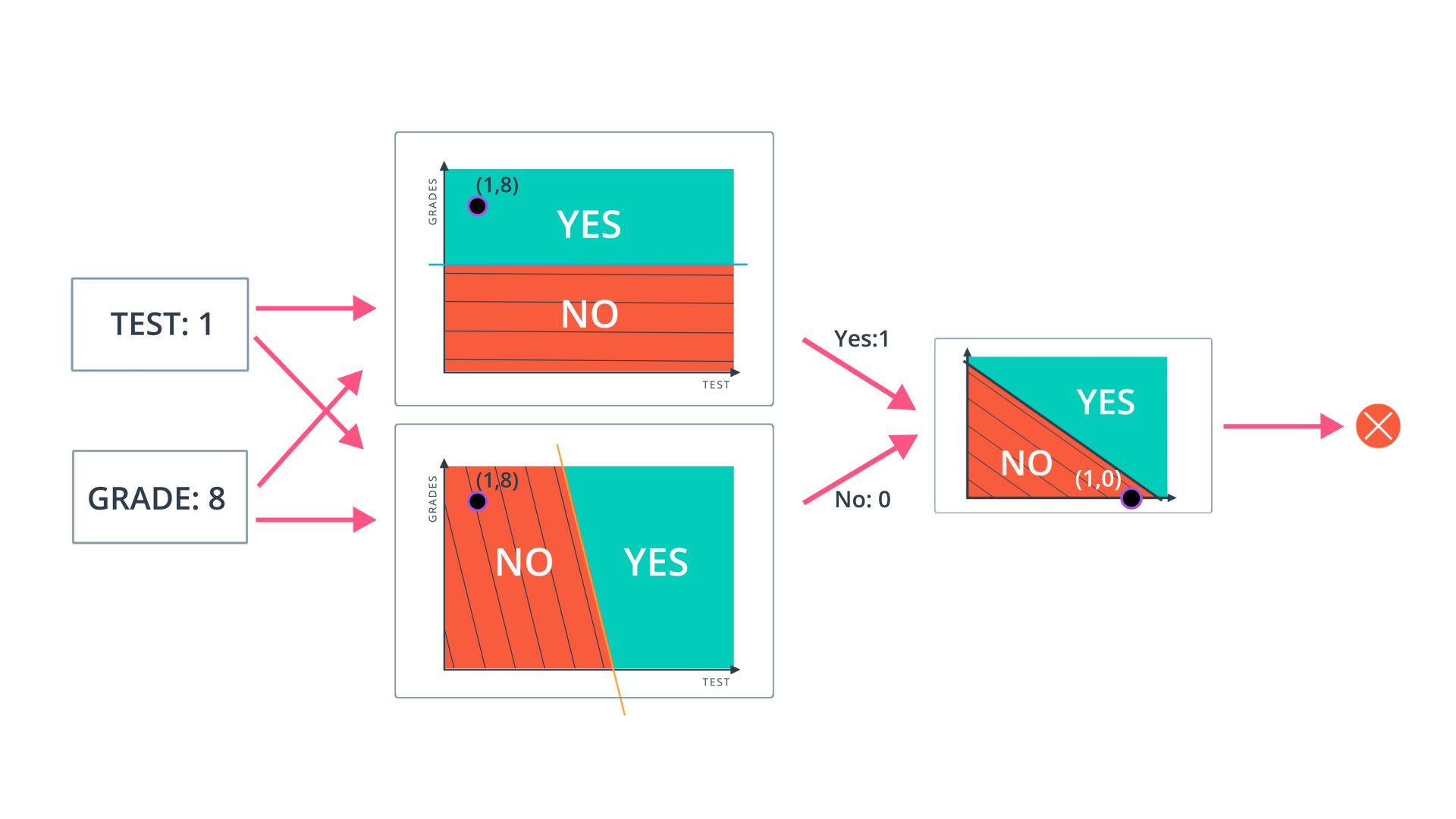

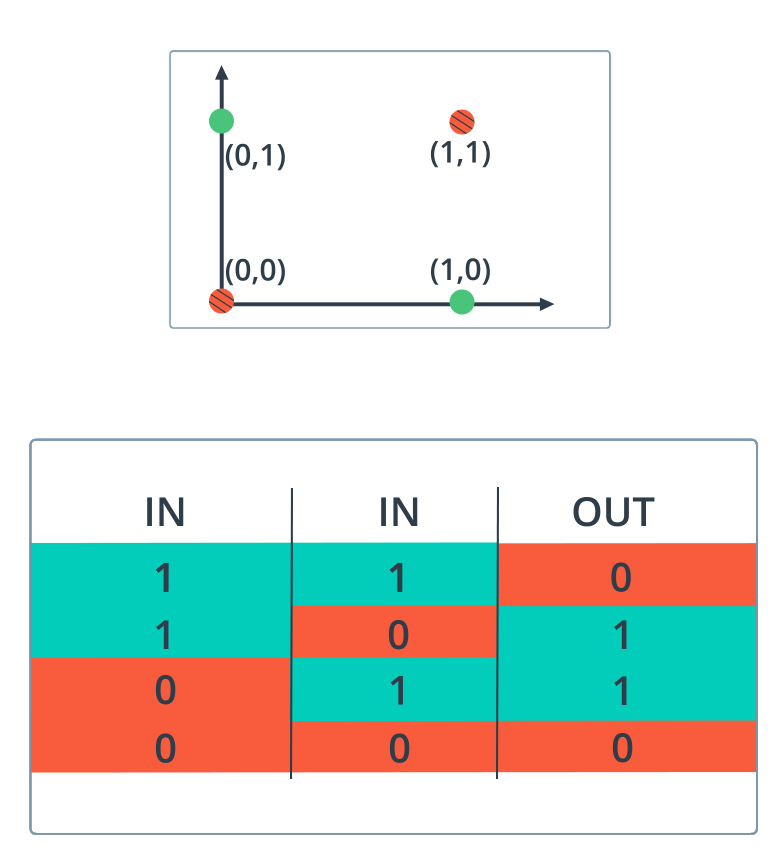

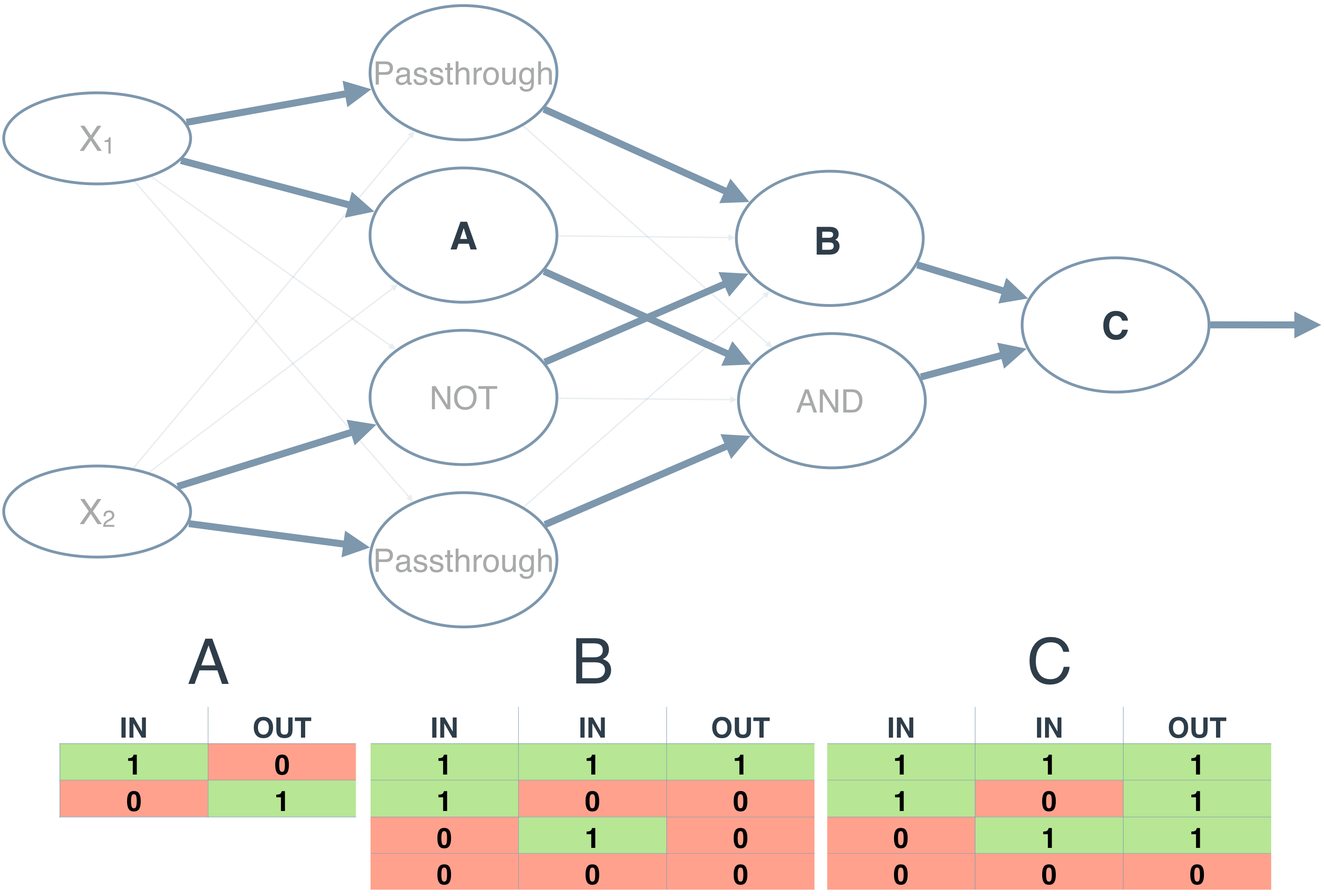

- XOR Perceptron

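XOR (exclusive OR) outputs 0 when the two inputs are equal and 1 when they differ.

Unlike the previous perceptrons, the points in this plot are not linearly separable: no single straight line separates the 1s from the 0s, so no single perceptron can compute XOR. Instead, we can combine AND, NOT and OR perceptrons into a small neural network that implements XOR.

That is: (X1 XOR X2) = [X1 AND (NOT X2)] OR [(NOT X1) AND X2]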
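The claim that no single perceptron can compute XOR follows from four inequalities. With a step activation that fires when $w_1x_1 + w_2x_2 + b \ge 0$, XOR requires $b < 0$, $w_1 + b \ge 0$, $w_2 + b \ge 0$ and $w_1 + w_2 + b < 0$. Adding the middle two gives $w_1 + w_2 + b \ge -b > 0$, which contradicts the last one. The brute-force search below only illustrates this on a coarse, arbitrarily chosen grid; on its own it cannot prove the general case, which is what the inequalities are for.

```python
import itertools

import numpy as np

X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]])
xor_targets = np.array([0, 1, 1, 0])

# Coarse grid of candidate weights and biases in [-2, 2] (illustrative choice)
grid = np.linspace(-2, 2, 21)

found = False
for w1, w2, b in itertools.product(grid, grid, grid):
    # Single step-activated perceptron on all four input pairs
    outputs = (X @ np.array([w1, w2]) + b >= 0).astype(int)
    if np.array_equal(outputs, xor_targets):
        found = True
        break

print("single perceptron solves XOR:", found)
```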

```python
import numpy as np
import pandas as pd

# Inputs and outputs
test_inputs = np.array([[1, 1], [1, 0], [0, 1], [0, 0]])
correct_outputs = [False, True, True, False]


def not_perceptron(inputs):
    # NOT: weight -1, bias 0.9, fires only when the input is 0
    weight = -1
    bias = 0.9
    outputs = []
    for x in inputs:
        linear_combination = weight * x + bias
        output = int(linear_combination >= 0)
        outputs.append(output)
    return outputs


def and_perceptron(inputs1, inputs2):
    # AND: fires only when both inputs are 1
    weight1 = 1
    weight2 = 1
    bias = -1.1
    outputs = []
    for input1, input2 in zip(inputs1, inputs2):
        linear_combination = weight1 * input1 + weight2 * input2 + bias
        output = int(linear_combination >= 0)
        outputs.append(output)
    return outputs


def or_perceptron(inputs1, inputs2):
    # OR: fires when at least one input is 1
    weight1 = 1
    weight2 = 1
    bias = -0.9
    outputs = []
    for input1, input2 in zip(inputs1, inputs2):
        linear_combination = weight1 * input1 + weight2 * input2 + bias
        output = int(linear_combination >= 0)
        outputs.append(output)
    return outputs


def xor_perceptron(inputs1, inputs2):
    # XOR = [x1 AND (NOT x2)] OR [(NOT x1) AND x2]
    return or_perceptron(
        and_perceptron(inputs1, not_perceptron(inputs2)),
        and_perceptron(not_perceptron(inputs1), inputs2))


xor_outputs = xor_perceptron(test_inputs[:, 0], test_inputs[:, 1])

# Generate and check output
outputs = []
for test_input, xor_output, correct_output in zip(test_inputs, xor_outputs, correct_outputs):
    is_correct_string = 'Yes' if xor_output == correct_output else 'No'
    outputs.append([test_input[0], test_input[1], xor_output, is_correct_string])

# Print output
num_wrong = len([output[3] for output in outputs if output[3] == 'No'])
output_frame = pd.DataFrame(outputs, columns=['Input 1', ' Input 2', ' Activation Output', ' Is Correct'])
if not num_wrong:
    print('Nice! You got it all correct.\n')
else:
    print('You got {} wrong. Keep trying!\n'.format(num_wrong))
print(output_frame.to_string(index=False))
```
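The same network can also be written as two layers of weight matrices, which is closer to how larger neural networks are usually expressed. This sketch is an alternative construction, not the one used in the code above: each NOT is folded into a negative weight, so the hidden unit for $x_1 \land \lnot x_2$ uses weights $(1, -1)$ and bias $-0.5$ (values chosen for illustration), while the output unit reuses the OR perceptron's weights and bias.

```python
import numpy as np


def step(z):
    """Step activation used throughout: 1 where z >= 0, else 0."""
    return (z >= 0).astype(int)


X = np.array([[1, 1], [1, 0], [0, 1], [0, 0]])

# Hidden layer (illustrative weights): column 0 computes x1 AND (NOT x2),
# column 1 computes (NOT x1) AND x2, with each NOT folded into a -1 weight.
W_hidden = np.array([[1, -1],
                     [-1, 1]])
b_hidden = np.array([-0.5, -0.5])

# Output layer: the OR perceptron from earlier (weights 1, 1, bias -0.9)
w_out = np.array([1, 1])
b_out = -0.9

hidden = step(X @ W_hidden + b_hidden)
xor_out = step(hidden @ w_out + b_out)
print(xor_out)
```

The hidden layer is what makes XOR possible: it maps the four inputs to points that a single OR perceptron can separate.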

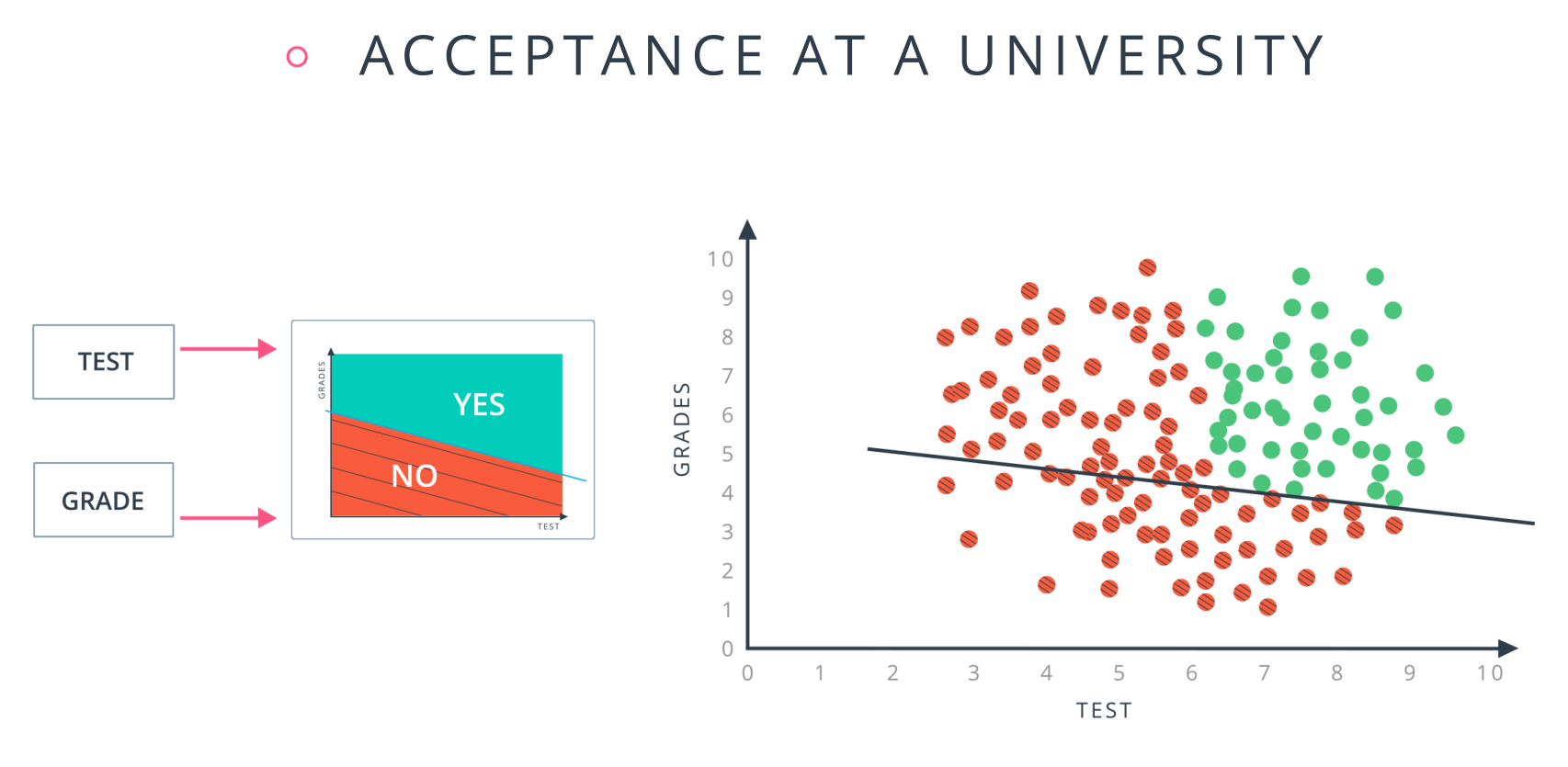

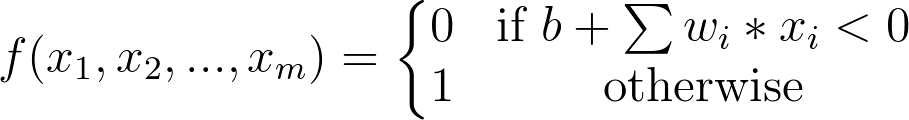

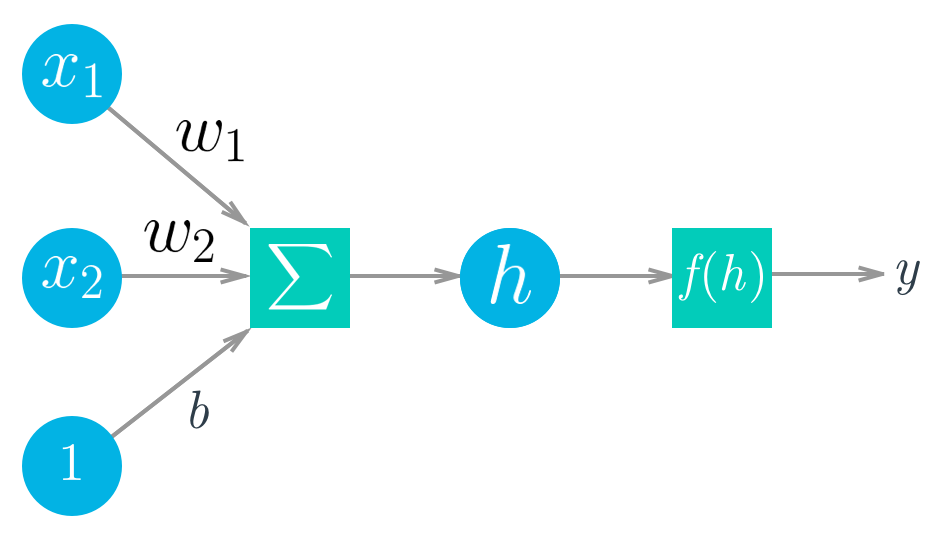

2. The simplest neural network

Here, $h$ is the weighted sum of the inputs plus the bias:

$$h = \sum_i w_i x_i + b$$

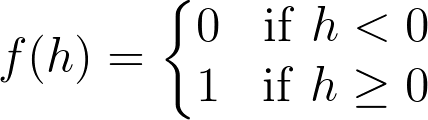

Activation function:

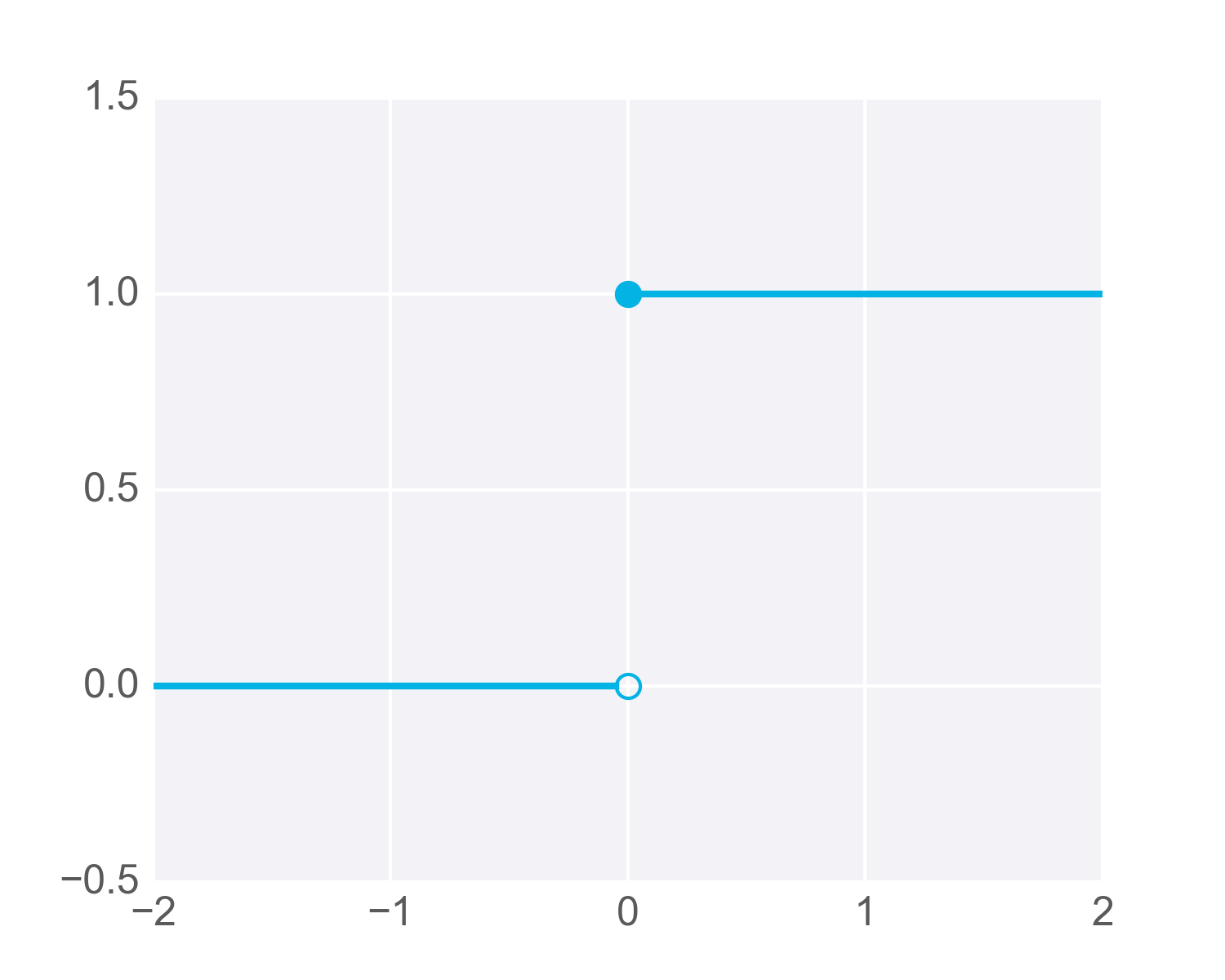

Step function:

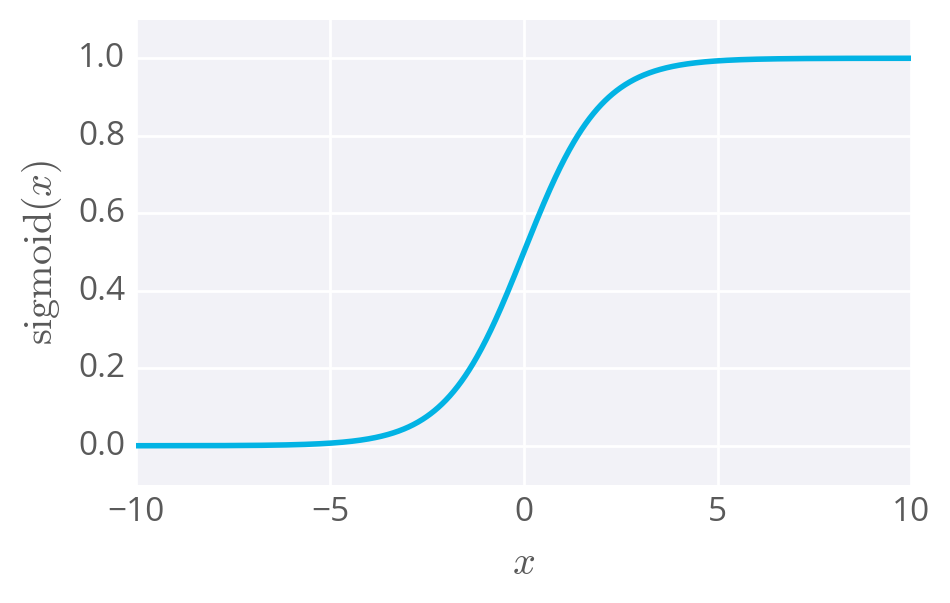

Sigmoid function:

$$\mathrm{sigmoid}(x) = \frac{1}{1 + e^{-x}}$$

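The sigmoid's output lies strictly between 0 and 1, so when it is used as the network's output it can be interpreted as a probability of success.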
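To compare the two activation functions numerically, this small sketch evaluates both at a few sample values of $h$ (the sample points are arbitrary). The step function follows the same `>= 0` rule as the perceptron code above: its output jumps from 0 to 1 at $h = 0$, whereas the sigmoid passes smoothly through $\mathrm{sigmoid}(0) = 0.5$.

```python
import numpy as np


def step(h):
    """Step activation: 1 where h >= 0, else 0 (same rule as the perceptrons above)."""
    return (h >= 0).astype(float)


def sigmoid(h):
    """Sigmoid activation 1 / (1 + e^(-h)).

    Mathematically always strictly between 0 and 1; in floating point it can
    round to exactly 1.0 for very large h.
    """
    return 1 / (1 + np.exp(-h))


h = np.array([-5.0, -1.0, 0.0, 1.0, 5.0])
for value, s, p in zip(h, step(h), sigmoid(h)):
    print(f"h = {value:+.1f}   step = {s:.0f}   sigmoid = {p:.4f}")
```

Because $\mathrm{sigmoid}(-h) = 1 - \mathrm{sigmoid}(h)$, the printed sigmoid values for $h$ and $-h$ add up to 1.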

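Exercise: use NumPy to compute the output of a simple network with two input nodes and one output node that uses the sigmoid activation function:

① Implement the sigmoid function $\mathrm{sigmoid}(x) = 1/(1 + e^{-x})$ with `np.exp`.

② Compute the network's output: $y = f(h) = \mathrm{sigmoid}\left(\sum_i w_i x_i + b\right)$.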

```python
import numpy as np


def sigmoid(x):
    # TODO: Implement sigmoid function
    return 1 / (1 + np.exp(-x))


inputs = np.array([0.7, -0.3])
weights = np.array([0.1, 0.8])
bias = -0.1

# TODO: Calculate the output
output = sigmoid(np.dot(weights, inputs) + bias)

print('Output:')
print(output)
```
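The exercise computes one output for one input vector. As a sketch, assuming several input vectors are stacked as the rows of a matrix (the second and third rows below are made-up values for illustration), a single matrix-vector product evaluates $\sum_i w_i x_i$ for every row at once:

```python
import numpy as np


def sigmoid(x):
    return 1 / (1 + np.exp(-x))


weights = np.array([0.1, 0.8])
bias = -0.1

# Hypothetical batch: the exercise's input plus two made-up rows
inputs = np.array([[0.7, -0.3],
                   [0.0, 0.0],
                   [1.0, 1.0]])

# inputs @ weights gives sum_i w_i x_i for every row; bias is broadcast
outputs = sigmoid(inputs @ weights + bias)
print(outputs)
```

The first element should match the exercise's single output (about 0.433, since $h = 0.07 - 0.24 - 0.1 = -0.27$).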

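3. Gradient descent

The key parameters of the model are its weights, so we need a function that measures how well a given set of weights makes the network fit the data.

A commonly used measure is the sum of squared errors (SSE):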