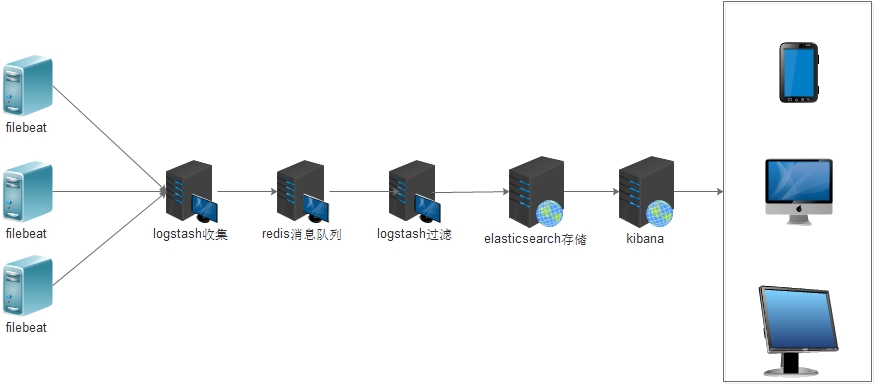

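ELK production log collection architecture (filebeat-logstash-redis-logstash-elasticsearch-kibana)

The architecture for this setup is as follows.

Notes:

1. The front-end servers run only filebeat, a lightweight log shipper that does not need a JDK.

2. Collected logs are forwarded to the redis message queue without any processing (they pass through a logstash instance that only relays them).

3. The redis message queue only buffers log data temporarily, so persistence is not required.

4. logstash reads from the redis message queue, filters the data according to its rules, and stores the result in elasticsearch.

5. kibana provides the graphical front end.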
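The buffering role that redis plays in notes 2 to 4 can be sketched in a few lines of Python. This is only an illustration under stated assumptions: a `collections.deque` stands in for a redis list, the helper names `ship` and `index_next` are hypothetical, and the sample log lines are made up. The real pipeline uses the logstash redis plugins against an actual redis server.

```python
import json
from collections import deque

# Stand-in for one redis list (assumption: the real queue lives in redis).
queue = deque()


def ship(raw_line, tags):
    """Shipper side: wrap the raw line without parsing it and append it to the queue."""
    event = {"message": raw_line, "tags": list(tags)}
    queue.append(json.dumps(event))  # comparable to pushing onto the tail of a list


def index_next():
    """Indexer side: take the oldest event off the queue, or None if it is empty."""
    if not queue:
        return None
    return json.loads(queue.popleft())  # comparable to popping from the head


# Made-up sample lines, shipped untouched.
ship("Mar  1 10:00:00 host sshd[1]: session opened", ["system-log-5611"])
ship('{"status": "200"}', ["nginx-log"])

first = index_next()
second = index_next()
```

Events leave the queue in the order they arrived, and once the indexer has consumed them nothing remains in the buffer, which is why persistence on the queue is not essential in this design.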

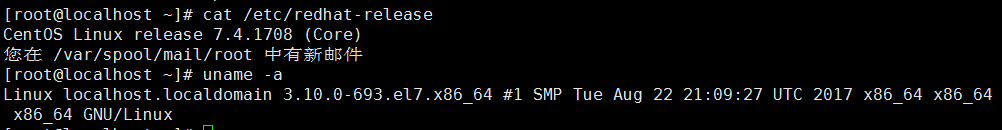

Checking the environment

Install filebeat on the client servers

```shell
rpm -ivh filebeat-6.2.4-x86_64.rpm
```

Edit the configuration file /etc/filebeat/filebeat.yml (this example collects the system logs and the nginx access log)

```yaml
filebeat.prospectors:
- type: log
  enabled: true
  paths:
    - /var/log/*.log
    - /var/log/messages
  tags: ["system-log-5611"]
- type: log
  enabled: true
  paths:
    - /data/logs/nginx/http-access.log
  tags: ["nginx-log"]
filebeat.config.modules:
  path: ${path.config}/modules.d/*.yml
  reload.enabled: false
setup.template.settings:
  index.number_of_shards: 3
setup.kibana:
output.logstash:
  hosts: ["localhost:5044"]
```

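PS: The system logs get one tag and the nginx log gets another, which makes filtering easier later on.

Output goes to logstash (in this test logstash runs on the same host; in production it is a separate host).

Edit the logstash configuration file /etc/logstash/conf.d/beat-redis.conf (this logstash instance only collects logs and does no processing; it uses the tags to place events in different redis databases).

Standard output is also enabled to make troubleshooting easier.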

```
input{
    beats{
        port => 5044
    }
}

output{
    if "system-log-5611" in [tags]{
        redis {
            host => "192.168.56.11"
            port => "6379"
            password => "123456"
            db => "3"
            data_type => "list"
            key => "system-log-5611"
        }
        stdout{
            codec => rubydebug
        }
    }
    if "nginx-log" in [tags]{
        redis {
            host => "192.168.56.11"
            port => "6379"
            password => "123456"
            db => "4"
            data_type => "list"
            key => "nginx-log"
        }
        stdout{
            codec => rubydebug
        }
    }
}
```
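The tag-based routing in beat-redis.conf can be pictured as a small lookup. The sketch below is a hedged Python illustration, not part of the pipeline: `ROUTES` and `route` are hypothetical names, and the mapping simply mirrors the two conditionals in the configuration.

```python
# Mirrors the two conditionals in beat-redis.conf: tag -> (redis db, list key).
ROUTES = {
    "system-log-5611": (3, "system-log-5611"),
    "nginx-log": (4, "nginx-log"),
}


def route(event):
    """Return every (db, key) destination whose tag appears in the event's tags."""
    tags = event.get("tags", [])
    return [dest for tag, dest in ROUTES.items() if tag in tags]


nginx_dest = route({"message": "...", "tags": ["nginx-log"]})
system_dest = route({"message": "...", "tags": ["system-log-5611"]})
untagged_dest = route({"message": "...", "tags": ["something-else"]})
```

An event that carries neither tag matches no conditional, so in this sketch (as in the configuration) it is sent nowhere.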

Start the services and check that the configuration is correct

```shell
systemctl start filebeat
/usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/beat-redis.conf
```

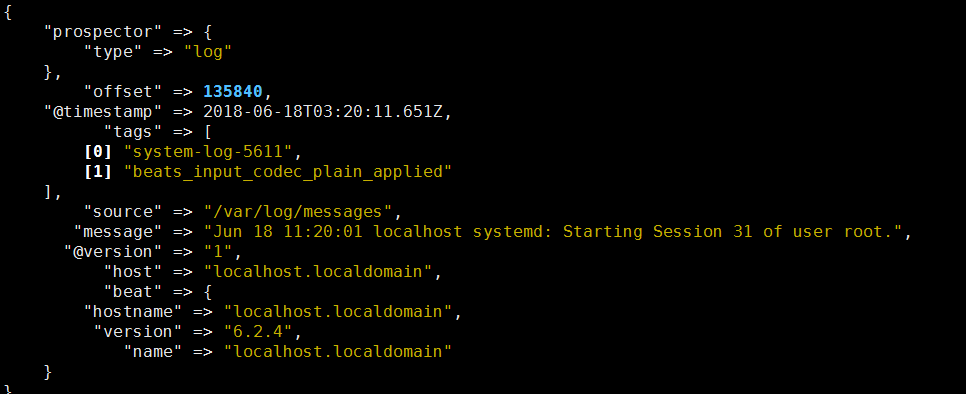

输出如下(同时输出至redis了)

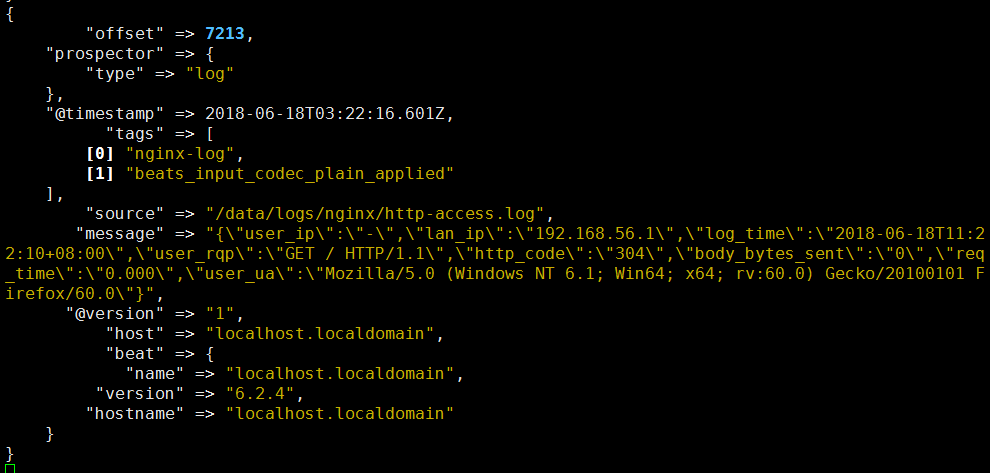

系统日志输出

nginx输出(可以看到nginx的message的输出格式为json但是logstash没有进行处理)

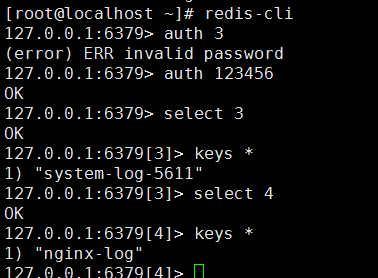

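Setting up the redis host is not covered here.

On another host, edit the logstash configuration so that it reads the log data from redis, filters it, and outputs it to elasticsearch.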

redis-elastic.conf

```
input{
    redis {
        host => "192.168.56.11"
        port => "6379"
        password => "123456"
        db => "3"
        data_type => "list"
        key => "system-log-5611"
    }
    redis {
        host => "192.168.56.11"
        port => "6379"
        password => "123456"
        db => "4"
        data_type => "list"
        key => "nginx-log"
    }
}

filter{
    if "nginx-log" in [tags] {
        json{
            source => "message"
        }
        if [user_ua] != "-" {
            useragent {
                target => "agent"    # store the parsed user-agent details in a separate field named agent
                source => "user_ua"  # the field from the parsed message to analyze
            }
        }
    }
}

output{
    stdout{
        codec => rubydebug
    }
}
```

PS: Because the different logs were collected into different redis databases, the input section has several redis blocks.

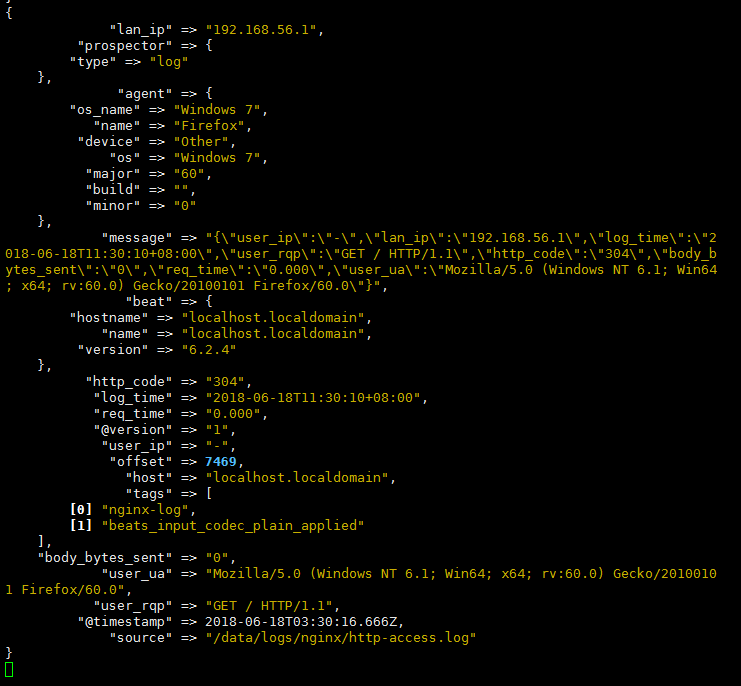

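Because the nginx log is in JSON format, a filter is needed to parse the message into separate fields.

The filter first checks whether the tag marks an nginx log and, if so, parses the message as JSON. It then checks the client information and, if it is not empty (not "-"), uses useragent to extract detailed information about the client.

Start logstash and check the output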
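The filter logic can be sketched in Python for a single event. This is a rough approximation under stated assumptions, not the plugins' actual implementation: `apply_filter` is a hypothetical name, the sample messages are made up, and the real useragent filter produces structured details (browser, OS, device), whereas the placeholder here only copies the raw string.

```python
import json


def apply_filter(event):
    """Rough equivalent of the filter block for one event (a dict)."""
    tags = event.setdefault("tags", [])
    if "nginx-log" not in tags:
        return event  # system logs pass through untouched

    raw = event.get("message")
    if raw is None:
        return event  # nothing to parse
    try:
        parsed = json.loads(raw)
    except ValueError:
        # The json filter tags events it cannot parse instead of dropping them.
        tags.append("_jsonparsefailure")
        return event

    if isinstance(parsed, dict):
        event.update(parsed)  # JSON keys become top-level fields (sketch: objects only)

    if event.get("user_ua", "-") != "-":
        # Placeholder for the useragent filter (assumption: real output is richer).
        event["agent"] = {"raw": event["user_ua"]}
    return event


# Made-up sample events; only the user_ua field name comes from the configuration.
parsed_event = apply_filter(
    {"message": '{"user_ua": "curl/7.29.0", "status": "200"}', "tags": ["nginx-log"]}
)
no_agent_event = apply_filter({"message": '{"user_ua": "-"}', "tags": ["nginx-log"]})
broken_event = apply_filter({"message": "not json", "tags": ["nginx-log"]})
system_event = apply_filter({"message": '{"a": 1}', "tags": ["system-log-5611"]})
```

Here `parsed_event` gains the fields from the JSON message plus an `agent` field, `no_agent_event` gets no `agent` field because the client information is "-", and `system_event` keeps its message unparsed.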

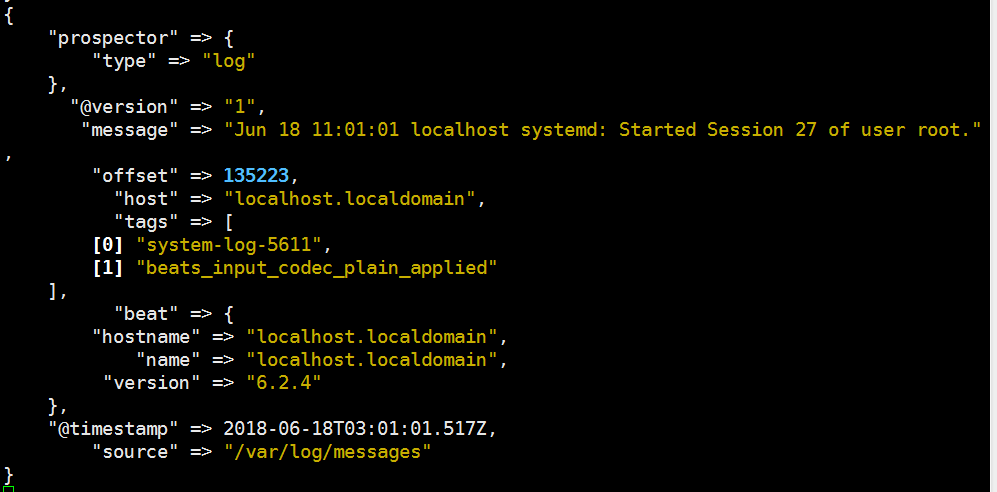

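System log (the output has the same format as on the collecting logstash)

nginx access log (output as parsed JSON fields)

Once the standard output looks right, change the configuration to output to elasticsearch.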

```
input{
    redis {
        host => "192.168.56.11"
        port => "6379"
        password => "123456"
        db => "3"
        data_type => "list"
        key => "system-log-5611"
    }
    redis {
        host => "192.168.56.11"
        port => "6379"
        password => "123456"
        db => "4"
        data_type => "list"
        key => "nginx-log"
    }
}

filter{
    if "nginx-log" in [tags] {
        json{
            source => "message"
        }
        if [user_ua] != "-" {
            useragent {
                target => "agent"    # store the parsed user-agent details in a separate field named agent
                source => "user_ua"  # the field from the parsed message to analyze
            }
        }
    }
}

output{
    if "nginx-log" in [tags]{
        elasticsearch{
            hosts => ["192.168.56.11:9200"]
            index => "nginx-log-%{+YYYY.MM}"
        }
    }
    if "system-log-5611" in [tags]{
        elasticsearch{
            hosts => ["192.168.56.11:9200"]
            index => "system-log-5611-%{+YYYY.MM}"
        }
    }
}
```
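The `%{+YYYY.MM}` suffix means each log type gets one index per month, named from the event's `@timestamp`, which logstash keeps in UTC. A minimal Python sketch of that naming rule follows; `index_name` is a hypothetical helper and the timestamps are made up for illustration.

```python
from datetime import datetime, timedelta, timezone


def index_name(prefix, timestamp):
    """Build '<prefix>-YYYY.MM' from a timezone-aware datetime, evaluated in UTC."""
    return prefix + "-" + timestamp.astimezone(timezone.utc).strftime("%Y.%m")


# An event stamped late on 31 May (UTC) lands in the May index.
may_index = index_name(
    "nginx-log", datetime(2018, 5, 31, 23, 30, tzinfo=timezone.utc)
)

# 07:00 on 1 June in UTC+8 is still 31 May in UTC, so it also lands in May.
utc8 = timezone(timedelta(hours=8))
boundary_index = index_name(
    "system-log-5611", datetime(2018, 6, 1, 7, 0, tzinfo=utc8)
)
```

The second case is worth keeping in mind on servers that run in a local time zone: events near a month boundary may appear in the previous or next month's index relative to local time.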

刷新访问日志

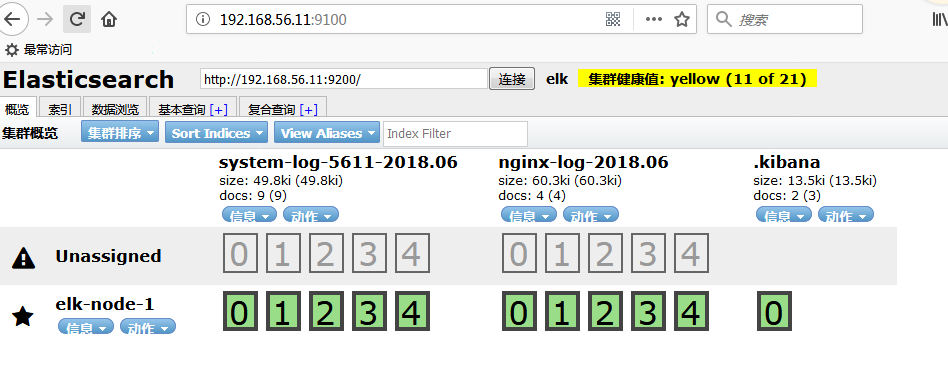

通过head访问查看
