How to Deploy ZenTao on K8S

For deploying ZenTao with Docker, see:

https://www.cnblogs.com/minseo/p/15879412.html

This article describes how to deploy the latest version of ZenTao on K8S.

Software and system versions

# Operating system

# cat /etc/redhat-release

CentOS Linux release 7.9.2009 (Core)

# uname -a

Linux CentOS7K8SMaster01063 3.10.0-1160.el7.x86_64 #1 SMP Mon Oct 19 16:18:59 UTC 2020 x86_64 x86_64 x86_64 GNU/Linux

# Software versions

# kubectl version

Client Version: version.Info{Major:"1", Minor:"13", GitVersion:"v1.13.4", GitCommit:"c27b913fddd1a6c480c229191a087698aa92f0b1", GitTreeState:"clean", BuildDate:"2019-02-28T13:37:52Z", GoVersion:"go1.11.5", Compiler:"gc", Platform:"linux/amd64"}

Server Version: version.Info{Major:"1", Minor:"13", GitVersion:"v1.13.4", GitCommit:"c27b913fddd1a6c480c229191a087698aa92f0b1", GitTreeState:"clean", BuildDate:"2019-02-28T13:30:26Z", GoVersion:"go1.11.5", Compiler:"gc", Platform:"linux/amd64"}

# docker version

Client: Docker Engine - Community
 Version:           24.0.7
 API version:       1.43
 Go version:        go1.20.10
 Git commit:        afdd53b
 Built:             Thu Oct 26 09:11:35 2023
 OS/Arch:           linux/amd64
 Context:           default

Server: Docker Engine - Community
 Engine:
  Version:          24.0.7
  API version:      1.43 (minimum version 1.12)
  Go version:       go1.20.10
  Git commit:       311b9ff
  Built:            Thu Oct 26 09:10:36 2023
  OS/Arch:          linux/amd64
  Experimental:     false
 containerd:
  Version:          1.6.24
  GitCommit:        61f9fd88f79f081d64d6fa3bb1a0dc71ec870523
 runc:
  Version:          1.1.9
  GitCommit:        v1.1.9-0-gccaecfc
 docker-init:
  Version:          0.19.0
  GitCommit:        de40ad0

# ZenTao version

18.8

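Generate the YAML file

Change to the configuration directory

cd /data/softs/k8s/Deploy/demo/zentao

Generate the configuration with kubectl and redirect the output to a file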

kubectl run zentao --replicas=1 --image=hub.zentao.net/app/zentao:18.8 --env="MYSQL_INTERNAL=true" --port=80 --dry-run -o yaml >> zentao-deployment.yaml

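# Parameter breakdown

# Run kubectl run

kubectl run

# Name of the deployment to create; here it is zentao

zentao

# Number of replicas; set to 1 here, which is also the default when omitted

--replicas=1

# Docker image to use

--image=hub.zentao.net/app/zentao:18.8

# Environment variable that tells the image to use its built-in MySQL; without it, startup fails with a MySQL connection error

--env="MYSQL_INTERNAL=true"

# Port the container listens on

--port=80

# Do not actually create anything

--dry-run

# Print the generated object as YAML

-o yaml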
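On newer kubectl releases `kubectl run` creates a bare Pod and no longer accepts `--replicas`, so the command above only works with older clients such as the v1.13.4 used here. A rough equivalent for a newer client might look like the sketch below. It assumes kubectl v1.19 or later, and the function name `gen_zentao_deployment` is made up for illustration.

```shell
#!/usr/bin/env bash
# Sketch for newer clients (assumption: kubectl v1.19 or later).
# gen_zentao_deployment is a hypothetical helper name.
gen_zentao_deployment() {
  # Render a Deployment manifest locally; nothing is sent to the cluster.
  kubectl create deployment zentao \
    --image=hub.zentao.net/app/zentao:18.8 \
    --port=80 \
    --replicas=1 \
    --dry-run=client -o yaml
}

# Usage (requires kubectl on PATH):
#   gen_zentao_deployment > zentao-deployment.yaml
```

Two differences from the `kubectl run` output are worth knowing: the generated labels and selector are `app: zentao` rather than `run: zentao`, and `kubectl create deployment` has no `--env` flag, so the `MYSQL_INTERNAL` entry has to be added to the generated file by hand.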

Append a document separator to the file. It is required when one file defines several resources of different kinds

echo "---" >> zentao-deployment.yaml

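Generate the Service configuration

# The deployment must exist before the Service configuration can be generated, because kubectl expose needs the name of an existing deployment

# Apply the file to create the deployment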

kubectl apply -f zentao-deployment.yaml

kubectl expose deployment zentao --port=80 --type=NodePort --target-port=80 --name=zentao-service --dry-run -o yaml >> zentao-deployment.yaml

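# Parameter breakdown

# Run kubectl expose to generate the Service

kubectl expose

# The Service fronts the deployment named zentao, i.e. the deployment created in the previous step

deployment zentao

# Port the Service listens on inside the cluster

--port=80

# Service type NodePort: a port from the cluster's NodePort range is allocated and opened on every Node

--type=NodePort

# Container port that the Service forwards traffic to

--target-port=80

# Name of the Service

--name=zentao-service

# Do not create anything; print the generated object as YAML

--dry-run

-o yaml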

Complete YAML configuration file

# cat zentao-deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  creationTimestamp: null
  labels:
    run: zentao
  name: zentao
spec:
  replicas: 1
  selector:
    matchLabels:
      run: zentao
  strategy: {}
  template:
    metadata:
      creationTimestamp: null
      labels:
        run: zentao
    spec:
      containers:
      - env:
        - name: MYSQL_INTERNAL
          value: "true"
        image: hub.zentao.net/app/zentao:18.8
        name: zentao
        ports:
        - containerPort: 80
        resources: {}
status: {}
---
apiVersion: v1
kind: Service
metadata:
  creationTimestamp: null
  labels:
    run: zentao
  name: zentao-service
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    run: zentao
  type: NodePort
status:
  loadBalancer: {}

Apply the YAML file to create the deployment and the Service

# kubectl apply -f zentao-deployment.yaml

deployment.apps/zentao created

service/zentao-service created

List the resulting Pods and Services

# kubectl get pod,svc

NAME READY STATUS RESTARTS AGE

pod/db-0 1/1 Running 1 8d

pod/nfs-client-provisioner-687f8c8b59-ghg8f 1/1 Running 1 9d

pod/tomcat-5d6455cc4-2mxng 1/1 Running 1 3d2h

pod/tomcat-5d6455cc4-kvpdm 1/1 Running 1 3d2h

pod/tomcat-5d6455cc4-q9b9m 1/1 Running 1 3d2h

pod/zentao-7697b87b74-p7jj9 1/1 Running 0 40s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.0.0.1 <none> 443/TCP 14d

service/mysql ClusterIP None <none> 3306/TCP 8d

service/tomcat-service NodePort 10.0.0.74 <none> 8080:47537/TCP 3d2h

service/zentao-service NodePort 10.0.0.48 <none> 80:30188/TCP 27s

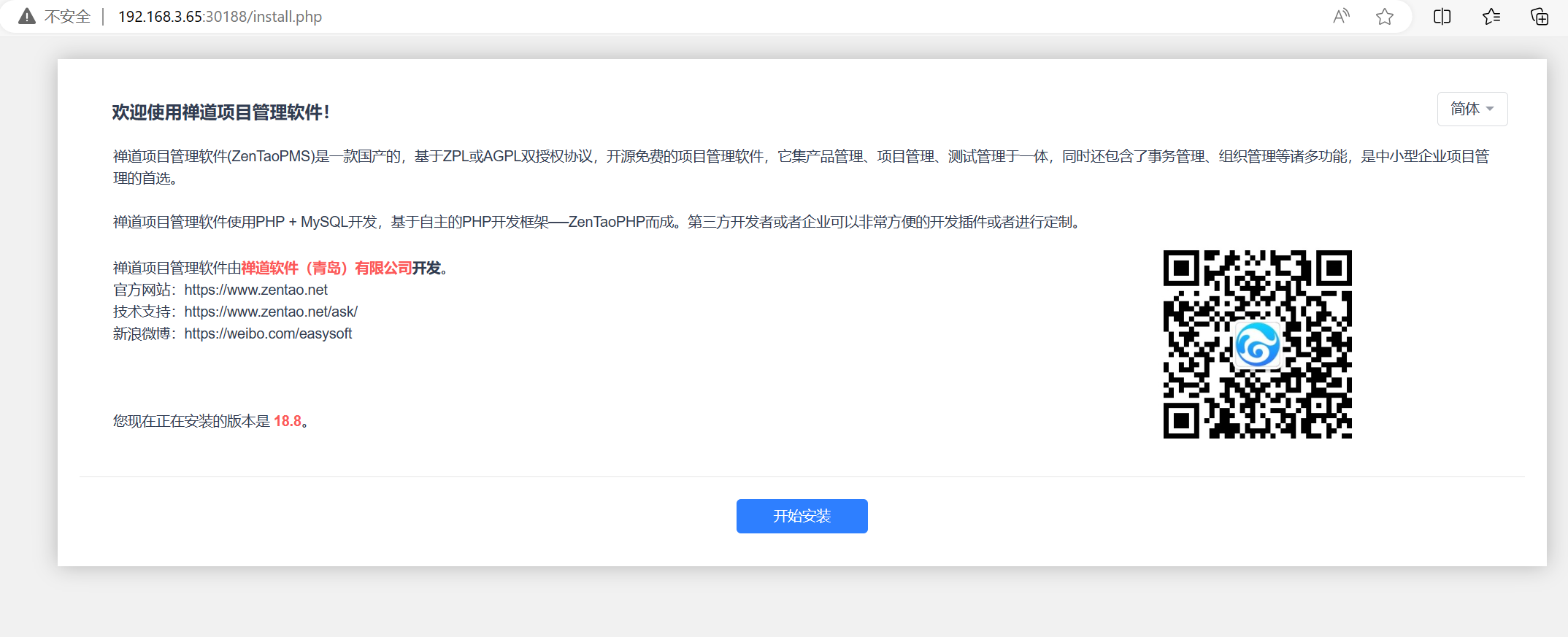

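The NodePort in the output above is 30188 (the number after the colon in the PORT(S) column). Open ZenTao in a browser using the IP of any Node plus that port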

http://nodeip:port
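The port after the colon in the PORT(S) column is the NodePort. As a small illustration, the helper below pulls the port out of such a value and assembles the URL. The names `node_port` and `NODE_IP` are invented for this sketch, and 192.168.3.66 stands in for the address of one of the Nodes.

```shell
#!/usr/bin/env bash
# node_port: extract the NodePort from a PORT(S) value such as "80:30188/TCP".
node_port() {
  local ports="$1"
  ports="${ports#*:}"            # strip the Service port and the colon
  printf '%s\n' "${ports%%/*}"   # strip the "/TCP" suffix
}

NODE_IP="192.168.3.66"   # assumption: example Node address; any Node IP works for a NodePort Service
echo "http://${NODE_IP}:$(node_port "80:30188/TCP")"
```

With access to the cluster, `kubectl get svc zentao-service -o jsonpath='{.spec.ports[0].nodePort}'` returns the same number directly.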

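The installation and configuration steps are not covered in detail here

Nothing is persisted at this point, so all data is lost when the Pod restarts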

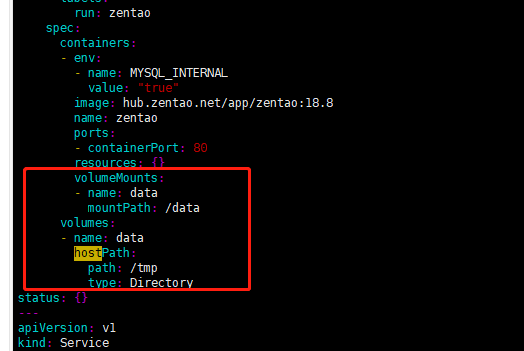

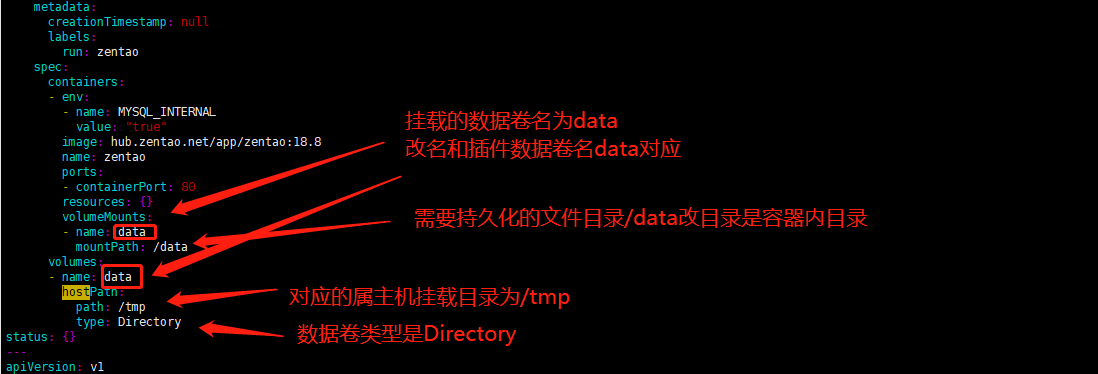

Configure a local data volume

Edit the configuration file and add the following local volume settings

        volumeMounts:
        - name: data
          mountPath: /data
      volumes:
      - name: data
        hostPath:
          path: /tmp
          type: Directory

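Pay attention to where each part goes: volumeMounts belongs to the zentao container entry (at the same level as ports and resources), while volumes belongs to the Pod spec (at the same level as containers)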
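To make the placement concrete, the sketch below writes out a Deployment manifest with both blocks in position. The file name `zentao-hostpath.yaml` is an arbitrary choice for this example, and the fields emitted only as empty defaults by the generator (`creationTimestamp`, `strategy`, `resources`, `status`) are left out for brevity.

```shell
#!/usr/bin/env bash
# Write a Deployment manifest with the hostPath volume in place.
# The file name zentao-hostpath.yaml is an arbitrary choice for this sketch.
cat > zentao-hostpath.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    run: zentao
  name: zentao
spec:
  replicas: 1
  selector:
    matchLabels:
      run: zentao
  template:
    metadata:
      labels:
        run: zentao
    spec:
      containers:
      - name: zentao
        image: hub.zentao.net/app/zentao:18.8
        env:
        - name: MYSQL_INTERNAL
          value: "true"
        ports:
        - containerPort: 80
        volumeMounts:          # belongs to the container entry
        - name: data
          mountPath: /data
      volumes:                 # belongs to the Pod spec, next to "containers"
      - name: data
        hostPath:
          path: /tmp
          type: Directory      # the directory must already exist on the Node
EOF
```

The resulting file can be applied with `kubectl apply -f zentao-hostpath.yaml` in place of the Deployment half of zentao-deployment.yaml.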

Re-apply the configuration file

# kubectl apply -f zentao-deployment.yaml

deployment.apps/zentao configured

service/zentao-service configured

Check which host the new Pod was scheduled to

# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

db-0 1/1 Running 1 8d 172.17.83.7 192.168.3.66 <none> <none>

nfs-client-provisioner-687f8c8b59-ghg8f 1/1 Running 1 9d 172.17.83.6 192.168.3.66 <none> <none>

tomcat-5d6455cc4-2mxng 1/1 Running 1 3d17h 172.17.4.10 192.168.3.65 <none> <none>

tomcat-5d6455cc4-kvpdm 1/1 Running 1 3d17h 172.17.4.11 192.168.3.65 <none> <none>

tomcat-5d6455cc4-q9b9m 1/1 Running 1 3d17h 172.17.83.3 192.168.3.66 <none> <none>

zentao-78dcf94f46-4nsn8 1/1 Running 0 2m1s 172.17.83.8 192.168.3.66 <none> <none>

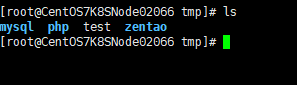

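The Pod was scheduled to host 192.168.3.66. Log in to that host and look at the /tmp directory

The data in the container's /data directory has been persisted to /tmp on that host

The data is now persisted, but Pods are ephemeral and the host a Pod runs on can change, so network-backed persistent storage is needed

Two options for network persistent storage are NFS and Heketi (which manages GlusterFS). Because NFS does not provide high availability, we go with Heketi

To set up Heketi, see: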

https://www.cnblogs.com/minseo/p/12575604.html

Create the YAML file for the PVC

Prerequisite: the StorageClass already exists; see the Heketi setup article above

# cat gluster-zentao-pvc.yaml

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: gluster-zentao-pvc
  namespace: default
  #annotations:
  #  volume.beta.kubernetes.io/storage-class: "glusterfs"
spec:
  # Must match the name of the StorageClass
  storageClassName: gluster-heketi-storageclass
  # ReadWriteOnce (RWO): read-write, mountable by a single node only
  # ReadOnlyMany (ROX): read-only, mountable by multiple nodes
  # ReadWriteMany (RWX): read-write, mountable by multiple nodes
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 20Gi

Create the PVC

# kubectl apply -f gluster-zentao-pvc.yaml

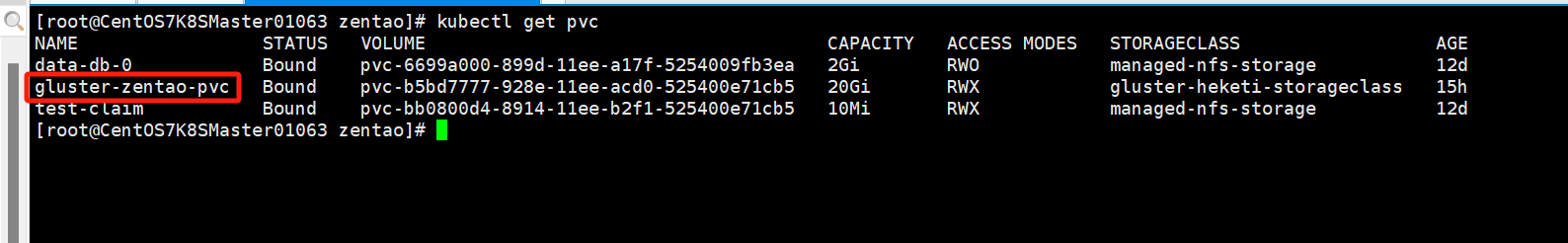

Check it

# kubectl get pvc

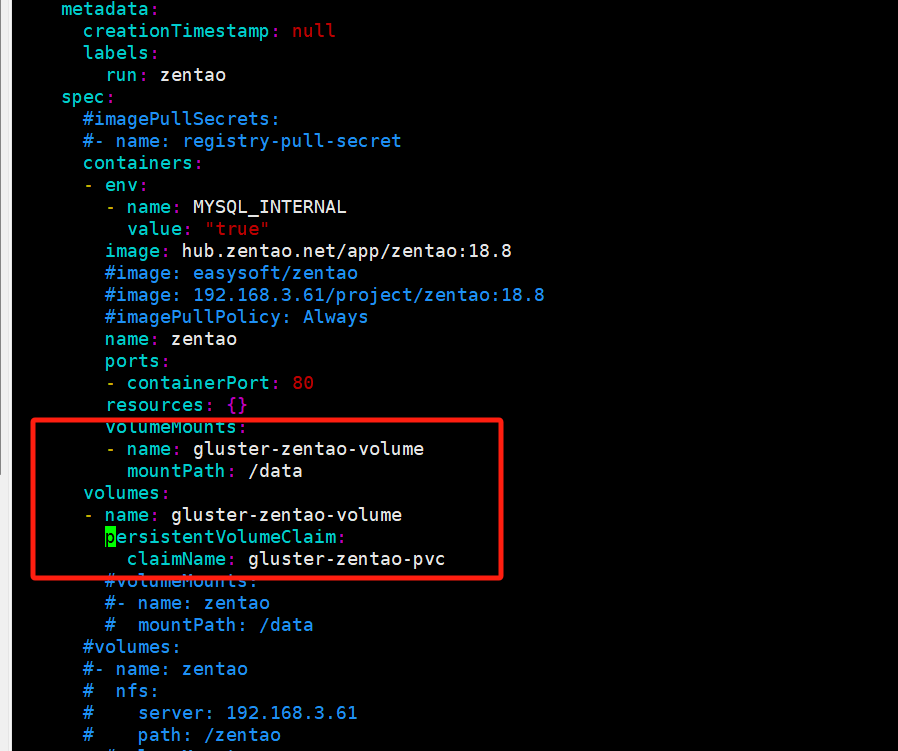
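Dynamic provisioning can take a moment, so it can help to wait until the claim is actually bound before mounting it. The following is a minimal sketch of such a wait loop; `wait_for_pvc` is a hypothetical helper, and the retry count and delay are arbitrary defaults.

```shell
#!/usr/bin/env bash
# wait_for_pvc: poll until the claim reports phase "Bound" or the tries run out.
# Arguments: claim name, max tries (default 30), delay in seconds (default 2).
wait_for_pvc() {
  local name="$1" tries="${2:-30}" delay="${3:-2}" phase="" i
  for ((i = 0; i < tries; i++)); do
    # .status.phase is Pending until a volume has been provisioned and bound.
    phase="$(kubectl get pvc "$name" -o jsonpath='{.status.phase}' 2>/dev/null)"
    if [[ "$phase" == "Bound" ]]; then
      echo "PVC $name is Bound"
      return 0
    fi
    sleep "$delay"
  done
  echo "PVC $name is still ${phase:-unknown} after $tries checks" >&2
  return 1
}

# Usage (requires kubectl and cluster access):
#   wait_for_pvc gluster-zentao-pvc
```

If the claim stays in Pending, `kubectl describe pvc gluster-zentao-pvc` shows the provisioning events.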

Modify the ZenTao deployment file to mount the volume

# cat zentao-deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  creationTimestamp: null
  labels:
    run: zentao
  name: zentao
spec:
  replicas: 1
  selector:
    matchLabels:
      run: zentao
  strategy: {}
  template:
    metadata:
      creationTimestamp: null
      labels:
        run: zentao
    spec:
      #imagePullSecrets:
      #- name: registry-pull-secret
      containers:
      - env:
        - name: MYSQL_INTERNAL
          value: "true"
        image: hub.zentao.net/app/zentao:18.8
        #image: easysoft/zentao
        #image: 192.168.3.61/project/zentao:18.8
        #imagePullPolicy: Always
        name: zentao
        ports:
        - containerPort: 80
        resources: {}
        volumeMounts:
        - name: gluster-zentao-volume
          mountPath: /data
      volumes:
      - name: gluster-zentao-volume
        persistentVolumeClaim:
          claimName: gluster-zentao-pvc
        #volumeMounts:
        #- name: zentao
        #  mountPath: /data
      #volumes:
      #- name: zentao
      #  nfs:
      #    server: 192.168.3.61
      #    path: /zentao
        #volumeMounts:
        #- name: data
        #  mountPath: /data
      #volumes:
      #- name: data
      #  hostPath:
      #    path: /tmp
      #    type: Directory
status: {}
---
apiVersion: v1
kind: Service
metadata:
  creationTimestamp: null
  labels:
    run: zentao
  name: zentao-service
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    run: zentao
  type: NodePort
status:
  loadBalancer: {}

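Explanation of the added configuration

        # Mount the data volume
        volumeMounts:
        # Mount the volume at /data in the container; the name must match an entry under volumes below
        - name: gluster-zentao-volume
          mountPath: /data
      # Data volumes
      volumes:
      # Volume name, matching the name used in volumeMounts above
      - name: gluster-zentao-volume
        # The volume is backed by the PVC created earlier
        persistentVolumeClaim:
          claimName: gluster-zentao-pvc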

Mind the indentation

Create the deployment and the Service

# kubectl apply -f zentao-deployment.yaml

List the created Pods and Services

# kubectl get pod,svc

NAME READY STATUS RESTARTS AGE

pod/db-0 1/1 Running 1 12d

pod/nfs-client-provisioner-687f8c8b59-ghg8f 1/1 Running 1 12d

pod/zentao-786477c5cb-d87zm 1/1 Running 0 6m11s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/glusterfs-dynamic-885096e9-90e0-11ee-acd0-525400e71cb5 ClusterIP 10.0.0.22 <none> 1/TCP 33h

service/glusterfs-dynamic-a9e69673-9313-11ee-acd0-525400e71cb5 ClusterIP 10.0.0.253 <none> 1/TCP 6m39s

service/kubernetes ClusterIP 10.0.0.1 <none> 443/TCP 17d

service/mysql ClusterIP None <none> 3306/TCP 12d

service/tomcat-service NodePort 10.0.0.74 <none> 8080:47537/TCP 6d19h

service/zentao-service NodePort 10.0.0.154 <none> 80:40834/TCP 6m11s

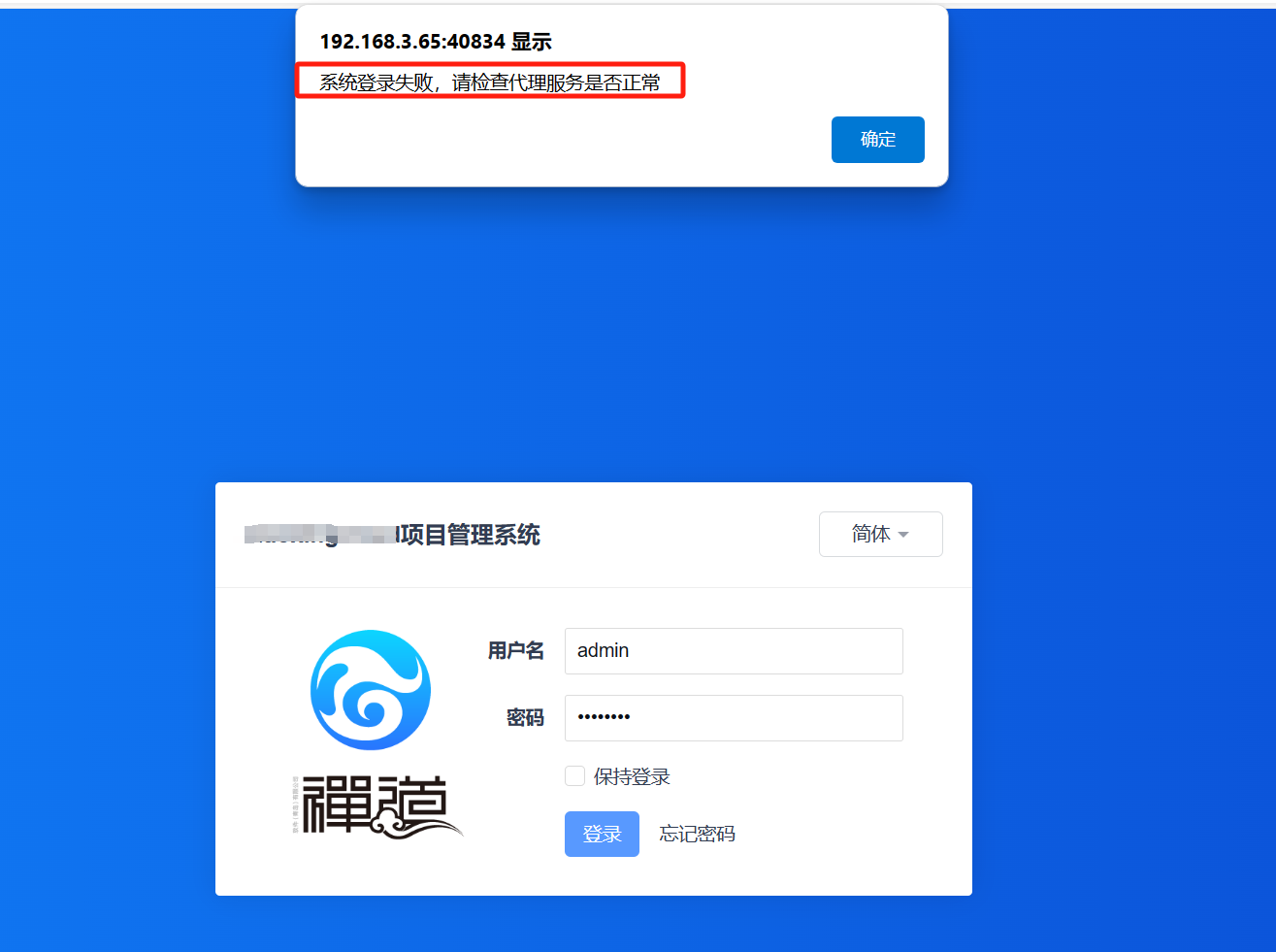

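The port allocated for the NodePort Service is 40834. Access ZenTao with a Node IP plus that port

http://ip:port

The installation steps are not covered in detail here

After installation, logging in fails with a proxy error

The cause is unknown; it may be related to the Heketi dynamic storage

Error message: "系统登录失败,请检查代理服务是否正常" (system login failed; please check whether the proxy service is working properly)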
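Since the cause was not tracked down, a reasonable first step is to collect basic information from the Pod. The sketch below is only a starting point for investigation, not a fix. `zentao_diag` is a made-up name, and the function assumes the `run=zentao` label from the manifest above and that `df` is available inside the image.

```shell
#!/usr/bin/env bash
# zentao_diag: gather basic troubleshooting data for the zentao Pod.
zentao_diag() {
  local pod
  # Look up the first Pod carrying the label from the Deployment template.
  pod="$(kubectl get pod -l run=zentao -o jsonpath='{.items[0].metadata.name}')" || return 1
  [[ -n "$pod" ]] || { echo "no zentao Pod found" >&2; return 1; }

  kubectl describe pod "$pod"          # events, mount errors, restart counts
  kubectl logs "$pod" --tail=100       # recent container output
  kubectl exec "$pod" -- df -h /data   # check what is mounted at /data
}

# Usage (requires kubectl and cluster access):
#   zentao_diag > zentao-diag.txt
```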

Use NFS as the storage backend

To set up NFS storage, see:

https://www.cnblogs.com/minseo/p/12455950.html

On the NFS server, add an export entry for the ZenTao data directory

# cat /etc/exports

/nfs *(rw,no_root_squash)

/ifs/kubernetes *(rw,no_root_squash)

/zentao *(rw,no_root_squash)

# systemctl restart nfs
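The export entry can also be added idempotently, so that running the step twice does not duplicate the line. The sketch below is one way to do that. `add_export` is a hypothetical helper, and its second argument exists only so the function can be tried against a scratch file instead of /etc/exports.

```shell
#!/usr/bin/env bash
# add_export: append "<dir> *(rw,no_root_squash)" to an exports file
# unless a line for <dir> is already present.
add_export() {
  local dir="$1" exports_file="${2:-/etc/exports}"
  if ! grep -qE "^${dir}[[:space:]]" "$exports_file" 2>/dev/null; then
    printf '%s *(rw,no_root_squash)\n' "$dir" >> "$exports_file"
  fi
}

# Usage on the NFS server (as root):
#   add_export /zentao
```

On the NFS server this would typically be followed by `mkdir -p /zentao` and `exportfs -ra`, which re-reads /etc/exports without restarting the service; `exportfs -v` then lists the active exports.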

Modify the ZenTao YAML configuration file

# cat zentao-deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  creationTimestamp: null
  labels:
    run: zentao
  name: zentao
spec:
  replicas: 1
  selector:
    matchLabels:
      run: zentao
  strategy: {}
  template:
    metadata:
      creationTimestamp: null
      labels:
        run: zentao
    spec:
      #imagePullSecrets:
      #- name: registry-pull-secret
      containers:
      - env:
        - name: MYSQL_INTERNAL
          value: "true"
        image: hub.zentao.net/app/zentao:18.8
        #image: easysoft/zentao
        #image: 192.168.3.61/project/zentao:18.8
        #imagePullPolicy: Always
        name: zentao
        ports:
        - containerPort: 80
        resources: {}
        #volumeMounts:
        #- name: gluster-zentao-volume
        #  mountPath: /data
      #volumes:
      #- name: gluster-zentao-volume
      #  persistentVolumeClaim:
      #    claimName: gluster-zentao-pvc
        volumeMounts:
        - name: zentao
          mountPath: /data
      volumes:
      - name: zentao
        nfs:
          server: 192.168.3.61
          path: /zentao
        #volumeMounts:
        #- name: data
        #  mountPath: /data
      #volumes:
      #- name: data
      #  hostPath:
      #    path: /tmp
      #    type: Directory
status: {}
---
apiVersion: v1
kind: Service
metadata:
  creationTimestamp: null
  labels:
    run: zentao
  name: zentao-service
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    run: zentao
  type: NodePort
status:
  loadBalancer: {}

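Parameter breakdown

        # Mount the data volume
        volumeMounts:
        # Volume name; must match the name defined under volumes below
        - name: zentao
          # Mounted at /data inside the container
          mountPath: /data
      # Define the data volume
      volumes:
      # Volume name; matches the name used in volumeMounts above
      - name: zentao
        # The volume is backed by NFS: give the server address and the exported directory
        nfs:
          server: 192.168.3.61
          path: /zentao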

Create the deployment

# kubectl apply -f zentao-deployment.yaml

With NFS as the storage, the login problem seen with GlusterFS does not occur after installation
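A simple way to confirm that the data now survives a Pod restart is to delete the running Pod and let the Deployment create a replacement. The sketch below assumes the `run=zentao` label and the deployment name `zentao` from the manifest above; `restart_zentao` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# restart_zentao: delete the running Pod and wait for the Deployment
# to bring up a replacement.
restart_zentao() {
  kubectl delete pod -l run=zentao &&
    kubectl rollout status deployment/zentao
}

# Usage (requires kubectl and cluster access):
#   restart_zentao
```

Once the function returns, reloading the ZenTao page and logging in with the account created during installation shows whether the contents of /data on the NFS export were picked up by the new Pod.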
