3 Ceph Cluster Setup

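Ceph cluster

Cluster components

A Ceph cluster consists of two kinds of daemons: Ceph OSDs and Ceph Monitors.

Ceph OSD (Object Storage Device): stores data and handles data replication, recovery, backfilling and rebalancing. It also provides some monitoring information to the Ceph Monitors by checking the heartbeats of other OSD daemons.

Ceph Monitor: watches the state of the Ceph cluster and maintains the maps that describe the relationships between cluster components.

A Ceph storage cluster needs at least one Ceph Monitor and two OSD daemons.

Environment preparation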

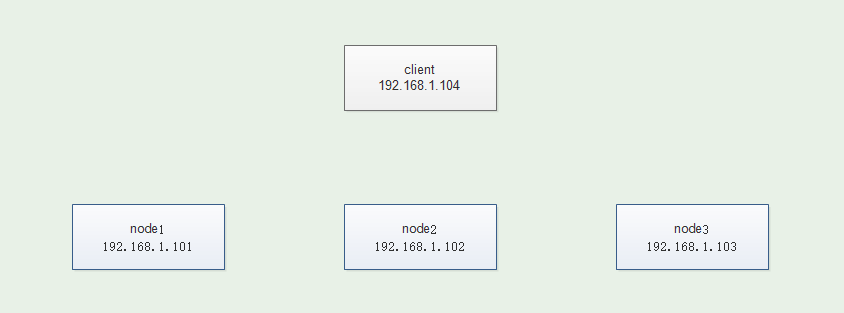

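Cluster diagram

Prerequisites

Prepare four servers with Internet access: three form the Ceph cluster and one acts as the Ceph client. Add one extra disk (/dev/sdb) to every machine except the client; it does not need to be partitioned.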

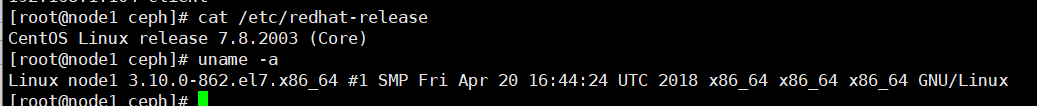

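Checking the environment

Configure hostnames and /etc/hosts (each of the four hosts has its own hostname, but /etc/hosts is identical on all of them):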

```
# cat /etc/hosts
127.0.0.1   localhost localhost.localdomain localhost4 localhost4.localdomain4
::1         localhost localhost.localdomain localhost6 localhost6.localdomain6
192.168.1.101 node1
192.168.1.102 node2
192.168.1.103 node3
192.168.1.104 client
```
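Because /etc/hosts must be the same on all four machines, it helps to append the entries in a way that can be rerun safely. The following is a minimal POSIX sh sketch, not part of the original procedure: `add_hosts_entries` is a hypothetical helper, the addresses are the ones listed above, and the target file is a parameter so you can try it on a scratch copy before running it against /etc/hosts as root.

```shell
#!/bin/sh
# Hypothetical helper: append the cluster host entries to a hosts file,
# skipping any "IP hostname" pair that is already present, so reruns are safe.
add_hosts_entries() {
    hosts_file=$1
    # One "IP hostname" pair per line (addresses taken from the /etc/hosts above)
    printf '%s\n' \
        "192.168.1.101 node1" \
        "192.168.1.102 node2" \
        "192.168.1.103 node3" \
        "192.168.1.104 client" |
    while read -r ip name; do
        # awk exits 0 only if a line has this IP in column 1 and the name in a later column
        if ! awk -v ip="$ip" -v name="$name" '
            $1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
            END { exit(found ? 0 : 1) }' "$hosts_file" 2>/dev/null; then
            printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
        fi
    done
}
```

For example, run `add_hosts_entries /etc/hosts` on each of node1, node2, node3 and client; running it twice adds nothing the second time.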

Disable the firewall and SELinux.

Synchronize the time with ntpdate, and also schedule it in crontab:

```
ntpdate time1.aliyun.com
```
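The crontab entry can be added without opening an editor by filtering the current crontab. This is a minimal sketch with a hypothetical `add_cron_line` filter; it assumes ntpdate lives at /usr/sbin/ntpdate (its usual location on CentOS 7), and the every-10-minutes schedule is only an example.

```shell
#!/bin/sh
# Hypothetical filter: read an existing crontab on stdin and print it with
# LINE appended, unless an identical line is already present.
add_cron_line() {
    line=$1
    existing=$(cat)
    # Re-emit the current entries, if there are any
    if [ -n "$existing" ]; then
        printf '%s\n' "$existing"
    fi
    # Append the new entry only if no line matches it exactly (-x) as a fixed string (-F)
    if ! printf '%s\n' "$existing" | grep -qxF -- "$line"; then
        printf '%s\n' "$line"
    fi
}
```

Usage: `crontab -l 2>/dev/null | add_cron_line '*/10 * * * * /usr/sbin/ntpdate time1.aliyun.com' | crontab -`. Rerunning the same command does not create a duplicate entry.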

Configuring the Ceph yum repository

```
yum install epel-release -y
```

Do this on all four hosts. If you need a repository for a different Ceph release, browse the mirror page and pick the matching directory:

http://mirrors.aliyun.com/ceph

```
# cat /etc/yum.repos.d/ceph.repo
[ceph]
name=ceph
baseurl=http://mirrors.aliyun.com/ceph/rpm-mimic/el7/x86_64/
enabled=1
gpgcheck=0
priority=1

[ceph-noarch]
name=cephnoarch
baseurl=http://mirrors.aliyun.com/ceph/rpm-mimic/el7/noarch/
enabled=1
gpgcheck=0
priority=1

[ceph-source]
name=Ceph source packages
baseurl=http://mirrors.aliyun.com/ceph/rpm-mimic/el7/SRPMS
enabled=1
gpgcheck=0
priority=1
```
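Since only the release directory (`rpm-mimic`) changes between Ceph versions, the file can be generated. A sketch, assuming the mirror keeps the `rpm-<release>/el7/` layout used above; `write_ceph_repo` is a hypothetical name, and whatever release name you pass must actually exist on the mirror page.

```shell
#!/bin/sh
# Hypothetical helper: write a ceph.repo for a given Ceph release name
# (e.g. mimic) from the Aliyun mirror, in the same layout as the file above.
# Assumes the mirror uses the rpm-<release>/el7/ directory scheme.
write_ceph_repo() {
    release=$1
    repo_file=$2
    base="http://mirrors.aliyun.com/ceph/rpm-${release}/el7"
    cat > "$repo_file" <<EOF
[ceph]
name=ceph
baseurl=${base}/x86_64/
enabled=1
gpgcheck=0
priority=1

[ceph-noarch]
name=cephnoarch
baseurl=${base}/noarch/
enabled=1
gpgcheck=0
priority=1

[ceph-source]
name=Ceph source packages
baseurl=${base}/SRPMS
enabled=1
gpgcheck=0
priority=1
EOF
}
```

`write_ceph_repo mimic /etc/yum.repos.d/ceph.repo` reproduces the file shown above.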

Update the packages:

```
yum update
```

Note: this update is required; otherwise later steps will fail.

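Configuring passwordless SSH

Use node1 as the deployment node, and configure it so that node1 can log in to all four hosts without a password:

```
ssh-keygen -t rsa
ssh-copy-id node1
ssh-copy-id node2
ssh-copy-id node3
ssh-copy-id client
```

If you can ssh from node1 to the other hosts without entering a password, SSH is configured correctly.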
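This check can be scripted instead of logging in to each host by hand. A sketch, assuming OpenSSH: with `-o BatchMode=yes`, ssh fails instead of prompting for a password, so any host that still needs one shows up as FAIL. `check_passwordless_ssh` is hypothetical, and the `SSH` variable exists only so the ssh command can be swapped out when testing.

```shell
#!/bin/sh
# Hypothetical check: try a non-interactive SSH login to each host given.
# BatchMode=yes makes ssh fail instead of asking for a password, so a host
# that still needs one is reported. $SSH can override the ssh command.
check_passwordless_ssh() {
    failed=0
    for host in "$@"; do
        if ${SSH:-ssh} -o BatchMode=yes -o ConnectTimeout=5 "$host" true </dev/null >/dev/null 2>&1; then
            echo "OK   $host"
        else
            echo "FAIL $host"
            failed=1
        fi
    done
    # Nonzero if at least one host failed
    return "$failed"
}
```

Run `check_passwordless_ssh node1 node2 node3 client` on node1; the function returns a nonzero status if any host still asks for a password.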

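Install the deployment tool on node1 (the other nodes do not need it):

```
yum install ceph-deploy -y
```

Create a cluster configuration directory.

Note: most of the following steps are run from this directory.

```
mkdir /etc/ceph
cd /etc/ceph
```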

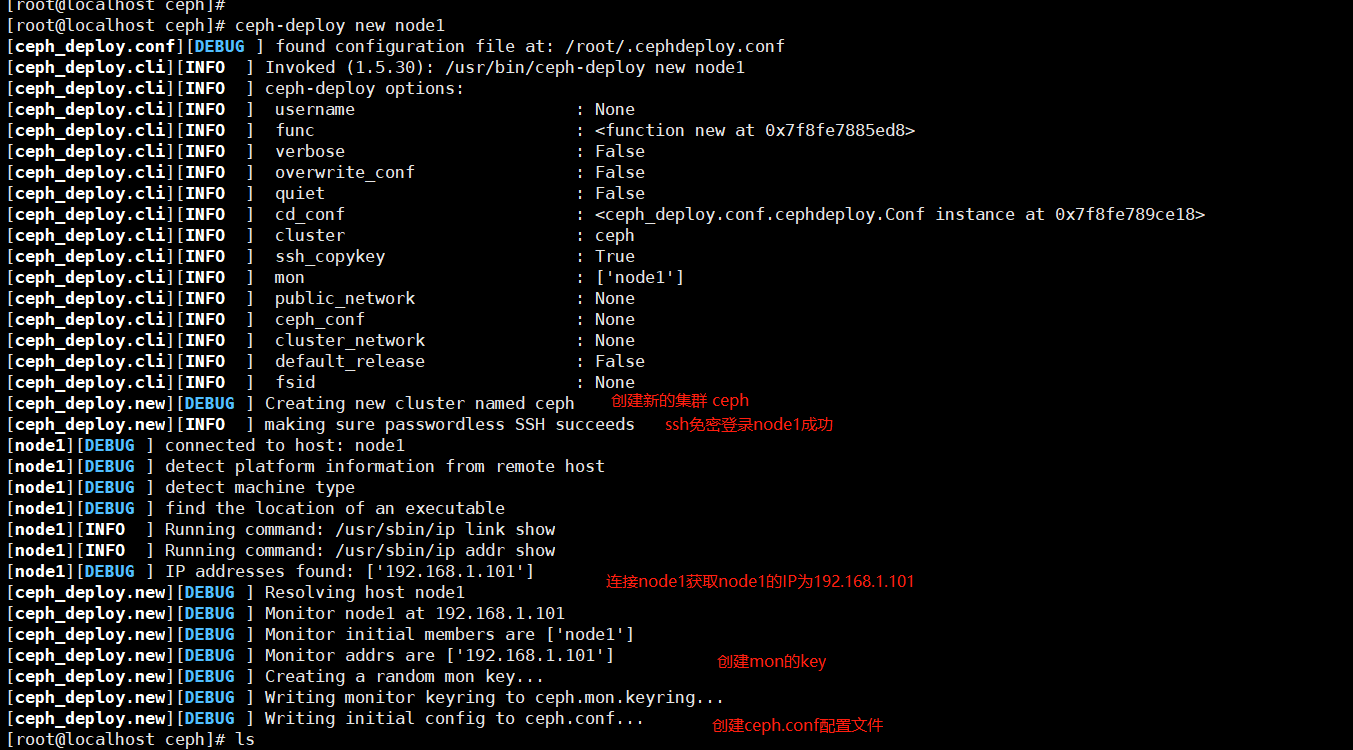

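Creating the cluster

Run this from the /etc/ceph directory created above, because ceph-deploy writes its files to the current directory:

```
[root@node1 ceph]# ceph-deploy new node1
```

The output looks like this: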

```
[root@localhost ceph]# ceph-deploy new node1
[ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf
[ceph_deploy.cli][INFO ] Invoked (1.5.30): /usr/bin/ceph-deploy new node1
[ceph_deploy.cli][INFO ] ceph-deploy options:
[ceph_deploy.cli][INFO ] username : None
[ceph_deploy.cli][INFO ] func : <function new at 0x7f8fe7885ed8>
[ceph_deploy.cli][INFO ] verbose : False
[ceph_deploy.cli][INFO ] overwrite_conf : False
[ceph_deploy.cli][INFO ] quiet : False
[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f8fe789ce18>
[ceph_deploy.cli][INFO ] cluster : ceph
[ceph_deploy.cli][INFO ] ssh_copykey : True
[ceph_deploy.cli][INFO ] mon : ['node1']
[ceph_deploy.cli][INFO ] public_network : None
[ceph_deploy.cli][INFO ] ceph_conf : None
[ceph_deploy.cli][INFO ] cluster_network : None
[ceph_deploy.cli][INFO ] default_release : False
[ceph_deploy.cli][INFO ] fsid : None
[ceph_deploy.new][DEBUG ] Creating new cluster named ceph
[ceph_deploy.new][INFO ] making sure passwordless SSH succeeds
[node1][DEBUG ] connected to host: node1
[node1][DEBUG ] detect platform information from remote host
[node1][DEBUG ] detect machine type
[node1][DEBUG ] find the location of an executable
[node1][INFO ] Running command: /usr/sbin/ip link show
[node1][INFO ] Running command: /usr/sbin/ip addr show
[node1][DEBUG ] IP addresses found: ['192.168.1.101']
[ceph_deploy.new][DEBUG ] Resolving host node1
[ceph_deploy.new][DEBUG ] Monitor node1 at 192.168.1.101
[ceph_deploy.new][DEBUG ] Monitor initial members are ['node1']
[ceph_deploy.new][DEBUG ] Monitor addrs are ['192.168.1.101']
[ceph_deploy.new][DEBUG ] Creating a random mon key...
[ceph_deploy.new][DEBUG ] Writing monitor keyring to ceph.mon.keyring...
[ceph_deploy.new][DEBUG ] Writing initial config to ceph.conf...
```

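This generates the following files in the current configuration directory:

```
# ls
ceph.conf  ceph-deploy-ceph.log  ceph.mon.keyring
```

What they are:

ceph.conf: the cluster configuration file

ceph-deploy-ceph.log: the log of deployments made with ceph-deploy

ceph.mon.keyring: the monitor keyring, i.e. the authentication token the monitors need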

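Installing Ceph on the cluster nodes

Install it on node1, node2, node3 and client:

```
yum install ceph ceph-radosgw -y
```

Check the version:

```
# ceph -v
ceph version 13.2.10 (564bdc4ae87418a232fc901524470e1a0f76d641) mimic (stable)
```

Note: if the Internet connection is good and fast, you can install with `ceph-deploy install node1 node2 node3` instead, but on a slow connection this is painful.

So here we install directly from the Ceph yum repository prepared earlier, using `yum install ceph ceph-radosgw -y`.

Install ceph-common on client:

```
[root@client ~]# yum install ceph-common -y
```

ceph-common has most likely already been installed on client by the previous step, since client also ran `yum install ceph ceph-radosgw -y`.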

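Creating the monitors (mon)

Set the public network used by the monitors.

Edit the configuration file (/etc/ceph/ceph.conf) and add the following line to the [global] section:

```
public network = 192.168.1.0/24
```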
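Editing ceph.conf by hand works, but similar changes come up again later (for example `mon_allow_pool_delete = true`), so an idempotent edit can be handy. A sketch with a hypothetical `set_global_option` helper: it replaces the key if [global] already sets it, and otherwise appends it at the end of the [global] section. It assumes the key contains no regex special characters, and it treats `public network` and `public_network` as different keys even though Ceph accepts both spellings.

```shell
#!/bin/sh
# Hypothetical helper: ensure "KEY = VALUE" is set in the [global] section of a
# ceph.conf-style file. An existing line for KEY inside [global] is replaced;
# otherwise the line is added at the end of [global]. Files without [global]
# are left unchanged.
set_global_option() {
    conf=$1
    key=$2
    value=$3
    out_file="$conf.tmp.$$"
    awk -v key="$key" -v value="$value" '
        # Emit the setting once, at the end of [global], if it was not found there
        function add_if_missing() {
            if (in_global && !done) { print key " = " value; done = 1 }
        }
        # A section header ends the previous section
        /^[ \t]*\[/ {
            add_if_missing()
            in_global = ($0 ~ /^[ \t]*\[global\]/)
        }
        # An existing line for this key inside [global] is replaced in place
        in_global && $0 ~ ("^[ \t]*" key "[ \t]*=") {
            if (!done) { print key " = " value; done = 1 }
            next
        }
        { print }
        END { add_if_missing() }
    ' "$conf" > "$out_file" && mv "$out_file" "$conf"
}
```

For this step: `set_global_option /etc/ceph/ceph.conf "public network" 192.168.1.0/24`.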

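Initialize the monitor and gather the keys. This deploys the monitors listed in ceph.conf (only node1 so far); the client is not involved.

```
ceph-deploy mon create-initial
```

If it fails with:

```
[node1][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf
[ceph_deploy.mon][ERROR ] RuntimeError: config file /etc/ceph/ceph.conf exists with different content; use --overwrite-conf to overwrite
[ceph_deploy][ERROR ] GenericError: Failed to create 1 monitors
```

then run `ceph-deploy mon create-initial` once before adding `public network = 192.168.1.0/24`, and run it again after making the change. Alternatively, as the error message itself suggests, rerun it with `--overwrite-conf`: `ceph-deploy --overwrite-conf mon create-initial`.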

Check the monitor status:

```
[root@node1 ceph]# ceph health
HEALTH_OK
```

Push the configuration (and the admin keyring) to all nodes:

```
ceph-deploy admin node1 node2 node3
```

node2 and node3 now have a few more files:

```
[root@node2 ceph]# ll
total 12
-rw------- 1 root root 151 Aug  3 14:46 ceph.client.admin.keyring
-rw-r--r-- 1 root root 322 Aug  3 14:46 ceph.conf
-rw-r--r-- 1 root root  92 Apr 24 01:07 rbdmap
-rw------- 1 root root   0 Aug  1 16:46 tmpDX_w1Z
```

Check the status:

```
[root@localhost ceph]# ceph -s
  cluster:
    id:     a9aa277d-7192-4687-b384-9787b73ece71
    health: HEALTH_OK                          # cluster health is OK

  services:
    mon: 1 daemons, quorum node1 (age 3m)      # 1 mon
    mgr: no daemons active
    osd: 0 osds: 0 up, 0 in

  data:
    pools:   0 pools, 0 pgs
    objects: 0 objects, 0 B
    usage:   0 B used, 0 B / 0 B avail
    pgs:
```

To avoid a single point of failure for the monitor, you can add more mon nodes (an odd number is recommended, because the monitors form a quorum by majority vote):

```
ceph-deploy mon add node2
ceph-deploy mon add node3
```

Check again:

```
[root@localhost ceph]# ceph -s
  cluster:
    id:     a9aa277d-7192-4687-b384-9787b73ece71
    health: HEALTH_OK

  services:
    mon: 3 daemons, quorum node1,node2,node3 (age 0.188542s)   # now 3 mons
    mgr: no daemons active
    osd: 0 osds: 0 up, 0 in

  data:
    pools:   0 pools, 0 pgs
    objects: 0 objects, 0 B
    usage:   0 B used, 0 B / 0 B avail
    pgs:
```

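#### Fixing clock skew warnings from the monitors

A Ceph cluster is very sensitive to time synchronization. Even with the ntpd service running, you may still see `clock skew detected` warnings.

Try the following:

1, On all Ceph cluster nodes (`node1`, `node2`, `node3`), stop using the ntpd service and synchronize from crontab instead:

```
# systemctl stop ntpd
# systemctl disable ntpd
# crontab -e
*/10 * * * * /usr/sbin/ntpdate ntp1.aliyun.com
```

The crontab entry synchronizes with a public NTP server every 10 minutes (every 5 minutes also works). The full path /usr/sbin/ntpdate is used because cron's default PATH may not include /usr/sbin.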

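2, Raise the clock skew warning thresholds

```
[root@node1 ceph]# vim ceph.conf
```

Add the following two lines to the [global] section:

```
[global]
# clock drift allowed between monitors, in seconds (default 0.05)
mon clock drift allowed = 2
# exponential backoff for clock drift warnings (default 5)
mon clock drift warn backoff = 30
```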

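After changing ceph.conf, push it to all nodes:

```
ceph-deploy --overwrite-conf admin node1 node2 node3
```

The first push did not need the `--overwrite-conf` option. This time ceph.conf has been modified, so `--overwrite-conf` is needed to overwrite the copies already on the nodes.

Restart the ceph-mon.target service on all Ceph cluster nodes:

```
systemctl restart ceph-mon.target
```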

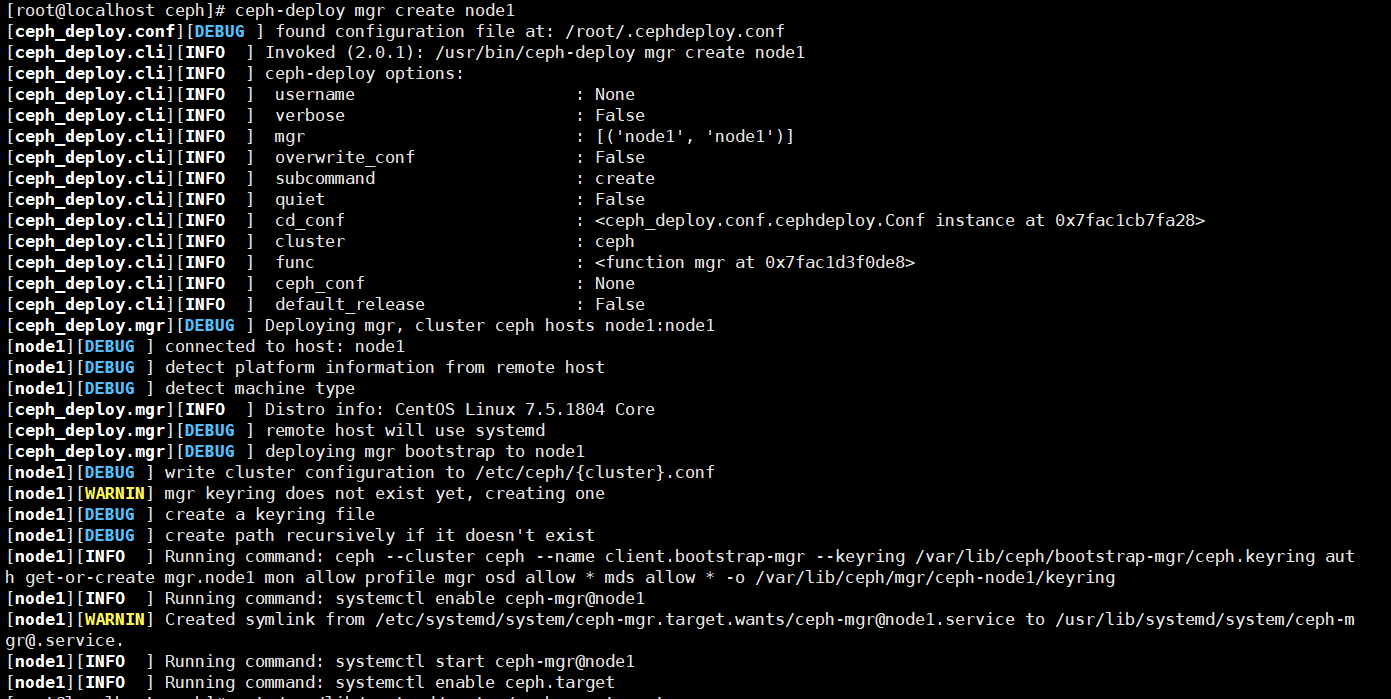

Creating the mgr (manager)

Ceph Luminous added a new component, the Ceph Manager Daemon (ceph-mgr for short).

Its main job is to take over and extend part of the monitor's functionality, reducing the load on the monitors and making the Ceph storage system easier to manage.

```
ceph-deploy mgr create node1
```

Check:

```
[root@localhost ceph]# ceph -s
  cluster:
    id:     a9aa277d-7192-4687-b384-9787b73ece71
    health: HEALTH_WARN
            Module 'restful' has failed dependency: No module named 'pecan'
            Reduced data availability: 1 pg inactive
            OSD count 0 < osd_pool_default_size 3

  services:
    mon: 3 daemons, quorum node1,node2,node3 (age 10m)
    mgr: node1(active, since 2m)     # one mgr created, on node1
    osd: 0 osds: 0 up, 0 in

  data:
    pools:   1 pools, 1 pgs
    objects: 0 objects, 0 B
    usage:   0 B used, 0 B / 0 B avail
    pgs:     100.000% pgs unknown
             1 unknown
```

Adding more mgr daemons provides HA:

```
ceph-deploy mgr create node2
ceph-deploy mgr create node3
```

Check:

```
[root@localhost ceph]# ceph -s
  cluster:
    id:     a9aa277d-7192-4687-b384-9787b73ece71
    health: HEALTH_WARN
            Module 'restful' has failed dependency: No module named 'pecan'
            Reduced data availability: 1 pg inactive
            OSD count 0 < osd_pool_default_size 3

  services:
    mon: 3 daemons, quorum node1,node2,node3 (age 11m)
    mgr: node1(active, since 3m), standbys: node2, node3   # 3 mgrs: node1 active, node2 and node3 standby
    osd: 0 osds: 0 up, 0 in

  data:
    pools:   1 pools, 1 pgs
    objects: 0 objects, 0 B
    usage:   0 B used, 0 B / 0 B avail
    pgs:     100.000% pgs unknown
             1 unknown
```

创建OSD(存储盘)

查看帮助

1 2 3 | [root@node1 ceph]# ceph-deploy disk --help[root@node1 ceph]# ceph-deploy osd --help |

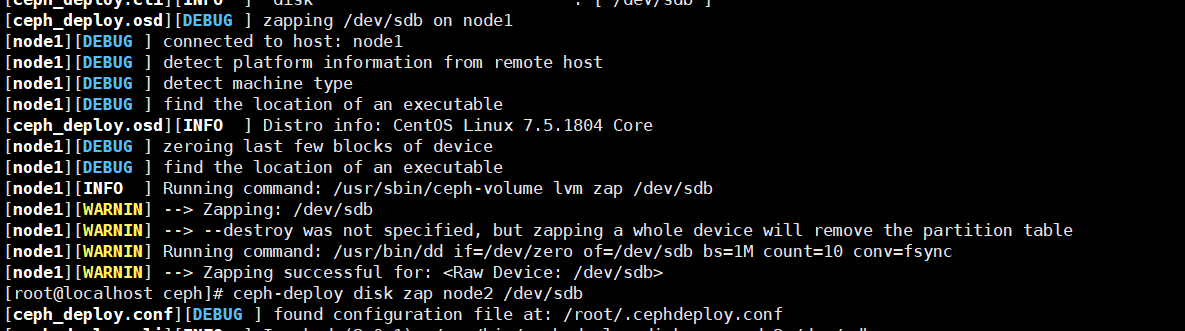

zap表示干掉磁盘上的数据,相当于格式化

1 2 3 | [root@node1 ceph]# ceph-deploy disk zap node1 /dev/sdb[root@node1 ceph]# ceph-deploy disk zap node2 /dev/sdb[root@node1 ceph]# ceph-deploy disk zap node3 /dev/sdb |

将磁盘创建为osd

1 2 3 | [root@node1 ceph]# ceph-deploy osd create --data /dev/sdb node1[root@node1 ceph]# ceph-deploy osd create --data /dev/sdb node2[root@node1 ceph]# ceph-deploy osd create --data /dev/sdb node3 |

```
[root@localhost ceph]# ceph-deploy osd create --data /dev/sdb node1
[ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf
[ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy osd create --data /dev/sdb node1
[ceph_deploy.cli][INFO ] ceph-deploy options:
[ceph_deploy.cli][INFO ] verbose : False
[ceph_deploy.cli][INFO ] bluestore : None
[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f718ae904d0>
[ceph_deploy.cli][INFO ] cluster : ceph
[ceph_deploy.cli][INFO ] fs_type : xfs
[ceph_deploy.cli][INFO ] block_wal : None
[ceph_deploy.cli][INFO ] default_release : False
[ceph_deploy.cli][INFO ] username : None
[ceph_deploy.cli][INFO ] journal : None
[ceph_deploy.cli][INFO ] subcommand : create
[ceph_deploy.cli][INFO ] host : node1
[ceph_deploy.cli][INFO ] filestore : None
[ceph_deploy.cli][INFO ] func : <function osd at 0x7f718b7115f0>
[ceph_deploy.cli][INFO ] ceph_conf : None
[ceph_deploy.cli][INFO ] zap_disk : False
[ceph_deploy.cli][INFO ] data : /dev/sdb
[ceph_deploy.cli][INFO ] block_db : None
[ceph_deploy.cli][INFO ] dmcrypt : False
[ceph_deploy.cli][INFO ] overwrite_conf : False
[ceph_deploy.cli][INFO ] dmcrypt_key_dir : /etc/ceph/dmcrypt-keys
[ceph_deploy.cli][INFO ] quiet : False
[ceph_deploy.cli][INFO ] debug : False
[ceph_deploy.osd][DEBUG ] Creating OSD on cluster ceph with data device /dev/sdb
[node1][DEBUG ] connected to host: node1
[node1][DEBUG ] detect platform information from remote host
[node1][DEBUG ] detect machine type
[node1][DEBUG ] find the location of an executable
[ceph_deploy.osd][INFO ] Distro info: CentOS Linux 7.5.1804 Core
[ceph_deploy.osd][DEBUG ] Deploying osd to node1
[node1][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf
[node1][WARNIN] osd keyring does not exist yet, creating one
[node1][DEBUG ] create a keyring file
[node1][DEBUG ] find the location of an executable
[node1][INFO ] Running command: /usr/sbin/ceph-volume --cluster ceph lvm create --bluestore --data /dev/sdb
[node1][WARNIN] Running command: /usr/bin/ceph-authtool --gen-print-key
[node1][WARNIN] Running command: /usr/bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring -i - osd new d39eddff-d29a-426a-ae2b-4c5a4026458d
[node1][WARNIN] Running command: /usr/sbin/vgcreate --force --yes ceph-a1598e52-3eb9-45c4-8416-9c5537241d70 /dev/sdb
[node1][WARNIN] stdout: Physical volume "/dev/sdb" successfully created.
[node1][WARNIN] stdout: Volume group "ceph-a1598e52-3eb9-45c4-8416-9c5537241d70" successfully created
[node1][WARNIN] Running command: /usr/sbin/lvcreate --yes -l 100%FREE -n osd-block-d39eddff-d29a-426a-ae2b-4c5a4026458d ceph-a1598e52-3eb9-45c4-8416-9c5537241d70
[node1][WARNIN] stdout: Logical volume "osd-block-d39eddff-d29a-426a-ae2b-4c5a4026458d" created.
[node1][WARNIN] Running command: /usr/bin/ceph-authtool --gen-print-key
[node1][WARNIN] Running command: /usr/bin/mount -t tmpfs tmpfs /var/lib/ceph/osd/ceph-0
[node1][WARNIN] Running command: /usr/bin/chown -h ceph:ceph /dev/ceph-a1598e52-3eb9-45c4-8416-9c5537241d70/osd-block-d39eddff-d29a-426a-ae2b-4c5a4026458d
[node1][WARNIN] Running command: /usr/bin/chown -R ceph:ceph /dev/dm-3
[node1][WARNIN] Running command: /usr/bin/ln -s /dev/ceph-a1598e52-3eb9-45c4-8416-9c5537241d70/osd-block-d39eddff-d29a-426a-ae2b-4c5a4026458d /var/lib/ceph/osd/ceph-0/block
[node1][WARNIN] Running command: /usr/bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring mon getmap -o /var/lib/ceph/osd/ceph-0/activate.monmap
[node1][WARNIN] stderr: 2020-08-04T17:39:52.619+0800 7fa772483700 -1 auth: unable to find a keyring on /etc/ceph/ceph.client.bootstrap-osd.keyring,/etc/ceph/ceph.keyring,/etc/ceph/keyring,/etc/ceph/keyring.bin,: (2) No such file or directory
[node1][WARNIN] 2020-08-04T17:39:52.619+0800 7fa772483700 -1 AuthRegistry(0x7fa76c058528) no keyring found at /etc/ceph/ceph.client.bootstrap-osd.keyring,/etc/ceph/ceph.keyring,/etc/ceph/keyring,/etc/ceph/keyring.bin,, disabling cephx
[node1][WARNIN] stderr: got monmap epoch 3
[node1][WARNIN] Running command: /usr/bin/ceph-authtool /var/lib/ceph/osd/ceph-0/keyring --create-keyring --name osd.0 --add-key AQDnLClfQ6UHKhAAkJ585N2DYxYt5negauu0Mg==
[node1][WARNIN] stdout: creating /var/lib/ceph/osd/ceph-0/keyring
[node1][WARNIN] added entity osd.0 auth(key=AQDnLClfQ6UHKhAAkJ585N2DYxYt5negauu0Mg==)
[node1][WARNIN] Running command: /usr/bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-0/keyring
[node1][WARNIN] Running command: /usr/bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-0/
[node1][WARNIN] Running command: /usr/bin/ceph-osd --cluster ceph --osd-objectstore bluestore --mkfs -i 0 --monmap /var/lib/ceph/osd/ceph-0/activate.monmap --keyfile - --osd-data /var/lib/ceph/osd/ceph-0/ --osd-uuid d39eddff-d29a-426a-ae2b-4c5a4026458d --setuser ceph --setgroup ceph
[node1][WARNIN] --> ceph-volume lvm prepare successful for: /dev/sdb
[node1][WARNIN] Running command: /usr/bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-0
[node1][WARNIN] Running command: /usr/bin/ceph-bluestore-tool --cluster=ceph prime-osd-dir --dev /dev/ceph-a1598e52-3eb9-45c4-8416-9c5537241d70/osd-block-d39eddff-d29a-426a-ae2b-4c5a4026458d --path /var/lib/ceph/osd/ceph-0 --no-mon-config
[node1][WARNIN] Running command: /usr/bin/ln -snf /dev/ceph-a1598e52-3eb9-45c4-8416-9c5537241d70/osd-block-d39eddff-d29a-426a-ae2b-4c5a4026458d /var/lib/ceph/osd/ceph-0/block
[node1][WARNIN] Running command: /usr/bin/chown -h ceph:ceph /var/lib/ceph/osd/ceph-0/block
[node1][WARNIN] Running command: /usr/bin/chown -R ceph:ceph /dev/dm-3
[node1][WARNIN] Running command: /usr/bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-0
[node1][WARNIN] Running command: /usr/bin/systemctl enable ceph-volume@lvm-0-d39eddff-d29a-426a-ae2b-4c5a4026458d
[node1][WARNIN] stderr: Created symlink from /etc/systemd/system/multi-user.target.wants/ceph-volume@lvm-0-d39eddff-d29a-426a-ae2b-4c5a4026458d.service to /usr/lib/systemd/system/ceph-volume@.service.
[node1][WARNIN] Running command: /usr/bin/systemctl enable --runtime ceph-osd@0
[node1][WARNIN] stderr: Created symlink from /run/systemd/system/ceph-osd.target.wants/ceph-osd@0.service to /usr/lib/systemd/system/ceph-osd@.service.
[node1][WARNIN] Running command: /usr/bin/systemctl start ceph-osd@0
[node1][WARNIN] --> ceph-volume lvm activate successful for osd ID: 0
[node1][WARNIN] --> ceph-volume lvm create successful for: /dev/sdb
[node1][INFO ] checking OSD status...
[node1][DEBUG ] find the location of an executable
[node1][INFO ] Running command: /bin/ceph --cluster=ceph osd stat --format=json
[ceph_deploy.osd][DEBUG ] Host node1 is now ready for osd use.
```

Check:

```
[root@localhost ceph]# ceph -s
  cluster:
    id:     a9aa277d-7192-4687-b384-9787b73ece71
    health: HEALTH_WARN
            Module 'restful' has failed dependency: No module named 'pecan'

  services:
    mon: 3 daemons, quorum node1,node2,node3 (age 22m)
    mgr: node1(active, since 14m), standbys: node2, node3
    osd: 3 osds: 3 up (since 4s), 3 in (since 4s)   # 3 OSDs, all up (running) and in (taking part in data placement)

  data:
    pools:   1 pools, 1 pgs
    objects: 0 objects, 0 B
    usage:   3.0 GiB used, 45 GiB / 48 GiB avail    # 3 GiB used (about 1 GiB per OSD), 45 GiB free; 48 GiB total = three 16 GiB disks
    pgs:     1 active+clean
```

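Expanding the cluster with a new node

Suppose a new cluster node, node4, is added:

1, Configure the hostname and the /etc/hosts entries

2, On node4, install the software with `yum install ceph ceph-radosgw -y`

3, On the deployment node node1, push the configuration files to node4: `ceph-deploy admin node4`

4, Add a mon, mgr, OSD, etc. on node4 as required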

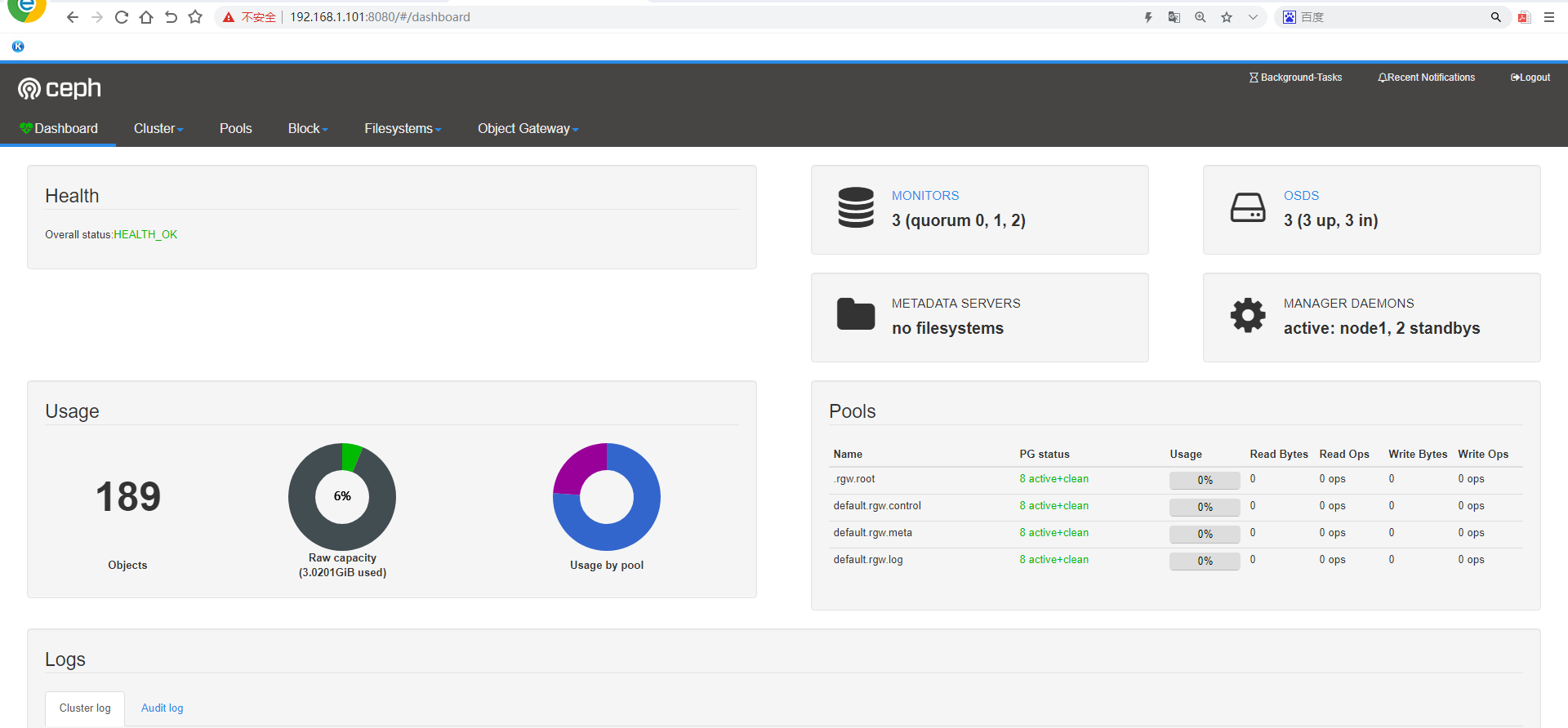

ceph dashboard

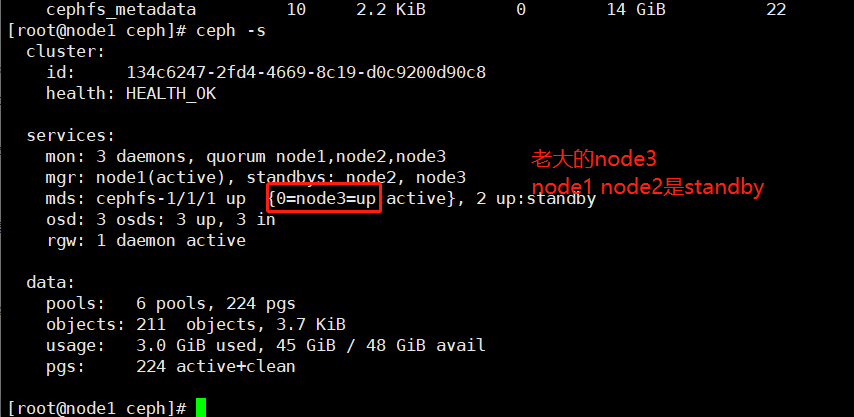

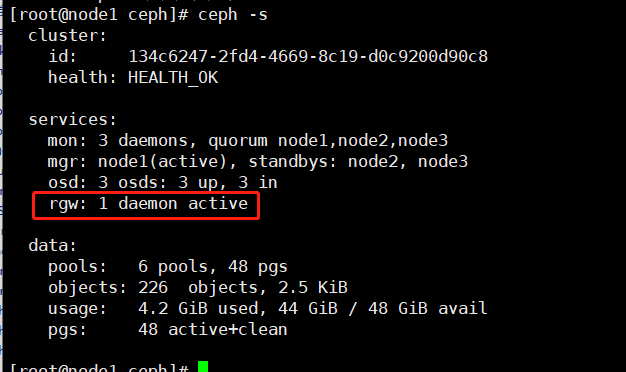

Check the cluster status to find the active mgr node:

```
[root@node1 ceph]# ceph -s
  cluster:
    id:     134c6247-2fd4-4669-8c19-d0c9200d90c8
    health: HEALTH_OK

  services:
    mon: 3 daemons, quorum node1,node2,node3
    mgr: node1(active), standbys: node2, node3   # the active mgr is node1
    osd: 3 osds: 3 up, 3 in
    rgw: 1 daemon active

  data:
    pools:   4 pools, 32 pgs
    objects: 189 objects, 1.5 KiB
    usage:   3.0 GiB used, 45 GiB / 48 GiB avail
    pgs:     32 active+clean
```

List the enabled and disabled modules:

```
[root@node1 mgr-dashboard]# ceph mgr module ls
{
    "enabled_modules": [
        "balancer",
        "crash",
        "dashboard",
        "iostat",
        "restful",
        "status"
    ],
    "disabled_modules": [
        {
            "name": "hello",
            "can_run": true,
            "error_string": ""
        },
        {
            "name": "influx",
            "can_run": false,
            "error_string": "influxdb python module not found"
        },
        {
            "name": "localpool",
            "can_run": true,
            "error_string": ""
        },
        {
            "name": "prometheus",
            "can_run": true,
            "error_string": ""
        },
        {
            "name": "selftest",
            "can_run": true,
            "error_string": ""
        },
        {
            "name": "smart",
            "can_run": true,
            "error_string": ""
        },
        {
            "name": "telegraf",
            "can_run": true,
            "error_string": ""
        },
        {
            "name": "telemetry",
            "can_run": true,
            "error_string": ""
        },
        {
            "name": "zabbix",
            "can_run": true,
            "error_string": ""
        }
    ]
}
```

Enable the dashboard module:

```
ceph mgr module enable dashboard
```

If enabling it fails with:

```
[root@localhost ceph]# ceph mgr module enable dashboard
Error ENOENT: all mgr daemons do not support module 'dashboard', pass --force to force enablement
```

install ceph-mgr-dashboard on every node that runs a mgr:

```
yum install ceph-mgr-dashboard -y
```

Note: installing it only on the active node is not enough; it must also be installed on every standby node.

Create a self-signed certificate:

```
[root@node1 ceph]# ceph dashboard create-self-signed-cert
Self-signed certificate created
```

Generate a key pair to be configured for the ceph mgr:

```
[root@node1 ceph]# mkdir /etc/mgr-dashboard
[root@node1 ceph]# cd /etc/mgr-dashboard/
```

```
openssl req -new -nodes -x509 -subj "/O=IT-ceph/CN=cn" -days 3650 -keyout dashboard.key -out dashboard.crt -extensions v3_ca
```

```
[root@node1 mgr-dashboard]# ls
dashboard.crt  dashboard.key
```

On the cluster's active mgr node (node1 here), configure the mgr service address and port:

```
[root@node1 mgr-dashboard]# ceph config set mgr mgr/dashboard/server_addr 192.168.1.101
[root@node1 mgr-dashboard]# ceph config set mgr mgr/dashboard/server_port 8080
```

Restart the dashboard module and check the access URL.

Note: the restart is required. Without it, the service still reports the default port 8443, and the dashboard cannot be reached.

Restarting means disabling the module and then enabling it again:

```
[root@node1 mgr-dashboard]# ceph mgr module disable dashboard
[root@node1 mgr-dashboard]# ceph mgr module enable dashboard
```

Check the mgr services:

```
# ceph mgr services
{
    "dashboard": "https://192.168.1.101:8080/"
}
```

Set the username and password for the web UI:

```
ceph dashboard set-login-credentials admin admin
```

Open https://ip:8080 (here https://192.168.1.101:8080) in a browser on this host or any other host.

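Ceph file storage

To run the Ceph file system, you first need a Ceph storage cluster with at least one MDS.

Ceph MDS: stores the metadata for Ceph file storage (Ceph block storage and Ceph object storage do not use the MDS).

Creating and using file storage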

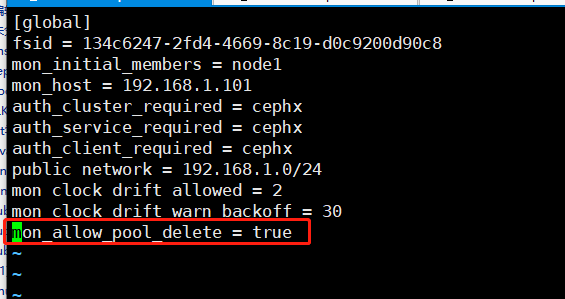

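Step 1: on the deployment node node1, edit /etc/ceph/ceph.conf and add the following setting to the [global] section:

```
mon_allow_pool_delete = true
```

Push the configuration file.

Note: the configuration only needs to be pushed when it has changed.

```
ceph-deploy --overwrite-conf admin node1 node2 node3
```

Create three MDS daemons:

```
[root@node1 ceph]# ceph-deploy mds create node1 node2 node3
```

Step 2: a Ceph file system needs at least two RADOS pools, one for data and one for metadata, so create them:

```
[root@node1 ceph]# ceph osd pool create cephfs_pool 128
pool 'cephfs_pool' created
[root@node1 ceph]# ceph osd pool create cephfs_metadata 64
pool 'cephfs_metadata' created
```

Parameters:

`cephfs_pool` is a custom pool name; this pool stores the data and has 128 PGs. It gets the larger PG count because it holds the data.

`cephfs_metadata` is a custom pool name; this pool stores the metadata and has 64 PGs.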

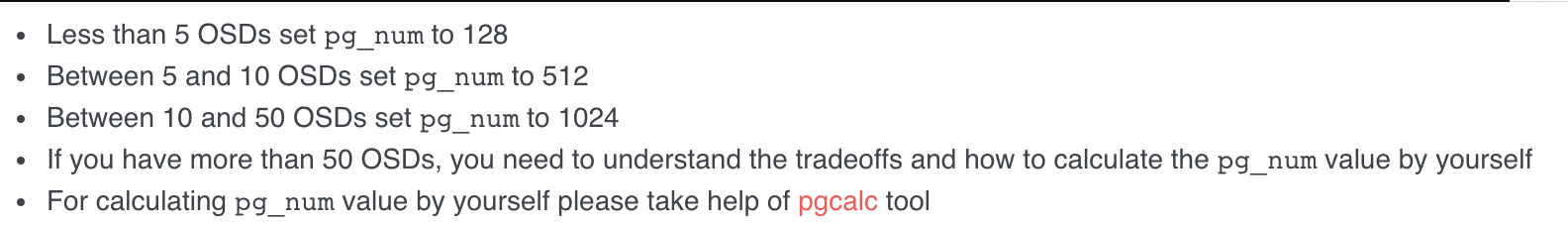

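About PGs

PG stands for Placement Group. Data is first split into a set of objects; hashing an object's name together with the number of PGs in the pool yields a PG ID, and CRUSH then maps each PG onto as many OSDs as the replication level requires. A PG can be thought of as a logical container that holds many objects and is mapped onto multiple OSDs.

Without PGs, managing and tracking the replication and distribution of millions of objects across thousands of OSDs would be very difficult, and managing that many objects individually would cost an enormous amount of computing resources. A common recommendation is 50 to 100 PGs per OSD.

How to choose the number of PGs

As a rule of thumb:

Fewer than 5 OSDs: 128 PGs

5 to 10 OSDs: 512 PGs

10 to 50 OSDs: 1024 PGs

With more OSDs, you need to understand the trade-offs and calculate the number yourself.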
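The rule of thumb above can be written down directly, together with the per-OSD load it implies: each PG is stored on `size` OSDs, so the number of PG replicas per OSD is roughly total pg_num × size ÷ number of OSDs. Both function names are hypothetical, and counting exactly 10 OSDs in the 512 bracket is an assumption, since the ranges above overlap at 10.

```shell
#!/bin/sh
# Hypothetical helper encoding the rule of thumb above.
# Exactly 10 OSDs is treated as the 512 bracket (the ranges overlap at 10).
pg_num_for_osds() {
    osds=$1
    if [ "$osds" -lt 5 ]; then
        echo 128
    elif [ "$osds" -le 10 ]; then
        echo 512
    elif [ "$osds" -le 50 ]; then
        echo 1024
    else
        # The rule of thumb gives no value beyond 50 OSDs
        echo "more than 50 OSDs: calculate pg_num yourself" >&2
        return 1
    fi
}

# Approximate PG replicas per OSD: every PG is stored on "size" OSDs.
pgs_per_osd() {
    total_pgs=$1
    size=$2
    osds=$3
    echo $(( total_pgs * size / osds ))
}
```

For the two CephFS pools created above (128 + 64 PGs, size 3) on 3 OSDs, `pgs_per_osd 192 3 3` prints 192.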

Check:

```
[root@node1 ceph]# ceph osd pool ls |grep cephfs
cephfs_pool
cephfs_metadata
```

Show the details of the created pool:

```
[root@localhost ceph]# ceph osd pool get cephfs_pool all
size: 3
min_size: 2
pg_num: 128
pgp_num: 128
crush_rule: replicated_rule
hashpspool: true
nodelete: false
nopgchange: false
nosizechange: false
write_fadvise_dontneed: false
noscrub: false
nodeep-scrub: false
use_gmt_hitset: 1
fast_read: 0
pg_autoscale_mode: warn
```

Step 3: create the Ceph file system and confirm which node clients access:

```
[root@node1 ceph]# ceph fs new cephfs cephfs_metadata cephfs_pool
new fs with metadata pool 10 and data pool 9
```

Check:

```
[root@node1 ceph]# ceph osd pool ls
.rgw.root
default.rgw.control
default.rgw.meta
default.rgw.log
cephfs_pool
cephfs_metadata
```

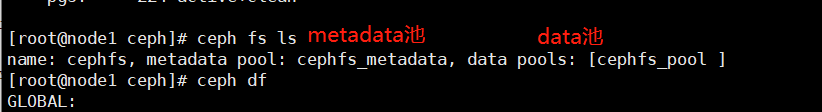

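```
[root@node1 ceph]# ceph fs ls
name: cephfs, metadata pool: cephfs_metadata, data pools: [cephfs_pool ]
```

```
[root@node1 ceph]# ceph mds stat
cephfs-1/1/1 up  {0=node3=up:active}, 2 up:standby   # node3 is the active MDS
```

The active MDS, which serves the file system metadata, is on node3 (the metadata itself is stored in the cephfs_metadata pool).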

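Preparing the key file for the client

Note: Ceph enables cephx authentication by default (it is turned on in the default ceph.conf), so client mounts must authenticate.

On any one of the cluster nodes (node1, node2, node3), view the key string:

```
cat /etc/ceph/ceph.client.admin.keyring
```

Export just the key to a file:

```
ceph-authtool -p /etc/ceph/ceph.client.admin.keyring >admin.key
```

Put this file on the client as /root/admin.key.

Note: copying the key into admin.key by hand may cause errors when mounting.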
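If you prefer not to rely on `ceph-authtool -p`, the key can also be pulled out of the keyring with awk, which avoids the stray text or whitespace that hand-copying can introduce. A sketch with a hypothetical `extract_keyring_key` helper, assuming the standard keyring layout (a `[client.admin]` header followed by a `key = ...` line); it writes only the key, and a newly created file is readable by its owner only.

```shell
#!/bin/sh
# Hypothetical alternative to "ceph-authtool -p": write only the base64 key
# from a keyring file to OUT_PATH.
# Assumes the usual "key = <base64>" line inside the keyring.
extract_keyring_key() {
    keyring=$1
    out_path=$2
    # First "key = ..." line: field 3 is the key itself
    key=$(awk '$1 == "key" && $2 == "=" { print $3; exit }' "$keyring")
    if [ -z "$key" ]; then
        echo "no key found in $keyring" >&2
        return 1
    fi
    # umask 077 in a subshell: a newly created file is owner-readable only
    (umask 077 && printf '%s\n' "$key" > "$out_path")
}
```

Usage: `extract_keyring_key /etc/ceph/ceph.client.admin.keyring admin.key`, then copy admin.key to the client as /root/admin.key.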

Install on client:

```
yum -y install ceph-fuse
```

It must be installed; otherwise the client does not support the mount.

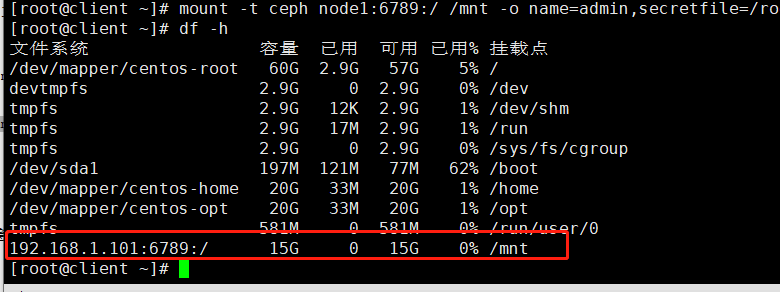

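Mounting on the client

You can also mount through another node's hostname, for example node3.

```
mount -t ceph node1:6789:/ /mnt -o name=admin,secretfile=/root/admin.key
```

Check the mount.

Note: if mounting with the secret file fails, you can pass the key directly with `secret=<key>` instead.

Two clients can mount this file storage at the same time and read and write it concurrently.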

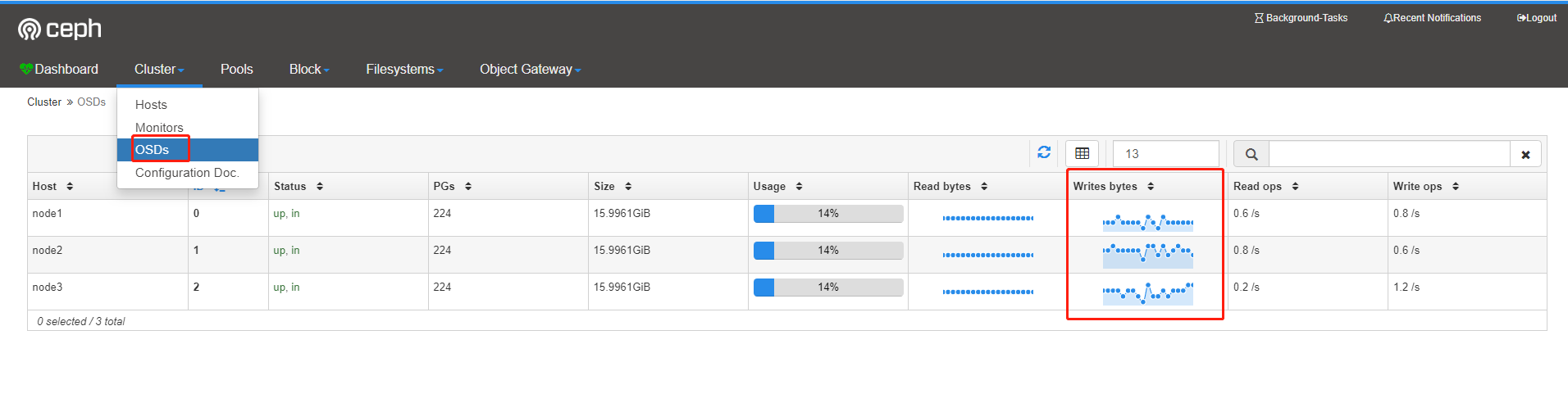
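The mount above does not survive a reboot. A common approach is an /etc/fstab entry with the same options; this sketch only prints such a line. `cephfs_fstab_line` is hypothetical; `_netdev` (wait for the network before mounting) and `noatime` follow the usual CephFS fstab pattern, and the final `0 0` (no dump, no fsck) is a choice that suits a network file system.

```shell
#!/bin/sh
# Hypothetical helper: print an /etc/fstab line for the kernel CephFS mount above.
# Arguments: monitor host, mount point, cephx user name, secret file path.
cephfs_fstab_line() {
    mon=$1
    mnt=$2
    user=$3
    secretfile=$4
    # _netdev delays the mount until networking is up; 0 0 = no dump, no fsck
    printf '%s:6789:/ %s ceph name=%s,secretfile=%s,noatime,_netdev 0 0\n' \
        "$mon" "$mnt" "$user" "$secretfile"
}
```

Usage: `cephfs_fstab_line node1 /mnt admin /root/admin.key >> /etc/fstab`, then check the entry with `umount /mnt && mount -a`.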

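Write some data to the mounted file system (10M × 1000, roughly 10 GB); the read/write activity can then be watched in the dashboard:

```
dd if=/dev/zero of=/mnt/file1 bs=10M count=1000
```

Deleting the file storage

Unmount the file system on every client that has mounted it:

```
umount /mnt
```

Stop the MDS on all nodes (node1, node2, node3):

```
systemctl stop ceph-mds.target
```

Then go back to any one cluster node (node1, node2 or node3) and delete it.

To delete it from the client instead, first push the configuration to the client with `ceph-deploy admin client` on node1.

```
[root@node3 ~]# ceph fs rm cephfs --yes-i-really-mean-it
[root@node3 ~]# ceph osd pool delete cephfs_metadata cephfs_metadata --yes-i-really-really-mean-it
pool 'cephfs_metadata' removed
[root@node3 ~]# ceph osd pool delete cephfs_pool cephfs_pool --yes-i-really-really-mean-it
pool 'cephfs_pool' removed
```

Note: as a safety measure, you must type the pool name twice and add `--yes-i-really-really-mean-it` before a pool can be deleted.

Note: deletion also requires the following setting in the configuration file:

```
mon_allow_pool_delete = true
```

If the setting is already there but you still get:

```
Error EPERM: pool deletion is disabled; you must first set the mon_allow_pool_delete config option to true before you can destroy a pool
```

restart the ceph-mon.target service.

Start the MDS service again on node1, node2 and node3:

```
systemctl start ceph-mds.target
```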

Ceph block storage

Step 1: on node1, push the configuration file to client:

```
[root@node1 ceph]# cd /etc/ceph
[root@node1 ceph]# ceph-deploy admin client
```

Step 2: create a storage pool and initialize it

Run the following on client:

```
[root@client ~]# ceph osd pool create rbd_pool 128
pool 'rbd_pool' created
```

Initialize it:

```
[root@client ~]# rbd pool init rbd_pool
```

Step 3: create a volume (here named volume1, 5000 MB in size; `--size` is given in MB)

```
rbd create volume1 --pool rbd_pool --size 5000
```

Check:

```
[root@client ~]# rbd ls rbd_pool
volume1
```

```
[root@client ~]# rbd info volume1 -p rbd_pool
rbd image 'volume1':                # volume1 is the RBD image
        size 4.9 GiB in 1250 objects
        order 22 (4 MiB objects)
        id: 60fc6b8b4567
        block_name_prefix: rbd_data.60fc6b8b4567
        format: 2                   # two formats exist, 1 and 2; 2 is the default
        features: layering, exclusive-lock, object-map, fast-diff, deep-flatten
        op_features:
        flags:
        create_timestamp: Tue Aug  4 09:20:05 2020
```
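The size line follows from the parameters: `--size` is in MiB, and `order 22` means each object is 2^22 bytes = 4 MiB, so 5000 MiB is 1250 objects and 5000 ÷ 1024 ≈ 4.9 GiB. A small sketch that reproduces this arithmetic; `rbd_size_summary` is a hypothetical name, and it assumes order ≥ 20 so that the object size is a whole number of MiB.

```shell
#!/bin/sh
# Reproduce the "size ... in ... objects" line of rbd info from the --size
# value (MiB) and the object order (object size = 2^order bytes; 22 = 4 MiB).
# Assumes order >= 20, so the object size is a whole number of MiB.
rbd_size_summary() {
    size_mib=$1
    order=${2:-22}
    obj_mib=$(( (1 << order) / 1048576 ))
    # Round up: a partial last object still counts as an object
    objects=$(( (size_mib + obj_mib - 1) / obj_mib ))
    gib=$(awk -v m="$size_mib" 'BEGIN { printf "%.1f", m / 1024 }')
    echo "size $gib GiB in $objects objects"
}
```

`rbd_size_summary 5000` prints `size 4.9 GiB in 1250 objects`, matching the output above; after growing the image to 8000 MB, the same arithmetic gives 2000 objects and about 7.8 GiB.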

Step 4: map the volume as a block device

The OS kernel does not support some of the RBD image's features, so mapping fails:

```
[root@client ~]# rbd map rbd_pool/volume1
rbd: sysfs write failed
RBD image feature set mismatch. You can disable features unsupported by the kernel with "rbd feature disable rbd_pool/volume1 object-map fast-diff deep-flatten".
In some cases useful info is found in syslog - try "dmesg | tail".
rbd: map failed: (6) No such device or address
```

Fix: disable those features:

```
rbd feature disable rbd_pool/volume1 exclusive-lock object-map fast-diff deep-flatten
```
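Which features must go depends on the client kernel; the fix above keeps only `layering`. The list can be derived from the `features:` line of `rbd info`. A sketch with a hypothetical `features_to_disable` helper, assuming the kernel supports only the features passed as the second argument (default: `layering`). Alternatively, `rbd create` accepts `--image-feature layering`, so an image can be created with only that feature in the first place.

```shell
#!/bin/sh
# Hypothetical helper: given the "features:" value from rbd info, print every
# feature not in the supported list (default "layering"), space-separated,
# ready to pass to "rbd feature disable".
features_to_disable() {
    printf '%s\n' "$1" | awk -v keep="${2:-layering}" '
        BEGIN {
            # Features the client kernel is assumed to support
            n = split(keep, k, " ")
            for (i = 1; i <= n; i++) supported[k[i]] = 1
        }
        {
            m = split($0, f, ",")
            for (i = 1; i <= m; i++) {
                gsub(/^[ \t]+|[ \t]+$/, "", f[i])   # trim spaces around each name
                if (f[i] != "" && !(f[i] in supported))
                    out = out (out == "" ? "" : " ") f[i]
            }
        }
        END { print out }'
}
```

Usage: `rbd feature disable rbd_pool/volume1 $(features_to_disable "layering, exclusive-lock, object-map, fast-diff, deep-flatten")` expands to the same command as above.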

Map it again:

```
[root@client ~]# rbd map rbd_pool/volume1
/dev/rbd0
```

This creates the disk /dev/rbd0, which behaves somewhat like a link to the RBD image.

Show the mappings:

```
[root@client ~]# rbd showmapped
id pool     image   snap device
0  rbd_pool volume1 -    /dev/rbd0
```

To remove the mapping, use:

```
rbd unmap /dev/rbd0
```

Step 5: format and mount

```
mkfs.xfs /dev/rbd0
mount /dev/rbd0 /mnt
```

Check:

```
[root@client ~]# df -h|tail -1
/dev/rbd0       4.9G   33M  4.9G   1% /mnt
```

Growing and shrinking a block device

Grow it to 8000 MB:

```
[root@client ~]# rbd resize --size 8000 rbd_pool/volume1
Resizing image: 100% complete...done.
```

The file system size has not changed yet:

```
[root@client ~]# df -h|tail -1
/dev/rbd0       4.9G   33M  4.9G   1% /mnt
```

Grow the file system online:

```
[root@client ~]# xfs_growfs -d /mnt/
meta-data=/dev/rbd0              isize=512    agcount=8, agsize=160768 blks
         =                       sectsz=512   attr=2, projid32bit=1
         =                       crc=1        finobt=0 spinodes=0
data     =                       bsize=4096   blocks=1280000, imaxpct=25
         =                       sunit=1024   swidth=1024 blks
naming   =version 2              bsize=4096   ascii-ci=0 ftype=1
log      =internal               bsize=4096   blocks=2560, version=2
         =                       sectsz=512   sunit=8 blks, lazy-count=1
realtime =none                   extsz=4096   blocks=0, rtextents=0
data blocks changed from 1280000 to 2048000
```

Note: this is the same command used to grow an XFS file system after extending an LVM logical volume.

Check again; the grow succeeded:

```
[root@client ~]# df -h|tail -1
/dev/rbd0       7.9G   33M  7.8G   1% /mnt
```
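The xfs_growfs output is consistent with the resize: with a 4096-byte block size, 5000 MiB is 5000 × 1048576 ÷ 4096 = 1280000 blocks and 8000 MiB is 2048000 blocks, exactly the "data blocks changed from 1280000 to 2048000" line. A one-function sketch of that arithmetic (`xfs_data_blocks` is a hypothetical name; it ignores any space XFS reserves outside the data section):

```shell
#!/bin/sh
# Number of XFS data blocks for a device of SIZE_MIB MiB with block size BSIZE bytes.
xfs_data_blocks() {
    size_mib=$1
    bsize=${2:-4096}
    echo $(( size_mib * 1048576 / bsize ))
}
```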

Shrinking block storage

Shrinking cannot be done online: after shrinking, the device must be reformatted and remounted, so back up any data first.

Shrink it to 5000 MB:

```
[root@client ~]# rbd resize --size 5000 rbd_pool/volume1 --allow-shrink
Resizing image: 100% complete...done.
```

Unmount and reformat:

```
umount /mnt
fdisk -l
mkfs.xfs -f /dev/rbd0
mount /dev/rbd0 /mnt/
```

Check:

```
[root@client ~]# df -h|tail -1
/dev/rbd0       4.9G   33M  4.9G   1% /mnt
```

Deleting block storage

```
# unmount
[root@client ~]# umount /mnt
# remove the mapping
[root@client ~]# rbd unmap /dev/rbd0
# delete the pool
[root@client ~]# ceph osd pool delete rbd_pool rbd_pool --yes-i-really-really-mean-it
pool 'rbd_pool' removed
```

Ceph object storage

Step 1: create an RGW (RADOS Gateway) on node1:

```
[root@node1 ceph]# ceph-deploy rgw create node1
```

Check; it listens on port 7480:

```
lsof -i:7480
```

Step 2: test the connection to the object gateway from the client

Run the following on client.

Install the test tool.

Create a test user. This requires pushing the configuration to client first, by running `ceph-deploy admin client` on the deployment node:

```
[root@client ~]# radosgw-admin user create --uid="testuser" --display-name="First User"|grep -E 'access_key|secret_key'
            "access_key": "S859J38AS6WW1CZSB90M",
            "secret_key": "PCmfHoAsHw2GIEioSWvN887o02VXesOkX2gJ20fG"
[root@client ~]# radosgw-admin user create --uid="testuser" --display-name="First User"
{
    "user_id": "testuser",
    "display_name": "First User",
    "email": "",
    "suspended": 0,
    "max_buckets": 1000,
    "auid": 0,
    "subusers": [],
    "keys": [
        {
            "user": "testuser",
            "access_key": "S859J38AS6WW1CZSB90M",
            "secret_key": "PCmfHoAsHw2GIEioSWvN887o02VXesOkX2gJ20fG"
        }
    ],
    "swift_keys": [],
    "caps": [],
    "op_mask": "read, write, delete",
    "default_placement": "",
    "placement_tags": [],
    "bucket_quota": {
        "enabled": false,
        "check_on_raw": false,
        "max_size": -1,
        "max_size_kb": 0,
        "max_objects": -1
    },
    "user_quota": {
        "enabled": false,
        "check_on_raw": false,
        "max_size": -1,
        "max_size_kb": 0,
        "max_objects": -1
    },
    "temp_url_keys": [],
    "type": "rgw",
    "mfa_ids": []
}
```

Of the long output above, the useful parts are access_key and secret_key, which are used to connect to the object storage gateway.


Connecting to the object gateway with S3

Step 1: install the s3cmd tool on the client and write its configuration file:

```
yum install s3cmd
```

Create the configuration file with the following content:

```
[root@client ~]# cat /root/.s3cfg
[default]
access_key = S859J38AS6WW1CZSB90M
secret_key = PCmfHoAsHw2GIEioSWvN887o02VXesOkX2gJ20fG
host_base = 192.168.1.101:7480
host_bucket = 192.168.1.101:7480/%(bucket)
cloudfront_host = 192.168.1.101:7480
use_https = False
```
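The same file can be generated from the keys printed by `radosgw-admin user create`. A sketch with a hypothetical `write_s3cfg` helper that mirrors the settings above and creates the file readable by its owner only, since it contains the secret key. s3cmd can also be pointed at a non-default config path with `s3cmd -c FILE`.

```shell
#!/bin/sh
# Hypothetical helper: write an s3cmd config for the RGW endpoint with the
# same settings as the file above. Arguments: config path, access key,
# secret key, endpoint (host:port).
write_s3cfg() {
    cfg=$1
    access_key=$2
    secret_key=$3
    endpoint=$4
    (
        # A newly created file is owner-readable only; it holds the secret key
        umask 077
        cat > "$cfg" <<EOF
[default]
access_key = $access_key
secret_key = $secret_key
host_base = $endpoint
host_bucket = $endpoint/%(bucket)
cloudfront_host = $endpoint
use_https = False
EOF
    )
}
```

Usage: `write_s3cfg /root/.s3cfg S859J38AS6WW1CZSB90M PCmfHoAsHw2GIEioSWvN887o02VXesOkX2gJ20fG 192.168.1.101:7480`.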

List buckets:

```
s3cmd ls
```

Create a bucket:

```
s3cmd mb s3://test_bucket
```

Upload a file to the bucket:

```
[root@client ~]# s3cmd put /etc/fstab s3://test_bucket
```

Download the file to the current directory:

```
[root@client ~]# s3cmd get s3://test_bucket/fstab
```
