Building a FastDFS Distributed File System with Docker and K8s

For setting up FastDFS directly on CentOS hosts, see: https://www.cnblogs.com/minseo/p/10210428.html

IP plan

| IP | Role |
| --- | --- |
| 192.168.1.227 | fdfs tracker |
| 192.168.1.228 | fdfs storage |

Checking the environment

Install Docker

```bash
# Step 1: install the required system tools
sudo yum install -y yum-utils device-mapper-persistent-data lvm2
# Step 2: add the Docker CE repository
sudo yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
# Step 3: refresh the cache and install Docker CE
sudo yum makecache fast
sudo yum -y install docker-ce
# Step 4: start the Docker service
sudo service docker start
```

After installation, verify it:

```bash
docker info
```

```
# docker info
Client:
 Debug Mode: false

Server:
 Containers: 1
  Running: 1
  Paused: 0
  Stopped: 0
 Images: 1
 Server Version: 19.03.8
 Storage Driver: overlay2
  Backing Filesystem: <unknown>
  Supports d_type: true
  Native Overlay Diff: true
 Logging Driver: json-file
 Cgroup Driver: cgroupfs
 Plugins:
  Volume: local
  Network: bridge host ipvlan macvlan null overlay
  Log: awslogs fluentd gcplogs gelf journald json-file local logentries splunk syslog
 Swarm: inactive
 Runtimes: runc
 Default Runtime: runc
 Init Binary: docker-init
 containerd version: 7ad184331fa3e55e52b890ea95e65ba581ae3429
 runc version: dc9208a3303feef5b3839f4323d9beb36df0a9dd
 init version: fec3683
 Security Options:
  seccomp
   Profile: default
 Kernel Version: 3.10.0-862.el7.x86_64
 Operating System: CentOS Linux 7 (Core)
 OSType: linux
 Architecture: x86_64
 CPUs: 1
 Total Memory: 5.67GiB
 Name: localhost.localdomain
 ID: PGGH:4IF4:TXUV:3CSM:LZZY:KVTA:FONM:WJIO:KVME:YYJJ:55IZ:WR7Q
 Docker Root Dir: /var/lib/docker
 Debug Mode: false
 Registry: https://index.docker.io/v1/
 Labels:
 Experimental: false
 Insecure Registries:
  127.0.0.0/8
 Live Restore Enabled: false
```

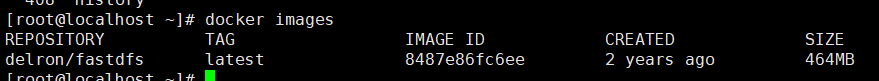

Pull the FastDFS image

```bash
docker pull delron/fastdfs
```

Check that the image is present, for example with `docker images`.

The following steps are performed on the tracker node, 192.168.1.227.

Start the tracker in a container

```bash
docker run -d --network host --name tracker -v /opt/fdfsdata/tracker/:/var/fdfs delron/fastdfs tracker
```

Command breakdown:

```
--network host                         # use the host network
--name tracker                         # container name
-v /opt/fdfsdata/tracker/:/var/fdfs    # mount a host directory as the tracker's base data directory
tracker                                # the final "tracker" argument makes the image start the tracker service
```

Check that it started:

```bash
ps -ef | grep tracker
lsof -i:22122
docker ps
```

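The tracker listens on port 22122 by default. Because the container uses the host network, the port is opened directly on the host as well.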
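`lsof` only works on the tracker host itself. To check the port from another machine (for example from the storage node, which must be able to reach the tracker), here is a minimal Python sketch using only the standard library. `port_open` is a hypothetical helper: it just attempts a TCP handshake, so it shows that something is listening, not that it is FastDFS.

```python
import socket


def port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection resolves the host and performs the TCP handshake
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # connection refused, unreachable, timed out, or name resolution failed
        return False
```

With the IP plan above, `port_open("192.168.1.227", 22122)` should return `True` once the tracker is up; the same call against 192.168.1.228 with 23000 or 8888 checks the storage node later on.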

Log in to the container and look at the tracker configuration file:

```bash
docker exec -it tracker /bin/bash
```

```ini
# is this config file disabled
# false for enabled
# true for disabled
disabled=false

# bind an address of this host
# empty for bind all addresses of this host
bind_addr=

# the tracker server port
port=22122

# connect timeout in seconds
# default value is 30s
connect_timeout=30

# network timeout in seconds
# default value is 30s
network_timeout=60

# the base path to store data and log files
base_path=/var/fdfs

# max concurrent connections this server supported
max_connections=256

# accept thread count
# default value is 1
# since V4.07
accept_threads=1

# work thread count, should <= max_connections
# default value is 4
# since V2.00
work_threads=4

# min buff size
# default value 8KB
min_buff_size = 8KB

# max buff size
# default value 128KB
max_buff_size = 128KB

# the method of selecting group to upload files
# 0: round robin
# 1: specify group
# 2: load balance, select the max free space group to upload file
store_lookup=2

# which group to upload file
# when store_lookup set to 1, must set store_group to the group name
store_group=group2

# which storage server to upload file
# 0: round robin (default)
# 1: the first server order by ip address
# 2: the first server order by priority (the minimal)
store_server=0

# which path(means disk or mount point) of the storage server to upload file
# 0: round robin
# 2: load balance, select the max free space path to upload file
store_path=0

# which storage server to download file
# 0: round robin (default)
# 1: the source storage server which the current file uploaded to
download_server=0

# reserved storage space for system or other applications.
# if the free(available) space of any stoarge server in
# a group <= reserved_storage_space,
# no file can be uploaded to this group.
# bytes unit can be one of follows:
### G or g for gigabyte(GB)
### M or m for megabyte(MB)
### K or k for kilobyte(KB)
### no unit for byte(B)
### XX.XX% as ratio such as reserved_storage_space = 10%
reserved_storage_space = 10%

#standard log level as syslog, case insensitive, value list:
### emerg for emergency
### alert
### crit for critical
### error
### warn for warning
### notice
### info
### debug
log_level=info

#unix group name to run this program,
#not set (empty) means run by the group of current user
run_by_group=

#unix username to run this program,
#not set (empty) means run by current user
run_by_user=

# allow_hosts can ocur more than once, host can be hostname or ip address,
# "*" (only one asterisk) means match all ip addresses
# we can use CIDR ips like 192.168.5.64/26
# and also use range like these: 10.0.1.[0-254] and host[01-08,20-25].domain.com
# for example:
# allow_hosts=10.0.1.[1-15,20]
# allow_hosts=host[01-08,20-25].domain.com
# allow_hosts=192.168.5.64/26
allow_hosts=*

# sync log buff to disk every interval seconds
# default value is 10 seconds
sync_log_buff_interval = 10

# check storage server alive interval seconds
check_active_interval = 120

# thread stack size, should >= 64KB
# default value is 64KB
thread_stack_size = 64KB

# auto adjust when the ip address of the storage server changed
# default value is true
storage_ip_changed_auto_adjust = true

# storage sync file max delay seconds
# default value is 86400 seconds (one day)
# since V2.00
storage_sync_file_max_delay = 86400

# the max time of storage sync a file
# default value is 300 seconds
# since V2.00
storage_sync_file_max_time = 300

# if use a trunk file to store several small files
# default value is false
# since V3.00
use_trunk_file = false

# the min slot size, should <= 4KB
# default value is 256 bytes
# since V3.00
slot_min_size = 256

# the max slot size, should > slot_min_size
# store the upload file to trunk file when it's size <= this value
# default value is 16MB
# since V3.00
slot_max_size = 16MB

# the trunk file size, should >= 4MB
# default value is 64MB
# since V3.00
trunk_file_size = 64MB

# if create trunk file advancely
# default value is false
# since V3.06
trunk_create_file_advance = false

# the time base to create trunk file
# the time format: HH:MM
# default value is 02:00
# since V3.06
trunk_create_file_time_base = 02:00

# the interval of create trunk file, unit: second
# default value is 38400 (one day)
# since V3.06
trunk_create_file_interval = 86400

# the threshold to create trunk file
# when the free trunk file size less than the threshold, will create
# the trunk files
# default value is 0
# since V3.06
trunk_create_file_space_threshold = 20G

# if check trunk space occupying when loading trunk free spaces
# the occupied spaces will be ignored
# default value is false
# since V3.09
# NOTICE: set this parameter to true will slow the loading of trunk spaces
# when startup. you should set this parameter to true when neccessary.
trunk_init_check_occupying = false

# if ignore storage_trunk.dat, reload from trunk binlog
# default value is false
# since V3.10
# set to true once for version upgrade when your version less than V3.10
trunk_init_reload_from_binlog = false

# the min interval for compressing the trunk binlog file
# unit: second
# default value is 0, 0 means never compress
# FastDFS compress the trunk binlog when trunk init and trunk destroy
# recommand to set this parameter to 86400 (one day)
# since V5.01
trunk_compress_binlog_min_interval = 0

# if use storage ID instead of IP address
# default value is false
# since V4.00
use_storage_id = false

# specify storage ids filename, can use relative or absolute path
# since V4.00
storage_ids_filename = storage_ids.conf

# id type of the storage server in the filename, values are:
## ip: the ip address of the storage server
## id: the server id of the storage server
# this paramter is valid only when use_storage_id set to true
# default value is ip
# since V4.03
id_type_in_filename = ip

# if store slave file use symbol link
# default value is false
# since V4.01
store_slave_file_use_link = false

# if rotate the error log every day
# default value is false
# since V4.02
rotate_error_log = false

# rotate error log time base, time format: Hour:Minute
# Hour from 0 to 23, Minute from 0 to 59
# default value is 00:00
# since V4.02
error_log_rotate_time=00:00

# rotate error log when the log file exceeds this size
# 0 means never rotates log file by log file size
# default value is 0
# since V4.02
rotate_error_log_size = 0

# keep days of the log files
# 0 means do not delete old log files
# default value is 0
log_file_keep_days = 0

# if use connection pool
# default value is false
# since V4.05
use_connection_pool = false

# connections whose the idle time exceeds this time will be closed
# unit: second
# default value is 3600
# since V4.05
connection_pool_max_idle_time = 3600

# HTTP port on this tracker server
http.server_port=8080

# check storage HTTP server alive interval seconds
# <= 0 for never check
# default value is 30
http.check_alive_interval=30

# check storage HTTP server alive type, values are:
#   tcp : connect to the storge server with HTTP port only,
#        do not request and get response
#   http: storage check alive url must return http status 200
# default value is tcp
http.check_alive_type=tcp

# check storage HTTP server alive uri/url
# NOTE: storage embed HTTP server support uri: /status.html
http.check_alive_uri=/status.html
```

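On its first successful start, the tracker creates two directories, `data` and `logs`, under the configured `base_path=/var/fdfs`. Because that path is mounted from the host directory `/opt/fdfsdata/tracker/`, you can inspect them directly on the host: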

```
# tree /opt/fdfsdata/tracker/
/opt/fdfsdata/tracker/
├── data
│   ├── fdfs_trackerd.pid
│   ├── storage_changelog.dat
│   ├── storage_groups_new.dat       # group information
│   ├── storage_servers_new.dat      # storage server list
│   └── storage_sync_timestamp.dat
└── logs
    └── trackerd.log                 # tracker server log
```

The tracker is now set up.

The following steps are performed on the storage node, 192.168.1.228.

Start the storage service

Start it with Docker:

```bash
docker run -d --network=host --name storage \
  -e TRACKER_SERVER=192.168.1.227:22122 \
  -v /opt/fdfsdata/storage/:/var/fdfs \
  -e GROUP_NAME=group1 \
  delron/fastdfs storage
```

Breakdown:

```
--network=host                           # use the host network
--name storage                           # container name
-e TRACKER_SERVER=192.168.1.227:22122    # tracker address and port
-v /opt/fdfsdata/storage/:/var/fdfs      # mount the storage data at /opt/fdfsdata/storage on the host
-e GROUP_NAME=group1                     # group name
delron/fastdfs storage                   # image, then "storage": start the storage service (which also starts nginx)
```

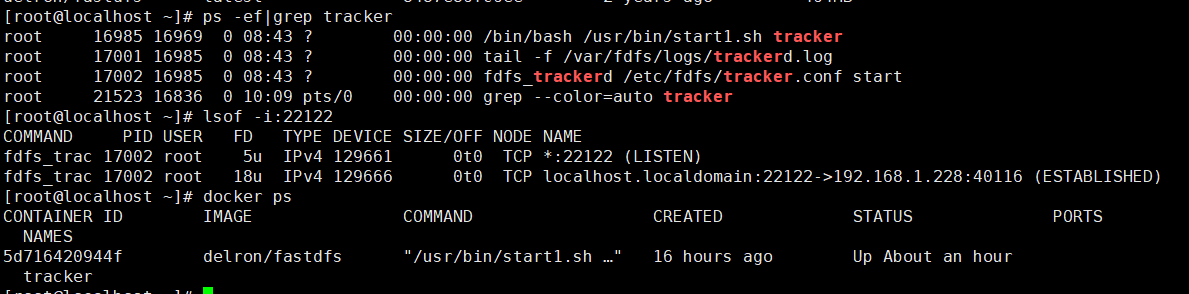

Verify:

```bash
ps -ef | grep storage
lsof -i:23000
lsof -i:8888
docker ps
```

By default, the storage service listens on port 23000 and nginx on port 8888.

Log in to the storage container and look at its configuration:

```bash
docker exec -it storage /bin/bash
```

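The configuration file is shown below. The English comments are the defaults shipped with the file; comments marked "(author's note)" are my own annotations.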

```ini
# is this config file disabled
# false for enabled
# true for disabled
disabled=false

# the name of the group this storage server belongs to
#
# comment or remove this item for fetching from tracker server,
# in this case, use_storage_id must set to true in tracker.conf,
# and storage_ids.conf must be configed correctly.
# (author's note) group set by the GROUP_NAME environment variable
group_name=group1

# bind an address of this host
# empty for bind all addresses of this host
# (author's note) address to bind; empty binds all addresses of the host
bind_addr=

# if bind an address of this host when connect to other servers
# (this storage server as a client)
# true for binding the address configed by above parameter: "bind_addr"
# false for binding any address of this host
client_bind=true

# the storage server port
# (author's note) the default port is 23000
port=23000

# connect timeout in seconds
# default value is 30s
connect_timeout=30

# network timeout in seconds
# default value is 30s
network_timeout=60

# heart beat interval in seconds
heart_beat_interval=30

# disk usage report interval in seconds
stat_report_interval=60

# the base path to store data and log files
# (author's note) directory for data files and logs
base_path=/var/fdfs

# max concurrent connections the server supported
# default value is 256
# more max_connections means more memory will be used
max_connections=256

# the buff size to recv / send data
# this parameter must more than 8KB
# default value is 64KB
# since V2.00
buff_size = 256KB

# accept thread count
# default value is 1
# since V4.07
accept_threads=1

# work thread count, should <= max_connections
# work thread deal network io
# default value is 4
# since V2.00
work_threads=4

# if disk read / write separated
##  false for mixed read and write
##  true for separated read and write
# default value is true
# since V2.00
disk_rw_separated = true

# disk reader thread count per store base path
# for mixed read / write, this parameter can be 0
# default value is 1
# since V2.00
disk_reader_threads = 1

# disk writer thread count per store base path
# for mixed read / write, this parameter can be 0
# default value is 1
# since V2.00
disk_writer_threads = 1

# when no entry to sync, try read binlog again after X milliseconds
# must > 0, default value is 200ms
sync_wait_msec=50

# after sync a file, usleep milliseconds
# 0 for sync successively (never call usleep)
sync_interval=0

# storage sync start time of a day, time format: Hour:Minute
# Hour from 0 to 23, Minute from 0 to 59
sync_start_time=00:00

# storage sync end time of a day, time format: Hour:Minute
# Hour from 0 to 23, Minute from 0 to 59
sync_end_time=23:59

# write to the mark file after sync N files
# default value is 500
write_mark_file_freq=500

# path(disk or mount point) count, default value is 1
store_path_count=1

# store_path#, based 0, if store_path0 not exists, it's value is base_path
# the paths must be exist
store_path0=/var/fdfs
#store_path1=/var/fdfs2

# subdir_count * subdir_count directories will be auto created under each
# store_path (disk), value can be 1 to 256, default value is 256
subdir_count_per_path=256

# tracker_server can ocur more than once, and tracker_server format is
#  "host:port", host can be hostname or ip address
# (author's note) tracker IP address and port
tracker_server=192.168.1.227:22122

#standard log level as syslog, case insensitive, value list:
### emerg for emergency
### alert
### crit for critical
### error
### warn for warning
### notice
### info
### debug
log_level=info

#unix group name to run this program,
#not set (empty) means run by the group of current user
run_by_group=

#unix username to run this program,
#not set (empty) means run by current user
run_by_user=

# allow_hosts can ocur more than once, host can be hostname or ip address,
# "*" (only one asterisk) means match all ip addresses
# we can use CIDR ips like 192.168.5.64/26
# and also use range like these: 10.0.1.[0-254] and host[01-08,20-25].domain.com
# for example:
# allow_hosts=10.0.1.[1-15,20]
# allow_hosts=host[01-08,20-25].domain.com
# allow_hosts=192.168.5.64/26
allow_hosts=*

# the mode of the files distributed to the data path
# 0: round robin(default)
# 1: random, distributted by hash code
file_distribute_path_mode=0

# valid when file_distribute_to_path is set to 0 (round robin),
# when the written file count reaches this number, then rotate to next path
# default value is 100
file_distribute_rotate_count=100

# call fsync to disk when write big file
# 0: never call fsync
# other: call fsync when written bytes >= this bytes
# default value is 0 (never call fsync)
fsync_after_written_bytes=0

# sync log buff to disk every interval seconds
# must > 0, default value is 10 seconds
sync_log_buff_interval=10

# sync binlog buff / cache to disk every interval seconds
# default value is 60 seconds
sync_binlog_buff_interval=10

# sync storage stat info to disk every interval seconds
# default value is 300 seconds
sync_stat_file_interval=300

# thread stack size, should >= 512KB
# default value is 512KB
thread_stack_size=512KB

# the priority as a source server for uploading file.
# the lower this value, the higher its uploading priority.
# default value is 10
upload_priority=10

# the NIC alias prefix, such as eth in Linux, you can see it by ifconfig -a
# multi aliases split by comma. empty value means auto set by OS type
# default values is empty
if_alias_prefix=

# if check file duplicate, when set to true, use FastDHT to store file indexes
# 1 or yes: need check
# 0 or no: do not check
# default value is 0
check_file_duplicate=0

# file signature method for check file duplicate
## hash: four 32 bits hash code
## md5: MD5 signature
# default value is hash
# since V4.01
file_signature_method=hash

# namespace for storing file indexes (key-value pairs)
# this item must be set when check_file_duplicate is true / on
key_namespace=FastDFS

# set keep_alive to 1 to enable persistent connection with FastDHT servers
# default value is 0 (short connection)
keep_alive=0

# you can use "#include filename" (not include double quotes) directive to
# load FastDHT server list, when the filename is a relative path such as
# pure filename, the base path is the base path of current/this config file.
# must set FastDHT server list when check_file_duplicate is true / on
# please see INSTALL of FastDHT for detail
##include /home/yuqing/fastdht/conf/fdht_servers.conf

# if log to access log
# default value is false
# since V4.00
use_access_log = false

# if rotate the access log every day
# default value is false
# since V4.00
rotate_access_log = false

# rotate access log time base, time format: Hour:Minute
# Hour from 0 to 23, Minute from 0 to 59
# default value is 00:00
# since V4.00
access_log_rotate_time=00:00

# if rotate the error log every day
# default value is false
# since V4.02
rotate_error_log = false

# rotate error log time base, time format: Hour:Minute
# Hour from 0 to 23, Minute from 0 to 59
# default value is 00:00
# since V4.02
error_log_rotate_time=00:00

# rotate access log when the log file exceeds this size
# 0 means never rotates log file by log file size
# default value is 0
# since V4.02
rotate_access_log_size = 0

# rotate error log when the log file exceeds this size
# 0 means never rotates log file by log file size
# default value is 0
# since V4.02
rotate_error_log_size = 0

# keep days of the log files
# 0 means do not delete old log files
# default value is 0
log_file_keep_days = 0

# if skip the invalid record when sync file
# default value is false
# since V4.02
file_sync_skip_invalid_record=false

# if use connection pool
# default value is false
# since V4.05
use_connection_pool = false

# connections whose the idle time exceeds this time will be closed
# unit: second
# default value is 3600
# since V4.05
connection_pool_max_idle_time = 3600

# use the ip address of this storage server if domain_name is empty,
# else this domain name will ocur in the url redirected by the tracker server
http.domain_name=

# the port of the web server on this storage server
# (author's note) the default web service port is 8888
http.server_port=8888
```

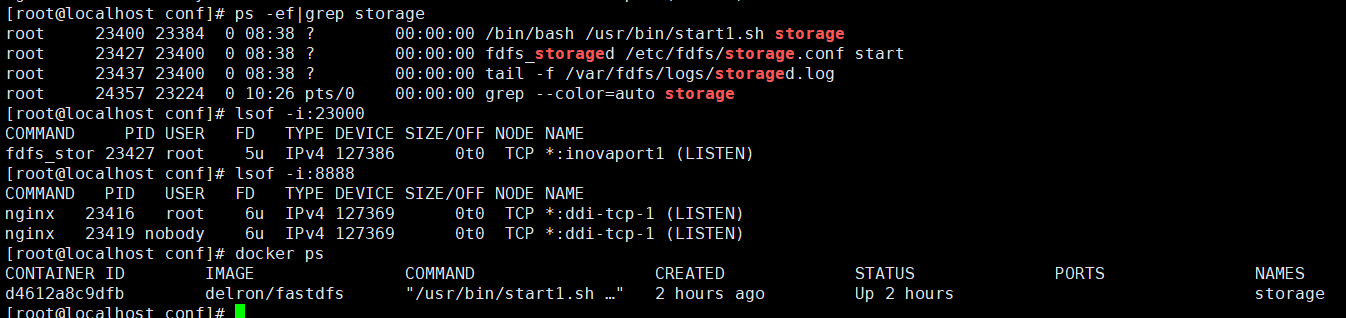

Check whether the storage and tracker are communicating

Run inside the container:

```bash
/usr/bin/fdfs_monitor /etc/fdfs/storage.conf
```

```
# /usr/bin/fdfs_monitor /etc/fdfs/storage.conf
[2020-05-15 03:11:04] DEBUG - base_path=/var/fdfs, connect_timeout=30, network_timeout=60, tracker_server_count=1, anti_steal_token=0, anti_steal_secret_key length=0, use_connection_pool=0, g_connection_pool_max_idle_time=3600s, use_storage_id=0, storage server id count: 0

server_count=1, server_index=0

tracker server is 192.168.1.227:22122

group count: 1

Group 1:
group name = group1
disk total space = 20470 MB
disk free space = 20388 MB
trunk free space = 0 MB
storage server count = 1
active server count = 1
storage server port = 23000
storage HTTP port = 8888
store path count = 1
subdir count per path = 256
current write server index = 0
current trunk file id = 0

    Storage 1:
        id = 192.168.1.228
        ip_addr = 192.168.1.228 (localhost.localdomain)  ACTIVE
        http domain =
        version = 5.11
        join time = 2020-05-14 05:59:21
        up time = 2020-05-15 00:38:52
        total storage = 20470 MB
        free storage = 20388 MB
        upload priority = 10
        store_path_count = 1
        subdir_count_per_path = 256
        storage_port = 23000
        storage_http_port = 8888
        current_write_path = 0
        source storage id =
        if_trunk_server = 0
        connection.alloc_count = 256
        connection.current_count = 0
        connection.max_count = 1
        total_upload_count = 2
        success_upload_count = 2
        total_append_count = 0
        success_append_count = 0
        total_modify_count = 0
        success_modify_count = 0
        total_truncate_count = 0
        success_truncate_count = 0
        total_set_meta_count = 0
        success_set_meta_count = 0
        total_delete_count = 0
        success_delete_count = 0
        total_download_count = 0
        success_download_count = 0
        total_get_meta_count = 0
        success_get_meta_count = 0
        total_create_link_count = 0
        success_create_link_count = 0
        total_delete_link_count = 0
        success_delete_link_count = 0
        total_upload_bytes = 13
        success_upload_bytes = 13
        total_append_bytes = 0
        success_append_bytes = 0
        total_modify_bytes = 0
        success_modify_bytes = 0
        stotal_download_bytes = 0
        success_download_bytes = 0
        total_sync_in_bytes = 0
        success_sync_in_bytes = 0
        total_sync_out_bytes = 0
        success_sync_out_bytes = 0
        total_file_open_count = 2
        success_file_open_count = 2
        total_file_read_count = 0
        success_file_read_count = 0
        total_file_write_count = 2
        success_file_write_count = 2
        last_heart_beat_time = 2020-05-15 03:10:53
        last_source_update = 2020-05-15 00:44:10
        last_sync_update = 1970-01-01 00:00:00
        last_synced_timestamp = 1970-01-01 00:00:00
```
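Reading this output by eye is fine for one server; with several groups it helps to extract only each storage server's state. Below is a minimal Python sketch that assumes the text layout shown above: a `group name = ...` line followed by `ip_addr = <ip> [(<hostname>)] <STATUS>` lines. `storage_status` and `inactive_servers` are hypothetical helper names, not FastDFS tools.

```python
import re
from typing import Dict, List, Tuple

# "group name = group1"
GROUP_LINE = re.compile(r"^group name = (?P<group>\S+)$")
# "ip_addr = 192.168.1.228 (localhost.localdomain)  ACTIVE" (hostname optional)
SERVER_LINE = re.compile(r"^ip_addr = (?P<ip>\S+)(?: \(.*\))?\s+(?P<status>[A-Z_]+)$")


def storage_status(monitor_output: str) -> Dict[str, List[Tuple[str, str]]]:
    """Map each group name to a list of (ip, status) pairs."""
    groups: Dict[str, List[Tuple[str, str]]] = {}
    current = None
    for raw in monitor_output.splitlines():
        line = raw.strip()
        g = GROUP_LINE.match(line)
        if g:
            current = g.group("group")
            groups[current] = []
            continue
        s = SERVER_LINE.match(line)
        if s and current is not None:
            groups[current].append((s.group("ip"), s.group("status")))
    return groups


def inactive_servers(monitor_output: str) -> List[Tuple[str, str]]:
    """(group, ip) pairs whose reported status is anything other than ACTIVE."""
    return [
        (group, ip)
        for group, servers in storage_status(monitor_output).items()
        for ip, status in servers
        if status != "ACTIVE"
    ]
```

Inside the container, the text could come from `subprocess.run(["fdfs_monitor", "/etc/fdfs/storage.conf"], capture_output=True, text=True).stdout`.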

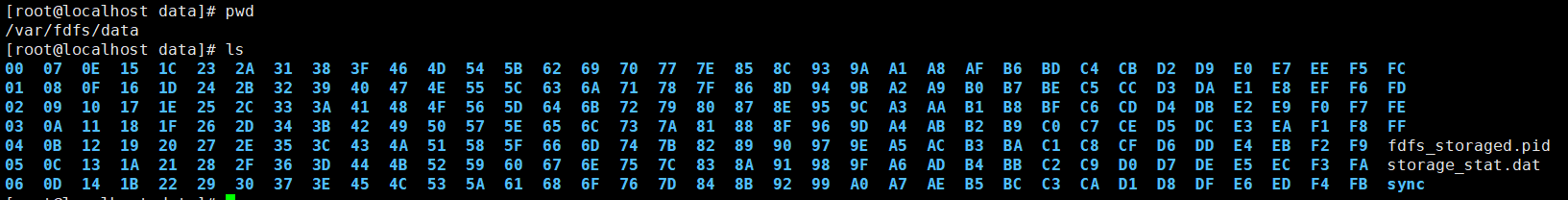

As with the tracker, once storage starts successfully it creates `data` and `logs` directories under `base_path` to record storage server information.

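Under the `store_path0` directory (the same as `base_path` here) it creates N×N subdirectories, where N is `subdir_count_per_path` (with the default of 256, that is 65,536 directories).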

The contents are identical inside the container and in the bound host directory.

File upload configuration

Inside the tracker container, edit the client configuration file:

```bash
docker exec -it tracker /bin/bash
cd /etc/fdfs/
vi client.conf
```

```ini
# connect timeout in seconds
# default value is 30s
connect_timeout=30

# network timeout in seconds
# default value is 30s
network_timeout=60

# the base path to store log files
base_path=/var/fdfs

# tracker_server can ocur more than once, and tracker_server format is
#  "host:port", host can be hostname or ip address
tracker_server=192.168.1.227:22122

#standard log level as syslog, case insensitive, value list:
### emerg for emergency
### alert
### crit for critical
### error
### warn for warning
### notice
### info
### debug
log_level=info

# if use connection pool
# default value is false
# since V4.05
use_connection_pool = false

# connections whose the idle time exceeds this time will be closed
# unit: second
# default value is 3600
# since V4.05
connection_pool_max_idle_time = 3600

# if load FastDFS parameters from tracker server
# since V4.05
# default value is false
load_fdfs_parameters_from_tracker=false

# if use storage ID instead of IP address
# same as tracker.conf
# valid only when load_fdfs_parameters_from_tracker is false
# default value is false
# since V4.05
use_storage_id = false

# specify storage ids filename, can use relative or absolute path
# same as tracker.conf
# valid only when load_fdfs_parameters_from_tracker is false
# since V4.05
storage_ids_filename = storage_ids.conf

#HTTP settings
http.tracker_server_port=80

#use "#include" directive to include HTTP other settiongs
##include http.conf
```

Upload test. `test.txt` can be any file; here I created a small text file.

```bash
/usr/bin/fdfs_upload_file /etc/fdfs/client.conf test.txt
```

On success it prints the file ID:

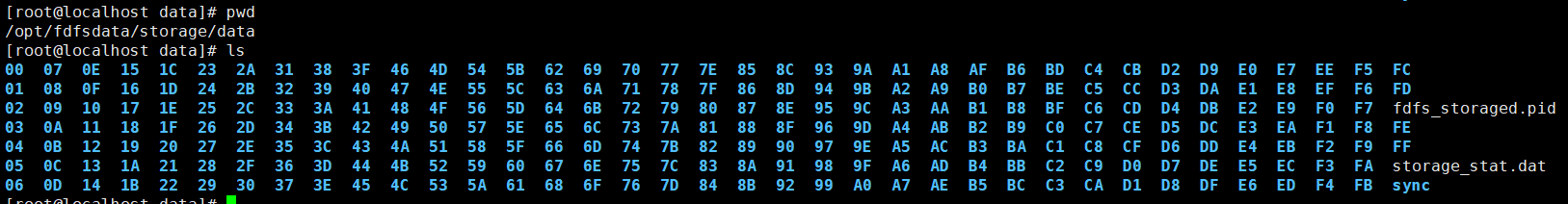

```
group1/M00/00/00/wKgB5F6-C7iAOGL0AAAACR_BCn8962.txt
```

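The returned file ID consists of the group name, the store path index (`M00`), two levels of subdirectories, the file name, and the file extension (specified by the client, mainly to identify the file type).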
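Because the ID mirrors the directory layout created under `store_path0`, you can work out where a file lives from its ID. Based on my reading of the FastDFS file-name format (an assumption, not a documented API), the name itself also encodes the IPv4 address of the storage server that accepted the upload and a Unix timestamp, as the first 8 bytes of a base64 string that uses `-` and `_` in place of `+` and `/`. The Python sketch below covers both; `parse_file_id`, `local_path` and `decode_source` are hypothetical helpers, and the `<store_path>/data/<d1>/<d2>` layout is assumed.

```python
import base64
import re
import socket
import struct
from datetime import datetime, timezone
from pathlib import PurePosixPath
from typing import Dict, Optional, Sequence, Tuple

# group / Mxx / d1 / d2 / name[.ext]
FILE_ID = re.compile(
    r"^(?P<group>[^/]+)/M(?P<store>[0-9A-Fa-f]{2})/(?P<d1>[0-9A-Fa-f]{2})/"
    r"(?P<d2>[0-9A-Fa-f]{2})/(?P<name>[^./]+)(?:\.(?P<ext>[^/]+))?$"
)


def parse_file_id(file_id: str) -> Dict[str, Optional[str]]:
    """Split a FastDFS file ID into group, store index, subdirs, name and ext."""
    m = FILE_ID.match(file_id)
    if m is None:
        raise ValueError(f"not a FastDFS file ID: {file_id!r}")
    return m.groupdict()


def local_path(file_id: str, store_paths: Sequence[str]) -> PurePosixPath:
    """Where the file should sit on the storage node (assumed layout)."""
    p = parse_file_id(file_id)
    base = store_paths[int(p["store"], 16)]  # M00 -> store_path0
    filename = p["name"] + ("." + p["ext"] if p["ext"] else "")
    return PurePosixPath(base) / "data" / p["d1"] / p["d2"] / filename


def decode_source(name: str) -> Tuple[str, datetime]:
    """Source storage IPv4 and creation time encoded in the name (assumed format)."""
    raw = base64.urlsafe_b64decode(name[:12])  # 12 chars -> 9 bytes, no padding needed
    ip = socket.inet_ntoa(raw[:4])
    (created,) = struct.unpack(">I", raw[4:8])  # big-endian Unix timestamp
    return ip, datetime.fromtimestamp(created, tz=timezone.utc)
```

With the mount used here, `local_path(file_id, ["/opt/fdfsdata/storage"])` gives the host-side path of the same file. Decoding the ID returned for the `secret` group later (`rBEAA17DV2-...`) yields 172.17.0.3, a Docker bridge address, which is consistent with that container not using `--network=host`.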

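Note: the upload above goes to the default storage port 23000. If you run several storage servers and need to upload to a specific one, pass that storage server's address and port when uploading: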

```bash
/usr/bin/fdfs_upload_file /etc/fdfs/client.conf test.txt 192.168.1.228:23001
```
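When uploads are scripted, it is simplest to build this command as an argument list and read the file ID from standard output. This is a minimal Python sketch, assuming the `fdfs_upload_file <config> <local file> [storage_ip:port]` form used above. `upload_args` and `upload` are hypothetical helpers, and the final check is only a loose sanity check on the output.

```python
import subprocess
from typing import List, Optional


def upload_args(conf: str, local_file: str, storage: Optional[str] = None) -> List[str]:
    """argv for fdfs_upload_file; `storage` is an optional "ip:port" target."""
    args = ["/usr/bin/fdfs_upload_file", conf, local_file]
    if storage is not None:
        args.append(storage)  # upload to this storage server instead of the default
    return args


def upload(conf: str, local_file: str, storage: Optional[str] = None) -> str:
    """Run the upload and return the file ID printed on stdout."""
    result = subprocess.run(upload_args(conf, local_file, storage),
                            capture_output=True, text=True, check=True)
    file_id = result.stdout.strip()
    if "/M" not in file_id:  # loose check that this looks like group/Mxx/...
        raise RuntimeError(f"unexpected output: {result.stdout!r}")
    return file_id
```

Inside the tracker container, `upload("/etc/fdfs/client.conf", "test.txt", "192.168.1.228:23001")` would run the same command as above.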

Configure nginx

The image ships with nginx and the fastdfs-nginx-module, so nothing else needs to be installed.

Log in to the storage container and look at the nginx configuration:

```bash
docker exec -it storage /bin/bash
```

```bash
cd /usr/local/nginx/conf
```

View the nginx configuration with comments and blank lines stripped:

```nginx
# sed '/#/d' nginx.conf | sed '/^$/d'
worker_processes  1;
events {
    worker_connections  1024;
}
http {
    include       mime.types;
    default_type  application/octet-stream;
    sendfile        on;
    keepalive_timeout  65;
    server {
        # listen on port 8888
        listen       8888;
        server_name  localhost;
        # match groups with a wildcard: group0 through group9
        location ~/group[0-9]/ {
            ngx_fastdfs_module;
        }
        error_page   500 502 503 504  /50x.html;
        location = /50x.html {
            root   html;
        }
    }
}
```

Notes:

The `listen 8888` port must match `http.server_port=8888` in `/etc/fdfs/storage.conf`. If you change it to another port, change both so they stay consistent, and open that port in the firewall.

For the `location` block: if there are several groups, use `location ~/group([0-9])/M00`; with a single group you do not need to include the group in the pattern.
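A mismatch between the two ports is easy to miss. The Python sketch below reads `http.server_port` from `storage.conf` text and the `listen` ports from `nginx.conf` text. It assumes the simple `listen <port>;` form used here; forms such as `listen 127.0.0.1:8888;` are not handled. All three function names are hypothetical.

```python
import re
from typing import Set


def storage_http_port(storage_conf: str) -> int:
    """Read http.server_port from storage.conf text (comment lines never match)."""
    m = re.search(r"^\s*http\.server_port\s*=\s*(\d+)\s*$", storage_conf, re.MULTILINE)
    if m is None:
        raise ValueError("http.server_port not found")
    return int(m.group(1))


def nginx_listen_ports(nginx_conf: str) -> Set[int]:
    """Ports from simple 'listen <port>;' directives, ignoring # comments."""
    without_comments = re.sub(r"#[^\n]*", "", nginx_conf)
    return {int(p) for p in re.findall(r"\blisten\s+(\d+)\s*;", without_comments)}


def ports_consistent(storage_conf: str, nginx_conf: str) -> bool:
    """True if nginx listens on the port storage.conf advertises."""
    return storage_http_port(storage_conf) in nginx_listen_ports(nginx_conf)
```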

If you can fetch the file at the following URL, the configuration works:

```
http://192.168.1.228:8888/group1/M00/00/00/wKgB5F6-C7iAOGL0AAAACR_BCn8962.txt
```
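To script this check, here is a small Python sketch using only the standard library. `file_url` builds the download URL and `fetch_status` returns the HTTP status code, or `None` when the connection itself fails; both are hypothetical helpers. A 200 means nginx served the file. A 4xx, such as the 400 that appears later for the `secret` group, means nginx answered but the request was not served.

```python
from typing import Optional
from urllib.error import HTTPError
from urllib.request import urlopen


def file_url(host: str, port: int, file_id: str) -> str:
    """Build the nginx download URL for a FastDFS file ID."""
    return f"http://{host}:{port}/{file_id.lstrip('/')}"


def fetch_status(url: str, timeout: float = 5.0) -> Optional[int]:
    """HTTP status code for url, or None if no HTTP response was received."""
    try:
        with urlopen(url, timeout=timeout) as resp:
            return resp.status
    except HTTPError as exc:  # nginx answered with 4xx/5xx
        return exc.code
    except OSError:  # refused, unreachable, timeout (URLError is an OSError)
        return None
```

For example, `fetch_status(file_url("192.168.1.228", 8888, "group1/M00/00/00/wKgB5F6-C7iAOGL0AAAACR_BCn8962.txt"))` should return 200 once nginx is configured.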

Starting multiple storage services

Starting a storage service normally only requires setting two environment variables on the `docker run` command line:

Tracker server: `TRACKER_SERVER`

Group name: `GROUP_NAME`

With the default nginx configuration, the group name must be one of `group0` through `group9`.

What if you need to start a storage server with a different group name?

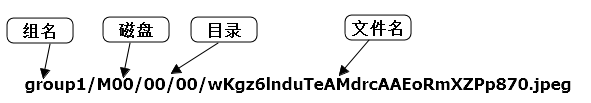

Start a storage service with the group name `secret`, using the tracker at 192.168.1.227:

```bash
docker run -d -p 8081:8888 -p 23001:23000 --name secret \
  -e TRACKER_SERVER=192.168.1.227:22122 \
  -v /opt/fastdfsdata/secret/:/var/fdfs \
  -v /root/secret/nginx.conf:/usr/local/nginx/conf/nginx.conf \
  -e GROUP_NAME=secret \
  delron/fastdfs storage
```

Parameter breakdown:

```
-d                                       # run in the background
-p 8081:8888                             # map the container's default HTTP port 8888 to host port 8081
-p 23001:23000                           # map the container's default storage port 23000 to host port 23001
--name secret                            # container name: secret
-e TRACKER_SERVER=192.168.1.227:22122    # tracker address and port
-v /opt/fastdfsdata/secret/:/var/fdfs    # mount the storage data on the host
-v /root/secret/nginx.conf:/usr/local/nginx/conf/nginx.conf    # mount the modified nginx config into the container
-e GROUP_NAME=secret                     # group name: secret
delron/fastdfs                           # image to use
storage                                  # start the storage service
```
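When you run several storage containers, it is less error-prone to generate these flags than to retype them. The Python sketch below builds the `docker run` argument list for either style used in this article (host networking, or published ports plus a mounted `nginx.conf`). `storage_run_args` is a hypothetical helper. The container-side ports and paths are the ones used above.

```python
from typing import List, Optional


def storage_run_args(
    name: str,
    group: str,
    tracker: str,
    data_dir: str,
    *,
    host_network: bool = False,
    http_port: Optional[int] = None,
    storage_port: Optional[int] = None,
    nginx_conf: Optional[str] = None,
    image: str = "delron/fastdfs",
) -> List[str]:
    """Build the `docker run` argv for a delron/fastdfs storage container."""
    if host_network and (http_port is not None or storage_port is not None):
        raise ValueError("published ports are discarded in host network mode")
    args = ["docker", "run", "-d"]
    if host_network:
        args.append("--network=host")
    if http_port is not None:
        args += ["-p", f"{http_port}:8888"]      # host port -> nginx in the container
    if storage_port is not None:
        args += ["-p", f"{storage_port}:23000"]  # host port -> storage service
    args += ["--name", name, "-e", f"TRACKER_SERVER={tracker}"]
    args += ["-v", f"{data_dir}:/var/fdfs"]      # persist storage data on the host
    if nginx_conf is not None:
        args += ["-v", f"{nginx_conf}:/usr/local/nginx/conf/nginx.conf"]
    args += ["-e", f"GROUP_NAME={group}", image, "storage"]
    return args
```

The list can be passed straight to `subprocess.run(args, check=True)`, which avoids shell quoting issues.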

The `nginx.conf` used is:

```nginx
worker_processes  1;
events {
    worker_connections  1024;
}
http {
    include       mime.types;
    default_type  application/octet-stream;
    sendfile        on;
    keepalive_timeout  65;
    server {
        listen       8888;
        server_name  localhost;
        location ~/secret/M00 {
            root /var/fdfs/data;
            ngx_fastdfs_module;
        }
        error_page   500 502 503 504  /50x.html;
        location = /50x.html {
            root   html;
        }
    }
}
```
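Writing the mounted `nginx.conf` by hand for every custom group gets repetitive. The minimal Python sketch below renders the same file for a list of group names. `render_nginx_conf` is a hypothetical helper, and the template is copied from the file above. Restricting group names to letters, digits and underscores is my own conservative assumption, so that the name is safe inside the regex `location`.

```python
import re
from typing import Iterable

# Copied from the nginx.conf above; {port} and {locations} are filled in.
TEMPLATE = """worker_processes  1;
events {{
    worker_connections  1024;
}}
http {{
    include       mime.types;
    default_type  application/octet-stream;
    sendfile        on;
    keepalive_timeout  65;
    server {{
        listen       {port};
        server_name  localhost;
{locations}
        error_page   500 502 503 504  /50x.html;
        location = /50x.html {{
            root   html;
        }}
    }}
}}
"""

LOCATION = """        location ~/{group}/M00 {{
            root {data_root};
            ngx_fastdfs_module;
        }}"""


def render_nginx_conf(groups: Iterable[str], port: int = 8888,
                      data_root: str = "/var/fdfs/data") -> str:
    """Render nginx.conf with one fastdfs location block per group."""
    blocks = []
    for group in groups:
        # Assumption: conservative charset so the name is safe in a regex location.
        if not re.fullmatch(r"[A-Za-z0-9_]+", group):
            raise ValueError(f"unsupported group name: {group!r}")
        blocks.append(LOCATION.format(group=group, data_root=data_root))
    return TEMPLATE.format(port=port, locations="\n".join(blocks))
```

Writing the result to the host path mounted above (for example with `pathlib.Path("/root/secret/nginx.conf").write_text(render_nginx_conf(["secret"]))`) reproduces the file shown.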

After it starts, log in to the container:

```bash
docker exec -it secret bash
```

The storage/tracker status now includes the `secret` group:

```
# fdfs_monitor /etc/fdfs/storage.conf
[2020-05-19 03:44:04] DEBUG - base_path=/var/fdfs, connect_timeout=30, network_timeout=60, tracker_server_count=1, anti_steal_token=0, anti_steal_secret_key length=0, use_connection_pool=0, g_connection_pool_max_idle_time=3600s, use_storage_id=0, storage server id count: 0

server_count=1, server_index=0

tracker server is 192.168.1.227:22122

group count: 2

Group 1:
group name = group1
disk total space = 20470 MB
disk free space = 20353 MB
trunk free space = 0 MB
storage server count = 1
active server count = 1
storage server port = 23000
storage HTTP port = 8888
store path count = 1
subdir count per path = 256
current write server index = 0
current trunk file id = 0

    Storage 1:
        id = 192.168.1.228
        ip_addr = 192.168.1.228  ACTIVE
        http domain =
        version = 5.11
        join time = 2020-05-14 05:59:21
        up time = 2020-05-18 09:15:58
        total storage = 20470 MB
        free storage = 20353 MB
        upload priority = 10
        store_path_count = 1
        subdir_count_per_path = 256
        storage_port = 23000
        storage_http_port = 8888
        current_write_path = 0
        source storage id =
        if_trunk_server = 0
        connection.alloc_count = 256
        connection.current_count = 0
        connection.max_count = 0
        total_upload_count = 14
        success_upload_count = 14
        total_append_count = 0
        success_append_count = 0
        total_modify_count = 0
        success_modify_count = 0
        total_truncate_count = 0
        success_truncate_count = 0
        total_set_meta_count = 0
        success_set_meta_count = 0
        total_delete_count = 0
        success_delete_count = 0
        total_download_count = 0
        success_download_count = 0
        total_get_meta_count = 0
        success_get_meta_count = 0
        total_create_link_count = 0
        success_create_link_count = 0
        total_delete_link_count = 0
        success_delete_link_count = 0
        total_upload_bytes = 9294
        success_upload_bytes = 9294
        total_append_bytes = 0
        success_append_bytes = 0
        total_modify_bytes = 0
        success_modify_bytes = 0
        stotal_download_bytes = 0
        success_download_bytes = 0
        total_sync_in_bytes = 0
        success_sync_in_bytes = 0
        total_sync_out_bytes = 0
        success_sync_out_bytes = 0
        total_file_open_count = 14
        success_file_open_count = 14
        total_file_read_count = 0
        success_file_read_count = 0
        total_file_write_count = 14
        success_file_write_count = 14
        last_heart_beat_time = 2020-05-19 03:43:58
        last_source_update = 2020-05-18 08:02:26
        last_sync_update = 1970-01-01 00:00:00
        last_synced_timestamp = 1970-01-01 00:00:00

Group 2:
group name = secret
disk total space = 20470 MB
disk free space = 20353 MB
trunk free space = 0 MB
storage server count = 1
active server count = 1
storage server port = 23000
storage HTTP port = 8888
store path count = 1
subdir count per path = 256
current write server index = 0
current trunk file id = 0

    Storage 1:
        id = 192.168.1.228
        ip_addr = 192.168.1.228  ACTIVE
        http domain =
        version = 5.11
        join time = 2020-05-19 03:21:37
        up time = 2020-05-19 03:21:37
        total storage = 20470 MB
        free storage = 20353 MB
        upload priority = 10
        store_path_count = 1
        subdir_count_per_path = 256
        storage_port = 23000
        storage_http_port = 8888
        current_write_path = 0
        source storage id =
        if_trunk_server = 0
        connection.alloc_count = 256
        connection.current_count = 0
        connection.max_count = 1
        total_upload_count = 1
        success_upload_count = 1
        total_append_count = 0
        success_append_count = 0
        total_modify_count = 0
        success_modify_count = 0
        total_truncate_count = 0
        success_truncate_count = 0
        total_set_meta_count = 0
        success_set_meta_count = 0
        total_delete_count = 0
        success_delete_count = 0
        total_download_count = 0
        success_download_count = 0
        total_get_meta_count = 0
        success_get_meta_count = 0
        total_create_link_count = 0
        success_create_link_count = 0
        total_delete_link_count = 0
        success_delete_link_count = 0
        total_upload_bytes = 174
        success_upload_bytes = 174
        total_append_bytes = 0
        success_append_bytes = 0
        total_modify_bytes = 0
        success_modify_bytes = 0
        stotal_download_bytes = 0
        success_download_bytes = 0
        total_sync_in_bytes = 0
        success_sync_in_bytes = 0
        total_sync_out_bytes = 0
        success_sync_out_bytes = 0
        total_file_open_count = 1
        success_file_open_count = 1
        total_file_read_count = 0
        success_file_read_count = 0
        total_file_write_count = 1
        success_file_write_count = 1
        last_heart_beat_time = 2020-05-19 03:43:38
        last_source_update = 2020-05-19 03:23:09
        last_sync_update = 1970-01-01 00:00:00
        last_synced_timestamp = 1970-01-01 00:00:00
```

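Upload test. The container's port 23000 is mapped to host port 23001, so the upload command must name the storage IP and port explicitly. From inside the container, the container's own address, 127.0.0.1:23000, also works: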

```
fdfs_upload_file /etc/fdfs/client.conf test.txt 192.168.1.228:23001
```

Output:

```
secret/M00/00/00/rBEAA17DV2-ASbCdAAAABelE2rQ667.txt
```
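The returned file ID packs several pieces of information: the group name, a store-path marker (`M00` refers to `store_path0`), two levels of subdirectories, and the file name generated by the storage server. The Python sketch below splits an ID along that layout and maps it to the expected location on the storage node. The function names are hypothetical, and the layout (including the hex index after `M` and the `data/` subdirectory under the store path) is an assumption based on the IDs and the `store_path0=/var/fdfs` setting used in this article.

```python
from typing import NamedTuple, Sequence


class FileId(NamedTuple):
    group: str             # group name, e.g. "secret"
    store_path_index: int  # M00 -> index 0 -> store_path0
    subdirs: tuple         # two directory levels, e.g. ("00", "00")
    filename: str          # name generated by the storage server


def parse_file_id(file_id: str) -> FileId:
    """Split 'group/Mxx/d1/d2/name' into its parts (layout assumed from this article)."""
    parts = file_id.strip("/").split("/")
    if len(parts) != 5 or not parts[1].startswith("M"):
        raise ValueError(f"unexpected file id: {file_id!r}")
    group, marker, d1, d2, name = parts
    # The two characters after 'M' are the store path index (assumed hex).
    return FileId(group, int(marker[1:], 16), (d1, d2), name)


def local_path(fid: FileId, store_paths: Sequence[str]) -> str:
    """Expected file location: <store_pathN>/data/<d1>/<d2>/<name>."""
    base = store_paths[fid.store_path_index].rstrip("/")
    return "/".join([base, "data", *fid.subdirs, fid.filename])


fid = parse_file_id("secret/M00/00/00/rBEAA17DV2-ASbCdAAAABelE2rQ667.txt")
print(fid.group)                       # secret
print(local_path(fid, ["/var/fdfs"]))  # /var/fdfs/data/00/00/rBEAA17DV2-ASbCdAAAABelE2rQ667.txt
```

The group part of the ID is what the nginx module later compares against its own `group_name`, which is why a mismatch between `secret` and the default `group1` matters below.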

Accessing the file from inside the container fails with 400 Bad Request:

```
# curl 127.0.0.1:8888/secret/M00/00/00/rBEAA17DV2-ASbCdAAAABelE2rQ667.txt
<html>
<head><title>400 Bad Request</title></head>
<body bgcolor="white">
<center><h1>400 Bad Request</h1></center>
<hr><center>nginx/1.12.2</center>
</body>
</html>
```

The nginx log inside the container shows:

```
[2020-05-19 03:50:18] WARNING - file: /tmp/nginx/fastdfs-nginx-module-master/src/common.c, line: 1115, redirect again, url: /secret/M00/00/00/rBEAA17DV2-ASbCdAAAABelE2rQ667.txt?redirect=1
```

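Edit the following file inside the container and change `group_name` from the default `group1` to `secret`:

```
/etc/fdfs/mod_fastdfs.conf
```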

Reload nginx:

```
/usr/local/nginx/sbin/nginx -s reload
```

If the nginx log `/usr/local/nginx/logs/error.log` now shows the following line, the module has loaded correctly:

```
[2020-05-19 03:52:59] INFO - fastdfs apache / nginx module v1.15, response_mode=proxy, base_path=/tmp, url_have_group_name=1, group_name=secret, storage_server_port=23000, path_count=1, store_path0=/var/fdfs, connect_timeout=10, network_timeout=30, tracker_server_count=1, if_alias_prefix=, local_host_ip_count=2, anti_steal_token=0, token_ttl=0s, anti_steal_secret_key length=0, token_check_fail content_type=, token_check_fail buff length=0, load_fdfs_parameters_from_tracker=1, storage_sync_file_max_delay=86400s, use_storage_id=0, storage server id count=0, flv_support=1, flv_extension=flv
```

Access the file again from inside the container; this time the file content (`test`) is returned:

```
# curl 127.0.0.1:8888/secret/M00/00/00/rBEAA17DV2-ASbCdAAAABelE2rQ667.txt
test
```

To test from the host, use port 8081, which is mapped to the container's nginx port 8888:

```
curl 127.0.0.1:8081/secret/M00/00/00/rBEAA17DV2-ASbCdAAAABelE2rQ667.txt
```

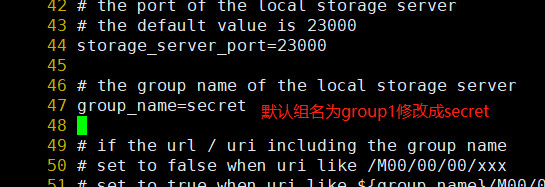
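The same file is reachable on two different ports because of the `-p HOST:CONTAINER` mappings: nginx listens on 8888 inside the container and is published as 8081 on the host (likewise 23000 becomes 23001 for uploads). A small Python sketch that resolves a container port through such mappings and builds the download URL; the helper names are hypothetical, and only the simple `HOST:CONTAINER` form of `-p` is handled (no IP prefixes, ranges or `/udp` suffixes).

```python
def parse_publish(specs):
    """Map container port -> host port from simple docker '-p HOST:CONTAINER' specs."""
    mapping = {}
    for spec in specs:
        host, container = spec.split(":")
        mapping[int(container)] = int(host)
    return mapping


def download_url(host, container_port, file_id, publish=None):
    """Build the nginx URL for a file ID, translating the port if it is published."""
    port = container_port
    if publish is not None:
        port = parse_publish(publish)[container_port]  # KeyError if not published
    return f"http://{host}:{port}/{file_id.lstrip('/')}"


fid = "secret/M00/00/00/rBEAA17DV2-ASbCdAAAABelE2rQ667.txt"
# Inside the container: nginx's own port
print(download_url("127.0.0.1", 8888, fid))
# On the host: the port published by -p 8081:8888 -p 23001:23000
print(download_url("127.0.0.1", 8888, fid, ["8081:8888", "23001:23000"]))
```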

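With the approach above, serving the secret group requires editing /etc/fdfs/mod_fastdfs.conf by hand inside the container. Instead, copy the configuration file out of the container, edit it, and mount it back in when starting the container.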

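Change the group from the default `group1` to `secret`, point `tracker_server` at the tracker (192.168.1.227:22122), and leave everything else at its default: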

```
# connect timeout in seconds
# default value is 30s
connect_timeout=10

# network recv and send timeout in seconds
# default value is 30s
network_timeout=30

# the base path to store log files
base_path=/tmp

# if load FastDFS parameters from tracker server
# since V1.12
# default value is false
load_fdfs_parameters_from_tracker=true

# storage sync file max delay seconds
# same as tracker.conf
# valid only when load_fdfs_parameters_from_tracker is false
# since V1.12
# default value is 86400 seconds (one day)
storage_sync_file_max_delay = 86400

# if use storage ID instead of IP address
# same as tracker.conf
# valid only when load_fdfs_parameters_from_tracker is false
# default value is false
# since V1.13
use_storage_id = false

# specify storage ids filename, can use relative or absolute path
# same as tracker.conf
# valid only when load_fdfs_parameters_from_tracker is false
# since V1.13
storage_ids_filename = storage_ids.conf

# FastDFS tracker_server can ocur more than once, and tracker_server format is
# "host:port", host can be hostname or ip address
# valid only when load_fdfs_parameters_from_tracker is true
tracker_server=192.168.1.227:22122

# the port of the local storage server
# the default value is 23000
storage_server_port=23000

# the group name of the local storage server
group_name=secret

# if the url / uri including the group name
# set to false when uri like /M00/00/00/xxx
# set to true when uri like ${group_name}/M00/00/00/xxx, such as group1/M00/xxx
# default value is false
url_have_group_name = true

# path(disk or mount point) count, default value is 1
# must same as storage.conf
store_path_count=1

# store_path#, based 0, if store_path0=/var/fdfs
# the paths must be exist
# must same as storage.conf
store_path0=/var/fdfs
#store_path1=/home/yuqing/fastdfs1

# standard log level as syslog, case insensitive, value list:
### emerg for emergency
### alert
### crit for critical
### error
### warn for warning
### notice
### info
### debug
log_level=info

# set the log filename, such as /usr/local/apache2/logs/mod_fastdfs.log
# empty for output to stderr (apache and nginx error_log file)
log_filename=

# response mode when the file not exist in the local file system
## proxy: get the content from other storage server, then send to client
## redirect: redirect to the original storage server (HTTP Header is Location)
response_mode=proxy

# the NIC alias prefix, such as eth in Linux, you can see it by ifconfig -a
# multi aliases split by comma. empty value means auto set by OS type
# this paramter used to get all ip address of the local host
# default values is empty
if_alias_prefix=

# use "#include" directive to include HTTP config file
# NOTE: #include is an include directive, do NOT remove the # before include
#include http.conf

# if support flv
# default value is false
# since v1.15
flv_support = true

# flv file extension name
# default value is flv
# since v1.15
flv_extension = flv

# set the group count
# set to none zero to support multi-group on this storage server
# set to 0 for single group only
# groups settings section as [group1], [group2], ..., [groupN]
# default value is 0
# since v1.14
group_count = 0

# group settings for group #1
# since v1.14
# when support multi-group on this storage server, uncomment following section
#[group1]
#group_name=group1
#storage_server_port=23000
#store_path_count=2
#store_path0=/var/fdfs
#store_path1=/home/yuqing/fastdfs1

# group settings for group #2
# since v1.14
# when support multi-group, uncomment following section as neccessary
#[group2]
#group_name=group2
#storage_server_port=23000
#store_path_count=1
#store_path0=/var/fdfs
```
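The two edits to the copied file can also be scripted instead of made by hand. A minimal Python sketch, assuming one `key=value` (or `key = value`) setting per line as in the file above; `set_conf_values` is a hypothetical helper, not part of FastDFS. Lines starting with `#`, including the `#include` directive, are never touched.

```python
import re


def set_conf_values(text: str, updates: dict) -> str:
    """Rewrite 'key=value' lines for the given keys, leaving comments untouched.

    Raises KeyError if a requested key is not present as an active setting.
    """
    seen = set()
    out = []
    for line in text.splitlines():
        # An active setting starts with a word character; '#' lines never match.
        m = re.match(r"^(\w[\w.]*)\s*=", line)
        if m and m.group(1) in updates:
            key = m.group(1)
            line = f"{key}={updates[key]}"
            seen.add(key)
        out.append(line)
    missing = set(updates) - seen
    if missing:
        raise KeyError(f"settings not found: {sorted(missing)}")
    return "\n".join(out) + "\n"


# Illustrative input only; the image's real default values may differ.
sample = (
    "tracker_server=127.0.0.1:22122\n"
    "# the group name of the local storage server\n"
    "group_name=group1\n"
    "#include http.conf\n"
)
print(set_conf_values(sample, {"group_name": "secret",
                               "tracker_server": "192.168.1.227:22122"}), end="")
```

In practice this would run on a copy taken from a running container, for example with `docker cp <container>:/etc/fdfs/mod_fastdfs.conf /root/secret/`, before mounting the result back in.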

Start the storage container, mounting the edited nginx.conf and mod_fastdfs.conf:

```
docker run -d -p 8081:8888 -p 23001:23000 --name secret \
  -e TRACKER_SERVER=192.168.1.227:22122 \
  -e GROUP_NAME=secret \
  -v /opt/fastdfsdata/secret/:/var/fdfs \
  -v /root/secret/nginx.conf:/usr/local/nginx/conf/nginx.conf \
  -v /root/secret/mod_fastdfs.conf:/etc/fdfs/mod_fastdfs.conf \
  delron/fastdfs storage
```

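Once this container is up, files in the secret group can be accessed without editing any configuration inside the container, and the earlier errors (400 Bad Request / no access) no longer occur.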

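Note: if the `#` comment lines are removed from the configuration file, nginx reports an error and curl gets no response. The cause is unknown.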
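An unverified guess at the cause, based on the NOTE inside mod_fastdfs.conf itself: `#include http.conf` is a directive, not a comment, so deleting every line that starts with `#` also drops that include. If a comment-free copy is wanted, a Python sketch that keeps `#include` lines while removing comments and blank lines (the function name is hypothetical; whether this avoids the error above has not been tested):

```python
def strip_comments(text: str) -> str:
    """Drop blank lines and '#' comment lines, but keep '#include' directives."""
    kept = []
    for line in text.splitlines():
        stripped = line.strip()
        if not stripped:
            continue  # blank line
        if stripped.startswith("#") and not stripped.startswith("#include"):
            continue  # ordinary comment
        kept.append(line)
    return "\n".join(kept) + "\n"


sample = """# the group name of the local storage server
group_name=secret

# NOTE: #include is an include directive, do NOT remove the # before include
#include http.conf
"""
print(strip_comments(sample), end="")
```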

Deploying FastDFS on a K8s cluster

Deploy the tracker

Create the tracker storage PVC

The PVCs in this article use GlusterFS storage managed by Heketi. For deploying that storage, see https://www.cnblogs.com/minseo/p/12575604.html

```
# cat tracker-pvc.yaml
apiVersion: v1
# the object type is a PVC
kind: PersistentVolumeClaim
metadata:
  # labels
  labels:
    app: tracker
  name: tracker-pv-claim
spec:
  # read-write, mountable by many nodes
  accessModes:
    - ReadWriteMany
  # request 1Gi
  resources:
    requests:
      storage: 1Gi
  # storage class backed by Heketi-managed GlusterFS
  storageClassName: gluster-heketi-storageclass
```

Create the tracker manifest. Because a pod's IP address can change, the tracker runs under a StatefulSet controller, which gives it a stable name:

```
# cat tracker-statefulset.yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  labels:
    app: tracker
  # the StatefulSet is named tracker; its pods are named tracker-0, tracker-1, tracker-2, ... in order
  name: tracker
spec:
  replicas: 1
  selector:
    matchLabels:
      app: tracker
  # serviceName is tracker; do not confuse it with the StatefulSet name.
  # A pod resolves as $pod.$serviceName.$namespace, e.g. tracker-0.tracker.default
  serviceName: "tracker"
  template:
    metadata:
      labels:
        app: tracker
    spec:
      containers:
      - image: delron/fastdfs
        name: fastdfs
        # container start command; here it starts the tracker
        command: ["/bin/bash", "/usr/bin/start1.sh","tracker"]
        # tracker data lives on the PVC; the container's default data directory is /var/fdfs
        volumeMounts:
        - name: tracker-persistent-storage
          mountPath: /var/fdfs
      # mount the PVC; claimName must match the name in the PVC manifest
      volumes:
      - name: tracker-persistent-storage
        persistentVolumeClaim:
          claimName: tracker-pv-claim
```

Create the Service. Because this is a stateful service, it is headless (`clusterIP: None`), so no cluster IP is allocated:

```
# cat tracker-cluster-svc.yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    app: tracker
  name: tracker
spec:
  ports:
  - port: 22122
    protocol: TCP
    targetPort: 22122
  clusterIP: None
  selector:
    app: tracker
```
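The naming rule from the StatefulSet comments (`$pod.$serviceName.$namespace`, with pods named `<statefulset>-<ordinal>`) combined with the headless Service can be written out as a small Python helper. The fully qualified form appends `.svc.<cluster domain>`; the short form `tracker-0.tracker.default` resolves because Kubernetes puts `<namespace>.svc.cluster.local`, `svc.cluster.local` and `cluster.local` on each pod's DNS search list. The function is hypothetical and assumes the default cluster domain `cluster.local`.

```python
def statefulset_pod_dns(statefulset: str, ordinal: int, service: str,
                        namespace: str = "default", cluster_domain: str = "") -> str:
    """Stable DNS name of a StatefulSet pod behind a headless Service.

    Short form:          <statefulset>-<ordinal>.<service>.<namespace>
    Fully qualified:     ...plus .svc.<cluster_domain>
    """
    name = f"{statefulset}-{ordinal}.{service}.{namespace}"
    if cluster_domain:
        name += f".svc.{cluster_domain}"
    return name


# Same value as TRACKER_SERVER in the storage StatefulSets: tracker-0.tracker.default:22122
print(statefulset_pod_dns("tracker", 0, "tracker") + ":22122")
# Fully qualified name, assuming the default cluster domain
print(statefulset_pod_dns("storage", 0, "storage", cluster_domain="cluster.local"))
```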

Create the PVC, the tracker StatefulSet and the tracker Service. The PVC must be created first; otherwise the StatefulSet has no storage to mount:

```
kubectl apply -f tracker-pvc.yaml
kubectl apply -f tracker-statefulset.yaml
kubectl apply -f tracker-cluster-svc.yaml
```

Check the created PVC, pod and Service:

```
# kubectl get pvc tracker-pv-claim
NAME               STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS                  AGE
tracker-pv-claim   Bound    pvc-a8cb4a44-b096-4f27-ac8b-ac21171daff9   1Gi        RWX            gluster-heketi-storageclass   12m
[root@localhost tracker]# kubectl get pod tracker-0
NAME        READY   STATUS    RESTARTS   AGE
tracker-0   1/1     Running   0          12m
[root@localhost tracker]# kubectl get svc tracker
NAME      TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)     AGE
tracker   ClusterIP   None         <none>        22122/TCP   163m
```

Open a shell in the tracker container to inspect it:

```
kubectl exec -it tracker-0 -- bash
```

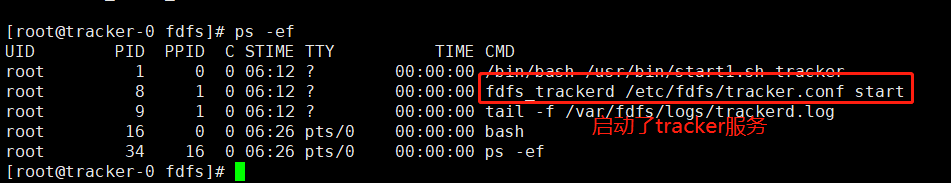

Check the configuration. The tracker uses the default configuration file unchanged:

```
# cat /etc/fdfs/tracker.conf
# is this config file disabled
# false for enabled
# true for disabled
disabled=false

# bind an address of this host
# empty for bind all addresses of this host
bind_addr=

# the tracker server port
port=22122

# connect timeout in seconds
# default value is 30s
connect_timeout=30

# network timeout in seconds
# default value is 30s
network_timeout=60

# the base path to store data and log files
base_path=/var/fdfs

# max concurrent connections this server supported
max_connections=256

# accept thread count
# default value is 1
# since V4.07
accept_threads=1

# work thread count, should <= max_connections
# default value is 4
# since V2.00
work_threads=4

# min buff size
# default value 8KB
min_buff_size = 8KB

# max buff size
# default value 128KB
max_buff_size = 128KB

# the method of selecting group to upload files
# 0: round robin
# 1: specify group
# 2: load balance, select the max free space group to upload file
store_lookup=2

# which group to upload file
# when store_lookup set to 1, must set store_group to the group name
store_group=group2

# which storage server to upload file
# 0: round robin (default)
# 1: the first server order by ip address
# 2: the first server order by priority (the minimal)
store_server=0

# which path(means disk or mount point) of the storage server to upload file
# 0: round robin
# 2: load balance, select the max free space path to upload file
store_path=0

# which storage server to download file
# 0: round robin (default)
# 1: the source storage server which the current file uploaded to
download_server=0

# reserved storage space for system or other applications.
# if the free(available) space of any stoarge server in
# a group <= reserved_storage_space,
# no file can be uploaded to this group.
# bytes unit can be one of follows:
### G or g for gigabyte(GB)
### M or m for megabyte(MB)
### K or k for kilobyte(KB)
### no unit for byte(B)
### XX.XX% as ratio such as reserved_storage_space = 10%
reserved_storage_space = 10%

#standard log level as syslog, case insensitive, value list:
### emerg for emergency
### alert
### crit for critical
### error
### warn for warning
### notice
### info
### debug
log_level=info

#unix group name to run this program,
#not set (empty) means run by the group of current user
run_by_group=

#unix username to run this program,
#not set (empty) means run by current user
run_by_user=

# allow_hosts can ocur more than once, host can be hostname or ip address,
# "*" (only one asterisk) means match all ip addresses
# we can use CIDR ips like 192.168.5.64/26
# and also use range like these: 10.0.1.[0-254] and host[01-08,20-25].domain.com
# for example:
# allow_hosts=10.0.1.[1-15,20]
# allow_hosts=host[01-08,20-25].domain.com
# allow_hosts=192.168.5.64/26
allow_hosts=*

# sync log buff to disk every interval seconds
# default value is 10 seconds
sync_log_buff_interval = 10

# check storage server alive interval seconds
check_active_interval = 120

# thread stack size, should >= 64KB
# default value is 64KB
thread_stack_size = 64KB

# auto adjust when the ip address of the storage server changed
# default value is true
storage_ip_changed_auto_adjust = true

# storage sync file max delay seconds
# default value is 86400 seconds (one day)
# since V2.00
storage_sync_file_max_delay = 86400

# the max time of storage sync a file
# default value is 300 seconds
# since V2.00
storage_sync_file_max_time = 300

# if use a trunk file to store several small files
# default value is false
# since V3.00
use_trunk_file = false

# the min slot size, should <= 4KB
# default value is 256 bytes
# since V3.00
slot_min_size = 256

# the max slot size, should > slot_min_size
# store the upload file to trunk file when it's size <= this value
# default value is 16MB
# since V3.00
slot_max_size = 16MB

# the trunk file size, should >= 4MB
# default value is 64MB
# since V3.00
trunk_file_size = 64MB

# if create trunk file advancely
# default value is false
# since V3.06
trunk_create_file_advance = false

# the time base to create trunk file
# the time format: HH:MM
# default value is 02:00
# since V3.06
trunk_create_file_time_base = 02:00

# the interval of create trunk file, unit: second
# default value is 38400 (one day)
# since V3.06
trunk_create_file_interval = 86400

# the threshold to create trunk file
# when the free trunk file size less than the threshold, will create
# the trunk files
# default value is 0
# since V3.06
trunk_create_file_space_threshold = 20G

# if check trunk space occupying when loading trunk free spaces
# the occupied spaces will be ignored
# default value is false
# since V3.09
# NOTICE: set this parameter to true will slow the loading of trunk spaces
# when startup. you should set this parameter to true when neccessary.
trunk_init_check_occupying = false

# if ignore storage_trunk.dat, reload from trunk binlog
# default value is false
# since V3.10
# set to true once for version upgrade when your version less than V3.10
trunk_init_reload_from_binlog = false

# the min interval for compressing the trunk binlog file
# unit: second
# default value is 0, 0 means never compress
# FastDFS compress the trunk binlog when trunk init and trunk destroy
# recommand to set this parameter to 86400 (one day)
# since V5.01
trunk_compress_binlog_min_interval = 0

# if use storage ID instead of IP address
# default value is false
# since V4.00
use_storage_id = false

# specify storage ids filename, can use relative or absolute path
# since V4.00
storage_ids_filename = storage_ids.conf

# id type of the storage server in the filename, values are:
## ip: the ip address of the storage server
## id: the server id of the storage server
# this paramter is valid only when use_storage_id set to true
# default value is ip
# since V4.03
id_type_in_filename = ip

# if store slave file use symbol link
# default value is false
# since V4.01
store_slave_file_use_link = false

# if rotate the error log every day
# default value is false
# since V4.02
rotate_error_log = false

# rotate error log time base, time format: Hour:Minute
# Hour from 0 to 23, Minute from 0 to 59
# default value is 00:00
# since V4.02
error_log_rotate_time=00:00

# rotate error log when the log file exceeds this size
# 0 means never rotates log file by log file size
# default value is 0
# since V4.02
rotate_error_log_size = 0

# keep days of the log files
# 0 means do not delete old log files
# default value is 0
log_file_keep_days = 0

# if use connection pool
# default value is false
# since V4.05
use_connection_pool = false

# connections whose the idle time exceeds this time will be closed
# unit: second
# default value is 3600
# since V4.05
connection_pool_max_idle_time = 3600

# HTTP port on this tracker server
http.server_port=8080

# check storage HTTP server alive interval seconds
# <= 0 for never check
# default value is 30
http.check_alive_interval=30

# check storage HTTP server alive type, values are:
# tcp : connect to the storge server with HTTP port only,
# do not request and get response
# http: storage check alive url must return http status 200
# default value is tcp
http.check_alive_type=tcp

# check storage HTTP server alive uri/url
# NOTE: storage embed HTTP server support uri: /status.html
http.check_alive_uri=/status.html
```

Create the storage service

PVC manifest for storage; it requests 10Gi:

```
# cat storage-pvc.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: storage-pv-claim
  labels:
    app: storage
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: "gluster-heketi-storageclass"
  resources:
    requests:
      storage: 10Gi
```

Define the storage StatefulSet:

```
# cat storage-statefulset.yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  labels:
    app: storage
  name: storage
spec:
  replicas: 1
  selector:
    matchLabels:
      app: storage
  serviceName: "storage"
  template:
    metadata:
      labels:
        app: storage
    spec:
      containers:
      - image: delron/fastdfs
        name: fastdfs
        # this container runs the storage service
        command: ["/bin/bash", "/usr/bin/start1.sh","storage"]
        # environment variables
        env:
        # tracker host:port; the DNS name follows $pod.$serviceName.$namespace
        - name: TRACKER_SERVER
          value: tracker-0.tracker.default:22122
        # storage group name
        - name: GROUP_NAME
          value: group1
        volumeMounts:
        - name: storage-persistent-storage
          mountPath: /var/fdfs
      volumes:
      - name: storage-persistent-storage
        persistentVolumeClaim:
          claimName: storage-pv-claim
```

Define the storage Service:

```
# cat storage-cluster-svc.yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    app: storage
  name: storage
spec:
  ports:
  - port: 23000
    protocol: TCP
    targetPort: 23000
  clusterIP: None
  selector:
    app: storage
  type: ClusterIP
```

Create the storage resources, PVC first:

```
kubectl apply -f storage-pvc.yaml
kubectl apply -f storage-statefulset.yaml
kubectl apply -f storage-cluster-svc.yaml
```

Check the created PVC, pod and Service:

```
# kubectl get pvc storage-pv-claim
NAME               STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS                  AGE
storage-pv-claim   Bound    pvc-3079f84e-5812-4f34-8cae-723ebaf0ae04   10Gi       RWX            gluster-heketi-storageclass   177m
[root@localhost storage]# kubectl get pod storage-0
NAME        READY   STATUS    RESTARTS   AGE
storage-0   1/1     Running   0          160m
[root@localhost storage]# kubectl get svc storage
NAME      TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)     AGE
storage   ClusterIP   None         <none>        23000/TCP   172m
```

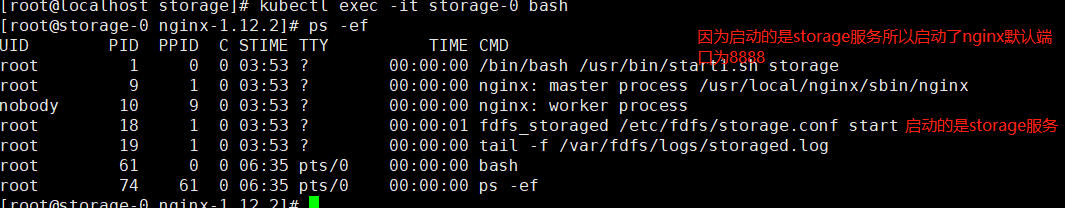

Open a shell inside the container:

```
kubectl exec -it storage-0 -- bash
```

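In the storage configuration file, the group name and tracker address differ from the defaults; they match the `GROUP_NAME` and `TRACKER_SERVER` environment variables set in the StatefulSet: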

```
# cat /etc/fdfs/storage.conf
# is this config file disabled
# false for enabled
# true for disabled
disabled=false

# the name of the group this storage server belongs to
#
# comment or remove this item for fetching from tracker server,
# in this case, use_storage_id must set to true in tracker.conf,
# and storage_ids.conf must be configed correctly.
group_name=group1

# bind an address of this host
# empty for bind all addresses of this host
bind_addr=

# if bind an address of this host when connect to other servers
# (this storage server as a client)
# true for binding the address configed by above parameter: "bind_addr"
# false for binding any address of this host
client_bind=true

# the storage server port
port=23000

# connect timeout in seconds
# default value is 30s
connect_timeout=30

# network timeout in seconds
# default value is 30s
network_timeout=60

# heart beat interval in seconds
heart_beat_interval=30

# disk usage report interval in seconds
stat_report_interval=60

# the base path to store data and log files
base_path=/var/fdfs

# max concurrent connections the server supported
# default value is 256
# more max_connections means more memory will be used
max_connections=256

# the buff size to recv / send data
# this parameter must more than 8KB
# default value is 64KB
# since V2.00
buff_size = 256KB

# accept thread count
# default value is 1
# since V4.07
accept_threads=1

# work thread count, should <= max_connections
# work thread deal network io
# default value is 4
# since V2.00
work_threads=4

# if disk read / write separated
## false for mixed read and write
## true for separated read and write
# default value is true
# since V2.00
disk_rw_separated = true

# disk reader thread count per store base path
# for mixed read / write, this parameter can be 0
# default value is 1
# since V2.00
disk_reader_threads = 1

# disk writer thread count per store base path
# for mixed read / write, this parameter can be 0
# default value is 1
# since V2.00
disk_writer_threads = 1

# when no entry to sync, try read binlog again after X milliseconds
# must > 0, default value is 200ms
sync_wait_msec=50

# after sync a file, usleep milliseconds
# 0 for sync successively (never call usleep)
sync_interval=0

# storage sync start time of a day, time format: Hour:Minute
# Hour from 0 to 23, Minute from 0 to 59
sync_start_time=00:00

# storage sync end time of a day, time format: Hour:Minute
# Hour from 0 to 23, Minute from 0 to 59
sync_end_time=23:59

# write to the mark file after sync N files
# default value is 500
write_mark_file_freq=500

# path(disk or mount point) count, default value is 1
store_path_count=1

# store_path#, based 0, if store_path0 not exists, it's value is base_path
# the paths must be exist
store_path0=/var/fdfs
#store_path1=/var/fdfs2

# subdir_count * subdir_count directories will be auto created under each
# store_path (disk), value can be 1 to 256, default value is 256
subdir_count_per_path=256

# tracker_server can ocur more than once, and tracker_server format is
# "host:port", host can be hostname or ip address
tracker_server=tracker-0.tracker.default:22122

#standard log level as syslog, case insensitive, value list:
### emerg for emergency
### alert
### crit for critical
### error
### warn for warning
### notice
### info
### debug
log_level=info

#unix group name to run this program,
#not set (empty) means run by the group of current user
run_by_group=

#unix username to run this program,
#not set (empty) means run by current user
run_by_user=

# allow_hosts can ocur more than once, host can be hostname or ip address,
# "*" (only one asterisk) means match all ip addresses
# we can use CIDR ips like 192.168.5.64/26
# and also use range like these: 10.0.1.[0-254] and host[01-08,20-25].domain.com
# for example:
# allow_hosts=10.0.1.[1-15,20]
# allow_hosts=host[01-08,20-25].domain.com
# allow_hosts=192.168.5.64/26
allow_hosts=*

# the mode of the files distributed to the data path
# 0: round robin(default)
# 1: random, distributted by hash code
file_distribute_path_mode=0

# valid when file_distribute_to_path is set to 0 (round robin),
# when the written file count reaches this number, then rotate to next path
# default value is 100
file_distribute_rotate_count=100

# call fsync to disk when write big file
# 0: never call fsync
# other: call fsync when written bytes >= this bytes
# default value is 0 (never call fsync)
fsync_after_written_bytes=0

# sync log buff to disk every interval seconds
# must > 0, default value is 10 seconds
sync_log_buff_interval=10

# sync binlog buff / cache to disk every interval seconds
# default value is 60 seconds
sync_binlog_buff_interval=10

# sync storage stat info to disk every interval seconds
# default value is 300 seconds
sync_stat_file_interval=300

# thread stack size, should >= 512KB
# default value is 512KB
thread_stack_size=512KB

# the priority as a source server for uploading file.
# the lower this value, the higher its uploading priority.
# default value is 10
upload_priority=10

# the NIC alias prefix, such as eth in Linux, you can see it by ifconfig -a
# multi aliases split by comma. empty value means auto set by OS type
# default values is empty
if_alias_prefix=

# if check file duplicate, when set to true, use FastDHT to store file indexes
# 1 or yes: need check
# 0 or no: do not check
# default value is 0
check_file_duplicate=0

# file signature method for check file duplicate
## hash: four 32 bits hash code
## md5: MD5 signature
# default value is hash
# since V4.01
file_signature_method=hash

# namespace for storing file indexes (key-value pairs)
# this item must be set when check_file_duplicate is true / on
key_namespace=FastDFS

# set keep_alive to 1 to enable persistent connection with FastDHT servers
# default value is 0 (short connection)
keep_alive=0

# you can use "#include filename" (not include double quotes) directive to
# load FastDHT server list, when the filename is a relative path such as
# pure filename, the base path is the base path of current/this config file.
# must set FastDHT server list when check_file_duplicate is true / on
# please see INSTALL of FastDHT for detail
##include /home/yuqing/fastdht/conf/fdht_servers.conf

# if log to access log
# default value is false
# since V4.00
use_access_log = false

# if rotate the access log every day
# default value is false
# since V4.00
rotate_access_log = false

# rotate access log time base, time format: Hour:Minute
# Hour from 0 to 23, Minute from 0 to 59
# default value is 00:00
# since V4.00
access_log_rotate_time=00:00

# if rotate the error log every day
# default value is false
# since V4.02
rotate_error_log = false

# rotate error log time base, time format: Hour:Minute
# Hour from 0 to 23, Minute from 0 to 59
# default value is 00:00
# since V4.02
error_log_rotate_time=00:00

# rotate access log when the log file exceeds this size
# 0 means never rotates log file by log file size
# default value is 0
# since V4.02
rotate_access_log_size = 0

# rotate error log when the log file exceeds this size
# 0 means never rotates log file by log file size
# default value is 0
# since V4.02
rotate_error_log_size = 0

# keep days of the log files
# 0 means do not delete old log files
# default value is 0
log_file_keep_days = 0

# if skip the invalid record when sync file
# default value is false
# since V4.02
file_sync_skip_invalid_record=false

# if use connection pool
# default value is false
# since V4.05
use_connection_pool = false

# connections whose the idle time exceeds this time will be closed
# unit: second
# default value is 3600
# since V4.05
connection_pool_max_idle_time = 3600

# use the ip address of this storage server if domain_name is empty,
# else this domain name will ocur in the url redirected by the tracker server
http.domain_name=

# the port of the web server on this storage server
http.server_port=8888
```

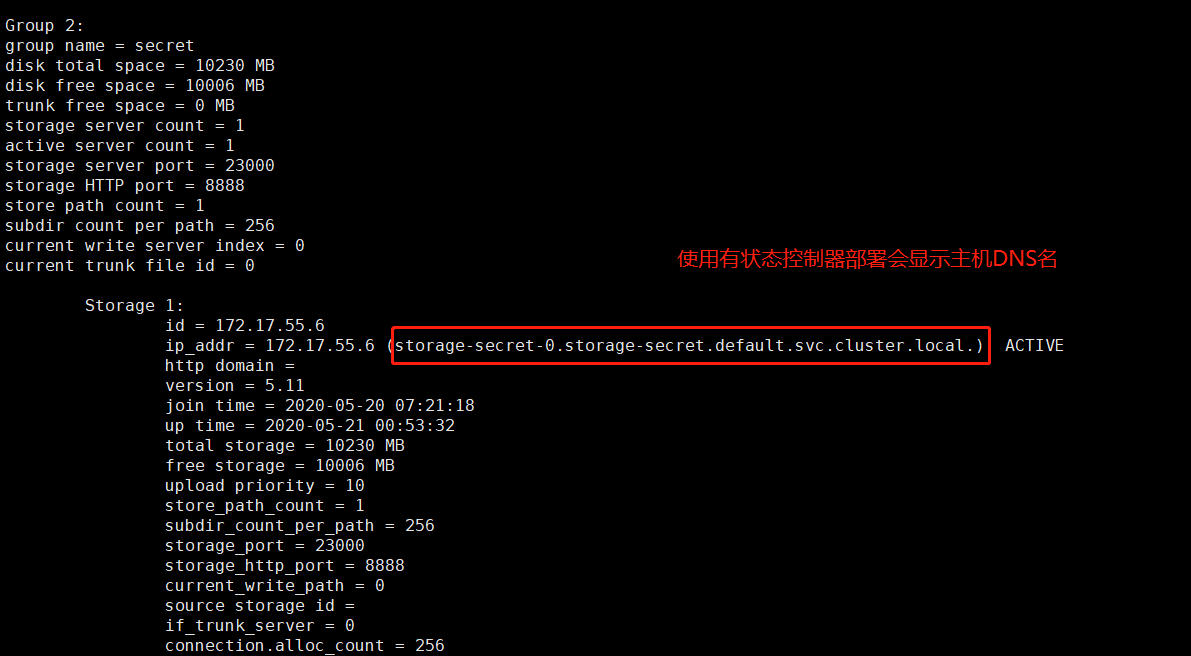

Check whether storage is communicating with the tracker:

```
# fdfs_monitor /etc/fdfs/storage.conf
[2020-05-20 06:40:55] DEBUG - base_path=/var/fdfs, connect_timeout=30, network_timeout=60, tracker_server_count=1, anti_steal_token=0, anti_steal_secret_key length=0, use_connection_pool=0, g_connection_pool_max_idle_time=3600s, use_storage_id=0, storage server id count: 0

server_count=1, server_index=0

tracker server is 172.17.55.4:22122

group count: 1

Group 1:
group name = group1
disk total space = 10230 MB
disk free space = 10006 MB
trunk free space = 0 MB
storage server count = 1
active server count = 1
storage server port = 23000
storage HTTP port = 8888
store path count = 1
subdir count per path = 256
current write server index = 0
current trunk file id = 0

    Storage 1:
        id = 172.17.55.7
        ip_addr = 172.17.55.7 (storage-0.storage.default.svc.cluster.local.)  ACTIVE
        http domain =
        version = 5.11
        join time = 2020-05-20 03:41:41
        up time = 2020-05-20 03:53:59
        total storage = 10230 MB
        free storage = 10006 MB
        upload priority = 10
        store_path_count = 1
        subdir_count_per_path = 256
        storage_port = 23000
        storage_http_port = 8888
        current_write_path = 0
        source storage id =
        if_trunk_server = 0
        connection.alloc_count = 256
        connection.current_count = 0
        connection.max_count = 2
        total_upload_count = 2
        success_upload_count = 2
        total_append_count = 0
        success_append_count = 0
        total_modify_count = 0
        success_modify_count = 0
        total_truncate_count = 0
        success_truncate_count = 0
        total_set_meta_count = 0
        success_set_meta_count = 0
        total_delete_count = 0
        success_delete_count = 0
        total_download_count = 0
        success_download_count = 0
        total_get_meta_count = 0
        success_get_meta_count = 0
        total_create_link_count = 0
        success_create_link_count = 0
        total_delete_link_count = 0
        success_delete_link_count = 0
        total_upload_bytes = 500
        success_upload_bytes = 500
        total_append_bytes = 0
        success_append_bytes = 0
        total_modify_bytes = 0
        success_modify_bytes = 0
        stotal_download_bytes = 0
        success_download_bytes = 0
        total_sync_in_bytes = 520
        success_sync_in_bytes = 0
        total_sync_out_bytes = 0
        success_sync_out_bytes = 0
        total_file_open_count = 2
        success_file_open_count = 2
        total_file_read_count = 0
        success_file_read_count = 0
        total_file_write_count = 2
        success_file_write_count = 2
        last_heart_beat_time = 2020-05-20 06:40:31
        last_source_update = 2020-05-20 03:57:21
        last_sync_update = 2020-05-20 03:58:39
        last_synced_timestamp = 1970-01-01 00:00:00
```
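To run this check from a script instead of reading the output by eye, the relevant fields can be pulled out of the `fdfs_monitor` text. A rough Python sketch, assuming the format shown above (a `group name = ...` line per group and an `ip_addr = ... <STATE>` line per storage server); the parser is hypothetical and not a FastDFS tool.

```python
import re


def storage_status(monitor_output: str) -> dict:
    """Return {group_name: [(ip, state), ...]} parsed from fdfs_monitor text."""
    result = {}
    group = None
    for line in monitor_output.splitlines():
        line = line.strip()
        m = re.match(r"group name = (\S+)", line)
        if m:
            group = m.group(1)
            result[group] = []
            continue
        # e.g. "ip_addr = 172.17.55.7 (storage-0...local.)  ACTIVE": state is the last word
        m = re.match(r"ip_addr = (\S+).*?\s([A-Z_]+)$", line)
        if m and group is not None:
            result[group].append((m.group(1), m.group(2)))
    return result


sample = """Group 1:
group name = group1
storage server count = 1
    Storage 1:
        id = 172.17.55.7
        ip_addr = 172.17.55.7 (storage-0.storage.default.svc.cluster.local.)  ACTIVE
"""
print(storage_status(sample))  # {'group1': [('172.17.55.7', 'ACTIVE')]}
```

A healthy setup is one where every expected group appears and each of its servers reports `ACTIVE`.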

File upload test:

```
# fdfs_upload_file /etc/fdfs/client.conf test.txt
group1/M00/00/00/rBE3B17E0VKAXzfOAAAABelE2rQ036.txt
```

nginx listens on port 8888 by default, so include the port in the URL:

```
# curl 127.0.0.1:8888/group1/M00/00/00/rBE3B17E0VKAXzfOAAAABelE2rQ036.txt
test
```

The image ships with the fastdfs-nginx-module module built in, so no extra configuration is needed:

```
# /usr/local/nginx/sbin/nginx -s reload
ngx_http_fastdfs_set pid=88
```

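Running multiple storage services

In production you will usually run several storage services. As an example, the following starts a second one for the secret group.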

Create the PVC manifest, requesting 10Gi:

```
# cat secret-pvc.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: storage-secret-pv-claim
  labels:
    app: storage-secret
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: "gluster-heketi-storageclass"
  resources:
    requests:
      storage: 10Gi
```

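Create a StatefulSet whose environment variables point to the tracker server and set the group name to `secret`. It also mounts `nginx.conf` and `mod_fastdfs.conf` from ConfigMaps using `subPath`, so only those two files are replaced inside the container: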

```
# cat secret-statefulset.yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  labels:
    app: storage-secret
  name: storage-secret
spec:
  replicas: 1
  selector:
    matchLabels:
      app: storage-secret
  serviceName: "storage-secret"
  template:
    metadata:
      labels:
        app: storage-secret
    spec:
      containers:
      - image: delron/fastdfs
        name: fastdfs
        command: ["/bin/bash", "/usr/bin/start1.sh","storage"]
        env:
        - name: TRACKER_SERVER
          value: tracker-0.tracker.default:22122
        - name: GROUP_NAME
          value: secret
        volumeMounts:
        - name: storage-secret-persistent-storage
          mountPath: /var/fdfs
        - name: storage-secret-nginx-config
          mountPath: /usr/local/nginx/conf/nginx.conf
          subPath: nginx.conf
        - name: storage-secret-mod-config
          mountPath: /etc/fdfs/mod_fastdfs.conf
          subPath: mod_fastdfs.conf
      volumes:
      - name: storage-secret-persistent-storage
        persistentVolumeClaim:
          claimName: storage-secret-pv-claim
      - name: storage-secret-nginx-config
        configMap:
          name: storage-secret-nginx-config
      - name: storage-secret-mod-config
        configMap:
          name: storage-secret-mod-config
```

Create the Service manifest for the secret group:

```yaml
# cat secret-cluster-svc.yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    app: storage-secret
  name: storage-secret
spec:
  ports:
  - port: 23000
    protocol: TCP
    targetPort: 23000
  clusterIP: None
  selector:
    app: storage-secret
```
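`clusterIP: None` makes this a headless Service. Combined with the StatefulSet's `serviceName`, it gives each pod a stable DNS name of the form `<statefulset>-<ordinal>.<service>.<namespace>`. This is why `tracker-0.tracker.default` works as the tracker address.

The bash helper below builds such names. The optional fully qualified form assumes the default cluster domain `cluster.local`, and `sts_pod_dns` is a hypothetical name.

```bash
#!/usr/bin/env bash
# sts_pod_dns: stable DNS name of a StatefulSet pod behind a headless Service.
# Usage: sts_pod_dns <statefulset> <ordinal> <service> [namespace] [--fqdn]
# Assumption: the cluster domain is the default "cluster.local".
sts_pod_dns() {
  local sts="$1" ord="$2" svc="$3" ns="${4:-default}" fqdn="$5"
  local name="${sts}-${ord}.${svc}.${ns}"
  if [ "$fqdn" = "--fqdn" ]; then
    name="${name}.svc.cluster.local"
  fi
  printf '%s\n' "$name"
}
```

For example, `sts_pod_dns storage-secret 0 storage-secret` prints `storage-secret-0.storage-secret.default`, the name a reverse proxy in the same namespace can use to reach this storage pod.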

Create an nginx ConfigMap. The image's default nginx configuration serves the group paths, so the secret group needs its own nginx configuration:

```nginx
# cat nginx.conf
worker_processes  1;
events {
    worker_connections  1024;
}
http {
    include       mime.types;
    default_type  application/octet-stream;
    sendfile        on;
    keepalive_timeout  65;
    server {
        listen       8888;
        server_name  localhost;
        location ~/secret/M00 {
            root /var/fdfs/data;
            ngx_fastdfs_module;
        }
        error_page   500 502 503 504  /50x.html;
        location = /50x.html {
            root   html;
        }
    }
}
```
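Every additional group needs the same change, with a different name in the `location` regex. Below is a minimal bash sketch that prints the location block for any group name. It assumes the `/var/fdfs/data` root shown above and that only store path `M00` is used. `render_fdfs_location` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# render_fdfs_location: print the nginx location block that hands
# /<group>/M00 requests to ngx_fastdfs_module (same shape as the config above).
# Usage: render_fdfs_location <group> [root]
render_fdfs_location() {
  local group="$1" root="${2:-/var/fdfs/data}"
  cat <<EOF
        location ~/${group}/M00 {
            root ${root};
            ngx_fastdfs_module;
        }
EOF
}
```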

Create the ConfigMap holding the secret group's nginx configuration:

```bash
kubectl create configmap storage-secret-nginx-config --from-file=nginx.conf
```

Check the ConfigMap:

```bash
kubectl describe configmap storage-secret-nginx-config
```

Likewise, create a mod_fastdfs configuration file, changing the tracker address and the group name (to secret) in the default file:

```
# cat mod_fastdfs.conf
# connect timeout in seconds
# default value is 30s
connect_timeout=10

# network recv and send timeout in seconds
# default value is 30s
network_timeout=30

# the base path to store log files
base_path=/tmp

# if load FastDFS parameters from tracker server
# since V1.12
# default value is false
load_fdfs_parameters_from_tracker=true

# storage sync file max delay seconds
# same as tracker.conf
# valid only when load_fdfs_parameters_from_tracker is false
# since V1.12
# default value is 86400 seconds (one day)
storage_sync_file_max_delay = 86400

# if use storage ID instead of IP address
# same as tracker.conf
# valid only when load_fdfs_parameters_from_tracker is false
# default value is false
# since V1.13
use_storage_id = false

# specify storage ids filename, can use relative or absolute path
# same as tracker.conf
# valid only when load_fdfs_parameters_from_tracker is false
# since V1.13
storage_ids_filename = storage_ids.conf

# FastDFS tracker_server can ocur more than once, and tracker_server format is
#  "host:port", host can be hostname or ip address
# valid only when load_fdfs_parameters_from_tracker is true
tracker_server=tracker-0.tracker.default:22122

# the port of the local storage server
# the default value is 23000
storage_server_port=23000

# the group name of the local storage server
group_name=secret

# if the url / uri including the group name
# set to false when uri like /M00/00/00/xxx
# set to true when uri like ${group_name}/M00/00/00/xxx, such as group1/M00/xxx
# default value is false
url_have_group_name = true

# path(disk or mount point) count, default value is 1
# must same as storage.conf
store_path_count=1

# store_path#, based 0, if store_path0=/var/fdfs
# the paths must be exist
# must same as storage.conf
store_path0=/var/fdfs
#store_path1=/home/yuqing/fastdfs1

# standard log level as syslog, case insensitive, value list:
### emerg for emergency
### alert
### crit for critical
### error
### warn for warning
### notice
### info
### debug
log_level=info

# set the log filename, such as /usr/local/apache2/logs/mod_fastdfs.log
# empty for output to stderr (apache and nginx error_log file)
log_filename=

# response mode when the file not exist in the local file system
## proxy: get the content from other storage server, then send to client
## redirect: redirect to the original storage server (HTTP Header is Location)
response_mode=proxy

# the NIC alias prefix, such as eth in Linux, you can see it by ifconfig -a
# multi aliases split by comma. empty value means auto set by OS type
# this paramter used to get all ip address of the local host
# default values is empty
if_alias_prefix=

# use "#include" directive to include HTTP config file
# NOTE: #include is an include directive, do NOT remove the # before include
#include http.conf

# if support flv
# default value is false
# since v1.15
flv_support = true

# flv file extension name
# default value is flv
# since v1.15
flv_extension = flv

# set the group count
# set to none zero to support multi-group on this storage server
# set to 0  for single group only
# groups settings section as [group1], [group2], ..., [groupN]
# default value is 0
# since v1.14
group_count = 0

# group settings for group #1
# since v1.14
# when support multi-group on this storage server, uncomment following section
#[group1]
#group_name=group1
#storage_server_port=23000
#store_path_count=2
#store_path0=/var/fdfs
#store_path1=/home/yuqing/fastdfs1

# group settings for group #2
# since v1.14
# when support multi-group, uncomment following section as neccessary
#[group2]
#group_name=group2
#storage_server_port=23000
#store_path_count=1
#store_path0=/var/fdfs
```
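Only `tracker_server` and `group_name` need to change from the default file. That means the file above can also be produced from the default with two substitutions instead of hand editing. Below is a minimal bash sketch using `sed`. The helper name `patch_mod_conf` is hypothetical, and copying the default file out of the container is left to you.

The patterns are anchored to the start of the line, so commented lines such as `#group_name=group1` in the multi-group section stay untouched.

```bash
#!/usr/bin/env bash
# patch_mod_conf: rewrite tracker_server and group_name in a mod_fastdfs.conf
# read from stdin; every other line is passed through unchanged.
# Only uncommented "key=value" lines at the start of a line are matched.
# Usage: patch_mod_conf <tracker host:port> <group> < default.conf > mod_fastdfs.conf
patch_mod_conf() {
  local tracker="$1" group="$2"
  sed -e "s|^tracker_server=.*|tracker_server=${tracker}|" \
      -e "s|^group_name=.*|group_name=${group}|"
}
```

For example: `patch_mod_conf tracker-0.tracker.default:22122 secret < mod_fastdfs.conf.default > mod_fastdfs.conf`.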

Create the ConfigMap for the mod configuration:

```bash
kubectl create configmap storage-secret-mod-config --from-file=mod_fastdfs.conf
```

Apply the manifests:

```bash
kubectl apply -f secret-pvc.yaml
kubectl apply -f secret-statefulset.yaml
kubectl apply -f secret-cluster-svc.yaml
```

Check the created PVC, Pod, and Service:

```
# kubectl get pvc storage-secret-pv-claim
NAME                      STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS                  AGE
storage-secret-pv-claim   Bound    pvc-e6adeeeb-d0e4-478a-9528-f1366788d20b   10Gi       RWX            gluster-heketi-storageclass   47h
[root@localhost secret]# kubectl get pod storage-secret-0
NAME               READY   STATUS    RESTARTS   AGE
storage-secret-0   1/1     Running   0          29h
[root@localhost secret]# kubectl get svc storage-secret
NAME             TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)     AGE
storage-secret   ClusterIP   None         <none>        23000/TCP   47h
```
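In a script it is easier to check the STATUS column than to read it by eye. Below is a minimal bash sketch that reads `kubectl get` output on stdin and prints the STATUS value for one row.

- It locates the column by its header name, so it works for the pod table and, here, for the PVC table as well.
- It assumes the default table layout shown above.
- `kube_status` is a hypothetical helper.

For anything more robust, `kubectl get pod <name> -o jsonpath='{.status.phase}'` avoids table parsing altogether.

```bash
#!/usr/bin/env bash
# kube_status: print the STATUS column for row <name> from `kubectl get` output on stdin.
# The column is found by its header name rather than a fixed position.
# Exits non-zero if no row with that name is found.
kube_status() {
  awk -v name="$1" '
    NR == 1 { for (i = 1; i <= NF; i++) if ($i == "STATUS") col = i; next }
    $1 == name && col { print $col; found = 1 }
    END { exit found ? 0 : 1 }'
}
```

For example: `kubectl get pod storage-secret-0 | kube_status storage-secret-0`.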

Open a shell in the container:

```bash
kubectl exec -it storage-secret-0 -- bash
```

Check that the storage server can communicate with the tracker:

```bash
fdfs_monitor /etc/fdfs/storage.conf
```

Upload test. Pass the storage IP and port explicitly. Otherwise the file is uploaded to group1 by default:

```
# fdfs_upload_file /etc/fdfs/client.conf test.txt 127.0.0.1:23000
secret/M00/00/00/rBE3Bl7Hc2KAUsleAAAABjh0DAo567.txt
```
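An upload without the storage address may land in a different group, so a script can check the group prefix of the returned file ID before using it. Below is a minimal bash sketch. `expect_group` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# expect_group: succeed only if the FastDFS file ID in $1 belongs to group $2;
# otherwise print a message to stderr and return 1.
expect_group() {
  local id="$1" want="$2"
  local got="${id%%/*}"   # group = text before the first "/"
  if [ "$got" != "$want" ]; then
    echo "uploaded to group '$got', expected '$want': $id" >&2
    return 1
  fi
}
```

Combined with the upload command above: `id=$(fdfs_upload_file /etc/fdfs/client.conf test.txt 127.0.0.1:23000) && expect_group "$id" secret`.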

Access test (the default port is 8888):

```bash
curl 127.0.0.1:8888/secret/M00/00/00/rBE3Bl7Hc-WAKwdZAAAABjh0DAo598.txt
```

Creating an nginx reverse proxy for the tracker and storage services

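In production the storage servers act as backends, and an nginx instance can serve as a reverse proxy in front of both the tracker and the storage servers.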

The nginx configuration file:

```nginx
# cat nginx.conf
user  root;
worker_processes  4;
#error_log  logs/error.log info;
events {
    worker_connections  1024;
    use epoll;
}
http {
    include       mime.types;
    default_type  application/octet-stream;
    sendfile        on;
    tcp_nopush      on;
    keepalive_timeout  65;
    server_names_hash_bucket_size 128;
    client_header_buffer_size 32k;
    large_client_header_buffers 4 32k;
    client_max_body_size 300m;
    proxy_redirect off;
    proxy_set_header Host $http_host;
    proxy_set_header X-Real-IP $remote_addr;
    proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    proxy_connect_timeout 90;
    proxy_send_timeout 90;
    proxy_read_timeout 90;
    proxy_buffer_size 16k;
    proxy_buffers 4 64k;
    proxy_busy_buffers_size 128k;
    proxy_temp_file_write_size 128k;
    log_format  main  '$remote_addr - $remote_user [$time_local] "$request" '
                      '$status $body_bytes_sent "$http_referer" '
                      '"$http_user_agent" "$http_x_forwarded_for"';
    #include ./conf.d/*.conf;
    #upstream start
    upstream fdfs_group1 {
        server storage-0.storage.default:8888 weight=1 max_fails=2 fail_timeout=60s;
    }
    upstream secret {
        server storage-secret-0.storage-secret.default:8888 weight=1 max_fails=2 fail_timeout=60s;
    }
    upstream public {
        server 172.16.20.228:8888 weight=1 max_fails=2 fail_timeout=60s;
    }
    #upstream end
    #server start
    server {
        listen       80;
        server_name  localhost;
        access_log  /var/log/access.log  main;
        error_log   /var/log/error.log  info;
        # "^(.+)" only matches a non-empty ?attname=..., so plain requests
        # are not forced into a download ("^(.*).*" also matches an empty value)
        location ~ /group1/ {
            if ($arg_attname ~ "^(.+)") {
                add_header Content-Disposition "attachment;filename=$arg_attname";
            }
            proxy_next_upstream http_502 http_504 error timeout invalid_header;
            proxy_pass http://fdfs_group1;
        }
        location ~ /secret/ {
            if ($arg_attname ~ "^(.+)") {
                add_header Content-Disposition "attachment;filename=$arg_attname";
            }
            proxy_next_upstream http_502 http_504 error timeout invalid_header;
            proxy_pass http://secret;
        }
        location /public/ {
            if ($arg_attname ~ "^(.+)") {
                add_header Content-Disposition "attachment;filename=$arg_attname";
            }
            proxy_next_upstream http_502 http_504 error timeout invalid_header;
            proxy_pass http://public;
        }
    }
    #server end
}
# The tracker speaks FastDFS's binary TCP protocol, not HTTP,
# so port 22122 is proxied from the stream context instead of http
stream {
    upstream tracker {
        server tracker-0.tracker.default:22122 weight=1 max_fails=2 fail_timeout=60s;
    }
    server {
        listen 22122;
        proxy_pass tracker;
    }
}
```
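In the `location` blocks above, an `attname` query parameter makes nginx add a `Content-Disposition: attachment` header, so the browser downloads the file under that name. The name should be percent-encoded before it goes into the URL.

Below is a minimal bash (4+) sketch.

- The helper names are hypothetical.
- The proxy address passed in is only an example.
- `$arg_attname` is the raw, still-encoded query value, so how a non-ASCII name is finally displayed depends on the browser.

```bash
#!/usr/bin/env bash
# urlencode: percent-encode every byte except RFC 3986 unreserved characters.
# Works byte by byte via od, so UTF-8 file names are encoded correctly.
urlencode() {
  local b out=""
  for b in $(printf '%s' "$1" | od -An -v -tx1); do
    case "$b" in
      3[0-9]|4[1-9a-f]|5[0-9a]|6[1-9a-f]|7[0-9a]|2d|2e|5f|7e)
        out+=$(printf "\\x$b") ;;     # 0-9 A-Z a-z - . _ ~ : keep as-is
      *)
        out+="%${b^^}" ;;             # everything else: %XX (uppercase hex)
    esac
  done
  printf '%s\n' "$out"
}

# download_url: URL asking the proxy to serve <file_id> as an attachment.
# Usage: download_url <proxy host[:port]> <file_id> <attachment name>
download_url() {
  printf 'http://%s/%s?attname=%s\n' "$1" "$2" "$(urlencode "$3")"
}
```

For example, `download_url 172.16.20.227 group1/M00/00/00/a.txt 'a b.txt'` prints `http://172.16.20.227/group1/M00/00/00/a.txt?attname=a%20b.txt`.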

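Note: the reverse-proxy configuration must not include ./conf.d/*.conf, otherwise requests return 404. The nginx image's default conf.d/default.conf defines another server on port 80 with server_name localhost, and that server then handles the requests instead.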

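Create the ConfigMap for this nginx configuration. Its name must match the track-nginx-config ConfigMap referenced by the Deployment below, because storage-secret-nginx-config is already used by the secret storage:

```bash
kubectl create configmap track-nginx-config --from-file=nginx.conf
```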

The nginx Deployment manifest, which mounts the nginx configuration:

```yaml
# cat tracker-nginx-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: track-nginx
  name: track-nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: track-nginx
  template:
    metadata:
      labels:
        app: track-nginx
    spec:
      containers:
      - image: nginx:1.14.0
        name: nginx
        volumeMounts:
        - name: track-nginx-config
          mountPath: /etc/nginx/nginx.conf
          subPath: nginx.conf
      volumes:
      - name: track-nginx-config
        configMap:
          name: track-nginx-config
```

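The nginx Service manifest. Files from the storage (group1) and secret groups are reverse-proxied and served on port 80, and the clusterIP is fixed.

Port 22122 of the tracker service is exposed as well.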

```yaml
# cat tracker-nginx-svc.yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    app: track-nginx
  name: track-nginx
spec:
  ports:
  - port: 80
    name: tracker-nginx
    protocol: TCP
    targetPort: 80
  - port: 22122
    name: tracker-22122
    protocol: TCP
    targetPort: 22122
  clusterIP: 172.16.20.227
  selector:
    app: track-nginx
```
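A fixed `clusterIP` is only accepted if it lies inside the cluster's service CIDR (the API server's `--service-cluster-ip-range`) and is not already allocated. Below is a quick bash pre-check for the first condition. The CIDR in the usage line is only an example, so substitute your cluster's actual range. The helper names are hypothetical.

```bash
#!/usr/bin/env bash
# ip_to_int: dotted IPv4 address -> 32-bit integer
ip_to_int() {
  local IFS=.
  local -a o=($1)
  echo $(( (o[0] << 24) | (o[1] << 16) | (o[2] << 8) | o[3] ))
}

# ip_in_cidr: succeed if IPv4 address $1 lies inside CIDR $2 (e.g. 10.96.0.0/12)
ip_in_cidr() {
  local net="${2%/*}" bits="${2#*/}"
  local mask=$(( bits == 0 ? 0 : (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$1") & mask) == ($(ip_to_int "$net") & mask) ))
}
```

For example: `ip_in_cidr 172.16.20.227 172.16.0.0/16 && echo "clusterIP is inside the range"`.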

Apply:

```bash
kubectl apply -f tracker-nginx-deployment.yaml
kubectl apply -f tracker-nginx-svc.yaml
```

Check:

```
# kubectl get svc track-nginx
NAME          TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)            AGE
track-nginx   ClusterIP   172.16.20.227   <none>        80/TCP,22122/TCP   23h
[root@localhost tracker]# kubectl get pod track-nginx-76b8468787-w5hrq
NAME                           READY   STATUS    RESTARTS   AGE
track-nginx-76b8468787-w5hrq   1/1     Running   0          5h8m
```

After uploading a file from the storage container, access it through the reverse proxy's IP:

```
# upload
# fdfs_upload_file /etc/fdfs/client.conf test.txt
group1/M00/00/00/rBE3B17HfaSAeJY3AAAABelE2rQ954.txt
# access
# curl 172.16.20.227/group1/M00/00/00/rBE3B17HfaSAeJY3AAAABelE2rQ954.txt
test
```

Upload from the secret storage container and access the file through the proxy:

```
# fdfs_upload_file /etc/fdfs/client.conf test.txt 127.0.0.1:23000
secret/M00/00/00/rBE3Bl7HfiCAQLUKAAAABumtiSc944.txt
# curl 172.16.20.227/secret/M00/00/00/rBE3Bl7HfiCAQLUKAAAABumtiSc944.txt
test2
```
