Non-relational databases: MongoDB

Lesson 36: Non-relational databases: MongoDB

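Contents

24. Introduction to MongoDB

25. Installing MongoDB

26. Connecting to MongoDB

27. MongoDB user management

28. Creating collections and managing data in MongoDB

29. The mongodb extension for PHP

30. The mongo extension for PHP

31. Introduction to MongoDB replica sets

32. Setting up a MongoDB replica set

33. Testing the MongoDB replica set

34. Introduction to MongoDB sharding

35. Setting up MongoDB sharding

36. Testing MongoDB sharding

37. MongoDB backup and restore

38. Further reading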

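## 24. Introduction to MongoDB

Official site: www.mongodb.com. As of 26 August 2018, the latest release is 4.0.1.

MongoDB is written in C++, designed for distributed deployment, and classed as a NoSQL database.

Among NoSQL databases, it is the one that most resembles a relational database.

MongoDB stores data as documents whose structure is made up of key-value (key => value) pairs. A MongoDB document resembles a JSON object. Field values can contain other documents, arrays, and arrays of documents.

About JSON: http://www.w3school.com.cn/json/index.asp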
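To make the document model concrete, here is a minimal sketch of one document written as a plain JavaScript object. The field names and values are hypothetical and chosen only to show an embedded document, an array, and an array of documents.

```javascript
// A hypothetical "account" document, written as a plain JavaScript object.
const account = {
  AccountID: 1,
  UserName: "alice",
  address: { city: "Shanghai", zip: "200000" }, // embedded document
  tags: ["admin", "beta"],                      // array
  logins: [                                     // array of documents
    { ip: "192.168.1.47", ok: true },
    { ip: "192.168.1.48", ok: false },
  ],
};

// Fields are reached by key; nested fields by chaining keys.
console.log(account.address.city);
console.log(account.logins.length);

// Serialized, the document looks like ordinary JSON.
console.log(JSON.stringify(account, null, 2));
```

The same object literal could be passed to an insert call in the mongo shell, since the shell is itself a JavaScript interpreter.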

Because it is distributed by design, it is easy to scale out.

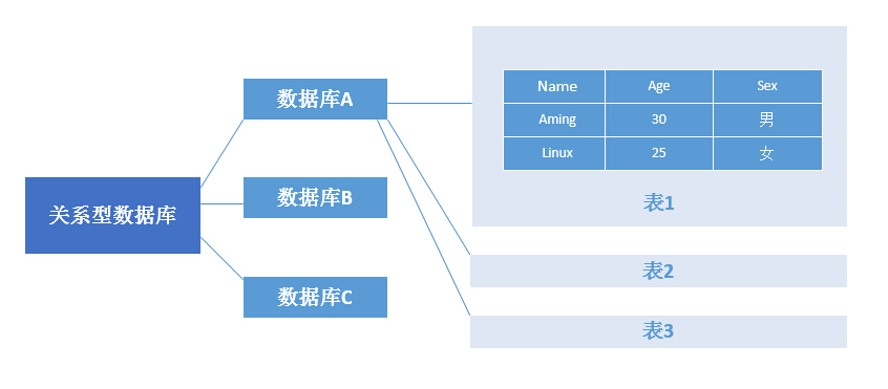

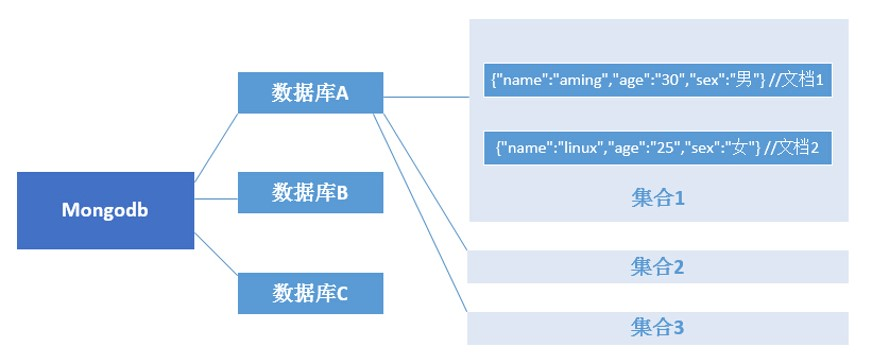

MongoDB compared with relational databases

Data structure of a relational database

Data structure of a MongoDB database

## 25. Installing MongoDB

Official installation guide: https://docs.mongodb.com/manual/tutorial/install-mongodb-on-red-hat/

1. Create the yum repository

[root@mangodbserver1 ~]# vim /etc/yum.repos.d/mongodb-org-4.0.repo

[mongodb-org-4.0]

name=MongoDB Repository

baseurl=https://repo.mongodb.org/yum/redhat/$releasever/mongodb-org/4.0/x86_64/

gpgcheck=1

enabled=1

gpgkey=https://www.mongodb.org/static/pgp/server-4.0.asc

2. List the available packages

[root@mangodbserver1 ~]# yum list |grep mongodb

collectd-write_mongodb.x86_64 5.8.0-4.el7 epel

mongodb.x86_64 2.6.12-6.el7 epel

mongodb-org.x86_64 4.0.1-1.el7 mongodb-org-4.0

mongodb-org-mongos.x86_64 4.0.1-1.el7 mongodb-org-4.0

mongodb-org-server.x86_64 4.0.1-1.el7 mongodb-org-4.0

mongodb-org-shell.x86_64 4.0.1-1.el7 mongodb-org-4.0

mongodb-org-tools.x86_64 4.0.1-1.el7 mongodb-org-4.0

mongodb-server.x86_64 2.6.12-6.el7 epel

mongodb-test.x86_64 2.6.12-6.el7 epel

nodejs-mongodb.noarch 1.4.7-1.el7 epel

php-mongodb.noarch 1.0.4-1.el7 epel

php-pecl-mongodb.x86_64 1.1.10-1.el7 epel

poco-mongodb.x86_64 1.6.1-3.el7 epel

syslog-ng-mongodb.x86_64 3.5.6-3.el7 epel

3. Install mongodb-org

[root@mangodbserver1 ~]# yum -y install mongodb-org

Loaded plugins: fastestmirror

Loading mirror speeds from cached hostfile

* base: mirrors.aliyun.com

* epel: mirrors.tongji.edu.cn

* extras: mirrors.aliyun.com

* updates: mirrors.163.com

Resolving Dependencies

...output omitted...

Installed:

mongodb-org.x86_64 0:4.0.1-1.el7

Dependency Installed:

mongodb-org-mongos.x86_64 0:4.0.1-1.el7 mongodb-org-server.x86_64 0:4.0.1-1.el7 mongodb-org-shell.x86_64 0:4.0.1-1.el7 mongodb-org-tools.x86_64 0:4.0.1-1.el7

Complete!

## 26. Connecting to MongoDB

1. Start MongoDB

[root@mangodbserver1 ~]# systemctl start mongod.service

[root@mangodbserver1 ~]# systemctl enable mongod.service

[root@mangodbserver1 ~]# lsof -i :27017

COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME

mongod 1782 mongod 11u IPv4 22968 0t0 TCP localhost:27017 (LISTEN)

2. Connect

# On the local machine, run the mongo command to enter the MongoDB shell

[root@mangodbserver1 ~]# mongo

MongoDB shell version v4.0.1

connecting to: mongodb://127.0.0.1:27017

MongoDB server version: 4.0.1

Welcome to the MongoDB shell.

For interactive help, type "help".

For more comprehensive documentation, see

http://docs.mongodb.org/

Questions? Try the support group

http://groups.google.com/group/mongodb-user

Server has startup warnings:

2018-08-26T14:01:51.415+0800 I CONTROL [initandlisten]

2018-08-26T14:01:51.415+0800 I CONTROL [initandlisten] ** WARNING: Access control is not enabled for the database.

2018-08-26T14:01:51.415+0800 I CONTROL [initandlisten] ** Read and write access to data and configuration is unrestricted.

2018-08-26T14:01:51.415+0800 I CONTROL [initandlisten]

---

Enable MongoDB's free cloud-based monitoring service, which will then receive and display

metrics about your deployment (disk utilization, CPU, operation statistics, etc).

The monitoring data will be available on a MongoDB website with a unique URL accessible to you

and anyone you share the URL with. MongoDB may use this information to make product

improvements and to suggest MongoDB products and deployment options to you.

To enable free monitoring, run the following command: db.enableFreeMonitoring()

To permanently disable this reminder, run the following command: db.disableFreeMonitoring()

---

>

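# If mongod listens on a port other than the default 27017, add the --port option when connecting, for example

# mongo --port 27018

# To connect to a remote MongoDB instance, add --host, for example

# mongo --host 192.168.1.47

# If authentication is enabled, supply a username and password when connecting (similar to the MySQL client)

# mongo -u username -p passwd --authenticationDatabase db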
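The same connection details can also be expressed as a single `mongodb://` connection URI, which is what the shell prints on its "connecting to:" line. The sketch below assembles such a URI from host, port, and credentials. `buildMongoUri` and its option names are hypothetical helpers for illustration; `authSource` is the URI option that corresponds to `--authenticationDatabase`.

```javascript
// Build a MongoDB connection URI from the pieces the mongo shell flags carry.
// buildMongoUri and its option names are hypothetical, for illustration only.
function buildMongoUri({ host = "127.0.0.1", port = 27017, user, password, authDb } = {}) {
  // Credentials must be percent-encoded inside a URI.
  const credentials = user
    ? `${encodeURIComponent(user)}:${encodeURIComponent(password ?? "")}@`
    : "";
  // authSource names the database that holds the user (--authenticationDatabase).
  const query = user && authDb ? `/?authSource=${encodeURIComponent(authDb)}` : "";
  return `mongodb://${credentials}${host}:${port}${query}`;
}

console.log(buildMongoUri());                         // local default port
console.log(buildMongoUri({ port: 27018 }));          // like --port 27018
console.log(buildMongoUri({ host: "192.168.1.47" })); // like --host 192.168.1.47
console.log(buildMongoUri({ user: "username", password: "passwd", authDb: "db" }));
```

A URI built this way can be given to the `mongo` command as its first argument in place of the separate flags.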

## 27. MongoDB user management

Common operations

# Switch to the admin database first

> use admin

switched to db admin

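# Create the user admin: user is the username, customData is an optional description field, pwd is the password, and roles lists the user's roles, where db names the database each role applies to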

> db.createUser( { user: "admin", customData: {description: "superuser"}, pwd: "admin122", roles: [ { role: "root", db: "admin" } ] } )

Successfully added user: {

"user" : "admin",

"customData" : {

"description" : "superuser"

},

"roles" : [

{

"role" : "root",

"db" : "admin"

}

]

}

# List all users (requires switching to the admin database)

> db.system.users.find()

{ "_id" : "admin.admin", "user" : "admin", "db" : "admin", "credentials" : { "SCRAM-SHA-1" : { "iterationCount" : 10000, "salt" : "t3C0r5eRm8qrPrdyQwPGRw==", "storedKey" : "2FNCyDURiJKU6LnC8QvRVtUcO00=", "serverKey" : "1eyFpJkirCnUwvFTj7sLeucNZ5Q=" }, "SCRAM-SHA-256" : { "iterationCount" : 15000, "salt" : "h58+0R+lhBUZFMMRqjwRYcnOPyoCl62xb0gg5g==", "storedKey" : "G9gV0/k0nQ+KjBE/12qvtjhGNiFPBy6RRSolPZmVkNo=", "serverKey" : "/Vh31wMqLZkuxPh3zNL6QQLTfGlUcxqZx8fk1GRRugY=" } }, "customData" : { "description" : "superuser" }, "roles" : [ { "role" : "root", "db" : "admin" } ] }

# Show all users defined in the current database

> show users

{

"_id" : "admin.admin",

"user" : "admin",

"db" : "admin",

"customData" : {

"description" : "superuser"

},

"roles" : [

{

"role" : "root",

"db" : "admin"

}

],

"mechanisms" : [

"SCRAM-SHA-1",

"SCRAM-SHA-256"

]

}

# Create the user kennminn

> db.createUser({user: "kennminn", pwd: "123456", roles:[{role: "read", db: "test"}]})

Successfully added user: {

"user" : "kennminn",

"roles" : [

{

"role" : "read",

"db" : "test"

}

]

}

# Delete the user kennminn

> db.dropUser('kennminn')

true

# For the users to take effect, authentication must be enabled: edit the unit file /usr/lib/systemd/system/mongod.service and add --auth after OPTIONS=

[root@mangodbserver1 ~]# vim /usr/lib/systemd/system/mongod.service

# Change this line to enable authentication

Environment="OPTIONS=--auth -f /etc/mongod.conf"

[root@mangodbserver1 ~]# systemctl restart mongod.service

Warning: mongod.service changed on disk. Run 'systemctl daemon-reload' to reload units.

[root@mangodbserver1 ~]# systemctl daemon-reload

[root@mangodbserver1 ~]# systemctl restart mongod.service

# Without authenticating, the query fails

[root@mangodbserver1 ~]# mongo

MongoDB shell version v4.0.1

connecting to: mongodb://127.0.0.1:27017

MongoDB server version: 4.0.1

> use admin

switched to db admin

> show users

2018-08-26T16:52:37.729+0800 E QUERY [js] Error: command usersInfo requires authentication :

_getErrorWithCode@src/mongo/shell/utils.js:25:13

DB.prototype.getUsers@src/mongo/shell/db.js:1757:1

shellHelper.show@src/mongo/shell/utils.js:859:9

shellHelper@src/mongo/shell/utils.js:766:15

@(shellhelp2):1:1

# Authenticate

[root@mangodbserver1 ~]# mongo -u "admin" -p "admin122" --authenticationDatabase "admin"

MongoDB shell version v4.0.1

connecting to: mongodb://127.0.0.1:27017

MongoDB server version: 4.0.1

---

Enable MongoDB's free cloud-based monitoring service, which will then receive and display

metrics about your deployment (disk utilization, CPU, operation statistics, etc).

The monitoring data will be available on a MongoDB website with a unique URL accessible to you

and anyone you share the URL with. MongoDB may use this information to make product

improvements and to suggest MongoDB products and deployment options to you.

To enable free monitoring, run the following command: db.enableFreeMonitoring()

To permanently disable this reminder, run the following command: db.disableFreeMonitoring()

---

> use admin

switched to db admin

> show users

{

"_id" : "admin.admin",

"user" : "admin",

"db" : "admin",

"customData" : {

"description" : "superuser"

},

"roles" : [

{

"role" : "root",

"db" : "admin"

}

],

"mechanisms" : [

"SCRAM-SHA-1",

"SCRAM-SHA-256"

]

}

# In db1, create the user test1 with read-write access to db1 and read-only access to db2.

> use db1

switched to db db1

> db.createUser( { user: "test1", pwd: "123aaa", roles: [ { role: "readWrite", db: "db1" }, {role: "read", db: "db2" } ] } )

Successfully added user: {

"user" : "test1",

"roles" : [

{

"role" : "readWrite",

"db" : "db1"

},

{

"role" : "read",

"db" : "db2"

}

]

}

> show users

{

"_id" : "db1.test1",

"user" : "test1",

"db" : "db1",

"roles" : [

{

"role" : "readWrite",

"db" : "db1"

},

{

"role" : "read",

"db" : "db2"

}

],

"mechanisms" : [

"SCRAM-SHA-1",

"SCRAM-SHA-256"

]

}

> use db2

switched to db db2

> show users

> db.auth("test1", "123aaa")

Error: Authentication failed.

0

> use db1

switched to db db1

> db.auth("test1", "123aaa")

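MongoDB user roles

read: allows the user to read the specified database

readWrite: allows the user to read and write the specified database

dbAdmin: allows the user to run administrative functions in the specified database, such as creating and dropping indexes, viewing statistics, or accessing system.profile

userAdmin: allows the user to write to the system.users collection, and to create, delete, and manage users in the specified database

clusterAdmin: available only in the admin database; grants administrative rights over all sharding and replica set functions

readAnyDatabase: available only in the admin database; grants read access to all databases

readWriteAnyDatabase: available only in the admin database; grants read-write access to all databases

userAdminAnyDatabase: available only in the admin database; grants userAdmin rights on all databases

dbAdminAnyDatabase: available only in the admin database; grants dbAdmin rights on all databases

root: available only in the admin database; the superuser account, with full privileges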
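The rule that some roles exist only in the admin database is easy to get wrong when writing a `roles` array for `db.createUser()`. The following sketch encodes the list above as a lookup table and checks a roles array against it. `ROLES` and `checkRoles` are hypothetical names; the table covers only the roles listed here, not every built-in MongoDB role, and the check is an illustration rather than anything the server runs.

```javascript
// Roles from the list above; adminOnly marks those available only in the admin database.
const ROLES = new Map([
  ["read", { adminOnly: false }],
  ["readWrite", { adminOnly: false }],
  ["dbAdmin", { adminOnly: false }],
  ["userAdmin", { adminOnly: false }],
  ["clusterAdmin", { adminOnly: true }],
  ["readAnyDatabase", { adminOnly: true }],
  ["readWriteAnyDatabase", { adminOnly: true }],
  ["userAdminAnyDatabase", { adminOnly: true }],
  ["dbAdminAnyDatabase", { adminOnly: true }],
  ["root", { adminOnly: true }],
]);

// checkRoles (hypothetical helper) returns a list of problems found in a
// roles array shaped like the one passed to db.createUser().
function checkRoles(roles) {
  const problems = [];
  for (const { role, db } of roles) {
    const info = ROLES.get(role);
    if (info === undefined) {
      problems.push(`${role}: not in the list above`);
    } else if (info.adminOnly && db !== "admin") {
      problems.push(`${role}: only available in the admin database, got ${db}`);
    }
  }
  return problems;
}

// The test1 user from this section passes; root on db1 does not.
console.log(checkRoles([{ role: "readWrite", db: "db1" }, { role: "read", db: "db2" }]));
console.log(checkRoles([{ role: "root", db: "db1" }]));
```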

MongoDB database management

# Show the version

> db.version()

4.0.1

# Switch to the database if it exists; if it does not, it is created once data is stored in it

> use db1

switched to db db1

# List databases; db1 is still empty (no collections yet), so it does not appear

> show dbs

admin 0.000GB

config 0.000GB

local 0.000GB

# Create a collection

> db.createCollection('clo1')

{ "ok" : 1 }

> show dbs

admin 0.000GB

config 0.000GB

db1 0.000GB

local 0.000GB

# Drop the current database; to drop a database you must first switch to it

> use userdb

switched to db userdb

> db.createCollection('clo1')

{ "ok" : 1 }

> db.dropDatabase()

{ "dropped" : "userdb", "ok" : 1 }

# Check the status of the MongoDB server

> db.serverStatus()

{

"host" : "mangodbserver1",

"version" : "4.0.1",

"process" : "mongod",

"pid" : NumberLong(2968),

"uptime" : 2383,

"uptimeMillis" : NumberLong(2382444),

"uptimeEstimate" : NumberLong(2382),

"localTime" : ISODate("2018-08-26T09:39:29.055Z"),

"asserts" : {

...remainder omitted...

},

"ttl" : {

"deletedDocuments" : NumberLong(0),

"passes" : NumberLong(39)

}

},

"ok" : 1

}

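## 28. Creating collections and managing data in MongoDB

1. Create a collection

# Syntax: db.createCollection(name, options)

# name is the collection name; options is optional and configures the collection with the parameters below

# capped true/false (optional) If true, creates a capped collection. A capped collection has a fixed size; once it reaches its maximum size, it automatically overwrites the oldest entries. If you specify true, you must also specify the size parameter.

# autoIndexId true/false (optional) If true, an index is created automatically on the _id field. Deprecated since MongoDB 3.2; the _id index is created by default.

# size (optional) Maximum size of the capped collection, in bytes. Required when capped is true.

# max (optional) Maximum number of documents allowed in the capped collection.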

> db.createCollection("mycol", { capped : true, size : 6142800, max : 10000 } )

{ "ok" : 1 }
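To illustrate what "overwrites the oldest entries" means in practice, here is a small in-memory sketch of the behaviour. `CappedList` is a hypothetical stand-in, not MongoDB's implementation, and it models only the `max` document limit, not the byte `size` limit.

```javascript
// CappedList is a hypothetical in-memory stand-in for a capped collection.
// It models only the "max" document limit, not the byte "size" limit.
class CappedList {
  constructor(max) {
    this.max = max;
    this.docs = [];
  }

  insert(doc) {
    this.docs.push(doc);
    // Once the limit is exceeded, discard the oldest entry.
    if (this.docs.length > this.max) {
      this.docs.shift();
    }
  }

  find() {
    return this.docs.slice(); // a copy, in insertion order
  }
}

const log = new CappedList(3);
for (let i = 1; i <= 5; i++) {
  log.insert({ seq: i });
}

// Only the three newest documents remain: seq 3, 4, 5.
console.log(log.find().map((d) => d.seq));
```

This first-in, first-out behaviour is why capped collections suit logs and similar rolling data.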

2. Manage data

# List collections; show tables works as well

> show collections

clo1

mycol

> show tables

clo1

mycol

# If the collection does not exist, inserting data creates it automatically

> db.Account.insert({AccountID:1,UserName:"123",password:"123456"})

WriteResult({ "nInserted" : 1 })

# Update

> db.Account.update({AccountID:1},{"$set":{"Age":20}})

WriteResult({ "nMatched" : 1, "nUpserted" : 0, "nModified" : 1 })

# Show all documents

> db.Account.find()

{ "_id" : ObjectId("5b828f3d727dfa33d5561c62"), "AccountID" : 1, "UserName" : "123", "password" : "123456", "Age" : 20 }

# Query by condition

> db.Account.find({AccountID:1})

{ "_id" : ObjectId("5b828f3d727dfa33d5561c62"), "AccountID" : 1, "UserName" : "123", "password" : "123456", "Age" : 20 }

# Delete by condition

> db.Account.remove({AccountID:1})

WriteResult({ "nRemoved" : 1 })

> db.Account.find()

# Delete all documents by dropping the collection

> db.Account.drop()

true

# First switch to the relevant database

> use db1

switched to db db1

# Then view the collection statistics

> db.printCollectionStats()

clo1

{

"ns" : "db1.clo1",

"size" : 0,

"count" : 0,

"storageSize" : 4096,

"capped" : false,

"wiredTiger" : {

"metadata" : {

"formatVersion" : 1

...output omitted...

"nindexes" : 1,

"totalIndexSize" : 4096,

"indexSizes" : {

"_id_" : 4096

},

"ok" : 1

}

---

## 29. The mongodb extension for PHP

Installation

[root@mangodbserver1 ~]# cd /usr/local/src/

[root@mangodbserver1 src]# git clone https://github.com/mongodb/mongo-php-driver

Cloning into 'mongo-php-driver'...

remote: Counting objects: 22561, done.

remote: Compressing objects: 100% (2/2), done.

remote: Total 22561 (delta 0), reused 2 (delta 0), pack-reused 22559

Receiving objects: 100% (22561/22561), 6.64 MiB | 2.12 MiB/s, done.

Resolving deltas: 100% (17804/17804), done.

[root@mangodbserver1 src]# cd mongo-php-driver

[root@mangodbserver1 mongo-php-driver]# git submodule update --init

Submodule 'src/libmongoc' (https://github.com/mongodb/mongo-c-driver.git) registered for path 'src/libmongoc'

Cloning into 'src/libmongoc'...

remote: Counting objects: 104584, done.

remote: Compressing objects: 100% (524/524), done.

remote: Total 104584 (delta 266), reused 240 (delta 138), pack-reused 103918

Receiving objects: 100% (104584/104584), 51.46 MiB | 4.84 MiB/s, done.

Resolving deltas: 100% (91157/91157), done.

Submodule path 'src/libmongoc': checked out 'a690091bae086f267791bd2227400f2035de99e8'

[root@mangodbserver1 mongo-php-driver]# /usr/local/php-fpm/bin/php

php php-cgi php-config phpize

[root@mangodbserver1 mongo-php-driver]# /usr/local/php-fpm/bin/phpize

Configuring for:

PHP Api Version: 20131106

Zend Module Api No: 20131226

Zend Extension Api No: 220131226

[root@mangodbserver1 mongo-php-driver]# ./configure --with-php-config=/usr/local/php-fpm/bin/php-config

checking for grep that handles long lines and -e... /usr/bin/grep

checking for egrep... /usr/bin/grep -E

checking for a sed that does not truncate output... /usr/bin/sed

...output omitted...

config.status: creating /usr/local/src/mongo-php-driver/src/libmongoc/src/libbson/src/bson/bson-config.h

config.status: creating /usr/local/src/mongo-php-driver/src/libmongoc/src/libbson/src/bson/bson-version.h

config.status: creating /usr/local/src/mongo-php-driver/src/libmongoc/src/libmongoc/src/mongoc/mongoc-config.h

config.status: creating /usr/local/src/mongo-php-driver/src/libmongoc/src/libmongoc/src/mongoc/mongoc-version.h

config.status: creating config.h

[root@mangodbserver1 mongo-php-driver]# make && make install

/bin/sh /usr/local/src/mongo-php-driver/libtool --mode=compile cc -DBSON_COMPILATION -DMONGOC_COMPILATION -pthread

...output omitted...

Build complete.

Don't forget to run 'make test'.

Installing shared extensions: /usr/local/php-fpm/lib/php/extensions/no-debug-non-zts-20131226/

[root@mangodbserver1 mongo-php-driver]# vim /usr/local/php-fpm/etc/php.ini

# Add the following line

extension = mongodb.so

[root@mangodbserver1 mongo-php-driver]# /usr/local/php-fpm/sbin/php-fpm -m | grep mongo

mongodb

[root@mangodbserver1 mongo-php-driver]# /etc/init.d/php-fpm restart

Gracefully shutting down php-fpm . done

Starting php-fpm done

## 30. The mongo extension for PHP

[root@mangodbserver1 mongo-php-driver]# cd /usr/local/src/

[root@mangodbserver1 src]# wget https://pecl.php.net/get/mongo-1.6.16.tgz

--2018-08-26 20:58:55-- https://pecl.php.net/get/mongo-1.6.16.tgz

Resolving pecl.php.net (pecl.php.net)... 104.236.228.160

...output omitted...

2018-08-26 20:58:59 (140 KB/s) - ‘mongo-1.6.16.tgz’ saved [210341/210341]

[root@mangodbserver1 src]# tar -zxvf mongo-1.6.16.tgz

[root@mangodbserver1 src]# cd mongo-1.6.16/

[root@mangodbserver1 mongo-1.6.16]# /usr/local/php-fpm/bin/phpize

Configuring for:

PHP Api Version: 20131106

Zend Module Api No: 20131226

Zend Extension Api No: 220131226

[root@mangodbserver1 mongo-1.6.16]# ./configure --with-php-config=/usr/local/php-fpm/bin/php-config

checking for grep that handles long lines and -e... /usr/bin/grep

checking for egrep... /usr/bin/grep -E

checking for a sed that does not truncate output... /usr/bin/sed

...output omitted...

creating libtool

appending configuration tag "CXX" to libtool

configure: creating ./config.status

config.status: creating config.h

[root@mangodbserver1 mongo-1.6.16]# make && make install

/bin/sh /usr/local/src/mongo-1.6.16/libtool --mode=compile cc -I./util -I. -I/usr/local/src/mongo-1.6.16 -DPHP_ATOM_INC -I/usr/local/src/mongo-1.6.16/include -I/usr/local/src/mongo-1.6.16/main -I/usr/local/src/mongo-1.6.16 -I/usr/local/php-fpm/include/php -I/usr/local/php-fpm/include/php/main -I/usr/local/php-fpm/include/php/TSRM -I/usr/local/php-fpm/include/php/Zend -I/usr/local/php-fpm/include/php/ext -I/usr/local/php-fpm/include/php/ext/date/lib -I/usr/local/src/mongo-1.6.16/api -I/usr/local/src/mongo-1.6.16/util -I/usr/local/src/mongo-1.6.16/exceptions -I/usr/local/src/mongo-1.6.16/gridfs -I/usr/local/src/mongo-1.6.16/types -I/usr/local/src/mongo-1.6.16/batch -I/usr/local/src/mongo-1.6.16/contrib -I/usr/local/src/mongo-1.6.16/mcon -I/usr/local/src/mongo-1.6.16/mcon/contrib -DHAVE_CONFIG_H -g -O2 -c /usr/local/src/mongo-1.6.16/php_mongo.c -o php_mongo.lo

...output omitted...

Build complete.

Don't forget to run 'make test'.

Installing shared extensions: /usr/local/php-fpm/lib/php/extensions/no-debug-non-zts-20131226/

[root@mangodbserver1 mongo-1.6.16]# vim /usr/local/php-fpm/etc/php.ini

# Add the following line

extension = mongo.so

[root@mangodbserver1 mongo-1.6.16]# /usr/local/php-fpm/sbin/php-fpm -m | grep mon

mongo

mongodb

[root@mangodbserver1 mongo-1.6.16]# /etc/init.d/php-fpm restart

Gracefully shutting down php-fpm . done

Starting php-fpm done

Testing the mongo extension

Reference documentation: https://docs.mongodb.com/ecosystem/drivers/php/

http://www.runoob.com/mongodb/mongodb-php.html

# Create a test page

[root@mangodbserver1 mongo-1.6.16]# vim /usr/local/nginx/html/1.php

<?php

$m = new MongoClient();

$db = $m->test;

$collection = $db->createCollection("runoob");

echo "Collection created successfully";

?>

[root@mangodbserver1 mongo-1.6.16]# curl localhost/1.php

Collection created successfully[root@mangodbserver1 mongo-1.6.16]#

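## 31. Introduction to MongoDB replica sets

Early versions used master-slave replication, with one master and one slave, much like MySQL. In that architecture the slave was read-only, and when the master went down the slave could not automatically take over as master.

The master-slave mode has since been retired in favour of replica sets. A replica set has one primary and multiple secondaries, which are read-only. Members can be assigned priorities (weights); when the primary goes down, the secondary with the highest priority takes over as primary.

This architecture can also include an arbiter role, which only takes part in elections and stores no data.

In this architecture both reads and writes go to the primary by default; to balance load, the client must explicitly specify which server to read from.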
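The failover rule described above can be sketched as a small function. This is a deliberately simplified model: `pickPrimary` and the member names are hypothetical, and a real election also depends on a majority of votes and on how up to date each member's data is. The member fields (`priority`, `health`, `arbiterOnly`) mirror those shown by `rs.conf()` and `rs.status()`.

```javascript
// pickPrimary is a hypothetical, simplified model of failover: among reachable,
// data-bearing members it returns the host with the highest priority.
function pickPrimary(members) {
  const candidates = members.filter(
    (m) => m.health === 1 && !m.arbiterOnly && m.priority > 0
  );
  if (candidates.length === 0) {
    return null; // nobody is eligible
  }
  return candidates.reduce((best, m) => (m.priority > best.priority ? m : best)).host;
}

// Hypothetical three-member set with an extra arbiter.
const members = [
  { host: "node-a:27017", priority: 3, health: 1, arbiterOnly: false },
  { host: "node-b:27017", priority: 2, health: 1, arbiterOnly: false },
  { host: "node-c:27017", priority: 1, health: 1, arbiterOnly: false },
  { host: "node-d:27017", priority: 0, health: 1, arbiterOnly: true },
];

console.log(pickPrimary(members)); // node-a:27017

members[0].health = 0;             // simulate node-a going down
console.log(pickPrimary(members)); // node-b:27017

members[0].health = 1;             // node-a comes back
console.log(pickPrimary(members)); // node-a:27017
```

The arbiter never appears as a candidate, which matches its role of voting without holding data.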

## 32. Setting up a MongoDB replica set

Environment:

Three machines running CentOS Linux release 7.5.1804 (Core)

mongodbserver1: 192.168.1.47

mongodbserver2: 192.168.1.48

mongodbserver3: 192.168.1.49

1. Edit the configuration file on each of the three servers

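net:

  port: 27017

  # Also bind this machine's own IP (192.168.1.49 on mongodbserver3)

  bindIp: 127.0.0.1,192.168.1.49

# Uncomment the replication line and add the two lines beneath it

replication:

  oplogSizeMB: 20

  replSetName: rs0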

[root@mongodbserver3 ~]# systemctl start mongod.service

[root@mongodbserver3 ~]# netstat -nltup | grep mongo

tcp 0 0 192.168.1.49:27017 0.0.0.0:* LISTEN 2073/mongod

tcp 0 0 127.0.0.1:27017 0.0.0.0:* LISTEN 2073/mongod

2. Initialize the replica set

[root@mongodbserver1 ~]# mongo

> config={_id:"rs0",members:[{_id:0,host:"192.168.1.47:27017"},{_id:1,host:"192.168.1.48:27017"},{_id:2,host:"192.168.1.49:27017"}]}

{

"_id" : "rs0",

"members" : [

{

"_id" : 0,

"host" : "192.168.1.47:27017"

},

{

"_id" : 1,

"host" : "192.168.1.48:27017"

},

{

"_id" : 2,

"host" : "192.168.1.49:27017"

}

]

}

> rs.initiate(config)

{

"ok" : 1,

"operationTime" : Timestamp(1535293700, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1535293700, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

rs0:PRIMARY> rs.status()

{

"set" : "rs0",

"date" : ISODate("2018-08-26T14:31:21.930Z"),

"myState" : 1,

"term" : NumberLong(1),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"heartbeatIntervalMillis" : NumberLong(2000),

"optimes" : {

"lastCommittedOpTime" : {

"ts" : Timestamp(1535293872, 1),

"t" : NumberLong(1)

},

"readConcernMajorityOpTime" : {

"ts" : Timestamp(1535293872, 1),

"t" : NumberLong(1)

},

"appliedOpTime" : {

"ts" : Timestamp(1535293872, 1),

"t" : NumberLong(1)

},

"durableOpTime" : {

"ts" : Timestamp(1535293872, 1),

"t" : NumberLong(1)

}

},

"lastStableCheckpointTimestamp" : Timestamp(1535293832, 1),

"members" : [

{

"_id" : 0,

"name" : "192.168.1.47:27017",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY",

"uptime" : 1166,

"optime" : {

"ts" : Timestamp(1535293872, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2018-08-26T14:31:12Z"),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "",

"electionTime" : Timestamp(1535293711, 1),

"electionDate" : ISODate("2018-08-26T14:28:31Z"),

"configVersion" : 1,

"self" : true,

"lastHeartbeatMessage" : ""

},

{

"_id" : 1,

"name" : "192.168.1.48:27017",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 181,

"optime" : {

"ts" : Timestamp(1535293872, 1),

"t" : NumberLong(1)

},

"optimeDurable" : {

"ts" : Timestamp(1535293872, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2018-08-26T14:31:12Z"),

"optimeDurableDate" : ISODate("2018-08-26T14:31:12Z"),

"lastHeartbeat" : ISODate("2018-08-26T14:31:21.381Z"),

"lastHeartbeatRecv" : ISODate("2018-08-26T14:31:21.417Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "",

"syncingTo" : "192.168.1.47:27017",

"syncSourceHost" : "192.168.1.47:27017",

"syncSourceId" : 0,

"infoMessage" : "",

"configVersion" : 1

},

{

"_id" : 2,

"name" : "192.168.1.49:27017",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 181,

"optime" : {

"ts" : Timestamp(1535293872, 1),

"t" : NumberLong(1)

},

"optimeDurable" : {

"ts" : Timestamp(1535293872, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2018-08-26T14:31:12Z"),

"optimeDurableDate" : ISODate("2018-08-26T14:31:12Z"),

"lastHeartbeat" : ISODate("2018-08-26T14:31:21.381Z"),

"lastHeartbeatRecv" : ISODate("2018-08-26T14:31:21.548Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "",

"syncingTo" : "192.168.1.47:27017",

"syncSourceHost" : "192.168.1.47:27017",

"syncSourceId" : 0,

"infoMessage" : "",

"configVersion" : 1

}

],

"ok" : 1,

"operationTime" : Timestamp(1535293872, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1535293872, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

rs0:PRIMARY>

## 33. Testing the MongoDB replica set

# On the primary

rs0:PRIMARY> use mydb

switched to db mydb

rs0:PRIMARY> db.acc.insert({AccountID:1,UserName:"123",password:"123456"})

WriteResult({ "nInserted" : 1 })

rs0:PRIMARY> show dbs

admin 0.000GB

config 0.000GB

db1 0.000GB

local 0.000GB

mydb 0.000GB

# On a secondary

[root@mongodbserver2 ~]# mongo

rs0:SECONDARY> show dbs

2018-08-26T22:45:06.594+0800 E QUERY [js] Error: listDatabases failed:{

"operationTime" : Timestamp(1535294702, 1),

"ok" : 0,

"errmsg" : "not master and slaveOk=false",

"code" : 13435,

"codeName" : "NotMasterNoSlaveOk",

"$clusterTime" : {

"clusterTime" : Timestamp(1535294702, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

} :

_getErrorWithCode@src/mongo/shell/utils.js:25:13

Mongo.prototype.getDBs@src/mongo/shell/mongo.js:67:1

shellHelper.show@src/mongo/shell/utils.js:876:19

shellHelper@src/mongo/shell/utils.js:766:15

@(shellhelp2):1:1

rs0:SECONDARY> rs.slaveOk()

rs0:SECONDARY> show dbs

admin 0.000GB

config 0.000GB

db1 0.000GB

local 0.000GB

mydb 0.000GB

rs0:SECONDARY> use mydb

switched to db mydb

rs0:SECONDARY> show tables

acc

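Changing priorities in the replica set and simulating a primary failure

# View the current priority of the three hosts; each starts with a priority of 1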

rs0:PRIMARY> rs.conf()

{

"_id" : "rs0",

"version" : 1,

"protocolVersion" : NumberLong(1),

"writeConcernMajorityJournalDefault" : true,

"members" : [

{

"_id" : 0,

"host" : "192.168.1.47:27017",

"arbiterOnly" : false,

"buildIndexes" : true,

"hidden" : false,

"priority" : 1,

"tags" : {

},

"slaveDelay" : NumberLong(0),

"votes" : 1

},

{

"_id" : 1,

"host" : "192.168.1.48:27017",

"arbiterOnly" : false,

"buildIndexes" : true,

"hidden" : false,

"priority" : 1,

"tags" : {

},

"slaveDelay" : NumberLong(0),

"votes" : 1

},

{

"_id" : 2,

"host" : "192.168.1.49:27017",

"arbiterOnly" : false,

"buildIndexes" : true,

"hidden" : false,

"priority" : 1,

"tags" : {

},

"slaveDelay" : NumberLong(0),

"votes" : 1

}

],

"settings" : {

"chainingAllowed" : true,

"heartbeatIntervalMillis" : 2000,

"heartbeatTimeoutSecs" : 10,

"electionTimeoutMillis" : 10000,

"catchUpTimeoutMillis" : -1,

"catchUpTakeoverDelayMillis" : 30000,

"getLastErrorModes" : {

},

"getLastErrorDefaults" : {

"w" : 1,

"wtimeout" : 0

},

"replicaSetId" : ObjectId("5b82b904244cfb9393d33a63")

}

}

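# Set the priorities. Load the current configuration into cfg first (the command echoes the configuration shown above)

rs0:PRIMARY> cfg = rs.conf()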

rs0:PRIMARY> cfg.members[0].priority = 3

3

rs0:PRIMARY> cfg.members[1].priority = 2

2

rs0:PRIMARY> cfg.members[2].priority = 1

1

rs0:PRIMARY> rs.reconfig(cfg)

{

"ok" : 1,

"operationTime" : Timestamp(1535296028, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1535296028, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

# View the new priorities; the second node (192.168.1.48) is now the preferred candidate to take over if the primary fails.

rs0:PRIMARY> rs.conf()

{

"_id" : "rs0",

"version" : 2,

"protocolVersion" : NumberLong(1),

"writeConcernMajorityJournalDefault" : true,

"members" : [

{

"_id" : 0,

"host" : "192.168.1.47:27017",

"arbiterOnly" : false,

"buildIndexes" : true,

"hidden" : false,

"priority" : 3,

"tags" : {

},

"slaveDelay" : NumberLong(0),

"votes" : 1

},

{

"_id" : 1,

"host" : "192.168.1.48:27017",

"arbiterOnly" : false,

"buildIndexes" : true,

"hidden" : false,

"priority" : 2,

"tags" : {

},

"slaveDelay" : NumberLong(0),

"votes" : 1

},

{

"_id" : 2,

"host" : "192.168.1.49:27017",

"arbiterOnly" : false,

"buildIndexes" : true,

"hidden" : false,

"priority" : 1,

"tags" : {

},

"slaveDelay" : NumberLong(0),

"votes" : 1

}

],

"settings" : {

"chainingAllowed" : true,

"heartbeatIntervalMillis" : 2000,

"heartbeatTimeoutSecs" : 10,

"electionTimeoutMillis" : 10000,

"catchUpTimeoutMillis" : -1,

"catchUpTakeoverDelayMillis" : 30000,

"getLastErrorModes" : {

},

"getLastErrorDefaults" : {

"w" : 1,

"wtimeout" : 0

},

"replicaSetId" : ObjectId("5b82b904244cfb9393d33a63")

}

}

rs0:PRIMARY>

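# Simulate a primary failure by disconnecting the primary's network interface

# Checking from 192.168.1.48, the former primary 192.168.1.47 now shows "stateStr" : "(not reachable/healthy)",

# and 192.168.1.48 has become the primary.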

rs0:PRIMARY> rs.status()

{

"set" : "rs0",

"date" : ISODate("2018-08-26T15:11:07.305Z"),

"myState" : 1,

"term" : NumberLong(2),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"heartbeatIntervalMillis" : NumberLong(2000),

"optimes" : {

"lastCommittedOpTime" : {

"ts" : Timestamp(1535296259, 1),

"t" : NumberLong(2)

},

"readConcernMajorityOpTime" : {

"ts" : Timestamp(1535296259, 1),

"t" : NumberLong(2)

},

"appliedOpTime" : {

"ts" : Timestamp(1535296259, 1),

"t" : NumberLong(2)

},

"durableOpTime" : {

"ts" : Timestamp(1535296259, 1),

"t" : NumberLong(2)

}

},

"lastStableCheckpointTimestamp" : Timestamp(1535296229, 1),

"members" : [

{

"_id" : 0,

"name" : "192.168.1.47:27017",

"health" : 0,

"state" : 8,

"stateStr" : "(not reachable/healthy)",

"uptime" : 0,

"optime" : {

"ts" : Timestamp(0, 0),

"t" : NumberLong(-1)

},

"optimeDurable" : {

"ts" : Timestamp(0, 0),

"t" : NumberLong(-1)

},

"optimeDate" : ISODate("1970-01-01T00:00:00Z"),

"optimeDurableDate" : ISODate("1970-01-01T00:00:00Z"),

"lastHeartbeat" : ISODate("2018-08-26T15:11:04.624Z"),

"lastHeartbeatRecv" : ISODate("2018-08-26T15:09:36.925Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "Error connecting to 192.168.1.47:27017 :: caused by :: No route to host",

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "",

"configVersion" : -1

},

{

"_id" : 1,

"name" : "192.168.1.48:27017",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY",

"uptime" : 3505,

"optime" : {

"ts" : Timestamp(1535296259, 1),

"t" : NumberLong(2)

},

"optimeDate" : ISODate("2018-08-26T15:10:59Z"),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "",

"electionTime" : Timestamp(1535296187, 1),

"electionDate" : ISODate("2018-08-26T15:09:47Z"),

"configVersion" : 2,

"self" : true,

"lastHeartbeatMessage" : ""

},

{

"_id" : 2,

"name" : "192.168.1.49:27017",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 2565,

"optime" : {

"ts" : Timestamp(1535296259, 1),

"t" : NumberLong(2)

},

"optimeDurable" : {

"ts" : Timestamp(1535296259, 1),

"t" : NumberLong(2)

},

"optimeDate" : ISODate("2018-08-26T15:10:59Z"),

"optimeDurableDate" : ISODate("2018-08-26T15:10:59Z"),

"lastHeartbeat" : ISODate("2018-08-26T15:11:05.393Z"),

"lastHeartbeatRecv" : ISODate("2018-08-26T15:11:07.196Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "",

"syncingTo" : "192.168.1.48:27017",

"syncSourceHost" : "192.168.1.48:27017",

"syncSourceId" : 1,

"infoMessage" : "",

"configVersion" : 2

}

],

"ok" : 1,

"operationTime" : Timestamp(1535296259, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1535296259, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

rs0:PRIMARY>

# Reconnect 192.168.1.47 to the network and check the status: 192.168.1.47 has become the primary again

rs0:PRIMARY> rs.status()

{

"set" : "rs0",

"date" : ISODate("2018-08-26T15:16:21.215Z"),

"myState" : 1,

"term" : NumberLong(3),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"heartbeatIntervalMillis" : NumberLong(2000),

"optimes" : {

"lastCommittedOpTime" : {

"ts" : Timestamp(1535296574, 1),

"t" : NumberLong(3)

},

"readConcernMajorityOpTime" : {

"ts" : Timestamp(1535296574, 1),

"t" : NumberLong(3)

},

"appliedOpTime" : {

"ts" : Timestamp(1535296574, 1),

"t" : NumberLong(3)

},

"durableOpTime" : {

"ts" : Timestamp(1535296574, 1),

"t" : NumberLong(3)

}

},

"lastStableCheckpointTimestamp" : Timestamp(1535296534, 1),

"members" : [

{

"_id" : 0,

"name" : "192.168.1.47:27017",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY",

"uptime" : 3866,

"optime" : {

"ts" : Timestamp(1535296574, 1),

"t" : NumberLong(3)

},

"optimeDate" : ISODate("2018-08-26T15:16:14Z"),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "",

"electionTime" : Timestamp(1535296432, 1),

"electionDate" : ISODate("2018-08-26T15:13:52Z"),

"configVersion" : 2,

"self" : true,

"lastHeartbeatMessage" : ""

},

{

"_id" : 1,

"name" : "192.168.1.48:27017",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 159,

"optime" : {

"ts" : Timestamp(1535296574, 1),

"t" : NumberLong(3)

},

"optimeDurable" : {

"ts" : Timestamp(1535296574, 1),

"t" : NumberLong(3)

},

"optimeDate" : ISODate("2018-08-26T15:16:14Z"),

"optimeDurableDate" : ISODate("2018-08-26T15:16:14Z"),

"lastHeartbeat" : ISODate("2018-08-26T15:16:20.414Z"),

"lastHeartbeatRecv" : ISODate("2018-08-26T15:16:19.382Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "",

"syncingTo" : "192.168.1.47:27017",

"syncSourceHost" : "192.168.1.47:27017",

"syncSourceId" : 0,

"infoMessage" : "",

"configVersion" : 2

},

{

"_id" : 2,

"name" : "192.168.1.49:27017",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 159,

"optime" : {

"ts" : Timestamp(1535296574, 1),

"t" : NumberLong(3)

},

"optimeDurable" : {

"ts" : Timestamp(1535296574, 1),

"t" : NumberLong(3)

},

"optimeDate" : ISODate("2018-08-26T15:16:14Z"),

"optimeDurableDate" : ISODate("2018-08-26T15:16:14Z"),

"lastHeartbeat" : ISODate("2018-08-26T15:16:20.414Z"),

"lastHeartbeatRecv" : ISODate("2018-08-26T15:16:21.156Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "",

"syncingTo" : "192.168.1.47:27017",

"syncSourceHost" : "192.168.1.47:27017",

"syncSourceId" : 0,

"infoMessage" : "",

"configVersion" : 2

}

],

"ok" : 1,

"operationTime" : Timestamp(1535296574, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1535296574, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

rs0:PRIMARY>

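## 34. Introduction to MongoDB sharding

Sharding splits a database by distributing a large collection across different servers. For example, 100 GB of data can be split into 10 parts stored on 10 servers, so that each machine holds only 10 GB.

Storage and access of sharded data go through a mongos process (the router), which makes mongos the core of the sharded architecture. Clients do not need to know whether the data is sharded; they simply send their reads and writes to mongos.

Although sharding spreads data across many servers, each node still needs a standby so that the data remains highly available.

When the system needs more space or resources, sharding lets us scale out on demand simply by adding machines running MongoDB to the sharded cluster.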

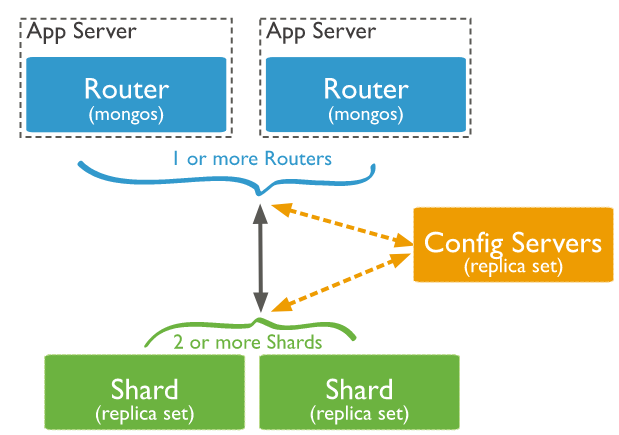

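MongoDB sharding architecture

mongos: the entry point for requests to the database cluster. All requests are coordinated through mongos, so the application does not need its own routing layer; mongos itself acts as the request dispatcher and forwards each data request to the appropriate shard server. In production there are usually multiple mongos instances serving as entry points, so that the failure of one does not leave all MongoDB requests unserviceable.

config server: stores the metadata for all databases (routing and shard configuration). mongos does not persist shard or routing information itself; it only caches it in memory, while the config servers actually store it. When mongos first starts or is restarted, it loads the configuration from the config servers; if the configuration later changes, all mongos instances are notified to update their state so that they continue to route accurately. In production there are usually multiple config servers, because they hold the shard routing metadata and its loss must be prevented.

shard: a MongoDB instance that stores a portion of a collection's data. Each shard is a standalone mongod service or a replica set; in production, every shard should be a replica set.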
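The routing role of mongos can be sketched in a few lines. This is a simplified illustration, not MongoDB's algorithm: a real cluster divides the shard key space into chunks recorded on the config servers, whereas this sketch just hashes a key and takes the remainder. The shard names, `hashKey`, and `route` are all hypothetical.

```javascript
// Hypothetical shard names; in a real cluster each would be a replica set.
const shards = ["shard1", "shard2", "shard3"];

// A small deterministic string hash (djb2-style). An illustrative stand-in,
// not the hash function MongoDB uses.
function hashKey(key) {
  let h = 5381;
  for (const ch of String(key)) {
    h = (h * 33 + ch.codePointAt(0)) >>> 0; // keep as an unsigned 32-bit value
  }
  return h;
}

// route plays the part of mongos: map a shard key value to exactly one shard.
function route(key) {
  return shards[hashKey(key) % shards.length];
}

// Count how 9000 hypothetical account IDs spread across the shards.
const counts = {};
for (let id = 1; id <= 9000; id++) {
  const shard = route(id);
  counts[shard] = (counts[shard] || 0) + 1;
}
console.log(counts);

// The same key always lands on the same shard, so reads find what writes stored.
console.log(route(42) === route(42));
```

The client only ever calls `route`; it never needs to know how many shards exist, which is the transparency the text attributes to mongos.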

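35 Building a MongoDB sharded cluster

1. Sharding setup - server planning

Three machines

mongodbserver1: 192.168.1.47 mongos, config server, replica set 1 primary, replica set 2 arbiter, replica set 3 secondary

mongodbserver2: 192.168.1.48 mongos, config server, replica set 1 secondary, replica set 2 primary, replica set 3 arbiter

mongodbserver3: 192.168.1.49 mongos, config server, replica set 1 arbiter, replica set 2 secondary, replica set 3 primary

Port allocation: mongos 20000, config server 21000, replica set 1 27001, replica set 2 27002, replica set 3 27003

On all three machines, either stop the firewalld service and disable SELinux, or add rules that allow the ports above.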
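The plan above places one arbiter on each machine, so no single host carries two arbiters. The following Python sketch encodes that plan and checks it. The helper names are invented for this example, and only "data" and "arbiter" roles are modelled, since which data-bearing member becomes primary is decided by an election.

```python
# Hosts, ports and arbiter placement copied from the server plan above.
HOSTS = ["192.168.1.47", "192.168.1.48", "192.168.1.49"]
PORTS = {"shard1": 27001, "shard2": 27002, "shard3": 27003}
ARBITER = {
    "shard1": "192.168.1.49",
    "shard2": "192.168.1.47",
    "shard3": "192.168.1.48",
}


def members(shard):
    """Return (host:port, role) pairs for one replica set (hypothetical helper)."""
    return [
        (f"{host}:{PORTS[shard]}", "arbiter" if host == ARBITER[shard] else "data")
        for host in HOSTS
    ]


def arbiters_are_spread():
    """True when every machine hosts exactly one arbiter."""
    return sorted(ARBITER.values()) == sorted(HOSTS)


if __name__ == "__main__":
    for shard in PORTS:
        print(shard, members(shard))
    print("arbiters spread over all machines:", arbiters_are_spread())
```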

2. Sharding setup - create directories

# Create the directories each role needs on all three machines

# mkdir -p /data/mongodb/mongos/log

# mkdir -p /data/mongodb/config/{data,log}

# mkdir -p /data/mongodb/shard1/{data,log}

# mkdir -p /data/mongodb/shard2/{data,log}

# mkdir -p /data/mongodb/shard3/{data,log}

[root@mongodbserver1 ~]# mkdir -p /data/mongodb/mongos/log

[root@mongodbserver1 ~]# ls -ld !$

ls -ld /data/mongodb/mongos/log

drwxr-xr-x 2 root root 6 Aug 27 00:04 /data/mongodb/mongos/log

[root@mongodbserver1 ~]# mkdir -p /data/mongodb/config/{data,log}

[root@mongodbserver1 ~]#

[root@mongodbserver1 ~]# ls -l /data/mongodb/config

total 0

drwxr-xr-x 2 root root 6 Aug 27 00:04 data

drwxr-xr-x 2 root root 6 Aug 27 00:04 log

[root@mongodbserver1 ~]# mkdir -p /data/mongodb/shard1/{data,log}

[root@mongodbserver1 ~]# ls -l /data/mongodb/shard1

total 0

drwxr-xr-x 2 root root 6 Aug 27 00:05 data

drwxr-xr-x 2 root root 6 Aug 27 00:05 log

[root@mongodbserver1 ~]# mkdir -p /data/mongodb/shard2/{data,log}

[root@mongodbserver1 ~]# mkdir -p /data/mongodb/shard3/{data,log}

[root@mongodbserver1 ~]# ls -l /data/mongodb/shard2

total 0

drwxr-xr-x 2 root root 6 Aug 27 00:05 data

drwxr-xr-x 2 root root 6 Aug 27 00:05 log

[root@mongodbserver1 ~]# ls -l /data/mongodb/shard3

total 0

drwxr-xr-x 2 root root 6 Aug 27 00:06 data

drwxr-xr-x 2 root root 6 Aug 27 00:06 log

3. Config server configuration

# Since MongoDB 3.4 the config servers must be deployed as a replica set

# Add the configuration file (on all three machines)

[root@mongodbserver3 ~]# mkdir /etc/mongod/

[root@mongodbserver3 ~]#

[root@mongodbserver3 ~]# vim /etc/mongod/config.conf

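# Contents as follows. bind_ip = 0.0.0.0 listens on all network interfaces; for better security you can bind only to the machine's own IP address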

pidfilepath = /var/run/mongodb/configsrv.pid

dbpath = /data/mongodb/config/data

logpath = /data/mongodb/config/log/configsrv.log

logappend = true

bind_ip = 0.0.0.0

port = 21000

fork = true

configsvr = true #declare this is a config db of a cluster;

replSet=configs # replica set name

maxConns=20000 # maximum number of connections

# Start the config server (on each of the three machines)

[root@mongodbserver1 ~]# mongod -f /etc/mongod/config.conf

2018-08-27T00:13:47.546+0800 I CONTROL [main] Automatically disabling TLS 1.0, to force-enable TLS 1.0 specify --sslDisabledProtocols 'none'

about to fork child process, waiting until server is ready for connections.

forked process: 41218

child process started successfully, parent exiting

# Log in to port 21000 on any one of the machines and initiate the replica set

[root@mongodbserver1 ~]# mongo --port 21000

> config = { _id: "configs", members: [ {_id : 0, host : "192.168.1.47:21000"},{_id : 1, host : "192.168.1.48:21000"},{_id : 2, host : "192.168.1.49:21000"}] }

{

"_id" : "configs",

"members" : [

{

"_id" : 0,

"host" : "192.168.1.47:21000"

},

{

"_id" : 1,

"host" : "192.168.1.48:21000"

},

{

"_id" : 2,

"host" : "192.168.1.49:21000"

}

]

}

> rs.initiate(config)

{

"ok" : 1,

"operationTime" : Timestamp(1535300396, 1),

"$gleStats" : {

"lastOpTime" : Timestamp(1535300396, 1),

"electionId" : ObjectId("000000000000000000000000")

},

"lastCommittedOpTime" : Timestamp(0, 0),

"$clusterTime" : {

"clusterTime" : Timestamp(1535300396, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

configs:PRIMARY>

4. Shard configuration

# Create the following configuration files on mongodbserver1, mongodbserver2 and mongodbserver3

[root@mongodbserver1 mongod]# cat /etc/mongod/shard1.conf

pidfilepath = /var/run/mongodb/shard1.pid

dbpath = /data/mongodb/shard1/data

logpath = /data/mongodb/shard1/log/shard1.log

logappend = true

bind_ip = 0.0.0.0

port = 27001

fork = true

oplogSize = 4096

journal = true

quiet = true

replSet=shard1 # replica set name

shardsvr = true #declare this is a shard db of a cluster;

maxConns=20000 # maximum number of connections

[root@mongodbserver1 mongod]# cat /etc/mongod/shard2.conf

pidfilepath = /var/run/mongodb/shard2.pid

dbpath = /data/mongodb/shard2/data

logpath = /data/mongodb/shard2/log/shard2.log

logappend = true

bind_ip = 0.0.0.0

port = 27002

fork = true

oplogSize = 4096

journal = true

quiet = true

replSet=shard2 # replica set name

shardsvr = true #declare this is a shard db of a cluster;

maxConns=20000 # maximum number of connections

[root@mongodbserver1 mongod]# cat /etc/mongod/shard3.conf

pidfilepath = /var/run/mongodb/shard3.pid

dbpath = /data/mongodb/shard3/data

logpath = /data/mongodb/shard3/log/shard3.log

logappend = true

bind_ip = 0.0.0.0

port = 27003

fork = true

oplogSize = 4096

journal = true

quiet = true

replSet=shard3 # replica set name

shardsvr = true #declare this is a shard db of a cluster;

maxConns=20000 # maximum number of connections
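The three shard configuration files above differ only in the shard name and the port, so they can be produced from one template. The Python sketch below shows one possible way to do that; `render_shard_conf` is a hypothetical helper, and the script prints the files instead of writing them to /etc/mongod.

```python
# Template that mirrors the shard1.conf / shard2.conf / shard3.conf files above.
TEMPLATE = """\
pidfilepath = /var/run/mongodb/{name}.pid
dbpath = /data/mongodb/{name}/data
logpath = /data/mongodb/{name}/log/{name}.log
logappend = true
bind_ip = 0.0.0.0
port = {port}
fork = true
oplogSize = 4096
journal = true
quiet = true
replSet = {name}
shardsvr = true
maxConns = 20000
"""


def render_shard_conf(name, port):
    """Return the text of one shard configuration file (hypothetical helper)."""
    return TEMPLATE.format(name=name, port=port)


if __name__ == "__main__":
    for name, port in (("shard1", 27001), ("shard2", 27002), ("shard3", 27003)):
        # Print instead of writing under /etc/mongod so the sketch has no side effects.
        print(f"# /etc/mongod/{name}.conf")
        print(render_shard_conf(name, port))
```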

[root@mongodbserver1 ~]# mongod -f /etc/mongod/shard1.conf

2018-08-27T09:45:20.879+0800 I CONTROL [main] Automatically disabling TLS 1.0, to force-enable TLS 1.0 specify --sslDisabledProtocols 'none'

about to fork child process, waiting until server is ready for connections.

forked process: 46045

child process started successfully, parent exiting

[root@mongodbserver1 ~]# mongod -f /etc/mongod/shard2.conf

2018-08-27T09:45:20.879+0800 I CONTROL [main] Automatically disabling TLS 1.0, to force-enable TLS 1.0 specify --sslDisabledProtocols 'none'

about to fork child process, waiting until server is ready for connections.

forked process: 46045

child process started successfully, parent exiting

[root@mongodbserver1 mongod]# mongod -f /etc/mongod/shard3.conf

2018-08-27T09:50:15.220+0800 I CONTROL [main] Automatically disabling TLS 1.0, to force-enable TLS 1.0 specify --sslDisabledProtocols 'none'

about to fork child process, waiting until server is ready for connections.

forked process: 46162

child process started successfully, parent exiting

[root@mongodbserver1 mongod]# netstat -nltup | grep :27003

tcp 0 0 0.0.0.0:27003 0.0.0.0:* LISTEN 46162/mongod

[root@mongodbserver1 mongod]# netstat -nltup | grep :27001

tcp 0 0 0.0.0.0:27001 0.0.0.0:* LISTEN 45946/mongod

[root@mongodbserver1 mongod]# netstat -nltup | grep :27002

tcp 0 0 0.0.0.0:27002 0.0.0.0:* LISTEN 46045/mongod

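4. Replica set initialization

# Initiate the shard1 replica set

# Log in to port 27001 on either 192.168.1.47 or 192.168.1.48 to initiate the replica set; do not use 192.168.1.49, because its port 27001 is the arbiter for shard1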

[root@mongodbserver1 mongod]# mongo --port 27001

MongoDB shell version v4.0.1

connecting to: mongodb://127.0.0.1:27001/

MongoDB server version: 4.0.1

Server has startup warnings:

2018-08-27T09:37:06.874+0800 I CONTROL [initandlisten]

2018-08-27T09:37:06.874+0800 I CONTROL [initandlisten] ** WARNING: Access control is not enabled for the database.

2018-08-27T09:37:06.874+0800 I CONTROL [initandlisten] ** Read and write access to data and configuration is unrestricted.

2018-08-27T09:37:06.874+0800 I CONTROL [initandlisten] ** WARNING: You are running this process as the root user, which is not recommended.

2018-08-27T09:37:06.874+0800 I CONTROL [initandlisten]

---

Enable MongoDB's free cloud-based monitoring service, which will then receive and display

metrics about your deployment (disk utilization, CPU, operation statistics, etc).

The monitoring data will be available on a MongoDB website with a unique URL accessible to you

and anyone you share the URL with. MongoDB may use this information to make product

improvements and to suggest MongoDB products and deployment options to you.

To enable free monitoring, run the following command: db.enableFreeMonitoring()

To permanently disable this reminder, run the following command: db.disableFreeMonitoring()

---

> use admin

switched to db admin

> config = { _id: "shard1", members: [ {_id : 0, host : "192.168.1.47:27001"}, {_id: 1,host : "192.168.1.48:27001"},{_id : 2, host : "192.168.1.49:27001",arbiterOnly:true}] }

{

"_id" : "shard1",

"members" : [

{

"_id" : 0,

"host" : "192.168.1.47:27001"

},

{

"_id" : 1,

"host" : "192.168.1.48:27001"

},

{

"_id" : 2,

"host" : "192.168.1.49:27001",

"arbiterOnly" : true

}

]

}

> rs.initiate(config)

{

"ok" : 1,

"operationTime" : Timestamp(1535335745, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1535335745, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

shard1:SECONDARY> rs.status()

{

"set" : "shard1",

"date" : ISODate("2018-08-27T02:09:26.775Z"),

"myState" : 1,

"term" : NumberLong(1),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"heartbeatIntervalMillis" : NumberLong(2000),

"optimes" : {

"lastCommittedOpTime" : {

"ts" : Timestamp(1535335758, 2),

"t" : NumberLong(1)

},

"readConcernMajorityOpTime" : {

"ts" : Timestamp(1535335758, 2),

"t" : NumberLong(1)

},

"appliedOpTime" : {

"ts" : Timestamp(1535335758, 2),

"t" : NumberLong(1)

},

"durableOpTime" : {

"ts" : Timestamp(1535335758, 2),

"t" : NumberLong(1)

}

},

"lastStableCheckpointTimestamp" : Timestamp(1535335758, 1),

"members" : [

{

"_id" : 0,

"name" : "192.168.1.47:27001",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY",

"uptime" : 1940,

"optime" : {

"ts" : Timestamp(1535335758, 2),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2018-08-27T02:09:18Z"),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "could not find member to sync from",

"electionTime" : Timestamp(1535335756, 1),

"electionDate" : ISODate("2018-08-27T02:09:16Z"),

"configVersion" : 1,

"self" : true,

"lastHeartbeatMessage" : ""

},

{

"_id" : 1,

"name" : "192.168.1.48:27001",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 20,

"optime" : {

"ts" : Timestamp(1535335758, 2),

"t" : NumberLong(1)

},

"optimeDurable" : {

"ts" : Timestamp(1535335758, 2),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2018-08-27T02:09:18Z"),

"optimeDurableDate" : ISODate("2018-08-27T02:09:18Z"),

"lastHeartbeat" : ISODate("2018-08-27T02:09:26.532Z"),

"lastHeartbeatRecv" : ISODate("2018-08-27T02:09:25.106Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "",

"syncingTo" : "192.168.1.47:27001",

"syncSourceHost" : "192.168.1.47:27001",

"syncSourceId" : 0,

"infoMessage" : "",

"configVersion" : 1

},

{

"_id" : 2,

"name" : "192.168.1.49:27001",

"health" : 1,

"state" : 7,

"stateStr" : "ARBITER",

"uptime" : 20,

"lastHeartbeat" : ISODate("2018-08-27T02:09:26.531Z"),

"lastHeartbeatRecv" : ISODate("2018-08-27T02:09:25.865Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "",

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "",

"configVersion" : 1

}

],

"ok" : 1,

"operationTime" : Timestamp(1535335758, 2),

"$clusterTime" : {

"clusterTime" : Timestamp(1535335758, 2),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

shard1:PRIMARY>

# Initiate the shard2 replica set

# Log in to port 27002 on either 192.168.1.48 or 192.168.1.49 to initiate the replica set; do not use 192.168.1.47, because its port 27002 is the arbiter for shard2

# rs.remove("192.168.1.49:27002"); removes a member

# rs.add({_id: 2, host: "192.168.1.49:27002"})

# rs.add({_id: 2, host: "192.168.1.47:27002",arbiterOnly:true})

[root@mongodbserver2 ~]# mongo --port 27002

> config = { _id: "shard2", members: [ {_id : 0, host : "192.168.1.47:27002" ,arbiterOnly:true},{_id : 1, host : "192.168.1.48:27002"},{_id : 2, host : "192.168.1.49:27002"}] }

> rs.initiate(config)

# Initiate the shard3 replica set

# Log in to port 27003 on either 192.168.1.47 or 192.168.1.49 to initiate the replica set; do not use 192.168.1.48, because its port 27003 is the arbiter for shard3

[root@mongodbserver3 ~]# mongo --port 27003

> use admin

switched to db admin

> config = { _id: "shard3", members: [ {_id : 0, host : "192.168.1.47:27003"}, {_id : 1, host : "192.168.1.48:27003", arbiterOnly:true}, {_id : 2, host : "192.168.1.49:27003"}] }

{

"_id" : "shard3",

"members" : [

{

"_id" : 0,

"host" : "192.168.1.47:27003"

},

{

"_id" : 1,

"host" : "192.168.1.48:27003",

"arbiterOnly" : true

},

{

"_id" : 2,

"host" : "192.168.1.49:27003"

}

]

}

> rs.initiate(config)

{

"ok" : 1,

"operationTime" : Timestamp(1535338725, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1535338725, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

shard3:PRIMARY> rs.status()

{

"set" : "shard3",

"date" : ISODate("2018-08-27T02:59:59.805Z"),

"myState" : 1,

"term" : NumberLong(1),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"heartbeatIntervalMillis" : NumberLong(2000),

"optimes" : {

"lastCommittedOpTime" : {

"ts" : Timestamp(1535338797, 1),

"t" : NumberLong(1)

},

"readConcernMajorityOpTime" : {

"ts" : Timestamp(1535338797, 1),

"t" : NumberLong(1)

},

"appliedOpTime" : {

"ts" : Timestamp(1535338797, 1),

"t" : NumberLong(1)

},

"durableOpTime" : {

"ts" : Timestamp(1535338797, 1),

"t" : NumberLong(1)

}

},

"lastStableCheckpointTimestamp" : Timestamp(1535338797, 1),

"members" : [

{

"_id" : 0,

"name" : "192.168.1.47:27003",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 74,

"optime" : {

"ts" : Timestamp(1535338797, 1),

"t" : NumberLong(1)

},

"optimeDurable" : {

"ts" : Timestamp(1535338797, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2018-08-27T02:59:57Z"),

"optimeDurableDate" : ISODate("2018-08-27T02:59:57Z"),

"lastHeartbeat" : ISODate("2018-08-27T02:59:57.821Z"),

"lastHeartbeatRecv" : ISODate("2018-08-27T02:59:58.287Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "",

"syncingTo" : "192.168.1.49:27003",

"syncSourceHost" : "192.168.1.49:27003",

"syncSourceId" : 2,

"infoMessage" : "",

"configVersion" : 1

},

{

"_id" : 1,

"name" : "192.168.1.48:27003",

"health" : 1,

"state" : 7,

"stateStr" : "ARBITER",

"uptime" : 74,

"lastHeartbeat" : ISODate("2018-08-27T02:59:57.821Z"),

"lastHeartbeatRecv" : ISODate("2018-08-27T02:59:59.384Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "",

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "",

"configVersion" : 1

},

{

"_id" : 2,

"name" : "192.168.1.49:27003",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY",

"uptime" : 4185,

"optime" : {

"ts" : Timestamp(1535338797, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2018-08-27T02:59:57Z"),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "could not find member to sync from",

"electionTime" : Timestamp(1535338735, 1),

"electionDate" : ISODate("2018-08-27T02:58:55Z"),

"configVersion" : 1,

"self" : true,

"lastHeartbeatMessage" : ""

}

],

"ok" : 1,

"operationTime" : Timestamp(1535338797, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1535338797, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}
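The three replica set configuration documents above share one shape: a name, a list of members on the same port, and one member marked arbiterOnly. As a sketch only, the Python helper below builds such a document and prints it as JSON, which can be pasted into the mongo shell after `config =`. `build_rs_config` is a name invented for this example, not part of any MongoDB tool.

```python
import json


def build_rs_config(name, port, hosts, arbiter_host=None):
    """Build the document passed to rs.initiate() (hypothetical helper)."""
    members = []
    for member_id, host in enumerate(hosts):
        member = {"_id": member_id, "host": f"{host}:{port}"}
        if host == arbiter_host:
            # Arbiters vote in elections but hold no data.
            member["arbiterOnly"] = True
        members.append(member)
    return {"_id": name, "members": members}


if __name__ == "__main__":
    hosts = ["192.168.1.47", "192.168.1.48", "192.168.1.49"]
    config = build_rs_config("shard3", 27003, hosts, arbiter_host="192.168.1.48")
    # JSON object syntax is also valid mongo shell (JavaScript) syntax.
    print("config =", json.dumps(config))
```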

5. Configure the router (mongos)


[root@mongodbserver2 ~]# vim /etc/mongod/mongos.conf

pidfilepath = /var/run/mongodb/mongos.pid

logpath = /data/mongodb/mongos/log/mongos.log

logappend = true

bind_ip = 0.0.0.0

port = 20000

fork = true

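configdb = configs/192.168.1.47:21000,192.168.1.48:21000,192.168.1.49:21000 # config servers to connect to, with no spaces after the commas; configs is the replica set name of the config servers

maxConns=20000 # maximum number of connections

"/etc/mongod/mongos.conf" [New] 8L, 360C written

# Note: the two getaddrinfo failures in the output below were logged because the configdb value used for this run had a space after each comma, and the space became part of the host name; without the spaces they should not appear

[root@mongodbserver2 ~]# mongos -f /etc/mongod/mongos.conf

2018-08-27T11:05:04.093+0800 I CONTROL [main] Automatically disabling TLS 1.0, to force-enable TLS 1.0 specify --sslDisabledProtocols 'none'

2018-08-27T11:05:04.254+0800 I NETWORK [main] getaddrinfo(" 192.168.1.48") failed: Name or service not known

2018-08-27T11:05:04.305+0800 I NETWORK [main] getaddrinfo(" 192.168.1.49") failed: Name or service not known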

about to fork child process, waiting until server is ready for connections.

forked process: 4238

child process started successfully, parent exiting

[root@mongodbserver2 ~]#
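The getaddrinfo(" 192.168.1.48") messages in the mongos output above show a host name that starts with a space, which is what a configdb value with spaces after the commas produces. The Python sketch below shows one way to check and normalize such a value before putting it in the configuration file. Both helpers are hypothetical and do not call mongos.

```python
def parse_configdb(value):
    """Split "replset/host1:port,host2:port" into (replset, [hosts])."""
    replset, _, host_list = value.partition("/")
    # strip() removes stray spaces that would otherwise become part of the host name.
    hosts = [host.strip() for host in host_list.split(",") if host.strip()]
    return replset.strip(), hosts


def normalize_configdb(value):
    """Return the configdb value with no whitespace around the hosts."""
    replset, hosts = parse_configdb(value)
    return f"{replset}/{','.join(hosts)}"


if __name__ == "__main__":
    raw = "configs/192.168.1.47:21000, 192.168.1.48:21000, 192.168.1.49:21000"
    print(normalize_configdb(raw))
```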

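6. Enable sharding

# Log in to mongos on port 20000 on any machine (mongo --port 20000) and add the three shards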

mongos> sh.addShard("shard1/192.168.1.47:27001,192.168.1.48:27001,192.168.1.49:27001")

{

"shardAdded" : "shard1",

"ok" : 1,

"operationTime" : Timestamp(1535339410, 3),

"$clusterTime" : {

"clusterTime" : Timestamp(1535339410, 3),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

# 192.168.1.49 was given the wrong role when shard2 was initiated and was removed, so it is left out here

mongos> sh.addShard("shard2/192.168.1.47:27002,192.168.1.48:27002")

{

"shardAdded" : "shard2",

"ok" : 1,

"operationTime" : Timestamp(1535339580, 9),

"$clusterTime" : {

"clusterTime" : Timestamp(1535339580, 9),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

mongos> sh.addShard("shard3/192.168.1.47:27003,192.168.1.48:27003,192.168.1.49:27003")

{

"shardAdded" : "shard3",

"ok" : 1,

"operationTime" : Timestamp(1535339462, 5),

"$clusterTime" : {

"clusterTime" : Timestamp(1535339462, 5),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

mongos> sh.status()

--- Sharding Status ---

sharding version: {

"_id" : 1,

"minCompatibleVersion" : 5,

"currentVersion" : 6,

"clusterId" : ObjectId("5b82d3380925dce6faaca18a")

}

shards:

{ "_id" : "shard1", "host" : "shard1/192.168.1.47:27001,192.168.1.48:27001", "state" : 1 }

{ "_id" : "shard2", "host" : "shard2/192.168.1.48:27002", "state" : 1 }

{ "_id" : "shard3", "host" : "shard3/192.168.1.47:27003,192.168.1.49:27003", "state" : 1 }

active mongoses:

"4.0.1" : 3

autosplit:

Currently enabled: yes

balancer:

Currently enabled: yes

Currently running: no

Failed balancer rounds in last 5 attempts: 0

Migration Results for the last 24 hours:

No recent migrations

databases:

{ "_id" : "config", "primary" : "config", "partitioned" : true }

config.system.sessions

shard key: { "_id" : 1 }

unique: false

balancing: true

chunks:

shard1 1

{ "_id" : { "$minKey" : 1 } } -->> { "_id" : { "$maxKey" : 1 } } on : shard1 Timestamp(1, 0)

mongos>
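In the sh.status() output above, shard1 is listed as shard1/192.168.1.47:27001,192.168.1.48:27001, without its arbiter 192.168.1.49:27001. The Python sketch below reproduces that host string from a replica set configuration, under the assumption that only data-bearing members are listed. `shard_host_string` is a hypothetical helper, not a MongoDB API.

```python
def shard_host_string(rs_config):
    """Return "name/host1,host2" using only the data-bearing members."""
    data_hosts = [
        member["host"]
        for member in rs_config["members"]
        if not member.get("arbiterOnly", False)
    ]
    return f"{rs_config['_id']}/{','.join(data_hosts)}"


if __name__ == "__main__":
    # Same members as the shard3 configuration used earlier in this section.
    shard3 = {
        "_id": "shard3",
        "members": [
            {"_id": 0, "host": "192.168.1.47:27003"},
            {"_id": 1, "host": "192.168.1.48:27003", "arbiterOnly": True},
            {"_id": 2, "host": "192.168.1.49:27003"},
        ],
    }
    print(shard_host_string(shard3))
```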

36 Testing MongoDB sharding

[root@mongodbserver1 mongod]# mongo --port 20000

MongoDB shell version v4.0.1

connecting to: mongodb://127.0.0.1:20000/

MongoDB server version: 4.0.1

Server has startup warnings:

2018-08-27T11:05:03.697+0800 I CONTROL [main]

2018-08-27T11:05:03.697+0800 I CONTROL [main] ** WARNING: Access control is not enabled for the database.

2018-08-27T11:05:03.697+0800 I CONTROL [main] ** Read and write access to data and configuration is unrestricted.

2018-08-27T11:05:03.697+0800 I CONTROL [main] ** WARNING: You are running this process as the root user, which is not recommended.

2018-08-27T11:05:03.697+0800 I CONTROL [main]

mongos> use admin

switched to db admin

mongos> db.runCommand({ enablesharding : "testdb"})

{

"ok" : 1,

"operationTime" : Timestamp(1535339870, 5),

"$clusterTime" : {

"clusterTime" : Timestamp(1535339870, 5),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

mongos> db.runCommand( { shardcollection : "testdb.table1",key : {id: 1} } )

{

"collectionsharded" : "testdb.table1",

"collectionUUID" : UUID("733c1dad-cb4e-4ed3-a3ca-c3cfbec2e30e"),

"ok" : 1,

"operationTime" : Timestamp(1535339897, 16),

"$clusterTime" : {

"clusterTime" : Timestamp(1535339897, 16),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

mongos> use testdb

switched to db testdb

mongos> for (var i = 1; i <= 10000; i++) db.table1.save({id:i,"test1":"testval1"})

WriteResult({ "nInserted" : 1 })
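A quick calculation suggests why a test data set this small is unlikely to spread over several shards. The Python sketch below assumes a chunk size of 64 MB (treated here as the default for this version) and uses the average document size of 54 bytes reported by the db.table1.stats() output that follows. `estimated_chunks` is a made-up helper that estimates chunk count from data size alone.

```python
DEFAULT_CHUNK_SIZE_BYTES = 64 * 1024 * 1024  # assumed chunk size of 64 MB


def estimated_chunks(doc_count, avg_obj_size, chunk_size=DEFAULT_CHUNK_SIZE_BYTES):
    """Rough estimate of how many chunks the data needs if split by size only."""
    total_bytes = doc_count * avg_obj_size
    # Ceiling division, with a minimum of one chunk.
    return max(1, -(-total_bytes // chunk_size))


if __name__ == "__main__":
    # 10000 documents of about 54 bytes each is 540000 bytes in total.
    print(estimated_chunks(10000, 54))
```

With only 540000 bytes of data the estimate is a single chunk, which is consistent with "nchunks" : 1 in the stats output. Seeing data spread over several shards would likely need far more documents or a smaller chunk size.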

mongos> db.table1.stats()

{

"sharded" : true,

"capped" : false,

"wiredTiger" : {

"metadata" : {

"formatVersion" : 1

},

"creationString" : "access_pattern_hint=none,allocation_size=4KB,app_metadata=(formatVersion=1),assert=(commit_timestamp=none,read_timestamp=none),block_allocation=best,block_compressor=snappy,cache_resident=false,checksum=on,colgroups=,collator=,columns=,dictionary=0,encryption=(keyid=,name=),exclusive=false,extractor=,format=btree,huffman_key=,huffman_value=,ignore_in_memory_cache_size=false,immutable=false,internal_item_max=0,internal_key_max=0,internal_key_truncate=true,internal_page_max=4KB,key_format=q,key_gap=10,leaf_item_max=0,leaf_key_max=0,leaf_page_max=32KB,leaf_value_max=64MB,log=(enabled=false),lsm=(auto_throttle=true,bloom=true,bloom_bit_count=16,bloom_config=,bloom_hash_count=8,bloom_oldest=false,chunk_count_limit=0,chunk_max=5GB,chunk_size=10MB,merge_custom=(prefix=,start_generation=0,suffix=),merge_max=15,merge_min=0),memory_page_max=10m,os_cache_dirty_max=0,os_cache_max=0,prefix_compression=false,prefix_compression_min=4,source=,split_deepen_min_child=0,split_deepen_per_child=0,split_pct=90,type=file,value_format=u",

"type" : "file",

"uri" : "statistics:table:collection-24--8506913186853910022",

"LSM" : {

"bloom filter false positives" : 0,

"bloom filter hits" : 0,

"bloom filter misses" : 0,

"bloom filter pages evicted from cache" : 0,

"bloom filter pages read into cache" : 0,

"bloom filters in the LSM tree" : 0,

"chunks in the LSM tree" : 0,

"highest merge generation in the LSM tree" : 0,

"queries that could have benefited from a Bloom filter that did not exist" : 0,

"sleep for LSM checkpoint throttle" : 0,

"sleep for LSM merge throttle" : 0,

"total size of bloom filters" : 0

},

"block-manager" : {

"allocations requiring file extension" : 21,

"blocks allocated" : 21,

"blocks freed" : 0,

"checkpoint size" : 155648,

"file allocation unit size" : 4096,

"file bytes available for reuse" : 0,

"file magic number" : 120897,

"file major version number" : 1,

"file size in bytes" : 167936,

"minor version number" : 0

},

"btree" : {

"btree checkpoint generation" : 91,

"column-store fixed-size leaf pages" : 0,

"column-store internal pages" : 0,

"column-store variable-size RLE encoded values" : 0,

"column-store variable-size deleted values" : 0,

"column-store variable-size leaf pages" : 0,

"fixed-record size" : 0,

"maximum internal page key size" : 368,

"maximum internal page size" : 4096,

"maximum leaf page key size" : 2867,

"maximum leaf page size" : 32768,

"maximum leaf page value size" : 67108864,

"maximum tree depth" : 3,

"number of key/value pairs" : 0,

"overflow pages" : 0,

"pages rewritten by compaction" : 0,

"row-store internal pages" : 0,

"row-store leaf pages" : 0

},

"cache" : {

"bytes currently in the cache" : 1349553,

"bytes read into cache" : 0,

"bytes written from cache" : 530298,

"checkpoint blocked page eviction" : 0,

"data source pages selected for eviction unable to be evicted" : 0,

"eviction walk passes of a file" : 0,

"eviction walk target pages histogram - 0-9" : 0,

"eviction walk target pages histogram - 10-31" : 0,

"eviction walk target pages histogram - 128 and higher" : 0,

"eviction walk target pages histogram - 32-63" : 0,

"eviction walk target pages histogram - 64-128" : 0,

"eviction walks abandoned" : 0,

"eviction walks gave up because they restarted their walk twice" : 0,

"eviction walks gave up because they saw too many pages and found no candidates" : 0,

"eviction walks gave up because they saw too many pages and found too few candidates" : 0,

"eviction walks reached end of tree" : 0,

"eviction walks started from root of tree" : 0,

"eviction walks started from saved location in tree" : 0,

"hazard pointer blocked page eviction" : 0,

"in-memory page passed criteria to be split" : 0,

"in-memory page splits" : 0,

"internal pages evicted" : 0,

"internal pages split during eviction" : 0,

"leaf pages split during eviction" : 0,

"modified pages evicted" : 0,

"overflow pages read into cache" : 0,

"page split during eviction deepened the tree" : 0,

"page written requiring lookaside records" : 0,

"pages read into cache" : 0,

"pages read into cache after truncate" : 1,

"pages read into cache after truncate in prepare state" : 0,

"pages read into cache requiring lookaside entries" : 0,

"pages requested from the cache" : 10000,

"pages seen by eviction walk" : 0,

"pages written from cache" : 20,

"pages written requiring in-memory restoration" : 0,

"tracked dirty bytes in the cache" : 1349094,

"unmodified pages evicted" : 0

},

"cache_walk" : {

"Average difference between current eviction generation when the page was last considered" : 0,

"Average on-disk page image size seen" : 0,

"Average time in cache for pages that have been visited by the eviction server" : 0,

"Average time in cache for pages that have not been visited by the eviction server" : 0,

"Clean pages currently in cache" : 0,

"Current eviction generation" : 0,

"Dirty pages currently in cache" : 0,

"Entries in the root page" : 0,

"Internal pages currently in cache" : 0,

"Leaf pages currently in cache" : 0,

"Maximum difference between current eviction generation when the page was last considered" : 0,

"Maximum page size seen" : 0,

"Minimum on-disk page image size seen" : 0,

"Number of pages never visited by eviction server" : 0,

"On-disk page image sizes smaller than a single allocation unit" : 0,

"Pages created in memory and never written" : 0,

"Pages currently queued for eviction" : 0,

"Pages that could not be queued for eviction" : 0,

"Refs skipped during cache traversal" : 0,

"Size of the root page" : 0,

"Total number of pages currently in cache" : 0

},

"compression" : {

"compressed pages read" : 0,

"compressed pages written" : 19,

"page written failed to compress" : 0,

"page written was too small to compress" : 1,

"raw compression call failed, additional data available" : 0,

"raw compression call failed, no additional data available" : 0,

"raw compression call succeeded" : 0

},

"cursor" : {

"bulk-loaded cursor-insert calls" : 0,

"create calls" : 5,

"cursor operation restarted" : 0,

"cursor-insert key and value bytes inserted" : 561426,

"cursor-remove key bytes removed" : 0,

"cursor-update value bytes updated" : 0,

"cursors cached on close" : 0,

"cursors reused from cache" : 9996,

"insert calls" : 10000,

"modify calls" : 0,

"next calls" : 1,

"prev calls" : 1,

"remove calls" : 0,

"reserve calls" : 0,

"reset calls" : 20003,

"search calls" : 0,

"search near calls" : 0,

"truncate calls" : 0,

"update calls" : 0

},

"reconciliation" : {

"dictionary matches" : 0,

"fast-path pages deleted" : 0,

"internal page key bytes discarded using suffix compression" : 36,

"internal page multi-block writes" : 0,

"internal-page overflow keys" : 0,

"leaf page key bytes discarded using prefix compression" : 0,

"leaf page multi-block writes" : 1,

"leaf-page overflow keys" : 0,

"maximum blocks required for a page" : 1,

"overflow values written" : 0,

"page checksum matches" : 0,

"page reconciliation calls" : 2,

"page reconciliation calls for eviction" : 0,

"pages deleted" : 0

},

"session" : {

"cached cursor count" : 5,

"object compaction" : 0,

"open cursor count" : 0

},

"transaction" : {

"update conflicts" : 0

}

},

"ns" : "testdb.table1",

"count" : 10000,

"size" : 540000,

"storageSize" : 167936,

"totalIndexSize" : 208896,

"indexSizes" : {

"_id_" : 94208,

"id_1" : 114688

},

"avgObjSize" : 54,

"maxSize" : NumberLong(0),

"nindexes" : 2,

"nchunks" : 1,

"shards" : {

"shard3" : {

"ns" : "testdb.table1",

"size" : 540000,

"count" : 10000,

"avgObjSize" : 54,

"storageSize" : 167936,

"capped" : false,

"wiredTiger" : {

"metadata" : {

"formatVersion" : 1

},

"creationString" : "access_pattern_hint=none,allocation_size=4KB,app_metadata=(formatVersion=1),assert=(commit_timestamp=none,read_timestamp=none),block_allocation=best,block_compressor=snappy,cache_resident=false,checksum=on,colgroups=,collator=,columns=,dictionary=0,encryption=(keyid=,name=),exclusive=false,extractor=,format=btree,huffman_key=,huffman_value=,ignore_in_memory_cache_size=false,immutable=false,internal_item_max=0,internal_key_max=0,internal_key_truncate=true,internal_page_max=4KB,key_format=q,key_gap=10,leaf_item_max=0,leaf_key_max=0,leaf_page_max=32KB,leaf_value_max=64MB,log=(enabled=false),lsm=(auto_throttle=true,bloom=true,bloom_bit_count=16,bloom_config=,bloom_hash_count=8,bloom_oldest=false,chunk_count_limit=0,chunk_max=5GB,chunk_size=10MB,merge_custom=(prefix=,start_generation=0,suffix=),merge_max=15,merge_min=0),memory_page_max=10m,os_cache_dirty_max=0,os_cache_max=0,prefix_compression=false,prefix_compression_min=4,source=,split_deepen_min_child=0,split_deepen_per_child=0,split_pct=90,type=file,value_format=u",

"type" : "file",

"uri" : "statistics:table:collection-24--8506913186853910022",

"LSM" : {

"bloom filter false positives" : 0,

"bloom filter hits" : 0,

"bloom filter misses" : 0,

"bloom filter pages evicted from cache" : 0,

"bloom filter pages read into cache" : 0,

"bloom filters in the LSM tree" : 0,

"chunks in the LSM tree" : 0,

"highest merge generation in the LSM tree" : 0,

"queries that could have benefited from a Bloom filter that did not exist" : 0,

"sleep for LSM checkpoint throttle" : 0,

"sleep for LSM merge throttle" : 0,

"total size of bloom filters" : 0

},

"block-manager" : {

"allocations requiring file extension" : 21,

"blocks allocated" : 21,

"blocks freed" : 0,

"checkpoint size" : 155648,

"file allocation unit size" : 4096,

"file bytes available for reuse" : 0,

"file magic number" : 120897,

"file major version number" : 1,

"file size in bytes" : 167936,

"minor version number" : 0

},

"btree" : {

"btree checkpoint generation" : 91,

"column-store fixed-size leaf pages" : 0,

"column-store internal pages" : 0,

"column-store variable-size RLE encoded values" : 0,

"column-store variable-size deleted values" : 0,

"column-store variable-size leaf pages" : 0,

"fixed-record size" : 0,

"maximum internal page key size" : 368,

"maximum internal page size" : 4096,

"maximum leaf page key size" : 2867,

"maximum leaf page size" : 32768,

"maximum leaf page value size" : 67108864,

"maximum tree depth" : 3,

"number of key/value pairs" : 0,

"overflow pages" : 0,

"pages rewritten by compaction" : 0,

"row-store internal pages" : 0,

"row-store leaf pages" : 0

},

"cache" : {

"bytes currently in the cache" : 1349553,

"bytes read into cache" : 0,

"bytes written from cache" : 530298,

"checkpoint blocked page eviction" : 0,

"data source pages selected for eviction unable to be evicted" : 0,

"eviction walk passes of a file" : 0,

"eviction walk target pages histogram - 0-9" : 0,

"eviction walk target pages histogram - 10-31" : 0,

"eviction walk target pages histogram - 128 and higher" : 0,

"eviction walk target pages histogram - 32-63" : 0,

"eviction walk target pages histogram - 64-128" : 0,

"eviction walks abandoned" : 0,

"eviction walks gave up because they restarted their walk twice" : 0,

"eviction walks gave up because they saw too many pages and found no candidates" : 0,

"eviction walks gave up because they saw too many pages and found too few candidates" : 0,

"eviction walks reached end of tree" : 0,

"eviction walks started from root of tree" : 0,

"eviction walks started from saved location in tree" : 0,

"hazard pointer blocked page eviction" : 0,

"in-memory page passed criteria to be split" : 0,

"in-memory page splits" : 0,

"internal pages evicted" : 0,

"internal pages split during eviction" : 0,

"leaf pages split during eviction" : 0,

"modified pages evicted" : 0,

"overflow pages read into cache" : 0,

"page split during eviction deepened the tree" : 0,

"page written requiring lookaside records" : 0,

"pages read into cache" : 0,

"pages read into cache after truncate" : 1,

"pages read into cache after truncate in prepare state" : 0,

"pages read into cache requiring lookaside entries" : 0,

"pages requested from the cache" : 10000,

"pages seen by eviction walk" : 0,

"pages written from cache" : 20,

"pages written requiring in-memory restoration" : 0,

"tracked dirty bytes in the cache" : 1349094,

"unmodified pages evicted" : 0

},

"cache_walk" : {

"Average difference between current eviction generation when the page was last considered" : 0,

"Average on-disk page image size seen" : 0,

"Average time in cache for pages that have been visited by the eviction server" : 0,

"Average time in cache for pages that have not been visited by the eviction server" : 0,

"Clean pages currently in cache" : 0,

"Current eviction generation" : 0,

"Dirty pages currently in cache" : 0,

"Entries in the root page" : 0,

"Internal pages currently in cache" : 0,

"Leaf pages currently in cache" : 0,

"Maximum difference between current eviction generation when the page was last considered" : 0,

"Maximum page size seen" : 0,

"Minimum on-disk page image size seen" : 0,

"Number of pages never visited by eviction server" : 0,

"On-disk page image sizes smaller than a single allocation unit" : 0,

"Pages created in memory and never written" : 0,

"Pages currently queued for eviction" : 0,

"Pages that could not be queued for eviction" : 0,

"Refs skipped during cache traversal" : 0,

"Size of the root page" : 0,

"Total number of pages currently in cache" : 0

},

"compression" : {

"compressed pages read" : 0,

"compressed pages written" : 19,

"page written failed to compress" : 0,

"page written was too small to compress" : 1,

"raw compression call failed, additional data available" : 0,

"raw compression call failed, no additional data available" : 0,

"raw compression call succeeded" : 0

},

"cursor" : {

"bulk-loaded cursor-insert calls" : 0,

"create calls" : 5,

"cursor operation restarted" : 0,

"cursor-insert key and value bytes inserted" : 561426,

"cursor-remove key bytes removed" : 0,

"cursor-update value bytes updated" : 0,

"cursors cached on close" : 0,

"cursors reused from cache" : 9996,

"insert calls" : 10000,

"modify calls" : 0,

"next calls" : 1,

"prev calls" : 1,

"remove calls" : 0,

"reserve calls" : 0,

"reset calls" : 20003,

"search calls" : 0,

"search near calls" : 0,

"truncate calls" : 0,

"update calls" : 0

},

"reconciliation" : {

"dictionary matches" : 0,

"fast-path pages deleted" : 0,

"internal page key bytes discarded using suffix compression" : 36,

"internal page multi-block writes" : 0,

"internal-page overflow keys" : 0,

"leaf page key bytes discarded using prefix compression" : 0,

"leaf page multi-block writes" : 1,

"leaf-page overflow keys" : 0,

"maximum blocks required for a page" : 1,

"overflow values written" : 0,

"page checksum matches" : 0,

"page reconciliation calls" : 2,

"page reconciliation calls for eviction" : 0,

"pages deleted" : 0

},

"session" : {

"cached cursor count" : 5,

"object compaction" : 0,

"open cursor count" : 0

},

"transaction" : {

"update conflicts" : 0

}

},

"nindexes" : 2,

"totalIndexSize" : 208896,

"indexSizes" : {

"_id_" : 94208,

"id_1" : 114688

},

"ok" : 1,

"operationTime" : Timestamp(1535339957, 1),

"$gleStats" : {

"lastOpTime" : {

"ts" : Timestamp(1535339939, 572),

"t" : NumberLong(1)

},

"electionId" : ObjectId("7fffffff0000000000000001")

},

"lastCommittedOpTime" : Timestamp(1535339957, 1),

"$configServerState" : {

"opTime" : {

"ts" : Timestamp(1535339956, 1),

"t" : NumberLong(1)

}

},

"$clusterTime" : {

"clusterTime" : Timestamp(1535339957, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

},

"ok" : 1,

"operationTime" : Timestamp(1535339957, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1535339957, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

mongos>
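
One quick sanity check on the output above is that the per-index sizes listed under "indexSizes" add up to "totalIndexSize". The sketch below only redoes that arithmetic in the shell, with the values copied from the output. The variable names are illustrative.

```shell
# Values copied from the "indexSizes" block of the stats output above (bytes).
id_index=94208      # "_id_"
id1_index=114688    # "id_1"

# Sum of the per-index sizes; compare the result with "totalIndexSize" : 208896.
total=$((id_index + id1_index))
echo "$total"
```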

37 MongoDB Backup and Restore

1. Back up a specific database

[root@mongodbserver1 mongod]# mongodump --host 127.0.0.1 --port 20000 -d testdb -o /tmp/mongobak

2018-08-27T11:22:43.430+0800 writing testdb.table1 to

2018-08-27T11:22:43.541+0800 done dumping testdb.table1 (10000 documents)

[root@mongodbserver1 mongod]# ls -lh /tmp/mongobak/testdb/

total 532K

-rw-r--r-- 1 root root 528K Aug 27 11:22 table1.bson

-rw-r--r-- 1 root root 187 Aug 27 11:22 table1.metadata.json
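
The listing above shows what mongodump wrote for the collection: a .bson data file and a .metadata.json file. The following is a minimal sketch of a check that every .bson file in a dump directory has its metadata file next to it. The function name check_dump_dir is hypothetical and not part of the MongoDB tools. The one-to-one pairing of files is an assumption based on the listing above.

```shell
#!/bin/sh
# check_dump_dir DIR
# Hypothetical helper (not a MongoDB tool): returns 0 if DIR contains at least
# one .bson file and each one has a matching .metadata.json file, else 1.
check_dump_dir() {
    dir=$1
    found=0
    for bson in "$dir"/*.bson; do
        # If the glob matched nothing, the literal pattern is left over; skip it.
        [ -e "$bson" ] || continue
        found=1
        meta="${bson%.bson}.metadata.json"
        if [ ! -f "$meta" ]; then
            echo "missing metadata for $bson" >&2
            return 1
        fi
    done
    # Succeed only if at least one .bson file was seen.
    [ "$found" -eq 1 ]
}

# Example, using the output path from the mongodump command above:
# check_dump_dir /tmp/mongobak/testdb && echo "dump looks complete"
```

The check looks only at file names. It does not confirm that the BSON data itself is intact.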

2. Back up all databases

[root@mongodbserver1 mongod]# mongodump --host 127.0.0.1 --port 20000 -o /tmp/mongobak/alldatabase

2018-08-27T11:24:40.955+0800 writing admin.system.version to

2018-08-27T11:24:41.049+0800 done dumping admin.system.version (1 document)

2018-08-27T11:24:41.143+0800 writing config.locks to

2018-08-27T11:24:41.144+0800 writing config.changelog to

2018-08-27T11:24:41.144+0800 writing testdb.table1 to

2018-08-27T11:24:41.144+0800 writing config.lockpings to

2018-08-27T11:24:41.149+0800 done dumping config.changelog (7 documents)

2018-08-27T11:24:41.149+0800 writing config.shards to