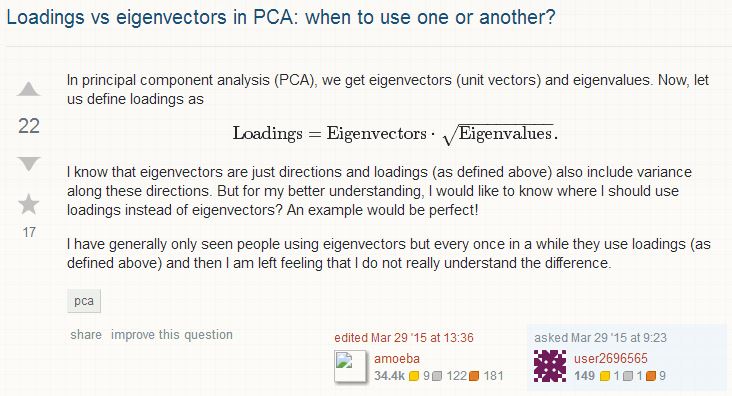

Loadings vs eigenvectors in PCA

Source: Cross Validated

- ttnphns

In PCA, you split the covariance (or correlation) matrix into a scale part (eigenvalues) and a direction part (eigenvectors). You may then endow the eigenvectors with the scale: these are the loadings. Loadings thus become comparable in magnitude with the covariances/correlations observed between the variables, because what had been drawn out of the variables' covariation now returns, in the form of the covariation between the variables and the principal components. In fact, loadings are the covariances/correlations between the original variables and the unit-scaled components. This answer shows geometrically what loadings are and what the coefficients associating components with variables are in PCA or factor analysis.
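The relationship described above can be checked numerically. The following is a minimal sketch, assuming NumPy and a small made-up data set (the data, the seed, and all variable names are illustrative, not part of the original answer). It builds loadings as eigenvectors scaled by the square roots of the eigenvalues and compares them with the covariances between the variables and the unit-scaled components.

```python
import numpy as np

rng = np.random.default_rng(0)
# Hypothetical data: 500 observations of 3 correlated variables.
mixing = np.array([[2.0, 0.5, 0.0],
                   [0.0, 1.0, 0.3],
                   [0.0, 0.0, 0.5]])
X = rng.normal(size=(500, 3)) @ mixing
Xc = X - X.mean(axis=0)                 # center the variables
S = np.cov(Xc, rowvar=False)            # covariance matrix (ddof=1)

# Split S into scale (eigenvalues) and direction (eigenvectors).
eigvals, eigvecs = np.linalg.eigh(S)
order = np.argsort(eigvals)[::-1]       # largest variance first
eigvals, eigvecs = eigvals[order], eigvecs[:, order]

# Endow each eigenvector (column) with its scale: the loadings.
loadings = eigvecs * np.sqrt(eigvals)

# Component scores, then rescaled to unit variance.
scores = Xc @ eigvecs
unit_scores = scores / np.sqrt(eigvals)

# Covariances between original variables and unit-scaled components.
cov_var_pc = Xc.T @ unit_scores / (len(Xc) - 1)

print(np.allclose(cov_var_pc, loadings))
```

Under these assumptions the two matrices should agree up to floating-point error, which is the sense in which loadings "are" variable-component covariances.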

Loadings:

Help you interpret principal components or factors, because they are the linear combination weights (coefficients) whereby unit-scaled components or factors define or "load" a variable.

(An eigenvector is just a coefficient of orthogonal transformation or projection; it is devoid of "load" within its value. "Load" is (information about the amount of) variance, magnitude. PCs are extracted to explain the variance of the variables. Eigenvalues are the variances of (= explained by) the PCs. When we multiply an eigenvector by the square root of the eigenvalue, we "load" the bare coefficient with the amount of variance. By that virtue we make the coefficient a measure of association, co-variability.)

Loadings are sometimes "rotated" (e.g. varimax) afterwards to facilitate interpretability (see also);

It is the loadings that "restore" the original covariance/correlation matrix (see also this thread discussing nuances of PCA and FA in that respect);

While in PCA you can compute values of components both from eigenvectors and loadings, in factor analysis you compute factor scores out of loadings.

And, above all, the loading matrix is informative: its column (vertical) sums of squares are the eigenvalues, i.e. the components' variances, and its row (horizontal) sums of squares are the portions of the variables' variances "explained" by the components.
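Two of the properties listed above, restoring the covariance matrix and the meaning of the sums of squares, are easy to verify. This is a sketch only, assuming NumPy and arbitrary simulated data; the name `A` for the loading matrix and the choice of keeping two components are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(1)
# Hypothetical data: 200 observations of 4 correlated variables.
X = rng.normal(size=(200, 4)) @ rng.normal(size=(4, 4))
S = np.cov(X, rowvar=False)

eigvals, eigvecs = np.linalg.eigh(S)
order = np.argsort(eigvals)[::-1]
eigvals, eigvecs = eigvals[order], eigvecs[:, order]

A = eigvecs * np.sqrt(eigvals)          # loading matrix, variables x components

# With all components kept, the loadings restore the covariance matrix.
restored = A @ A.T

# Column sums of squares: the eigenvalues (components' variances).
col_ss = (A ** 2).sum(axis=0)

# Row sums of squares: the variables' variances (all components kept).
row_ss = (A ** 2).sum(axis=1)

# Keeping only the first 2 components, the row sums of squares give the
# portion of each variable's variance explained by those components.
explained_share = (A[:, :2] ** 2).sum(axis=1) / np.diag(S)

print(np.allclose(restored, S))
print(np.allclose(col_ss, eigvals))
print(np.allclose(row_ss, np.diag(S)))
print(explained_share)
```

When fewer components than variables are retained, `A @ A.T` only approximates `S`; the diagonal of the difference is the unexplained variance of each variable.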

A rescaled or standardized loading is the loading divided by the variable's standard deviation; it is the correlation. (If your PCA is correlation-based, the loading is equal to the rescaled one, because correlation-based PCA is PCA on standardized variables.) A rescaled loading squared has the meaning of the contribution of a principal component to a variable; if it is high (close to 1), the variable is well defined by that component alone.
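As a rough illustration of the rescaling, the sketch below (assuming NumPy and made-up data with deliberately unequal variances; all names are illustrative) divides covariance-based loadings by the variables' standard deviations and compares the result with the directly computed variable-component correlations.

```python
import numpy as np

rng = np.random.default_rng(2)
# Hypothetical data: 3 variables on very different scales.
X = rng.normal(size=(300, 3)) * np.array([5.0, 1.0, 0.2])
X[:, 1] += 0.1 * X[:, 0]                # induce some correlation
Xc = X - X.mean(axis=0)
S = np.cov(Xc, rowvar=False)
sd = np.sqrt(np.diag(S))                # variables' standard deviations

eigvals, eigvecs = np.linalg.eigh(S)
A = eigvecs * np.sqrt(eigvals)          # covariance-based loadings

# Rescaled loadings: each row divided by that variable's st. deviation.
rescaled = A / sd[:, None]

# Direct correlations between variables (rows) and components (columns).
scores = Xc @ eigvecs
corr = np.corrcoef(Xc, scores, rowvar=False)[:3, 3:]

# Squared rescaled loadings: contributions of components to each variable.
contrib_to_var = rescaled ** 2

# Correlation-based PCA: loadings are already on the correlation scale.
R = np.corrcoef(X, rowvar=False)
w, U = np.linalg.eigh(R)
A_R = U * np.sqrt(w)

print(np.allclose(rescaled, corr))
print(contrib_to_var.sum(axis=1))       # each row sums to 1 with all components
print((A_R ** 2).sum(axis=1))           # unit variances of standardized variables
```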

An example of computations done in PCA and FA for you to see.

Eigenvectors are unit-scaled loadings; and they are the coefficients (the cosines) of the orthogonal transformation (rotation) of variables into principal components or back. Therefore it is easy to compute the components' values (not standardized) with them. Besides that, their usage is limited. An eigenvector value squared has the meaning of the contribution of a variable to a principal component; if it is high (close to 1), the component is well defined by that variable alone.
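The role of eigenvectors as a rotation can be sketched as follows, again assuming NumPy and simulated data with illustrative names. The example computes raw component scores from the eigenvectors, rotates them back to the variables, and, for comparison, obtains standardized scores from the loadings.

```python
import numpy as np

rng = np.random.default_rng(3)
# Hypothetical data: 100 observations of 3 correlated variables.
X = rng.normal(size=(100, 3)) @ rng.normal(size=(3, 3))
Xc = X - X.mean(axis=0)
S = np.cov(Xc, rowvar=False)
eigvals, V = np.linalg.eigh(S)          # columns of V are eigenvectors

# Eigenvectors form an orthonormal (rotation/reflection) matrix.
is_orthonormal = np.allclose(V.T @ V, np.eye(3))

# Rotate variables into components (raw scores) and back again.
scores = Xc @ V
back = scores @ V.T
score_var = scores.var(axis=0, ddof=1)  # equals the eigenvalues

# Standardized scores computed from loadings instead of eigenvectors.
A = V * np.sqrt(eigvals)
std_scores = Xc @ A / eigvals

# Squared eigenvector entries: contributions of variables to a component.
contrib_to_pc = V ** 2

print(is_orthonormal)
print(np.allclose(back, Xc))
print(np.allclose(score_var, eigvals))
print(contrib_to_pc.sum(axis=0))        # each column sums to 1
```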

Although eigenvectors and loadings are simply two different ways to normalize coordinates of the same points representing columns (variables) of the data on a biplot, it is not a good idea to mix the two terms. This answer explained why. See also.