Chapter 3 Top 10 List

3.1 Introduction

Given a set of (key-as-string, value-as-integer) pairs, then finding a Top-N ( where N > 0) list is a "design pattern" (a "design pattern" is a language-independent reusable solution to a common problem, which enable us to produce reusable code). For example, let key-as-string be a URL and value-as-integer be the number of times that URL is visited, then you might ask: what are the top-10 URLs for last week? This kind of a question is common for this type of (key, value) pairs.

对于“(key-as-string, value-as-integer)”这种类型的键值对,“Top 10 list” 问题是很常见的,比如说key是URL,value是URL被访问的次数,问你上周的top-10 URLs是什么。这章就是讲述如何用Apache Hadoop (using classic MapReduce's map() and reduce() functions) 以及Apache Spark (using RDD's, Resilient Distributed Datasets) 来解决这个问题,MapReduce针对唯一键,不过可以拓展到“Top-N”问题,Spark则还分为键是否唯一来讨论。

3.2 Top-N Formalized

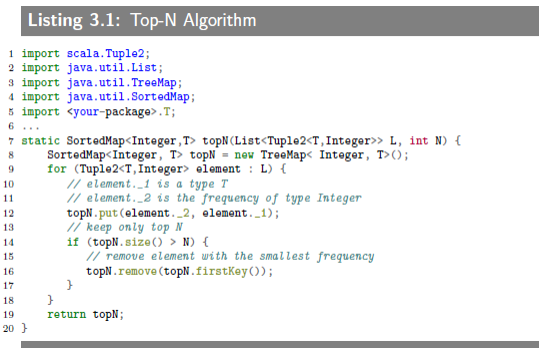

The easy way to implement top-N in Java is to use SortedMap and TreeMap data structures and then keep adding all elements of L to topN, but make sure to remove the first element (an element with the

smallest frequency) of topN if topN.size() > N.

Top-N 的形式化。

3.3 MapReduce Solution

Let cats be a relation of 3 attributes: (cat_id, cat_name, cat_weight) and assume we have billions of cats (big data).

| Attribute Name | Attribute Type |

|---|---|

| cat_id | String |

| cat_name | String |

| cat_weight | Double |

Let N > 0 and suppose we want to find "top N list" of cats (based on cat_weight). Before we delve into MapReduce solution, let’s see how we can express "top 10 list" of cats in SQL:

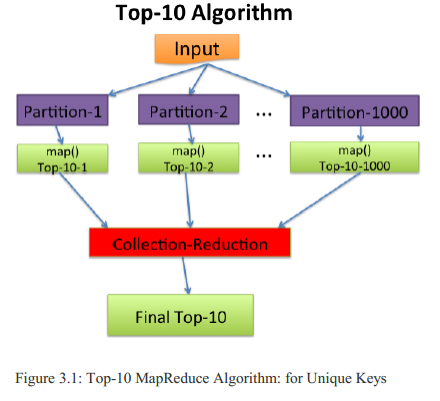

SELECT cat_id, cat_name, cat_weight FROM cats ORDER BY cat_weight DESC LIMIT 10;The MapReduce solution is pretty straightforward: each mapper will find a local "top N list" (for N > 1) and then will pass it to a SINGLE reducer. Then the single reducer will find the final "top N list" from all local "top N list" passed from mappers.

一如既往的开始举例子,设想有一个关于猫的数据(而且是大数据),有id,name和weight三个属性,现在要求基于weight的"top N list"。上面给出了SQL语句的解决方式,很简单粗暴的对整张表排序,具有很大的局限性。很多情况下,我们要处理的数据不像关系数据库那样是结构化的,我们需要能处理像日志文件这种半结构化数据的分析能力。而且,关系型数据库处理大数据,反馈不及时,速度慢。

MapReduce 解决这个问题的思路也很简单:每个mapper负责找出本地的“top N list”,然后全部的结果传到一个reducer,并由其得出最终的“top N list”。通常来说,全部传到一个reducer会导致性能瓶颈问题,因为大量的数据都由一个reducer来做,而集群的其它节点却什么都不做。但是,这个问题不同,每个mapper传给该reducer的数据只是一个本地数据的“top N lsit”,实际上reducer最终处理的数据量并不会很大。

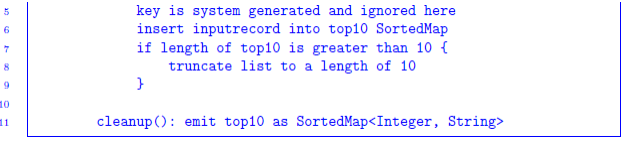

下面是Top-10的算法,一如既往的蛮贴蛮看。数据被分隔成各个小块,每块交给一个mapper来处理。mapper发送自己的输出时,我们使用一个单独的reducer key,这样它们就能被同一个reducer收到。

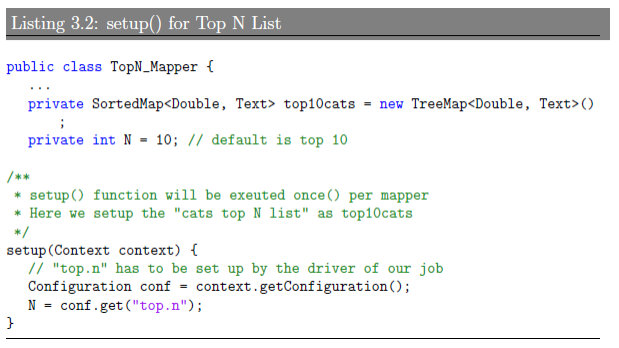

To parameterize the "top N list", we just need to pass the N from the driver (which launched the MapReduce job) to map() and reduce() functions by using MapReduce Configuration object. The driver sets "top.n" parameter and map() and reduce() read that parameter in their setup() functions.

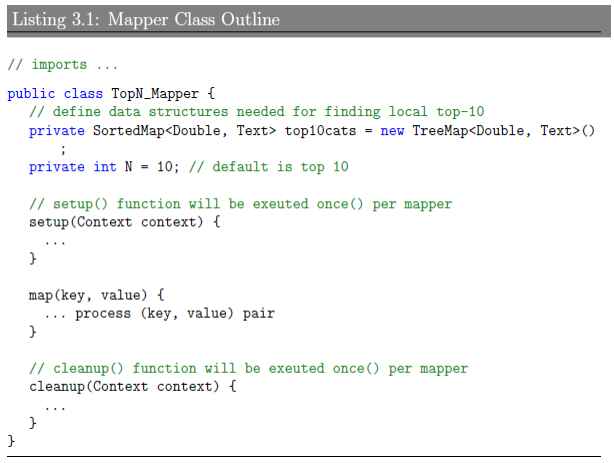

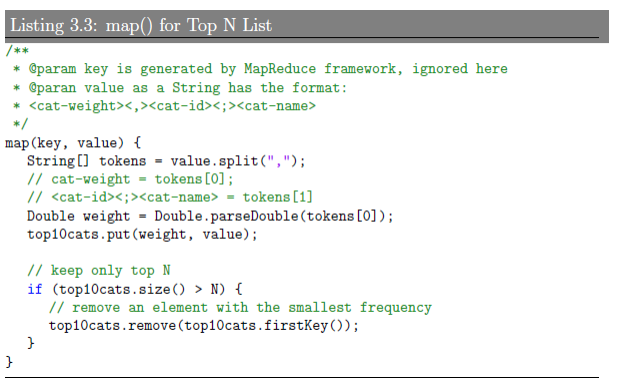

Here we will focus on finding "top N list" of cats. The mapper class will have the following structure:

Next, we define the setup() function:

The map() finction accepts a chunck of input and generates a local top-10 list. We are using different delimiters to optimize parsing input by mappers and reducers (to avoid non-necessary String

concatenations).

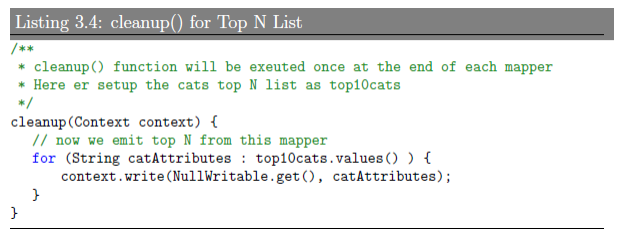

Each mapper accepts a partition of cats. After mapper finishes creating a top-10 list asSortedMap<Double, Text>, the cleanup() method emits the top-10 list. Note that we use a single key as NullWritable.get(), which guarantees that all mappers’s output will be consumed by a single reducer.

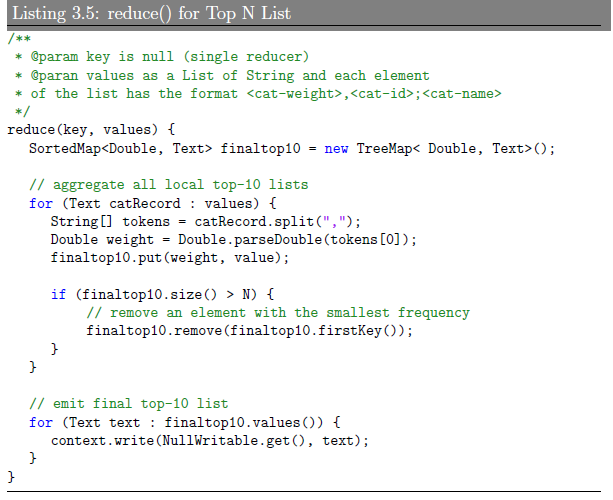

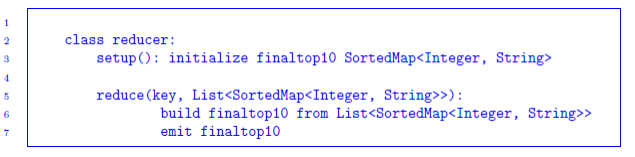

The single reducer gets all local top-10 lists and create a single final top-10 list.

3.4 Implementation in Hadoop

The MapReduce/Hadoop implementation is comprised of the following classes:

| Class Name | Class Description |

|---|---|

| TopN_Driver | Driver to submit job |

| TopN_Mapper | Defines map() |

| TopN_Reducer | Defines reducer() |

The TopN_Driver class reads N (for Top-N) from a command line and sets it in the Hadoop’s Configuration object to be read by the map() function.

实现这个方法需要上面的类,其中“TopN_Driver”类可以从命令行读入“N”用来做相关的参数配置。然后是,跳过书上的运行示例,就从输入文件读入,然后执行并输出到输出文件这些。

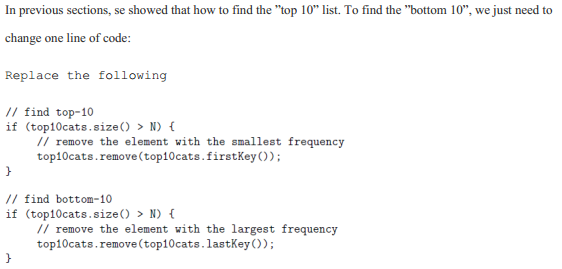

3.5 Bottom 10

3.6 Spark Implementation: Unique Keys

Spark provides StorageLevel class, which has flags for controlling

the storage of an RDD. Some of these flags are:

- MEMORY_ONLY (use only memory for RDDs)

- DISK_ONLY (use only hard disk for RDDs)

- MEMORY_AND_DISK (use combination of memory and disk for RDDs).

这里我们假设键是唯一的。Spark有着更高级的抽象和丰富的 API,编程相对容易,可以从HDFS或其他Hadoop支持的文件系统读取写入,而且在同样条件下运行得更快。Spark还提供存储级别上的类,就像上面引用的一样。

3.6.1 Introduction

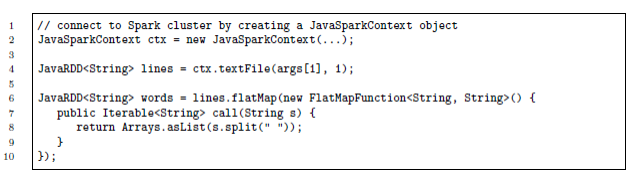

the following code snippet presents two RDD’s (lines and words):

The following table explains the code:

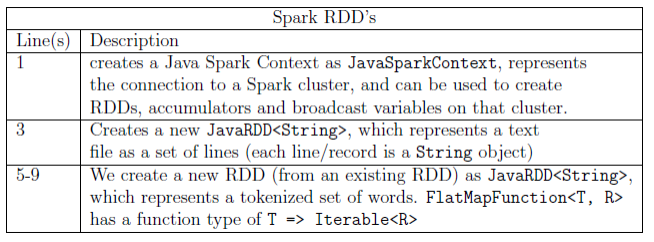

Spark is very powerfull in creating new RDD’s from existing ones. For example, below, we use lines to create a new RDD asJavaPairRDD<Integer, String>as pairs.

Each item inJavaPairRDD<String,Integer>represents aTuple2<String,Integer>, Here we assume that each input record has two tokens:<String> <,> <Integer>.

为了理解Spark,我们需要理解RDD(Resilient Distributed Dataset,弹性分布式数据集)的概念。RDD是Spark的基本抽象,代表一个不可改变,分割的元素集合,还可以被并行地操作。与同时处理多种不同类型的输入输出不同,你只需要处理RDD,因为RDD可以表示不同类型的输入输出。真的是很抽象的概念,不过出现的频率相对来说很高了。

3.6.2 What is an RDD?

In Spark, an RDD (Resilient Distributed Dataset) is the basic data abstraction. RDDs are used to represent a set of immutable objects in Spark. For example, to represent a set of Strings, we can

useJavaRDD<String>and to represent (key-as-string, value-as-integer) pairs, we can useJavaPairRDD<String,Integer>. RDDs enable MapReduce opertions (such as map and reduceByKey) to run in parallel (parallelism is achived by partitioning RDDs). Spark’s API enable us to implement custom RDDs.

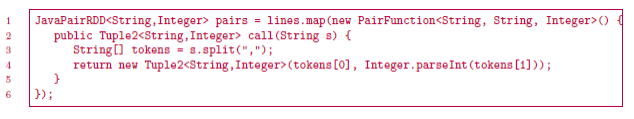

3.6.3 Spark's Function Classes

3.6.4 Spark Solution for Top-10 Pattern

Let’s assume that our input records will have the following

format:

and the goal is to find Top-10 list for a given input. First, we will partition input into segments (let’s say, we partition our input into 1000 mappers – each mapper will work on one segment of the partition independently):

The job of a reducer is almost similar to the mapper: it finds the top-10 from a given set of all top-10 generated by mappers. The reducer will get a collection ofSortedMap<Integer, String>(as an

input) and will create a single finalSortedMap<Integer, String>as an output.

Spark提供了基于Mapreduce模型的高级抽象,你甚至有可能用一个驱动程序(driver)就完成你处理大数据的任务(job)。这个例子用Spark来解决的算法思路和上面的一样,把大数据分割成块,分给很多mapper找出每块的“Top-10”,把全部的“Top-10”传给一个reducer来找出整个数据规模上的“Top-10”。这里约定了一下输入的格式,mapper及reducer需要实现的功能。书上还特别提到,mapper里的setup()和cleanup()在Spark里并没有相关的支持,我们可以用Spark的JavaPairRDD.mapFunctions()来实现。

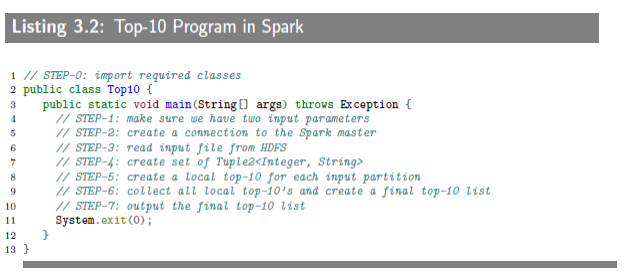

3.6.5 Complete Spark Solution for Top-10 Pattern

只用一个Java类来实现这个算法,总的步骤如上,每步具体的代码如下照例蛮贴蛮看。

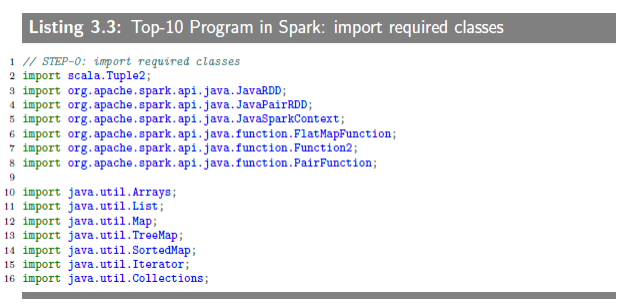

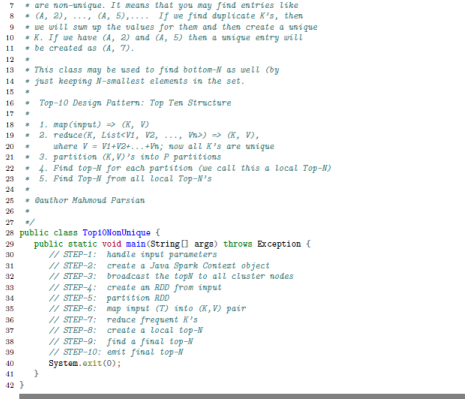

3.6.5.1 Top10 class: STEP-0

就引入各种需要的包。

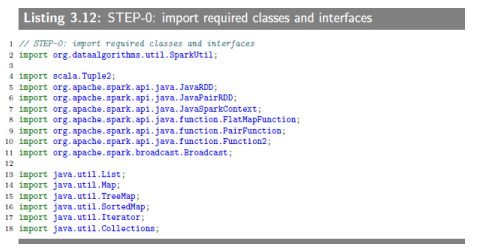

3.6.5.2 Top10 class: STEP-1

设置两个参数:Spark-Master和HDFS Input File,示例如上。

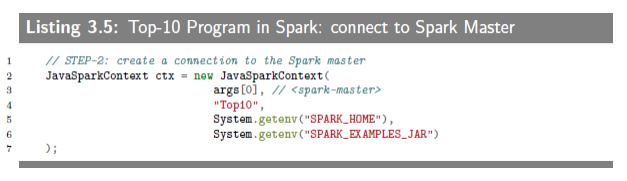

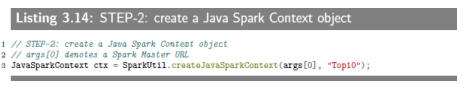

3.6.5.3 Top10 class: STEP-2

创建对象“JavaSparkContext”来建立和Spark-Master之间的连接,我们还需要用它创建其它的RDD.

3.6.5.4 Top10 class: STEP-3

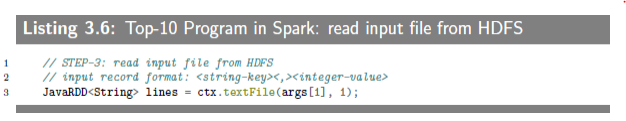

从HDFS读入文件,并保存在新创建的RDDJavaRDD<string>中。

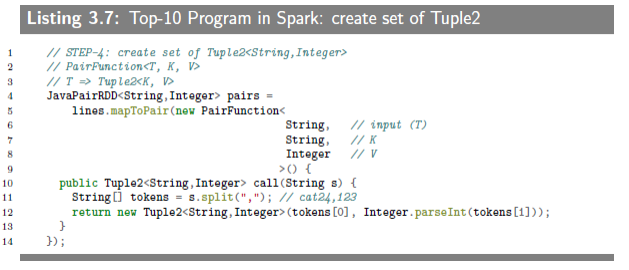

3.6.5.5 Top10 class: STEP-4

用JavaRDD<string>创建JavaPairRDD<Integer, String>。

3.6.5.6 Top10 class: STEP-5

找出本地的“Top-10”。

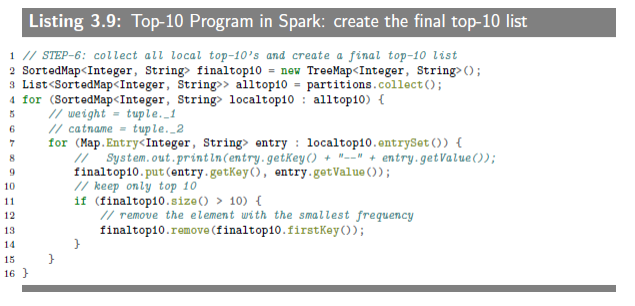

3.6.5.7 Top10 class: STEP-6

获取所有的local top-10 lists并计算最终的“Top-10”。

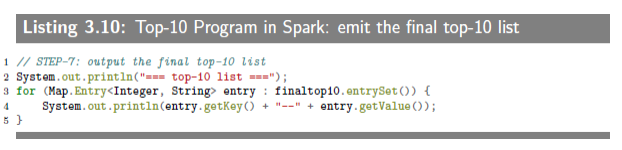

3.6.5.8 Top10 class: STEP-7

打印最终结果。

照例跳过“3.6.6-7”的运行示例。

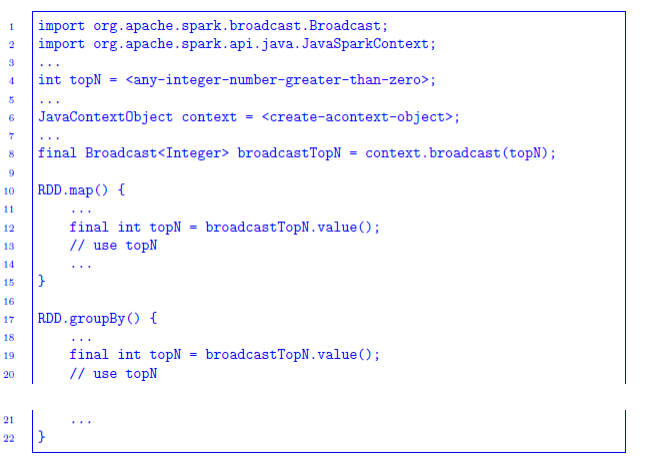

3.7 What if for Top-N

在Spark里,我们可以让N变成一个全球性的共享数据,这样任何集群的节点都可以获得N,从而扩展到可以解决“Top-N”问题。

3.7.1 Shared Data Structures Definition and Usage

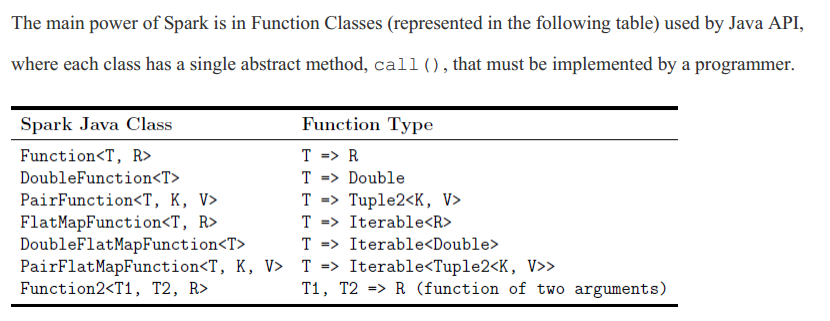

- Lines 1-2: import required classes. The Broadcast class enable us to define global shared data structures and then read them from any cluster node within mappers, reducers, and transformers. The general format to define a shared data structure of type T is:

T t = <create-data-structure-of-type-T>; Broadcast<T> broadcastT = context.broadcast(t);After a data structure (broadcastT is broadcasted, then it may be read from any cluster node within mappers, reducers, and transformers.

- Line 4: define your top-N as top-10, top-20, or top-100

- Line 6: create an instance of JavaContextObject

- Line 8: define a global shared data structure for topN (which can be any value)

- Line 12, 19: read and use a global shared data structure for topN (from any cluster node). The general format to read a shared data structure of type T is:

T t = broadcastT.value();

用broadcast类来定义全球性共享的数据结构,等该数据结构被广播之后,我们就可以在任意的集群节点访问到该数据结构。

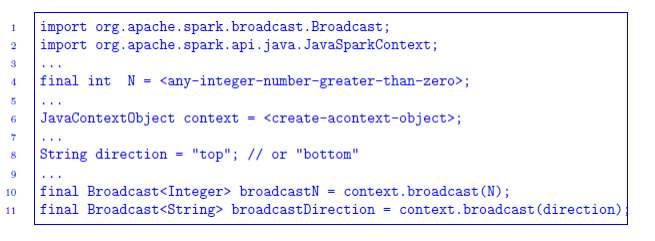

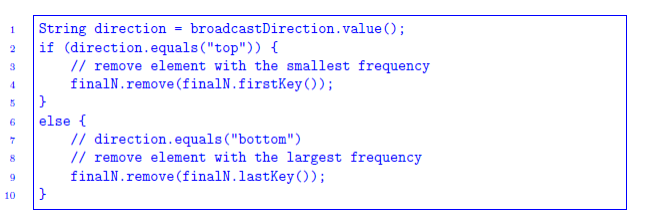

3.8 What if for Bottom-N

Now, based on the value of broadcastDirection, we will either remove the first entry (when direction is equal to "top") or last entry (when direction is equal to "bottom"): this has to be done consistently to all code.

3.9 Spark Implementation: Non-Unique Keys

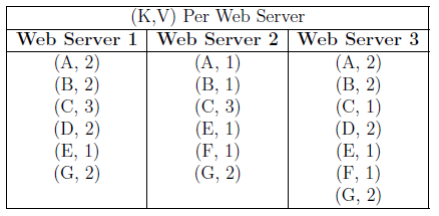

To further understand the non-unique keys concept, let’s assume that we have only 7 URLs : {A, B, C, D, E, F, G} and the following are tallies of URLs generated per web server:

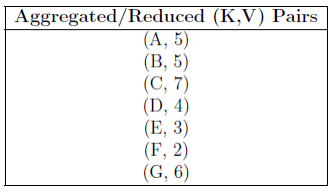

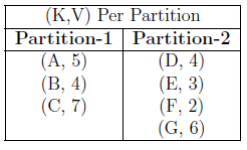

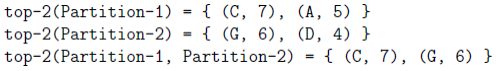

Let’s assume that we want to get top-2 of all visited URLs. If we get local top-2 per each web server and then get the top-2 of all three local top-2’s, the result will not be correct. The reason is that URLs are not unique among all web servers. To make a correct solution, first we create unique URLs from all input and then we partition unique URLs into M > 0 partitions. Next we get the local top-2 per partition and finally we perform final top-2 amng all local top-2’s. For our example, the generated unique URLs will be:

Now assume that we partition all unique URLs into two partitions:

To find Top-2 of all data:

So the main point is that before finding Top-N of any set of (K,V) pairs, we have to make sure that all K’s are unique.

之前的算法默认键是唯一的,现在讨论不唯一的情况。为了说明这二者的差别,书上举了上面一个例子。设想有一个网站,仍然是统计访问次数最多的URL,但是有三个服务分别记录着访问键值对。从上面的引用可以看出,为了得到正确的结果,我们需要先把键变成唯一的。

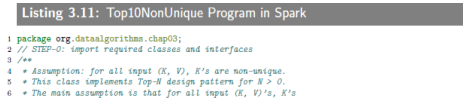

3.9.1 Complete Spark Solution for Top-10 Partten

实际上这是一个通用的方法,有一步是用来确保键是唯一的。

3.9.1.1 Input

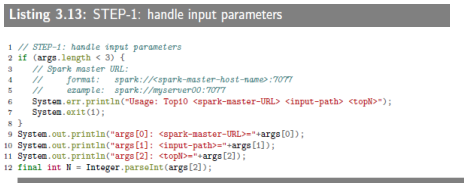

3.9.1.2 STEP-1: handle input parameters

3.9.1.3 STEP-2: create a Java Spark Context object

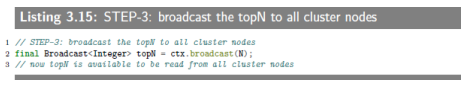

3.9.1.4 STEP-3: broadcast the topN to all cluster nodes

To broadcast or share objects and data structures among all cluster nodes, you may use Spark’s Broadcast class.

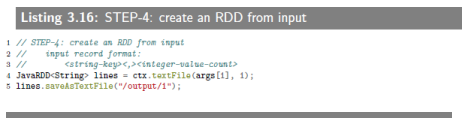

3.9.1.5 STEP-4: create an RDD from input

Input data is read from HDFS and the first RDD is created.

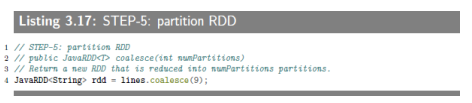

3.9.1.6 STEP-5: partition RDD

There is no magic bullet formula for calculating the number of partitions. This does depend on the number of cluster nodes, the

number of cores per server, and the size of RAM available. My experience indicate that you need to set this by trial and experience.

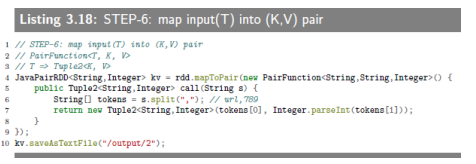

3.9.1.7 STEP-6: map input(T) into (K, V) pair

This step does basic mapping: it converts every input record into a (K,V) pair, where K is a "key such as URL" and V is a "value such as count". This step will generate duplicate keys.

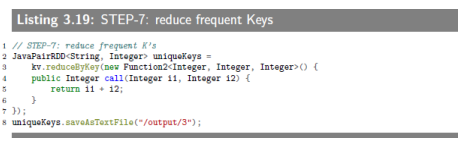

3.9.1.8 STEP-7: reduce frequent Keys

Previos step (STEP-6) generated duplicate keys. This step creates unique keys and aggregates the associated values.

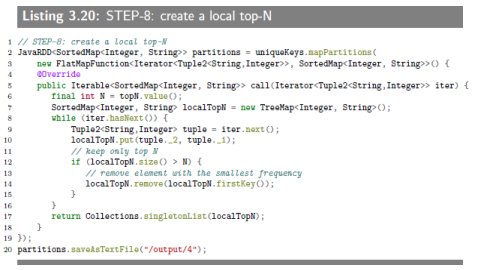

3.9.1.9 STEP-8: create a local top-N

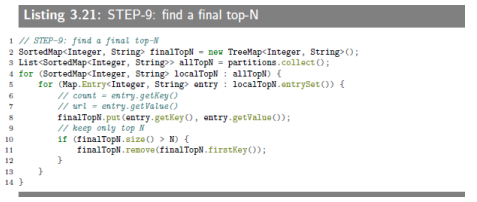

3.9.1.10 STEP-9: find a final top-N

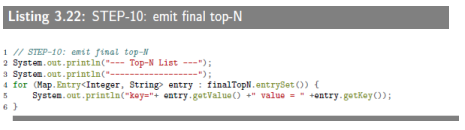

3.9.1.11 STEP-10: emit final top-N

跳过运行脚本和运行示例。

稍微总结一下这一章。知道了“Top 10 List”到底是什么问题,就是找出一堆数据里面某些属性的Top 10这样。因为是大数据,而且大多情况要处理非结构化数据,所以关系型数据库并不适用于解决此类问题。至于Hadoop和Spark解决这个问题的算法,思路也很简单,把大数据分块交给各个Mapper来找出本地的Top-10,最后传给一个reducer整合出最终的Top-10。因为每个Mapper传的只是个“Top10 list”,最终那个reducer处理数据量并不会很大,所以不会有性能瓶颈的问题。还谈到了扩展成“Top-N”及“Bottom-N”,以及键是否唯一的问题。关于具体的实现,书上也是讲了很多,蛮贴蛮看的代码让博文看起来特别长,现在是打不出来的。总的来说,还是只是理解了一些概念,问题不大,继续往下。