Lab 3: OpenFlow Protocol Analysis in Practice


I. Objectives

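- Be able to use Wireshark to capture the OpenFlow protocol message exchange;

- Be able to use packet analysis tools to analyze and explain how OpenFlow packets are exchanged and the mechanism behind it.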

II. Environment

- Download the virtualization software Oracle VirtualBox;

- Install Ubuntu 20.04 Desktop amd64 in the virtual machine, with a full installation of Mininet;

III. Requirements

(I) Basic Requirements

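1. Build the topology shown in the figure, configure the IP addresses, and establish IP connectivity between the hosts. Use packet capture software to capture the packets exchanged between the controller and the switches.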

| Host | IP address |
|---|---|
| h1 | 192.168.0.101/24 |
| h2 | 192.168.0.102/24 |
| h3 | 192.168.0.103/24 |
| h4 | 192.168.0.104/24 |
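All four addresses share the 192.168.0.0/24 network. That is why the hosts can reach each other through s1 and s2 without a router (the topology script passes `defaultRoute=None`). The sketch below is a quick sanity check using only Python's standard `ipaddress` module. The helper names `networks` and `same_subnet` are illustrative, not part of Mininet.

```python
import ipaddress

# Host addresses from the table (interface notation: address/prefix length).
HOSTS = {
    "h1": "192.168.0.101/24",
    "h2": "192.168.0.102/24",
    "h3": "192.168.0.103/24",
    "h4": "192.168.0.104/24",
}


def networks(hosts):
    """Return the set of IP networks that the host interfaces belong to."""
    return {ipaddress.ip_interface(addr).network for addr in hosts.values()}


def same_subnet(hosts):
    """True if every interface is in one network, so no router is needed."""
    return len(networks(hosts)) == 1


print(same_subnet(HOSTS))  # True
```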

Create a file named topo.py with the following code:

```python
from mininet.net import Mininet
from mininet.node import Controller, RemoteController, OVSController
from mininet.node import CPULimitedHost, Host, Node
from mininet.node import OVSKernelSwitch, UserSwitch
from mininet.node import IVSSwitch
from mininet.cli import CLI
from mininet.log import setLogLevel, info
from mininet.link import TCLink, Intf
from subprocess import call


def myNetwork():

    net = Mininet( topo=None,
                   build=False,
                   ipBase='192.168.0.0/24')

    info( '*** Adding controller\n' )
    c0=net.addController(name='c0',
                      controller=Controller,
                      protocol='tcp',
                      port=6633)

    info( '*** Add switches\n')
    s1 = net.addSwitch('s1', cls=OVSKernelSwitch)
    s2 = net.addSwitch('s2', cls=OVSKernelSwitch)

    info( '*** Add hosts\n')
    h1 = net.addHost('h1', cls=Host, ip='192.168.0.101/24', defaultRoute=None)
    h2 = net.addHost('h2', cls=Host, ip='192.168.0.102/24', defaultRoute=None)
    h3 = net.addHost('h3', cls=Host, ip='192.168.0.103/24', defaultRoute=None)
    h4 = net.addHost('h4', cls=Host, ip='192.168.0.104/24', defaultRoute=None)

    info( '*** Add links\n')
    net.addLink(h1, s1)
    net.addLink(s1, s2)
    net.addLink(s2, h2)
    net.addLink(s2, h4)
    net.addLink(s1, h3)

    info( '*** Starting network\n')
    net.build()
    info( '*** Starting controllers\n')
    for controller in net.controllers:
        controller.start()

    info( '*** Starting switches\n')
    net.get('s1').start([c0])
    net.get('s2').start([c0])

    info( '*** Post configure switches and hosts\n')

    CLI(net)
    net.stop()


if __name__ == '__main__':
    setLogLevel( 'info' )
    myNetwork()
```

Save the exported topology file in the directory /home/<username>/<student ID>/lab3.

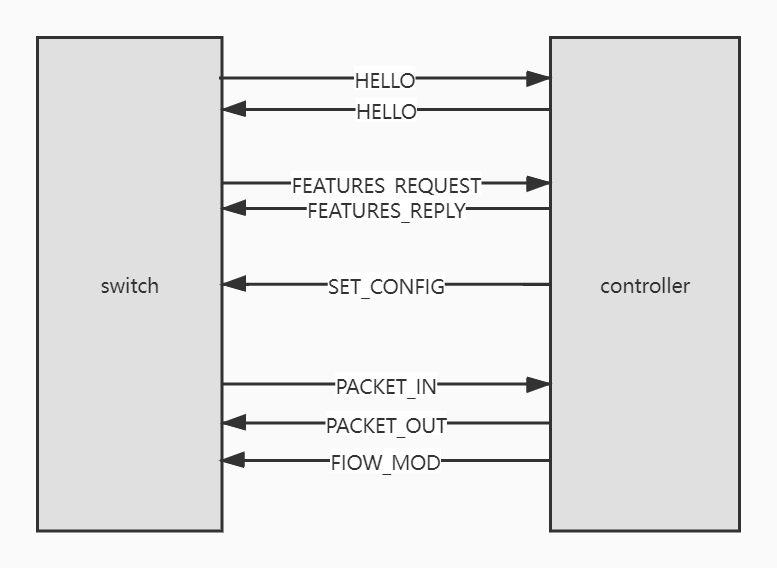

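2. Examine the capture results, analyze the OpenFlow message exchange between the switch and the controller, and draw the corresponding interaction diagram or flowchart.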

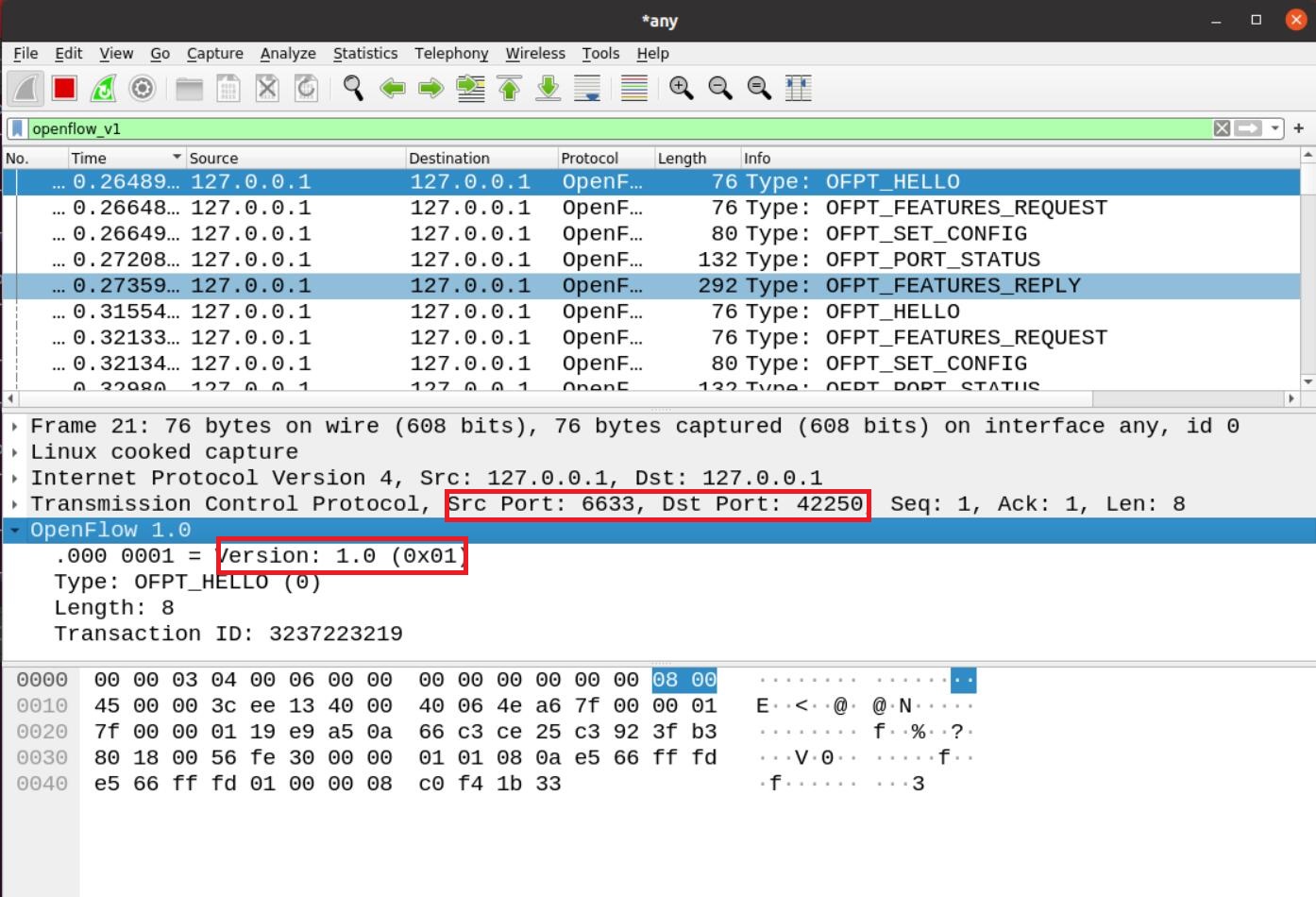

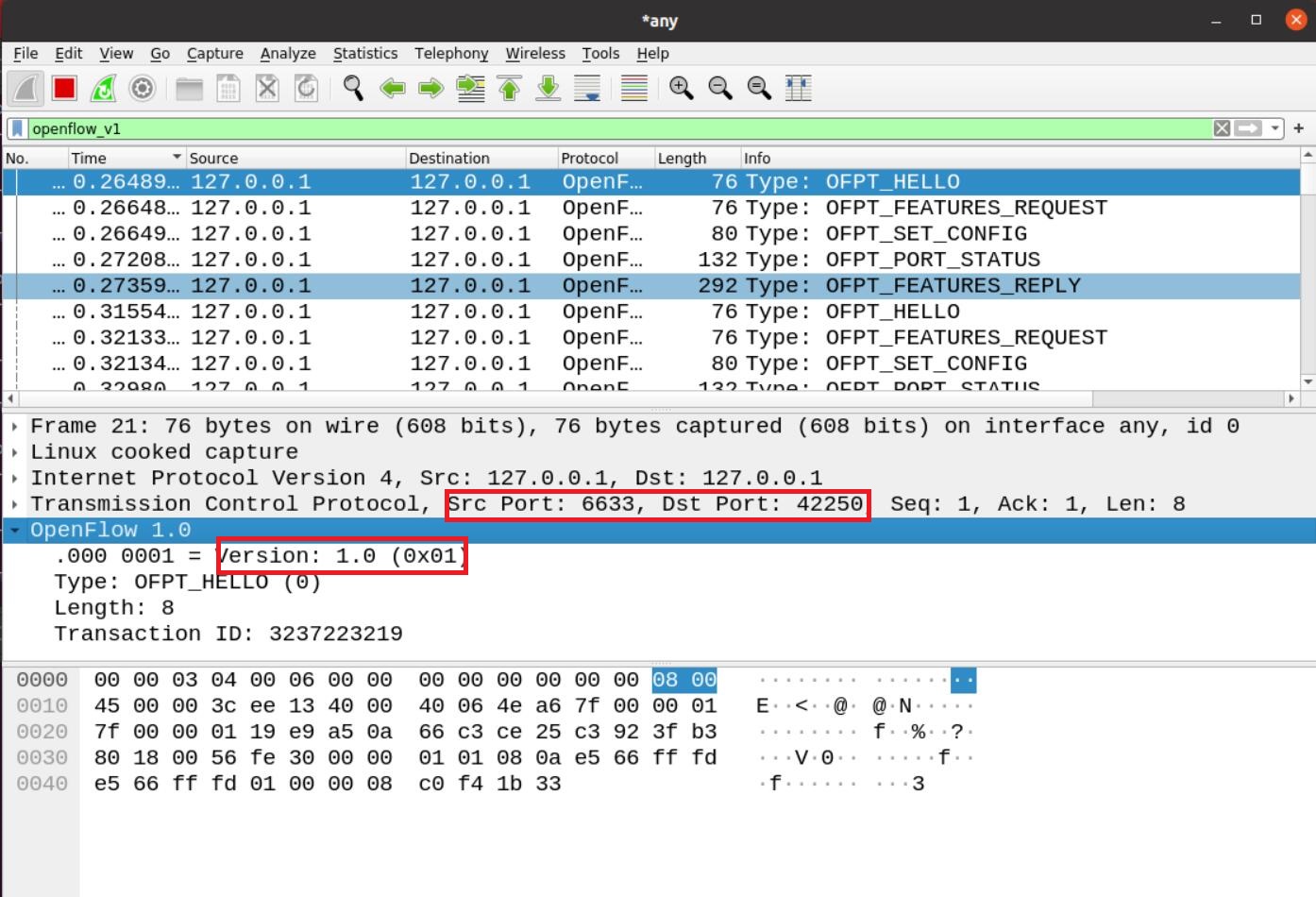

(1) Hello

- Controller port 6633 ("I support at most OpenFlow 1.0") ---> switch port 42250

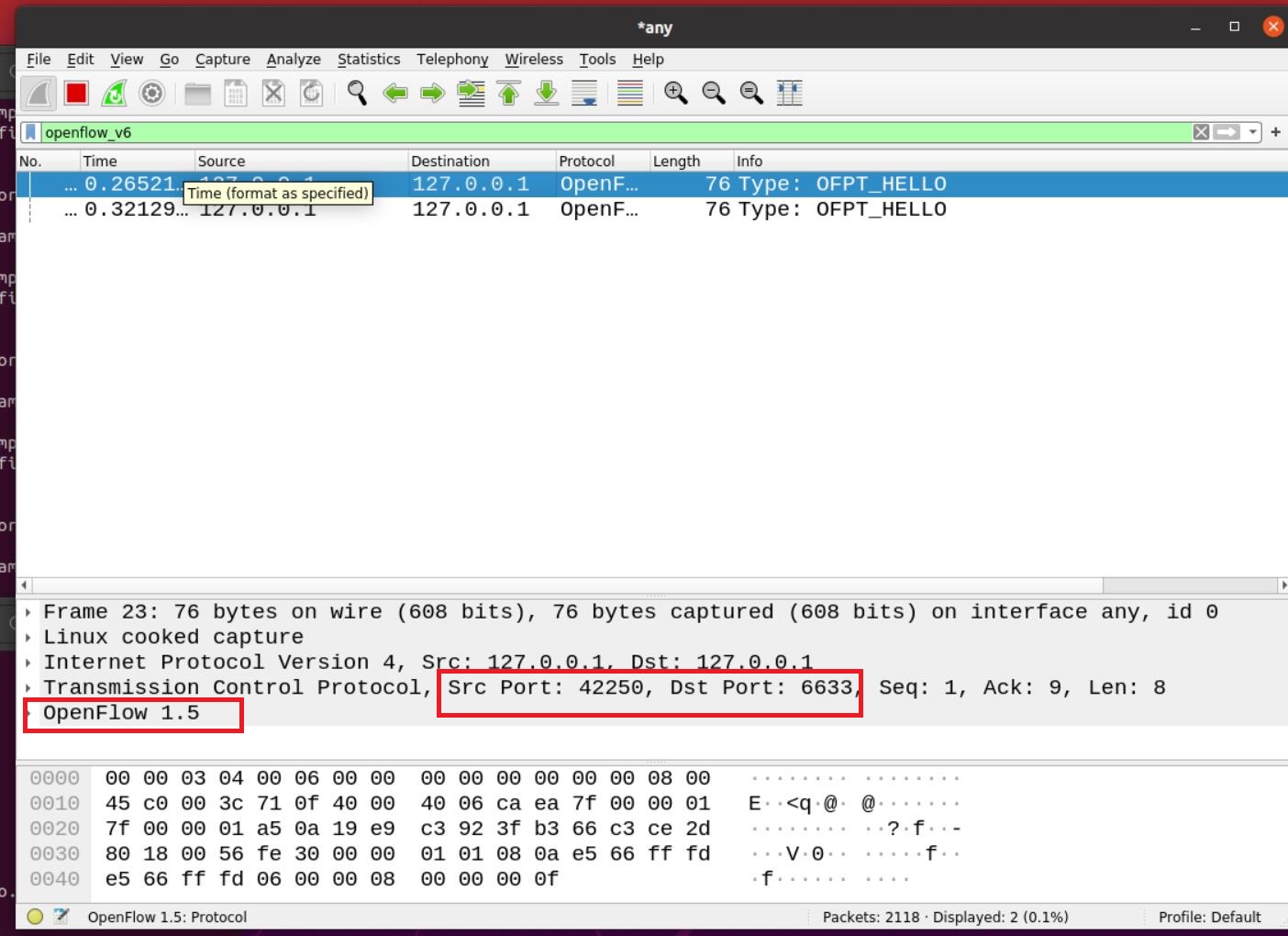

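- Switch port 42250 ("I support at most OpenFlow 1.5") ---> controller port 6633. Both ends then use the lower of the two advertised versions, OpenFlow 1.0.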

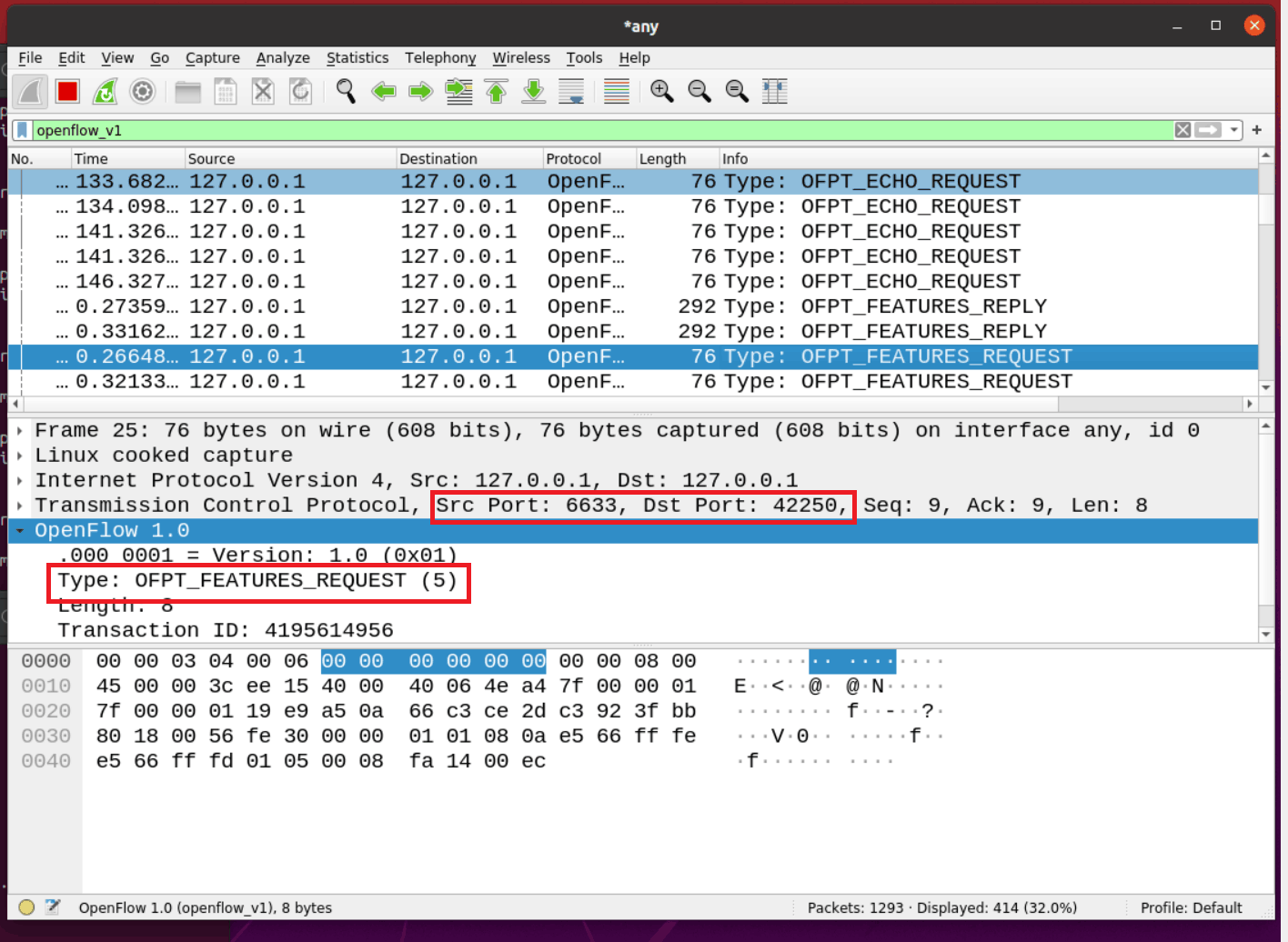

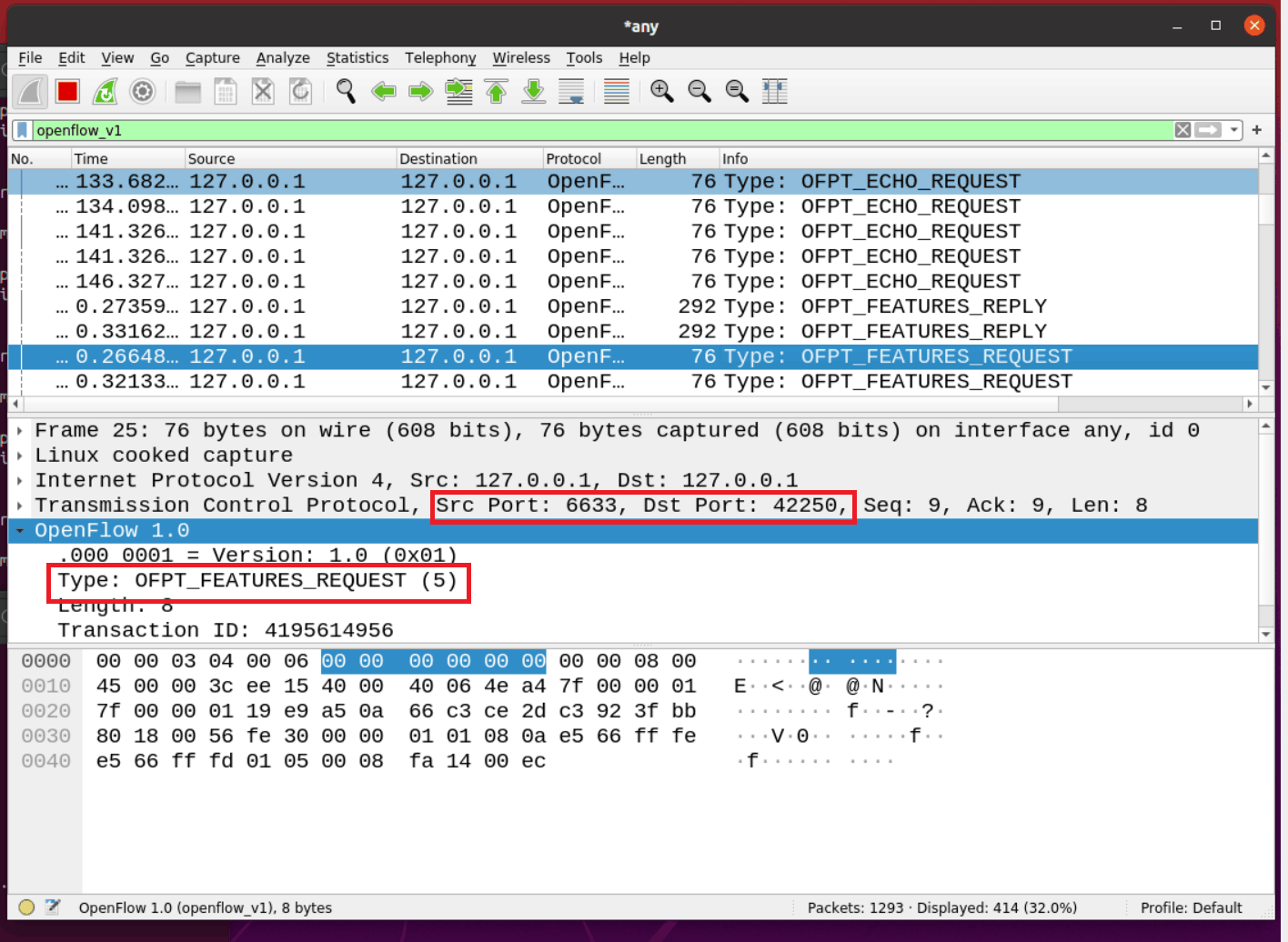
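The version rule behind these two Hellos can be sketched with Python's standard `struct` module. Each Hello's 8-byte header carries the highest version its sender supports, and the connection uses the smaller of the two. The byte strings below are simplified reconstructions, not bytes copied from the capture. They contain only the header, ignoring the optional version-bitmap element that OpenFlow 1.3.1+ Hellos may carry, and the xid values are arbitrary.

```python
import struct

# ofp_header: version, type, length, xid in network byte order (8 bytes).
OFP_HEADER = struct.Struct("!BBHI")
OFPT_HELLO = 0

# Wire version byte -> OpenFlow release.
WIRE_VERSIONS = {0x01: "1.0", 0x02: "1.1", 0x03: "1.2",
                 0x04: "1.3", 0x05: "1.4", 0x06: "1.5"}


def parse_hello(data):
    """Return the version byte advertised by an OpenFlow Hello."""
    version, msg_type, _, _ = OFP_HEADER.unpack_from(data)
    if msg_type != OFPT_HELLO:
        raise ValueError("not a Hello message")
    return version


def negotiate(local_hello, peer_hello):
    """Both sides settle on the lower of the two advertised versions."""
    return min(parse_hello(local_hello), parse_hello(peer_hello))


# Simplified, header-only Hellos (xid values are arbitrary examples).
controller_hello = OFP_HEADER.pack(0x01, OFPT_HELLO, 8, 1)
switch_hello = OFP_HEADER.pack(0x06, OFPT_HELLO, 8, 2)

agreed = negotiate(controller_hello, switch_hello)
print(WIRE_VERSIONS[agreed])  # 1.0
```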

(2) Features Request

- Controller port 6633 ("I need your feature information") ---> switch port 42250

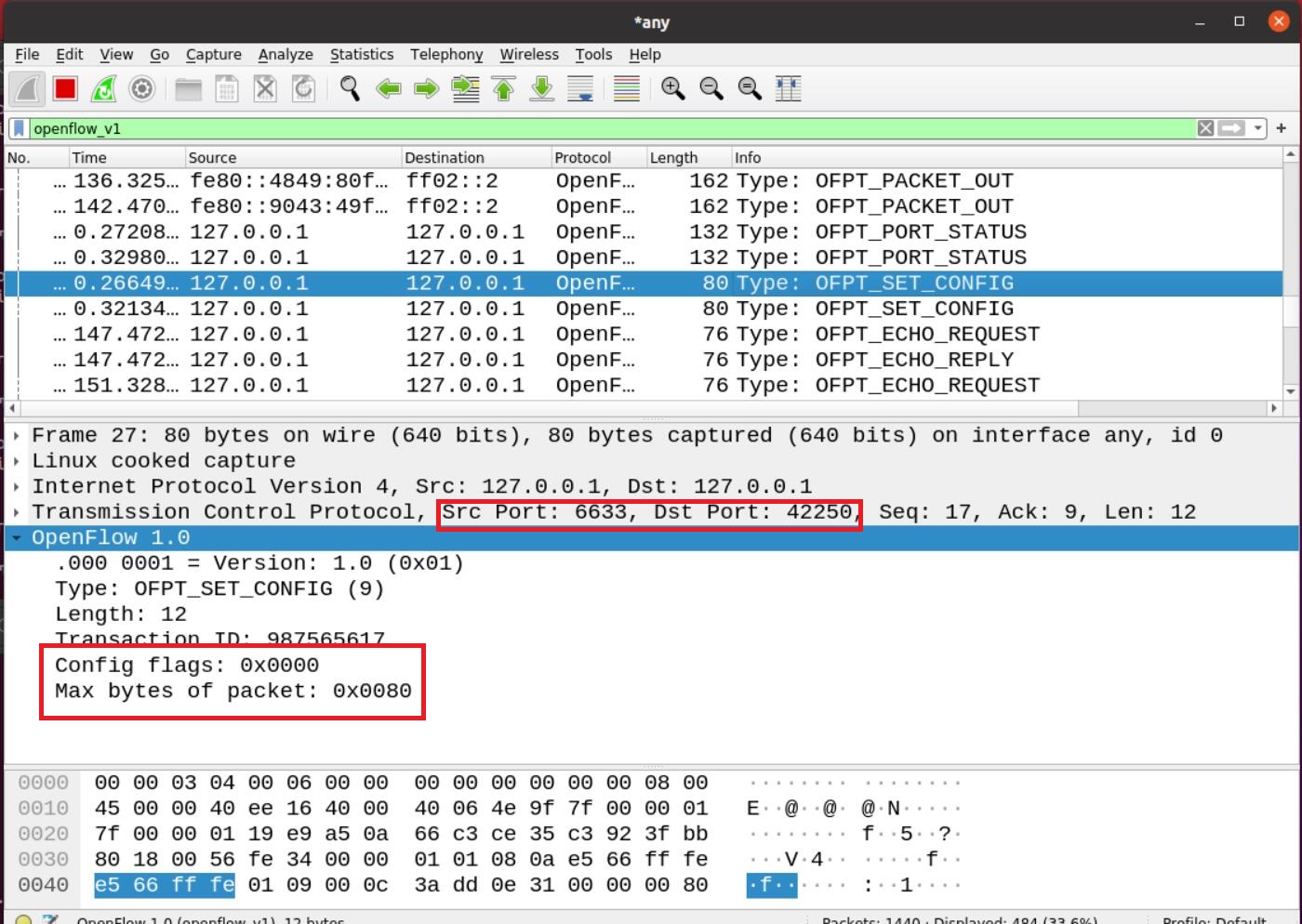

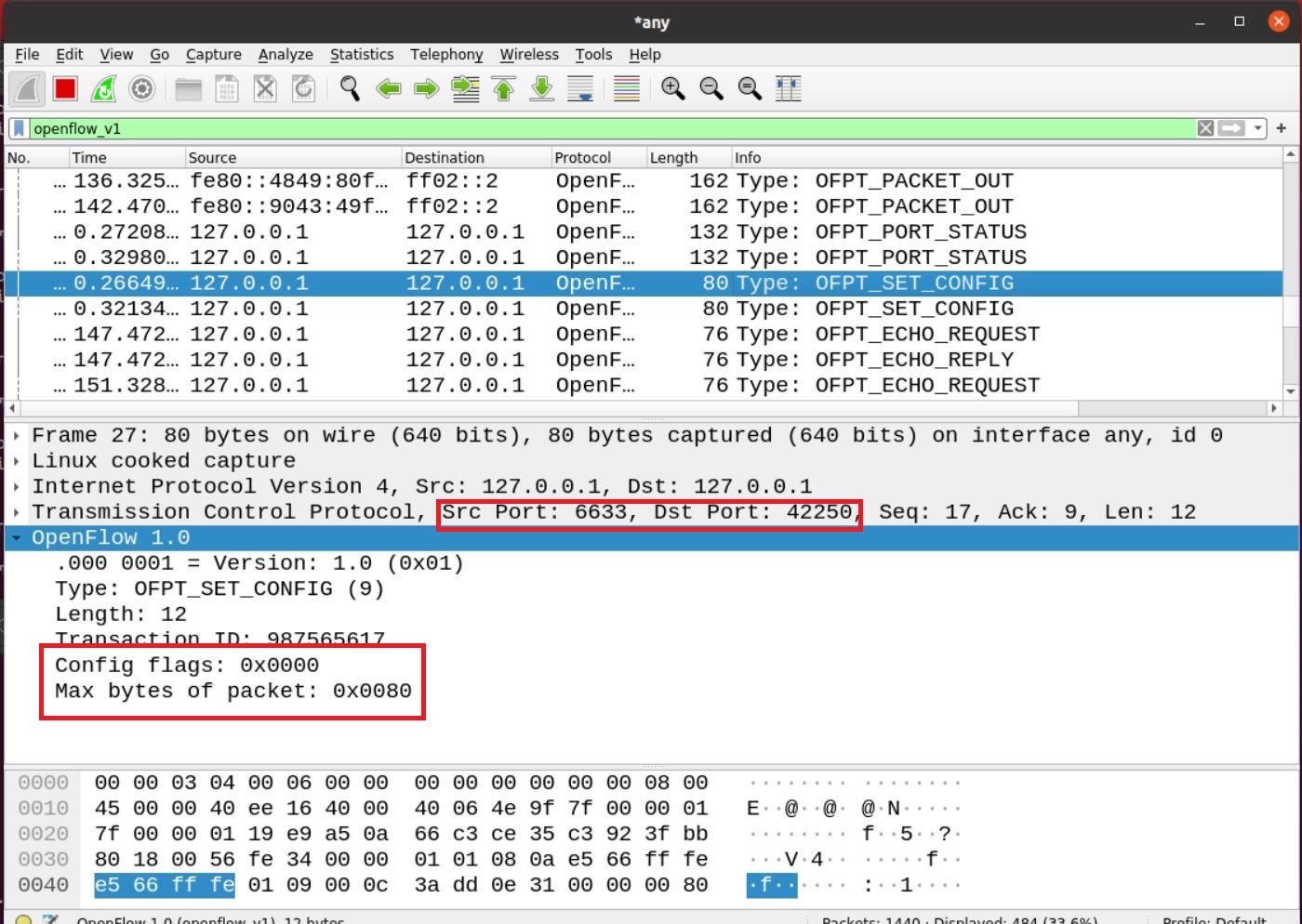

(3) OFPT_SET_CONFIG

- Controller port 6633 ---> switch port 42250

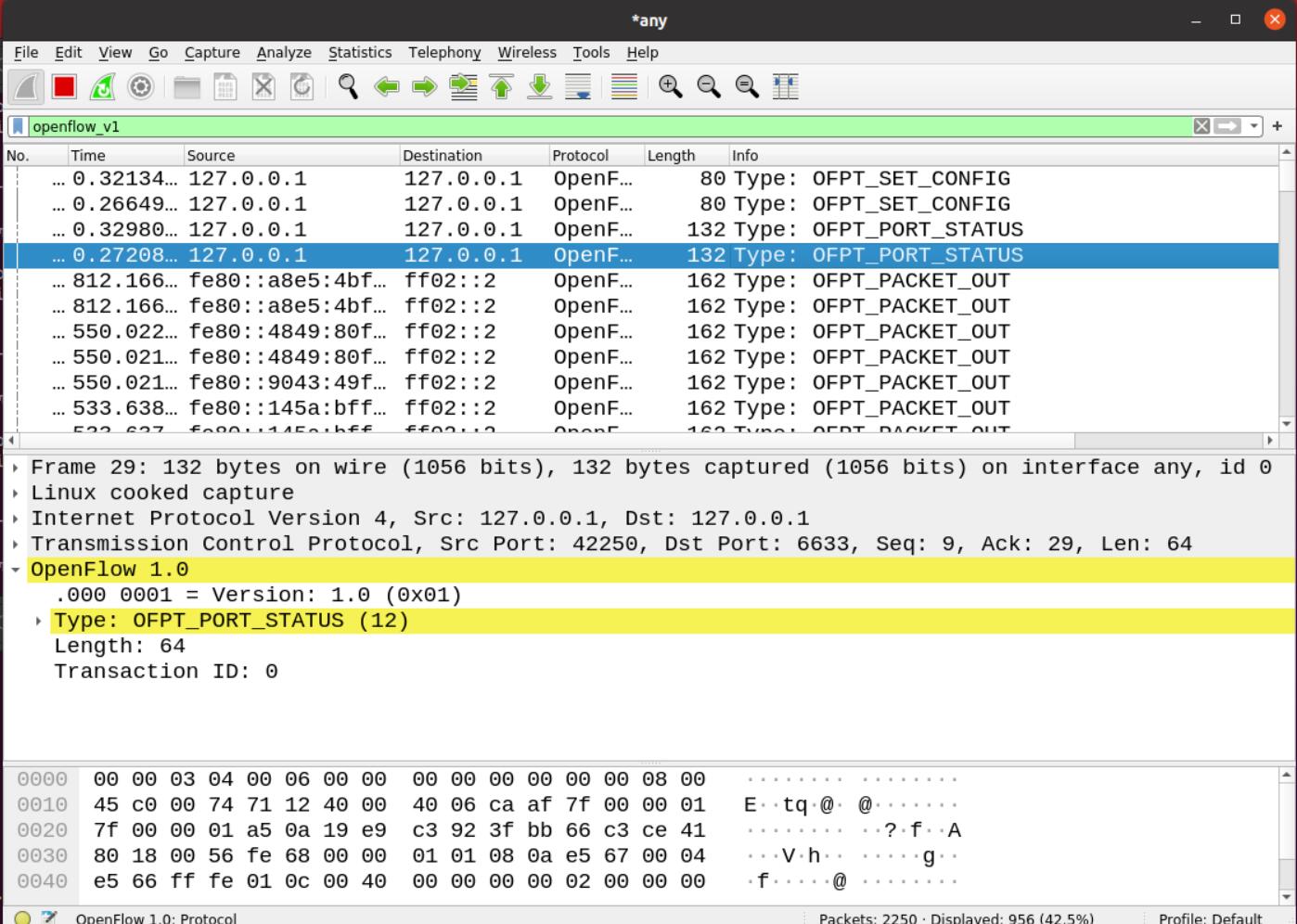

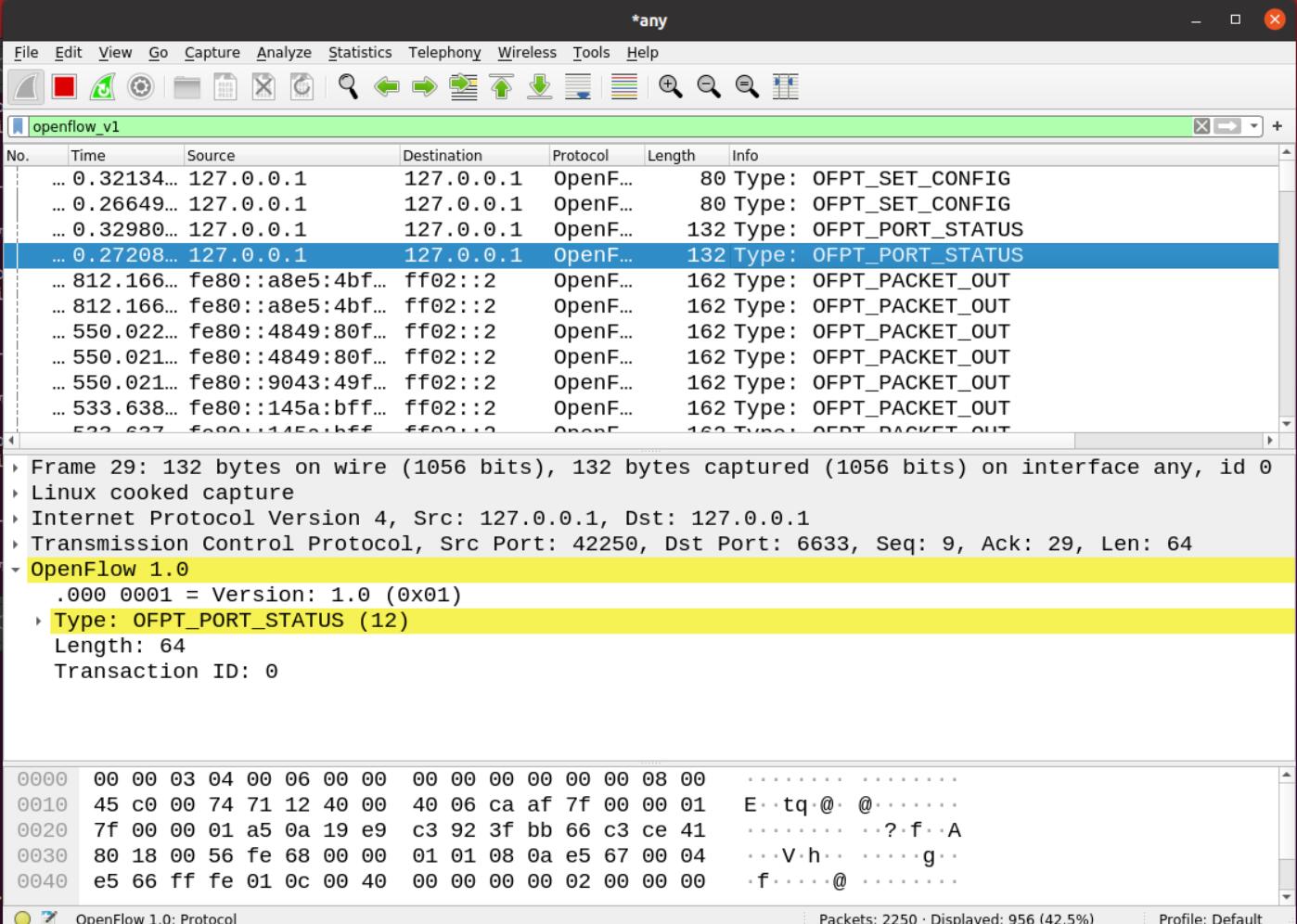

(4) OFPT_PORT_STATUS

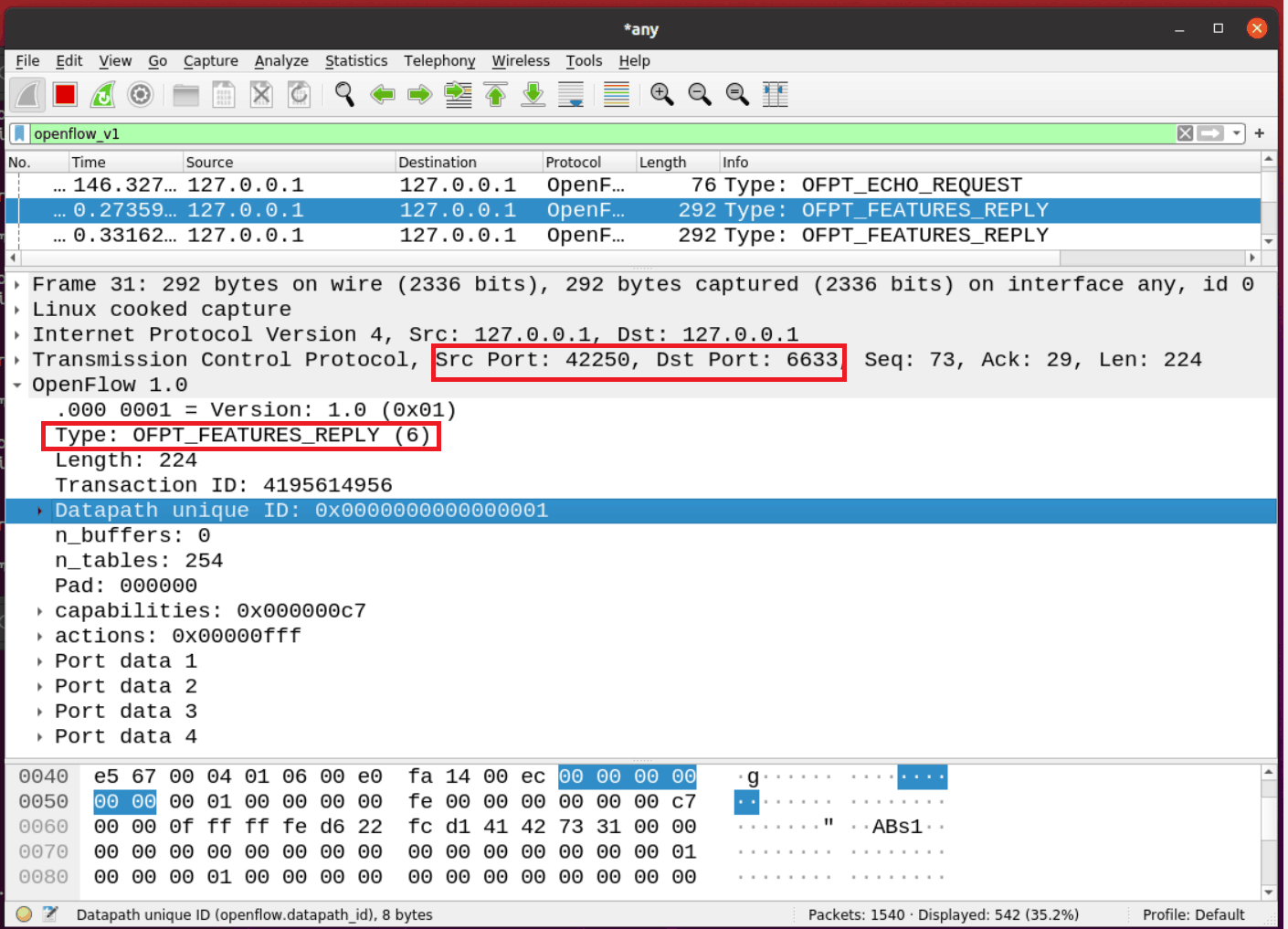

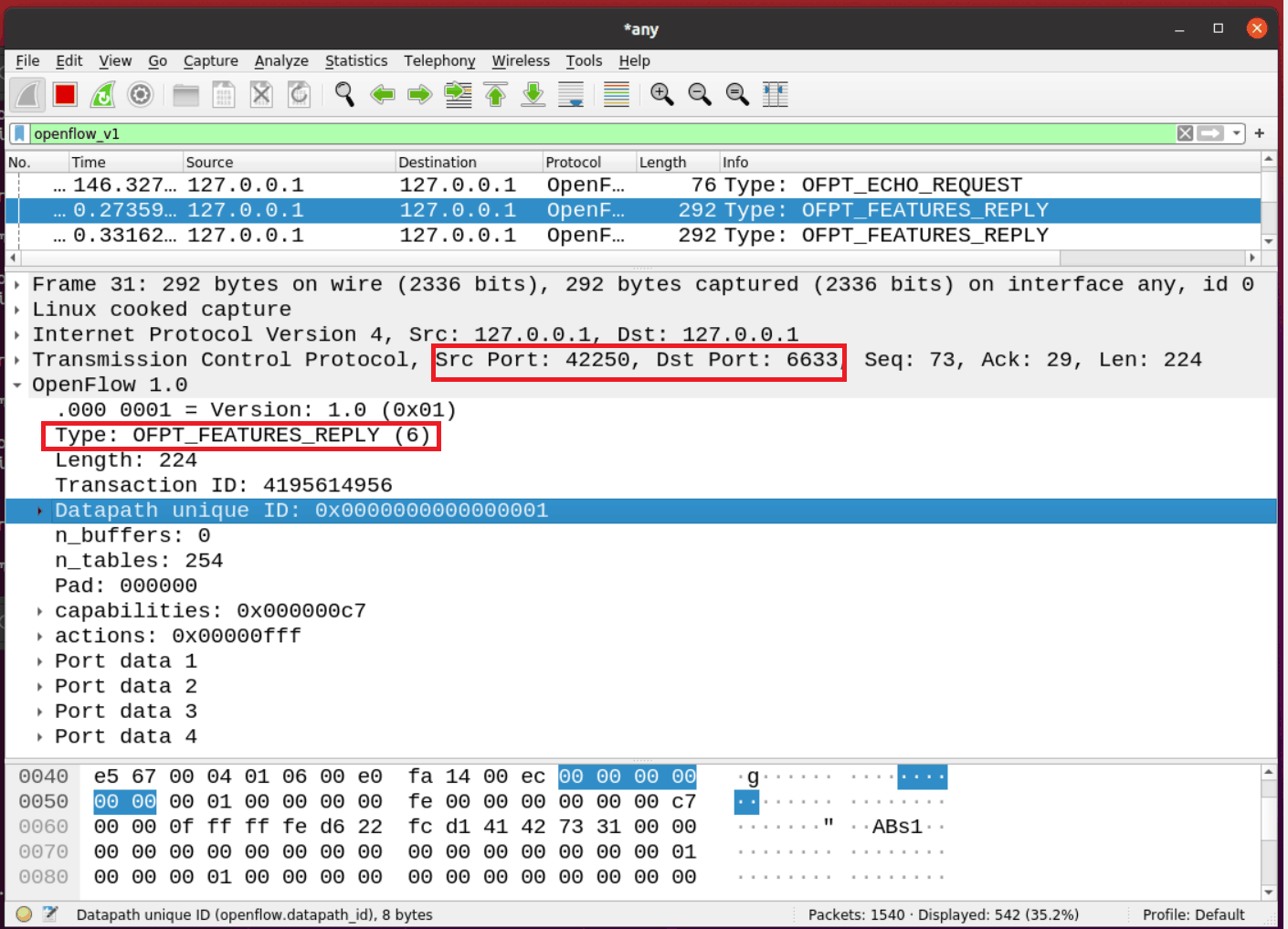

(5) Features Reply

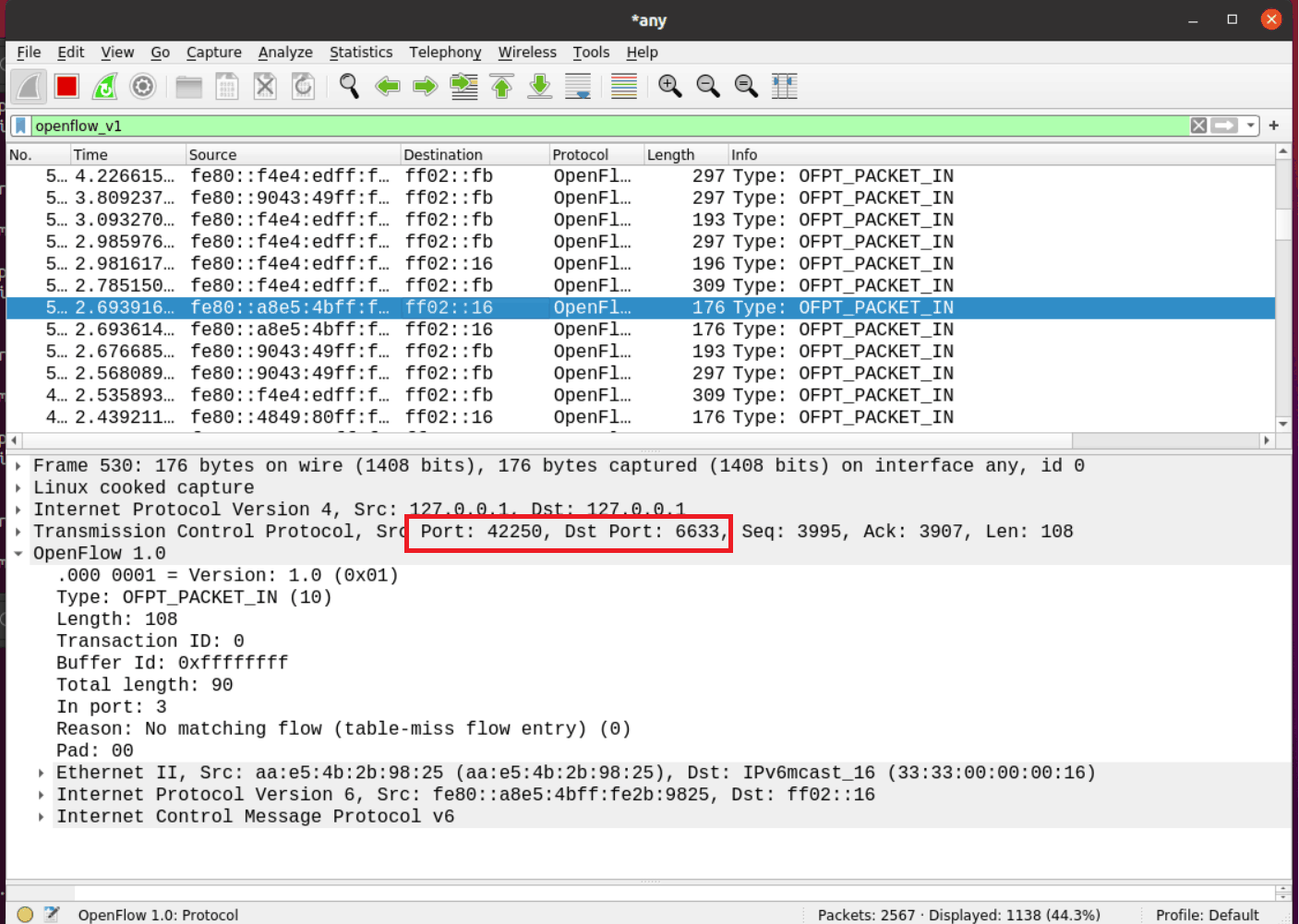

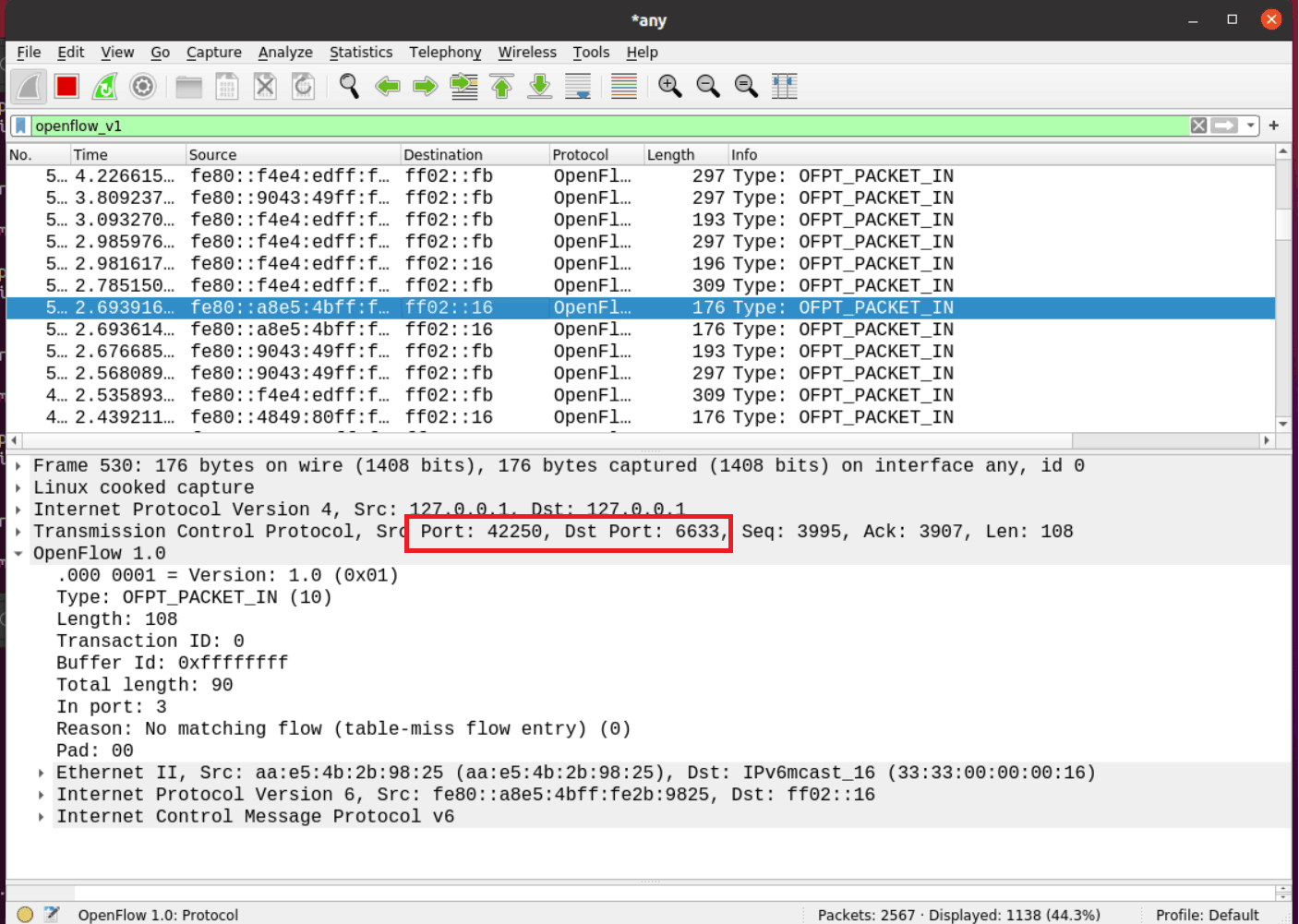

(6) Packet_in

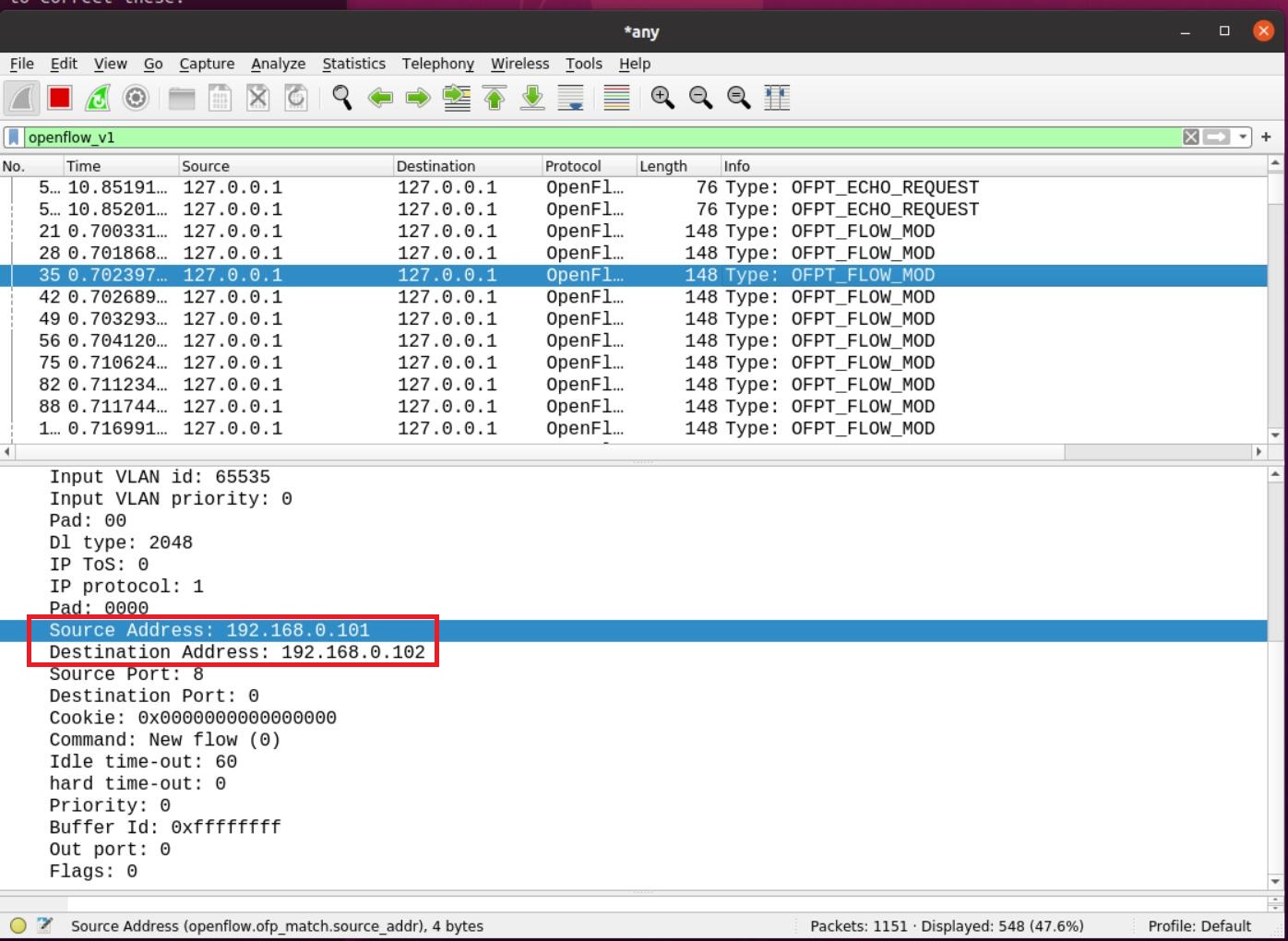

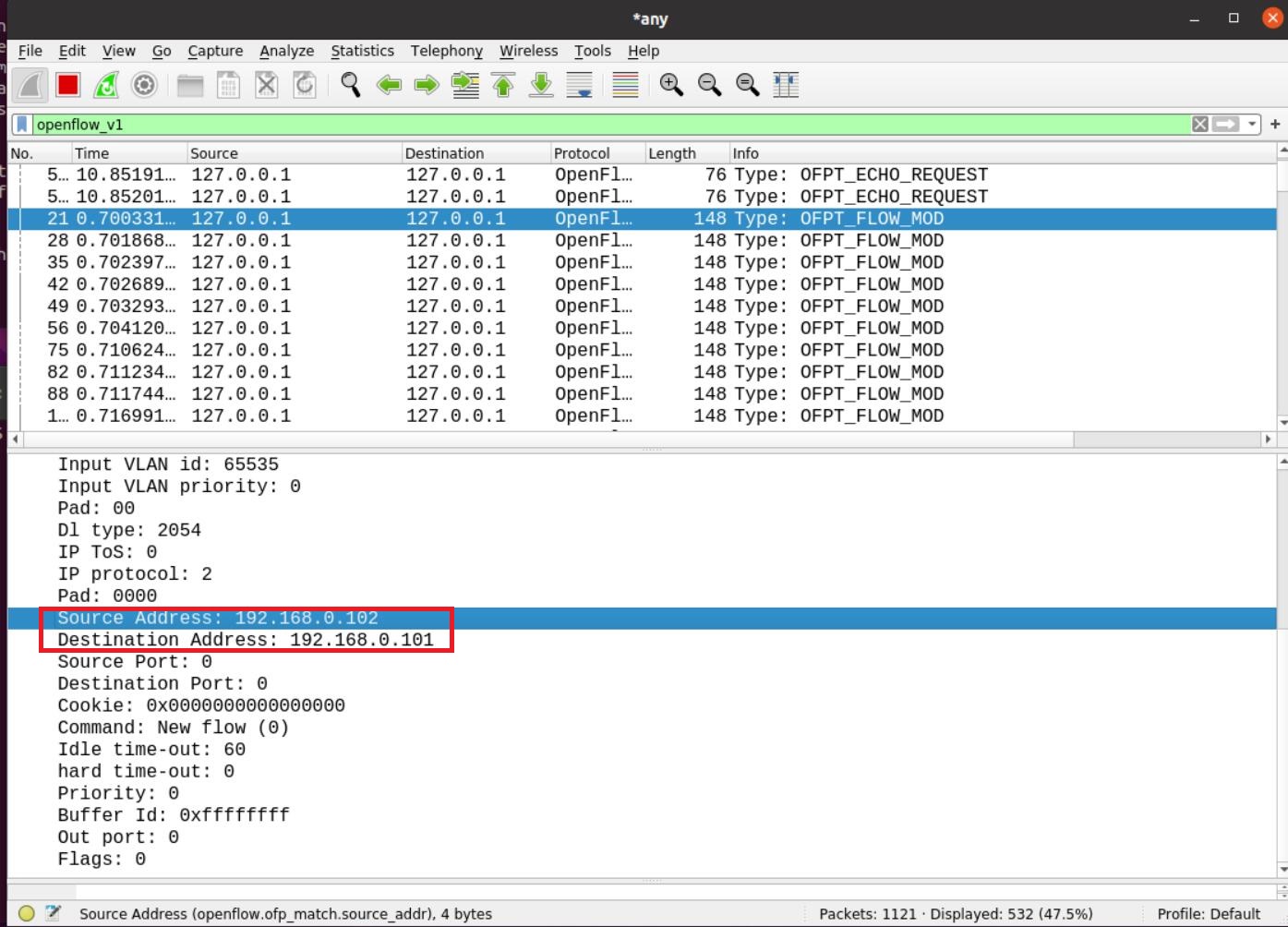

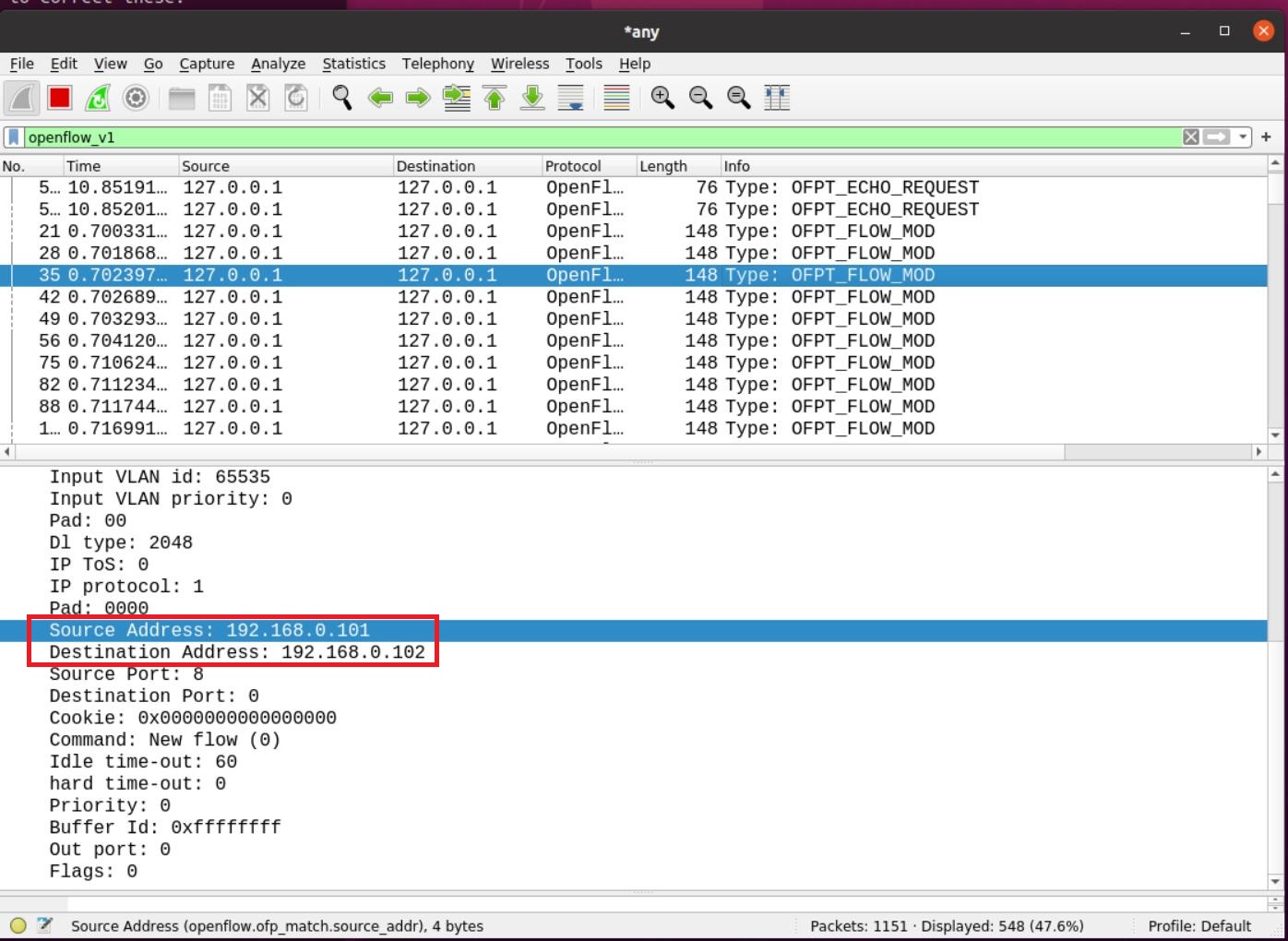

(7) Flow_mod

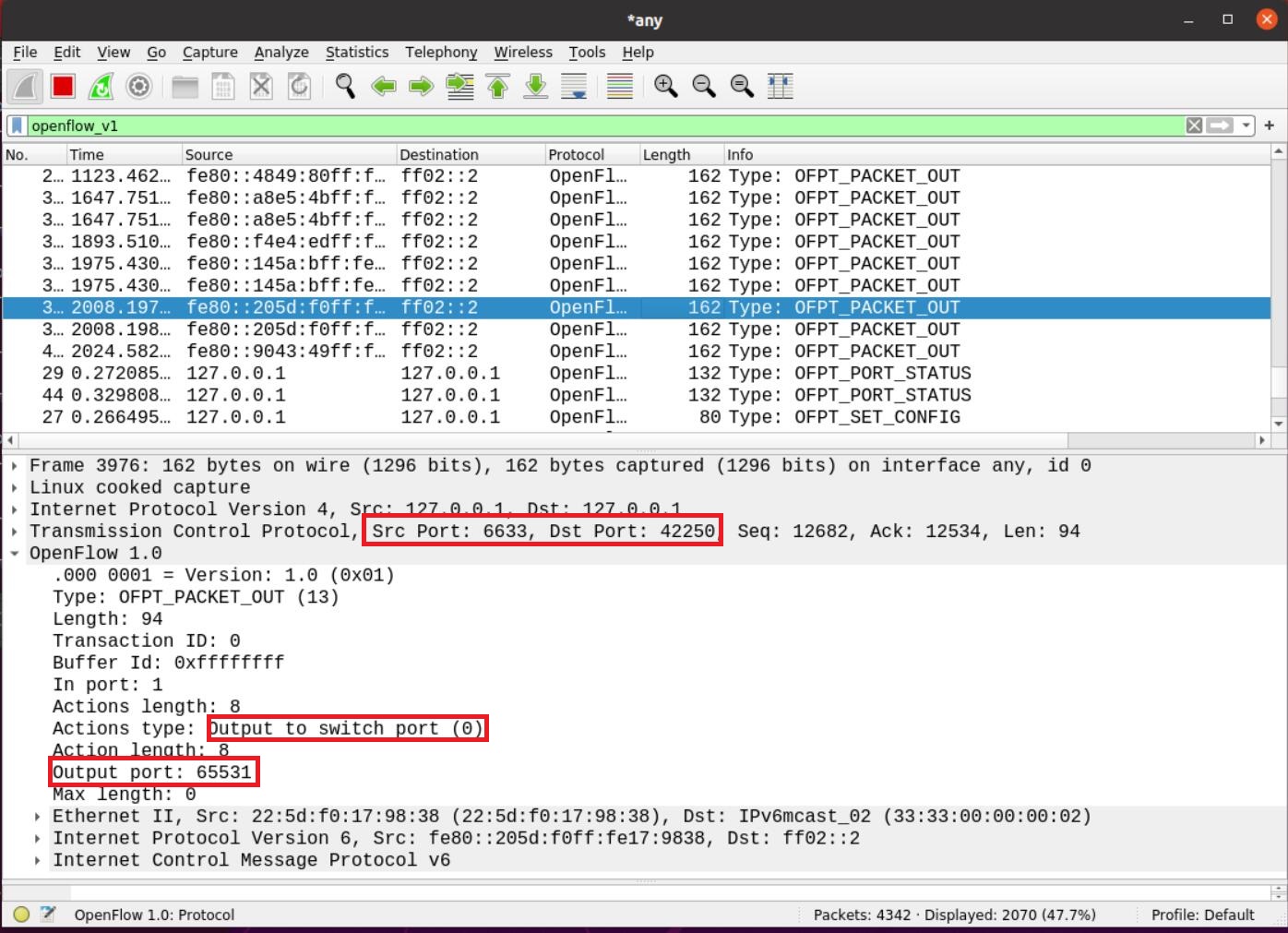

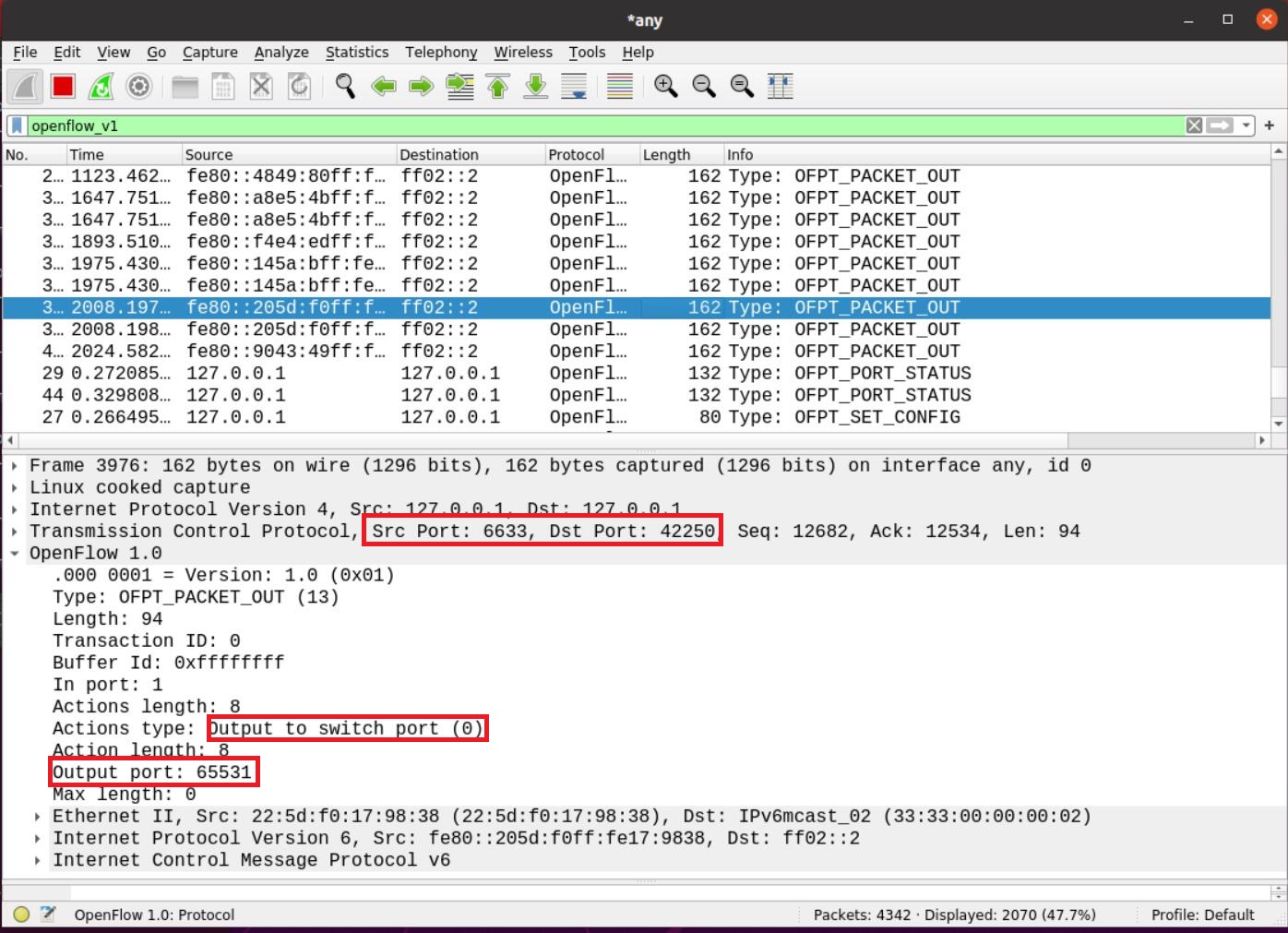

(8) Packet_out

(9) Interaction diagram

3. Question: does the switch use TCP or UDP to establish communication with the controller?

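Answer: TCP. In the capture, the OpenFlow channel is a TCP connection between controller port 6633 and switch port 42250.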
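TCP delivers a byte stream rather than discrete datagrams, so the receiver relies on the `length` field of `ofp_header` to find where each OpenFlow message ends. The sketch below shows that framing. It uses a local `socket.socketpair()` in place of the real controller-switch connection, and the helper names `recv_exact` and `read_message` are hypothetical.

```python
import socket
import struct

OFP_HEADER = struct.Struct("!BBHI")  # version, type, length, xid


def recv_exact(sock, n):
    """Read exactly n bytes from a stream socket."""
    buf = b""
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("connection closed mid-message")
        buf += chunk
    return buf


def read_message(sock):
    """Read one OpenFlow message: the 8-byte header, then length - 8 body bytes."""
    version, msg_type, length, xid = OFP_HEADER.unpack(recv_exact(sock, OFP_HEADER.size))
    if length < OFP_HEADER.size:
        raise ValueError("length field smaller than the header")
    body = recv_exact(sock, length - OFP_HEADER.size)
    return version, msg_type, xid, body


a, b = socket.socketpair()
# Two messages written back to back: an OpenFlow 1.0 Hello (type 0)
# and an Echo Request (type 2) carrying a 4-byte payload.
a.sendall(OFP_HEADER.pack(1, 0, 8, 10) + OFP_HEADER.pack(1, 2, 12, 11) + b"ping")
print(read_message(b))  # (1, 0, 10, b'')
print(read_message(b))  # (1, 2, 11, b'ping')
a.close()
b.close()
```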

(II) Advanced Requirements

- Compare the capture results against the OpenFlow source code to learn the data structures that define the main OpenFlow message types.

1. HELLO

- Corresponding OpenFlow source code

```c
struct ofp_header {
    uint8_t version;    /* OFP_VERSION. */
    uint8_t type;       /* One of the OFPT_ constants. */
    uint16_t length;    /* Length including this ofp_header. */
    uint32_t xid;       /* Transaction id associated with this packet.
                           Replies use the same id as was in the request
                           to facilitate pairing. */
};
OFP_ASSERT(sizeof(struct ofp_header) == 8);

/* OFPT_HELLO.  This message has an empty body, but implementations must
 * ignore any data included in the body, to allow for future extensions. */
struct ofp_hello {
    struct ofp_header header;
};
```
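On the wire, `struct ofp_header` is four big-endian fields packed into 8 bytes, which is what `OFP_ASSERT(sizeof(struct ofp_header) == 8)` guarantees. The sketch below uses Python's `struct` module to build an OpenFlow 1.0 Hello as raw bytes: version 0x01, type `OFPT_HELLO` = 0, and an empty body. The function name `build_hello` and the xid value are illustrative.

```python
import struct

# "!" = network (big-endian) byte order with no implicit padding,
# matching the packed layout of struct ofp_header.
OFP_HEADER_FMT = "!BBHI"
OFP_VERSION_1_0 = 0x01
OFPT_HELLO = 0


def build_hello(xid, version=OFP_VERSION_1_0):
    """An empty-body Hello is just an ofp_header whose length is 8."""
    return struct.pack(OFP_HEADER_FMT, version, OFPT_HELLO,
                       struct.calcsize(OFP_HEADER_FMT), xid)


msg = build_hello(xid=0x1234)
print(msg.hex())  # 0100000800001234
```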

2. Features Request

- Same structure as HELLO: a Features Request has an empty body, so the message consists only of `struct ofp_header`.
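A Features Request is header-only, so its encoding differs from a Hello only in the type byte. The type values assumed here come from the OpenFlow 1.0 header rather than the excerpt above: `OFPT_FEATURES_REQUEST` = 5 and `OFPT_FEATURES_REPLY` = 6. The sketch also shows how the reply is paired with its request through `xid` ("Replies use the same id as was in the request"). The function names and the xid value are hypothetical.

```python
import struct

OFP_HEADER_FMT = "!BBHI"
OFPT_FEATURES_REQUEST = 5
OFPT_FEATURES_REPLY = 6


def build_features_request(xid):
    # Header-only message: length equals the 8-byte header size.
    return struct.pack(OFP_HEADER_FMT, 0x01, OFPT_FEATURES_REQUEST, 8, xid)


def is_reply_to(request, reply):
    """A Features Reply answers the request that carries the same xid."""
    _, req_type, _, req_xid = struct.unpack_from(OFP_HEADER_FMT, request)
    _, rep_type, _, rep_xid = struct.unpack_from(OFP_HEADER_FMT, reply)
    return (req_type == OFPT_FEATURES_REQUEST
            and rep_type == OFPT_FEATURES_REPLY
            and req_xid == rep_xid)


req = build_features_request(xid=42)
# Only the header of a (hypothetical) reply is needed for the xid check.
reply_header = struct.pack(OFP_HEADER_FMT, 0x01, OFPT_FEATURES_REPLY, 32, 42)
print(req.hex(), is_reply_to(req, reply_header))  # 010500080000002a True
```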

3. Set_Config

- Corresponding OpenFlow source code

```c
struct ofp_switch_config {
    struct ofp_header header;
    uint16_t flags;             /* OFPC_* flags. */
    uint16_t miss_send_len;     /* Max bytes of new flow that datapath should
                                   send to the controller. */
};
OFP_ASSERT(sizeof(struct ofp_switch_config) == 12);
```
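`struct ofp_switch_config` appends two 16-bit fields to the 8-byte header, giving the 12 bytes asserted above. The round-trip sketch below uses example values that may differ from what this particular capture contains. It assumes `OFPT_SET_CONFIG` = 9, `flags` = 0 (`OFPC_FRAG_NORMAL`) and `miss_send_len` = 128 (`OFP_DEFAULT_MISS_SEND_LEN`), all from the OpenFlow 1.0 header. The function names are illustrative.

```python
import struct

# ofp_header (version, type, length, xid) + flags + miss_send_len
SWITCH_CONFIG_FMT = "!BBHIHH"
OFPT_SET_CONFIG = 9


def build_set_config(xid, flags=0, miss_send_len=128):
    length = struct.calcsize(SWITCH_CONFIG_FMT)  # 12 bytes
    return struct.pack(SWITCH_CONFIG_FMT, 0x01, OFPT_SET_CONFIG,
                       length, xid, flags, miss_send_len)


def parse_set_config(data):
    _, msg_type, length, xid, flags, miss_send_len = struct.unpack(
        SWITCH_CONFIG_FMT, data)
    return {"type": msg_type, "length": length, "xid": xid,
            "flags": flags, "miss_send_len": miss_send_len}


msg = build_set_config(xid=7)
print(len(msg), parse_set_config(msg)["miss_send_len"])  # 12 128
```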

4. Port_Status

- Corresponding OpenFlow source code

```c
struct ofp_port_status {
    struct ofp_header header;
    uint8_t reason;          /* One of OFPPR_*. */
    uint8_t pad[7];          /* Align to 64-bits. */
    struct ofp_phy_port desc;
};
OFP_ASSERT(sizeof(struct ofp_port_status) == 64);
```
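The 64-byte total breaks down as 8 (header) + 1 (`reason`) + 7 (padding) + 48 (`struct ofp_phy_port`, listed under Features Reply). The sketch below decodes `reason` and the first fields of `desc`. The reason codes and type value come from the OpenFlow 1.0 header rather than the excerpt: `OFPPR_ADD` = 0, `OFPPR_DELETE` = 1, `OFPPR_MODIFY` = 2, and `OFPT_PORT_STATUS` = 12. The sample message (port 1 named "s1-eth1" with a made-up MAC) is synthetic, not taken from the capture.

```python
import struct

# header + reason + 7 pad bytes + ofp_phy_port (port_no, hw_addr, name, 6 bitmaps)
PORT_STATUS_FMT = "!BBHIB7xH6s16sIIIIII"
REASONS = {0: "OFPPR_ADD", 1: "OFPPR_DELETE", 2: "OFPPR_MODIFY"}


def parse_port_status(data):
    fields = struct.unpack(PORT_STATUS_FMT, data)
    reason, port_no, hw_addr, name = fields[4:8]
    return {
        "reason": REASONS.get(reason, reason),
        "port_no": port_no,
        "hw_addr": hw_addr.hex(":"),           # Python 3.8+
        "name": name.rstrip(b"\0").decode(),   # name is NUL-padded to 16 bytes
    }


sample = struct.pack(PORT_STATUS_FMT, 0x01, 12, 64, 0, 0,
                     1, bytes.fromhex("0a0000000001"), b"s1-eth1",
                     0, 0, 0, 0, 0, 0)
print(parse_port_status(sample))
```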

5. Features Reply

- Corresponding OpenFlow source code

```c
/* Description of a physical port */
struct ofp_phy_port {
    uint16_t port_no;
    uint8_t hw_addr[OFP_ETH_ALEN];
    char name[OFP_MAX_PORT_NAME_LEN]; /* Null-terminated */

    uint32_t config;        /* Bitmap of OFPPC_* flags. */
    uint32_t state;         /* Bitmap of OFPPS_* flags. */

    /* Bitmaps of OFPPF_* that describe features.  All bits zeroed if
     * unsupported or unavailable. */
    uint32_t curr;          /* Current features. */
    uint32_t advertised;    /* Features being advertised by the port. */
    uint32_t supported;     /* Features supported by the port. */
    uint32_t peer;          /* Features advertised by peer. */
};

/* Switch features. */
struct ofp_switch_features {
    struct ofp_header header;
    uint64_t datapath_id;   /* Datapath unique ID.  The lower 48-bits are for
                               a MAC address, while the upper 16-bits are
                               implementer-defined. */

    uint32_t n_buffers;     /* Max packets buffered at once. */

    uint8_t n_tables;       /* Number of tables supported by datapath. */
    uint8_t pad[3];         /* Align to 64-bits. */

    /* Features. */
    uint32_t capabilities;  /* Bitmap of support "ofp_capabilities". */
    uint32_t actions;       /* Bitmap of supported "ofp_action_type"s. */

    /* Port info.*/
    struct ofp_phy_port ports[0];  /* Port definitions.  The number of ports
                                      is inferred from the length field in
                                      the header. */
};
```
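`ports[0]` is a flexible array, so the port count follows from the header's `length` field. It equals `(length - 32) / 48`, where 32 bytes is the fixed part of `ofp_switch_features` and 48 bytes is one `ofp_phy_port`. The sketch below applies this to a synthetic reply and assumes `OFPT_FEATURES_REPLY` = 6 from OpenFlow 1.0. The datapath ID, buffer count, table count and port names are made-up values.

```python
import struct

FEATURES_FMT = "!BBHIQIB3xII"   # fixed part of ofp_switch_features
PHY_PORT_FMT = "!H6s16sIIIIII"  # one ofp_phy_port
FIXED = struct.calcsize(FEATURES_FMT)   # 32
PORT = struct.calcsize(PHY_PORT_FMT)    # 48


def parse_features_reply(data):
    (_, _, length, _, dpid, n_buffers, n_tables,
     _capabilities, _actions) = struct.unpack_from(FEATURES_FMT, data)
    n_ports = (length - FIXED) // PORT      # inferred from the header length
    ports = []
    for i in range(n_ports):
        port_no, _, name, *_ = struct.unpack_from(PHY_PORT_FMT, data, FIXED + i * PORT)
        ports.append((port_no, name.rstrip(b"\0").decode()))
    return {"datapath_id": dpid, "n_buffers": n_buffers,
            "n_tables": n_tables, "ports": ports}


def fake_port(port_no, name):
    """Build a synthetic ofp_phy_port with a zero MAC and zero bitmaps."""
    return struct.pack(PHY_PORT_FMT, port_no, bytes(6), name, 0, 0, 0, 0, 0, 0)


ports = fake_port(1, b"s1-eth1") + fake_port(2, b"s1-eth2")
header = struct.pack(FEATURES_FMT, 0x01, 6, FIXED + len(ports), 0, 1, 256, 1, 0, 0)
reply = parse_features_reply(header + ports)
print(reply["ports"])  # [(1, 's1-eth1'), (2, 's1-eth2')]
```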

6. Packet_in

- Corresponding OpenFlow source code

```c
/* Why is this packet being sent to the controller? */
enum ofp_packet_in_reason {
    OFPR_NO_MATCH,          /* No matching flow. */
    OFPR_ACTION             /* Action explicitly output to controller. */
};

/* Packet received on port (datapath -> controller). */
struct ofp_packet_in {
    struct ofp_header header;
    uint32_t buffer_id;     /* ID assigned by datapath. */
    uint16_t total_len;     /* Full length of frame. */
    uint16_t in_port;       /* Port on which frame was received. */
    uint8_t reason;         /* Reason packet is being sent (one of OFPR_*) */
    uint8_t pad;
    uint8_t data[0];        /* Ethernet frame, halfway through 32-bit word,
                               so the IP header is 32-bit aligned.  The
                               amount of data is inferred from the length
                               field in the header.  Because of padding,
                               offsetof(struct ofp_packet_in, data) ==
                               sizeof(struct ofp_packet_in) - 2. */
};
```
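The fields before `data` add up to 18 bytes on the wire, and the Ethernet frame begins there. That is the `sizeof(struct ofp_packet_in) - 2` in the comment, because the compiler pads the C struct to a multiple of 4. The sketch below splits a Packet-In into its fields and the embedded frame. It assumes `OFPT_PACKET_IN` = 10 and `buffer_id` 0xffffffff meaning "not buffered", both from OpenFlow 1.0. The sample frame (broadcast destination, made-up source MAC, ARP EtherType, zeroed ARP body) is synthetic.

```python
import struct

# header + buffer_id, total_len, in_port, reason, 1 pad byte (18 bytes)
PACKET_IN_FMT = "!BBHIIHHBx"
PACKET_IN_LEN = struct.calcsize(PACKET_IN_FMT)
REASONS = {0: "OFPR_NO_MATCH", 1: "OFPR_ACTION"}
NO_BUFFER = 0xFFFFFFFF


def parse_packet_in(data):
    (_, _, length, _, buffer_id, total_len,
     in_port, reason) = struct.unpack_from(PACKET_IN_FMT, data)
    frame = data[PACKET_IN_LEN:length]              # embedded Ethernet frame
    dst, src, ethertype = struct.unpack_from("!6s6sH", frame)
    return {
        "buffer_id": None if buffer_id == NO_BUFFER else buffer_id,
        "in_port": in_port,
        "reason": REASONS.get(reason, reason),
        "total_len": total_len,
        "eth_dst": dst.hex(":"),
        "eth_src": src.hex(":"),
        "ethertype": hex(ethertype),
    }


sample_frame = bytes.fromhex("ffffffffffff" "0a0000000001" "0806") + bytes(28)
msg = struct.pack(PACKET_IN_FMT, 0x01, 10, PACKET_IN_LEN + len(sample_frame),
                  0, 256, len(sample_frame), 1, 0) + sample_frame
print(parse_packet_in(msg))
```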

7. Flow_mod

- Corresponding OpenFlow source code

```c
/* Fields to match against flows */
struct ofp_match {
    uint32_t wildcards;           /* Wildcard fields. */
    uint16_t in_port;             /* Input switch port. */
    uint8_t dl_src[OFP_ETH_ALEN]; /* Ethernet source address. */
    uint8_t dl_dst[OFP_ETH_ALEN]; /* Ethernet destination address. */
    uint16_t dl_vlan;             /* Input VLAN id. */
    uint8_t dl_vlan_pcp;          /* Input VLAN priority. */
    uint8_t pad1[1];              /* Align to 64-bits */
    uint16_t dl_type;             /* Ethernet frame type. */
    uint8_t nw_tos;               /* IP ToS (actually DSCP field, 6 bits). */
    uint8_t nw_proto;             /* IP protocol or lower 8 bits of
                                   * ARP opcode. */
    uint8_t pad2[2];              /* Align to 64-bits */
    uint32_t nw_src;              /* IP source address. */
    uint32_t nw_dst;              /* IP destination address. */
    uint16_t tp_src;              /* TCP/UDP source port. */
    uint16_t tp_dst;              /* TCP/UDP destination port. */
};

/* Flow setup and teardown (controller -> datapath). */
struct ofp_flow_mod {
    struct ofp_header header;
    struct ofp_match match;       /* Fields to match */
    uint64_t cookie;              /* Opaque controller-issued identifier. */

    /* Flow actions. */
    uint16_t command;             /* One of OFPFC_*. */
    uint16_t idle_timeout;        /* Idle time before discarding (seconds). */
    uint16_t hard_timeout;        /* Max time before discarding (seconds). */
    uint16_t priority;            /* Priority level of flow entry. */
    uint32_t buffer_id;           /* Buffered packet to apply to (or -1).
                                     Not meaningful for OFPFC_DELETE*. */
    uint16_t out_port;            /* For OFPFC_DELETE* commands, require
                                     matching entries to include this as an
                                     output port.  A value of OFPP_NONE
                                     indicates no restriction. */
    uint16_t flags;               /* One of OFPFF_*. */
    struct ofp_action_header actions[0]; /* The action length is inferred
                                            from the length field in the
                                            header. */
};
```
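Writing `ofp_match` and `ofp_flow_mod` as `struct` format strings makes the explicit `pad` fields visible. It also lets the layout be checked: 40 bytes for the match and 72 for a Flow-Mod without actions. The sketch below encodes a Flow-Mod that matches only `in_port` and forwards to one output port. Several values come from the OpenFlow 1.0 header, not the excerpt:

- `OFPT_FLOW_MOD` = 14 and `OFPFC_ADD` = 0
- `OFPFW_ALL` = `(1 << 22) - 1` and `OFPFW_IN_PORT` = 1
- `OFPP_NONE` = 0xffff
- the 8-byte `ofp_action_output` (type, len, port, max_len) with `OFPAT_OUTPUT` = 0

The ports, priority and timeouts are example values.

```python
import struct

OFP_HEADER_FMT = "!BBHI"
MATCH_FMT = "!IH6s6sHBxHBBxxIIHH"   # struct ofp_match, pad1/pad2 as "x"
FLOW_MOD_BODY_FMT = "!QHHHHIHH"     # cookie .. flags
ACTION_OUTPUT_FMT = "!HHHH"         # ofp_action_output

OFPT_FLOW_MOD = 14
OFPFC_ADD = 0
OFPAT_OUTPUT = 0
OFPFW_ALL = (1 << 22) - 1
OFPFW_IN_PORT = 1 << 0
NO_BUFFER = 0xFFFFFFFF
OFPP_NONE = 0xFFFF


def build_flow_mod(xid, in_port, out_port, priority=0x8000, idle_timeout=0):
    # Wildcard every field except in_port, so only in_port is matched.
    wildcards = OFPFW_ALL & ~OFPFW_IN_PORT
    match = struct.pack(MATCH_FMT, wildcards, in_port, bytes(6), bytes(6),
                        0, 0, 0, 0, 0, 0, 0, 0, 0)
    body = struct.pack(FLOW_MOD_BODY_FMT, 0, OFPFC_ADD, idle_timeout, 0,
                       priority, NO_BUFFER, OFPP_NONE, 0)
    action = struct.pack(ACTION_OUTPUT_FMT, OFPAT_OUTPUT, 8, out_port, 0)
    length = struct.calcsize(OFP_HEADER_FMT) + len(match) + len(body) + len(action)
    header = struct.pack(OFP_HEADER_FMT, 0x01, OFPT_FLOW_MOD, length, xid)
    return header + match + body + action


msg = build_flow_mod(xid=1, in_port=1, out_port=2)
print(struct.calcsize(MATCH_FMT), len(msg))  # 40 80
```

The 80 bytes are the 72-byte fixed Flow-Mod plus one 8-byte output action. This is why `actions[0]` needs the header's `length` field.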

8. Packet_out

- Corresponding OpenFlow source code

```c
/* Action header that is common to all actions.  The length includes the
 * header and any padding used to make the action 64-bit aligned.
 * NB: The length of an action *must* always be a multiple of eight. */
struct ofp_action_header {
    uint16_t type;                /* One of OFPAT_*. */
    uint16_t len;                 /* Length of action, including this
                                     header.  This is the length of action,
                                     including any padding to make it
                                     64-bit aligned. */
    uint8_t pad[4];
};
OFP_ASSERT(sizeof(struct ofp_action_header) == 8);

/* Send packet (controller -> datapath). */
struct ofp_packet_out {
    struct ofp_header header;
    uint32_t buffer_id;           /* ID assigned by datapath (-1 if none). */
    uint16_t in_port;             /* Packet's input port (OFPP_NONE if none). */
    uint16_t actions_len;         /* Size of action array in bytes. */
    struct ofp_action_header actions[0]; /* Actions. */
    /* uint8_t data[0]; */        /* Packet data.  The length is inferred
                                     from the length field in the header.
                                     (Only meaningful if buffer_id == -1.) */
};
```
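When the switch has not buffered the packet (`buffer_id` = -1), the frame itself follows the action list. `actions_len` tells the switch where the actions stop and the data begins. The sketch below floods a frame out of every port except the input port. Several definitions come from the OpenFlow 1.0 header rather than the excerpt: the `ofp_action_output` layout (type, len, port, max_len), `OFPAT_OUTPUT` = 0, `OFPP_FLOOD` = 0xfffb and `OFPT_PACKET_OUT` = 13. The function names and the all-zero 42-byte frame are placeholders.

```python
import struct

PACKET_OUT_FMT = "!BBHIIHH"   # header + buffer_id, in_port, actions_len (16 bytes)
ACTION_OUTPUT_FMT = "!HHHH"   # type, len, port, max_len (8 bytes)
OFPT_PACKET_OUT = 13
OFPAT_OUTPUT = 0
OFPP_FLOOD = 0xFFFB
NO_BUFFER = 0xFFFFFFFF


def build_packet_out(xid, in_port, frame):
    actions = struct.pack(ACTION_OUTPUT_FMT, OFPAT_OUTPUT,
                          struct.calcsize(ACTION_OUTPUT_FMT), OFPP_FLOOD, 0)
    length = struct.calcsize(PACKET_OUT_FMT) + len(actions) + len(frame)
    header = struct.pack(PACKET_OUT_FMT, 0x01, OFPT_PACKET_OUT, length, xid,
                         NO_BUFFER, in_port, len(actions))
    return header + actions + frame


def split_packet_out(msg):
    """Recover the action bytes and the frame using actions_len."""
    *_, actions_len = struct.unpack_from(PACKET_OUT_FMT, msg)
    start = struct.calcsize(PACKET_OUT_FMT)
    return msg[start:start + actions_len], msg[start + actions_len:]


frame = bytes(42)  # placeholder frame contents
msg = build_packet_out(xid=3, in_port=1, frame=frame)
actions, data = split_packet_out(msg)
print(len(msg), len(actions), data == frame)  # 66 8 True
```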

(III) Personal Summary

- Difficulty: moderate

- Difficulties encountered during the lab and how they were solved

(1)

- No OpenFlow packets could be captured at all;

- Only echo_request and echo_reply packets could be captured.

Cause analysis

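1. Mininet and the controller run in the same virtual machine, so no OpenFlow packets actually leave through the network card. The switches reach the local controller over the loopback interface, so the capture has to be taken on `lo` (or `any`).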

2. The controller was started, and Mininet had already created the topology and run ping, before Wireshark was launched.

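In this case, Packet-In and other messages were in fact exchanged, but Wireshark was not running yet, so they were missed. After the handshake and the first ping, the channel mostly carries the periodic Echo Request/Reply keepalive messages, which explains why only those were captured.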

Solution

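Start capturing before building the topology: launch Wireshark first, then create the topology, start the controller, and run ping.

This ensures that Wireshark captures the OpenFlow messages from the very beginning of the ping.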

(2)

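The switch's Hello shows that it supports OpenFlow 1.5, which is encoded on the wire as version number 0x06. Wireshark names its OpenFlow dissectors after this wire number, so entering the display filter `openflow_v6` shows this HELLO. The controller's Hello and the messages after negotiation are OpenFlow 1.0 and match `openflow_v1`.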
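The mapping from an OpenFlow release to a Wireshark display filter can be written as a small lookup. This sketch assumes that Wireshark's dissector names follow the wire version byte (`openflow_v1`, `openflow_v4`, `openflow_v5`, `openflow_v6`). It also assumes that releases 1.1 and 1.2 have no dedicated dissector. Check both assumptions against the Wireshark version installed in the VM. The function name `display_filter` is hypothetical.

```python
# Wire version byte (ofp_header.version) -> OpenFlow release.
WIRE_TO_RELEASE = {0x01: "1.0", 0x02: "1.1", 0x03: "1.2",
                   0x04: "1.3", 0x05: "1.4", 0x06: "1.5"}
# Wire versions assumed to have a dedicated Wireshark dissector.
DISSECTED = {0x01, 0x04, 0x05, 0x06}


def display_filter(release):
    """Return the Wireshark display filter for an OpenFlow release string."""
    for wire, name in WIRE_TO_RELEASE.items():
        if name == release:
            if wire not in DISSECTED:
                raise ValueError(f"no dedicated dissector for OpenFlow {release}")
            return f"openflow_v{wire}"
    raise ValueError(f"unknown OpenFlow release {release}")


print(display_filter("1.5"), display_filter("1.0"))  # openflow_v6 openflow_v1
```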

3. Personal reflections

- I learned to use Wireshark to capture the OpenFlow protocol message exchange;

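- I learned to use a packet analysis tool and to compare the capture results with the openflow.h header file in openflow/include/openflow. This helped me understand how OpenFlow packets are exchanged and the mechanism behind it.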
