Binder Native Layer (Part 2)

The Binder Framework and the Native Layer

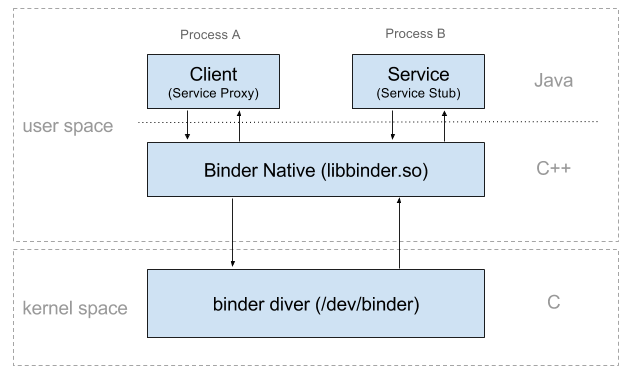

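The Binder mechanism lets a process call a remote object as if it were a local one, which is what allows different processes to communicate. Binder uses a client/server architecture: the client talks to the server through a proxy of the server, and the call passes through the Binder driver.

The secret behind Binder's inter-process communication is the Binder driver in the kernel.

The JNI code lives in the frameworks/base/core/jni directory, mainly in android_util_Binder.cpp and its header android_util_Binder.h.

The Binder JNI code wraps the interface through which the Binder Java layer reaches the Binder native layer. It is compiled into the system library libandroid_runtime.so.

The Binder native layer code is in the frameworks/native/libs/binder directory. When Android is built, this directory produces libbinder.so, which JNI calls into. libbinder wraps every operation on the binder driver and is the bridge between upper-layer applications and the driver. The headers are in the frameworks/native/include/binder directory.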

The Entry Point of Binder Native

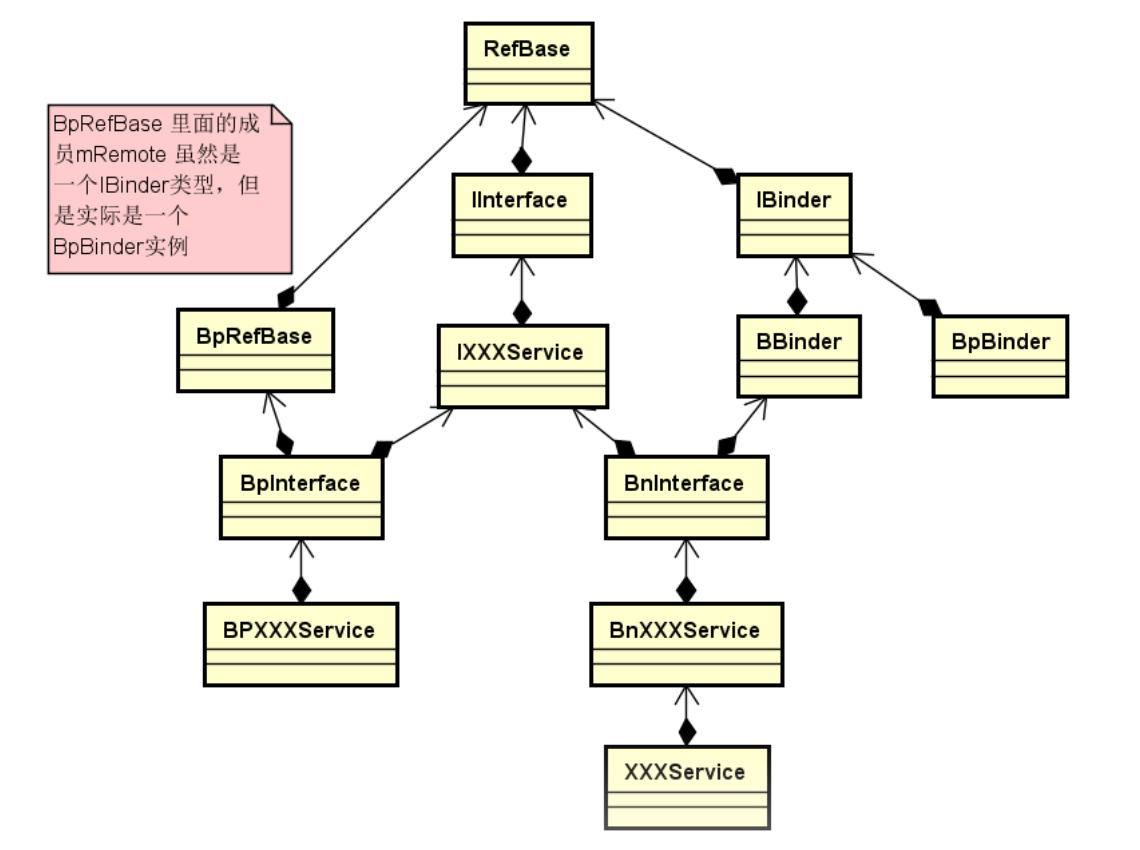

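IInterface.cpp is the entry point of the Binder native layer. It corresponds to android.os.IInterface in the Java layer and provides the implementation of asBinder(), which returns an IBinder object.

The header declares two class templates, BnInterface (Binder Native Interface) and BpInterface (Binder Proxy Interface), which correspond to Stub and Proxy in the Java layer.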

    sp<IBinder> IInterface::asBinder(const IInterface* iface)
    {
        if (iface == NULL) return NULL;
        return const_cast<IInterface*>(iface)->onAsBinder();
    }

    template<typename INTERFACE>
    class BnInterface : public INTERFACE, public BBinder
    {
    public:
        virtual sp<IInterface> queryLocalInterface(const String16& _descriptor);
        virtual const String16& getInterfaceDescriptor() const;

    protected:
        virtual IBinder* onAsBinder();
    };

    // ----------------------------------------------------------------------

    template<typename INTERFACE>
    class BpInterface : public INTERFACE, public BpRefBase
    {
    public:
        BpInterface(const sp<IBinder>& remote);

    protected:
        virtual IBinder* onAsBinder();
    };

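BnInterface is the template that implements the Stub role: it extends BBinder, and the onTransact() method is overridden to parse and execute Binder commands. BpInterface is the template that implements the Proxy role; BpRefBase holds an mRemote member that points to a BpBinder object.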
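
To make this division of labour concrete, here is a minimal, self-contained sketch of the same proxy/stub split in plain C++. It does not use libbinder: `FakeParcel`, `FakeBinder`, `ICalculator`, `BnCalculator`, `BpCalculator` and `CalculatorService` are all hypothetical names invented for illustration, and the "remote" object lives in the same process, so no driver is involved.

```cpp
#include <cassert>
#include <cstdint>
#include <memory>
#include <utility>
#include <vector>

// Hypothetical stand-in for Parcel: a flat list of 32-bit values.
using FakeParcel = std::vector<int32_t>;

// Hypothetical stand-in for IBinder: anything that can receive a transaction.
struct FakeBinder {
    virtual ~FakeBinder() = default;
    virtual int transact(uint32_t code, const FakeParcel& data, FakeParcel* reply) = 0;
};

// The business interface shared by the proxy and the stub.
struct ICalculator {
    enum : uint32_t { TRANSACTION_add = 1 };
    virtual ~ICalculator() = default;
    virtual int32_t add(int32_t a, int32_t b) = 0;
};

// Stub side (the role BnInterface plays): decode the command, call the real method.
class BnCalculator : public ICalculator, public FakeBinder {
public:
    int transact(uint32_t code, const FakeParcel& data, FakeParcel* reply) override {
        if (code == TRANSACTION_add && data.size() == 2 && reply != nullptr) {
            reply->push_back(add(data[0], data[1]));
            return 0;
        }
        return -1;  // unknown command or malformed request
    }
};

// Proxy side (the role BpInterface plays): encode the call and forward it to mRemote.
class BpCalculator : public ICalculator {
public:
    explicit BpCalculator(std::shared_ptr<FakeBinder> remote) : mRemote(std::move(remote)) {}

    int32_t add(int32_t a, int32_t b) override {
        FakeParcel data{a, b};
        FakeParcel reply;
        if (mRemote->transact(TRANSACTION_add, data, &reply) != 0 || reply.empty()) {
            return 0;  // sketch only: a real proxy would report the error
        }
        return reply[0];
    }

private:
    std::shared_ptr<FakeBinder> mRemote;
};

// The concrete service only implements the business method.
class CalculatorService : public BnCalculator {
public:
    int32_t add(int32_t a, int32_t b) override { return a + b; }
};
```

The caller only ever sees `ICalculator`: constructing a `BpCalculator` around a `CalculatorService` and calling `add(2, 3)` goes through `transact()` and comes back with 5, which is the same shape as the Stub/Proxy pair in the Java layer.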

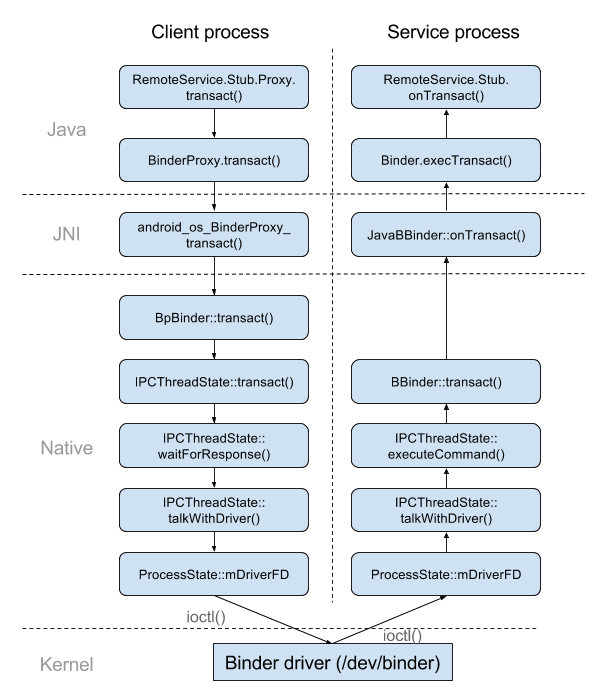

The Complete Call Path Through the Binder Native Layer

1. In the Java layer, IRemoteService.Stub.Proxy calls transact() on android.os.IBinder (implemented in android.os.Binder.BinderProxy) to send the Stub.TRANSACTION_addUser command.

2. BinderProxy.transact() enters the native layer.

3. JNI dispatches to the android_os_BinderProxy_transact() function.

4. That function calls IBinder->transact().

    static jboolean android_os_BinderProxy_transact(JNIEnv* env, jobject obj,
            jint code, jobject dataObj, jobject replyObj, jint flags) // throws RemoteException
    {
        IBinder* target = (IBinder*)
            env->GetLongField(obj, gBinderProxyOffsets.mObject);
        status_t err = target->transact(code, *data, reply, flags);
    }

gBinderProxyOffsets.mObject is set in the javaObjectForIBinder function, which runs when the Java layer calls BinderInternal.getContextObject():

    static jobject android_os_BinderInternal_getContextObject(JNIEnv* env, jobject clazz)
    {
        sp<IBinder> b = ProcessState::self()->getContextObject(NULL);
        return javaObjectForIBinder(env, b);
    }

    jobject javaObjectForIBinder(JNIEnv* env, const sp<IBinder>& val)
    {
        ...
        LOGDEATH("objectForBinder %p: created new proxy %p !\n", val.get(), object);
        // The proxy holds a reference to the native object.
        env->SetLongField(object, gBinderProxyOffsets.mObject, (jlong)val.get());
        val->incStrong((void*)javaObjectForIBinder);
        ...
    }

The call goes through ProcessState::getContextObject() and ProcessState::getStrongProxyForHandle():

    sp<IBinder> ProcessState::getContextObject(const sp<IBinder>& /*caller*/)
    {
        return getStrongProxyForHandle(0);
    }

    sp<IBinder> ProcessState::getStrongProxyForHandle(int32_t handle)
    {
        sp<IBinder> result;
        ...
        b = new BpBinder(handle);
        result = b;
        ...
        return result;
    }

So the function that android_os_BinderProxy_transact() actually calls is BpBinder::transact().

5. BpBinder::transact() in turn calls IPCThreadState::self()->transact().

    status_t IPCThreadState::transact(int32_t handle,
                                      uint32_t code, const Parcel& data,
                                      Parcel* reply, uint32_t flags)
    {
        status_t err = data.errorCheck();

        flags |= TF_ACCEPT_FDS;

        if (err == NO_ERROR) {
            LOG_ONEWAY(">>>> SEND from pid %d uid %d %s", getpid(), getuid(),
                (flags & TF_ONE_WAY) == 0 ? "READ REPLY" : "ONE WAY");
            err = writeTransactionData(BC_TRANSACTION, flags, handle, code, data, NULL);
        }

        if ((flags & TF_ONE_WAY) == 0) {
            if (reply) {
                err = waitForResponse(reply);
            } else {
                Parcel fakeReply;
                err = waitForResponse(&fakeReply);
            }
        } else {
            err = waitForResponse(NULL, NULL);
        }

        return err;
    }

    status_t IPCThreadState::writeTransactionData(int32_t cmd, uint32_t binderFlags,
        int32_t handle, uint32_t code, const Parcel& data, status_t* statusBuffer)
    {
        binder_transaction_data tr;

        tr.target.ptr = 0; /* Don't pass uninitialized stack data to a remote process */
        tr.target.handle = handle;
        tr.code = code;
        ...
        mOut.writeInt32(cmd);
        mOut.write(&tr, sizeof(tr));

        return NO_ERROR;
    }

The function body shows that the data is handed on once more: writeTransactionData() writes it into mOut, a Parcel object declared in IPCThreadState.h. After that, waitForResponse() is called.
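
The "command word followed by a fixed-size struct" layout that writeTransactionData() appends to mOut can be sketched without any Android headers. Everything below is an assumption made for illustration: `FakeTransactionData` is a simplified stand-in for binder_transaction_data, `FAKE_BC_TRANSACTION` is an arbitrary value rather than the kernel's BC_TRANSACTION, and a `std::vector<uint8_t>` plays the role of the Parcel.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical, simplified stand-in for binder_transaction_data.
struct FakeTransactionData {
    uint32_t handle;
    uint32_t code;
    uint32_t flags;
    uint32_t dataSize;
};

// Arbitrary illustrative value; the real BC_TRANSACTION comes from the kernel header.
constexpr uint32_t FAKE_BC_TRANSACTION = 0x1001;

// Append raw bytes to the output buffer, similar in spirit to Parcel::write().
inline void appendBytes(std::vector<uint8_t>& out, const void* src, size_t len) {
    const uint8_t* p = static_cast<const uint8_t*>(src);
    out.insert(out.end(), p, p + len);
}

// Queue one transaction: first the command word, then the fixed-size header.
void writeTransaction(std::vector<uint8_t>& out, uint32_t handle, uint32_t code,
                      uint32_t flags, uint32_t dataSize) {
    FakeTransactionData tr{};  // zero-initialised so no stack garbage is sent
    tr.handle = handle;
    tr.code = code;
    tr.flags = flags;
    tr.dataSize = dataSize;

    const uint32_t cmd = FAKE_BC_TRANSACTION;
    appendBytes(out, &cmd, sizeof(cmd));
    appendBytes(out, &tr, sizeof(tr));
}

// Reader counterpart: what the receiving side would do with the same bytes.
bool readTransaction(const std::vector<uint8_t>& in, size_t& pos,
                     uint32_t& cmd, FakeTransactionData& tr) {
    if (pos > in.size() || in.size() - pos < sizeof(cmd) + sizeof(tr)) {
        return false;  // not enough bytes left for a full record
    }
    std::memcpy(&cmd, in.data() + pos, sizeof(cmd));
    pos += sizeof(cmd);
    std::memcpy(&tr, in.data() + pos, sizeof(tr));
    pos += sizeof(tr);
    return true;
}
```

Note that nothing is sent at this point. As in the real code, the record only sits in the output buffer until the next exchange with the driver.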

6. IPCThreadState::waitForResponse() calls talkWithDriver() repeatedly inside a while loop and checks whether any data has come back.

    status_t IPCThreadState::waitForResponse(Parcel *reply, status_t *acquireResult)
    {
        uint32_t cmd;
        int32_t err;

        while (1) {
            if ((err=talkWithDriver()) < NO_ERROR) break;
            ...
            cmd = (uint32_t)mIn.readInt32();

            switch (cmd) {
            case BR_TRANSACTION_COMPLETE:
                ...
            case BR_REPLY:
                {
                    binder_transaction_data tr;
                    err = mIn.read(&tr, sizeof(tr));
                    ALOG_ASSERT(err == NO_ERROR, "Not enough command data for brREPLY");
                    if (err != NO_ERROR) goto finish;

                    if (reply) {
                        if ((tr.flags & TF_STATUS_CODE) == 0) {
                            reply->ipcSetDataReference(
                                reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer),
                                tr.data_size,
                                reinterpret_cast<const binder_size_t*>(tr.data.ptr.offsets),
                                tr.offsets_size/sizeof(binder_size_t),
                                freeBuffer, this);
                        } else {
                            err = *reinterpret_cast<const status_t*>(tr.data.ptr.buffer);
                            freeBuffer(NULL,
                                reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer),
                                tr.data_size,
                                reinterpret_cast<const binder_size_t*>(tr.data.ptr.offsets),
                                tr.offsets_size/sizeof(binder_size_t), this);
                        }
                    } else {
                        freeBuffer(NULL,
                            reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer),
                            tr.data_size,
                            reinterpret_cast<const binder_size_t*>(tr.data.ptr.offsets),
                            tr.offsets_size/sizeof(binder_size_t), this);
                        continue;
                    }
                }
                goto finish;

            default:
                err = executeCommand(cmd);
                if (err != NO_ERROR) goto finish;
                break;
            }
        }
        ...
    }

7. IPCThreadState::talkWithDriver() is where the interaction with the binder driver really happens. The call ioctl(mProcess->mDriverFD, BINDER_WRITE_READ, &bwr) uses the ioctl system call to send the BINDER_WRITE_READ command to the binder device file /dev/binder.

    status_t IPCThreadState::talkWithDriver(bool doReceive)
    {
        if (mProcess->mDriverFD <= 0) {
            return -EBADF;
        }

        binder_write_read bwr;

        // Is the read buffer empty?
        const bool needRead = mIn.dataPosition() >= mIn.dataSize();

        // We don't want to write anything if we are still reading
        // from data left in the input buffer and the caller
        // has requested to read the next data.
        const size_t outAvail = (!doReceive || needRead) ? mOut.dataSize() : 0;

        bwr.write_size = outAvail;
        bwr.write_buffer = (uintptr_t)mOut.data();

        // This is what we'll read.
        if (doReceive && needRead) {
            bwr.read_size = mIn.dataCapacity();
            bwr.read_buffer = (uintptr_t)mIn.data();
        } else {
            bwr.read_size = 0;
            bwr.read_buffer = 0;
        }

        // Return immediately if there is nothing to do.
        if ((bwr.write_size == 0) && (bwr.read_size == 0)) return NO_ERROR;

        bwr.write_consumed = 0;
        bwr.read_consumed = 0;
        status_t err;
        do {
    #if defined(HAVE_ANDROID_OS)
            // Use the ioctl system call to send BINDER_WRITE_READ to /dev/binder
            if (ioctl(mProcess->mDriverFD, BINDER_WRITE_READ, &bwr) >= 0)
                err = NO_ERROR;
            else
                err = -errno;
    #else
            err = INVALID_OPERATION;
    #endif
            if (mProcess->mDriverFD <= 0) {
                err = -EBADF;
            }
        } while (err == -EINTR);  // retry if the call was interrupted

        if (err >= NO_ERROR) {
            if (bwr.write_consumed > 0) {
                if (bwr.write_consumed < mOut.dataSize())
                    mOut.remove(0, bwr.write_consumed);
                else
                    mOut.setDataSize(0);
            }
            if (bwr.read_consumed > 0) {
                mIn.setDataSize(bwr.read_consumed);
                mIn.setDataPosition(0);
            }
            return NO_ERROR;
        }

        return err;
    }

Once IPCThreadState::talkWithDriver() has run, the data has been sent to the Binder driver.
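
The bookkeeping in talkWithDriver() (offer the pending output, offer read space only when the input is exhausted, then trim both buffers by the consumed counts) is easier to follow in isolation. The sketch below is a rough model only, under stated assumptions: `FakeWriteRead` imitates binder_write_read, `fakeIoctl` is a made-up driver that consumes everything written and echoes it back, and `FakeThreadState` with its `out`, `in` and `inPos` members stands in for mOut, mIn and the read position. None of these names exist in libbinder.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <utility>
#include <vector>

// Hypothetical imitation of binder_write_read.
struct FakeWriteRead {
    size_t write_size;
    size_t write_consumed;
    const uint8_t* write_buffer;
    size_t read_size;
    size_t read_consumed;
    uint8_t* read_buffer;
};

// Made-up driver: consumes all written bytes and echoes them into the read buffer.
int fakeIoctl(FakeWriteRead& bwr) {
    bwr.write_consumed = bwr.write_size;
    const size_t n = std::min(bwr.write_size, bwr.read_size);
    if (n > 0) std::memcpy(bwr.read_buffer, bwr.write_buffer, n);
    bwr.read_consumed = n;
    return 0;
}

constexpr size_t kReadCapacity = 256;  // arbitrary size for the sketch

struct FakeThreadState {
    std::vector<uint8_t> out;  // plays the role of mOut
    std::vector<uint8_t> in;   // plays the role of mIn
    size_t inPos = 0;          // read position inside 'in'

    int talkWithDriver(bool doReceive = true) {
        // Only read again once everything already received has been parsed.
        const bool needRead = inPos >= in.size();
        // Do not write while the caller still has unread input to process.
        const size_t outAvail = (!doReceive || needRead) ? out.size() : 0;

        FakeWriteRead bwr{};
        bwr.write_size = outAvail;
        bwr.write_buffer = out.data();

        std::vector<uint8_t> readBuf;
        if (doReceive && needRead) {
            readBuf.resize(kReadCapacity);
            bwr.read_size = readBuf.size();
            bwr.read_buffer = readBuf.data();
        }

        // Nothing to write and nothing to read: return immediately.
        if (bwr.write_size == 0 && bwr.read_size == 0) return 0;

        if (fakeIoctl(bwr) < 0) return -1;

        // Drop the bytes the driver accepted from the output buffer.
        if (bwr.write_consumed > 0) {
            out.erase(out.begin(),
                      out.begin() + static_cast<std::ptrdiff_t>(bwr.write_consumed));
        }
        // Expose exactly the bytes the driver produced and rewind the position.
        if (bwr.read_consumed > 0) {
            readBuf.resize(bwr.read_consumed);
            in = std::move(readBuf);
            inPos = 0;
        }
        return 0;
    }
};
```

One consequence worth noticing: while unread input remains and the caller asked to receive, a call returns without writing anything, so queued commands wait until the input has been drained.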

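Following IPCThreadState::waitForResponse() further, step 6 shows that IPCThreadState keeps looping to read what the Binder driver returns. Any returned command that the loop does not handle itself is passed to executeCommand(cmd).

8. IPCThreadState::executeCommand() handles the commands returned by the Binder driver.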
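
The control flow of that loop can be reduced to a small state machine. The sketch below is a simplification under stated assumptions: the `FAKE_BR_*` values are invented, the incoming stream is a `std::deque` of 32-bit words instead of a Parcel filled by the driver, a reply carries exactly one payload word, and "executing" any other command just increments a counter.

```cpp
#include <cassert>
#include <cstdint>
#include <deque>

// Invented command values, for illustration only.
enum FakeReturnCmd : uint32_t {
    FAKE_BR_TRANSACTION_COMPLETE = 1,
    FAKE_BR_REPLY = 2,
    FAKE_BR_NOOP = 3
};

struct LoopResult {
    bool gotReply;      // true once a reply record was read
    int32_t replyValue; // the single payload word of the reply
    int otherCommands;  // how many commands fell through to the default branch
};

// Read commands until a reply arrives or the stream runs dry.
LoopResult waitForReply(std::deque<uint32_t> incoming) {
    LoopResult r{false, 0, 0};
    while (!incoming.empty()) {
        const uint32_t cmd = incoming.front();
        incoming.pop_front();

        switch (cmd) {
        case FAKE_BR_TRANSACTION_COMPLETE:
            // The request was accepted; keep waiting for the reply.
            break;
        case FAKE_BR_REPLY:
            if (incoming.empty()) return r;  // truncated record, give up
            r.replyValue = static_cast<int32_t>(incoming.front());
            incoming.pop_front();
            r.gotReply = true;
            return r;  // corresponds to "goto finish"
        default:
            // Corresponds to executeCommand(cmd) in the real loop.
            ++r.otherCommands;
            break;
        }
    }
    return r;
}
```

Anything after the reply stays unread in this sketch, which mirrors how the real loop stops as soon as the reply for the current call has been consumed.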

    status_t IPCThreadState::executeCommand(int32_t cmd)
    {
        BBinder* obj;
        RefBase::weakref_type* refs;
        status_t result = NO_ERROR;

        switch ((uint32_t)cmd) {
        ...
        case BR_TRANSACTION:
            {
                binder_transaction_data tr;
                result = mIn.read(&tr, sizeof(tr));
                ...
                Parcel buffer;
                buffer.ipcSetDataReference(
                    reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer),
                    tr.data_size,
                    reinterpret_cast<const binder_size_t*>(tr.data.ptr.offsets),
                    tr.offsets_size/sizeof(binder_size_t), freeBuffer, this);
                ...
                Parcel reply;
                status_t error;
                if (tr.target.ptr) {
                    sp<BBinder> b((BBinder*)tr.cookie);
                    error = b->transact(tr.code, buffer, &reply, tr.flags);
                } else {
                    error = the_context_object->transact(tr.code, buffer, &reply, tr.flags);
                }
                ...
            }
            break;
        ...
    }

9. As the code shows, it calls BBinder::transact(), which hands the data up to the layer above.

    status_t BBinder::transact(
        uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags)
    {
        data.setDataPosition(0);

        status_t err = NO_ERROR;
        switch (code) {
            case PING_TRANSACTION:
                reply->writeInt32(pingBinder());
                break;
            default:
                err = onTransact(code, data, reply, flags);
                break;
        }

        if (reply != NULL) {
            reply->setDataPosition(0);
        }

        return err;
    }
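
The structure of BBinder::transact() is a template method: the base class answers the built-in ping itself and leaves every other code to a virtual onTransact(). Here is a stand-alone sketch of that structure. `FakeBBinder`, `EchoService` and `FAKE_PING_TRANSACTION` are hypothetical names with an arbitrary ping value, and unlike the excerpt above the sketch checks `reply` for null before writing to it, which is a choice made here rather than something taken from the source.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

class FakeBBinder {
public:
    // Arbitrary illustrative value, not the real PING_TRANSACTION constant.
    enum : uint32_t { FAKE_PING_TRANSACTION = 0xFFFF };

    virtual ~FakeBBinder() = default;

    // Non-virtual entry point: handles built-in codes, delegates the rest.
    int transact(uint32_t code, const std::vector<int32_t>& data,
                 std::vector<int32_t>* reply) {
        switch (code) {
        case FAKE_PING_TRANSACTION:
            if (reply != nullptr) reply->push_back(0);  // 0 means "alive"
            return 0;
        default:
            return onTransact(code, data, reply);
        }
    }

protected:
    // Default behaviour for codes nobody recognises.
    virtual int onTransact(uint32_t /*code*/, const std::vector<int32_t>& /*data*/,
                           std::vector<int32_t>* /*reply*/) {
        return -1;
    }
};

// A service overrides onTransact() and falls back to the base for unknown codes,
// the same shape as "return super.onTransact(...)" in a generated Java Stub.
class EchoService : public FakeBBinder {
protected:
    int onTransact(uint32_t code, const std::vector<int32_t>& data,
                   std::vector<int32_t>* reply) override {
        if (code != 1) return FakeBBinder::onTransact(code, data, reply);
        if (reply != nullptr) *reply = data;  // echo the request back
        return 0;
    }
};
```

Keeping `transact()` non-virtual in the sketch means a subclass cannot accidentally bypass the built-in handling; it can only add commands through `onTransact()`.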

10. The b (a BBinder) in b->transact(tr.code, buffer, &reply, tr.flags) is an instance of JavaBBinder, so JavaBBinder::onTransact() is the function that gets called.

    // frameworks/base/core/jni/android_util_Binder.cpp
    virtual status_t onTransact(
        uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags = 0)
    {
        JNIEnv* env = javavm_to_jnienv(mVM);
        ...
        jboolean res = env->CallBooleanMethod(mObject, gBinderOffsets.mExecTransact,
            code, reinterpret_cast<jlong>(&data), reinterpret_cast<jlong>(reply), flags);
    }

    static int int_register_android_os_Binder(JNIEnv* env)
    {
        ...
        gBinderOffsets.mExecTransact = GetMethodIDOrDie(env, clazz, "execTransact", "(IJJI)Z");
        ...
    }

11. So, through gBinderOffsets.mExecTransact, JNI ends up executing the execTransact() method of android.os.Binder.

execTransact() is the entry point for the callback from JNI.

    // Entry point from android_util_Binder.cpp's onTransact
    private boolean execTransact(int code, long dataObj, long replyObj, int flags) {
        Parcel data = Parcel.obtain(dataObj);
        Parcel reply = Parcel.obtain(replyObj);
        ...
        try {
            res = onTransact(code, data, reply, flags);
        }
        ...
    }

12. On the server side, IRemoteService.Stub overrides onTransact(), so the data finally arrives back at our server, which executes the addUser() method that the server implements.

    public static abstract class Stub extends android.os.Binder
            implements org.xdty.remoteservice.IRemoteService {
        ...
        @Override
        public boolean onTransact(int code, android.os.Parcel data,
                android.os.Parcel reply, int flags) throws android.os.RemoteException {
            switch (code) {
                case INTERFACE_TRANSACTION: {
                    reply.writeString(DESCRIPTOR);
                    return true;
                }
                case TRANSACTION_basicTypes: {
                    ...
                    return true;
                }
                case TRANSACTION_addUser: {
                    data.enforceInterface(DESCRIPTOR);
                    org.xdty.remoteservice.User _arg0;
                    if ((0 != data.readInt())) {
                        _arg0 = org.xdty.remoteservice.User.CREATOR.createFromParcel(data);
                    } else {
                        _arg0 = null;
                    }
                    this.addUser(_arg0);
                    reply.writeNoException();
                    return true;
                }
            }
            return super.onTransact(code, data, reply, flags);
        }
    }

Opening, Reading and Writing the Binder Device File

We saw that the JNI path calls ProcessState::getContextObject(). When ProcessState is initialized, it opens the binder device:

    // ProcessState.cpp
    ProcessState::ProcessState()
        : mDriverFD(open_driver())
        ...
    {
        ...
    }

The open_driver() function looks like this:

    // ProcessState.cpp
    static int open_driver()
    {
        // Open the device file
        int fd = open("/dev/binder", O_RDWR);
        if (fd >= 0) {
            fcntl(fd, F_SETFD, FD_CLOEXEC);
            int vers = 0;
            // Query the driver version
            status_t result = ioctl(fd, BINDER_VERSION, &vers);
            if (result == -1) {
                ALOGE("Binder ioctl to obtain version failed: %s", strerror(errno));
                close(fd);
                fd = -1;
            }
            // Check that the driver version matches the user-space protocol version
            if (result != 0 || vers != BINDER_CURRENT_PROTOCOL_VERSION) {
                ALOGE("Binder driver protocol does not match user space protocol!");
                close(fd);
                fd = -1;
            }
            // Allow at most 15 binder threads
            size_t maxThreads = DEFAULT_MAX_BINDER_THREADS;
            result = ioctl(fd, BINDER_SET_MAX_THREADS, &maxThreads);
            if (result == -1) {
                ALOGE("Binder ioctl to set max threads failed: %s", strerror(errno));
            }
        } else {
            ALOGW("Opening '/dev/binder' failed: %s\n", strerror(errno));
        }
        return fd;
    }
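
In the excerpt above, a failed version ioctl reaches close(fd) twice (the second time with fd already set to -1) and the function still goes on to the BINDER_SET_MAX_THREADS call. A possible way to structure the same "open, mark close-on-exec, verify version" sequence with a single cleanup path is sketched below. It is an illustration rather than the AOSP code: `openDeviceChecked` and its `probeVersion` callback are hypothetical, with the callback standing in for `ioctl(fd, BINDER_VERSION, &vers)` so the sketch can run on any POSIX system without a binder device.

```cpp
#include <cassert>
#include <fcntl.h>
#include <functional>
#include <unistd.h>

// Open 'path', mark the descriptor close-on-exec, and verify the version
// reported by 'probeVersion'. Returns the descriptor, or -1 on any failure.
// 'probeVersion' is a hypothetical hook that returns the version or -1.
int openDeviceChecked(const char* path, int expectedVersion,
                      const std::function<int(int)>& probeVersion) {
    int fd = open(path, O_RDWR);
    if (fd < 0) {
        return -1;  // the device could not be opened
    }

    // Do not leak the descriptor into child processes started with exec().
    fcntl(fd, F_SETFD, FD_CLOEXEC);

    const int vers = probeVersion(fd);
    if (vers != expectedVersion) {
        // Covers both a failed probe (-1) and a protocol mismatch,
        // and closes the descriptor exactly once.
        close(fd);
        return -1;
    }
    return fd;
}
```

For a quick experiment, `openDeviceChecked("/dev/null", 8, [](int) { return 8; })` opens successfully on a typical Linux system, while a probe that returns any other value makes the function close the descriptor and return -1. The value 8 here is just a placeholder, not a claim about the real protocol version.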

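Reading and Writing the Device

After the device file is opened, its file descriptor is stored in mDriverFD. Calling the ioctl system call on mDriverFD is all it takes to interact with the binder driver.

All reads, writes and close operations on the Binder device file happen in IPCThreadState, for example in the IPCThreadState::talkWithDriver function mentioned in the previous section.

talkWithDriver() wraps the BINDER_WRITE_READ command. It writes to the binder driver, or reads from it, the local or remote objects packed in a binder_write_read struct.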

    // IPCThreadState.cpp
    status_t IPCThreadState::talkWithDriver(bool doReceive)
    {
        binder_write_read bwr;
        const bool needRead = mIn.dataPosition() >= mIn.dataSize();
        const size_t outAvail = (!doReceive || needRead) ? mOut.dataSize() : 0;

        // Data to write
        bwr.write_size = outAvail;
        bwr.write_buffer = (uintptr_t)mOut.data();

        // Data to read
        if (doReceive && needRead) {
            bwr.read_size = mIn.dataCapacity();
            bwr.read_buffer = (uintptr_t)mIn.data();
        } else {
            bwr.read_size = 0;
            bwr.read_buffer = 0;
        }
        ...
        // Use the ioctl system call to send BINDER_WRITE_READ to the binder driver
        if (ioctl(mProcess->mDriverFD, BINDER_WRITE_READ, &bwr) >= 0)
            err = NO_ERROR;
        ...
    }

BpBinder.cpp

BpBinder (Base Proxy Binder) corresponds to the service Proxy in the Java layer.

First, look at this excerpt from the header BpBinder.h:

    class BpBinder : public IBinder
    {
    public:
        inline int32_t handle() const { return mHandle; }

        virtual status_t transact(uint32_t code,
                                  const Parcel& data,
                                  Parcel* reply,
                                  uint32_t flags = 0);

        virtual status_t linkToDeath(const sp<DeathRecipient>& recipient,
                                     void* cookie = NULL,
                                     uint32_t flags = 0);

        virtual status_t unlinkToDeath(const wp<DeathRecipient>& recipient,
                                       void* cookie = NULL,
                                       uint32_t flags = 0,
                                       wp<DeathRecipient>* outRecipient = NULL);
    };

BpBinder declares important functions such as transact() and linkToDeath(). Now look at the implementation:

    status_t BpBinder::transact(
        uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags)
    {
        ...
        status_t status = IPCThreadState::self()->transact(
            mHandle, code, data, reply, flags);
        ...
        return DEAD_OBJECT;
    }

    status_t BpBinder::linkToDeath(
        const sp<DeathRecipient>& recipient, void* cookie, uint32_t flags)
    {
        ...
        IPCThreadState* self = IPCThreadState::self();
        self->requestDeathNotification(mHandle, this);
        self->flushCommands();
        ...
        return DEAD_OBJECT;
    }

In the end, BpBinder carries out its data transfer by calling functions of IPCThreadState.

IPCThreadState.cpp

AppOpsManager.cpp

AppOpsManager (App Operations Manager) manages application operations: it implements checking, starting and finishing operations requested by clients.

