iOS Study Notes 27: The Camera

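I. The Camera

On iOS there are two ways to use the device camera:

UIImagePickerController for taking photos and recording video

- Pros: easy to use and feature-rich

- Cons: highly encapsulated, so some customization is not possible

The AVFoundation framework

- Pros: very flexible; it provides many ready-made input and output devices and exposes a lot of lower-level functionality to developers

- Cons: you work closer to the hardware, so it is harder to learn and more complex to use

UIImagePickerController is enough for most everyday needs. Its drawback is that, being so highly encapsulated, it makes custom work awkward: a capture screen like that of a beauty-camera app, for example, is hard to build with it. That is when AVFoundation is worth considering.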

II. UIImagePickerController

UIImagePickerController inherits from UINavigationController and belongs to UIKit. It can pick images, take photos, and record video, and it is very convenient to use.

1. Common properties:

@property (nonatomic) UIImagePickerControllerSourceType sourceType; /* source type */
typedef NS_ENUM(NSInteger, UIImagePickerControllerSourceType) {
    UIImagePickerControllerSourceTypePhotoLibrary,    // photo library
    UIImagePickerControllerSourceTypeCamera,          // camera
    UIImagePickerControllerSourceTypeSavedPhotosAlbum // saved photos album
};

/*
 Media types. By default this array contains only kUTTypeImage, meaning still photos.
 To record video, set it to kUTTypeVideo (video without sound) or kUTTypeMovie (video with sound).
 */
@property (nonatomic,copy) NSArray<NSString *> *mediaTypes;

@property (nonatomic) NSTimeInterval videoMaximumDuration; // maximum recording length in seconds; the default is 10 minutes (600 s)

@property (nonatomic) UIImagePickerControllerQualityType videoQuality; // video quality
typedef NS_ENUM(NSInteger, UIImagePickerControllerQualityType) {
    UIImagePickerControllerQualityTypeHigh = 0,       // high quality
    UIImagePickerControllerQualityTypeMedium,         // medium, suitable for Wi-Fi transfer
    UIImagePickerControllerQualityTypeLow,            // low, suitable for cellular transfer
    UIImagePickerControllerQualityType640x480,        // 640x480
    UIImagePickerControllerQualityTypeIFrame1280x720, // 1280x720
    UIImagePickerControllerQualityTypeIFrame960x540,  // 960x540
};

@property (nonatomic) BOOL showsCameraControls; /* whether the default camera controls are shown; YES by default */

@property (nonatomic,strong) UIView *cameraOverlayView; /* view laid over the camera preview */

@property (nonatomic) CGAffineTransform cameraViewTransform; /* transform applied to the camera preview */

@property (nonatomic) UIImagePickerControllerCameraCaptureMode cameraCaptureMode; /* capture mode */
typedef NS_ENUM(NSInteger, UIImagePickerControllerCameraCaptureMode) {
    UIImagePickerControllerCameraCaptureModePhoto, // photo mode
    UIImagePickerControllerCameraCaptureModeVideo  // video recording mode
};

@property (nonatomic) UIImagePickerControllerCameraDevice cameraDevice; /* which camera to use */
typedef NS_ENUM(NSInteger, UIImagePickerControllerCameraDevice) {
    UIImagePickerControllerCameraDeviceRear, // rear camera
    UIImagePickerControllerCameraDeviceFront // front camera
};

@property (nonatomic) UIImagePickerControllerCameraFlashMode cameraFlashMode; /* flash mode */
typedef NS_ENUM(NSInteger, UIImagePickerControllerCameraFlashMode) {
    UIImagePickerControllerCameraFlashModeOff = -1, // flash off
    UIImagePickerControllerCameraFlashModeAuto = 0, // automatic flash, the default
    UIImagePickerControllerCameraFlashModeOn = 1    // flash on
};

2. Common instance methods:

- (void)takePicture;       // take a photo
- (BOOL)startVideoCapture; // start recording video
- (void)stopVideoCapture;  // stop recording video

3. Delegate methods:

/* Called when a photo or video has been captured or picked */
- (void)imagePickerController:(UIImagePickerController *)picker
    didFinishPickingMediaWithInfo:(NSDictionary<NSString *,id> *)info;

/* Called when the user cancels */
- (void)imagePickerControllerDidCancel:(UIImagePickerController *)picker;

4. Helper functions for saving to the photo album:

/* 保存图片到相簿 */

void UIImageWriteToSavedPhotosAlbum(

UIImage *image,//保存的图片UIImage

id completionTarget,//回调的执行者

SEL completionSelector, //回调方法

void *contextInfo//回调参数信息

);

//上面一般保存图片的回调方法为:

- (void)image:(UIImage *)image

didFinishSavingWithError:(NSError *)error

contextInfo:(void *)contextInfo;

/* 判断是否能保存视频到相簿 */

BOOL UIVideoAtPathIsCompatibleWithSavedPhotosAlbum(NSString *videoPath);

/* 保存视频到相簿 */

void UISaveVideoAtPathToSavedPhotosAlbum(

NSString *videoPath, //保存的视频文件路径

id completionTarget, //回调的执行者

SEL completionSelector,//回调方法

void *contextInfo//回调参数信息

);

//上面一般保存视频的回调方法为:

- (void)video:(NSString *)videoPath

didFinishSavingWithError:(NSError *)error

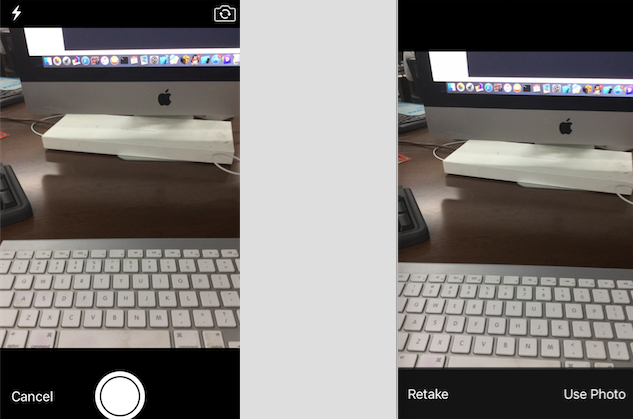

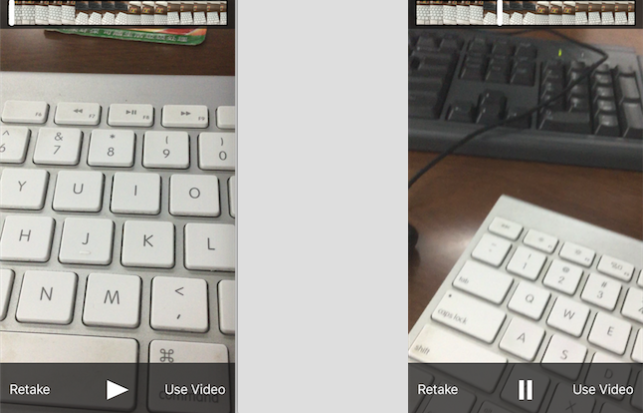

contextInfo:(void *)contextInfo;5. 使用摄像头的步骤:

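- Create a UIImagePickerController object

- Specify the source type; both photos and video need the camera

- Specify the camera device, front or rear

- Set the media types, which are defined in MobileCoreServices.framework

- Specify the capture mode; for video, the media types must be set before the capture mode

- Present the UIImagePickerController, usually modally

- When the photo or recording is finished, display or save it in the delegate method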

6. A complete example:

#import "ViewController.h"
#import <MobileCoreServices/MobileCoreServices.h>

@interface ViewController () <UIImagePickerControllerDelegate,UINavigationControllerDelegate>
@property (strong, nonatomic) UIImagePickerController *pickerController; // the picker controller
@property (strong, nonatomic) IBOutlet UIImageView *showImageView;       // shows the captured photo
@end

@implementation ViewController

- (void)viewDidLoad {
    [super viewDidLoad];
    // Set up the picker controller
    [self initPickerController];
}

/* Set up the picker controller */
- (void)initPickerController{
    // Create the picker controller
    UIImagePickerController *pickerController = [[UIImagePickerController alloc] init];
    // Use the camera as the source
    pickerController.sourceType = UIImagePickerControllerSourceTypeCamera;
    // Use the rear camera
    pickerController.cameraDevice = UIImagePickerControllerCameraDeviceRear;
    // Let the user edit the captured media before it is returned
    // (the original set the inherited `editing` property, which does not do this)
    pickerController.allowsEditing = YES;
    pickerController.delegate = self; // set the delegate
    self.pickerController = pickerController;
}

#pragma mark - UI actions

/* "Take photo" tapped */
- (IBAction)imagePicker:(id)sender {
    // Media type for still photos
    self.pickerController.mediaTypes = @[(NSString *)kUTTypeImage];
    // Capture mode: photo
    self.pickerController.cameraCaptureMode = UIImagePickerControllerCameraCaptureModePhoto;
    // Present the picker modally
    [self presentViewController:self.pickerController animated:YES completion:nil];
}

/* "Record video" tapped */
- (IBAction)videoPicker:(id)sender {
    // Media type for video; must be set before the capture mode
    self.pickerController.mediaTypes = @[(NSString *)kUTTypeMovie];
    // Capture mode: video
    self.pickerController.cameraCaptureMode = UIImagePickerControllerCameraCaptureModeVideo;
    // High video quality
    self.pickerController.videoQuality = UIImagePickerControllerQualityTypeHigh;
    // Present the picker modally
    [self presentViewController:self.pickerController animated:YES completion:nil];
}

#pragma mark - Delegate methods

/* Called after either a photo or a video has been captured */
- (void)imagePickerController:(UIImagePickerController *)picker
    didFinishPickingMediaWithInfo:(NSDictionary<NSString *,id> *)info
{
    // Read the media type of the captured item from info
    NSString *mediaType = [info objectForKey:UIImagePickerControllerMediaType];
    if ([mediaType isEqualToString:(NSString *)kUTTypeImage]) { // a photo
        // Get the captured image
        UIImage *image = [info objectForKey:UIImagePickerControllerOriginalImage];
        // Save the image to the photo album
        UIImageWriteToSavedPhotosAlbum(image, self,
            @selector(image:didFinishSavingWithError:contextInfo:), NULL);
    } else if ([mediaType isEqualToString:(NSString *)kUTTypeMovie]) { // a video
        // Get the file URL of the recording
        NSURL *url = [info objectForKey:UIImagePickerControllerMediaURL];
        NSString *path = url.path;
        // Check whether it can be saved to the photo album
        if (UIVideoAtPathIsCompatibleWithSavedPhotosAlbum(path)) {
            // Save the video to the photo album
            UISaveVideoAtPathToSavedPhotosAlbum(path, self,
                @selector(video:didFinishSavingWithError:contextInfo:), NULL);
        }
    }
    // Dismiss the picker
    [self dismissViewControllerAnimated:YES completion:nil];
}

/* Called when the user cancels the photo or recording */
- (void)imagePickerControllerDidCancel:(UIImagePickerController *)picker
{
    NSLog(@"Cancelled");
    // Dismiss the picker
    [self dismissViewControllerAnimated:YES completion:nil];
}

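#pragma mark - Callbacks after saving a photo or video

- (void)image:(UIImage *)image didFinishSavingWithError:(NSError *)error
    contextInfo:(void *)contextInfo {
    if (error) { // saving can fail, e.g. when photo access is denied
        NSLog(@"Failed to save photo: %@", error);
        return;
    }
    NSLog(@"Photo saved");
    self.showImageView.image = image;
    self.showImageView.contentMode = UIViewContentModeScaleToFill;
}

- (void)video:(NSString *)videoPath didFinishSavingWithError:(NSError *)error
    contextInfo:(void *)contextInfo {
    if (error) {
        NSLog(@"Failed to save video: %@", error);
        return;
    }
    NSLog(@"Video saved");
}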

@end

It is very capable, covers most ordinary needs, and is simple to use.
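One thing the example takes for granted is that a camera is actually present. Setting sourceType to UIImagePickerControllerSourceTypeCamera where no camera is available (the Simulator, for instance) raises an exception, so a guard along the following lines is worth running before the picker is configured. This is only a minimal sketch: the helper name `canRecordVideoWithCamera` is made up for illustration, while the two UIImagePickerController class methods it calls are the standard UIKit ones.

```objc
#import <UIKit/UIKit.h>
#import <MobileCoreServices/MobileCoreServices.h>

/* Hypothetical helper: YES only if a camera exists and it can record movies */
static BOOL canRecordVideoWithCamera(void) {
    UIImagePickerControllerSourceType source = UIImagePickerControllerSourceTypeCamera;
    // No camera at all (e.g. Simulator): do not touch sourceType
    if (![UIImagePickerController isSourceTypeAvailable:source]) {
        return NO;
    }
    // Media types the camera source supports on this device
    NSArray<NSString *> *types =
        [UIImagePickerController availableMediaTypesForSourceType:source];
    return [types containsObject:(NSString *)kUTTypeMovie];
}
```

In the example above, such a check could sit at the top of initPickerController (using only the isSourceTypeAvailable: part for photos) and hide or disable the buttons when it fails.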

III. Taking Photos and Recording Video with AVFoundation

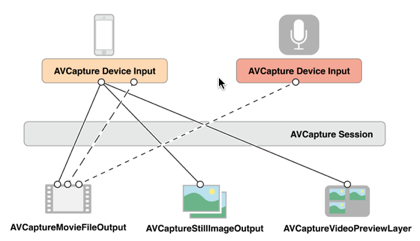

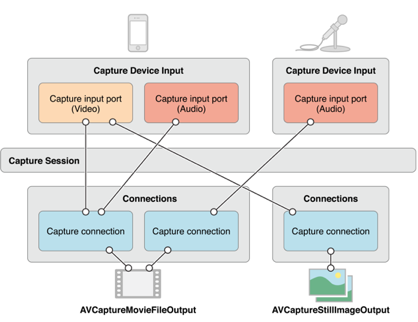

First, the AVFoundation classes involved in taking photos and recording video:

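AVCaptureSession:

The capture session. It routes captured audio and video data to the outputs; one session can have several inputs and outputs.

AVCaptureVideoPreviewLayer:

The camera preview layer, a CALayer subclass that shows the photo or video live.

AVCaptureDevice:

An input device such as a microphone or camera; properties of the physical device can be configured through it.

AVCaptureDeviceInput:

Manages the data coming from an input device.

AVCaptureOutput:

Manages output data. You normally use one of its subclasses:

AVCaptureAudioDataOutput  // audio output; delivers audio sample buffers (CMSampleBuffer)
AVCaptureStillImageOutput // still-image output; the sample buffer can be converted to NSData
AVCaptureVideoDataOutput  // video output; delivers video frames as sample buffers (CMSampleBuffer)
/* File outputs, which write their data to a file */
AVCaptureFileOutput {     // subclasses
    AVCaptureAudioFileOutput // writes an audio file
    AVCaptureMovieFileOutput // writes a movie file
}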
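To make "configuring the physical device" a little more concrete, here is a small sketch of setting a focus point on an AVCaptureDevice. Hardware settings can only be changed between lockForConfiguration: and unlockForConfiguration. The function name `focusDevice` is hypothetical, and the point is assumed to be in the device's own coordinate space, which runs from (0,0) to (1,1).

```objc
#import <AVFoundation/AVFoundation.h>

/* Hypothetical helper: focus once at a point given in device coordinates (0,0)-(1,1) */
static BOOL focusDevice(AVCaptureDevice *device, CGPoint devicePoint) {
    NSError *error = nil;
    // The device must be locked before any hardware property is changed
    if (![device lockForConfiguration:&error]) {
        NSLog(@"Could not lock device for configuration: %@", error);
        return NO;
    }
    // Not every camera supports these features, so check before setting
    if ([device isFocusPointOfInterestSupported]) {
        device.focusPointOfInterest = devicePoint;
    }
    if ([device isFocusModeSupported:AVCaptureFocusModeAutoFocus]) {
        device.focusMode = AVCaptureFocusModeAutoFocus;
    }
    [device unlockForConfiguration];
    return YES;
}
```

A tap location in a preview layer can be converted to device coordinates with AVCaptureVideoPreviewLayer's captureDevicePointOfInterestForPoint: before it is passed in.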

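The general steps for taking a photo or recording video are:

- Create an AVCaptureSession object

- Use the AVCaptureDevice class methods to obtain the device you want

- Create and initialize an AVCaptureDeviceInput from that AVCaptureDevice

- Initialize the output object; which AVCaptureOutput subclass to use depends on the data you want

- Add the AVCaptureDeviceInput and the AVCaptureOutput to the AVCaptureSession

- Create an AVCaptureVideoPreviewLayer, give it the session, and add the layer to a container view

- Call the session's startRunning method to begin capturing and stopRunning to stop

- Write the captured audio or video data to the target file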

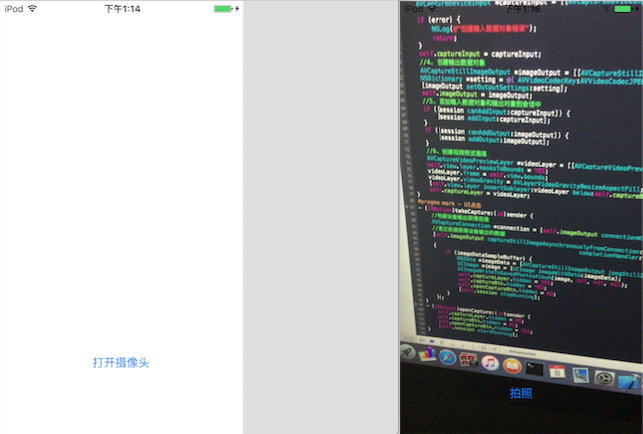

An example of taking a photo:

#import "ViewController.h"
#import <AVFoundation/AVFoundation.h>

@interface ViewController ()
@property (strong, nonatomic) AVCaptureSession *session;                // capture session
@property (strong, nonatomic) AVCaptureDeviceInput *captureInput;       // input object
@property (strong, nonatomic) AVCaptureStillImageOutput *imageOutput;   // output object
@property (strong, nonatomic) AVCaptureVideoPreviewLayer *captureLayer; // preview layer
@property (strong, nonatomic) IBOutlet UIButton *captureBtn;            // "take photo" button
@property (strong, nonatomic) IBOutlet UIButton *openCaptureBtn;        // "open camera" button
@end

@implementation ViewController

- (void)viewDidLoad {
    [super viewDidLoad];
    [self initCapture];
    self.openCaptureBtn.hidden = NO;
    self.captureBtn.hidden = YES;
}

/* Set up the camera */
- (void)initCapture{
    //1. Create the capture session
    AVCaptureSession *session = [[AVCaptureSession alloc] init];
    self.session = session;
    // Use 1280x720 if the device supports that preset
    if ([session canSetSessionPreset:AVCaptureSessionPreset1280x720]) {
        session.sessionPreset = AVCaptureSessionPreset1280x720;
    }

    //2. Get the rear camera device
    AVCaptureDevice *device = nil;
    NSArray *cameras = [AVCaptureDevice devicesWithMediaType:AVMediaTypeVideo];
    for (AVCaptureDevice *camera in cameras) {
        if (camera.position == AVCaptureDevicePositionBack) { // found the rear camera
            device = camera;
            break;
        }
    }
    if (!device) {
        NSLog(@"Could not get the rear camera");
        return;
    }

    //3. Create the input object
    NSError *error = nil;
    AVCaptureDeviceInput *captureInput = [[AVCaptureDeviceInput alloc] initWithDevice:device
                                                                               error:&error];
    if (!captureInput) { // test the return value; error is only meaningful on failure
        NSLog(@"Could not create the input object: %@", error);
        return;
    }
    self.captureInput = captureInput;

    //4. Create the output object
    AVCaptureStillImageOutput *imageOutput = [[AVCaptureStillImageOutput alloc] init];
    NSDictionary *setting = @{ AVVideoCodecKey:AVVideoCodecJPEG };
    [imageOutput setOutputSettings:setting];
    self.imageOutput = imageOutput;

    //5. Add the input and the output to the session
    if ([session canAddInput:captureInput]) {
        [session addInput:captureInput];
    }
    if ([session canAddOutput:imageOutput]) {
        [session addOutput:imageOutput];
    }

    //6. Create the preview layer
    AVCaptureVideoPreviewLayer *videoLayer =
        [[AVCaptureVideoPreviewLayer alloc] initWithSession:session];
    self.view.layer.masksToBounds = YES;
    videoLayer.frame = self.view.bounds;
    videoLayer.videoGravity = AVLayerVideoGravityResizeAspectFill;
    // Insert the layer beneath the "take photo" button
    [self.view.layer insertSublayer:videoLayer below:self.captureBtn.layer];
    self.captureLayer = videoLayer;
}

#pragma mark - UI actions

/* "Take photo" tapped */
- (IBAction)takeCapture:(id)sender {
    // Get the connection from the output
    AVCaptureConnection *connection = [self.imageOutput connectionWithMediaType:AVMediaTypeVideo];
    // Capture the data through the connection
    [self.imageOutput captureStillImageAsynchronouslyFromConnection:connection
        completionHandler:^(CMSampleBufferRef imageDataSampleBuffer, NSError *error)
    {
        if (!imageDataSampleBuffer) { // converting a NULL buffer would raise an exception
            NSLog(@"Capture failed: %@", error);
            return;
        }
        // Get the JPEG data of the captured image
        NSData *imageData =
            [AVCaptureStillImageOutput jpegStillImageNSDataRepresentation:imageDataSampleBuffer];
        UIImage *image = [UIImage imageWithData:imageData];
        UIImageWriteToSavedPhotosAlbum(image, nil, nil, NULL); // save to the photo album
        // The handler is not guaranteed to run on the main thread, so hop there for UI work
        dispatch_async(dispatch_get_main_queue(), ^{
            self.captureLayer.hidden = YES;
            self.captureBtn.hidden = YES;
            self.openCaptureBtn.hidden = NO;
            [self.session stopRunning]; // stop capturing
        });
    }];
}

/* "Open camera" tapped */
- (IBAction)openCapture:(id)sender {
    self.captureLayer.hidden = NO;
    self.captureBtn.hidden = NO;
    self.openCaptureBtn.hidden = YES;
    [self.session startRunning]; // start capturing
}

@end
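The example assumes the app is already allowed to use the camera. On iOS 10 and later the Info.plist needs an NSCameraUsageDescription entry (and NSMicrophoneUsageDescription if audio is recorded), and the user may still refuse access. Below is a minimal sketch of checking and requesting permission before the session is started; the helper name `requestCameraAccess` is made up for illustration, while the AVCaptureDevice class methods are the framework's own.

```objc
#import <AVFoundation/AVFoundation.h>

/* Hypothetical helper: reports the camera permission result on the main queue */
static void requestCameraAccess(void (^handler)(BOOL granted)) {
    AVAuthorizationStatus status =
        [AVCaptureDevice authorizationStatusForMediaType:AVMediaTypeVideo];
    if (status == AVAuthorizationStatusAuthorized) {
        // Already allowed
        dispatch_async(dispatch_get_main_queue(), ^{ handler(YES); });
    } else if (status == AVAuthorizationStatusNotDetermined) {
        // First use: the system shows its permission alert
        [AVCaptureDevice requestAccessForMediaType:AVMediaTypeVideo
                                 completionHandler:^(BOOL granted) {
            // This handler runs on an arbitrary queue
            dispatch_async(dispatch_get_main_queue(), ^{ handler(granted); });
        }];
    } else {
        // Denied or restricted; only the Settings app can change this
        dispatch_async(dispatch_get_main_queue(), ^{ handler(NO); });
    }
}
```

One way to use it would be to wrap the body of openCapture: in the callback, calling startRunning only when `granted` is YES and showing a message otherwise.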

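Recording video works much the same way. The code below is a modification of the photo example above:

- There is one more input than for photos, the audio input, and the output object is of a different class

- The recording delegate methods need to be implemented

- Recording is started and stopped on the output object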

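1. Get the audio input object and the movie output object

// These lines go into initCapture. They also assume the class declares
// @property (strong, nonatomic) AVCaptureMovieFileOutput *movieOutput;
// and conforms to AVCaptureFileOutputRecordingDelegate.

// Get the microphone device
AVCaptureDevice *audioDevice = [AVCaptureDevice devicesWithMediaType:AVMediaTypeAudio].firstObject;
if (!audioDevice) {
    NSLog(@"Could not get the microphone");
    return;
}
// Create the audio input object (named differently from `device` and `error` above
// so that both can live in the same method)
NSError *audioError = nil;
AVCaptureDeviceInput *audioInput = [[AVCaptureDeviceInput alloc] initWithDevice:audioDevice
                                                                         error:&audioError];
if (!audioInput) {
    NSLog(@"Could not create the audio input object: %@", audioError);
    return;
}
// Create the movie file output object
AVCaptureMovieFileOutput *movieOutput = [[AVCaptureMovieFileOutput alloc] init];
self.movieOutput = movieOutput;

2. Add them to the capture session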

if ([session canAddInput:captureInput]) {
    [session addInput:captureInput];
}
if ([session canAddInput:audioInput]) {
    [session addInput:audioInput];
}
if ([session canAddOutput:movieOutput]) {
    [session addOutput:movieOutput];
    // Enable video stabilization. The connection only exists once the output
    // has been added to the session, so look it up after addOutput:.
    AVCaptureConnection *connection = [movieOutput connectionWithMediaType:AVMediaTypeVideo];
    if ([connection isVideoStabilizationSupported]) {
        connection.preferredVideoStabilizationMode = AVCaptureVideoStabilizationModeAuto;
    }
}

3. Handle the record button

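if (!self.movieOutput.isRecording) {
    NSString *outputPath = [NSTemporaryDirectory() stringByAppendingPathComponent:@"myMovie.mov"];
    NSURL *url = [NSURL fileURLWithPath:outputPath]; // note: a file URL, not an ordinary URL
    // Recording fails if a file already exists at the URL, so remove any previous take
    [[NSFileManager defaultManager] removeItemAtURL:url error:NULL];
    // Start recording and set the delegate that monitors it; the file is written to the URL
    [self.movieOutput startRecordingToOutputFileURL:url recordingDelegate:self];
} else {
    [self.movieOutput stopRecording]; // stop recording
}

4. Implement the recording delegate, AVCaptureFileOutputRecordingDelegate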

/* Called when recording starts */
- (void)captureOutput:(AVCaptureFileOutput *)captureOutput
    didStartRecordingToOutputFileAtURL:(NSURL *)fileURL
    fromConnections:(NSArray *)connections
{
    NSLog(@"Recording started");
}

/* Called when recording finishes */
- (void)captureOutput:(AVCaptureFileOutput *)captureOutput
    didFinishRecordingToOutputFileAtURL:(NSURL *)outputFileURL
    fromConnections:(NSArray *)connections
    error:(NSError *)error
{
    NSLog(@"Recording finished");
    NSString *path = outputFileURL.path;
    // Save the recorded video to the photo album
    if (UIVideoAtPathIsCompatibleWithSavedPhotosAlbum(path)) {
        UISaveVideoAtPathToSavedPhotosAlbum(path, nil, nil, NULL);
    }
}

IV. Summary of Audio and Video on iOS

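I have not covered everything in the table above. I concentrated on the AVFoundation framework, which has a great deal more in it to learn; it is a very powerful framework and worth studying in more depth when you have time.

iOS multimedia support is flexible and comprehensive. How to choose among the options in a real project is up to you, and the table above is only meant as a reference.

If this has been of some help to you, I'm glad!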