【Java】JDBC Part5.1 Hikari连接池补充

Hikari Connection Pool Hikari 连接池

HikariCP 官方文档 https://github.com/brettwooldridge/HikariCP

Maven依赖

一般都用8版本

Maven仓库所在地址

https://mvnrepository.com/artifact/com.zaxxer/HikariCP/3.4.5

<dependency> <groupId>com.zaxxer</groupId>

<artifactId>HikariCP</artifactId> <version>3.4.2</version> </dependency>

官方硬编码获取连接

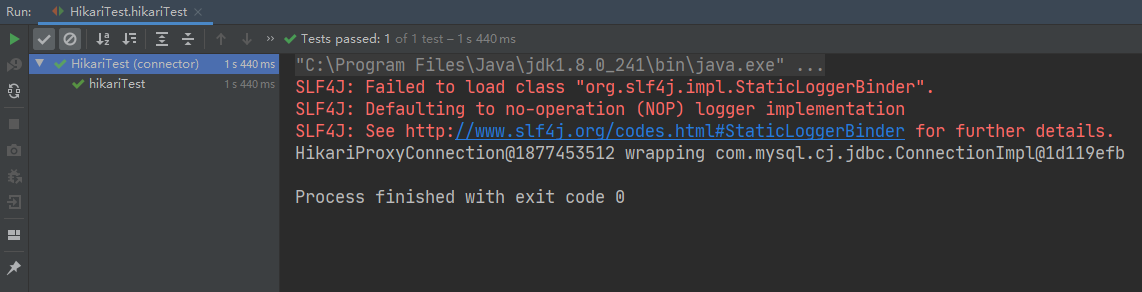

public class HikariTest { @Test public void hikariTest() throws Exception { HikariConfig config = new HikariConfig(); config.setJdbcUrl("jdbc:mysql:///jdbc_db?serverTimezone=Asia/Shanghai"); config.setUsername("root"); config.setPassword("123456"); config.addDataSourceProperty("cachePrepStmts", "true"); config.addDataSourceProperty("prepStmtCacheSize", "300"); config.addDataSourceProperty("prepStmtCacheSqlLimit", "2048"); HikariDataSource dataSource = new HikariDataSource(config); Connection connection = dataSource.getConnection(); System.out.println(connection); connection.close(); } }

因为没有配置SLF4J日志工厂,这里报加载失败信息

上面的硬编码配置还支持了设置连接池的SQL预编译相关

- 开启与编译对象缓存

- 设置缓存个数300

- 设置缓存上限2048个

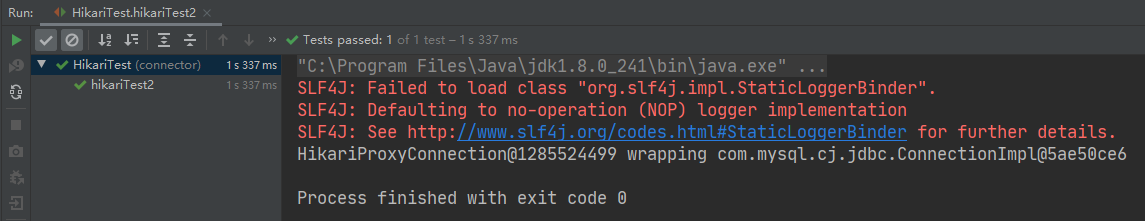

Hikari也是支持对配置文件读取方式的

但是啊但是啊,官方解释的驱动名很费解,我试了半天都不行

然后在这个博客看到了:http://zetcode.com/articles/hikaricp/

就是说你连MySQL不需要配置驱动的,直接写Url就好了

hikari.properties

jdbcUrl = jdbc:mysql:///jdbc_db?serverTimezone=Asia/Shanghai dataSource.user = root dataSource.password = 123456 # 开启SQL预编译对象缓存 dataSource.cachePrepStmts = true # SQL预编译对象缓存个数 256 dataSource.prepStmtCacheSize = 256 # SQL预编译对象缓存个数上限 512 dataSource.prepStmtCacheSqlLimit = 512

连接测试

@Test public void hikariTest2() throws Exception { final String configureFile = "src/main/resources/hikari.properties"; HikariConfig configure = new HikariConfig(configureFile); HikariDataSource dataSource = new HikariDataSource(configure); Connection connection = dataSource.getConnection(); System.out.println(connection); connection.close(); }

封装工具类

public class JdbcHikariUtil { private static final DataSource dataSource = new HikariDataSource(new HikariConfig("src/main/resources/hikari.properties")); public static Connection getConnection(){ try { return dataSource.getConnection(); } catch (SQLException sqlException) { sqlException.printStackTrace(); } return null; } }

做一个全连接池和原生JDBC的封装工具类

首先是Maven依赖

<!-- https://mvnrepository.com/artifact/mysql/mysql-connector-java --> <dependency> <groupId>mysql</groupId> <artifactId>mysql-connector-java</artifactId> <version>8.0.19</version> </dependency> <!-- https://mvnrepository.com/artifact/junit/junit --> <dependency> <groupId>junit</groupId> <artifactId>junit</artifactId> <version>4.13</version> <scope>test</scope> </dependency> <!-- https://mvnrepository.com/artifact/com.mchange/c3p0 --> <dependency> <groupId>com.mchange</groupId> <artifactId>c3p0</artifactId> <version>0.9.5.5</version> </dependency> <!-- https://mvnrepository.com/artifact/commons-dbutils/commons-dbutils --> <dependency> <groupId>commons-dbutils</groupId> <artifactId>commons-dbutils</artifactId> <version>1.7</version> </dependency> <!-- https://mvnrepository.com/artifact/org.apache.commons/commons-dbcp2 --> <dependency> <groupId>org.apache.commons</groupId> <artifactId>commons-dbcp2</artifactId> <version>2.7.0</version> </dependency> <!-- https://mvnrepository.com/artifact/org.apache.commons/commons-pool2 --> <dependency> <groupId>org.apache.commons</groupId> <artifactId>commons-pool2</artifactId> <version>2.8.0</version> </dependency> <!-- https://mvnrepository.com/artifact/com.alibaba/druid --> <dependency> <groupId>com.alibaba</groupId> <artifactId>druid</artifactId> <version>1.1.22</version> </dependency> <dependency> <groupId>com.zaxxer</groupId> <artifactId>HikariCP</artifactId> <version>3.4.2</version> </dependency>

然后是各个连接池的配置

原生JDBC jdbc.properties

driverClass = com.mysql.cj.jdbc.Driver

url = jdbc:mysql://localhost:3306/jdbc_db?serverTimezone=Asia/Shanghai

user = root

password = 123456

C3P0 c3p0-config.xml

<?xml version="1.0" encoding="UTF-8" ?> <c3p0-config> <!-- 自定义的配置命名--> <named-config name="c3p0_xml_config"> <!-- 四个基本信息 --> <property name="driverClass">com.mysql.cj.jdbc.Driver</property> <!-- 默认本地可以省略 jdbc:mysql:///jdbc_db?serverTimezone=Asia/Shanghai --> <property name="jdbcUrl">jdbc:mysql://localhost:3306/jdbc_db?serverTimezone=Asia/Shanghai</property> <property name="user">root</property> <property name="password">123456</property> <!-- 连接池管理信息 --> <!-- 连接对象数不够时,每次申请 迭增的连接数 --> <property name="acquireIncrement">5</property> <!-- 初始池大小存放的连接对象数 --> <property name="initialPoolSize">10</property> <!-- 最小连接对象数 --> <property name="minPoolSize">10</property> <!-- 最大连接对象数,不可超出的范围 --> <property name="maxPoolSize">100</property> <!-- 最多维护的SQL编译对象个数--> <property name="maxStatements">50</property> <!-- 每个连接对象最多可使用的SQL编译对象的个数 --> <property name="maxStatementsPerConnection">2</property> </named-config> </c3p0-config>

DBCP dbcp.properties

driverClassName = com.mysql.cj.jdbc.Driver

url = jdbc:mysql:///jdbc_db?serverTimezone=Asia/Shanghai

username = root

password = 123456

Druid druid.properties ,可以共用c3p0,基本一样

driverClassName = com.mysql.cj.jdbc.Driver

url = jdbc:mysql:///jdbc_db?serverTimezone=Asia/Shanghai

username = root

password = 123456

Hikari hikari.properties

jdbcUrl = jdbc:mysql:///jdbc_db?serverTimezone=Asia/Shanghai

dataSource.user = root

dataSource.password = 123456

# 开启SQL预编译对象缓存

dataSource.cachePrepStmts = true

# SQL预编译对象缓存个数 256

dataSource.prepStmtCacheSize = 256

# SQL预编译对象缓存个数上限 512

dataSource.prepStmtCacheSqlLimit = 512

完整封装工具类

package cn.dai.util; import com.alibaba.druid.pool.DruidDataSourceFactory; import com.mchange.v2.c3p0.ComboPooledDataSource; import com.zaxxer.hikari.HikariConfig; import com.zaxxer.hikari.HikariDataSource; import org.apache.commons.dbcp2.BasicDataSourceFactory; import javax.sql.DataSource; import java.io.InputStream; import java.sql.*; import java.util.Properties; /** * @author ArkD42 * @file Jdbc * @create 2020 - 04 - 24 - 22:04 */ public class CompleteJdbcUtils { private CompleteJdbcUtils(){} //private static String driverClass; private static String url; private static String user; private static String password; private static DataSource dataSourceFromDBCP; private static DataSource dataSourceFromDruid; private static final DataSource dataSourceFromC3P0 = new ComboPooledDataSource("c3p0_xml_config"); private static final DataSource dataSourceFromHikari = new HikariDataSource(new HikariConfig("src/main/resources/hikari.properties")); static { InputStream originalJdbcStream = CompleteJdbcUtils.class.getClassLoader().getResourceAsStream("jdbc.properties"); Properties originalJdbcProperties = new Properties(); InputStream dbcpStream = CompleteJdbcUtils.class.getClassLoader().getResourceAsStream("dbcp.properties"); Properties dbcpProperties = new Properties(); InputStream druidStream = CompleteJdbcUtils.class.getClassLoader().getResourceAsStream("druid.properties"); Properties druidProperties = new Properties(); try { originalJdbcProperties.load(originalJdbcStream); //driverClass = originalJdbcProperties.getProperty("driverClass"); url = originalJdbcProperties.getProperty("url"); user = originalJdbcProperties.getProperty("user"); password = originalJdbcProperties.getProperty("password"); //Class.forName(driverClass); //--------------------------------------------------------------------------\\ dbcpProperties.load( dbcpStream ); dataSourceFromDBCP = BasicDataSourceFactory.createDataSource(dbcpProperties); druidProperties.load( druidStream ); dataSourceFromDruid = DruidDataSourceFactory.createDataSource(druidProperties); } catch ( Exception e) { e.printStackTrace(); } } // 原生JDBC public static Connection getConnectionByOriginalJdbc(){ try { return DriverManager.getConnection(url,user,password); } catch (SQLException sqlException) { sqlException.printStackTrace(); } return null; } // C3P0 public static Connection getConnectionByC3P0(){ try { return dataSourceFromC3P0.getConnection(); } catch (SQLException sqlException) { sqlException.printStackTrace(); } return null; } // DBCP public static Connection getConnectionByDBCP(){ try { return dataSourceFromDBCP.getConnection(); } catch (SQLException sqlException) { sqlException.printStackTrace(); } return null; } // Druid public static Connection getConnectionByDruid(){ try { return dataSourceFromDruid.getConnection(); } catch (SQLException sqlException) { sqlException.printStackTrace(); } return null; } // Hikari public static Connection getConnectionByHikari(){ try { return dataSourceFromHikari.getConnection(); } catch (SQLException sqlException) { sqlException.printStackTrace(); } return null; } // 资源释放 public static void releaseResource(Connection connection, PreparedStatement preparedStatement, ResultSet resultSet){ try{ if (resultSet != null) resultSet.close(); if (preparedStatement != null) preparedStatement.close(); if (connection != null) connection.close(); } catch (SQLException sqlException){ sqlException.printStackTrace(); } } }

测试

@Test public void te5() throws SQLException { Connection connectionByOriginalJdbc = CompleteJdbcUtils.getConnectionByOriginalJdbc(); Connection connectionByC3P0 = CompleteJdbcUtils.getConnectionByC3P0(); Connection connectionByDBCP = CompleteJdbcUtils.getConnectionByDBCP(); Connection connectionByDruid = CompleteJdbcUtils.getConnectionByDruid(); Connection connectionByHikari = CompleteJdbcUtils.getConnectionByHikari(); Connection[]connections = new Connection[]{ connectionByOriginalJdbc, connectionByC3P0, connectionByDBCP, connectionByDruid, connectionByHikari }; for (Connection connection: connections) { System.out.println(connection); connection.close(); } }

对应完整工具类封装的一些常用方法

- 增删改 Update

- 查询单个结果 queryOne

- 查询多个结果 queryToList

- 对注入条件的封装

// 获取SQL预编译对象 public static PreparedStatement getPreparedStatement(Connection connection,String sql){ try { return connection.prepareStatement(sql); } catch (SQLException sqlException) { sqlException.printStackTrace(); } return null; } // 参数注入 public static void argumentsInject(PreparedStatement preparedStatement,Object[] args){ for (int i = 0; i < args.length; i++) { try { preparedStatement.setObject(i+1,args[i]); } catch (SQLException sqlException) { sqlException.printStackTrace(); } } } // 转换日期格式 public static java.sql.Date parseToSqlDate(String patternTime){ SimpleDateFormat simpleDateFormat = new SimpleDateFormat("yyyy-MM-dd"); java.util.Date date = null;//"1987-09-01" try { date = simpleDateFormat.parse(patternTime); } catch (ParseException e) { e.printStackTrace(); } return new java.sql.Date(date.getTime()); } // 更新操作 public static int update(Connection connection,String sql,Object[] args) { PreparedStatement preparedStatement = getPreparedStatement(connection, sql); if (args != null) argumentsInject(preparedStatement,args); try { return preparedStatement.executeUpdate(); } catch (SQLException sqlException) { sqlException.printStackTrace(); } return 0; } // 自己封装的查询 public static <T> List<T> queryToList(Connection connection,Class<T> tClass,String sql,Object[] args){ PreparedStatement preparedStatement = getPreparedStatement(connection, sql); if (args != null) argumentsInject(preparedStatement,args); ResultSet resultSet = null; try{ resultSet = preparedStatement.executeQuery(); ResultSetMetaData metaData = resultSet.getMetaData(); int columnCount = metaData.getColumnCount(); List<T> tList = new ArrayList<T>(); while(resultSet.next()){ T t = tClass.newInstance(); for (int i = 0; i < columnCount; i++) { Object columnValue = resultSet.getObject(i + 1); String columnLabel = metaData.getColumnLabel(i + 1); Field field = tClass.getDeclaredField(columnLabel); field.setAccessible( true ); field.set(t,columnValue); } tList.add(t); } return tList; } catch (Exception e){ e.printStackTrace(); } finally { releaseResource(connection,preparedStatement,resultSet); } return null; } // MapList集合封装 public static List<Map<String, Object>> queryToList(Connection connection,String sql, Object[] args){ PreparedStatement preparedStatement = getPreparedStatement(connection, sql); if (args != null) argumentsInject(preparedStatement,args); ResultSet resultSet = null; try{ resultSet = preparedStatement.executeQuery(); ResultSetMetaData metaData = resultSet.getMetaData(); int columnCount = metaData.getColumnCount(); List<Map<String,Object>> mapList = new ArrayList<Map<String, Object>>(); while(resultSet.next()){ Map<String,Object> row = new HashMap<String, Object>(); for (int i = 0; i < columnCount; i++) { String columnLabel = metaData.getColumnLabel(i + 1); Object columnValue = resultSet.getObject(i + 1); row.put(columnLabel,columnValue); } mapList.add(row); } return mapList; }catch (Exception e){ e.printStackTrace(); } finally { releaseResource(connection,preparedStatement,resultSet);} return null; } // 反射实现单个查询 public static <T> T queryOne(Connection connection,Class<T> tClass,String sql,Object[] args){ PreparedStatement preparedStatement = getPreparedStatement(connection,sql); argumentsInject(preparedStatement,args); ResultSet resultSet = null; try{ resultSet = preparedStatement.executeQuery(); ResultSetMetaData metaData = resultSet.getMetaData(); int columnCount = metaData.getColumnCount(); if (resultSet.next()){ T t = tClass.newInstance(); for (int i = 0; i < columnCount; i++) { Object columnValue = resultSet.getObject(i + 1); String columnLabel = metaData.getColumnLabel(i + 1); Field field = tClass.getDeclaredField(columnLabel); field.setAccessible( true ); field.set(t,columnValue); } return t; } }catch (Exception e){ e.printStackTrace();} finally { releaseResource(connection,preparedStatement,resultSet);} return null; } // Map单个查询 public static Map<String,Object> queryOne(Connection connection,String sql,Object[] args){ PreparedStatement preparedStatement = getPreparedStatement(connection,sql); argumentsInject(preparedStatement,args); ResultSet resultSet = null; try{ resultSet = preparedStatement.executeQuery(); ResultSetMetaData metaData = resultSet.getMetaData(); int columnCount = metaData.getColumnCount(); if (resultSet.next()){ Map<String,Object> map = new HashMap<String, Object>(); for (int i = 0; i < columnCount; i++) { Object columnValue = resultSet.getObject(i + 1); String columnLabel = metaData.getColumnLabel(i + 1); map.put(columnLabel,columnValue); } return map; } }catch (Exception e){ e.printStackTrace();} finally { releaseResource(connection,preparedStatement,resultSet);} return null; } // 求特殊值的通用方法 聚合函数 public <E> E getValue(Connection connection,String sql,Object[] args){ PreparedStatement preparedStatement = getPreparedStatement(connection, sql); if (args != null) argumentsInject(preparedStatement,args); ResultSet resultSet = null; try{ resultSet = preparedStatement.executeQuery(); if (resultSet.next())return (E)resultSet.getObject(1);; } catch (Exception e){ e.printStackTrace(); } finally{ CompleteJdbcUtils.releaseResource(connection,preparedStatement,resultSet);} return null; }

浙公网安备 33010602011771号

浙公网安备 33010602011771号