Deploying ELK with docker-compose

Directory structure

- elk
  - docker-compose.yml
  - elasticsearch.yml
  - kibana.yml
  - logstash.yml
  - logstash.conf
  - filebeat.yml
  - data/
    - elasticsearch/
    - logs/
    - password.txt

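data/elasticsearch/ is the folder where Elasticsearch stores its data.

data/password.txt is a file for keeping the passwords. If you can remember them, ignore it.

data/logs is the directory of logs to collect, i.e. the place where your application writes its logs. You would not normally set things up this way; it is here only to test the result.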
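The layout above can be created in one step. This is a minimal sketch, assuming you run it from the parent directory of the project; the `ELK_DIR` variable is an illustrative name, not something the stack itself reads.

```shell
#!/usr/bin/env bash
# Create the directory layout described above. Safe to re-run.
set -eu

# Assumption: project root name taken from the tree above; override if needed.
ELK_DIR="${ELK_DIR:-elk}"

# Data directories that are bind-mounted into the containers.
mkdir -p "$ELK_DIR/data/elasticsearch" "$ELK_DIR/data/logs"

# Create empty placeholder files only where nothing exists yet,
# so existing configuration is never overwritten.
for f in docker-compose.yml elasticsearch.yml kibana.yml \
         logstash.yml logstash.conf filebeat.yml data/password.txt; do
  [ -e "$ELK_DIR/$f" ] || : > "$ELK_DIR/$f"
done
```

The official Elasticsearch image runs as uid 1000, so if Elasticsearch later reports that it cannot write to its data path, the ownership of `data/elasticsearch` on the host is a likely place to look.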

The docker-compose.yml file

    version: "3"
    services:
      es:
        image: elasticsearch:7.6.1
        labels:
          co.elastic.logs/enabled: "false"
        hostname: docker-es
        ports:
          - "9200:9200"
          - "9300:9300"
        environment:
          - discovery.type=single-node
          - "ES_JAVA_OPTS=-Xms1g -Xmx1g"
        volumes:
          - ./elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml
          - ./data/elasticsearch:/usr/share/elasticsearch/data
      kibana:
        image: kibana:7.6.1
        labels:
          co.elastic.logs/enabled: "false"
        hostname: docker-kibana
        ports:
          - "5601:5601"
        volumes:
          - ./kibana.yml:/usr/share/kibana/config/kibana.yml
        depends_on:
          - es
      logstash:
        image: logstash:7.6.1
        hostname: docker-logstash
        ports:
          - "5044:5044"
          - "9600:9600"
        volumes:
          - ./logstash.yml:/usr/share/logstash/config/logstash.yml
          - ./logstash.conf:/usr/share/logstash/pipeline/logstash.conf
          - ./data/logs:/logs
        environment:
          # Logstash takes its JVM options from LS_JAVA_OPTS, not ES_JAVA_OPTS
          - "LS_JAVA_OPTS=-Xms512m -Xmx512m"
          - "LS_OPTS=--config.reload.automatic"
        depends_on:
          - es
      filebeat:
        image: docker.elastic.co/beats/filebeat:7.6.1
        labels:
          co.elastic.logs/enabled: "false"
        user: root
        hostname: docker-filebeat
        volumes:
          - ./filebeat.yml:/usr/share/filebeat/filebeat.yml
          - "/var/lib/docker/containers:/var/lib/docker/containers:ro"
          - "/var/run/docker.sock:/var/run/docker.sock:ro"
        depends_on:
          - es

elasticsearch.yml

    network.host: 0.0.0.0
    http.port: 9200
    transport.port: 9300
    http.cors.enabled: true
    http.cors.allow-origin: "*"
    xpack.security.enabled: true
    xpack.security.http.ssl.enabled: false
    xpack.security.transport.ssl.enabled: false

kibana.yml

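    server:
      host: "0.0.0.0"
      port: 5601
    # ES
    elasticsearch:
      # Replace this with your own server's IP; 127.0.0.1 and 0.0.0.0 cannot be used
      hosts: ["http://docker-es:9200"]
      # On 7.6.x, elasticsearch-setup-passwords generates a password for the
      # built-in `kibana` user; `kibana_system` is the name used from 7.8 on,
      # so adjust the username to match your version
      username: "kibana_system"
      password: "xxxxx"
    # Chinese UI localization
    i18n.locale: "zh-CN"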

logstash.yml

    http.host: "0.0.0.0"
    path.config: /usr/share/logstash/pipeline/*.conf
    xpack.monitoring.enabled: false

logstash.conf

    input {
      file {
        path => "/logs/*/*.log"
        start_position => "beginning"
      }
    }

    output {
      elasticsearch {
        hosts => [ "http://docker-es:9200" ]
        user => "elastic"
        password => "xxxx"
        index => "logstash-%{+YYYY-MM-dd}"
      }
    }
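Note that the pattern `/logs/*/*.log` only matches files exactly one directory below `/logs`, which is the container side of the `./data/logs` mount. A file placed directly in `data/logs` would not be picked up. A minimal sketch for producing a matching test file, assuming it is run from the elk directory; `demo-app` and `app.log` are hypothetical names chosen for illustration:

```shell
#!/usr/bin/env bash
# Write one test line into a file that matches /logs/*/*.log inside the container.
set -eu

LOG_ROOT="${LOG_ROOT:-./data/logs}"   # host side of the ./data/logs:/logs volume
APP_DIR="$LOG_ROOT/demo-app"          # hypothetical application name

mkdir -p "$APP_DIR"

# Append rather than overwrite, so repeated runs keep adding new events.
printf '%s INFO hello from demo-app\n' "$(date '+%Y-%m-%d %H:%M:%S')" >> "$APP_DIR/app.log"
```

Once the stack is running, each appended line should show up as an event in the daily `logstash-*` index configured in the output section.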

filebeat.yml

    filebeat.config:
      modules:
        path: ${path.config}/modules.d/*.yml
        reload.enabled: false

    filebeat.autodiscover:
      providers:
        - type: docker
          hints.enabled: true

    processors:
      - add_cloud_metadata: ~

    output.elasticsearch:
      hosts: 'docker-es:9200'
      username: 'elastic'
      password: 'xxxx'

P.S. The Elasticsearch address above follows the same rule as in kibana.yml: replace it with your own server's IP; 127.0.0.1 and 0.0.0.0 cannot be used.

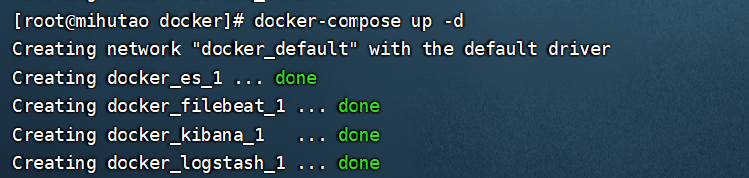

Nearly everything we need is now in place, so we can start the stack. The start command:

    docker-compose up -d

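Check the container status (for example with `docker-compose ps`). If a container fails to start, the permissions on these yml files may be insufficient; set them to 755. Pay particular attention to logstash.conf, the Logstash input/output pipeline file: it must be 755, not 777 or anything else, otherwise ELK cannot read it.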
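The permission fix described above can be sketched as a small helper. The function name `fix_config_perms` is hypothetical; the file list and the 755 mode are taken from the text, and the sketch assumes the files live in the directory you pass in.

```shell
# Set mode 755 on the config files mounted into the containers.
# Files that do not exist are reported on stderr and skipped.
fix_config_perms() {
  dir="${1:-.}"
  for f in elasticsearch.yml kibana.yml logstash.yml logstash.conf filebeat.yml; do
    if [ -f "$dir/$f" ]; then
      chmod 755 "$dir/$f"
    else
      echo "skip: $dir/$f not found" >&2
    fi
  done
}

# Apply to the current directory (run this from the elk directory).
fix_config_perms .
```

After running it, `ls -l` should show `-rwxr-xr-x` for each file, and `docker-compose up -d` can be retried.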

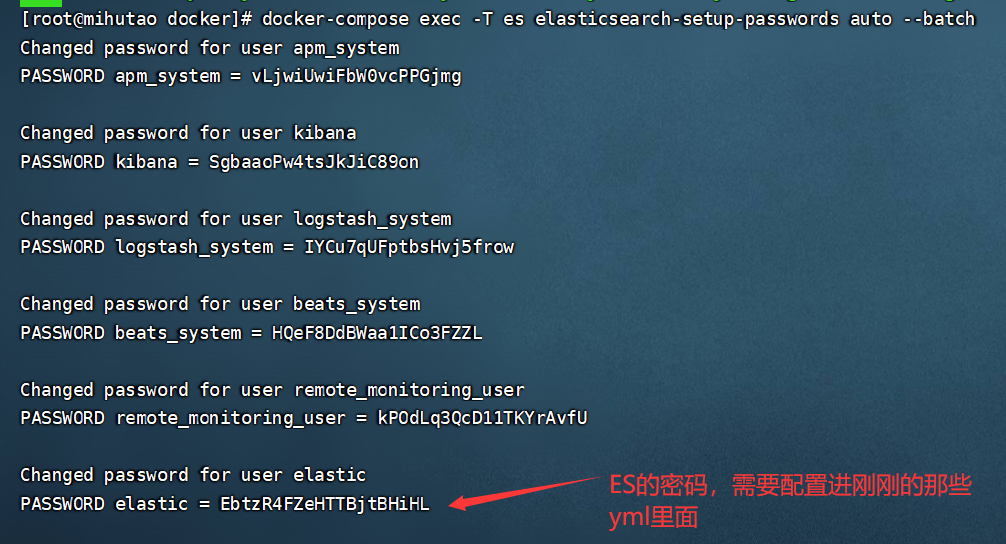

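At this point Kibana cannot be accessed yet, because Elasticsearch has password authentication enabled and our yml files do not contain valid passwords. We need to reset the passwords first.

Command: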

    docker-compose exec -T es elasticsearch-setup-passwords auto --batch
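The generated passwords are printed only once, so it can help to capture them in data/password.txt and read individual values back from there. The helper below is a sketch: `extract_password` is a hypothetical name, and it assumes the saved output contains lines of the form `PASSWORD <user> = <password>`.

```shell
# Print the password of one built-in user from saved
# elasticsearch-setup-passwords output.
# Assumption: the output contains lines like "PASSWORD elastic = <password>".
extract_password() {
  user="$1"
  file="$2"
  awk -v u="$user" '$1 == "PASSWORD" && $2 == u && $3 == "=" { print $4 }' "$file"
}

# Usage, from the elk directory (shown as comments because it needs the running stack):
#   docker-compose exec -T es elasticsearch-setup-passwords auto --batch | tee data/password.txt
#   extract_password elastic data/password.txt
```

Keeping the raw output in one file avoids copying passwords by hand into kibana.yml, logstash.conf, and filebeat.yml.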

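Next, edit kibana.yml, logstash.conf, and filebeat.yml and fill in the matching Elasticsearch password, i.e. the account from the output above: user elastic, password EbtzR4FZeHTTBjtBHiHL. Take care not to mix up the accounts and passwords. Then stop the stack and start it again: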

    docker-compose down
    docker-compose up -d

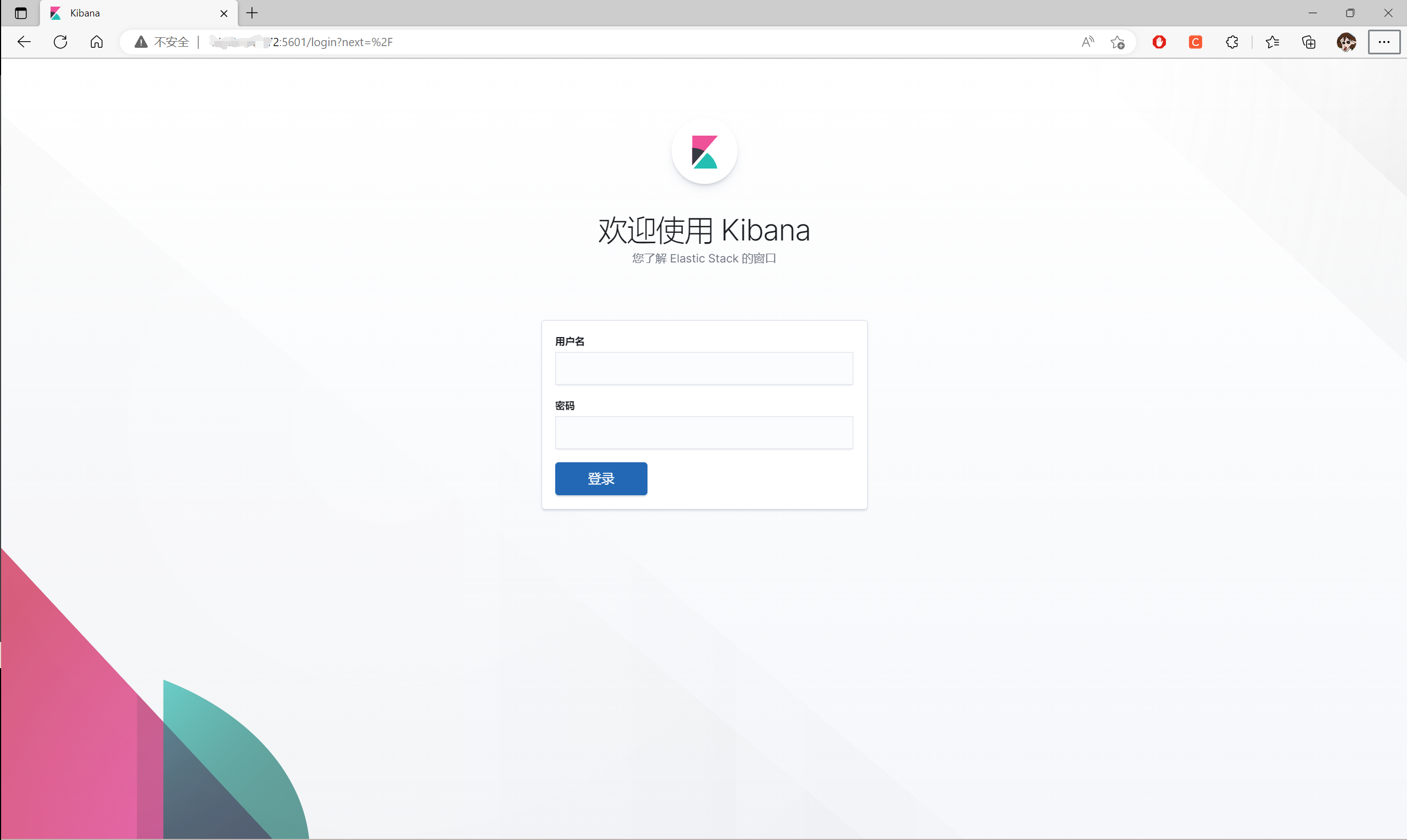

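Then open http://IP:5601/. It takes a while to load; once the login screen appears, enter the Elasticsearch account and password we just reset.

After logging in with that password, you land in the Kibana interface.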
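Because Kibana needs some time to come up after a restart, a small polling helper can replace manual refreshing. This is a sketch under assumptions: `wait_for_http` is a hypothetical name, `curl` must be installed on the host, and the helper relies on `curl -f` failing both on connection errors and on HTTP error statuses such as the one Kibana is expected to return while it is still starting.

```shell
# Poll a URL until curl succeeds or the attempts run out.
# Arguments: URL, number of attempts (default 30), delay in seconds (default 5).
wait_for_http() {
  url="$1"
  tries="${2:-30}"
  delay="${3:-5}"
  i=1
  while [ "$i" -le "$tries" ]; do
    # -f: fail on HTTP status >= 400; -sS: quiet, but still print real errors
    if curl -fsS -o /dev/null "$url"; then
      return 0
    fi
    sleep "$delay"
    i=$((i + 1))
  done
  return 1
}

# Usage (replace IP with your server address, as in the text):
#   wait_for_http "http://IP:5601/" 30 5 && echo "Kibana is reachable"
```

The same helper can be pointed at port 9200 to check Elasticsearch, though with security enabled an unauthenticated request there is expected to be rejected, so it is less useful as a readiness probe without credentials.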

This article comes from cnblogs (博客园), author: 迷糊桃. When reposting, please credit the original link: https://www.cnblogs.com/mihutao/p/16856029.html
