基于样例的图像修补算法在移除较大的内容,修补大块范围的应用上,比基于像素的传统图像修补方法要适用得多。其基本思想和基于像素的方法还是一致的,只是基于像素的方法是一个像素一个像素地填充待修补区域,而基于样例的方法是一片一片样例地填充。大致的步骤是:

- 确定待修补的边界

- 计算边界的优先级,确定优先修补的patch

- 从周围已知区域选择和待修补patch最相似的patch,并填充待修补patch

- 如果待修补区域已经全部填充,结束。否则回到2。

这里有论文的中英文版。从原理上来说比较容易理解,就不赘述了。直接上代码,然后上实例。

using ElementType = struct {

int flag;

float confidence;

};

static float compute_confidence(const long x, const long y, const int radius, const unique_ptr<ElementType[]>& sET, const long Width, const long Height)

{

float confidence = 0;

long hstart = max(0, y - radius), hend = min(Height, y + radius + 1);

long wstart = max(0, x - radius), wend = min(Width, x + radius + 1);

long offset = hstart * Width;

for (long m = hstart; m < hend; ++m)

{

for (long n = wstart; n < wend; ++n)

{

if (sET[offset + n].confidence != 0.0f)

{

confidence += sET[offset + n].confidence;

}

}

offset += Width;

}

confidence /= (hend - hstart) * (wend - wstart);

return confidence;

}

static float compute_dataterm(const long x, const long y, const unique_ptr<BYTE[]>& sY, const unique_ptr<ElementType[]>& sET, const long Width, const long Height)

{

float gradIx = 0, gradIy = 0;

long coff = y * Width + x;

float gradNx = 0.0f, gradNy = 0.0f;

long hstart = max(0, y - 1), hend = min(Height, y + 2);

long wstart = max(0, x - 1), wend = min(Width, x + 2);

long offset = hstart * Width;

for (long m = hstart; m < hend; ++m)

{

int dy = m - y;

for (long n = wstart; n < wend; ++n)

{

int dx = n - x;

if (sET[offset + n].flag != INSIDE)

{

int dl = sY[offset + n] - sY[coff];

if (dx && dy) {

gradIx += (0.5f * sqrtf(2) * dl) / dx;

gradIy += (0.5f * sqrtf(2) * dl) / dy;

}

else if (dx) {

gradIx += dl / dx;

}

else if (dy) {

gradIy += dl / dy;

}

}

else {

gradNx += dx;

gradNy += dy;

}

}

offset += Width;

}

// rotate (gradIx, gradIy) 90 degree

{

float temp = -gradIy;

gradIy = gradIx;

gradIx = temp;

}

// normalize gradN

if (gradNx != 0.0f || gradNy != 0.0f)

{

float mag = sqrtf(gradNx * gradNx + gradNy * gradNy);

gradNx /= mag;

gradNy /= mag;

}

float dataterm = abs(gradIx * gradNx + gradIy * gradNy) / 255.0f;

return dataterm;

}

#define LAB_DISTANCE

BOOL CDibProcess::ExemplarInpaint(CDibMask* pDibMask, const int radius, const int search_radius)

{

CRect* pRect = nullptr;

CRect Mask;

unique_ptr<BYTE[]> sMask;

if (pDibMask)

{

pDibMask->GetMaskCircumRect(Mask);

pDibMask->GetMask(sMask);

pRect = &Mask;

}

else

return FALSE;

long i, j;

const long Width = GetWidth();

const long Height = GetHeight();

long Hstart, Hend, Wstart, Wend;

long len;

pRect->NormalizeRect();

if (pRect->top < 0) pRect->top = 0;

if (pRect->left < 0) pRect->left = 0;

if (pRect->bottom > Height) pRect->bottom = Height;

if (pRect->right > Width) pRect->right = Width;

Hstart = pRect->top;

Hend = pRect->bottom;

Wstart = pRect->left;

Wend = pRect->right;

ASSERT(Hstart >= 0 && Hstart <= Hend && Hend <= Height);

ASSERT(Wstart >= 0 && Wstart <= Wend && Wend <= Width);

unique_ptr<BYTE[]> sRed, sGreen, sBlue;

if (GetRGB(sRed, sGreen, sBlue, &len) == FALSE) return FALSE;

unique_ptr<BYTE[]> sLum(new BYTE[len]);

for (i = 0; i < len; ++i) {

sLum[i] = RGB2Y(sRed[i], sGreen[i], sBlue[i]);

}

#ifdef LAB_DISTANCE

unique_ptr<BYTE[]> sL(new BYTE[len]), sA(new BYTE[len]), sB(new BYTE[len]);

for (i = 0; i < len; ++i) {

RGB2LAB(sRed[i], sGreen[i], sBlue[i], sL[i], sA[i], sB[i]);

}

#endif

// initialize

unique_ptr<ElementType[]> sET(new ElementType[len]);

BYTE* pm = sMask.get();

for (i = 0; i < len; ++i)

{

if (*pm++ == 0)

{

sET[i].flag = KNOWN;

sET[i].confidence = 1.0f;

}

else

{

sET[i].flag = INSIDE;

sET[i].confidence = 0.0f;

}

}

// initialize fill front

multimap<float, CPoint, greater<float>> fill_front; // <priority, position>

long hstart = max(0, Hstart - 1), hend = min(Height, Hend + 1);

long wstart = max(0, Wstart - 1), wend = min(Width, Wend + 1);

BYTE *start_off = sMask.get() + hstart * Width + wstart;

for (i = hstart; i < hend; ++i)

{

pm = start_off;

for (j = wstart; j < wend; ++j)

{

if (*pm == 0) {

// check 4 connected pixels

if (i - 1 >= 0 && *(pm - Width)

|| j - 1 >= 0 && *(pm - 1)

|| j + 1 < Width && *(pm + 1)

|| i + 1 < Height && *(pm + Width))

{

// fill front, compute priority = confidence * dataterm

float confidence = compute_confidence(j, i, radius, sET, Width, Height);

float dataterm = compute_dataterm(j, i, sLum, sET, Width, Height);

fill_front.emplace(confidence * dataterm, CPoint(j, i));

++pm;

}

else {

// known

++pm;

}

}

else {

// inside

++pm;

}

}

start_off += Width;

}

if (fill_front.size() == 0) return FALSE;

while (fill_front.size())

{

auto it = fill_front.begin(); // point to the highest priority

CPoint point = it->second;

fill_front.erase(it);

// determine the patch rect certered by point

CRect patch_rect(point.x - radius, point.y - radius, point.x + radius + 1, point.y + radius + 1);

patch_rect.IntersectRect(patch_rect, CRect(0, 0, Width, Height));

// serach the exemplar that minimizes distance to patch_rect

float min_distance = 1e20f;

CRect min_rect;

const long patch_width = patch_rect.Width(), patch_height = patch_rect.Height();

bool valid_fill_front_pixel = true;

long search_hstart = max(0, point.y - search_radius), search_hend = min(Height, point.y + search_radius + 1) - patch_height;

long search_wstart = max(0, point.x - search_radius), search_wend = min(Width, point.x + search_radius + 1) - patch_width;

for (i = search_hstart; i < search_hend; ++i)

{

for (j = search_wstart; j < search_wend; ++j)

{

long offset = i * Width + j;

long patch_offset = patch_rect.top * Width + patch_rect.left;

float d_sum = 0;

long total = 0;

bool valid = true;

for (long m = 0; m < patch_height; ++m)

{

for (long n = 0; n < patch_width; ++n)

{

if (sET[offset + n].flag != INSIDE)

{

if (sET[patch_offset + n].flag != INSIDE)

{

#ifdef LAB_DISTANCE

int dx = sL[offset + n] - sL[patch_offset + n];

int dy = sA[offset + n] - sA[patch_offset + n];

int dz = sB[offset + n] - sB[patch_offset + n];

#else

int dx = sRed[offset + n] - sRed[patch_offset + n];

int dy = sGreen[offset + n] - sGreen[patch_offset + n];

int dz = sBlue[offset + n] - sBlue[patch_offset + n];

#endif

d_sum += sqrtf(float(dx * dx + dy * dy + dz * dz));

++total;

}

}

else

{

// jump out of the double loop

m = patch_height;

valid = false;

break;

}

}

offset += Width;

patch_offset += Width;

}

if (valid)

{

if (total == patch_height * patch_width)

{

// the point is not a fill front point

valid_fill_front_pixel = false;

i = Height - patch_height;

break;

}

float distance = d_sum / (float)total;

if (distance < min_distance)

{

min_distance = distance;

min_rect.SetRect(j, i, j + patch_width, i + patch_height);

}

else if (distance == min_distance) {

float ph_distance = sqrtf(float((patch_rect.top - i) * (patch_rect.top - i) + (patch_rect.left - j) * (patch_rect.left - j)));

float min_rect_ph_distance = sqrtf(float((patch_rect.top - min_rect.top) * (patch_rect.top - min_rect.top) + (patch_rect.left - min_rect.left) * (patch_rect.left - min_rect.left)));

if (ph_distance < min_rect_ph_distance) {

min_distance = distance;

min_rect.SetRect(j, i, j + patch_width, i + patch_height);

}

}

}

}

}

if (!valid_fill_front_pixel) continue; // directly go to next fill front pixel

if (min_distance == 1e20f) // in case the search radius is too small to find a match patch

return FALSE;

// found the rect with minimum distance, then copy

{

long offset = min_rect.top * Width + min_rect.left;

long patch_offset = patch_rect.top * Width + patch_rect.left;

TRACE(TEXT("fill front (%d, %d), copy [%d, %d, %d, %d] -> [%d, %d, %d, %d], offset %d -> %d\n"), point.x, point.y,

min_rect.left, min_rect.top, min_rect.right, min_rect.bottom,

patch_rect.left, patch_rect.top, patch_rect.right, patch_rect.bottom, offset, patch_offset);

for (i = 0; i < patch_height; ++i)

{

for (j = 0; j < patch_width; ++j)

{

if (sET[patch_offset + j].flag == INSIDE)

{

sRed[patch_offset + j] = sRed[offset + j];

sGreen[patch_offset + j] = sGreen[offset + j];

sBlue[patch_offset + j] = sBlue[offset + j];

#ifdef LAB_DISTANCE

sL[patch_offset + j] = sL[offset + j];

sA[patch_offset + j] = sA[offset + j];

sB[patch_offset + j] = sB[offset + j];

#endif

sLum[patch_offset + j] = sLum[offset + j];

sET[patch_offset + j].flag = KNOWN;

}

}

offset += Width;

patch_offset += Width;

}

// use the updated confidence at point to update the each pixel confidence in the patch

float confidence = compute_confidence(point.x, point.y, radius, sET, Width, Height);

patch_offset = patch_rect.top * Width + patch_rect.left;

for (i = 0; i < patch_height; ++i)

{

for (j = 0; j < patch_width; ++j)

{

if (sET[patch_offset + j].confidence == 0.0f)

sET[patch_offset + j].confidence = confidence;

}

patch_offset += Width;

}

}

// update fill front priorities

{

// first remove the fill front pixels inside the patch

for (it = fill_front.begin(); it != fill_front.end(); ++it)

{

point = it->second;

if (patch_rect.PtInRect(point))

{

it = fill_front.erase(it);

if (it == fill_front.end()) break;

}

}

// then add new pixels to fill front from possible 4 sides of the patch

i = patch_rect.top;

j = patch_rect.left + 1;

long patch_offset = i * Width + j;

if (i - 1 >= 0) {

for (; j < patch_rect.right - 1; ++j)

{

if (sET[patch_offset - Width].flag == INSIDE)

{

float dataterm = compute_dataterm(j, i, sLum, sET, Width, Height);

fill_front.emplace(sET[patch_offset].confidence * dataterm, CPoint(j, i));

}

++patch_offset;

}

}

i = patch_rect.bottom - 1;

j = patch_rect.left + 1;

patch_offset = i * Width + j;

if (i + 1 < Height) {

for (; j < patch_rect.right - 1; ++j)

{

if (sET[patch_offset + Width].flag == INSIDE)

{

float dataterm = compute_dataterm(j, i, sLum, sET, Width, Height);

fill_front.emplace(sET[patch_offset].confidence * dataterm, CPoint(j, i));

}

++patch_offset;

}

}

i = patch_rect.top + 1;

j = patch_rect.left;

patch_offset = i * Width + j;

if (j - 1 >= 0) {

for (; i < patch_rect.bottom - 1; ++i)

{

if (sET[patch_offset - 1].flag == INSIDE)

{

float dataterm = compute_dataterm(j, i, sLum, sET, Width, Height);

fill_front.emplace(sET[patch_offset].confidence * dataterm, CPoint(j, i));

}

patch_offset += Width;

}

}

i = patch_rect.top + 1;

j = patch_rect.right - 1;

patch_offset = i * Width + j;

if (j + 1 < Width) {

for (; i < patch_rect.bottom - 1; ++i)

{

if (sET[patch_offset + 1].flag == INSIDE)

{

float dataterm = compute_dataterm(j, i, sLum, sET, Width, Height);

fill_front.emplace(sET[patch_offset].confidence * dataterm, CPoint(j, i));

}

patch_offset += Width;

}

}

// then 4 corner pixels

i = patch_rect.top;

j = patch_rect.left;

patch_offset = i * Width + j;

if (i - 1 >= 0 && sET[patch_offset - Width].flag == INSIDE

|| j - 1 >= 0 && sET[patch_offset - 1].flag == INSIDE)

{

float dataterm = compute_dataterm(j, i, sLum, sET, Width, Height);

fill_front.emplace(sET[patch_offset].confidence * dataterm, CPoint(j, i));

}

//i = patch_rect.top;

j = patch_rect.right - 1;

patch_offset = i * Width + j;

if (i - 1 >= 0 && sET[patch_offset - Width].flag == INSIDE

|| j + 1 < Width && sET[patch_offset + 1].flag == INSIDE)

{

float dataterm = compute_dataterm(j, i, sLum, sET, Width, Height);

fill_front.emplace(sET[patch_offset].confidence * dataterm, CPoint(j, i));

}

i = patch_rect.bottom - 1;

j = patch_rect.left;

patch_offset = i * Width + j;

if (j - 1 >= 0 && sET[patch_offset - 1].flag == INSIDE

|| i + 1 < Height && sET[patch_offset + Width].flag == INSIDE)

{

float dataterm = compute_dataterm(j, i, sLum, sET, Width, Height);

fill_front.emplace(sET[patch_offset].confidence * dataterm, CPoint(j, i));

}

//i = patch_rect.bottom - 1;

j = patch_rect.right - 1;

patch_offset = i * Width + j;

if (j + 1 < Width && sET[patch_offset + 1].flag == INSIDE

|| i + 1 < Height && sET[patch_offset + Width].flag == INSIDE)

{

float dataterm = compute_dataterm(j, i, sLum, sET, Width, Height);

fill_front.emplace(sET[patch_offset].confidence * dataterm, CPoint(j, i));

}

}

}

PutRGB(pRect, sRed, sGreen, sBlue);

return TRUE;

}

在计算两片样例之间的距离时,颜色空间的选择还是挺重要的。我试过几个不同的颜色空间,按照论文中使用的CIE Lab应该效果是最好的。

先看论文里那个简单的三单色图案,和基于像素的方法比较,效果要更好一些,没有了边缘的模糊。

原图(640x480) 样例半径3,搜索半径50

再看几个在基于像素的方法中用过的图像。总的来说,相对于基于像素的方法,没有了模糊的效果,在待修补的范围较大,背景比较复杂的情况,一般要比基于像素的方法要好一些。虽然基于样例的方法是从周围已知的图像中选择最接近的样例,会向待修补区域里面延伸已知区域的纹理和明显的梯度,但同样的周围已知的样例,和待修补区域原始的内容肯定有差异,填补了以后衔接的地方就会出现一些不自然,不连贯。而且随着向待修补区域中心推进,置信度会逐渐降低,这些不自然不连贯的地方也会不断延伸,甚至扩大。

待修补图 样例半径1,搜索半径10

原图(698x319) inpaint模板

样例半径3,搜索半径50 样例半径4,搜索半径80

原图(2048x1269) inpaint模板

样例半径3,搜索半径50 样例半径5,搜索半径100

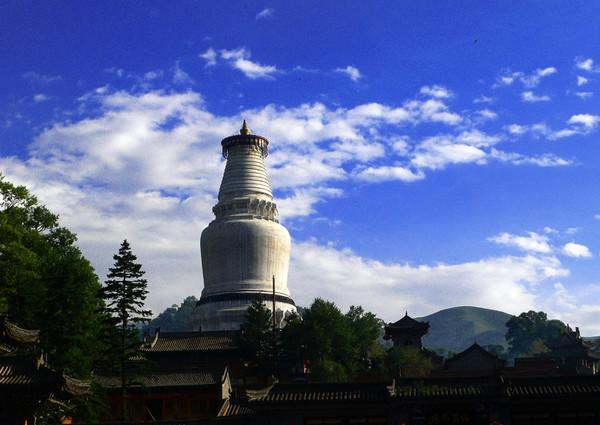

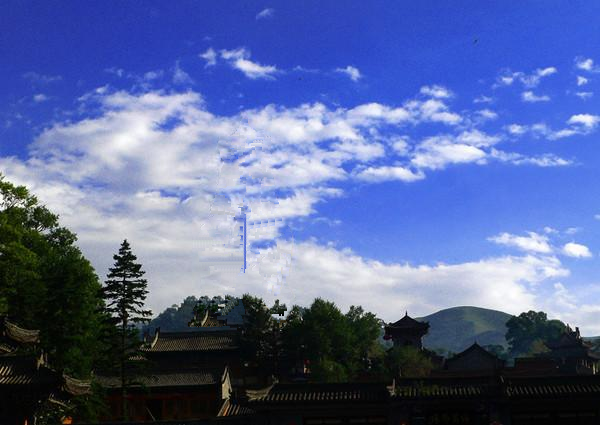

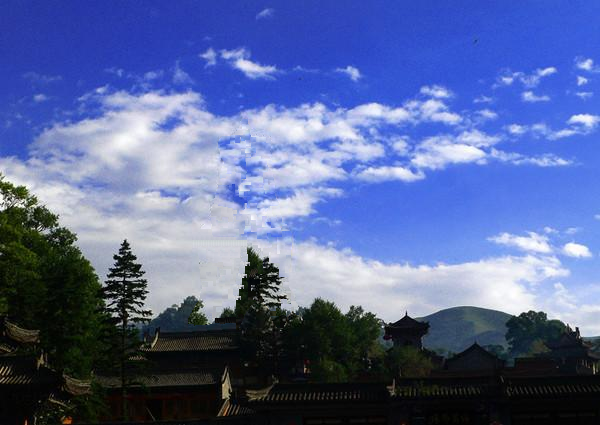

再看这样一个例子,去除图像中的白塔。背景相对来说更复杂一些。不同的样例半径和搜索半径,得出的结果可能很不相同。样例半径决定了一次填补的区域大小。阳历半径太小,可能容易选择错误的匹配样例,导致后面匹配也都跑偏了。样例半径太大了,可能衔接的地方过渡不自然。如果比较确定用来填补的区域比较近,搜索半径就小一些,否则就大一些。

原图 inpaint模板

样例半径3,搜索半径80 样例半径3,搜索半径100

样例半径5,搜索半径100 样例半径5,搜索半径150

浙公网安备 33010602011771号

浙公网安备 33010602011771号