Final Project: Chinese Text Classification

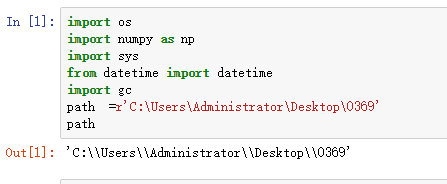

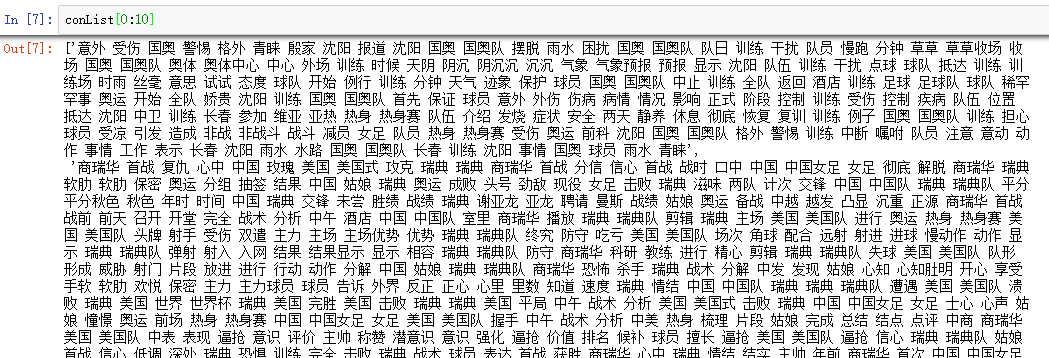

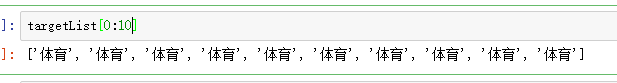

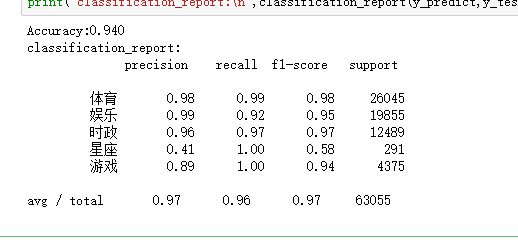

import os

import jieba

path = r'C:\Users\Administrator\Desktop\0369'

# Load the Chinese stop-word list into a set for fast lookups
with open(r'C:\Users\Administrator\Desktop\stopsCN.txt', encoding='utf-8') as f:
    stops = set(f.read().split('\n'))

def processing(text):
    # Drop characters that are neither letters nor Chinese characters
    text = "".join(ch for ch in text if ch.isalpha())
    # Segment with jieba (full mode), then drop stop words and tokens shorter than 2 characters
    tokens = [token for token in jieba.cut(text, cut_all=True)
              if len(token) >= 2 and token not in stops]
    return " ".join(tokens)

conList = []
targetList = []
# Walk the corpus directory with os.walk, build each file path, and open every file
for root, dirs, files in os.walk(path):
    for filetext in files:
        Filetext = os.path.join(root, filetext)
        size = os.path.getsize(Filetext)
        print(Filetext, size)
        with open(Filetext, 'r', encoding='utf-8') as f:
            content = f.read()  # Read the document text
        target = os.path.basename(root)  # The parent directory name is the class label
        targetList.append(target)
        conList.append(processing(content))

# Inspect the first ten labels and documents
targetList[0:10]
conList[0:10]

# Split into training and test sets
from sklearn.feature_extraction.text import TfidfVectorizer  # Text feature extraction
from sklearn.model_selection import train_test_split, cross_val_score
from sklearn.naive_bayes import MultinomialNB
from sklearn.metrics import classification_report

x_train, x_test, y_train, y_test = train_test_split(conList, targetList, test_size=0.2, stratify=targetList)
vector = TfidfVectorizer()
X_train = vector.fit_transform(x_train)
X_test = vector.transform(x_test)

# Build the model. Multinomial naive Bayes is used here because the features are term-based values;
# it is designed for discrete counts, but in practice it also works with TF-IDF weights
bys = MultinomialNB()
MX = bys.fit(X_train, y_train)

# Predict on the test set
y_predict = MX.predict(X_test)
# Cross-validate on the training data so the test set stays held out
scores = cross_val_score(bys, X_train, y_train, cv=3)
print("Accuracy:%.3f" % scores.mean())
# classification_report expects the true labels first, then the predictions
print("classification_report:\n", classification_report(y_test, y_predict))
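When the TF-IDF vectorizer is fitted once on the whole training set and the resulting matrix is then cross-validated, each validation fold has already influenced the vocabulary and IDF weights. One way to avoid this, sketched below, is to wrap the vectorizer and the classifier in a scikit-learn pipeline so that both are refitted inside every fold. The toy corpus and its labels are hypothetical and stand in for `conList` and `targetList`.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.model_selection import cross_val_score
from sklearn.naive_bayes import MultinomialNB
from sklearn.pipeline import make_pipeline

# Hypothetical toy corpus: each document is already segmented and space-joined,
# in the same form that processing() returns.
docs = [
    "篮球 比赛 球队 冠军",
    "足球 比赛 球员 进球",
    "球队 教练 训练 比赛",
    "股票 市场 投资 基金",
    "银行 利率 贷款 市场",
    "投资 基金 收益 股票",
]
labels = ["sports", "sports", "sports", "finance", "finance", "finance"]

# The pipeline refits TfidfVectorizer inside every fold, so the vocabulary and
# IDF weights are learned from that fold's training documents only.
pipeline = make_pipeline(TfidfVectorizer(), MultinomialNB())
scores = cross_val_score(pipeline, docs, labels, cv=3)
print("Cross-validated accuracy: %.3f" % scores.mean())
```

With the real corpus, the same pipeline could be passed the raw training documents and labels instead of a pre-computed TF-IDF matrix.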

2. Boston Housing Price Prediction

# Note: load_boston was removed in scikit-learn 1.2, so this import requires scikit-learn < 1.2

from sklearn.datasets import load_boston

from sklearn.model_selection import train_test_split

data = load_boston()

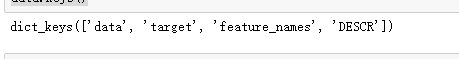

data.keys()

# Split the dataset into training and test sets

x_train,x_test,y_train,y_test = train_test_split(data.data,data.target,test_size=0.3)

# Build a multiple linear regression model

from sklearn.linear_model import LinearRegression

mlr = LinearRegression()

mlr.fit(x_train,y_train) # Train the model

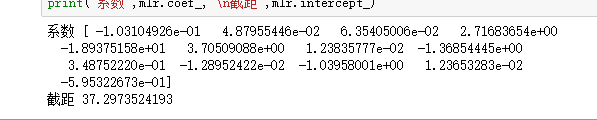

print('Coefficients:',mlr.coef_,"\nIntercept:",mlr.intercept_)
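To make the printed coefficients and intercept concrete: `LinearRegression` fits ordinary least squares, and a prediction is the weighted sum of the features plus the intercept. The sketch below reproduces that with NumPy alone on hypothetical noise-free data, so the recovered values can be checked against the generating formula. The data and variable names are assumptions for illustration, not part of the Boston example.

```python
import numpy as np

# Hypothetical noise-free data generated from y = 3 + 2*x1 - 1*x2
rng = np.random.default_rng(0)
X = rng.normal(size=(20, 2))
y = 3.0 + 2.0 * X[:, 0] - 1.0 * X[:, 1]

# Append a column of ones so the intercept is estimated together with the coefficients
X_design = np.column_stack([X, np.ones(len(X))])
solution, *_ = np.linalg.lstsq(X_design, y, rcond=None)
coef, intercept = solution[:-1], solution[-1]

# A prediction is the weighted sum of the features plus the intercept
y_hat = X @ coef + intercept
print("coef:", coef, "intercept:", intercept)
```

Because the data contain no noise, the fitted values should match the generating coefficients up to floating-point error.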

#判断模型的好坏

from sklearn.metrics import regression

y_predict = mlr.predict(x_test) #预测

print("预测的均方误差:", regression.mean_squared_error(y_test,y_predict))

print("预测的平均绝对误差:", regression.mean_absolute_error(y_test,y_predict))

# 模型的分数

print("多元线性回归模型的分数:",mlr.score(x_test, y_test))

#多元多项式回归模型

from sklearn.preprocessing import PolynomialFeatures

poly = PolynomialFeatures(degree=2)

x_poly_train = poly.fit_transform(x_train) #创建多项式训练集

x_poly_test = poly.transform(x_test) #创建多项式数据集

#建立模型

mlrp = LinearRegression()

mlrp.fit(x_poly_train,y_train)

y_predict1 = mlrp.predict(x_poly_test)

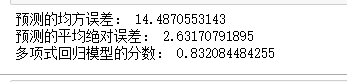

print("预测的均方误差:", regression.mean_squared_error(y_test,y_predict1))

print("预测的平均绝对误差:", regression.mean_absolute_error(y_test,y_predict1))

# 打印模型的分数

print("多项式回归模型的分数:",mlrp.score(x_poly_test, y_test))
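The three numbers reported for each model are easier to compare once it is clear how they are computed. The sketch below works them out by hand with NumPy: mean squared error, mean absolute error, and the coefficient of determination R^2, which is what `score` returns for `LinearRegression`. The true and predicted values are hypothetical and chosen only to keep the arithmetic easy to follow.

```python
import numpy as np

# Hypothetical true and predicted values, chosen only for illustration
y_true = np.array([3.0, 5.0, 7.0, 9.0])
y_pred = np.array([2.0, 5.0, 8.0, 10.0])

errors = y_true - y_pred

# Mean squared error: the average of the squared errors
mse = np.mean(errors ** 2)

# Mean absolute error: the average of the absolute errors
mae = np.mean(np.abs(errors))

# R^2: one minus the residual sum of squares over the total sum of squares
ss_res = np.sum(errors ** 2)
ss_tot = np.sum((y_true - y_true.mean()) ** 2)
r2 = 1.0 - ss_res / ss_tot

print("MSE:", mse, "MAE:", mae, "R^2:", r2)
```

An R^2 of 1 means the predictions match the targets exactly, while a model that always predicts the mean of the targets scores 0, so a higher score for the polynomial model on the test set would indicate a better fit there.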

Results: