Logstash installation, configuration, and testing

I. Logstash installation, configuration, and basic testing

1. Install Logstash

Logstash host IP: 192.168.56.11

yum install jdk-8u181-linux-x64.rpm logstash-6.6.1.rpm -y

2. Test Logstash

2.1 Test standard input and output

# /usr/share/logstash/bin/logstash -e 'input { stdin {}} output { stdout {codec => rubydebug}}'

WARNING: Could not find logstash.yml which is typically located in $LS_HOME/config or /etc/logstash. You can specify the path using --path.settings. Continuing using the defaults

Could not find log4j2 configuration at path /usr/share/logstash/config/log4j2.properties. Using default config which logs errors to the console

[WARN ] 2019-03-03 21:59:39.368 [LogStash::Runner] multilocal - Ignoring the 'pipelines.yml' file because modules or command line options are specified

[INFO ] 2019-03-03 21:59:39.376 [LogStash::Runner] runner - Starting Logstash {"logstash.version"=>"6.6.1"}

[INFO ] 2019-03-03 21:59:46.744 [Converge PipelineAction::Create<main>] pipeline - Starting pipeline {:pipeline_id=>"main", "pipeline.workers"=>1, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>50}

[INFO ] 2019-03-03 21:59:46.933 [Converge PipelineAction::Create<main>] pipeline - Pipeline started successfully {:pipeline_id=>"main", :thread=>"#<Thread:0x4c914f3d run>"}

The stdin plugin is now waiting for input:

[INFO ] 2019-03-03 21:59:46.991 [Ruby-0-Thread-1: /usr/share/logstash/lib/bootstrap/environment.rb:6] agent - Pipelines running {:count=>1, :running_pipelines=>[:main], :non_running_pipelines=>[]}

[INFO ] 2019-03-03 21:59:47.254 [Api Webserver] agent - Successfully started Logstash API endpoint {:port=>9600}

123456hehe,haha.

{
       "message" => "123456hehe,haha.",
          "host" => "localhost.localdomain",
    "@timestamp" => 2019-03-03T13:59:56.542Z,
      "@version" => "1"
}

2.2 Test output to a file

# /usr/share/logstash/bin/logstash -e 'input {stdin {}} output {file { path => "/tmp/test-%{+YYYY.MM.dd}.log"}}'

WARNING: Could not find logstash.yml which is typically located in $LS_HOME/config or /etc/logstash. You can specify the path using --path.settings. Continuing using the defaults

Could not find log4j2 configuration at path /usr/share/logstash/config/log4j2.properties. Using default config which logs errors to the console

[WARN ] 2019-03-03 22:01:24.481 [LogStash::Runner] multilocal - Ignoring the 'pipelines.yml' file because modules or command line options are specified

[INFO ] 2019-03-03 22:01:24.502 [LogStash::Runner] runner - Starting Logstash {"logstash.version"=>"6.6.1"}

[INFO ] 2019-03-03 22:01:31.722 [Converge PipelineAction::Create<main>] pipeline - Starting pipeline {:pipeline_id=>"main", "pipeline.workers"=>1, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>50}

[INFO ] 2019-03-03 22:01:31.932 [Converge PipelineAction::Create<main>] pipeline - Pipeline started successfully {:pipeline_id=>"main", :thread=>"#<Thread:0x37cef916 run>"}

[INFO ] 2019-03-03 22:01:31.990 [Ruby-0-Thread-1: /usr/share/logstash/lib/bootstrap/environment.rb:6] agent - Pipelines running {:count=>1, :running_pipelines=>[:main], :non_running_pipelines=>[]}

The stdin plugin is now waiting for input:

[INFO ] 2019-03-03 22:01:32.330 [Api Webserver] agent - Successfully started Logstash API endpoint {:port=>9600}

[INFO ] 2019-03-03 22:01:32.691 [[main]>worker0] file - Opening file {:path=>"/tmp/test-2019.03.03.log"}

123456,file,hehe,haha.

# cat /tmp/test-2019.03.03.log

{"@version":"1","host":"localhost.localdomain","message":"123456,file,hehe,haha.","@timestamp":"2019-03-03T14:01:42.505Z"}
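The `%{+YYYY.MM.dd}` reference in the file path is filled in from the event's `@timestamp`, which Logstash keeps in UTC. The following is a minimal Python sketch of that expansion, not Logstash's own implementation; the helper name `expand_date_refs` is hypothetical and only the three date tokens used in this article are handled.

```python
import re
from datetime import datetime, timezone

# Joda-style tokens used in this article mapped to strftime directives.
# Only these three are handled; this is not a full Joda-Time implementation.
_JODA_TO_STRFTIME = {"YYYY": "%Y", "MM": "%m", "dd": "%d"}


def expand_date_refs(template, timestamp):
    """Replace each %{+FORMAT} reference using the event timestamp in UTC."""
    utc = timestamp.astimezone(timezone.utc)

    def render(match):
        fmt = match.group(1)
        for joda, directive in _JODA_TO_STRFTIME.items():
            fmt = fmt.replace(joda, directive)
        return utc.strftime(fmt)

    return re.sub(r"%\{\+([^}]+)\}", render, template)


# @timestamp of the event written in the transcript above
event_time = datetime(2019, 3, 3, 14, 1, 42, 505000, tzinfo=timezone.utc)
print(expand_date_refs("/tmp/test-%{+YYYY.MM.dd}.log", event_time))
# prints /tmp/test-2019.03.03.log
```

Because the date comes from the UTC timestamp, an event logged shortly after midnight local time on a UTC+8 host would still land in the previous day's file.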

2.3 Test output to Elasticsearch

# /usr/share/logstash/bin/logstash -e 'input {stdin {}} output {elasticsearch { hosts => ["192.168.56.15:9200"] index => "logstash-test-%{+YYYY.MM.dd}"}}'

WARNING: Could not find logstash.yml which is typically located in $LS_HOME/config or /etc/logstash. You can specify the path using --path.settings. Continuing using the defaults

Could not find log4j2 configuration at path /usr/share/logstash/config/log4j2.properties. Using default config which logs errors to the console

[WARN ] 2019-03-03 22:04:34.539 [LogStash::Runner] multilocal - Ignoring the 'pipelines.yml' file because modules or command line options are specified

[INFO ] 2019-03-03 22:04:34.561 [LogStash::Runner] runner - Starting Logstash {"logstash.version"=>"6.6.1"}

[INFO ] 2019-03-03 22:04:41.741 [Converge PipelineAction::Create<main>] pipeline - Starting pipeline {:pipeline_id=>"main", "pipeline.workers"=>1, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>50}

[INFO ] 2019-03-03 22:04:42.275 [[main]-pipeline-manager] elasticsearch - Elasticsearch pool URLs updated {:changes=>{:removed=>[], :added=>[http://192.168.56.15:9200/]}}

[WARN ] 2019-03-03 22:04:42.533 [[main]-pipeline-manager] elasticsearch - Restored connection to ES instance {:url=>"http://192.168.56.15:9200/"}

[INFO ] 2019-03-03 22:04:42.810 [[main]-pipeline-manager] elasticsearch - ES Output version determined {:es_version=>6}

[WARN ] 2019-03-03 22:04:42.812 [[main]-pipeline-manager] elasticsearch - Detected a 6.x and above cluster: the `type` event field won't be used to determine the document _type {:es_version=>6}

[INFO ] 2019-03-03 22:04:42.877 [[main]-pipeline-manager] elasticsearch - New Elasticsearch output {:class=>"LogStash::Outputs::ElasticSearch", :hosts=>["//192.168.56.15:9200"]}

[INFO ] 2019-03-03 22:04:42.895 [Ruby-0-Thread-5: :1] elasticsearch - Using mapping template from {:path=>nil}

[INFO ] 2019-03-03 22:04:42.919 [Ruby-0-Thread-5: :1] elasticsearch - Attempting to install template {:manage_template=>{"template"=>"logstash-*", "version"=>60001, "settings"=>{"index.refresh_interval"=>"5s"}, "mappings"=>{"_default_"=>{"dynamic_templates"=>[{"message_field"=>{"path_match"=>"message", "match_mapping_type"=>"string", "mapping"=>{"type"=>"text", "norms"=>false}}}, {"string_fields"=>{"match"=>"*", "match_mapping_type"=>"string", "mapping"=>{"type"=>"text", "norms"=>false, "fields"=>{"keyword"=>{"type"=>"keyword", "ignore_above"=>256}}}}}], "properties"=>{"@timestamp"=>{"type"=>"date"}, "@version"=>{"type"=>"keyword"}, "geoip"=>{"dynamic"=>true, "properties"=>{"ip"=>{"type"=>"ip"}, "location"=>{"type"=>"geo_point"}, "latitude"=>{"type"=>"half_float"}, "longitude"=>{"type"=>"half_float"}}}}}}}}

[INFO ] 2019-03-03 22:04:43.033 [Converge PipelineAction::Create<main>] pipeline - Pipeline started successfully {:pipeline_id=>"main", :thread=>"#<Thread:0x731a8435 run>"}

The stdin plugin is now waiting for input:

[INFO ] 2019-03-03 22:04:43.093 [Ruby-0-Thread-1: /usr/share/logstash/lib/bootstrap/environment.rb:6] agent - Pipelines running {:count=>1, :running_pipelines=>[:main], :non_running_pipelines=>[]}

[INFO ] 2019-03-03 22:04:43.386 [Api Webserver] agent - Successfully started Logstash API endpoint {:port=>9600}

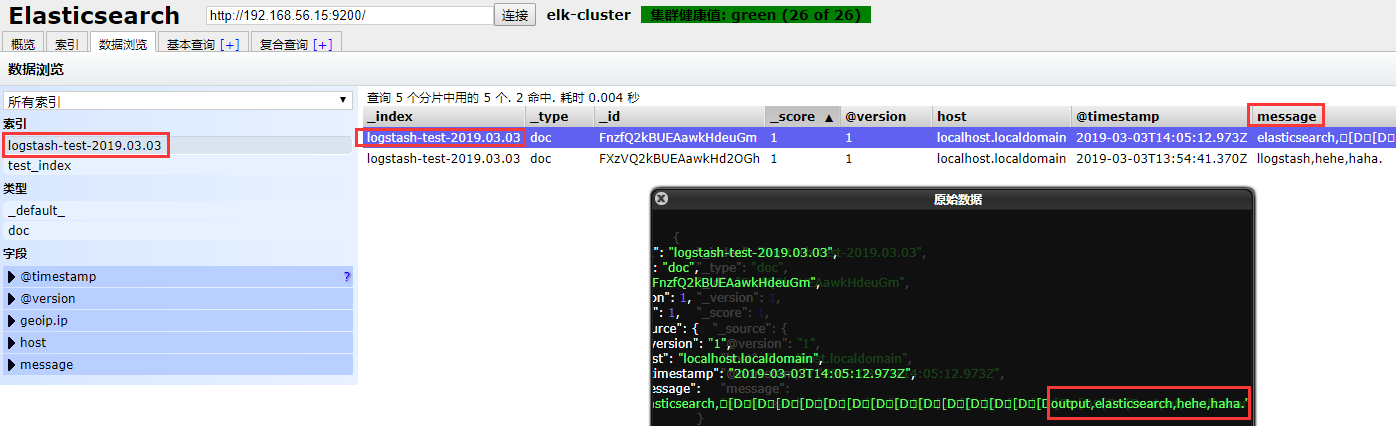

output,elasticsearch,hehe,haha.

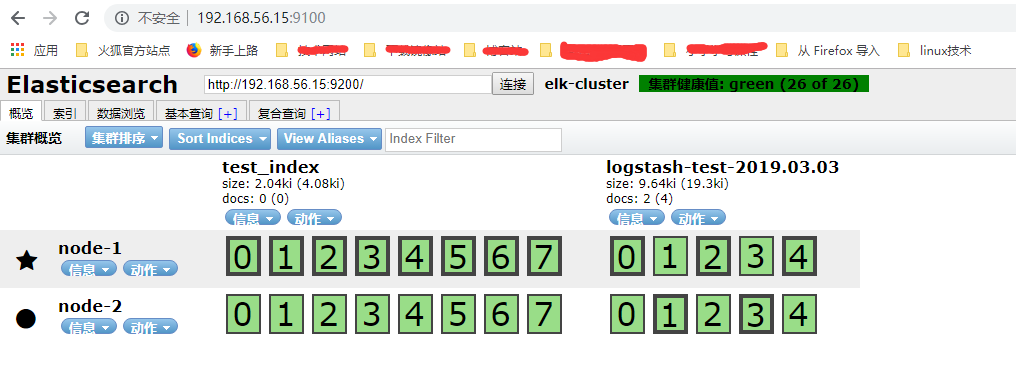

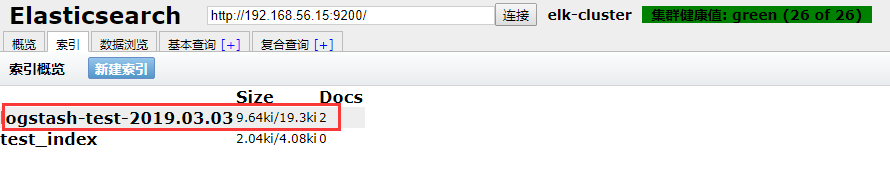

View the collected data in Elasticsearch

II. Logstash log-collection configuration files

1. Logstash collects the system log and writes it to Elasticsearch

# cat /etc/logstash/conf.d/logstash_to_es_systemlog.conf

input {
  file {
    path => "/var/log/messages"
    type => "systemlog"
    start_position => "beginning"
    stat_interval => "2"
  }
}

output {
  elasticsearch {
    hosts => ["192.168.56.15:9200"]
    index => "logstash-systemlog-%{+YYYY.MM.dd}"
  }
}

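2. Example: Logstash reads logs from standard input, processes them with a filter, and writes to standard output.

grok: parses text and gives it structure. It is currently the standard choice in Logstash for turning unstructured log data into structured, queryable data.

# Logstash configuration file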

-------------------------

# cat /etc/logstash/conf.d/groksample.conf

input {
  stdin {}
}

filter {
  grok {
    match => { "message" => "%{IP:clientip} %{WORD:method} %{URIPATHPARAM:request} %{NUMBER:bytes} %{NUMBER:duration}" }
  }
}

output {
  stdout {
    codec => rubydebug
  }
}

# Run the logstash command

------------------------

# /usr/share/logstash/bin/logstash -f groksample.conf

WARNING: Could not find logstash.yml which is typically located in $LS_HOME/config or /etc/logstash. You can specify the path using --path.settings. Continuing using the defaults

Could not find log4j2 configuration at path /usr/share/logstash/config/log4j2.properties. Using default config which logs errors to the console

[WARN ] 2019-03-11 10:37:01.338 [LogStash::Runner] multilocal - Ignoring the 'pipelines.yml' file because modules or command line options are specified

[INFO ] 2019-03-11 10:37:01.349 [LogStash::Runner] runner - Starting Logstash {"logstash.version"=>"6.6.1"}

[INFO ] 2019-03-11 10:37:06.167 [Converge PipelineAction::Create<main>] pipeline - Starting pipeline {:pipeline_id=>"main", "pipeline.workers"=>2, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>50}

[INFO ] 2019-03-11 10:37:06.377 [Converge PipelineAction::Create<main>] pipeline - Pipeline started successfully {:pipeline_id=>"main", :thread=>"#<Thread:0x85155c9 run>"}

The stdin plugin is now waiting for input:

[INFO ] 2019-03-11 10:37:06.439 [Ruby-0-Thread-1: /usr/share/logstash/lib/bootstrap/environment.rb:6] agent - Pipelines running {:count=>1, :running_pipelines=>[:main], :non_running_pipelines=>[]}

[INFO ] 2019-03-11 10:37:06.647 [Api Webserver] agent - Successfully started Logstash API endpoint {:port=>9600}

# Input and result

------------------

1.1.1.1 GET /index.html 30 0.23

{
      "@version" => "1",
          "host" => "localhost",
       "message" => "1.1.1.1 GET /index.html 30 0.23",
      "clientip" => "1.1.1.1",
       "request" => "/index.html",
    "@timestamp" => 2019-03-11T02:37:26.067Z,
      "duration" => "0.23",
         "bytes" => "30",
        "method" => "GET"
}
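To make the grok match above more concrete, here is a rough Python sketch that turns each `%{NAME:field}` reference into a named capture group. The regular expressions in `GROK_APPROX` are simplified stand-ins chosen for this illustration, not the real definitions shipped in logstash-patterns-core, and the helper `grok_to_regex` is hypothetical.

```python
import re

# Simplified stand-ins for the grok patterns; the real definitions in
# logstash-patterns-core are broader (IPv6, signed numbers, and so on).
GROK_APPROX = {
    "IP": r"\d{1,3}(?:\.\d{1,3}){3}",
    "WORD": r"\w+",
    "URIPATHPARAM": r"/\S*",
    "NUMBER": r"\d+(?:\.\d+)?",
}


def grok_to_regex(pattern):
    """Turn %{NAME:field} references into named capture groups."""
    def substitute(match):
        name, field = match.group(1), match.group(2)
        return "(?P<%s>%s)" % (field, GROK_APPROX[name])
    return re.compile(re.sub(r"%\{(\w+):(\w+)\}", substitute, pattern))


PATTERN = ("%{IP:clientip} %{WORD:method} %{URIPATHPARAM:request} "
           "%{NUMBER:bytes} %{NUMBER:duration}")

line_regex = grok_to_regex(PATTERN)
match = line_regex.match("1.1.1.1 GET /index.html 30 0.23")
# Logstash tags events that fail to match with _grokparsefailure.
fields = match.groupdict() if match else {"tags": ["_grokparsefailure"]}
print(fields)
# prints {'clientip': '1.1.1.1', 'method': 'GET', 'request': '/index.html', 'bytes': '30', 'duration': '0.23'}
```

As in the rubydebug output, every captured value is a string. In grok itself, a type suffix such as `%{NUMBER:bytes:int}` converts the field to a number.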

3. View the location and contents of the pattern definitions

# rpm -ql logstash | grep patterns
/usr/share/logstash/vendor/bundle/jruby/2.3.0/gems/coderay-1.1.2/lib/coderay/scanners/ruby/patterns.rb
/usr/share/logstash/vendor/bundle/jruby/2.3.0/gems/logstash-patterns-core-4.1.2/CHANGELOG.md
/usr/share/logstash/vendor/bundle/jruby/2.3.0/gems/logstash-patterns-core-4.1.2/CONTRIBUTORS
/usr/share/logstash/vendor/bundle/jruby/2.3.0/gems/logstash-patterns-core-4.1.2/Gemfile
/usr/share/logstash/vendor/bundle/jruby/2.3.0/gems/logstash-patterns-core-4.1.2/LICENSE
/usr/share/logstash/vendor/bundle/jruby/2.3.0/gems/logstash-patterns-core-4.1.2/NOTICE.TXT
/usr/share/logstash/vendor/bundle/jruby/2.3.0/gems/logstash-patterns-core-4.1.2/README.md
/usr/share/logstash/vendor/bundle/jruby/2.3.0/gems/logstash-patterns-core-4.1.2/lib/logstash/patterns/core.rb
/usr/share/logstash/vendor/bundle/jruby/2.3.0/gems/logstash-patterns-core-4.1.2/logstash-patterns-core.gemspec
/usr/share/logstash/vendor/bundle/jruby/2.3.0/gems/logstash-patterns-core-4.1.2/patterns/aws
/usr/share/logstash/vendor/bundle/jruby/2.3.0/gems/logstash-patterns-core-4.1.2/patterns/bacula
/usr/share/logstash/vendor/bundle/jruby/2.3.0/gems/logstash-patterns-core-4.1.2/patterns/bind
/usr/share/logstash/vendor/bundle/jruby/2.3.0/gems/logstash-patterns-core-4.1.2/patterns/bro
/usr/share/logstash/vendor/bundle/jruby/2.3.0/gems/logstash-patterns-core-4.1.2/patterns/exim
/usr/share/logstash/vendor/bundle/jruby/2.3.0/gems/logstash-patterns-core-4.1.2/patterns/firewalls
/usr/share/logstash/vendor/bundle/jruby/2.3.0/gems/logstash-patterns-core-4.1.2/patterns/grok-patterns
/usr/share/logstash/vendor/bundle/jruby/2.3.0/gems/logstash-patterns-core-4.1.2/patterns/haproxy
/usr/share/logstash/vendor/bundle/jruby/2.3.0/gems/logstash-patterns-core-4.1.2/patterns/httpd
/usr/share/logstash/vendor/bundle/jruby/2.3.0/gems/logstash-patterns-core-4.1.2/patterns/java
/usr/share/logstash/vendor/bundle/jruby/2.3.0/gems/logstash-patterns-core-4.1.2/patterns/junos
/usr/share/logstash/vendor/bundle/jruby/2.3.0/gems/logstash-patterns-core-4.1.2/patterns/linux-syslog
/usr/share/logstash/vendor/bundle/jruby/2.3.0/gems/logstash-patterns-core-4.1.2/patterns/maven
/usr/share/logstash/vendor/bundle/jruby/2.3.0/gems/logstash-patterns-core-4.1.2/patterns/mcollective
/usr/share/logstash/vendor/bundle/jruby/2.3.0/gems/logstash-patterns-core-4.1.2/patterns/mcollective-patterns
/usr/share/logstash/vendor/bundle/jruby/2.3.0/gems/logstash-patterns-core-4.1.2/patterns/mongodb
/usr/share/logstash/vendor/bundle/jruby/2.3.0/gems/logstash-patterns-core-4.1.2/patterns/nagios
/usr/share/logstash/vendor/bundle/jruby/2.3.0/gems/logstash-patterns-core-4.1.2/patterns/postgresql
/usr/share/logstash/vendor/bundle/jruby/2.3.0/gems/logstash-patterns-core-4.1.2/patterns/rails
/usr/share/logstash/vendor/bundle/jruby/2.3.0/gems/logstash-patterns-core-4.1.2/patterns/redis
/usr/share/logstash/vendor/bundle/jruby/2.3.0/gems/logstash-patterns-core-4.1.2/patterns/ruby
/usr/share/logstash/vendor/bundle/jruby/2.3.0/gems/logstash-patterns-core-4.1.2/patterns/squid
/usr/share/logstash/vendor/bundle/jruby/2.3.0/specifications/logstash-patterns-core-4.1.2.gemspec

4. Logstash collects Apache logs, matches them with the grok filter, and writes to standard output.

# cat /etc/logstash/conf.d/apachelog.conf

input {
  file {
    path => ["/var/log/httpd/access_log"]
    type => "apachelog"
    start_position => "beginning"
  }
}

filter {
  grok {
    match => { "message" => "%{COMBINEDAPACHELOG}" }
  }
}

output {
  stdout {
    codec => rubydebug
  }
}

5. Logstash collects nginx logs, matches them with the grok filter, and writes to standard output.

5-1 Define a custom grok pattern for the nginx access log

-------------------------------------

# cat /usr/share/logstash/vendor/bundle/jruby/2.3.0/gems/logstash-patterns-core-4.1.2/patterns/nginx

NGUSERNAME [a-zA-Z\.\@\-\+_%]+

NGUSER %{NGUSERNAME}

NGINXACCESS %{IPORHOST:clientip} - %{NOTSPACE:remote_user} \[%{HTTPDATE:timestamp}\] \"(?:%{WORD:verb} %{NOTSPACE:request}(?: HTTP/%{NUMBER:httpversion})?|%{DATA:rawrequest})\" %{NUMBER:response} (?:%{NUMBER:bytes}|-) %{QS:referrer} %{QS:agent} %{NOTSPACE:http_x_forwarded_for}
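To see which fields NGINXACCESS extracts, the pattern can be approximated with a plain Python regular expression. This is only a sketch: the sub-patterns are simplified stand-ins for the grok definitions, and the sample log line is made up for illustration, assuming an nginx log format that ends with a quoted `$http_x_forwarded_for`.

```python
import re

# Rough equivalent of NGINXACCESS; each group is a simplified stand-in
# for the corresponding grok pattern (IPORHOST, HTTPDATE, QS, ...).
NGINX_ACCESS = re.compile(
    r'(?P<clientip>\S+) - (?P<remote_user>\S+) '
    r'\[(?P<timestamp>[^\]]+)\] '
    r'"(?:(?P<verb>\w+) (?P<request>\S+)(?: HTTP/(?P<httpversion>[\d.]+))?'
    r'|(?P<rawrequest>.*?))" '
    r'(?P<response>\d+) (?:(?P<bytes>\d+)|-) '
    r'(?P<referrer>"[^"]*") (?P<agent>"[^"]*") '
    r'(?P<http_x_forwarded_for>\S+)'
)

# Hypothetical access-log line, not taken from a real server.
sample = ('192.168.56.1 - - [11/Mar/2019:10:40:12 +0800] '
          '"GET /index.html HTTP/1.1" 200 612 "-" '
          '"curl/7.29.0" "-"')

match = NGINX_ACCESS.match(sample)
# Drop groups that did not take part in the match (here: rawrequest).
event = {k: v for k, v in match.groupdict().items() if v is not None}
for name, value in event.items():
    print(name, "=>", value)
```

As with grok's QS pattern, `referrer` and `agent` keep their surrounding double quotes, and `rawrequest` is only filled in when the request does not look like `VERB path HTTP/x.y`.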

5-2 Logstash collects nginx logs and writes to standard output

--------------------------------------

# cat /etc/logstash/conf.d/nginxlog.conf

input {
  file {
    path => ["/var/log/nginx/access.log"]
    type => "nginxlog"
    start_position => "beginning"
  }
}

filter {
  grok {
    match => { "message" => "%{NGINXACCESS}" }
  }
}

output {
  stdout {
    codec => rubydebug
  }
}

6. Logstash collects nginx logs and writes them to a Redis database

# cat /etc/logstash/conf.d/logstash-to-redis-nginxlog.conf

input {
  file {
    path => ["/var/log/nginx/access.log"]
    type => "nginxlog"
    start_position => "beginning"
  }
}

filter {
  grok {
    match => { "message" => "%{NGINXACCESS}" }
  }
}

output {
  if [type] == "nginxlog" {
    redis {
      data_type => "list"
      host => "192.168.56.12"
      db => "0"
      port => "6379"
      key => "nginxlog"
    }
  }
}

7. Using Logstash as the server side

7-1 Logstash as the server side: read data from Redis, then write to standard output

# cat /etc/logstash/conf.d/redis-logstash-stdout.conf

input {
  redis {
    data_type => "list"
    host => "192.168.56.12"
    db => "0"
    port => "6379"
    key => "nginxlog"
  }
}

output {
  stdout {
    codec => rubydebug
  }
}
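With `data_type => "list"`, the Redis key acts as a first-in, first-out queue between the shipper (section 6) and this server-side instance. To my understanding, the output plugin appends events with RPUSH and the input plugin removes them with BLPOP. The sketch below imitates that hand-off in memory with Python; `FakeRedisList` is a hypothetical stand-in, not a Redis client, and the events are invented.

```python
import json
from collections import deque


class FakeRedisList:
    """In-memory stand-in for one Redis list key (hypothetical helper)."""

    def __init__(self):
        self._items = deque()

    def rpush(self, value):
        # Producer side: append to the tail, like RPUSH.
        self._items.append(value)

    def lpop(self):
        # Consumer side: take from the head, like LPOP; None when empty.
        return self._items.popleft() if self._items else None


queue = FakeRedisList()

# Shipper: serialize each event as JSON and push it onto the "nginxlog" list.
for request in ("/index.html", "/about.html"):
    queue.rpush(json.dumps({"type": "nginxlog", "request": request}))

# Server side: pop events in arrival order and decode them.
received = []
raw = queue.lpop()
while raw is not None:
    received.append(json.loads(raw))
    raw = queue.lpop()

print([event["request"] for event in received])
# prints ['/index.html', '/about.html']
```

Each event is consumed exactly once, so several server-side instances reading the same key would share the load rather than each receiving a full copy.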

7-2 Logstash as the server side: read data from Redis, then write to the Elasticsearch server.

# cat /etc/logstash/conf.d/redis-logstash-es.conf

input {
  redis {
    data_type => "list"
    host => "192.168.56.12"
    db => "0"
    port => "6379"
    key => "nginxlog"
  }
}

output {
  elasticsearch {
    hosts => ["192.168.56.15:9200"]
    index => "nginxlog-%{+YYYY.MM.dd}"
  }
}
