TVM Model Quantization

[RFC] Search-based Automated Quantization

- I propose a new quantization framework that brings the hardware and the learning method into the loop.

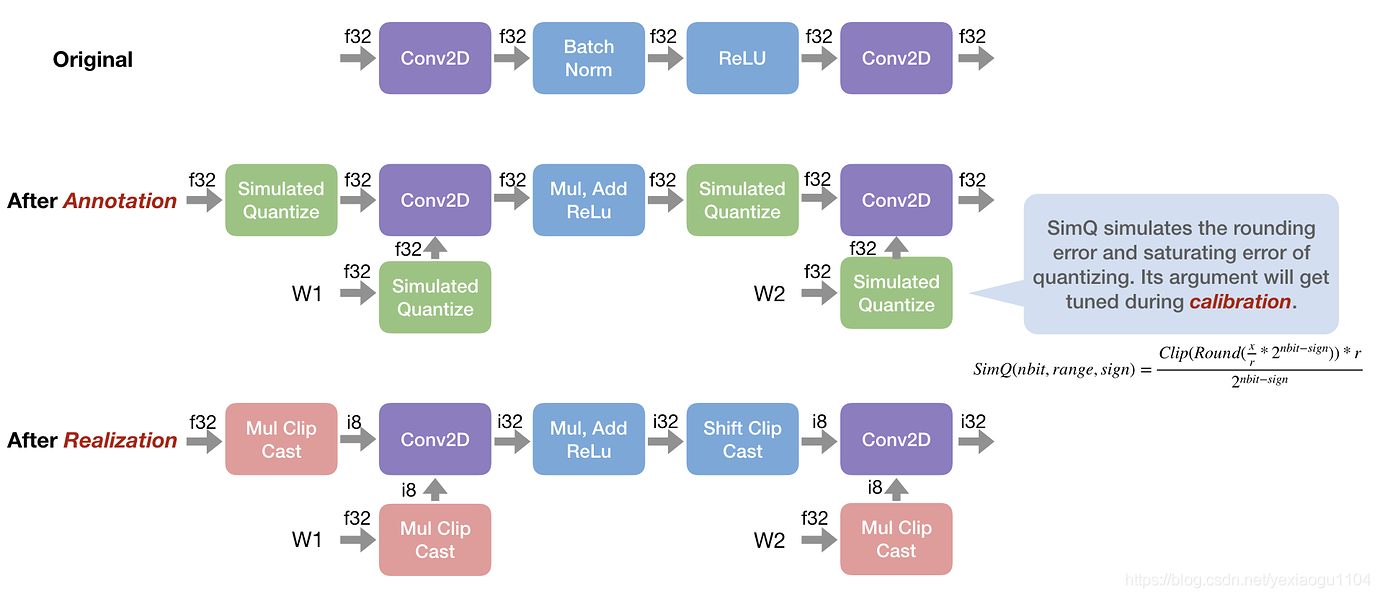

- Borrowing from existing quantization frameworks, I chose a three-phase annotation-calibration-realization design:

- Annotation: The annotation pass rewrites the graph and inserts simulated quantize operations according to each operator's rewrite function. A simulated quantize operation simulates the rounding error and saturation error of quantizing from float to integer.

- Calibration: The calibration pass adjusts the thresholds of the simulated quantize operations to reduce the accuracy drop.

- Realization: The realization pass transforms the simulation graph, which actually computes in float32, into a real low-precision integer graph.
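
The three phases above can be illustrated on a single scalar value. This is only a minimal sketch in plain Python, not TVM's implementation. The function names (`simulated_quantize`, `calibrate`, `realize`), the symmetric signed range of [-127, 127], and the squared-error calibration criterion are all assumptions made for illustration.

```python
def simulated_quantize(x, threshold, bits=8):
    """Annotation phase (sketch): fake-quantize a float, result stays a float."""
    qmax = 2 ** (bits - 1) - 1            # 127 for 8 bits (assumed symmetric range)
    scale = threshold / qmax              # float value represented by one integer step
    q = round(x / scale)                  # introduces the rounding error
    q = max(-qmax, min(qmax, q))          # introduces the saturation error
    return q * scale                      # back to float: the graph still runs in float


def calibrate(samples, candidates, bits=8):
    """Calibration phase (sketch): pick the candidate threshold with the
    lowest total squared error on the sample values (assumed criterion)."""
    def error(threshold):
        return sum((x - simulated_quantize(x, threshold, bits)) ** 2
                   for x in samples)
    return min(candidates, key=error)


def realize(x, threshold, bits=8):
    """Realization phase (sketch): emit the real integer value and its scale."""
    qmax = 2 ** (bits - 1) - 1
    scale = threshold / qmax
    q = max(-qmax, min(qmax, round(x / scale)))
    return int(q), scale


# Hypothetical activation samples and candidate thresholds.
samples = [0.1, -0.2, 0.3, 0.25]
best = calibrate(samples, candidates=[0.3, 10.0])
q, scale = realize(samples[0], best)
```

A threshold that is too large wastes integer levels on values that never occur (large rounding error), while one that is too small clips real values (saturation error). Calibration is the search for a threshold that balances the two.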

References:

https://www.twblogs.net/a/5eedc7fee3ae0757d21ab20e/?lang=zh-cn

https://discuss.tvm.apache.org/t/int8-quantization-quantizing-models/517/4

https://discuss.tvm.apache.org/t/int8-quantization-proposal/516

https://discuss.tvm.apache.org/t/rfc-improvements-to-automatic-quantization-for-bare-metal/7108

https://discuss.tvm.apache.org/t/quantization-pytorch-dynamic-quantization/10294

https://discuss.tvm.apache.org/t/rfc-quantization-quantization-in-tvm/9161
