QR decomposition


[Wiki](QR decomposition - Wikipedia): QR decomposition is a decomposition of a matrix \(A\) into the product of an orthogonal matrix \(Q\) and an upper triangular matrix \(R\).

Let's first begin with an example (refer to [Video](QR decomposition - YouTube))

Suppose we have a matrix \(A\) which is represented by:

Then we shall apply the Gram-Schmidt Orthogonalization:

And we take the resulting orthonormal vectors as the columns of the orthogonal matrix \(Q\):

Therefore, since \(Q^TQ=I\), \(R\) can be obtained by:

\[R = Q^TA\]

Is it a coincidence that \(R\) is an upper triangular matrix? Let's view the process again by substituting those values with the corresponding vector notation:

Since the projection of \(v_2\) onto \(v_1\) (that is, onto \(u_1\)) is subtracted from \(v_2\), which yields \(u_2\) (normalized to \(w_2\)), \(w_2\) is orthogonal to \(u_1\), and therefore the lower triangular entry \(w_2^Tu_1\) becomes zero.
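For the two-column case, the substitution can be written out explicitly. This is only a sketch: it assumes \(A=\begin{bmatrix}v_1&v_2\end{bmatrix}\) and the usual Gram-Schmidt convention \(u_1=v_1\), and it does not reproduce the numbers of the original example.

\[
w_1=\frac{u_1}{\|u_1\|},\qquad u_2=v_2-(w_1^Tv_2)\,w_1,\qquad w_2=\frac{u_2}{\|u_2\|}
\]

\[
R=Q^TA=\begin{bmatrix}w_1^T\\w_2^T\end{bmatrix}\begin{bmatrix}v_1&v_2\end{bmatrix}
=\begin{bmatrix}w_1^Tv_1&w_1^Tv_2\\w_2^Tv_1&w_2^Tv_2\end{bmatrix}
=\begin{bmatrix}\|u_1\|&w_1^Tv_2\\0&\|u_2\|\end{bmatrix}
\]

The zero appears because \(w_2^Tv_1=w_2^Tu_1=0\), and the diagonal entries follow from \(w_1^Tv_1=\|u_1\|\) and \(w_2^Tv_2=w_2^Tu_2=\|u_2\|\).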

And we can extend this to the general case. According to the orthogonalization procedure, the \(i\)-th orthonormal vector \(w_i\) of the matrix \(A_{m\times n}=\begin{bmatrix}v_1&v_2&\cdots&v_i&\cdots&v_n\end{bmatrix}\) is constructed to be orthogonal to all the vectors \(v_j\) with \(j<i\). (In other words, the projections of \(v_i\) onto the earlier directions \(u_j\), \(j<i\), which span the same subspace as the \(v_j\), are subtracted from \(v_i\), which yields \(u_i\), normalized to \(w_i\).) Nothing ensures that \(w_i\) is orthogonal to the \(v_j\) with \(j\geq i\).

Therefore, the entries \(R_{ij}=w_i^Tv_j\) whose row index \(i\) is larger than the column index \(j\) are zero. That's why we finally obtain an upper triangular matrix \(R\).
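The construction above can be sketched in a few lines of plain Python. This is a minimal illustration of classical Gram-Schmidt, assuming the columns of \(A\) are linearly independent; the function name `gram_schmidt_qr` and the sample matrix are made up for this sketch and are not the matrix from the video.

```python
import math

def gram_schmidt_qr(A):
    """Classical Gram-Schmidt QR of an m x n matrix given as a list of rows.

    Returns (Q, R): Q is m x n with orthonormal columns w_1..w_n,
    R is n x n with R[i][j] = w_i^T v_j.
    Assumes the columns of A are linearly independent.
    """
    m, n = len(A), len(A[0])
    cols = [[A[r][c] for r in range(m)] for c in range(n)]  # v_1..v_n
    W = []                                # orthonormal vectors found so far
    R = [[0.0] * n for _ in range(n)]
    for j, v in enumerate(cols):
        u = list(v)
        for i, w in enumerate(W):         # only i < j is visited here
            R[i][j] = sum(wk * vk for wk, vk in zip(w, v))   # w_i^T v_j
            u = [uk - R[i][j] * wk for uk, wk in zip(u, w)]  # remove projection
        norm = math.sqrt(sum(uk * uk for uk in u))
        R[j][j] = norm                    # equals w_j^T v_j
        W.append([uk / norm for uk in u])
    Q = [[W[c][r] for c in range(n)] for r in range(m)]
    return Q, R

# Illustrative 2 x 2 matrix (an assumption, not the example from the video).
A = [[3.0, 1.0],
     [4.0, 2.0]]
Q, R = gram_schmidt_qr(A)
for row in R:
    print([round(x, 6) for x in row])
```

The entries of `R` below the diagonal are never written by the loops, so they stay zero, which mirrors the argument that \(w_i^Tv_j=0\) for \(i>j\).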

Consider the more general case. We can factor a complex \(m\times n\) matrix \(A\), with \(m\geq n\), as the product of an \(m\times m\) unitary matrix \(Q\) and an \(m\times n\) upper triangular matrix \(R\). Here \(R_1\) refers to the \(n\times n\) upper triangular block formed by the first \(n\) rows of \(R\), while the remaining \(m-n\) rows are all zeros.

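Partition \(Q=\begin{bmatrix}Q_1&Q_2\end{bmatrix}\), where \(Q_1\) holds the first \(n\) columns and \(Q_2\) the remaining \(m-n\) columns, so that \(Q_1^T\) consists of the rows of \(Q^T\) with index \(i\leq n\) and \(Q_2^T\) of the rows with \(n<i\leq m\) (for a complex matrix, read \(Q^T\) as the conjugate transpose). Since matrix \(A\) only has \(n\) columns, each of them lies in the span of the columns of \(Q_1\). The rows of \(Q_2^T\) are orthogonal to that span, so when deriving \(R=Q^TA\) the product \(Q_2^TA\) is zero, which gives the all-zero rows of \(R\).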

Therefore, we have:

\[A=QR=\begin{bmatrix}Q_1&Q_2\end{bmatrix}\begin{bmatrix}R_1\\0\end{bmatrix}=Q_1R_1\]

Example 1:

Using QR decomposition to get the least-squares solution of the linear system \(Ax=b\):

And the least-squares solution can be obtained by solving the following (normal) equation:

\[A^TAx=A^Tb\]

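Then by using the QR decomposition \(A=QR\) in its reduced form (\(Q=Q_1\) with orthonormal columns and \(R=R_1\)), we can obtain:

\[A^TA=(QR)^T(QR)=R^TQ^TQR=R^TR\]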

And finally, the equation becomes:

\[R^TRx=R^TQ^Tb\]

So, provided \(R\) is invertible (that is, \(A\) has full column rank), we just need to solve the following linear equations:

\[Rx=Q^Tb\]

which is easier because \(R\) is upper triangular: the last equation gives the value of the last variable \(x_n\) directly, and we can substitute backward, row by row, until we get the whole solution. Pretty cool, right?
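The least-squares recipe above can be sketched in plain Python as follows. This is an illustration under stated assumptions, not a production solver: it assumes \(A\) has full column rank, it uses classical Gram-Schmidt for the reduced factors, and the helper names (`qr_reduced`, `back_substitute`, `lstsq_qr`) and the sample data are invented for this example.

```python
import math

def qr_reduced(A):
    """Reduced QR via classical Gram-Schmidt (A: list of rows, full column rank)."""
    m, n = len(A), len(A[0])
    cols = [[A[r][c] for r in range(m)] for c in range(n)]
    W, R = [], [[0.0] * n for _ in range(n)]
    for j, v in enumerate(cols):
        u = list(v)
        for i, w in enumerate(W):
            R[i][j] = sum(wk * vk for wk, vk in zip(w, v))
            u = [uk - R[i][j] * wk for uk, wk in zip(u, w)]
        R[j][j] = math.sqrt(sum(uk * uk for uk in u))
        W.append([uk / R[j][j] for uk in u])
    Q = [[W[c][r] for c in range(n)] for r in range(m)]
    return Q, R

def back_substitute(R, y):
    """Solve R x = y for upper triangular R, starting from the last row."""
    n = len(R)
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        known = sum(R[i][k] * x[k] for k in range(i + 1, n))
        x[i] = (y[i] - known) / R[i][i]
    return x

def lstsq_qr(A, b):
    """Least-squares solution of A x = b by solving R x = Q^T b."""
    Q, R = qr_reduced(A)
    m, n = len(A), len(A[0])
    y = [sum(Q[r][c] * b[r] for r in range(m)) for c in range(n)]  # Q^T b
    return back_substitute(R, y)

# Hypothetical data: fit y = c0 + c1 * t to the points (0, 0), (1, 1), (2, 1).
A = [[1.0, 0.0],
     [1.0, 1.0],
     [1.0, 2.0]]
b = [0.0, 1.0, 1.0]
x = lstsq_qr(A, b)
print([round(v, 6) for v in x])
```

Solving \(Rx=Q^Tb\) avoids forming \(A^TA\) explicitly, which is the usual reason this route is preferred over the normal equations.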

Example 2:

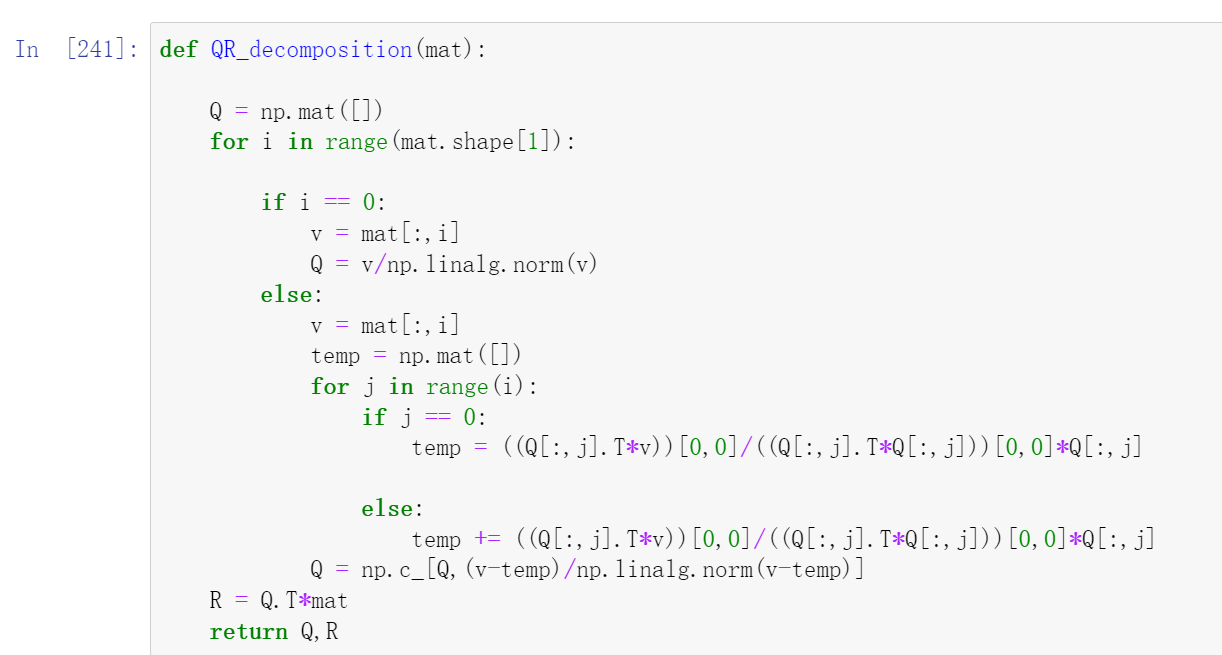

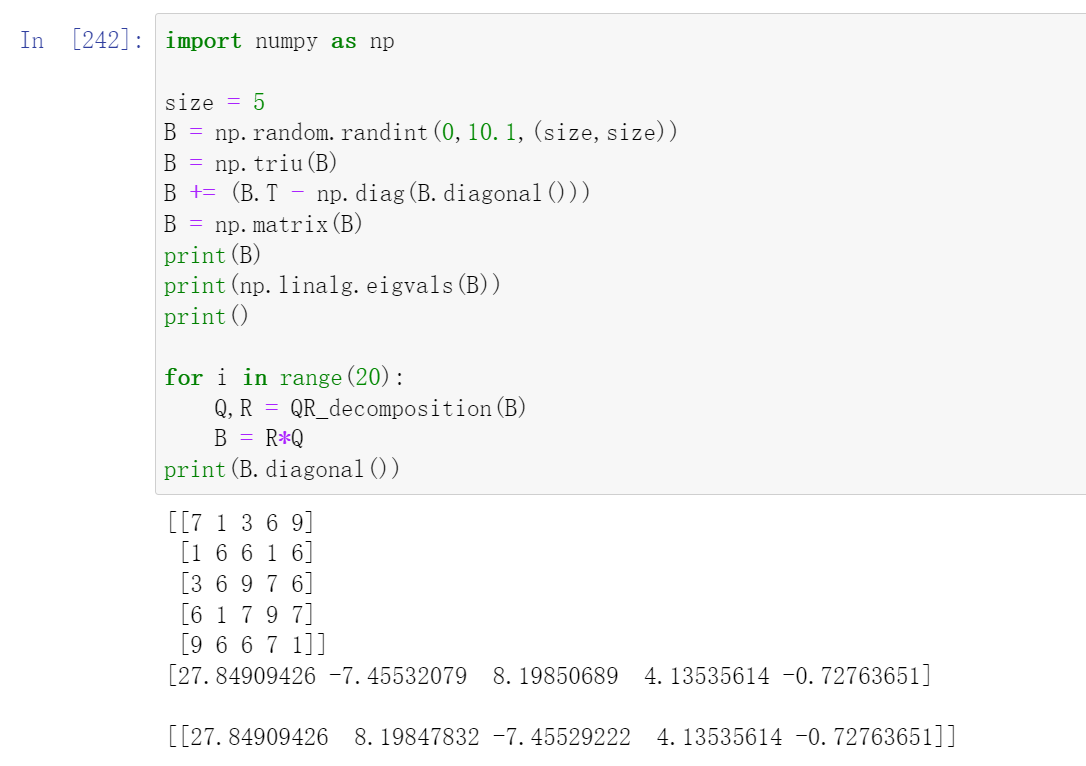

Using QR iteration to calculate the eigenvalues and eigenvectors of a matrix. The basic idea is to perform the QR decomposition iteratively.

By the QR decomposition, an arbitrary square matrix \(A\) can be decomposed into an orthogonal matrix \(Q\) and an upper triangular matrix \(R\):

\[A=QR\]

Consider another equation, obtained by multiplying the two factors in reverse order:

\[A^{\prime}=RQ\]

If we substitute \(R=Q^TA\) into this equation, we obtain:

\[A^{\prime}=RQ=Q^TAQ\]

Since \(Q\) is an orthogonal matrix, the above operation is an orthogonal similarity transformation, which results in a matrix \(A^{\prime}\) similar to \(A\). Since a similarity transformation does not change the eigenvalues, we can keep repeating this step. Under suitable conditions (for example, a real symmetric matrix whose eigenvalues have distinct magnitudes), the iterates approach an upper triangular matrix and the values on the diagonal converge to the eigenvalues.
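A minimal sketch of this unshifted QR iteration in plain Python is shown below. It assumes a small, invertible, real symmetric matrix so that Gram-Schmidt is applicable and the diagonal converges; the function names, the iteration count, and the test matrix are choices made for this illustration. Practical eigenvalue solvers add shifts and a Hessenberg reduction, which are not shown here.

```python
import math

def gram_schmidt_qr(A):
    """QR of a square matrix with independent columns (classical Gram-Schmidt)."""
    n = len(A)
    cols = [[A[r][c] for r in range(n)] for c in range(n)]
    W, R = [], [[0.0] * n for _ in range(n)]
    for j, v in enumerate(cols):
        u = list(v)
        for i, w in enumerate(W):
            R[i][j] = sum(wk * vk for wk, vk in zip(w, v))
            u = [uk - R[i][j] * wk for uk, wk in zip(u, w)]
        R[j][j] = math.sqrt(sum(uk * uk for uk in u))
        W.append([uk / R[j][j] for uk in u])
    Q = [[W[c][r] for c in range(n)] for r in range(n)]
    return Q, R

def qr_iteration(A, iterations=50):
    """Repeat A_k = Q_k R_k, A_{k+1} = R_k Q_k and return the final iterate."""
    Ak = [row[:] for row in A]
    n = len(Ak)
    for _ in range(iterations):
        Q, R = gram_schmidt_qr(Ak)
        # A' = R Q = Q^T A Q, a matrix similar to the previous iterate
        Ak = [[sum(R[i][k] * Q[k][j] for k in range(n)) for j in range(n)]
              for i in range(n)]
    return Ak

# Illustrative symmetric matrix (an assumption); its eigenvalues are 3 and 1.
A = [[2.0, 1.0],
     [1.0, 2.0]]
Ak = qr_iteration(A)
print([round(Ak[i][i], 6) for i in range(len(Ak))])
```

Only the eigenvalues are read off here. For a symmetric matrix, accumulating the product of the `Q` factors across iterations would additionally give approximate eigenvectors.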