Deep Learning Interview Question 24: Convolving Each Depth Separately (Depthwise Convolution)

Contents

Example

Convolving a single tensor with multiple kernels, depth by depth

References


Example

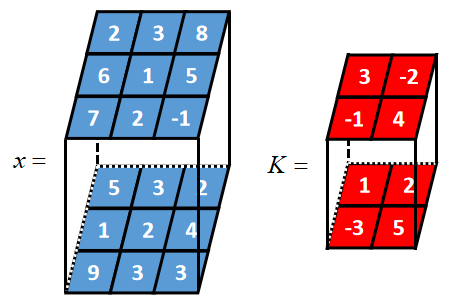

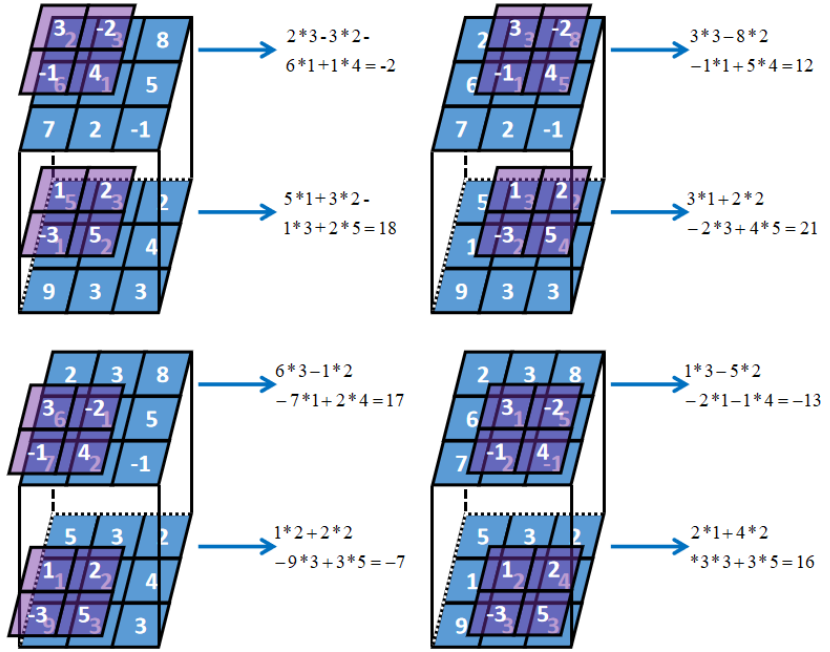

如下张量x和卷积核K进行depthwise_conv2d卷积

结果为:

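depthwise_conv2d differs from conv2d in one step. conv2d convolves each depth (channel) separately and then sums the results across depths. depthwise_conv2d skips that summation and keeps each depth's result as a separate output channel. The corresponding code is: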

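```python
import tensorflow as tf  # TensorFlow 2.x, eager execution (tf.Session no longer exists)

# Input: [batch, in_height, in_width, in_channels] = [1, 3, 3, 2]
x = tf.reshape(tf.constant([2, 5, 3, 3, 8, 2, 6, 1, 1, 2, 5, 4, 7, 9, 2, 3, -1, 3], tf.float32), [1, 3, 3, 2])

# Filter: [filter_height, filter_width, in_channels, channel_multiplier] = [2, 2, 2, 1]
kernel = tf.reshape(tf.constant([3, 1, -2, 2, -1, -3, 4, 5], tf.float32), [2, 2, 2, 1])

# Stride 1 in both spatial dimensions, no padding ("VALID")
y = tf.nn.depthwise_conv2d(x, kernel, strides=[1, 1, 1, 1], padding="VALID")
print(y.numpy())
```

Output:

```
[[[[ -2.  18.]
   [ 12.  21.]]

  [[ 17.  -7.]
   [-13.  16.]]]]
```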
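The formulas below write both operations out element by element. The notation is our own: x[i, j, c] is the input with the batch index dropped, and K[p, q, c] is the filter with its channel_multiplier axis dropped. The code above uses stride 1, VALID padding and no kernel flip, as in TensorFlow. Under those assumptions, the two operations differ only in whether the channel index c is summed:

$$
\mathrm{depthwise}[i,j,c] = \sum_{p=0}^{k_h-1}\sum_{q=0}^{k_w-1} x[i+p,\,j+q,\,c]\;K[p,\,q,\,c]
$$

$$
\mathrm{conv2d}[i,j] = \sum_{c}\sum_{p=0}^{k_h-1}\sum_{q=0}^{k_w-1} x[i+p,\,j+q,\,c]\;K[p,\,q,\,c] = \sum_{c}\mathrm{depthwise}[i,j,c]
$$

Here k_h = k_w = 2. Input channel 0 is [[2, 3, 8], [6, 1, 5], [7, 2, -1]] and channel 1 is [[5, 3, 2], [1, 2, 4], [9, 3, 3]]. The kernel slices are [[3, -2], [-1, 4]] and [[1, 2], [-3, 5]]. The first output pixel then works out as:

$$
\begin{aligned}
\mathrm{depthwise}[0,0,0] &= 2\cdot 3 + 3\cdot(-2) + 6\cdot(-1) + 1\cdot 4 = -2\\
\mathrm{depthwise}[0,0,1] &= 5\cdot 1 + 3\cdot 2 + 1\cdot(-3) + 2\cdot 5 = 18\\
\mathrm{conv2d}[0,0] &= -2 + 18 = 16
\end{aligned}
$$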

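The claim that conv2d is depthwise_conv2d plus a sum over depths can be checked without TensorFlow. The sketch below assumes NumPy is available. The helpers `depthwise_valid` and `conv2d_valid_single_out` are hypothetical names for illustration, not TensorFlow APIs. The sketch only covers stride 1, VALID padding, a channel multiplier of 1, and input with the batch dimension dropped.

```python
import numpy as np

# Same data as the TensorFlow example, batch dimension dropped: [H, W, C] = [3, 3, 2]
x = np.array([2, 5, 3, 3, 8, 2, 6, 1, 1, 2, 5, 4, 7, 9, 2, 3, -1, 3],
             dtype=np.float32).reshape(3, 3, 2)
# Filter [kh, kw, C, 1]; drop the trailing channel-multiplier axis -> [2, 2, 2]
k = np.array([3, 1, -2, 2, -1, -3, 4, 5], dtype=np.float32).reshape(2, 2, 2, 1)[..., 0]


def depthwise_valid(x, k):
    """Per-channel 2-D cross-correlation, stride 1, VALID padding (no sum over channels)."""
    H, W, C = x.shape
    kh, kw, _ = k.shape
    out = np.zeros((H - kh + 1, W - kw + 1, C), dtype=x.dtype)
    for i in range(H - kh + 1):
        for j in range(W - kw + 1):
            patch = x[i:i + kh, j:j + kw, :]              # window, shape [kh, kw, C]
            out[i, j, :] = (patch * k).sum(axis=(0, 1))   # sum over the window only
    return out


def conv2d_valid_single_out(x, k):
    """conv2d with one output channel: the same products, additionally summed over channels."""
    return depthwise_valid(x, k).sum(axis=-1)


dw = depthwise_valid(x, k)
print(dw[..., 0])                       # channel 0 values: [[-2, 12], [17, -13]]
print(dw[..., 1])                       # channel 1 values: [[18, 21], [-7, 16]]
print(conv2d_valid_single_out(x, k))    # summed over depth: [[16, 33], [10, 3]]
```

The two depthwise channels match the TensorFlow output above. For example, pixel (0, 0) is [-2, 18]. Summing them over the last axis gives what `tf.nn.conv2d` would compute with the same [2, 2, 2, 1] kernel, which is exactly the summation step that depthwise_conv2d omits.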

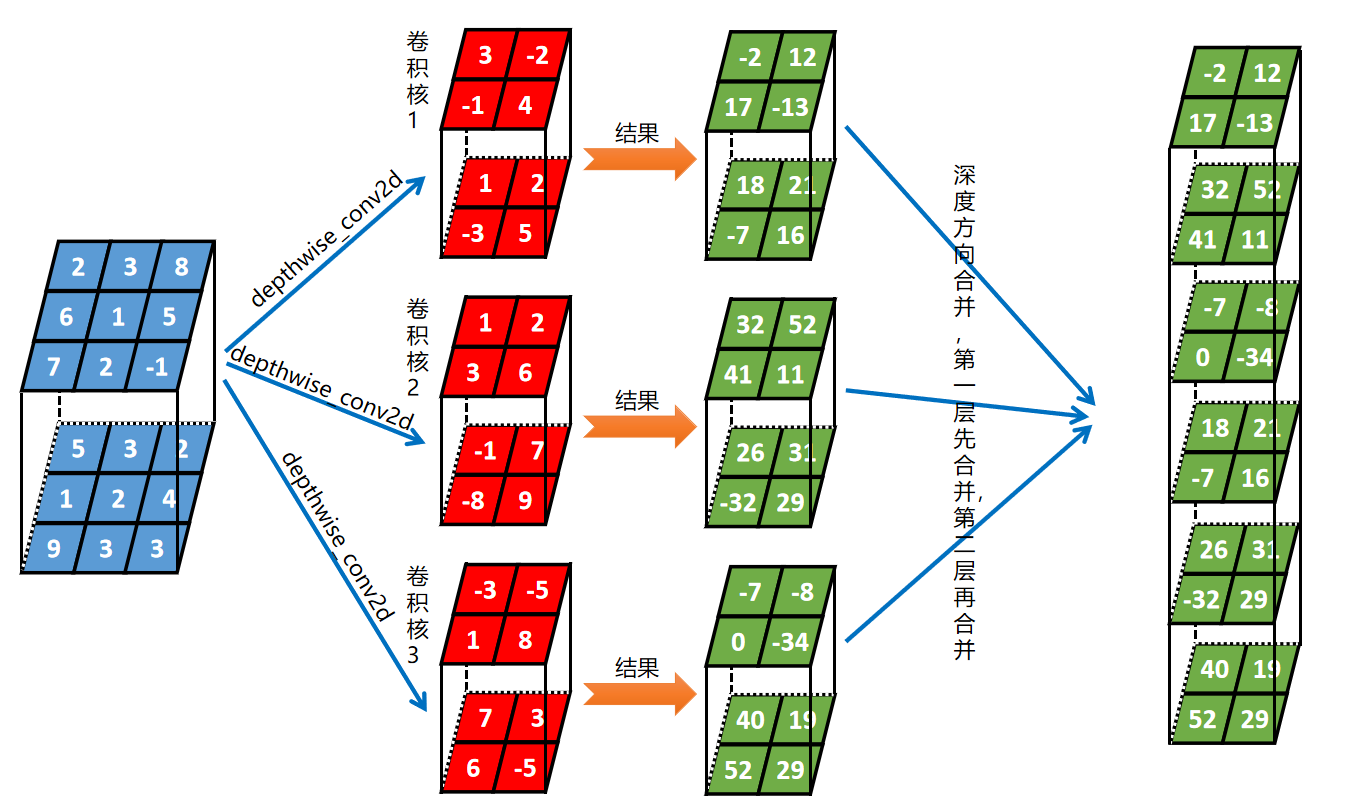

单个张量与多个卷积核在深度上分别卷积 |

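Here the channel multiplier is 3, so each of the 2 input channels is convolved with its own 3 kernels. That gives 2 × 3 = 6 output channels, ordered as input channel 0 with kernels 0, 1, 2, followed by input channel 1 with kernels 0, 1, 2. The corresponding code is: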

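```python
import tensorflow as tf  # TensorFlow 2.x, eager execution (tf.Session no longer exists)

# Input: [batch, in_height, in_width, in_channels] = [1, 3, 3, 2]
x = tf.reshape(tf.constant([2, 5, 3, 3, 8, 2, 6, 1, 1, 2, 5, 4, 7, 9, 2, 3, -1, 3], tf.float32), [1, 3, 3, 2])

# Filter: [filter_height, filter_width, in_channels, channel_multiplier] = [2, 2, 2, 3]
# Three 2x2 kernels per input channel -> 2 * 3 = 6 output channels
kernel = tf.reshape(tf.constant([3, 1, -3, 1, -1, 7, -2, 2, -5, 2, 7, 3,
                                 -1, 3, 1, -3, -8, 6, 4, 6, 8, 5, 9, -5], tf.float32), [2, 2, 2, 3])

y = tf.nn.depthwise_conv2d(x, kernel, strides=[1, 1, 1, 1], padding="VALID")
print(y.numpy())
```

Output:

```
[[[[ -2.  32.  -7.  18.  26.  40.]
   [ 12.  52.  -8.  21.  31.  19.]]

  [[ 17.  41.   0.  -7. -32.  52.]
   [-13.  11. -34.  16.  29.  29.]]]]
```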
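The channel-multiplier case can also be written out in NumPy. This is a minimal sketch under stated assumptions: NumPy is available, stride 1, VALID padding, and the batch dimension is dropped. `depthwise_conv2d_valid` is a hypothetical helper, not a TensorFlow function. It makes the output channel ordering explicit: output channel c*M + m is input channel c correlated with the filter slice k[:, :, c, m].

```python
import numpy as np


def depthwise_conv2d_valid(x, k):
    """Naive depthwise convolution with a channel multiplier, stride 1, VALID padding.

    x: [H, W, C] input (batch dimension dropped)
    k: [kh, kw, C, M] filter, M = channel multiplier
    returns [H-kh+1, W-kw+1, C*M]; output channel c*M + m uses input channel c and k[:, :, c, m]
    """
    H, W, C = x.shape
    kh, kw, kc, M = k.shape
    assert kc == C, "filter depth must equal the number of input channels"
    out = np.zeros((H - kh + 1, W - kw + 1, C * M), dtype=x.dtype)
    for i in range(H - kh + 1):
        for j in range(W - kw + 1):
            patch = x[i:i + kh, j:j + kw, :]               # window, shape [kh, kw, C]
            # Sum over the window indices p, q only; the channel index c is never summed
            prods = np.einsum("pqc,pqcm->cm", patch, k)    # shape [C, M]
            out[i, j, :] = prods.reshape(C * M)            # row-major flatten: index c*M + m
    return out


x = np.array([2, 5, 3, 3, 8, 2, 6, 1, 1, 2, 5, 4, 7, 9, 2, 3, -1, 3],
             dtype=np.float32).reshape(3, 3, 2)
k = np.array([3, 1, -3, 1, -1, 7, -2, 2, -5, 2, 7, 3,
              -1, 3, 1, -3, -8, 6, 4, 6, 8, 5, 9, -5], dtype=np.float32).reshape(2, 2, 2, 3)

out = depthwise_conv2d_valid(x, k)
print(out.shape)   # (2, 2, 6)
print(out[0, 0])   # first output pixel over all 6 channels, same values as the TensorFlow output
```

The loops are deliberately naive so that the indexing stays visible. Output channels 0 and 3 reproduce the single-kernel example above because k[:, :, :, 0] is the same filter used there.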


References

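Zhang Ping (张平), 《图解深度学习与神经网络:从张量到TensorFlow实现》 (*Illustrated Deep Learning and Neural Networks: From Tensors to TensorFlow Implementation*).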

Zhejiang Public Security Network Filing No. 33010602011771
