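ELK Installation and Setup

Base Environment Setup

What ELK does: Logstash collects data, Elasticsearch stores it, and Kibana displays it.

Test environment: CentOS 7.5, with the firewall and SELinux disabled.

IP address 192.168.10.50: hosts Kibana and Elasticsearch (ES)

IP address 192.168.10.51: hosts Logstash

Installing and deploying JDK 1.8 and Kibana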

192.168.10.50

[root@zyxy01 local]# cd /usr/local/src/

[root@zyxy01 src]# tar -zxvf jdk-8u201-linux-x64.tar.gz

[root@zyxy01 src]# mv jdk1.8.0_201 /usr/local/

[root@zyxy01 local]# vim /etc/profile

export JAVA_HOME=/usr/local/jdk1.8.0_201/

export PATH=$PATH:$JAVA_HOME/bin

export CLASSPATH=.:$JAVA_HOME/lib/tools.jar:$JAVA_HOME/lib/dt.jar:$CLASSPATH

[root@zyxy01 local]# source /etc/profile

[root@zyxy01 local]# java -version

java version "1.8.0_201"

Java(TM) SE Runtime Environment (build 1.8.0_201-b09)

Java HotSpot(TM) 64-Bit Server VM (build 25.201-b09, mixed mode)

Installing and starting Kibana:

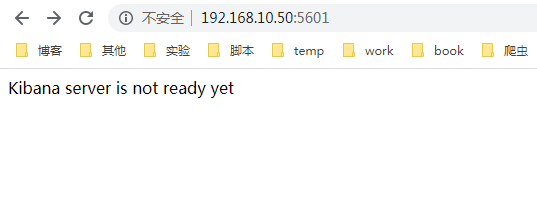

[root@zyxy01 src]# tar -zxvf kibana-6.6.0-linux-x86_64.tar.gz

[root@zyxy01 src]# mv kibana-6.6.0-linux-x86_64 /usr/local/kibana-6.6.0

[root@zyxy01 src]# vim /usr/local/kibana-6.6.0/config/kibana.yml

server.port: 5601

server.host: "0.0.0.0"

#elasticsearch.url: "http://localhost:9200"

#elasticsearch.username: "user"

#elasticsearch.password: "pass"

Starting and accessing Kibana:

1. Start Kibana in the foreground: /usr/local/kibana-6.6.0/bin/kibana

2. Start Kibana in the background: nohup /usr/local/kibana-6.6.0/bin/kibana >/tmp/kibana.log 2>&1 &

3. To access Kibana, port 5601 must be open.
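The background start sends Kibana's output to a log file. As a minimal sketch of that redirection, the block below uses a stand-in function (`noisy_service`, a hypothetical placeholder rather than the Kibana binary) and a temporary file; `run_logged` is likewise a helper name chosen for this illustration. Writing `>>file 2>&1` opens the log once and points stderr at the same descriptor, so the two streams cannot overwrite each other.

```shell
# Stand-in for a service that writes to both stdout and stderr
# (hypothetical; in practice this would be the Kibana binary).
noisy_service() {
    echo "info: listening"
    echo "warn: something on stderr" >&2
}

# Run a command with stdout and stderr appended to one log file.
# Usage: run_logged LOGFILE COMMAND [ARGS...]
run_logged() (
    logfile=$1
    shift
    "$@" >>"$logfile" 2>&1
)

# Demonstrate with a temporary file instead of /tmp/kibana.log.
demo_log=$(mktemp)
run_logged "$demo_log" noisy_service
cat "$demo_log"
```

Applied to Kibana, the same pattern would read `nohup /usr/local/kibana-6.6.0/bin/kibana >/tmp/kibana.log 2>&1 &`.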

If the browser shows the Kibana page as in the screenshot, the installation succeeded.

Using Nginx to add authentication in front of Kibana:

[root@zyxy01 src]# yum install -y lrzsz wget gcc gcc-c++ make pcre pcre-devel zlib zlib-devel

[root@zyxy01 src]# tar -zxvf nginx-1.14.2.tar.gz

[root@zyxy01 src]# cd nginx-1.14.2

[root@zyxy01 nginx-1.14.2]# ./configure --prefix=/usr/local/nginx && make && make install

[root@zyxy01 nginx-1.14.2]# /usr/local/nginx/sbin/nginx -c /usr/local/nginx/conf/nginx.conf

[root@zyxy01 src]# vim /etc/profile

export PATH=$PATH:/usr/local/nginx/sbin/

[root@zyxy01 src]# source /etc/profile

[root@zyxy01 src]# nginx -V

#1. Restrict access by source IP address in Nginx

[root@zyxy01 src]# vim /usr/local/nginx/conf/nginx.conf

pid /usr/local/nginx/logs/nginx.pid; #uncomment this line and set the pid path

#Uncomment the following four logging lines

log_format main '$remote_addr - $remote_user [$time_local] "$request" '

'$status $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$http_x_forwarded_for"';

access_log logs/access.log main;

#Then configure a location block that restricts the allowed source IPs

server {

listen 80;

location / {

allow 127.0.0.1;

allow 192.168.10.1;

deny all;

proxy_pass http://127.0.0.1:5601;

}

}

#Reload Nginx to apply the change

[root@zyxy01 nginx-1.14.2]# nginx -s reload
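If the edited nginx.conf does not parse, Nginx keeps running with the old configuration and the problem only shows up in the error log. A small sketch that tests the configuration first with `nginx -t` and reloads only on success; the function name `reload_nginx_if_valid` and the `NGINX_BIN` variable are names introduced for this example, not part of Nginx.

```shell
# Path to the nginx binary; overridable so the function can be tried
# without touching a live server (NGINX_BIN is a name chosen here).
NGINX_BIN=${NGINX_BIN:-/usr/local/nginx/sbin/nginx}

# Reload only if the configuration parses; otherwise leave the
# running configuration alone and return a non-zero status.
reload_nginx_if_valid() {
    if "$NGINX_BIN" -t; then
        "$NGINX_BIN" -s reload
    else
        echo "nginx config test failed; not reloading" >&2
        return 1
    fi
}
```

Calling `reload_nginx_if_valid` after each edit of nginx.conf then replaces the bare `nginx -s reload`.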

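#Make Kibana reachable from localhost only

[root@zyxy01 src]# vim /usr/local/kibana-6.6.0/config/kibana.yml

server.host: "127.0.0.1"

#Kill the Kibana process and start it again for the change to take effect.

#The Nginx access log shows the source IP address 192.168.10.1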

[root@zyxy01 nginx-1.14.2]# tail -f /usr/local/nginx/logs/access.log

192.168.10.1 - - [18/May/2020:22:43:50 +0800] "GET / HTTP/1.1" 403 571 "-" "Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/81.0.4044.138 Safari/537.36" "-"

192.168.10.1 - - [18/May/2020:22:43:50 +0800] "GET /favicon.ico HTTP/1.1" 403 571 "http://192.168.10.50/" "Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/81.0.4044.138 Safari/537.36" "-"

192.168.10.1 - - [18/May/2020:22:43:53 +0800] "GET / HTTP/1.1" 403 571 "-" "Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/81.0.4044.138 Safari/537.36" "-"

192.168.10.1 - - [18/May/2020:22:43:53 +0800] "GET /favicon.ico HTTP/1.1" 403 571 "http://192.168.10.50/" "Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/81.0.4044.138 Safari/537.36" "-"
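To see at a glance which clients are allowed or denied, the access log can be summarized per client IP and status code. A minimal sketch, assuming the `main` log_format shown earlier and ordinary request lines of the form "METHOD path protocol", so that whitespace-separated field 1 is the client IP and field 9 is the status; `summarize_access_log` is a helper name chosen for this example.

```shell
# Count requests per client IP and HTTP status in an access log that
# uses the "main" log_format (field 1 = client IP, field 9 = status).
# Usage: summarize_access_log FILE   (reads stdin when FILE is omitted)
summarize_access_log() {
    awk '{ count[$1 " " $9]++ }
         END { for (key in count) print count[key], key }' "$@" | sort -rn
}
```

For example, `summarize_access_log /usr/local/nginx/logs/access.log` prints one line per IP and status pair, most frequent first; a client blocked by the `deny all` rule would show up with status 403.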

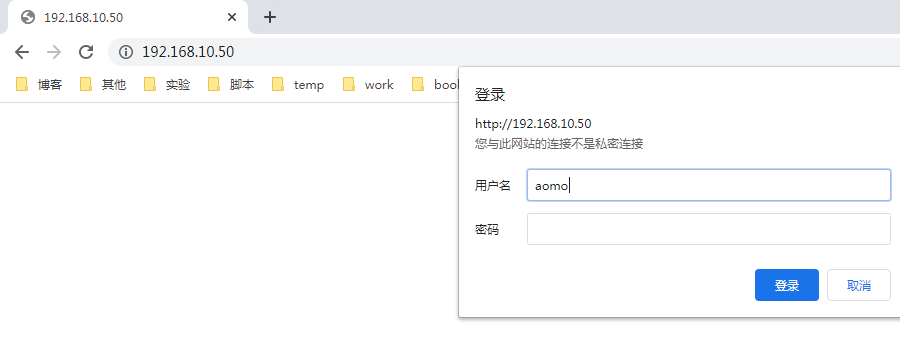

#2. Username and password login with Nginx: starting from option 1, only the Nginx location block needs to change.

location / {

auth_basic "elk auth";

auth_basic_user_file /usr/local/nginx/conf/htpasswd;

proxy_pass http://127.0.0.1:5601;

}

#Reload Nginx to apply the change

[root@zyxy01 nginx-1.14.2]# nginx -s reload

#Then set the username and password

[root@zyxy01 nginx-1.14.2]# printf "aomo:$(openssl passwd -1 password)\n" >/usr/local/nginx/conf/htpasswd

#Open 192.168.10.50 in the browser again; Kibana is reachable only after entering the username aomo and the password password.
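Before testing in the browser, the htpasswd entry can be checked from the shell. The sketch below builds a line the same way as the `printf` command above and verifies a password by re-hashing it with the salt stored in the entry; `htpasswd_line` and `htpasswd_check` are helper names invented for this example. Note that passing a password as a command-line argument exposes it to other users in the process list, so this is suited to a lab setup only.

```shell
# Build one htpasswd line ("user:hash") with an MD5-crypt hash,
# the scheme that `openssl passwd -1` produces.
# Usage: htpasswd_line USER PASSWORD
htpasswd_line() {
    printf '%s:%s\n' "$1" "$(openssl passwd -1 "$2")"
}

# Check a password against an htpasswd line by re-hashing it with
# the salt stored in that line ($1$<salt>$<digest>).
# Usage: htpasswd_check LINE PASSWORD   (exit status 0 on match)
htpasswd_check() (
    hash=${1#*:}
    salt=$(printf '%s' "$hash" | cut -d'$' -f3)
    [ "$(openssl passwd -1 -salt "$salt" "$2")" = "$hash" ]
)
```

For instance, `htpasswd_check "$(cat /usr/local/nginx/conf/htpasswd)" password && echo match` should confirm a single-entry file written by the command above.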

Installing and starting Elasticsearch

[root@zyxy01 ~]# cd /usr/local/src/

[root@zyxy01 src]# tar -zxf elasticsearch-6.6.0.tar.gz

[root@zyxy01 src]# mv elasticsearch-6.6.0 /usr/local/

[root@zyxy01 src]# vim /usr/local/elasticsearch-6.6.0/config/elasticsearch.yml

#Uncomment the following four lines and set the data path, the log path, the listen address, and the port

path.data: /usr/local/elasticsearch-6.6.0/data

path.logs: /usr/local/elasticsearch-6.6.0/logs

network.host: 0.0.0.0

http.port: 9200

[root@zyxy01 src]# vim /usr/local/elasticsearch-6.6.0/config/jvm.options

#Change the JVM memory limits by editing the following two settings

-Xms128M

-Xmx128M

#Elasticsearch must be started as a regular user; starting it as root fails with an error.

[root@zyxy01 src]# useradd -s /sbin/nologin elk

[root@zyxy01 src]# chown -R elk:elk /usr/local/elasticsearch-6.6.0/

[root@zyxy01 src]# su - elk -s /bin/bash

#Switch to the elk user, start ES, then check the log and the listening port; after that the Kibana page loads normally.

[elk@zyxy01 ~]$ /usr/local/elasticsearch-6.6.0/bin/elasticsearch -d

[elk@zyxy01 ~]$ tail -f /usr/local/elasticsearch-6.6.0/logs/elasticsearch.log

[root@zyxy01 logs]# ss -lnt

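Enter 192.168.10.50 in the browser, log in with the username aomo and the password password, and the Kibana interface appears.

When Elasticsearch listens on an address other than 127.0.0.1, that is, on 0.0.0.0 or on an internal network address, system parameters must be adjusted in both cases.

Starting ES on a non-127.0.0.1 address produces the three errors below. They are resolved by adjusting three system settings: the maximum number of open files, the maximum number of processes, and a kernel parameter.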

[1]: max file descriptors [4096] for elasticsearch process is too low, increase to at least [65536]

[2]: max number of threads [3829] for user [elk] is too low, increase to at least [4096]

[3]: max virtual memory areas vm.max_map_count [65530] is too low, increase to at least [262144]

[root@zyxy01 logs]# sysctl -a |grep vm.max_map_count

sysctl: reading key "net.ipv6.conf.all.stable_secret"

sysctl: reading key "net.ipv6.conf.default.stable_secret"

sysctl: reading key "net.ipv6.conf.ens33.stable_secret"

sysctl: reading key "net.ipv6.conf.lo.stable_secret"

vm.max_map_count = 65530

[root@zyxy01 logs]# vim /etc/sysctl.conf

#add the two settings that sysctl -p prints below

[root@zyxy01 logs]# sysctl -p

fs.file-max = 6553560

vm.max_map_count = 262144

[root@zyxy01 logs]# sysctl -a |grep vm.max_map_count

sysctl: reading key "net.ipv6.conf.all.stable_secret"

sysctl: reading key "net.ipv6.conf.default.stable_secret"

sysctl: reading key "net.ipv6.conf.ens33.stable_secret"

sysctl: reading key "net.ipv6.conf.lo.stable_secret"

vm.max_map_count = 262144

[root@zyxy01 logs]# vim /etc/security/limits.conf

* soft nproc 8192

* hard nproc 16384

* soft nofile 8192

* hard nofile 65536

# End of file

#The new limits apply to new login sessions, so log in again before checking them.

[root@zyxy01 logs]# ulimit -a

core file size (blocks, -c) 0

data seg size (kbytes, -d) unlimited

scheduling priority (-e) 0

file size (blocks, -f) unlimited

pending signals (-i) 14989

max locked memory (kbytes, -l) 64

max memory size (kbytes, -m) unlimited

open files (-n) 8192

pipe size (512 bytes, -p) 8

POSIX message queues (bytes, -q) 819200

real-time priority (-r) 0

stack size (kbytes, -s) 8192

cpu time (seconds, -t) unlimited

max user processes (-u) 16384

virtual memory (kbytes, -v) unlimited

file locks (-x) unlimited
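The three thresholds from the error messages can be compared against the current values before starting ES. A minimal bash sketch, meant to be run as the elk user in a fresh login session; the function names are invented for this example, and which of the soft or hard limits Elasticsearch actually evaluates is an assumption here (hard limit for file descriptors, soft limit for processes).

```shell
# Return 0 when CURRENT meets REQUIRED; "unlimited" always passes.
# Usage: meets_minimum CURRENT REQUIRED
meets_minimum() {
    [ "$1" = "unlimited" ] || [ "$1" -ge "$2" ]
}

# Print one OK/LOW line for a named limit.
# Usage: report_limit NAME CURRENT REQUIRED
report_limit() {
    if meets_minimum "$2" "$3"; then
        echo "OK   $1 = $2 (need >= $3)"
    else
        echo "LOW  $1 = $2 (need >= $3)"
    fi
}

# Compare the live values with the thresholds quoted in the three
# startup errors (bash on Linux; soft/hard choice is an assumption).
check_es_limits() {
    report_limit "max file descriptors" "$(ulimit -Hn)" 65536
    report_limit "max user processes" "$(ulimit -Su)" 4096
    report_limit "vm.max_map_count" "$(cat /proc/sys/vm/max_map_count)" 262144
}
```

Running `check_es_limits` prints one line per setting; any line starting with LOW points at the limit that still needs raising.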

Three Elasticsearch concepts:

Index -> similar to a database in MySQL

Type -> similar to a table in MySQL

Document -> the stored data itself

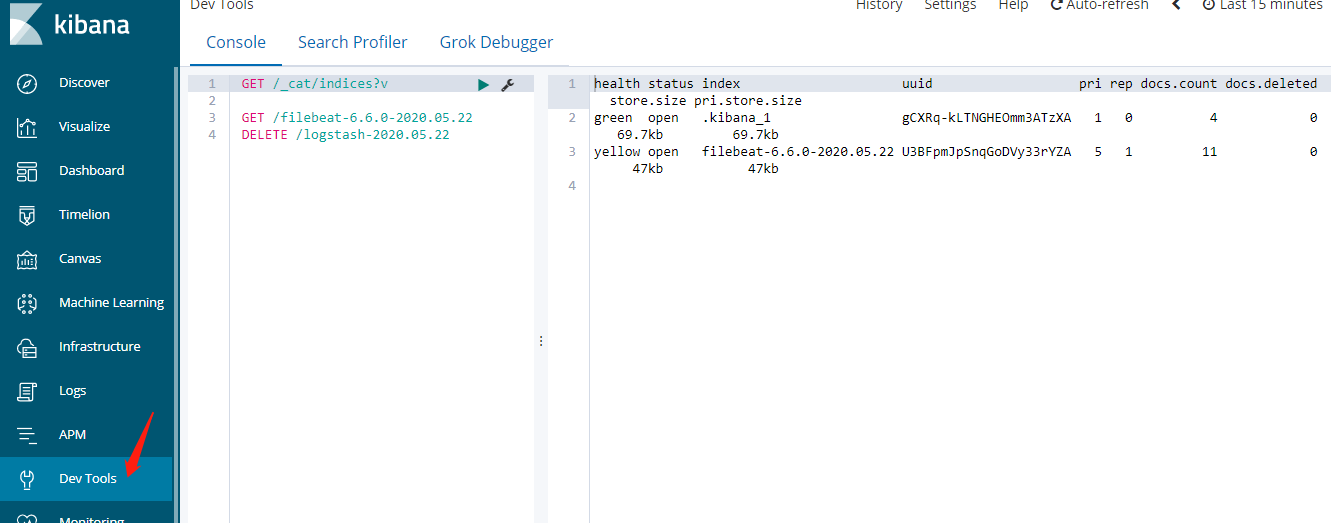

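Elasticsearch data operations are usually run in Dev Tools, in Kibana's left-hand menu.

Create an index: PUT /aomo

Delete an index: DELETE /aomo

List all indices: GET /_cat/indices?v

Elasticsearch CRUD operations

Insert data into ES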

PUT /aomo/users/1

{

"name":"aomo",

"age": 27

}

Query data in ES

GET /aomo/users/1

GET /aomo/_search?q=*

Update data (overwrites the whole document)

PUT /aomo/users/1

{

"name": "it",

"age": 40

}

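Delete data from ES

DELETE /aomo/users/1

Update a single field without overwriting the document (the document, here id 2, must already exist)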

POST /aomo/users/2/_update

{

"doc": {

"age": 29

}

}

Update all documents

POST /aomo/_update_by_query

{

"script": {

"source": "ctx._source['age']=27"

},

"query": {

"match_all": {}

}

}

Add a field to all documents

POST /aomo/_update_by_query

{

"script":{

"source": "ctx._source['city']='hangzhou'"

},

"query":{

"match_all": {}

}

}
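The requests above are written in Dev Tools console syntax. Outside Kibana they can be sent with curl; Elasticsearch 6.x expects a `Content-Type: application/json` header on requests that carry a body. The wrapper below is a sketch under assumptions: the function name `es`, the `ES_URL` variable, and the `ES_DRY_RUN` switch are all introduced for this example, and the URL assumes ES is reachable on 192.168.10.50:9200 as configured earlier.

```shell
# Base URL of the Elasticsearch node (assumed from the setup above).
ES_URL=${ES_URL:-http://192.168.10.50:9200}

# Send one request, mirroring the Dev Tools form "METHOD /path"
# plus an optional JSON body.
# Usage: es METHOD PATH [JSON_BODY]
# With ES_DRY_RUN set, the curl command is printed, not executed.
es() (
    method=$1
    path=$2
    body=${3:-}
    run=""
    [ -n "${ES_DRY_RUN:-}" ] && run=echo
    if [ -n "$body" ]; then
        $run curl -sS -X "$method" "$ES_URL$path" \
            -H 'Content-Type: application/json' -d "$body"
    else
        $run curl -sS -X "$method" "$ES_URL$path"
    fi
)

# Examples (commented out so that nothing is sent on load):
# es PUT /aomo/users/1 '{"name":"aomo","age":27}'
# es GET '/aomo/_search?q=*'
# es POST /aomo/users/1/_update '{"doc":{"age":29}}'
```

Paths that contain `?` or `*` are quoted so the shell does not try to expand them as file patterns.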

Logstash安装和ES结合

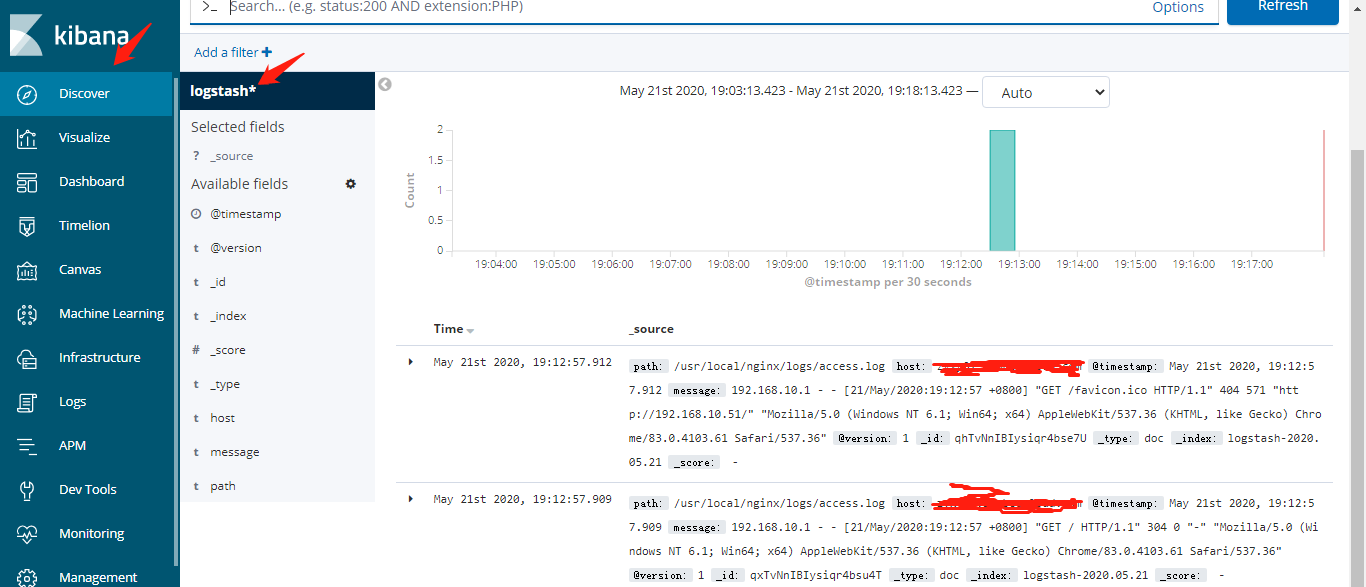

192.168.10.51安装logstash,需要安装jdk1.8 然后安装nginx,浏览器访问192.168.10.51触发access.log日志生成,发送到10.50的es,kibana展示日志。

Logstash的安装命令

cd /usr/local/src

tar -zxf logstash-6.6.0.tar.gz

mv logstash-6.6.0 /usr/local/

Update the Logstash JVM configuration file /usr/local/logstash-6.6.0/config/jvm.options

-Xms200M

-Xmx200M

Configure Logstash to send logs to ES in /usr/local/logstash-6.6.0/config/logstash.conf

input {

file {

path => "/usr/local/nginx/logs/access.log"

}

}

output {

elasticsearch {

hosts => ["http://192.168.10.50:9200"]

}

}

Starting Logstash (haveged, an entropy daemon, is installed first, presumably to speed up Logstash startup):

yum -y install epel-release

yum install haveged -y

systemctl enable haveged

systemctl start haveged

Start in the foreground: /usr/local/logstash-6.6.0/bin/logstash -f /usr/local/logstash-6.6.0/config/logstash.conf

Start in the background: nohup /usr/local/logstash-6.6.0/bin/logstash -f /usr/local/logstash-6.6.0/config/logstash.conf >/tmp/logstash.log 2>&1 &

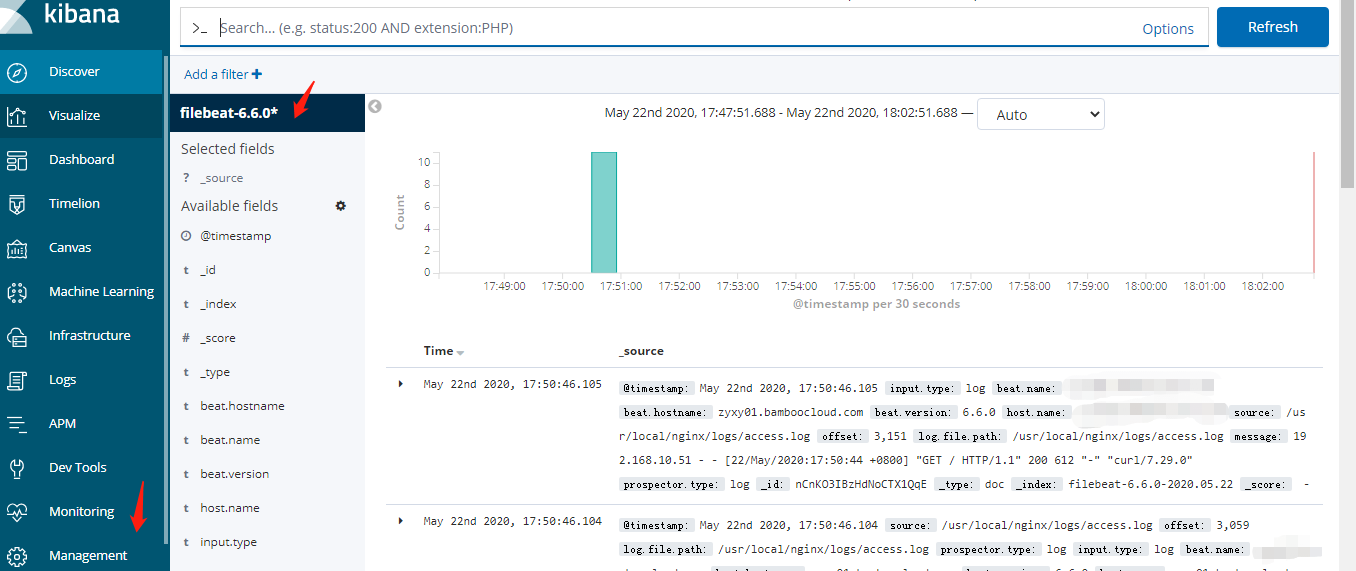

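Then in Kibana, go to Management and create an index pattern logstash*; the Nginx access logs are then visible in Discover.

Installing Filebeat

Collecting logs with Logstash depends on a Java runtime and is relatively heavy, consuming memory and CPU. Filebeat is comparatively lightweight and uses few server resources, so it is generally the one chosen for log collection.

Install Filebeat on 192.168.10.51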

cd /usr/local/src/

tar -zxf filebeat-6.6.0-linux-x86_64.tar.gz

mv filebeat-6.6.0-linux-x86_64 /usr/local/filebeat-6.6.0

Configure Filebeat to send logs to ES in /usr/local/filebeat-6.6.0/filebeat.yml

filebeat.inputs:

- type: log

  tail_files: true

  backoff: "1s"

  paths:

    - /usr/local/nginx/logs/access.log

output:

  elasticsearch:

    hosts: ["192.168.10.50:9200"]

Starting Filebeat

Start in the foreground: /usr/local/filebeat-6.6.0/filebeat -e -c /usr/local/filebeat-6.6.0/filebeat.yml

Start in the background: nohup /usr/local/filebeat-6.6.0/filebeat -e -c /usr/local/filebeat-6.6.0/filebeat.yml >/tmp/filebeat.log 2>&1 &

Viewing the log data in Kibana

GET /xxx/_search?q=*

GET /_cat/indices?v #list all indices

In Kibana, go to Management and create a new index pattern for the Filebeat index.
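The index name to look for depends on which shipper wrote the data. As a sketch, assuming the `index` setting is left at its default in both logstash.conf and filebeat.yml, Logstash writes to a daily `logstash-YYYY.MM.DD` index and Filebeat 6.6.0 to `filebeat-6.6.0-YYYY.MM.DD`. The helper `default_index` below is a name made up for this example; it only prints the expected name and does not contact ES.

```shell
# Print the default daily index name for a shipper, assuming the
# index option was not overridden in its configuration.
# Usage: default_index logstash|filebeat [YYYY.MM.DD]
# Without a date argument, the current UTC date is used.
default_index() (
    day=${2:-$(date -u +%Y.%m.%d)}
    case $1 in
        logstash) echo "logstash-$day" ;;
        filebeat) echo "filebeat-6.6.0-$day" ;;
        *) echo "unknown shipper: $1" >&2; exit 1 ;;
    esac
)
```

The printed name can then be looked up in the output of `GET /_cat/indices?v`, or used in place of the placeholder in a search request, and the matching index pattern in Kibana would be `logstash-*` or `filebeat-*`.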