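Novel Crawler for the Kudushu (苦读书) Website

I read novels to pass the time, but the site carries too many ads, most of them unsavory.

So, as a bored practice exercise, I wrote a novel crawler that downloads a novel and stores it by novel title and chapter.

The site is one of many pirated-novel websites.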

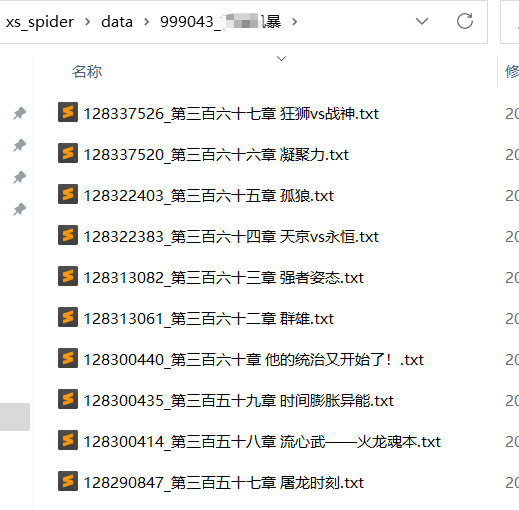

The crawl result is as follows.

# xs_spider\xs_spider\spiders\sx_spider_01.py

import scrapy
from scrapy.http import Request
from xs_spider.items import XsSpiderItem
from pathlib import Path
from re import sub


class SxSpider01Spider(scrapy.Spider):
    name = 'sx_spider_01'
    allowed_domains = ['m.kudushu.net']
    start_urls = [
        'http://m.kudushu.net/book/999043/'
    ]
    base_url = 'http://m.kudushu.net'

    def parse(self, response):
        # The chapter index is paginated: every option value of the
        # "pageselect" dropdown is the path of one index page.
        章节分页 = response.xpath('.//select[@name="pageselect"]//@value').getall()
        for i in 章节分页:
            yield Request(url=rf'{self.base_url}{i}', callback=self.parse2)

    def parse2(self, response):
        # One index page: collect the chapter links and the book title.
        章节元素 = response.xpath('.//div[@class="info_menu1"]/div[@class="list_xm"]/ul/li/a')
        书名 = response.xpath('/html/body/div/div[4]/div[2]/h3/text()').get()
        for 章节 in 章节元素:
            章节链接 = self.base_url + 章节.xpath('./@href').get()
            章节标题 = 章节.xpath('./text()').get()
            item = XsSpiderItem()
            # The last two non-empty URL segments are the novel id and the chapter id.
            segments = [i for i in 章节链接.split('/') if i != '']
            章节id = segments[-1]
            小说id = segments[-2]
            item['小说id'] = 小说id + '_' + 书名
            item['章节id'] = 章节id
            item['标题'] = 章节标题
            item['链接'] = 章节链接
            # Skip chapters that an earlier run has already saved.
            文件路径 = Path(rf"./data/{item['小说id']}/{item['章节id']}_{item['标题']}.txt")
            if 文件路径.is_file():
                continue
            yield Request(url=章节链接, callback=self.parse3, meta={'item': item})

    def parse3(self, response):
        # One page of a chapter. A chapter may span several pages, so the
        # item travels along in meta and its content is appended page by page.
        item = response.meta.get('item')
        texts = response.xpath('//*[@id="novelcontent"]//text()').getall()[1:]
        # Join the text nodes with commas, then strip whitespace debris.
        # The original used r',\n', which matches a literal backslash
        # followed by "n" rather than a newline, so it never matched.
        text = ','.join(texts).replace(u'\xa0','').replace(u'\n,','').replace(',\n','')
        # Remove the site's navigation text, ad script calls and watermarks.
        text = text.replace('(本章未完,请点击下一页继续阅读)','').replace(u'\r\n','')
        text = text.replace('。,','。')
        text = text.replace('最新网址:m.kudushu.net,','')
        text = text.replace('hedgeogbm();','')
        text = text.replace(' ','')
        text = text.replace(',上—章','')
        text = text.replace('下—页','')
        text = text.replace('加入书签','')
        text = text.replace('上—页','')
        text = text.replace('返回目录','')
        text = text.replace(' ','')
        # Collapse the runs of separators left behind by the removals above.
        text = text.replace(',,',',')
        text = text.replace(',,',',')
        text = text.replace(',,',',')
        text = text.replace(',;,;,,','')
        text = text.replace(';,','')
        text = text.replace(';,','')
        text = text.replace(';,','')
        text = text.replace(';,','')
        text = sub(r'hedgeo.+?\)','',text)
        text = text.replace(',,',',')
        text = text.replace(',,',',')
        text = text.replace('\n\n\n',',')
        text = text.replace('\n\n',',')
        # Placeholder page: the chapter has not been published yet, so drop it.
        if '在手打中,请稍等片刻,内容更新后' in text:
            return
        if item.get('内容') is None:
            item['内容'] = text
        else:
            item['内容'] = item['内容']+'\n\n'+text
        # Follow the "next page" link while the chapter continues; otherwise emit the item.
        if response.xpath('.//ul[@class="novelbutton"]/li/p[@class="p1 p3"]/a/text()').get() == '下—页':
            下一页链接 = response.xpath('.//ul[@class="novelbutton"]/li/p[@class="p1 p3"]/a//@href').get()
            yield Request(rf'{self.base_url}/{下一页链接}', meta={'item': item}, callback=self.parse3)
        else:
            yield item
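The long chain of `replace` calls in `parse3` is hard to maintain, because every repeated `,,` pass only removes one more level of duplication. Below is a minimal sketch of a more compact cleanup, assuming the same join-with-comma approach. The names `clean_fragments` and `NOISE` are hypothetical and do not appear in the original spider, and only a subset of the site's residue strings is handled here.

```python
import re

# Hypothetical token list: a subset of the navigation strings that the
# spider removes with individual replace() calls.
NOISE = ('加入书签', '返回目录')


def clean_fragments(fragments):
    """Join text nodes with ',' and strip navigation and script residue."""
    text = ','.join(fragments)
    text = text.replace('\xa0', '').replace('\r\n', '')
    for token in NOISE:
        text = text.replace(token, '')
    # Remove injected script calls such as hedgeogbm() (same regex as the spider).
    text = re.sub(r'hedgeo.+?\)', '', text)
    # Remove the ';,' left over after a script call was stripped.
    text = text.replace(';,', '')
    # Collapse any run of commas in one pass instead of repeated ',,' replaces.
    text = re.sub(r',{2,}', ',', text)
    # A comma directly after a full stop is only a join artifact.
    text = text.replace('。,', '。')
    return text.strip(',')


if __name__ == '__main__':
    print(clean_fragments(['第一段。', 'hedgeogbm();', '\xa0\xa0第二段。', '加入书签', '返回目录']))
```

Whether this is a drop-in replacement depends on the page markup, so it is worth comparing its output with the original chain on a few saved chapters first.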

# xs_spider\xs_spider\items.py

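import scrapy


class XsSpiderItem(scrapy.Item):
    # define the fields for your item here like:
    标题 = scrapy.Field()    # chapter title
    链接 = scrapy.Field()    # chapter URL
    内容 = scrapy.Field()    # chapter text, accumulated across pages
    章节id = scrapy.Field()  # chapter id taken from the URL
    小说id = scrapy.Field()  # "<novel id>_<book title>", used as the directory name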

# xs_spider\xs_spider\pipelines.py

from pathlib import Path


class XsSpiderPipeline:
    def process_item(self, item, spider):
        # One directory per novel, one text file per chapter.
        文件路径 = Path(rf"./data/{item['小说id']}/")
        文件路径.mkdir(parents=True, exist_ok=True)
        with open(文件路径 / f"{item['章节id']}_{item['标题']}.txt", 'w', encoding='utf-8') as f:
            f.write(item['内容'])
        return item
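The pipeline builds the file name directly from the book and chapter titles, so a title containing a character such as `/` or `?` could make `open` fail or write to an unexpected location. Below is a minimal sketch of one way to guard against that. `safe_name` and `chapter_path` are hypothetical helpers, not part of the original project, and the set of characters treated as unsafe is an assumption based on Windows file-name rules.

```python
import re
from pathlib import Path

# Characters that Windows rejects in file names, plus control characters.
_UNSAFE = re.compile(r'[\\/:*?"<>|\x00-\x1f]')


def safe_name(name, replacement='_'):
    """Replace filesystem-unsafe characters; fall back to 'untitled' if nothing is left."""
    cleaned = _UNSAFE.sub(replacement, name).strip(' .')
    return cleaned or 'untitled'


def chapter_path(root, novel_id, chapter_id, title):
    """Build <root>/<novel_id>/<chapter_id>_<title>.txt from sanitized parts."""
    return Path(root) / safe_name(novel_id) / f"{safe_name(chapter_id)}_{safe_name(title)}.txt"


if __name__ == '__main__':
    print(chapter_path('data', '999043_书名', '123', '第1章 开端?/序'))
```

If a helper like this were adopted, the existence check in `parse2` would need to build its path the same way, otherwise already-saved chapters would be downloaded again.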

# xs_spider\run.py

# Run the spider
from scrapy.cmdline import execute

execute("scrapy crawl sx_spider_01".split(' '))
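The post does not show `settings.py`, but Scrapy only calls an item pipeline that is listed in the `ITEM_PIPELINES` setting, so nothing is written to disk unless `XsSpiderPipeline` is enabled there. A fragment like the following is probably what the project uses. The module path is inferred from the file names above, and the priority value 300 is simply the one in Scrapy's project template; both are assumptions.

```python
# xs_spider/xs_spider/settings.py (fragment, assumed; not shown in the original post)

# Enable the file-writing pipeline. The number is its order among
# pipelines (lower runs first), conventionally in the range 0-1000.
ITEM_PIPELINES = {
    'xs_spider.pipelines.XsSpiderPipeline': 300,
}
```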

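Source: https://www.cnblogs.com/meizhengchao/p/17085958.html

Copyright of this article is shared by the author and cnblogs (博客园). Reposting is welcome, but unless the author agrees otherwise, this notice must be retained and a link to the original article must be displayed prominently on the page. For questions, contact the author by email (meizhengchao@qq.com).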