Debezium Oracle 基于Logminer测试

准备docker镜像

只测试Source端数据抽取

使用oracle官方镜像

- 启动docker容器

docker run -d --name oracle12c-test container-registry.oracle.com/database/enterprise:12.2.0.1 - 如果本地没有该镜像 需要执行

docker login container-registry.oracle.com依次根据提示输入oracle账户的用户名密码 - 执行

docker ps |grep oracle12c-test - Up 8 minutes (healthy) 状态是healthy状态后 可以进入容器操作

- 镜像默认开启PDB模式 默认CDB名称为ORCLCDB 默认PDB名称为ORCLPDB1

- 镜像默认sys密码为Orcldoc_db1

准备设置数据库

开启日志归档

- 进入容器 开始操作

docker exec -it 容器的ID bash sqlplus sys/Orcldoc_db1 as sysdba或者sqlplus sys / as sysdba- 检查是否开启了归档 默认的容器里是没有开启的

- 执行

archive log list如果结果显示Database log mode No Archive Mode说明我们的数据库是没有开启归档的

我们依次执行

shutdown immediate;

startup mount;

alter database archivelog;

alter database open;

结果应该如虚线以下提示

----------------------------------

SQL> shutdown immediate;

Database closed.

Database dismounted.

ORACLE instance shut down.

SQL> startup mount;

ORACLE instance started.

Total System Global Area 1342177280 bytes

Fixed Size 8792536 bytes

Variable Size 989857320 bytes

Database Buffers 318767104 bytes

Redo Buffers 24760320 bytes

Database mounted.

SQL> alter database archivelog;

Database altered.

SQL> alter database open;

Database altered.

---------------------------------------

再次查看是否开启归档 已经显示处于归档模式

SQL> archive log list;

Database log mode Archive

创建DBZ用户相关

create user c##dbzuser identified by dbz default tablespace users container=all;

ALTER USER c##dbzuser QUOTA UNLIMITED ON users;

GRANT CREATE SESSION TO c##dbzuser CONTAINER=ALL;

GRANT SET CONTAINER TO c##dbzuser CONTAINER=ALL;

GRANT SELECT ON V_$DATABASE to c##dbzuser CONTAINER=ALL;

GRANT FLASHBACK ANY TABLE TO c##dbzuser CONTAINER=ALL;

GRANT SELECT ANY TABLE TO c##dbzuser CONTAINER=ALL;

GRANT SELECT_CATALOG_ROLE TO c##dbzuser CONTAINER=ALL;

GRANT EXECUTE_CATALOG_ROLE TO c##dbzuser CONTAINER=ALL;

GRANT SELECT ANY TRANSACTION TO c##dbzuser CONTAINER=ALL;

GRANT LOGMINING TO c##dbzuser CONTAINER=ALL;

GRANT CREATE TABLE TO c##dbzuser CONTAINER=ALL;

GRANT LOCK ANY TABLE TO c##dbzuser CONTAINER=ALL;

GRANT CREATE SEQUENCE TO c##dbzuser CONTAINER=ALL;

GRANT EXECUTE ON DBMS_LOGMNR TO c##dbzuser CONTAINER=ALL;

GRANT EXECUTE ON DBMS_LOGMNR_D TO c##dbzuser CONTAINER=ALL;

GRANT SELECT ON V_$LOG TO c##dbzuser CONTAINER=ALL;

GRANT SELECT ON V_$LOG_HISTORY TO c##dbzuser CONTAINER=ALL;

GRANT SELECT ON V_$LOGMNR_LOGS TO c##dbzuser CONTAINER=ALL;

GRANT SELECT ON V_$LOGMNR_CONTENTS TO c##dbzuser CONTAINER=ALL;

GRANT SELECT ON V_$LOGMNR_PARAMETERS TO c##dbzuser CONTAINER=ALL;

GRANT SELECT ON V_$LOGFILE TO c##dbzuser CONTAINER=ALL;

GRANT SELECT ON V_$ARCHIVED_LOG TO c##dbzuser CONTAINER=ALL;

GRANT SELECT ON V_$ARCHIVE_DEST_STATUS TO c##dbzuser CONTAINER=ALL;

准备相关组件

- 启动zookeeper、kafka、connect组件

IP 换成实际IP

docker run -d --name zookeeper -p 2181:2181 -p 2888:2888 -p 3888:3888 -p 9011:9011 debezium/zookeeper:1.6

docker run -d --name kafka -p 9092:9092 -p 9012:9012 --link zookeeper:zookeeper debezium/kafka:1.6

docker run -d --name connect -p 8083:8083 -p 9010:9010 -e GROUP_ID=1 -e CONFIG_STORAGE_TOPIC=my_connect_configs -e OFFSET_STORAGE_TOPIC=my_connect_offsets -e STATUS_STORAGE_TOPIC=my_connect_statuses -e CONNECT_MAX_REQUEST_SIZE=20000000 -e CONNECT_BUFFER_MEMORY=800000000 -e CONNECT_FETCH_MAX_BYTES=20000000 -e CONNECT_MAX_PARTITION_FETCH_BYTES=20000000 -e OFFSET_FLUSH_INTERVAL_MS=10000 -e OFFSET_FLUSH_TIMEOUT_MS=6000000 -e CONNECT_CONNECTIONS_MAX_IDLE_MS=6000000 -e CONNECT_RECEIVE.BUFFER.BYTES=500000000 -e CONNECT_PRODUCER_MAX_REQUEST_SIZE=20000000 --link zookeeper:zookeeper --link kafka:kafka debezium/connect:1.6

- 自行下载ojdbc8.jar

docker cp ojdbc8.jar 容器ID:/kafka/libs

重启connect docker restart connect容器ID

准备测试相关数据

- 创建PDB用户

- 赋予PDB用户权限

- 创建相关表格 插入数据测试

sqlplus / as sysdba

alter session set container = ORCLPDB1;

create user dbz identified by dbz;

ALTER USER dbz QUOTA UNLIMITED ON users;

grant connect, resource to dbz;

切换dbz用户连接到pdb数据库 orclpdb1

conn dbz/dbz@orclpdb1

CREATE TABLE CUSTOMERS (

id NUMBER(9) GENERATED BY DEFAULT ON NULL AS IDENTITY (START WITH 1001) NOT NULL PRIMARY KEY,

first_name VARCHAR2(255) NOT NULL,

last_name VARCHAR2(255) NOT NULL,

email VARCHAR2(255) NOT NULL UNIQUE

);

insert into customers values(1001,'a','b','c');

开启日志增补

sqlplus sys/Orcldoc_db1 as sysdba

修改全局数据库 也可以直接开启ALL级别的全库增补

ALTER DATABASE ADD SUPPLEMENTAL LOG DATA;

SELECT SUPPLEMENTAL_LOG_DATA_MIN FROM V$DATABASE;

切换到pdb数据库下 也可以直接开启ALL级别的全库增补

alter session set container = ORCLPDB1;

ALTER PLUGGABLE DATABASE ADD SUPPLEMENTAL LOG DATA;

ALTER TABLE DBZ.CUSTOMERS ADD SUPPLEMENTAL LOG DATA (ALL) COLUMNS;

SELECT SUPPLEMENTAL_LOG_DATA_MIN FROM V$DATABASE;

测试数据库层面Logminer是否好用

sqlplus / as sysdba

查看redo日志文件位置 下面命令根据显示的填写

select member from v$logfile;

execute dbms_logmnr.add_logfile('/u04/app/oracle/redo/redo001.log',dbms_logmnr.new);

execute dbms_logmnr.add_logfile('/u04/app/oracle/redo/redo002.log',dbms_logmnr.addfile);

execute dbms_logmnr.add_logfile('/u04/app/oracle/redo/redo003.log',dbms_logmnr.addfile);

execute dbms_logmnr.start_logmnr(options=>dbms_logmnr.dict_from_online_catalog+dbms_logmnr.committed_data_only);

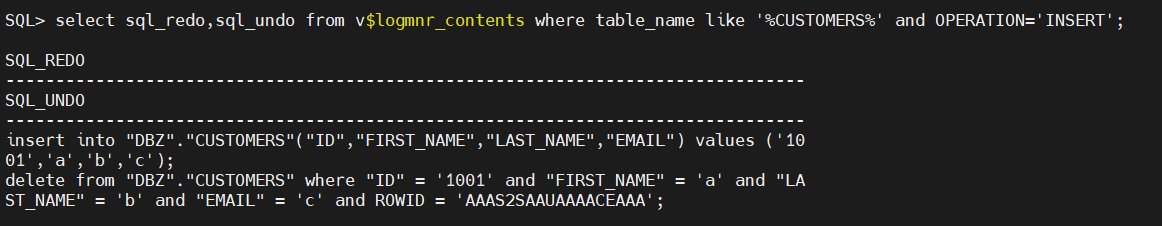

select sql_redo,sql_undo from v$logmnr_contents where table_name like '%CUSTOMERS%' and OPERATION='INSERT';

下面语句为结束语句

execute dbms_logmnr.end_logmnr;

该图片为select sql_redo,sql_undo from v$logmnr_contents where table_name like '%CUSTOMERS%' and OPERATION='INSERT'; 捕获结果说明好用

DBZ测试

准备source文件

- 用户名密码根据实际情况填写 如果完全按照本操作进行 不用修改

- IP 修改为实际数据库容器的IP,IP查看命令docker inspect 数据库容器ID |grep IP

{

"name": "test-connector",

"config": {

"connector.class" : "io.debezium.connector.oracle.OracleConnector",

"tasks.max" : "1",

"database.server.name" : "ORCLCDB",

"database.user" : "c##dbzuser",

"database.password" : "dbz",

"database.url": "jdbc:oracle:thin:@(DESCRIPTION= (ADDRESS= (PROTOCOL=TCP)(HOST=172.17.0.10)(PORT=1521)) (CONNECT_DATA= (SID=ORCLCDB) (SERVER=dedicated)))",

"database.dbname" : "ORCLCDB",

"database.pdb.name" : "ORCLPDB1",

"table.include.list": "DBZ.CUSTOMERS", // 只监控我们的表

"database.history.kafka.bootstrap.servers" : "kafka:9092",

"database.history.kafka.topic": "schema-changes.test"

}

}

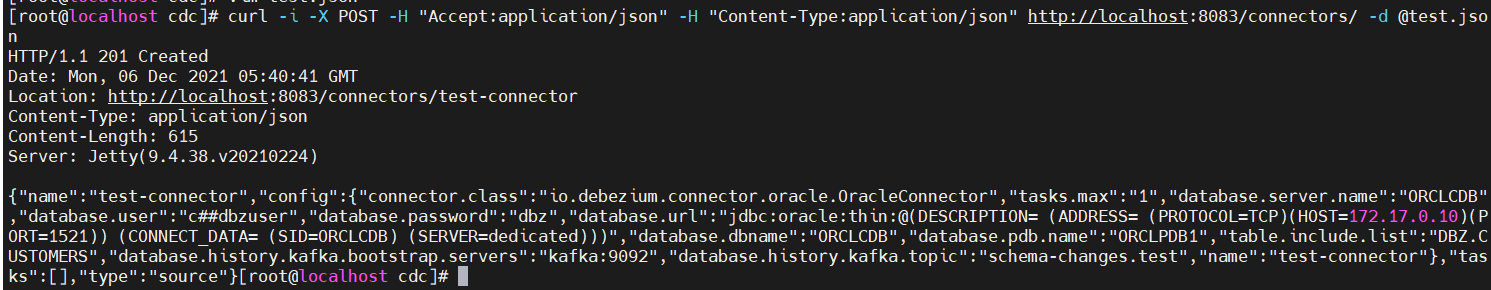

创建source connector

- 执行curl -i -X POST -H "Accept:application/json" -H "Content-Type:application/json" http://localhost:8083/connectors/ -d @test.json 根据实际情况填写

- 结果如图所示

核对捕获数据结果

- 进入到connect容器内部 执行

bin/kafka-topics.sh --list --zookeeper zookeeper:2181

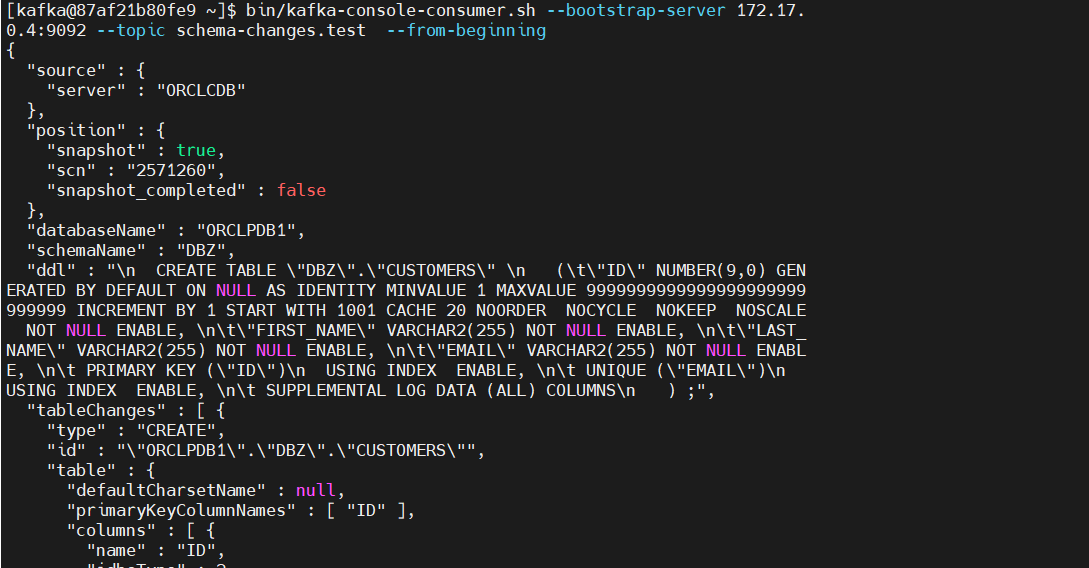

bin/kafka-console-consumer.sh --bootstrap-server 172.17.0.4:9092 --topic schema-changes.test --from-beginning

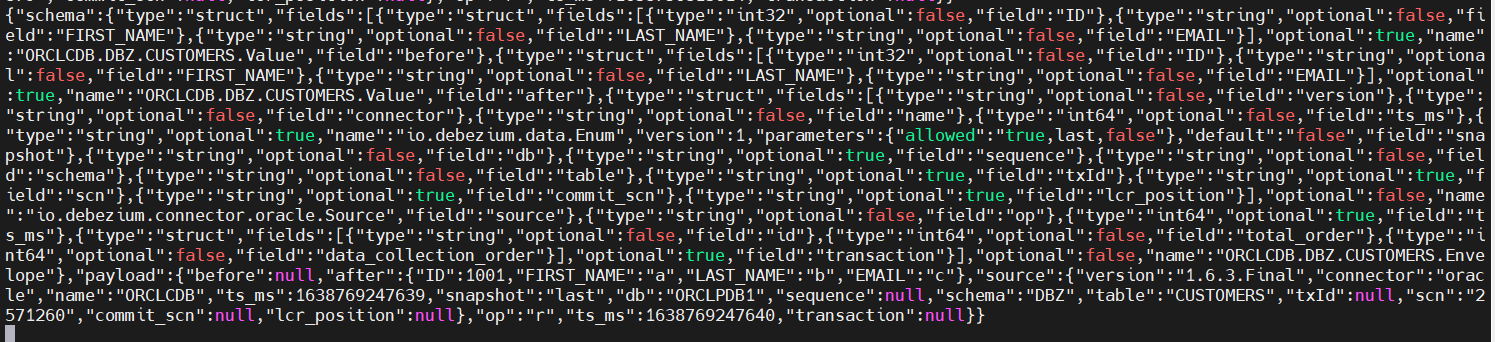

bin/kafka-console-consumer.sh --bootstrap-server 172.17.0.4:9092 --topic ORCLCDB.DBZ.CUSTOMERS --from-beginning

- 核对数据正确

DDL

DML

【推荐】国内首个AI IDE,深度理解中文开发场景,立即下载体验Trae

【推荐】编程新体验,更懂你的AI,立即体验豆包MarsCode编程助手

【推荐】抖音旗下AI助手豆包,你的智能百科全书,全免费不限次数

【推荐】轻量又高性能的 SSH 工具 IShell:AI 加持,快人一步

· TypeScript + Deepseek 打造卜卦网站:技术与玄学的结合

· 阿里巴巴 QwQ-32B真的超越了 DeepSeek R-1吗?

· 如何调用 DeepSeek 的自然语言处理 API 接口并集成到在线客服系统

· 【译】Visual Studio 中新的强大生产力特性

· 2025年我用 Compose 写了一个 Todo App

2021-01-10 HAProxy之体验总结

2018-01-10 JAVA之使用JNA调用动态链接库