Zookeeper详解(03) - zookeeper的使用

Zookeeper详解(03) - zookeeper的使用

ZK客户端命令行操作

命令基本语法

help:显示所有操作命令

ls path:使用 ls 命令来查看当前znode的子节点

-w 监听子节点变化

-s 附加次级信息

create:普通创建

-s 含有序列

-e 临时(重启或者超时消失)

get path:获得节点的值

-w 监听节点内容变化

-s 附加次级信息

set:设置节点的具体值

stat:查看节点状态

delete:删除节点

deleteall:递归删除节点

- 启动客户端

bin/zkCli.sh

- 显示所有操作命令

[zk: localhost:2181(CONNECTED) 0] help

- 查看当前znode中所包含的内容

[zk: localhost:2181(CONNECTED) 0] ls /

[zookeeper]

- 查看当前节点详细数据

[zk: localhost:2181(CONNECTED) 1] ls -s /

[zookeeper]cZxid = 0x0

ctime = Thu Jan 01 08:00:00 CST 1970

mZxid = 0x0

mtime = Thu Jan 01 08:00:00 CST 1970

pZxid = 0x0

cversion = -1

dataVersion = 0

aclVersion = 0

ephemeralOwner = 0x0

dataLength = 0

numChildren = 1

- 分别创建2个普通节点

[zk: localhost:2181(CONNECTED) 3] create /sanguo "diaochan"

Created /sanguo

[zk: localhost:2181(CONNECTED) 4] create /sanguo/shuguo "liubei"

Created /sanguo/shuguo

- 获得节点的值

[zk: localhost:2181(CONNECTED) 7] get -s /sanguo

diaochan

cZxid = 0x100000004

ctime = Sun Dec 26 02:30:08 CST 2021

mZxid = 0x100000004

mtime = Sun Dec 26 02:30:08 CST 2021

pZxid = 0x100000007

cversion = 1

dataVersion = 0

aclVersion = 0

ephemeralOwner = 0x0

dataLength = 8

numChildren = 1

[zk: localhost:2181(CONNECTED) 8] get -s /sanguo/shuguo

liubei

cZxid = 0x100000008

ctime = Sun Dec 26 02:34:43 CST 2021

mZxid = 0x100000008

mtime = Sun Dec 26 02:34:43 CST 2021

pZxid = 0x100000008

cversion = 0

dataVersion = 0

aclVersion = 0

ephemeralOwner = 0x0

dataLength = 6

numChildren = 0

- 创建临时节点

[zk: localhost:2181(CONNECTED) 7] create -e /sanguo/wuguo "zhouyu"

Created /sanguo/wuguo

在当前客户端是能查看到的

[zk: localhost:2181(CONNECTED) 3] ls /sanguo

[wuguo, shuguo]

退出当前客户端然后再重启客户端

[zk: localhost:2181(CONNECTED) 12] quit

[atguigu@hadoop104 zookeeper-3.5.7]$ bin/zkCli.sh

再次查看根目录下短暂节点已经删除

[zk: localhost:2181(CONNECTED) 0] ls /sanguo

[shuguo]

- 创建带序号的节点

先创建一个普通的根节点/sanguo/weiguo

[zk: localhost:2181(CONNECTED) 1] create /sanguo/weiguo "caocao"

Created /sanguo/weiguo

创建带序号的节点

[zk: localhost:2181(CONNECTED) 2] create /sanguo/weiguo "caocao"

Node already exists: /sanguo/weiguo

[zk: localhost:2181(CONNECTED) 3] create -s /sanguo/weiguo "caocao"

Created /sanguo/weiguo0000000000

[zk: localhost:2181(CONNECTED) 4] create -s /sanguo/weiguo "caocao"

Created /sanguo/weiguo0000000001

[zk: localhost:2181(CONNECTED) 5] create -s /sanguo/weiguo "caocao"

Created /sanguo/weiguo0000000002

[zk: localhost:2181(CONNECTED) 6] ls /sanguo

[shuguo, weiguo, weiguo0000000000, weiguo0000000001, weiguo0000000002, wuguo]

[zk: localhost:2181(CONNECTED) 6]

如果节点下原来没有子节点,序号从0开始依次递增。如果原节点下已有2个节点,则再排序时从2开始,以此类推。

- 修改节点数据值

[zk: localhost:2181(CONNECTED) 6] set /sanguo/weiguo "caopi"

- 节点的值变化监听

在hadoop104主机上注册监听/sanguo节点数据变化

[zk: localhost:2181(CONNECTED) 26] [zk: localhost:2181(CONNECTED) 8] get -w /sanguo

在hadoop103主机上修改/sanguo节点的数据

[zk: localhost:2181(CONNECTED) 1] set /sanguo "xishi"

观察hadoop104主机收到数据变化的监听

WATCHER::

WatchedEvent state:SyncConnected type:NodeDataChanged path:/sanguo

- 节点的子节点变化监听(路径变化)

在hadoop104主机上注册监听/sanguo节点的子节点变化

[zk: localhost:2181(CONNECTED) 1] ls -w /sanguo

[aa0000000001, server101]

在hadoop103主机/sanguo节点上创建子节点

[zk: localhost:2181(CONNECTED) 2] create /sanguo/jin "simayi"

Created /sanguo/jin

观察hadoop104主机收到子节点变化的监听

WATCHER::

WatchedEvent state:SyncConnected type:NodeChildrenChanged path:/sanguo

- 删除节点

[zk: localhost:2181(CONNECTED) 4] delete /sanguo/jin

- 递归删除节点

[zk: localhost:2181(CONNECTED) 15] deleteall /sanguo/shuguo

- 查看节点状态

[zk: localhost:2181(CONNECTED) 11] stat /sanguo

cZxid = 0x100000004

ctime = Sun Dec 26 02:30:08 CST 2021

mZxid = 0x100000018

mtime = Sun Dec 26 02:43:01 CST 2021

pZxid = 0x10000001b

cversion = 13

dataVersion = 1

aclVersion = 0

ephemeralOwner = 0x0

dataLength = 5

numChildren = 5

Java API应用

IDEA环境搭建

- 创建一个Maven 项目

- 添加pom文件

<dependencies>

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>RELEASE</version>

</dependency>

<dependency>

<groupId>org.apache.logging.log4j</groupId>

<artifactId>log4j-core</artifactId>

<version>2.8.2</version>

</dependency>

<dependency>

<groupId>org.apache.zookeeper</groupId>

<artifactId>zookeeper</artifactId>

<version>3.5.7</version>

</dependency></dependencies>

- 拷贝log4j.properties文件到项目根目录

需要在项目的src/main/resources目录下,新建一个文件,命名为"log4j.properties",在文件中填入。

log4j.rootLogger=INFO, stdout

log4j.appender.stdout=org.apache.log4j.ConsoleAppender

log4j.appender.stdout.layout=org.apache.log4j.PatternLayout

log4j.appender.stdout.layout.ConversionPattern=%d %p [%c] - %m%n

log4j.appender.logfile=org.apache.log4j.FileAppender

log4j.appender.logfile.File=target/spring.log

log4j.appender.logfile.layout=org.apache.log4j.PatternLayout

log4j.appender.logfile.layout.ConversionPattern=%d %p [%c] - %m%n

初始化ZooKeeper客户端

package com.zhangjk.zookeeper;

import org.apache.zookeeper.WatchedEvent;

import org.apache.zookeeper.Watcher;

import org.apache.zookeeper.ZooKeeper;

import org.junit.After;

import org.junit.Before;

import java.io.IOException;

public class Zookeeper {

private String connectString;

private int sessionTimeout;

private ZooKeeper zkClient;

@Before //获取客户端对象

public void init() throws IOException {

connectString = "hadoop102:2181,hadoop103:2181,hadoop104:2181";

int sessionTimeout = 10000;

//参数解读 1集群连接字符串 2连接超时时间 单位:毫秒 3当前客户端默认的监控器

zkClient = new ZooKeeper(connectString, sessionTimeout, new Watcher(){

@Override

public void process(WatchedEvent event) {

}

});

}

@After //关闭客户端对象

public void close() throws InterruptedException {

zkClient.close();

}

}

操作zookeeper

获取子节点列表,不监听

@Test

public void ls() throws IOException, KeeperException, InterruptedException {

//用客户端对象做各种操作

List<String> children = zkClient.getChildren("/", false);

System.out.println(children);

}

获取子节点列表,并监听

@Test

public void lsAndWatch() throws KeeperException, InterruptedException {

List<String> children = zkClient.getChildren("/hadoop", new Watcher() {

@Override

public void process(WatchedEvent event) {

System.out.println(event);

}

});

System.out.println(children);

//因为设置了监听,所以当前线程不能结束

Thread.sleep(Long.MAX_VALUE);

}

启动前需创建/hadoop节点

create /hadoop

在/hadoop目录下创建子节点,就可以看到监听的打印日志

WatchedEvent state:SyncConnected type:NodeChildrenChanged path:/hadoop

创建子节点

@Test

public void create() throws KeeperException, InterruptedException {

//参数解读 1节点路径 2节点存储的数据

//3节点的权限(使用Ids选个OPEN即可) 4节点类型 短暂 持久 短暂带序号 持久带序号

String path = zkClient.create("/hadoop1", "hive".getBytes(), ZooDefs.Ids.OPEN_ACL_UNSAFE, CreateMode.PERSISTENT);

//创建临时节点

//String path = zkClient.create("/hadoop2", "hbase".getBytes(), ZooDefs.Ids.OPEN_ACL_UNSAFE, CreateMode.EPHEMERAL);

System.out.println(path);

//创建临时节点的话,需要线程阻塞,在客户端退出后临时节点就立即失效

//Thread.sleep(10000);

}

判断Znode是否存在

@Test

public void exist() throws Exception {

Stat stat = zkClient.exists("/hadoop", false);

System.out.println(stat == null ? "not exist" : "exist");

}

获取子节点存储的数据,不监听

@Test

public void get() throws KeeperException, InterruptedException {

//判断节点是否存在

Stat stat = zkClient.exists("/hadoop", false);

if (stat == null) {

System.out.println("节点不存在...");

return;

}

byte[] data = zkClient.getData("/hadoop", false, stat);

System.out.println(new String(data));

}

获取子节点存储的数据,并监听

@Test

public void getAndWatch() throws KeeperException, InterruptedException {

//判断节点是否存在

Stat stat = zkClient.exists("/hadoop", false);

if (stat == null) {

System.out.println("节点不存在...");

return;

}

byte[] data = zkClient.getData("/hadoop", new Watcher() {

@Override

public void process(WatchedEvent event) {

System.out.println(event);

}

}, stat);

System.out.println(new String(data));

//线程阻塞

Thread.sleep(Long.MAX_VALUE);

}

程序运行后改变/hadoop的值

[zk: localhost:2181(CONNECTED) 18] set /hadoop "112"

控制栏输出日志:

WatchedEvent state:SyncConnected type:NodeDataChanged path:/hadoop

设置节点的值

@Test

public void set() throws KeeperException, InterruptedException {

//判断节点是否存在

Stat stat = zkClient.exists("/hadoop", false);

if (stat == null) {

System.out.println("节点不存在...");

return;

}

//参数解读 1节点路径 2节点的值 3版本号

zkClient.setData("/hadoop", "bbb".getBytes(), stat.getVersion());

}

删除空节点

@Test

public void delete() throws KeeperException, InterruptedException {

//判断节点是否存在

Stat stat = zkClient.exists("/hadoop1", false);

if (stat == null) {

System.out.println("节点不存在...");

return;

}

zkClient.delete("/hadoop1", stat.getVersion());

}

删除非空节点,递归实现

//封装一个方法,方便递归调用

public void deleteAll(String path, ZooKeeper zk) throws KeeperException, InterruptedException {

//判断节点是否存在

Stat stat = zkClient.exists(path, false);

if (stat == null) {

System.out.println("节点不存在...");

return;

}

//先获取当前传入节点下的所有子节点

List<String> children = zk.getChildren(path, false);

if (children.isEmpty()) {

//说明传入的节点没有子节点,可以直接删除

zk.delete(path, stat.getVersion());

} else {

//如果传入的节点有子节点,循环所有子节点

for (String child : children) {

//删除子节点,但是不知道子节点下面还有没有子节点,所以递归调用

deleteAll(path + "/" + child, zk);

}

//删除完所有子节点以后,记得删除传入的节点

zk.delete(path, stat.getVersion());

}

}

//测试deleteAll

@Test

public void testDeleteAll() throws KeeperException, InterruptedException {

deleteAll("/hadoop",zkClient);

}

zookeeper应用案例(了解)

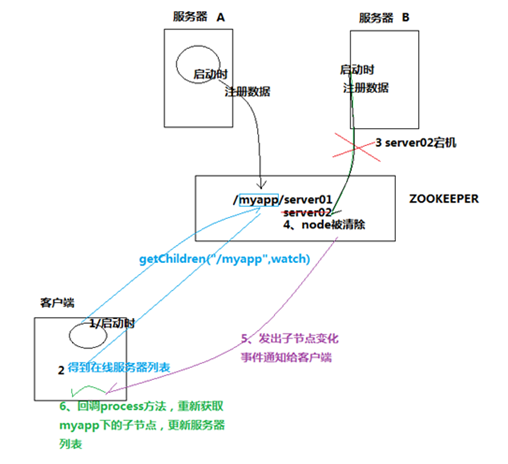

分布式应用(主节点HA)及客户端动态更新主节点状态

某分布式系统中,主节点可以有多台,可以动态上下线

任意一台客户端都能实时感知到主节点服务器的上下线

- 客户端实现

package com.zhangjk.zookeeper.ha;

import org.apache.zookeeper.WatchedEvent;

import org.apache.zookeeper.Watcher;

import org.apache.zookeeper.ZooKeeper;

import org.apache.zookeeper.data.Stat;

import java.util.ArrayList;

import java.util.List;

public class AppClient {

private String groupNode = "sgroup";

private ZooKeeper zk;

private Stat stat = new Stat();

private volatile List<String> serverList;

/**

* 连接zookeeper

*/

public void connectZookeeper() throws Exception {

zk = new ZooKeeper("hadoop102:2181,hadoop103:2181,hadoop104:2181", 5000, new Watcher() {

public void process(WatchedEvent event) {

// 如果发生了"/sgroup"节点下的子节点变化事件, 更新server列表, 并重新注册监听

if (event.getType() == Event.EventType.NodeChildrenChanged

&& ("/" + groupNode).equals(event.getPath())) {

try {

updateServerList();

} catch (Exception e) {

e.printStackTrace();

}

}

}

});

updateServerList();

}

/**

* 更新server列表

*/

private void updateServerList() throws Exception {

List<String> newServerList = new ArrayList<String>();

// 获取并监听groupNode的子节点变化

// watch参数为true, 表示监听子节点变化事件.

// 每次都需要重新注册监听, 因为一次注册, 只能监听一次事件, 如果还想继续保持监听, 必须重新注册

List<String> subList = zk.getChildren("/" + groupNode, true);

for (String subNode : subList) {

// 获取每个子节点下关联的server地址

byte[] data = zk.getData("/" + groupNode + "/" + subNode, false, stat);

newServerList.add(new String(data, "utf-8"));

}

// 替换server列表

serverList = newServerList;

System.out.println("server list updated: " + serverList);

}

/**

* client的工作逻辑写在这个方法中

* 此处不做任何处理, 只让client sleep

*/

public void handle() throws InterruptedException {

Thread.sleep(Long.MAX_VALUE);

}

public static void main(String[] args) throws Exception {

AppClient ac = new AppClient();

ac.connectZookeeper();

ac.handle();

}

}

- 服务器端实现

package com.zhangjk.zookeeper.ha;

import org.apache.zookeeper.*;

public class AppServer {

private String groupNode = "sgroup";

private String subNode = "sub";

/**

* 连接zookeeper

* @param address server的地址

*/

public void connectZookeeper(String address) throws Exception {

ZooKeeper zk = new ZooKeeper("hadoop102:2181,hadoop103:2181,hadoop104:2181", 5000, new Watcher() {

public void process(WatchedEvent event) {

// 不做处理

}

});

// 在"/sgroup"下创建子节点

// 子节点的类型设置为EPHEMERAL_SEQUENTIAL, 表明这是一个临时节点, 且在子节点的名称后面加上一串数字后缀

// 将server的地址数据关联到新创建的子节点上

String createdPath = zk.create("/" + groupNode + "/" + subNode, address.getBytes("utf-8"),

ZooDefs.Ids.OPEN_ACL_UNSAFE, CreateMode.EPHEMERAL_SEQUENTIAL);

System.out.println("create: " + createdPath);

}

/**

* server的工作逻辑写在这个方法中

* 此处不做任何处理, 只让server sleep

*/

public void handle() throws InterruptedException {

Thread.sleep(Long.MAX_VALUE);

}

public static void main(String[] args) throws Exception {

// 在参数中指定server的地址

if (args.length == 0) {

System.err.println("The first argument must be server address");

System.exit(1);

}

AppServer as = new AppServer();

as.connectZookeeper(args[0]);

as.handle();

}

}

分布式共享锁的简单实现

- 客户端A

package com.zhangjk.zookeeper.distsharelock;

import org.apache.zookeeper.*;

import org.apache.zookeeper.data.Stat;

import java.util.Collections;

import java.util.List;

import java.util.concurrent.CountDownLatch;

public class DistributedClient {

// 超时时间

private static final int SESSION_TIMEOUT = 5000;

// zookeeper server列表

private String hosts = "hadoop102:2181,hadoop103:2181,hadoop104:2181";

private String groupNode = "locks";

private String subNode = "sub";

private ZooKeeper zk;

// 当前client创建的子节点

private String thisPath;

// 当前client等待的子节点

private String waitPath;

private CountDownLatch latch = new CountDownLatch(1);

/**

* 连接zookeeper

*/

public void connectZookeeper() throws Exception {

zk = new ZooKeeper(hosts, SESSION_TIMEOUT, new Watcher() {

public void process(WatchedEvent event) {

try {

// 连接建立时, 打开latch, 唤醒wait在该latch上的线程

if (event.getState() == Event.KeeperState.SyncConnected) {

latch.countDown();

}

// 发生了waitPath的删除事件

if (event.getType() == Event.EventType.NodeDeleted && event.getPath().equals(waitPath)) {

doSomething();

}

} catch (Exception e) {

e.printStackTrace();

}

}

});

// 等待连接建立

latch.await();

// 创建子节点

thisPath = zk.create("/" + groupNode + "/" + subNode, null, ZooDefs.Ids.OPEN_ACL_UNSAFE,

CreateMode.EPHEMERAL_SEQUENTIAL);

// wait一小会, 让结果更清晰一些

Thread.sleep(10);

// 注意, 没有必要监听"/locks"的子节点的变化情况

List<String> childrenNodes = zk.getChildren("/" + groupNode, false);

// 列表中只有一个子节点, 那肯定就是thisPath, 说明client获得锁

if (childrenNodes.size() == 1) {

doSomething();

} else {

String thisNode = thisPath.substring(("/" + groupNode + "/").length());

// 排序

Collections.sort(childrenNodes);

int index = childrenNodes.indexOf(thisNode);

if (index == -1) {

// never happened

} else if (index == 0) {

// inddx == 0, 说明thisNode在列表中最小, 当前client获得锁

doSomething();

} else {

// 获得排名比thisPath前1位的节点

this.waitPath = "/" + groupNode + "/" + childrenNodes.get(index - 1);

// 在waitPath上注册监听器, 当waitPath被删除时, zookeeper会回调监听器的process方法

zk.getData(waitPath, true, new Stat());

}

}

}

private void doSomething() throws Exception {

try {

System.out.println("gain lock: " + thisPath);

Thread.sleep(2000);

// do something

} finally {

System.out.println("finished: " + thisPath);

// 将thisPath删除, 监听thisPath的client将获得通知

// 相当于释放锁

zk.delete(this.thisPath, -1);

}

}

public static void main(String[] args) throws Exception {

for (int i = 0; i < 10; i++) {

new Thread() {

public void run() {

try {

DistributedClient dl = new DistributedClient();

dl.connectZookeeper();

} catch (Exception e) {

e.printStackTrace();

}

}

}.start();

}

Thread.sleep(Long.MAX_VALUE);

}

}

- 分布式多进程模式实现

package com.zhangjk.zookeeper.distsharelock;

import org.apache.zookeeper.*;

import java.util.Collections;

import java.util.List;

import java.util.Random;

public class DistributedClientMy {

// 超时时间

private static final int SESSION_TIMEOUT = 5000;

// zookeeper server列表

private String hosts = "hadoop102:2181,hadoop103:2181,hadoop104:2181";

private String groupNode = "locks";

private String subNode = "sub";

private boolean haveLock = false;

private ZooKeeper zk;

// 当前client创建的子节点

private volatile String thisPath;

/**

* 连接zookeeper

*/

public void connectZookeeper() throws Exception {

zk = new ZooKeeper("spark01:2181", SESSION_TIMEOUT, new Watcher() {

public void process(WatchedEvent event) {

try {

// 子节点发生变化

if (event.getType() == Watcher.Event.EventType.NodeChildrenChanged && event.getPath().equals("/" + groupNode)) {

// thisPath是否是列表中的最小节点

List<String> childrenNodes = zk.getChildren("/" + groupNode, true);

String thisNode = thisPath.substring(("/" + groupNode + "/").length());

// 排序

Collections.sort(childrenNodes);

if (childrenNodes.indexOf(thisNode) == 0) {

doSomething();

thisPath = zk.create("/" + groupNode + "/" + subNode, null, ZooDefs.Ids.OPEN_ACL_UNSAFE,

CreateMode.EPHEMERAL_SEQUENTIAL);

}

}

} catch (Exception e) {

e.printStackTrace();

}

}

});

// 创建子节点

thisPath = zk.create("/" + groupNode + "/" + subNode, null, ZooDefs.Ids.OPEN_ACL_UNSAFE,

CreateMode.EPHEMERAL_SEQUENTIAL);

// wait一小会, 让结果更清晰一些

Thread.sleep(new Random().nextInt(1000));

// 监听子节点的变化

List<String> childrenNodes = zk.getChildren("/" + groupNode, true);

// 列表中只有一个子节点, 那肯定就是thisPath, 说明client获得锁

if (childrenNodes.size() == 1) {

doSomething();

thisPath = zk.create("/" + groupNode + "/" + subNode, null, ZooDefs.Ids.OPEN_ACL_UNSAFE,

CreateMode.EPHEMERAL_SEQUENTIAL);

}

}

/**

* 共享资源的访问逻辑写在这个方法中

*/

private void doSomething() throws Exception {

try {

System.out.println("gain lock: " + thisPath);

Thread.sleep(2000);

// do something

} finally {

System.out.println("finished: " + thisPath);

// 将thisPath删除, 监听thisPath的client将获得通知

// 相当于释放锁

zk.delete(this.thisPath, -1);

}

}

public static void main(String[] args) throws Exception {

DistributedClientMy dl = new DistributedClientMy();

dl.connectZookeeper();

Thread.sleep(Long.MAX_VALUE);

}

}

本文作者:莲藕淹,转载请注明原文链接:https://www.cnblogs.com/meanshift/p/15732822.html

【推荐】国内首个AI IDE,深度理解中文开发场景,立即下载体验Trae

【推荐】编程新体验,更懂你的AI,立即体验豆包MarsCode编程助手

【推荐】抖音旗下AI助手豆包,你的智能百科全书,全免费不限次数

【推荐】轻量又高性能的 SSH 工具 IShell:AI 加持,快人一步

· 开发者必知的日志记录最佳实践

· SQL Server 2025 AI相关能力初探

· Linux系列:如何用 C#调用 C方法造成内存泄露

· AI与.NET技术实操系列(二):开始使用ML.NET

· 记一次.NET内存居高不下排查解决与启示

· 开源Multi-agent AI智能体框架aevatar.ai,欢迎大家贡献代码

· Manus重磅发布:全球首款通用AI代理技术深度解析与实战指南

· 被坑几百块钱后,我竟然真的恢复了删除的微信聊天记录!

· 没有Manus邀请码?试试免邀请码的MGX或者开源的OpenManus吧

· 园子的第一款AI主题卫衣上架——"HELLO! HOW CAN I ASSIST YOU TODAY