targetcli configuration lost after reboot; restoreconfig fails with "device already in use"

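After the targetcli LUN was exported to the client and the client created an LV on it with LVM, the targetcli configuration was lost the next time the server rebooted.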

First, the target service must be enabled so that it starts at boot:

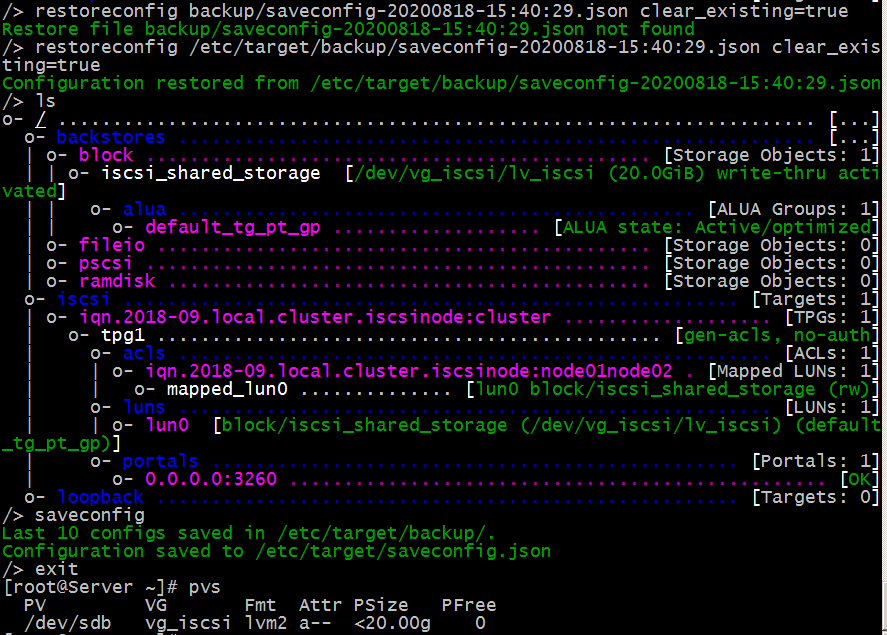
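A minimal sketch of the enable step, assuming the unit is named target.service as packaged with targetcli on CentOS/RHEL 7 (where, as far as I know, it runs `targetctl restore` on /etc/target/saveconfig.json at boot). The DRY_RUN switch and the function names are hypothetical helpers added for this sketch; with DRY_RUN=1 the commands are only printed.

```shell
#!/usr/bin/env bash
# Sketch: make sure the LIO target service restores the saved configuration at boot.
# Assumption: the systemd unit is target.service (CentOS/RHEL 7 targetcli packaging).
# Hypothetical helper: set DRY_RUN=1 to print the commands instead of running them.

run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

enable_target() {
  run systemctl enable target       # start at boot so the saved config is restored
  run systemctl is-enabled target   # should print "enabled"
}
```

Usage: run `enable_target` as root, or `DRY_RUN=1 enable_target` to see what it would do. The sketch deliberately does not start the service, since restoring on top of a live configuration is not what you want right after configuring targetcli by hand.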

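Beyond that, I went through a lot of material, none of which explained the problem clearly, so I turned to English sources and found someone saying that on CentOS 7 you have to use the second-layer LVM. So I reconfigured the backstore on the LV the client had created. The backstore could be created that way, but the data the client had on the mapped disk was lost, so that is not the solution either. In the end, an LVM filter solved the problem.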

/> ls

o- / ..................................................................... [...]

o- backstores .......................................................... [...]

| o- block .............................................. [Storage Objects: 0]

| o- fileio ............................................. [Storage Objects: 0]

| o- pscsi .............................................. [Storage Objects: 0]

| o- ramdisk ............................................ [Storage Objects: 0]

o- iscsi ........................................................ [Targets: 1]

| o- iqn.2018-09.local.cluster.iscsinode:cluster ................... [TPGs: 1]

| o- tpg1 .............................................. [gen-acls, no-auth]

| o- acls ...................................................... [ACLs: 1]

| | o- iqn.2018-09.local.cluster.iscsinode:node01node02 . [Mapped LUNs: 0]

| o- luns ...................................................... [LUNs: 0]

| o- portals ................................................ [Portals: 1]

| o- 0.0.0.0:3260 ................................................. [OK]

o- loopback ..................................................... [Targets: 0]

/> restoreconfig backup/saveconfig-20200818-15:40:29.json clear_existing=true

Configuration restored, 3 recoverable errors:

Could not create StorageObject iscsi_shared_storage: Cannot configure StorageObject because device /dev/vg_iscsi/lv_iscsi is already in use, skipped

Could not find matching StorageObject for LUN 0, skipped

Could not find matching TPG LUN 0 for MappedLUN 0, skipped

[root@Server target]# pvs

PV VG Fmt Attr PSize PFree

/dev/sda2 centos lvm2 a-- <19.00g 0

/dev/sdb vg_iscsi lvm2 a-- <20.00g 0

/dev/vg_iscsi/lv_iscsi vg_apache lvm2 a-- 19.99g 0

[root@Server target]# targetcli

targetcli shell version 2.1.fb49

Copyright 2011-2013 by Datera, Inc and others.

For help on commands, type 'help'.

/backstores/block> create iscsi_shared_storage /dev/vg_apache/lv_apache

Created block storage object iscsi_shared_storage using /dev/vg_apache/lv_apache.

/backstores/block> ls

o- block .................................................. [Storage Objects: 1]

o- iscsi_shared_storage [/dev/vg_apache/lv_apache (20.0GiB) write-thru deactivated]

o- alua ................................................... [ALUA Groups: 1]

o- default_tg_pt_gp ....................... [ALUA state: Active/optimized]

/backstores/block> cd ..

/backstores> cd ..

/> ls

o- / ..................................................................... [...]

o- backstores .......................................................... [...]

| o- block .............................................. [Storage Objects: 1]

| | o- iscsi_shared_storage [/dev/vg_apache/lv_apache (20.0GiB) write-thru deactivated]

| | o- alua ............................................... [ALUA Groups: 1]

| | o- default_tg_pt_gp ................... [ALUA state: Active/optimized]

| o- fileio ............................................. [Storage Objects: 0]

| o- pscsi .............................................. [Storage Objects: 0]

| o- ramdisk ............................................ [Storage Objects: 0]

o- iscsi ........................................................ [Targets: 1]

| o- iqn.2018-09.local.cluster.iscsinode:cluster ................... [TPGs: 1]

| o- tpg1 .............................................. [gen-acls, no-auth]

| o- acls ...................................................... [ACLs: 1]

| | o- iqn.2018-09.local.cluster.iscsinode:node01node02 . [Mapped LUNs: 0]

| o- luns ...................................................... [LUNs: 0]

| o- portals ................................................ [Portals: 1]

| o- 0.0.0.0:3260 ................................................. [OK]

o- loopback ..................................................... [Targets: 0]

/> cd iscsi/iqn.2018-09.local.cluster.iscsinode:cluster/tpg1/luns

/iscsi/iqn.20...ter/tpg1/luns> create /backstores/block/iscsi_shared_storage

Created LUN 0.

Created LUN 0->0 mapping in node ACL iqn.2018-09.local.cluster.iscsinode:node01node02

/iscsi/iqn.20...ter/tpg1/luns> cd

/iscsi/iqn.20...ter/tpg1/luns>

/iscsi/iqn.20...ter/tpg1/luns>

/iscsi/iqn.20...ter/tpg1/luns> cd /

/> saveconfig

Configuration saved to /etc/target/saveconfig.json

/> exit

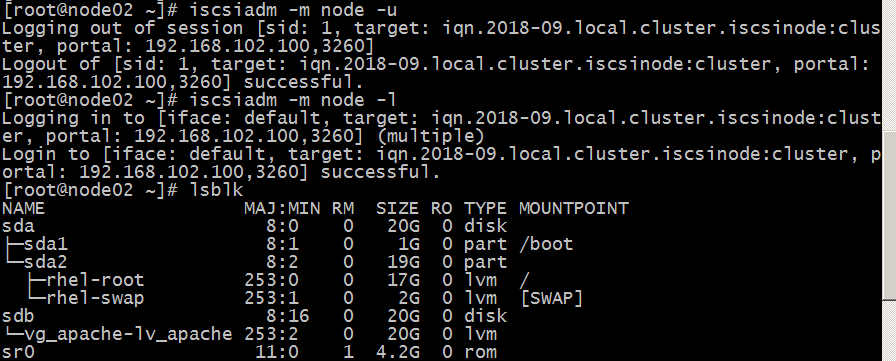

After the client reloads the LUN, the original data is gone, so this approach is wrong:

[root@node02 ~]# netstat -ano |grep 3260

[root@node02 ~]# iscsiadm -m node -l

Logging in to [iface: default, target: iqn.2018-09.local.cluster.iscsinode:cluster, portal: 192.168.102.100,3260] (multiple)

Login to [iface: default, target: iqn.2018-09.local.cluster.iscsinode:cluster, portal: 192.168.102.100,3260] successful.

[root@node02 ~]# netstat -ano |grep 3260

tcp 0 0 192.168.102.12:39910 192.168.102.100:3260 ESTABLISHED off (0.00/0/0)

[root@node02 ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 20G 0 disk

├─sda1 8:1 0 1G 0 part /boot

└─sda2 8:2 0 19G 0 part

├─rhel-root 253:0 0 17G 0 lvm /

└─rhel-swap 253:1 0 2G 0 lvm [SWAP]

sdb 8:16 0 20G 0 disk

sr0 11:0 1 4.2G 0 rom

The fix:

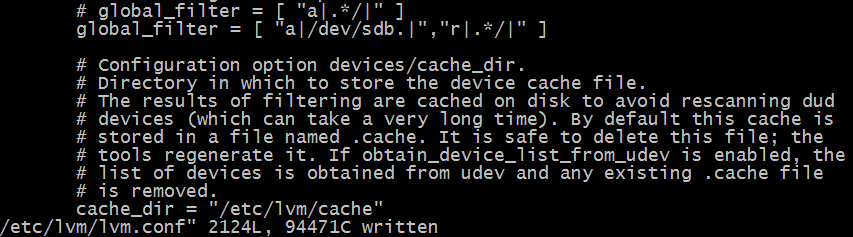

On the server, set global_filter in the devices section of /etc/lvm/lvm.conf:

[root@Server target]# vi /etc/lvm/lvm.conf

global_filter = [ "a|/dev/sdb.|","r|.*/|" ]

Reboot the host. Afterwards, the original, correct configuration can be restored.
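The server-side steps around the filter change can be sketched as below. Assumptions: the host builds its initramfs with dracut (CentOS/RHEL 7), and the initramfs carries its own copy of lvm.conf, so it is rebuilt after the edit; the backup path is whichever file targetcli wrote to /etc/target/backup/. The DRY_RUN switch and function names are hypothetical helpers for this sketch.

```shell
#!/usr/bin/env bash
# Sketch of the server-side steps after editing global_filter in /etc/lvm/lvm.conf.
# Assumptions: dracut builds the initramfs; targetcli backups live in /etc/target/backup/.
# Hypothetical helper: set DRY_RUN=1 to print the commands instead of running them.

run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

check_lvm_filter() {
  run lvmconfig devices/global_filter   # show the filter LVM actually uses
  run pvs                               # nested PVs such as /dev/vg_iscsi/lv_iscsi should be gone
}

rebuild_initramfs() {
  run dracut -f                         # keep the early-boot copy of lvm.conf in sync
}

restore_target_config() {
  local file="$1"                       # e.g. a saveconfig-*.json from /etc/target/backup/
  run targetcli restoreconfig "$file" clear_existing=true
  run targetcli saveconfig              # overwrite the wrong saveconfig.json written earlier
}
```

A possible order: `check_lvm_filter`, `rebuild_initramfs`, reboot, then `restore_target_config /etc/target/backup/saveconfig-20200818-15:40:29.json`. The final `saveconfig` matters here because the workaround tried earlier saved a configuration pointing at /dev/vg_apache/lv_apache, which would otherwise be restored on the next boot.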

On the client, log out of the iSCSI session and log back in. The data is back.
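A minimal sketch of the client-side re-login, using the same `iscsiadm -m node` mode as the login shown earlier; `-u` logs out of and `-l` logs in to all recorded targets. The DRY_RUN switch and the function name are hypothetical helpers for this sketch.

```shell
#!/usr/bin/env bash
# Sketch: drop the iSCSI session on the client and log in again so the LUN is rescanned.
# Hypothetical helper: set DRY_RUN=1 to print the commands instead of running them.

run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

relogin_iscsi() {
  run iscsiadm -m node -u   # log out of all recorded target sessions
  run iscsiadm -m node -l   # log back in to all recorded targets
  run lsblk                 # the LUN should appear as a disk again
}
```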

If you use an LVM-managed storage pool for backstore devices, you should make certain that LVM/device-mapper ignores the second-layer VGs/LVs.

What I mean by second-layer VGs/LVs, for example:

Assume that one of the LVs below (DISK_1) has another VG initialized on it by the iSCSI client and used for the services on the client. There are then two VG layers on one disk, one VG inside another.

If your LVM subsystem scans the first-layer LVs for VGs, the newly discovered second-layer VGs and the LVs within them are activated on the target server. Because those LVs are mapped on the target server (by device-mapper), they hold the first-layer LVs open, and the LIO target modules fail to load the first-layer LVs as backstores.
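One way to spot second-layer VGs on the target server is to look for PVs whose path is itself an LV of another VG, such as /dev/vg_iscsi/lv_iscsi carrying vg_apache in the server's pvs output earlier. The sketch assumes input shaped like `pvs --noheadings -o pv_name,vg_name` and only recognizes /dev/<vg>/<lv> names (not /dev/mapper/<vg>-<lv>); the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: list PVs that are LVs of another VG (i.e. second-layer VGs).
# Input on stdin: "pv_name vg_name" per line, as from pvs --noheadings -o pv_name,vg_name.
# Limitation: only the /dev/<vg>/<lv> path form is recognized.

find_nested_pvs() {
  awk '
    { pv[NR] = $1; vg[NR] = $2; known[$2] = 1 }   # remember every VG name seen
    END {
      for (i = 1; i <= NR; i++) {
        # "/dev/<vg>/<lv>" splits into "", "dev", "<vg>", "<lv>"
        n = split(pv[i], part, "/")
        if (n == 4 && part[2] == "dev" && (part[3] in known))
          print pv[i] " (LV of " part[3] ") holds VG " vg[i]
      }
    }'
}
```

Usage: `pvs --noheadings -o pv_name,vg_name | find_nested_pvs`. For the server above, before the filter is applied, this should report /dev/vg_iscsi/lv_iscsi holding vg_apache; once the filter hides the nested PV it prints nothing.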

[root@target ~]# pvs

PV VG Fmt Attr PSize PFree

/dev/mapper/mpatha STORAGE_POOL lvm2 a-- 12.00t 2.50t

[root@target ~]# lvs

LV VG Attr LSize

DISK_1 STORAGE_POOL -wi-ao---- 5.00t

DISK_2 STORAGE_POOL -wi-ao---- 1.00t

DISK_3 STORAGE_POOL -wi-ao---- 2.50t

DISK_4 STORAGE_POOL -wi-ao---- 1.00t

[root@target ~]#

LVM scans for VGs and LVs while the OS boots. That is why you didn't notice the issue in the first place.

You should set an LVM filter that limits which devices are scanned for VGs. See the lvm.conf manual for global_filter. With this configuration you can discard second-layer VGs. Below is a sample for the storage architecture above that scans only the multipath PVs for VGs and discards all other block devices.

[root@target ~]# diff /etc/lvm/lvm.conf{,.orginal}

152d151

< global_filter = [ "a|/dev/mapper/mpath.|", "r|.*/|" ]

[root@target ~]#
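To reason about which devices such a filter keeps, here is a rough model of the evaluation rules described in lvm.conf(5): for each path name of a device, the first matching entry decides; if any name hits an "a" entry first the device is accepted, if names only hit "r" entries it is rejected, and if nothing matches it is accepted. Assumption: bash's ERE matching (`[[ =~ ]]`) behaves like LVM's own regex engine for simple patterns like these. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Rough model of LVM filter evaluation (lvm.conf(5) rules), for reasoning only.
# Usage: lvm_filter_decision ENTRY... -- PATHNAME...
#   ENTRY    e.g. 'a|/dev/mapper/mpath.|' or 'r|.*/|'
#   PATHNAME all the names of ONE block device
# Assumption: bash ERE matching approximates LVM's regex engine for these patterns.

lvm_filter_decision() {
  local -a entries=()
  while [ "$#" -gt 0 ] && [ "$1" != "--" ]; do
    entries+=("$1")
    shift
  done
  [ "$#" -gt 0 ] && shift              # drop the "--" separator

  local rejected=0 path entry action delim regex
  for path in "$@"; do
    for entry in "${entries[@]}"; do
      action="${entry:0:1}"            # 'a' = accept, 'r' = reject
      delim="${entry:1:1}"             # usually '|'
      regex="${entry:2}"
      regex="${regex%"$delim"}"        # strip the closing delimiter
      if [[ $path =~ $regex ]]; then
        # The first matching entry decides for this path name.
        if [ "$action" = "a" ]; then
          echo accept                  # any name accepted first => device accepted
          return 0
        fi
        rejected=1
        break
      fi
    done
  done
  if [ "$rejected" -eq 1 ]; then
    echo reject                        # names hit only 'r' entries
  else
    echo accept                        # no name matched anything => default accept
  fi
}
```

Usage: `lvm_filter_decision 'a|/dev/mapper/mpath.|' 'r|.*/|' -- /dev/STORAGE_POOL/DISK_1` prints `reject`, which is exactly what keeps the client's VG on DISK_1 from being activated on the target. Under this model, the server-side pattern `a|/dev/sdb.|` from earlier, as written, does not match the bare path /dev/sdb (the `.` needs one more character), and /dev/sda2, which holds the centos VG, is rejected too. It may be worth confirming with `pvs` after the change, or listing every wanted PV explicitly, for example `"a|^/dev/sda2$|", "a|^/dev/sdb$|", "r|.*/|"`.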

You can simply use a script that runs "vgchange -an 2nd_layer_VG" after boot and then restores the LIO target configuration. However, I suggest using LVM's global_filter feature.
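The script-based alternative could look roughly like this. Assumptions: the second-layer VG name is passed in (vg_apache in the server's pvs output earlier is the client-created VG), and the saved configuration is /etc/target/saveconfig.json; how the function gets run after boot (for example from a oneshot unit) is left open. The DRY_RUN switch and function name are hypothetical helpers for this sketch.

```shell
#!/usr/bin/env bash
# Sketch of the post-boot workaround: deactivate the second-layer VG, then restore LIO.
# Assumptions: $1 is the nested VG name; $2 defaults to /etc/target/saveconfig.json.
# Hypothetical helper: set DRY_RUN=1 to print the commands instead of running them.

run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

deactivate_and_restore() {
  local nested_vg="$1"
  local config="${2:-/etc/target/saveconfig.json}"
  run vgchange -an "$nested_vg"   # release the nested LVs that hold the backing LV open
  run targetcli restoreconfig "$config" clear_existing=true
}
```

Usage: `deactivate_and_restore vg_apache`. Unlike global_filter, this has to run after every boot and after any rescan that reactivates the nested VG, which is one reason to prefer the filter.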

Note: Before CentOS 7/Red Hat 7, initializing second-layer LVs caused no problem; targetd was still able to load them as LUNs. However, the new linux-iscsi (LIO) fails in that situation. I didn't research the issue further.

Regards...

Zhejiang Public Security Network Filing No. 33010602011771