Lagrange Dual Theory for NLP

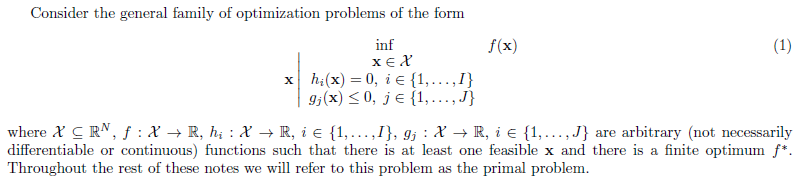

- Classic form of nonlinear programming

F1: \(f,h,g\) are arbitrary (not necessarily differentiable or continuous) functions.

F2:

F3:

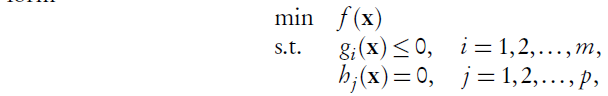

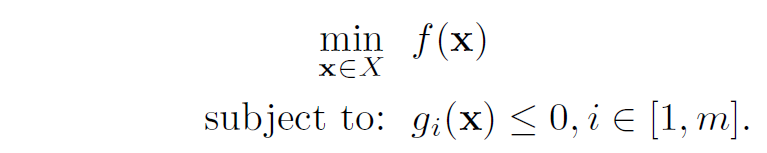

As \(h(x)=0\) can be equivalently written as two inequality constraints \(h(x)\leq 0\) and \(-h(x)\leq 0\), we only consider

\(\color{red}{\mbox{Denote the primal domain by}}\) \(D=X\cap \{x \mid g(x)\leq 0,\ h(x)=0\}\).

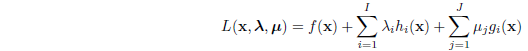

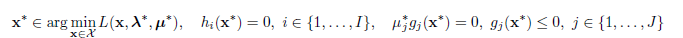

- Lagrange function and its dual

1) Lagrange function: \(\mu \geq 0\) is called the Lagrange multiplier.

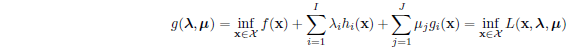

2) Lagrange dual function

[Remark] Observe that the minimization that defines the dual function is carried out over all \(x \in X\), rather than just over those \(x\) within the constraint set. For this reason, we can prove that for any primal feasible \(x\in D\) and any dual feasible \((\lambda, \mu \geq 0)\), the value of the dual function is no larger than the primal objective \(f(x)\) (weak duality).

So we have for \(\mu \geq 0\) and \(x\in D\),
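The inequality chain behind this remark can be written out as follows. This is a sketch under assumed notation: \(L(x,\lambda,\mu)\) for the Lagrange function and \(d(\lambda,\mu)\) for the dual function are placeholder names, since the original formulas are only available in the figures. For \(x\in D\) and \(\mu\geq 0\):

\[
d(\lambda,\mu)=\inf_{z\in X} L(z,\lambda,\mu)\;\leq\; L(x,\lambda,\mu)=f(x)+\lambda^T h(x)+\mu^T g(x)\;\leq\; f(x).
\]

The first inequality holds because \(x\in D\subseteq X\), and the second because \(h(x)=0\), \(g(x)\leq 0\) and \(\mu\geq 0\). Taking the supremum over dual feasible \((\lambda,\mu)\) on the left and the infimum over \(x\in D\) on the right gives

\[
\sup_{\lambda,\ \mu\geq 0} d(\lambda,\mu)\;\leq\;\inf_{x\in D} f(x).
\]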

If the primal is a convex optimization problem, then the KKT conditions are sufficient for optimality.

- Strong duality: convex optimization with Slater's condition

\(f\) and \(g\) are convex, \(h\) is affine, and there exists a point \(x\) in the relative interior of the constraint set at which all of the (nonlinear convex) inequality constraints hold with strict inequality.

In this case the duality gap vanishes, and the KKT conditions are both necessary and sufficient for \(x\) to be a global solution of the primal problem.
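As a small numerical illustration of a vanishing duality gap, the sketch below uses a toy problem that is not from the original post: minimize \(x^2\) subject to \(1-x\leq 0\). The function names `f`, `g` and `dual` are hypothetical, and both optima are found by brute force on a grid rather than by a real solver.

```python
import numpy as np

# Toy convex problem (an illustrative assumption, not from the original post):
#   minimize f(x) = x^2   subject to   g(x) = 1 - x <= 0
# Slater's condition holds because x = 2 gives g(2) = -1 < 0.

def f(x):
    return x ** 2

def g(x):
    return 1.0 - x

def dual(mu):
    # d(mu) = min over all real x of x^2 + mu * (1 - x).
    # The unconstrained minimizer is x = mu / 2, so d(mu) = mu - mu^2 / 4.
    x_min = mu / 2.0
    return f(x_min) + mu * g(x_min)

# Primal optimum by brute force over a grid of feasible points (x >= 1).
xs = np.linspace(1.0, 5.0, 4001)
primal_opt = f(xs).min()

# Dual optimum by brute force over a grid of multipliers mu >= 0.
mus = np.linspace(0.0, 5.0, 5001)
dual_vals = dual(mus)
dual_opt = dual_vals.max()
mu_star = mus[dual_vals.argmax()]

duality_gap = primal_opt - dual_opt
print(f"primal optimum = {primal_opt:.6f}")
print(f"dual optimum   = {dual_opt:.6f} at mu = {mu_star:.3f}")
print(f"duality gap    = {duality_gap:.2e}")
```

On this problem every dual value stays below the primal optimum, and the best dual value matches it, which is what strong duality predicts when Slater's condition holds.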

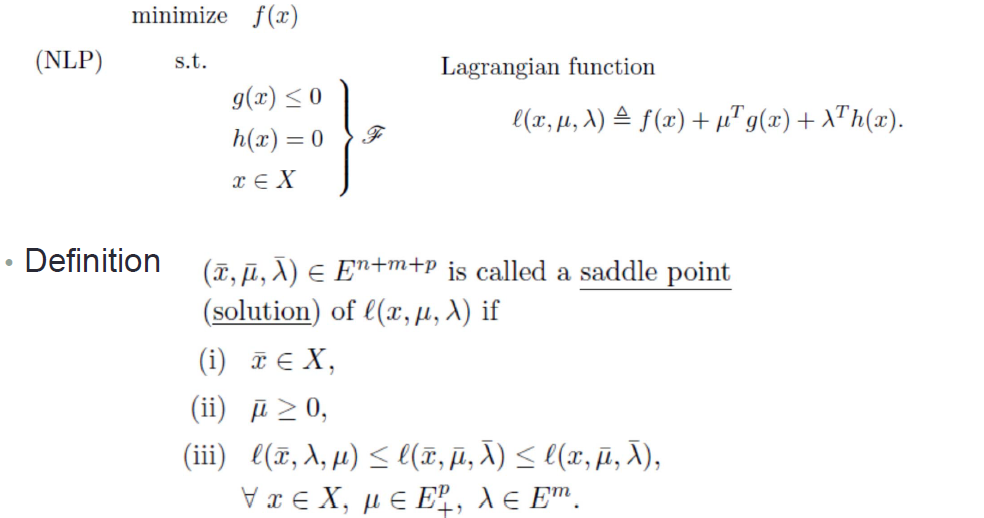

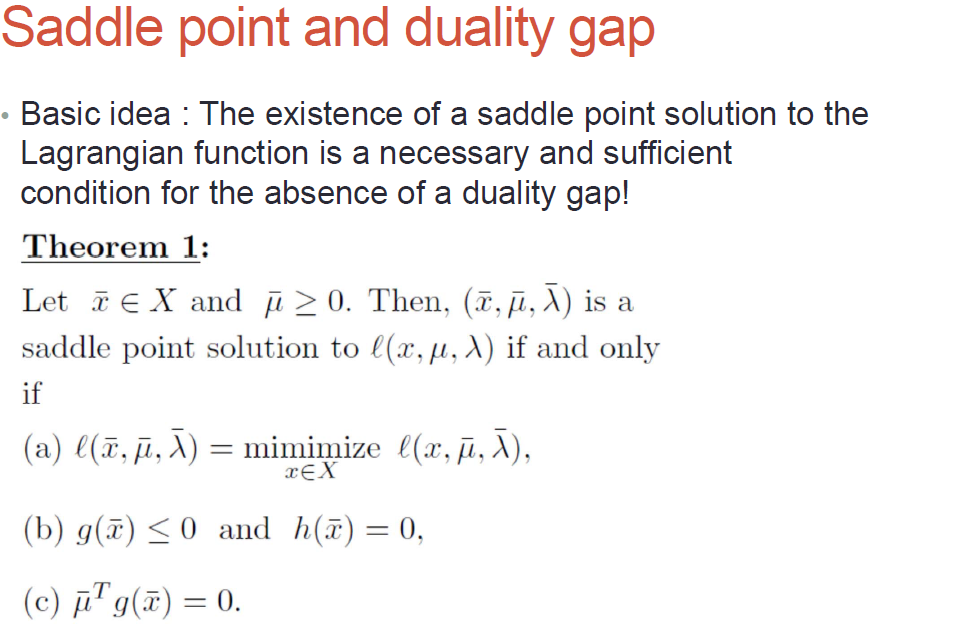

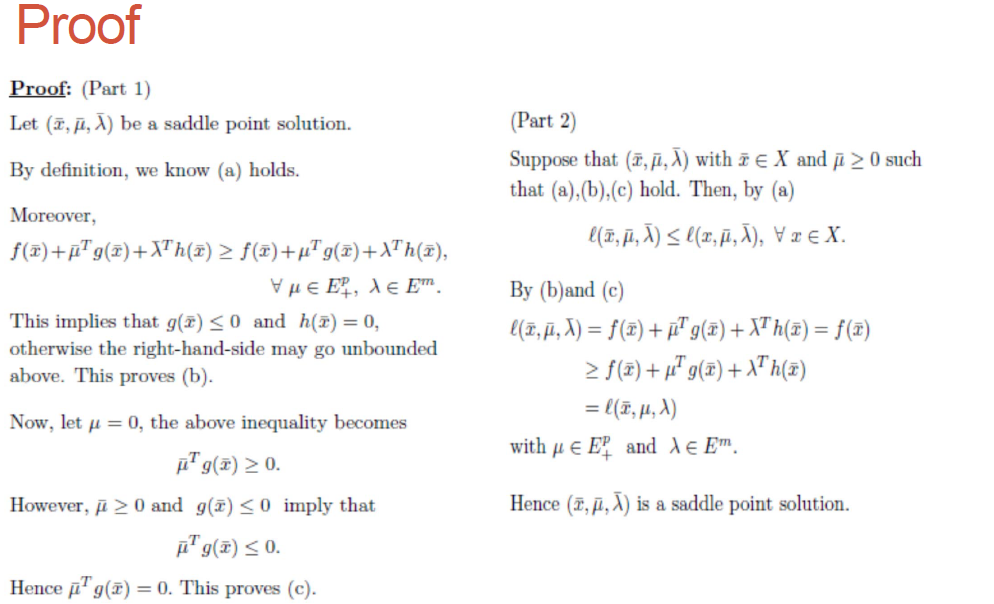

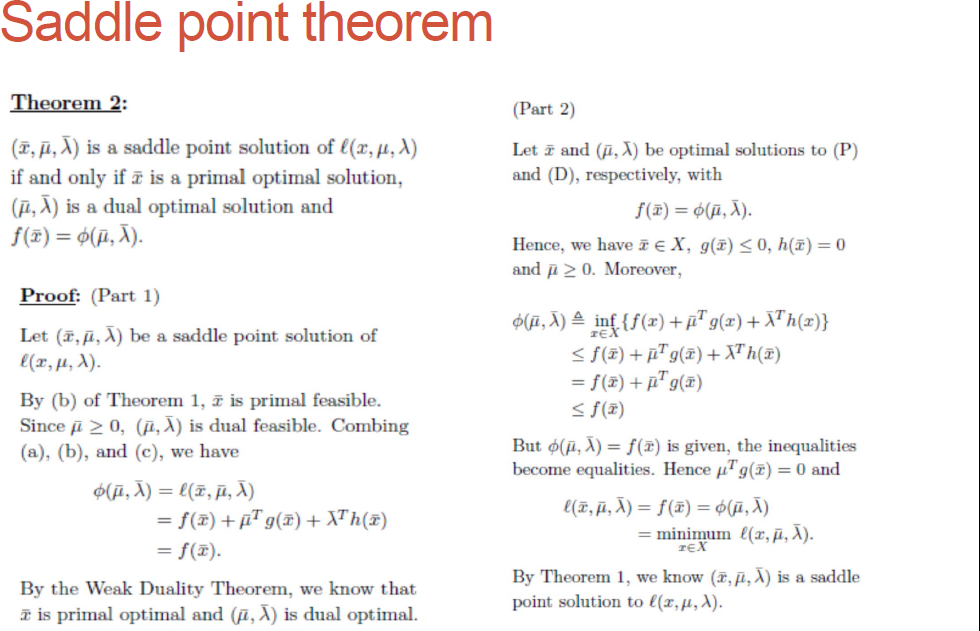

- Saddle point and duality gap


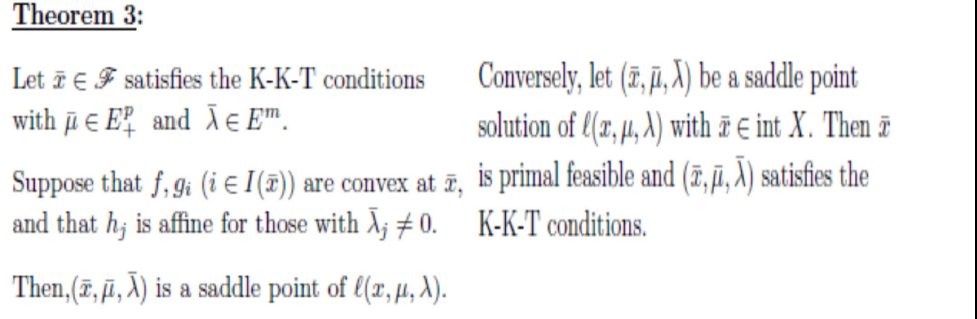

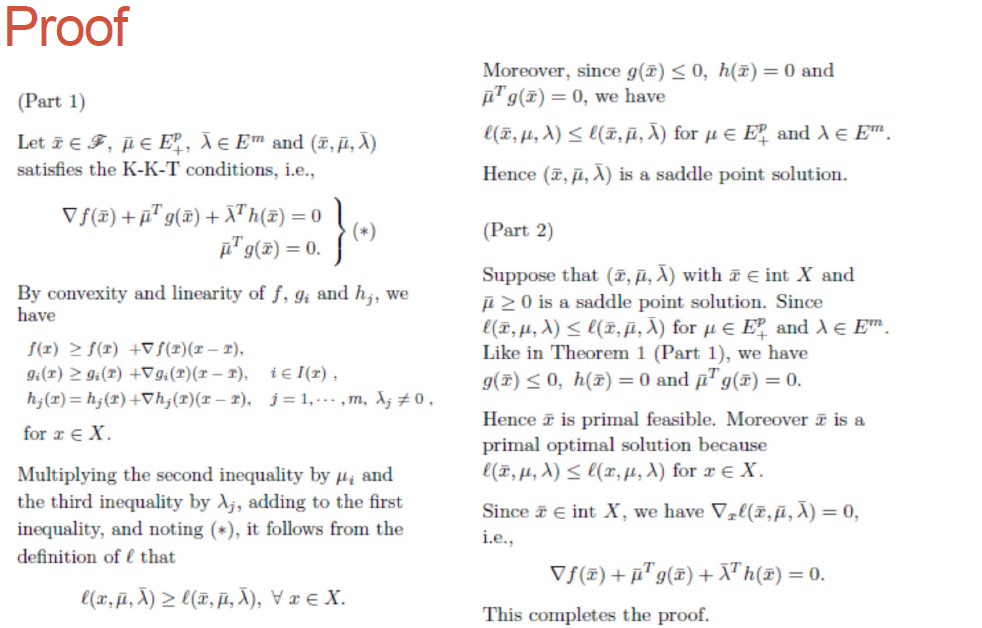
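A saddle point can also be checked numerically. The sketch below assumes the standard definition, \(L(x^*,\mu)\leq L(x^*,\mu^*)\leq L(x,\mu^*)\) for all \(x\in X\) and \(\mu\geq 0\), and reuses a toy problem that is not from the original post (minimize \(x^2\) subject to \(1-x\leq 0\), with \(x^*=1\) and \(\mu^*=2\)). The name `lagrangian` is a hypothetical helper.

```python
import numpy as np

def lagrangian(x, mu):
    # L(x, mu) = f(x) + mu * g(x) with f(x) = x^2 and g(x) = 1 - x
    # (toy problem chosen for illustration only).
    return x ** 2 + mu * (1.0 - x)

# Candidate saddle point for the toy problem.
x_star, mu_star = 1.0, 2.0
saddle_value = lagrangian(x_star, mu_star)

# Grids standing in for "all x in X" and "all mu >= 0".
xs = np.linspace(-3.0, 5.0, 801)
mus = np.linspace(0.0, 10.0, 1001)

# Left inequality: L(x*, mu) <= L(x*, mu*) for every mu >= 0.
left_ok = bool(np.all(lagrangian(x_star, mus) <= saddle_value + 1e-12))

# Right inequality: L(x*, mu*) <= L(x, mu*) for every x.
right_ok = bool(np.all(saddle_value <= lagrangian(xs, mu_star) + 1e-12))

print("saddle value:", saddle_value)
print("left inequality holds on grid:", left_ok)
print("right inequality holds on grid:", right_ok)
```

A grid check like this can only refute a saddle point, not prove one. Here both inequalities can also be confirmed by hand, since \(L(x^*,\mu)=1\) for every \(\mu\) and \(L(x,\mu^*)=(x-1)^2+1\).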

- Saddle point and KKT conditions

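\(\color{red}{\mbox{Remark:}}\) \(\mu^Tg(x)=0\), together with \(g(x)\leq 0\) and \(\mu\geq 0\), implies \(\mu_i g_i(x)=0\) for every \(i\), since each term \(\mu_i g_i(x)\) is nonpositive and the terms sum to zero.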

- A KKT point is an optimizer for convex optimization

For a convex problem, any point that satisfies the KKT conditions is an optimizer, whether or not Slater's condition holds. If Slater's condition does hold, then every optimizer must also satisfy the KKT conditions.
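The sufficiency claim can be illustrated with a small KKT check. The sketch below uses a differentiable toy problem that is not from the original post: minimize \((x_1-2)^2+(x_2-1)^2\) subject to \(x_1+x_2-2\leq 0\). The helper `kkt_residuals` and the candidate pair \(x=(1.5,0.5)\), \(\mu=1\) are assumptions made for illustration.

```python
import numpy as np

# Toy convex problem (illustrative assumption):
#   minimize (x1 - 2)^2 + (x2 - 1)^2  subject to  g(x) = x1 + x2 - 2 <= 0
CENTER = np.array([2.0, 1.0])
GRAD_G = np.array([1.0, 1.0])  # gradient of the affine constraint g

def f(x):
    return float(np.sum((x - CENTER) ** 2))

def grad_f(x):
    return 2.0 * (x - CENTER)

def g(x):
    return float(x[0] + x[1] - 2.0)

def kkt_residuals(x, mu):
    # Each entry is zero exactly when the corresponding KKT condition holds.
    return {
        "stationarity": float(np.linalg.norm(grad_f(x) + mu * GRAD_G)),
        "primal_violation": max(g(x), 0.0),
        "dual_violation": max(-mu, 0.0),
        "complementarity": abs(mu * g(x)),
    }

# Candidate primal point and multiplier.
x_candidate = np.array([1.5, 0.5])
mu_candidate = 1.0
residuals = kkt_residuals(x_candidate, mu_candidate)
is_kkt_point = all(r < 1e-9 for r in residuals.values())

# Sanity check: no randomly sampled feasible point does better.
rng = np.random.default_rng(0)
samples = rng.uniform(-5.0, 5.0, size=(2000, 2))
feasible = samples[samples.sum(axis=1) <= 2.0]
min_sampled = min(f(x) for x in feasible)

print("KKT residuals:", residuals)
print("is KKT point:", is_kkt_point)
print("f at candidate:", f(x_candidate), "best sampled feasible f:", min_sampled)
```

Because the problem is convex, passing the KKT check is enough to conclude that the candidate is a global minimizer. The random sampling is only a sanity check and plays no part in the argument.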
