Converting a Spark DataFrame to JSON

import org.apache.spark.sql.DataFrame
import org.apache.spark.sql.functions._
import org.apache.spark.sql.types._

// Convenience function for turning JSON strings into DataFrames.
def jsonToDataFrame(json: String, schema: StructType = null): DataFrame = {
  // SparkSessions are available with Spark 2.0+
  val reader = spark.read
  Option(schema).foreach(reader.schema)
  reader.json(sc.parallelize(Array(json)))
}

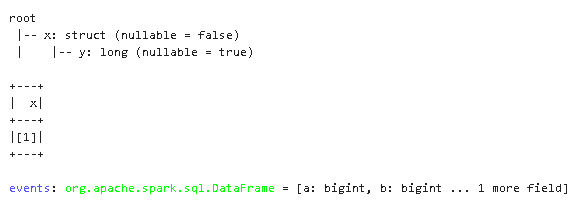

// Using a struct
val schema = new StructType().add("a", new StructType().add("b", IntegerType))
val events = jsonToDataFrame(""" { "a": { "b": 1 } } """, schema)
events.select("a.b").show()

val events = jsonToDataFrame(""" { "a": 1, "b": 2, "c": 3 } """)
events.select(struct('a as 'y) as 'x).printSchema()
events.select(struct('a as 'y) as 'x).show()

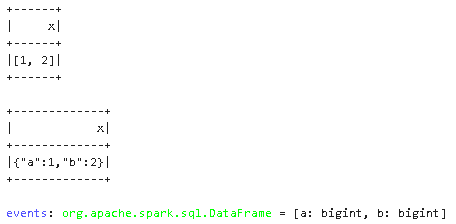

val events = jsonToDataFrame(""" { "a": 1, "b": 2 } """)
events.select((struct("*") as 'x)).show()
events.select(to_json(struct("*")) as 'x).show()

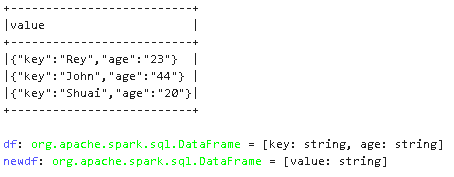

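// Build a small DataFrame with two string columns.
val df = Seq(("Rey", "23"), ("John", "44"), ("Shuai", "20")).toDF("key", "age")

// Turn every column name into a Column.
df.columns.map(column)

// Pack all columns into one struct and serialize each row as a JSON string.
val newdf = df.select(to_json(struct(df.columns.map(column): _*)).alias("value"))

// Print the JSON strings without truncating them.
newdf.show(false)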
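For comparison, here is a minimal sketch that puts the `to_json(struct(...))` approach next to the built-in `Dataset.toJSON`, which also yields one JSON string per row. The object name `ToJsonSketch`, the helper `jsonRows`, and the local `master("local[1]")` session are assumptions made for illustration only. In a notebook or `spark-shell` the existing `spark` session would be used instead.

```scala
import org.apache.spark.sql.SparkSession
import org.apache.spark.sql.functions.{col, struct, to_json}

object ToJsonSketch {
  // Hypothetical helper: returns the JSON rows produced by both approaches.
  def jsonRows(spark: SparkSession): (Seq[String], Seq[String]) = {
    import spark.implicits._

    val df = Seq(("Rey", "23"), ("John", "44"), ("Shuai", "20")).toDF("key", "age")

    // Approach from the post: wrap all columns in a struct, then serialize it.
    val viaToJson = df
      .select(to_json(struct(df.columns.map(col): _*)).alias("value"))
      .as[String]
      .collect()
      .toSeq

    // Built-in alternative: Dataset.toJSON returns a Dataset[String].
    val viaDatasetToJson = df.toJSON.collect().toSeq

    (viaToJson, viaDatasetToJson)
  }

  def main(args: Array[String]): Unit = {
    // Assumption: a local single-threaded session, for illustration only.
    val spark = SparkSession.builder()
      .master("local[1]")
      .appName("to-json-sketch")
      .getOrCreate()

    val (a, b) = jsonRows(spark)
    a.foreach(println)
    b.foreach(println)

    spark.stop()
  }
}
```

`to_json` is the more flexible of the two, since it can serialize a subset of columns and keep the other columns alongside the JSON string. `toJSON` always serializes the whole row.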

Ref:

https://docs.databricks.com/_static/notebooks/transform-complex-data-types-scala.html

Please credit the source when reposting: http://www.cnblogs.com/mashuai-191/