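Web Scraping with the Scrapy Framework

Overview

Scrapy is a framework written for crawling websites and extracting data from them. It ships with many built-in features, is general-purpose, and is easy to learn.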

Installing Scrapy

pip install scrapy -i https://pypi.douban.com/simple

On Windows, Scrapy also needs Twisted and pywin32. For Twisted, download the wheel from http://www.lfd.uci.edu/~gohlke/pythonlibs/#twisted, then open cmd, change to the download directory, and run:

pip install Twisted-19.2.1-cp36-cp36m-win_amd64.whl

pywin32 can be installed directly:

pip install pywin32 -i https://pypi.douban.com/simple

Usage

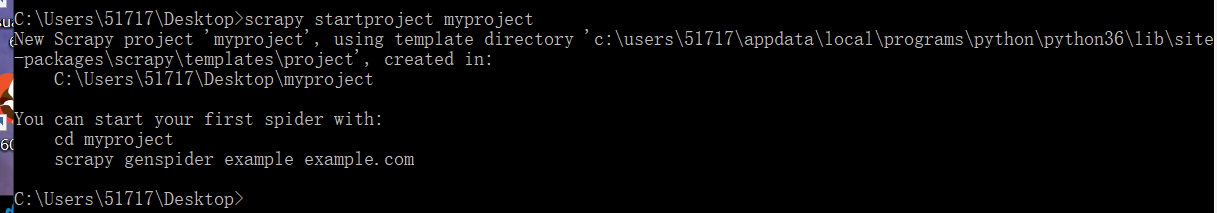

Create a project: on the command line, run scrapy startproject project_name

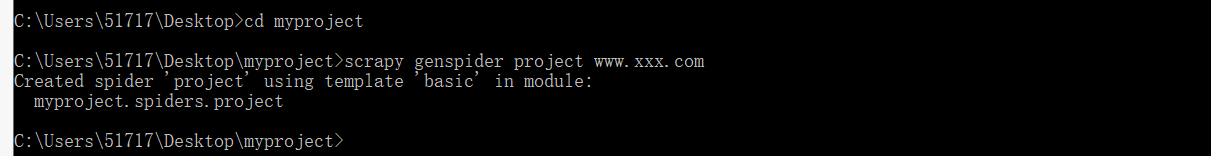

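Create a basic spider file: cd into the project, then run scrapy genspider spider_name initial_url (the URL can be anything; you can change it later in the spider file)

Run a spider: scrapy crawl spider_name (this is the name attribute of the spider class, which genspider sets to the spider name given above)

Optional arguments when running a spider: --nolog suppresses log output, and -o filename writes the scraped items to the given file. Usually neither is needed.

A newly created project has the following directory layout: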
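The options above can be combined on one command line. As a minimal sketch, the helper below assembles the argument list for scrapy crawl so it can be launched from a script. The function names build_crawl_command and run_crawl are hypothetical (they are not part of Scrapy), and the spider name project and file name result.json are placeholders.

```python
import subprocess


def build_crawl_command(spider_name, nolog=False, output=None):
    """Build the argument list for `scrapy crawl` (hypothetical helper)."""
    command = ["scrapy", "crawl", spider_name]
    if nolog:
        # --nolog suppresses log output
        command.append("--nolog")
    if output is not None:
        # -o FILE writes the scraped items to FILE
        command.extend(["-o", output])
    return command


def run_crawl(spider_name, **options):
    """Run the crawl; call this from the project directory with Scrapy installed."""
    return subprocess.run(build_crawl_command(spider_name, **options), check=True)
```

Calling run_crawl('project', nolog=True, output='result.json') would be equivalent to typing scrapy crawl project --nolog -o result.json in the project directory.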

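    └─myproject
        │  items.py          # defines the fields submitted to the pipeline
        │  middlewares.py    # middleware file
        │  pipelines.py      # pipeline file
        │  settings.py       # configuration file
        │  __init__.py
        │
        ├─spiders            # spider directory
        │  │  project.py     # the spider file you created
        │  │  __init__.py

How each file is used

settings.py (only the most commonly used settings are listed)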

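    # User-Agent string, used to make requests look like a browser
    USER_AGENT = 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/77.0.3865.120 Safari/537.36'
    # Log level to display
    LOG_LEVEL = 'INFO'
    # Whether to obey robots.txt
    ROBOTSTXT_OBEY = False
    # Maximum number of concurrent requests (not threads; Scrapy is asynchronous)
    CONCURRENT_REQUESTS = 32
    # Enable the downloader middleware
    DOWNLOADER_MIDDLEWARES = {
        'wangyi.middlewares.WangyiDownloaderMiddleware': 543,
    }
    # Enable the item pipeline
    ITEM_PIPELINES = {
        'wangyi.pipelines.WangyiPipeline': 300,
    }

Spider file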
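The integer attached to each entry in ITEM_PIPELINES is a priority: items pass through the pipelines from the lowest value to the highest, and values are conventionally chosen in the 0-1000 range. The sketch below only illustrates that ordering in plain Python; it is not how Scrapy implements it, and wangyi.pipelines.MysqlPipeline is a hypothetical second pipeline added for the example.

```python
ITEM_PIPELINES = {
    'wangyi.pipelines.MysqlPipeline': 400,   # hypothetical second pipeline
    'wangyi.pipelines.WangyiPipeline': 300,
}


def pipeline_order(pipelines):
    """Return the pipeline paths sorted by ascending priority value."""
    return sorted(pipelines, key=pipelines.get)


# WangyiPipeline (300) is listed before MysqlPipeline (400)
for path in pipeline_order(ITEM_PIPELINES):
    print(path)
```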

    # -*- coding: utf-8 -*-
    import scrapy


    class ProjectSpider(scrapy.Spider):
        name = 'project'
        # Domains the spider is allowed to crawl; usually left commented out
        # allowed_domains = ['www.xxx.com']
        # Initial URLs the spider starts from
        start_urls = ['http://www.xxx.com/']

        def parse(self, response):
            '''
            Callback invoked after a URL has been fetched
            :param response: the response object
            :return:
            '''
            pass

items.py

    # -*- coding: utf-8 -*-

    # Define here the models for your scraped items
    #
    # See documentation in:
    # https://docs.scrapy.org/en/latest/topics/items.html

    import scrapy


    class MyprojectItem(scrapy.Item):
        '''
        Fields submitted to the pipeline; define each one with scrapy.Field()
        '''
        # define the fields for your item here like:
        # name = scrapy.Field()
        pass

middlewares.py

The middleware file contains two auto-generated classes. Only the commonly used one is described here, and only its commonly used methods.

    # -*- coding: utf-8 -*-

    # Define here the models for your spider middleware
    #
    # See documentation in:
    # https://docs.scrapy.org/en/latest/topics/spider-middleware.html

    from scrapy import signals


    # This class is usually not used
    class MyprojectSpiderMiddleware(object):
        # Not all methods need to be defined. If a method is not defined,
        # scrapy acts as if the spider middleware does not modify the
        # passed objects.

        @classmethod
        def from_crawler(cls, crawler):
            # This method is used by Scrapy to create your spiders.
            s = cls()
            crawler.signals.connect(s.spider_opened, signal=signals.spider_opened)
            return s

        def process_spider_input(self, response, spider):
            # Called for each response that goes through the spider
            # middleware and into the spider.

            # Should return None or raise an exception.
            return None

        def process_spider_output(self, response, result, spider):
            # Called with the results returned from the Spider, after
            # it has processed the response.

            # Must return an iterable of Request, dict or Item objects.
            for i in result:
                yield i

        def process_spider_exception(self, response, exception, spider):
            # Called when a spider or process_spider_input() method
            # (from other spider middleware) raises an exception.

            # Should return either None or an iterable of Request, dict
            # or Item objects.
            pass

        def process_start_requests(self, start_requests, spider):
            # Called with the start requests of the spider, and works
            # similarly to the process_spider_output() method, except
            # that it doesn't have a response associated.

            # Must return only requests (not items).
            for r in start_requests:
                yield r

        def spider_opened(self, spider):
            spider.logger.info('Spider opened: %s' % spider.name)


    class MyprojectDownloaderMiddleware(object):

        def process_request(self, request, spider):
            '''
            Intercepts every normal request; each request passes through here before it is sent
            :param request: the request object
            :param spider: the instance of the spider class, whose attributes and methods can be used
            :return:
            '''
            return None

        def process_response(self, request, response, spider):
            '''
            Intercepts every response; the response object can be processed here
            :param request: the request object
            :param response: the response object
            :param spider: the instance of the spider class, whose attributes and methods can be used
            :return:
            '''
            return response

        def process_exception(self, request, exception, spider):
            '''
            Intercepts every request that raised an exception while being downloaded
            :param request: the request object
            :param exception: the exception that was raised
            :param spider: the instance of the spider class, whose attributes and methods can be used
            :return:
            '''
            pass
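Because process_request sees every request before it is sent, it is a common place to adjust headers. The following is a minimal sketch, assuming you want a random User-Agent per request; the class name RandomUserAgentMiddleware and the short USER_AGENTS pool are hypothetical, and a real project would use a longer list.

```python
import random

# Hypothetical pool of User-Agent strings to rotate through
USER_AGENTS = [
    'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 '
    '(KHTML, like Gecko) Chrome/77.0.3865.120 Safari/537.36',
    'Mozilla/5.0 (X11; Linux x86_64; rv:70.0) Gecko/20100101 Firefox/70.0',
]


class RandomUserAgentMiddleware:
    def process_request(self, request, spider):
        # Overwrite the User-Agent header just before the request is sent
        request.headers['User-Agent'] = random.choice(USER_AGENTS)
        # Returning None lets Scrapy continue processing the request normally
        return None
```

Like the generated class, this middleware only takes effect once its import path is added to DOWNLOADER_MIDDLEWARES in settings.py.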

pipelines.py

    # -*- coding: utf-8 -*-

    # Define your item pipelines here
    #
    # Don't forget to add your pipeline to the ITEM_PIPELINES setting
    # See: https://docs.scrapy.org/en/latest/topics/item-pipeline.html


    class MyprojectPipeline(object):
        def process_item(self, item, spider):
            # Submitted data arrives in item, which can be treated like a dict:
            # the keys are the fields defined in items.py and the values are
            # the data submitted by the spider file
            return item
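The generated pipeline simply passes each item through. As a sketch of a pipeline that does something with the data, the class below appends every item to a JSON Lines file. The class name JsonLinesPipeline and the output path items.jl are assumptions; open_spider and close_spider are the optional hooks Scrapy calls when the spider starts and finishes.

```python
import json


class JsonLinesPipeline:
    # Output file path (placeholder; change as needed)
    path = 'items.jl'

    def open_spider(self, spider):
        # Called once when the spider starts: open the output file
        self.file = open(self.path, 'w', encoding='utf-8')

    def close_spider(self, spider):
        # Called once when the spider finishes: release the file handle
        self.file.close()

    def process_item(self, item, spider):
        # dict(item) works for both plain dicts and scrapy.Item objects
        line = json.dumps(dict(item), ensure_ascii=False)
        self.file.write(line + '\n')
        # Return the item so that later pipelines still receive it
        return item
```

Opening the file once per crawl rather than once per item avoids repeated file-system overhead, and ensure_ascii=False keeps non-ASCII text readable in the output.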
