Spark pitfalls -- WARN ProcfsMetricsGetter: Exception when trying to compute pagesize

The most complete fixes for the Spark pitfall WARN ProcfsMetricsGetter: Exception when trying to compute pagesize

Problem description

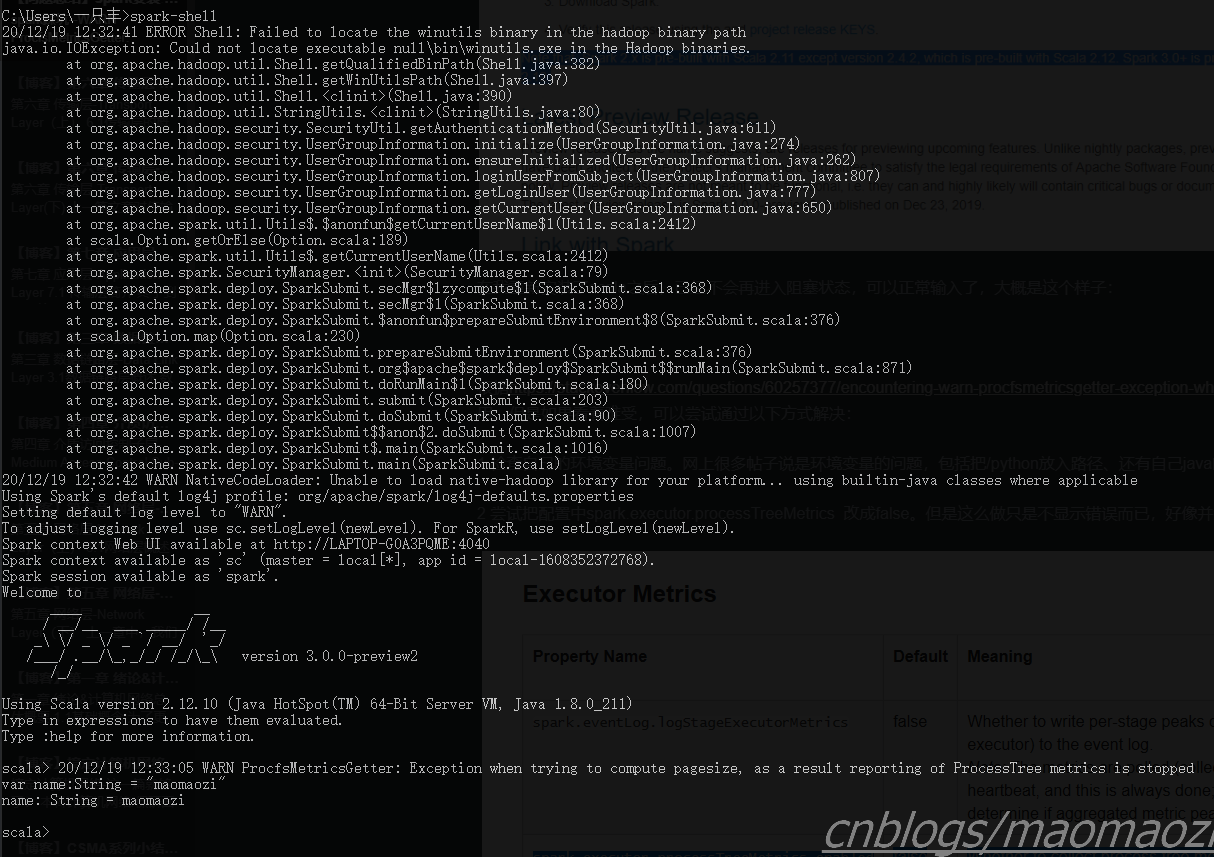

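Some time in the first half of this year I installed Spark (spark-3.0.0-preview2/hadoop2.7). Once the environment was set up I left it alone, and when I used it today I got this error: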

20/12/17 12:06:34 ERROR Shell: Failed to locate the winutils binary in the hadoop binary path
java.io.IOException: Could not locate executable null\bin\winutils.exe in the Hadoop binaries.
	at org.apache.hadoop.util.Shell.getQualifiedBinPath(Shell.java:382)
	at org.apache.hadoop.util.Shell.getWinUtilsPath(Shell.java:397)
	at org.apache.hadoop.util.Shell.<clinit>(Shell.java:390)
	at org.apache.hadoop.util.StringUtils.<clinit>(StringUtils.java:80)
	at org.apache.hadoop.security.SecurityUtil.getAuthenticationMethod(SecurityUtil.java:611)
	at org.apache.hadoop.security.UserGroupInformation.initialize(UserGroupInformation.java:274)
	at org.apache.hadoop.security.UserGroupInformation.ensureInitialized(UserGroupInformation.java:262)
	at org.apache.hadoop.security.UserGroupInformation.loginUserFromSubject(UserGroupInformation.java:807)
	at org.apache.hadoop.security.UserGroupInformation.getLoginUser(UserGroupInformation.java:777)
	at org.apache.hadoop.security.UserGroupInformation.getCurrentUser(UserGroupInformation.java:650)
	at org.apache.spark.util.Utils$.$anonfun$getCurrentUserName$1(Utils.scala:2412)
	at scala.Option.getOrElse(Option.scala:189)
	at org.apache.spark.util.Utils$.getCurrentUserName(Utils.scala:2412)
	at org.apache.spark.SecurityManager.<init>(SecurityManager.scala:79)
	at org.apache.spark.deploy.SparkSubmit.secMgr$lzycompute$1(SparkSubmit.scala:368)
	at org.apache.spark.deploy.SparkSubmit.secMgr$1(SparkSubmit.scala:368)
	at org.apache.spark.deploy.SparkSubmit.$anonfun$prepareSubmitEnvironment$8(SparkSubmit.scala:376)
	at scala.Option.map(Option.scala:230)
	at org.apache.spark.deploy.SparkSubmit.prepareSubmitEnvironment(SparkSubmit.scala:376)
	at org.apache.spark.deploy.SparkSubmit.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:871)
	at org.apache.spark.deploy.SparkSubmit.doRunMain$1(SparkSubmit.scala:180)
	at org.apache.spark.deploy.SparkSubmit.submit(SparkSubmit.scala:203)
	at org.apache.spark.deploy.SparkSubmit.doSubmit(SparkSubmit.scala:90)
	at org.apache.spark.deploy.SparkSubmit$$anon$2.doSubmit(SparkSubmit.scala:1007)
	at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:1016)
	at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)

20/12/17 12:06:34 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties
Setting default log level to "WARN".
To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).
Spark context Web UI available at http://LAPTOP-G0A3PQME:4040
Spark context available as 'sc' (master = local[*], app id = local-1608178002520).
Spark session available as 'spark'.
Welcome to
      ____              __
     / __/__  ___ _____/ /__
    _\ \/ _ \/ _ `/ __/  '_/
   /___/ .__/\_,_/_/ /_/\_\   version 3.0.0-preview2
      /_/

Using Scala version 2.12.10 (Java HotSpot(TM) 64-Bit Server VM, Java 1.8.0_211)
Type in expressions to have them evaluated.
Type :help for more information.

scala> 20/12/17 12:06:59 WARN ProcfsMetricsGetter: Exception when trying to compute pagesize, as a result reporting of ProcessTree metrics is stopped

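After printing "WARN ProcfsMetricsGetter: Exception when trying to compute pagesize, as a result reporting of ProcessTree metrics is stopped", the shell hangs and can only be closed with Ctrl+C. None of the fixes other people had written up worked for me, so I have summarized what did work below: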

Solutions

1 First check whether pyspark and Scala are installed. If they are, check their versions.

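Thinking back, the problem was probably caused by my reconfiguring all my environments in the first half of the year. Check from the command line: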

scala -version

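Confirm that pyspark is installed and that Scala is installed with a suitable version. (Each Spark version requires particular Hadoop and Scala versions; the official site documents them, for example in the figure below.)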
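A small sketch of the version check in Python, assuming Spark 3.0.x, which is built against Scala 2.12 (the spark-shell banner above reports Scala 2.12.10). The names parse_scala_version, scala_line_matches, local_scala_version and EXPECTED_SCALA_LINE are hypothetical; change the expected line if you run a different Spark release.

```python
import re
import shutil
import subprocess
from typing import Optional

# Assumption of this sketch: Spark 3.0.x expects the Scala 2.12 line
# (the banner above shows 2.12.10). Adjust for other Spark releases.
EXPECTED_SCALA_LINE = "2.12"


def parse_scala_version(text: str) -> Optional[str]:
    """Extract 'major.minor.patch' from `scala -version` output, or None."""
    match = re.search(r"version\s+(\d+\.\d+\.\d+)", text)
    return match.group(1) if match else None


def scala_line_matches(version: str, expected_line: str = EXPECTED_SCALA_LINE) -> bool:
    """True if e.g. '2.12.10' belongs to the expected binary line '2.12'."""
    return ".".join(version.split(".")[:2]) == expected_line


def local_scala_version() -> Optional[str]:
    """Run `scala -version` if scala is on PATH and parse its output."""
    exe = shutil.which("scala")
    if exe is None:
        return None
    # Some Scala releases print the version to stderr, so read both streams.
    result = subprocess.run([exe, "-version"], capture_output=True, text=True)
    return parse_scala_version(result.stdout + result.stderr)


if __name__ == "__main__":
    found = local_scala_version()
    if found is None:
        print("scala not found on PATH, or its version string was not recognised")
    elif scala_line_matches(found):
        print(f"scala {found}: matches Spark 3.0 ({EXPECTED_SCALA_LINE}.x)")
    else:
        print(f"scala {found}: expected {EXPECTED_SCALA_LINE}.x for Spark 3.0")
```

Only the major.minor pair is compared, since Scala binaries are compatible within a 2.12.x line but not across lines.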

After I reinstalled Scala, the shell no longer hung and accepted input normally, roughly like this:

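At this point it should work normally; so far I haven't run into any obvious problems. According to https://stackoverflow.com/questions/60257377/encountering-warn-procfsmetricsgetter-exception-when-trying-to-compute-pagesi, the WARN itself should not affect normal use. But if it bothers you, you can also try the following: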

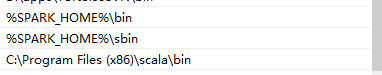

2 Check your environment variables

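Many posts online blame the environment variables: adding %SPARK_HOME%\python to the path, an incorrect Java path, and the /bin and /sbin directories. (I tried these and they did not fix it for me, but readers may still want to try them.)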

Note: put all of them under the system variables.
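A minimal sketch for checking these variables from Python, assuming the layout the posts describe: JAVA_HOME and SPARK_HOME are set, and SPARK_HOME's bin, sbin and python directories are on PATH. check_spark_env is a hypothetical helper; it only reports problems and changes nothing.

```python
import os
from typing import List, Mapping, Optional


def check_spark_env(env: Optional[Mapping[str, str]] = None) -> List[str]:
    """Return human-readable problems with the Spark-related variables.

    Assumes JAVA_HOME and SPARK_HOME should be set, and that SPARK_HOME's
    bin, sbin and python directories should be listed on PATH.
    """
    env = os.environ if env is None else env
    problems: List[str] = []

    for var in ("JAVA_HOME", "SPARK_HOME"):
        value = env.get(var)
        if not value:
            problems.append(f"{var} is not set")
        elif not os.path.isdir(value):
            problems.append(f"{var} points to a missing directory: {value}")

    # Normalise PATH entries so case and trailing separators don't matter on Windows.
    path_entries = {
        os.path.normcase(os.path.normpath(p))
        for p in env.get("PATH", "").split(os.pathsep)
        if p
    }
    spark_home = env.get("SPARK_HOME")
    if spark_home:
        for sub in ("bin", "sbin", "python"):
            wanted = os.path.join(spark_home, sub)
            if os.path.normcase(os.path.normpath(wanted)) not in path_entries:
                problems.append(f"PATH does not contain {wanted}")
    return problems


if __name__ == "__main__":
    for problem in check_spark_env() or ["no problems found"]:
        print(problem)
```

Run it in a newly opened terminal after editing the system variables, since terminals that were already open keep the old environment.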

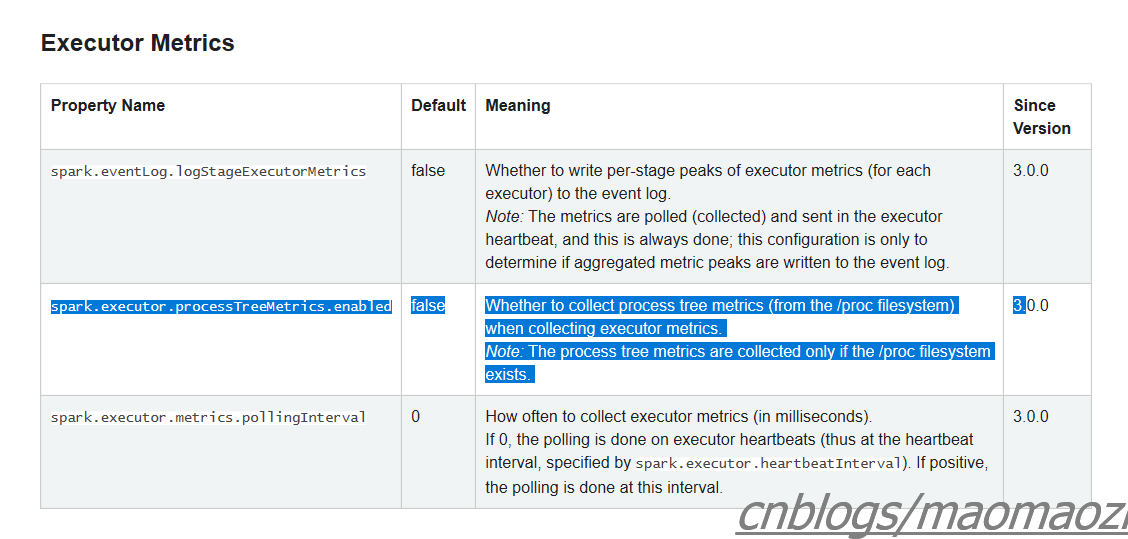

3 Set spark.executor.processTreeMetrics.enabled to false in the configuration

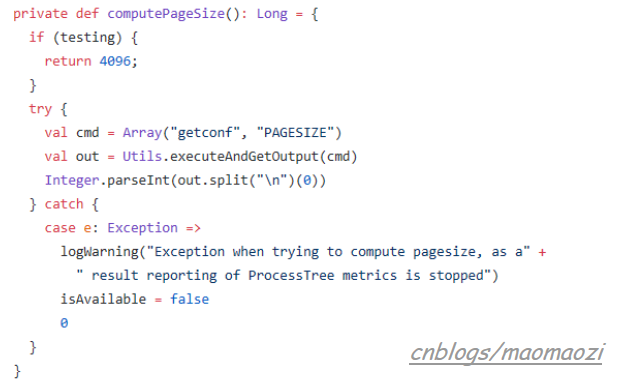
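A minimal sketch of making this setting persistent, assuming you keep Spark settings in conf/spark-defaults.conf under SPARK_HOME (a fresh install ships only conf/spark-defaults.conf.template). set_spark_default and apply_to_file are hypothetical helpers, not part of Spark; they only rewrite or append a "key value" line.

```python
import re
from pathlib import Path

# Property named in step 3; "false" is the value this post recommends.
KEY = "spark.executor.processTreeMetrics.enabled"


def set_spark_default(conf_text: str, key: str, value: str) -> str:
    """Return spark-defaults.conf content with `key` set to `value`.

    Active lines are "key value" (separated by whitespace, '=' or ':'),
    and lines starting with '#' are comments. The first active entry for
    `key` is replaced; if there is none, a new line is appended.
    """
    lines = conf_text.splitlines()
    new_line = f"{key} {value}"
    for i, line in enumerate(lines):
        stripped = line.strip()
        if not stripped or stripped.startswith("#"):
            continue  # skip blank lines and comments
        if re.split(r"[\s=:]", stripped, maxsplit=1)[0] == key:
            lines[i] = new_line
            break
    else:
        lines.append(new_line)  # key not present yet
    return "\n".join(lines) + "\n"


def apply_to_file(path: Path, key: str = KEY, value: str = "false") -> None:
    """Hypothetical helper: rewrite (or create) the given spark-defaults.conf."""
    text = path.read_text(encoding="utf-8") if path.exists() else ""
    path.write_text(set_spark_default(text, key, value), encoding="utf-8")
```

For a single session the same property can instead be passed on the command line, e.g. spark-shell --conf spark.executor.processTreeMetrics.enabled=false.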

I looked up where this warning comes from on GitHub; it appears to be thrown here:

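But this is a Linux command, so I'm not sure whether it is an oversight on Apache's part. Either way, setting spark.executor.processTreeMetrics.enabled to false in the configuration should be enough; this roughly amounts to masking the problem. Judging from the similar reports I could find online, practically all of them occur only with Spark 3.0. According to Apache's documentation (https://spark.apache.org/docs/3.0.1/configuration.html), this is a change introduced in 3.0: when the option is set to true, Spark can collect executor metrics at high frequency. (If spark.executor.processTreeMetrics.enabled=true, the optional configuration spark.executor.metrics.pollingInterval allows executor metrics to be gathered at high frequency; see the docs.)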
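To make the "Linux command" point concrete: as far as I can tell from the Spark 3.0 source, ProcfsMetricsGetter obtains the page size by running getconf PAGESIZE, and any exception there produces the WARN above and stops ProcessTree metrics reporting. The Python sketch below only imitates that pattern; it is not Spark's code, and compute_page_size is a hypothetical name. On Windows, where getconf does not exist, it takes the failure branch.

```python
import subprocess
from typing import Optional, Sequence


def compute_page_size(cmd: Sequence[str] = ("getconf", "PAGESIZE")) -> Optional[int]:
    """Return the OS page size in bytes, or None if the command fails.

    Imitates the pattern described above (not Spark's actual code): shell
    out to a Unix command and treat any failure as "metrics unavailable".
    """
    try:
        result = subprocess.run(list(cmd), capture_output=True, text=True, check=True)
        return int(result.stdout.splitlines()[0])
    except (OSError, subprocess.CalledProcessError, ValueError, IndexError):
        # There is no `getconf` on Windows, so this branch is taken there. In Spark
        # this is (as far as I can tell) where the WARN is logged.
        print("WARN: Exception when trying to compute pagesize, "
              "as a result reporting of ProcessTree metrics is stopped")
        return None


if __name__ == "__main__":
    print(compute_page_size())  # page size in bytes, or None if getconf is unavailable
```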

4 If it still doesn't work, switch to an older version.

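Since this change only shipped in 3.0.0, if nothing else works your only option is probably an older version (e.g. 2.4.6). Unless you are doing enterprise development this shouldn't be a big problem; after all, configuring Spark isn't very tedious.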

References

https://www.aws-senior.com/apache-spark-3-0-memory-monitoring-improvements/

浙公网安备 33010602011771号
