Notes on a really dumb file descriptor leak!!!

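1. Recently at work I have been responsible for a very simple little module. All it does is frequently (well, not that frequently, about every 0.25 s, haha) scan /proc/$pid/status and check the corresponding process name. Personally I think this pure polling approach is pretty dumb (muttering under my breath). If the expected process name is not there, the process just gets restarted. The odd part is that someone used system() for this, and if you are not careful with that function you leak file descriptors. Why? Because it forks a child that calls execl(), and any file the parent opened without the close-on-exec flag (FD_CLOEXEC / O_CLOEXEC) is inherited by that child.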
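The usual cure for this kind of leak is to mark descriptors close-on-exec. Below is a minimal sketch of that idea, not the code from my module: the helper names `set_cloexec`, `is_cloexec` and `open_cloexec` are made up for illustration, and it assumes a Linux/glibc system where `O_CLOEXEC` is available.

```c
#define _GNU_SOURCE
#include <fcntl.h>
#include <unistd.h>

/* Mark an already-open descriptor close-on-exec.
 * Returns 0 on success, -1 on error (errno is set by fcntl). */
int set_cloexec(int fd)
{
    int flags = fcntl(fd, F_GETFD);
    if (flags == -1)
        return -1;
    return fcntl(fd, F_SETFD, flags | FD_CLOEXEC);
}

/* Returns 1 if fd has FD_CLOEXEC set, 0 if it does not, -1 on error. */
int is_cloexec(int fd)
{
    int flags = fcntl(fd, F_GETFD);
    if (flags == -1)
        return -1;
    return (flags & FD_CLOEXEC) ? 1 : 0;
}

/* Open a file read-only with close-on-exec set atomically at open time,
 * so there is no window in which another thread could fork+exec and
 * inherit the descriptor. */
int open_cloexec(const char *path)
{
    return open(path, O_RDONLY | O_CLOEXEC);
}
```

A descriptor opened with plain `open()` reports `is_cloexec(fd) == 0` and would be inherited by whatever `system()` runs; after `set_cloexec(fd)`, or when opened through `open_cloexec()`, it is closed automatically in the child at exec time.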

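So if you are not sure whether you are leaking, checking is easy: see whether there are too many entries under /proc/$pid/fd, using ls -al to see what each descriptor points to. Of course, if you run into a socket you are out of luck, because a socket only shows up as socket:[inode-no] with no path. In that case use netstat -anop | grep "1/systemd" | grep 16712, and that command really does the job. For Unix sockets, netstat gets this information by reading /proc/net/unix.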
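The same lookup can be done by hand, which helps on a box without netstat. The sketch below is only an illustration under assumptions: the function names are hypothetical, and the column layout of /proc/net/unix (`Num RefCount Protocol Flags Type St Inode Path`) is taken from what a typical Linux kernel prints.

```c
#define _GNU_SOURCE
#include <stdio.h>
#include <sys/types.h>
#include <unistd.h>

/* Parse a /proc/<pid>/fd link target of the form "socket:[16712]".
 * Returns 0 and stores the inode on success, -1 otherwise. */
int parse_socket_inode(const char *target, unsigned long *inode)
{
    char closing;
    if (sscanf(target, "socket:[%lu%c", inode, &closing) == 2 && closing == ']')
        return 0;
    return -1;
}

/* Read the symlink /proc/<pid>/fd/<fd> and extract the socket inode. */
int fd_socket_inode(pid_t pid, int fd, unsigned long *inode)
{
    char link[64], target[256];
    snprintf(link, sizeof link, "/proc/%ld/fd/%d", (long)pid, fd);
    ssize_t n = readlink(link, target, sizeof target - 1);
    if (n < 0)
        return -1;
    target[n] = '\0';
    return parse_socket_inode(target, inode);
}

/* Parse one data line of /proc/net/unix.
 * Returns 1 if a path was present, 0 for an unnamed socket,
 * -1 if the line is not a data line (for example the header). */
int parse_unix_line(const char *line, unsigned long *inode, char *path, size_t n)
{
    char buf[256] = "";
    int got = sscanf(line, "%*s %*s %*s %*s %*s %*s %lu %255s", inode, buf);
    if (got < 1)
        return -1;
    snprintf(path, n, "%s", buf);
    return got == 2 ? 1 : 0;
}

/* Scan /proc/net/unix for the given inode and copy its path (possibly
 * empty) into path. Returns 0 if the inode was found, -1 otherwise. */
int unix_socket_path(unsigned long wanted, char *path, size_t n)
{
    FILE *f = fopen("/proc/net/unix", "r");
    if (!f)
        return -1;
    char line[512];
    int rc = -1;
    while (fgets(line, sizeof line, f)) {
        unsigned long inode;
        if (parse_unix_line(line, &inode, path, n) >= 0 && inode == wanted) {
            rc = 0;
            break;
        }
    }
    fclose(f);
    return rc;
}
```

Typical use would be `fd_socket_inode(pid, fd, &ino)` followed by `unix_socket_path(ino, buf, sizeof buf)`. Note that an unbound socket has an empty path column, so an empty result does not mean the lookup failed.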

2. In reality I spent that whole night on this. I had not discovered that convenient method yet, so I tried several other approaches.

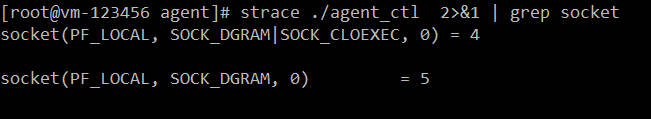

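That night I found there were two sockets. Why? At the time I did not know that syslog() opens one of its own, damn it. As a first step I confirmed that two sockets really were being opened.

I installed strace on the machine and ran strace ./xxx 2>&1 | grep socket

Sure enough, a Unix socket connection was being created. I wondered what was creating it and wanted to look from inside the kernel. I figured systemtap or bpftrace would do, but it turned out this system had no network access, so I could not use them, and their dependencies are complicated anyway. So I thought: use kprobe then.

I decided to write a kprobe kernel module, and then found that compiling a kernel module needs a lot of dependencies too. So I chose to build it on another machine: download the kernel-devel package for the kernel running in the VM (the one to be probed), build against it, and copy the module into the VM. The code is as follows: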

    #include <linux/module.h>
    #include <linux/file.h>
    #include <linux/uaccess.h>
    #include <linux/kallsyms.h>
    #include <linux/kprobes.h>
    #include <linux/sched.h>   /* current, struct task_struct */
    #include <linux/string.h>

    //
    // Check the exact symbol name first: cat /boot/System.map | grep <symbol>
    //
    static struct kprobe kp = {
        //.symbol_name = "SyS_socket",
        .symbol_name = "sys_socket",
    };

    static int handler_pre(struct kprobe *p, struct pt_regs *regs)
    {
        // Dump the stack, but only for the two processes we care about.
        if (!strcmp(current->comm, "agent_monitor") || !strcmp(current->comm, "agent_ctl")) {
            pr_err("------ process %s dump stack begin!!!-----\n", current->comm);
            dump_stack();   /* kernel helper that prints the current call stack */
            pr_err("------ process %s dump stack end!!!-----\n", current->comm);
        }
        return 0;
    }

    static void handler_post(struct kprobe *p, struct pt_regs *regs,
                             unsigned long flags)
    {
        //printk(KERN_INFO "post_handler: p->addr = 0x%p, flags = 0x%lx\n",
        //       p->addr, regs->flags);

        if (!strcmp(current->comm, "agent_monitor") || !strcmp(current->comm, "agent_ctl")) {
            pr_err("process : %s, pid: %d do :%s\n", current->comm, current->pid, kp.symbol_name);
        }

        //pr_err("pid: %d do fork\n", current->pid);
        //pr_err("ppid: %d do fork\n", current->parent->pid);
    }

    /*
     * fault_handler is called when an exception is raised by any instruction
     * in the pre-/post-handler, or by the probed instruction while kprobe
     * single-steps it.
     */
    static int handler_fault(struct kprobe *p, struct pt_regs *regs, int trapnr)
    {
        printk(KERN_INFO "fault_handler: p->addr = 0x%p, trap #%d\n",
               p->addr, trapnr);
        /* Return 0 because we do not handle the fault ourselves. */
        return 0;
    }

    static int __init driver_init(void)
    {
        int ret;

        kp.pre_handler = handler_pre;
        kp.post_handler = handler_post;
        kp.fault_handler = handler_fault;

        pr_err("driver_init\n");

        ret = register_kprobe(&kp);   /* register the kprobe */
        if (ret < 0) {
            printk(KERN_INFO "register_kprobe failed, returned %d\n", ret);
            return ret;
        }

        printk(KERN_INFO "socket: kprobe at %p\n", kp.addr);

        return 0;
    }

    static void __exit driver_exit(void)
    {
        unregister_kprobe(&kp);
        printk(KERN_INFO "kprobe at %p unregistered\n", kp.addr);
        pr_err("driver_exit\n");
    }

    MODULE_LICENSE("GPL");
    module_init(driver_init);
    module_exit(driver_exit);

    ifeq ($(KERNELRELEASE),)

    #KERNELDIR ?=/lib/modules/$(shell uname -r)/build
    #KERNELDIR ?=/root/kernel/usr/src/kernels/3.10.0-1127.el7.x86_64
    KERNELDIR ?=/root/kernel/usr/src/kernels/2.6.32-696.el6.x86_64

    PWD := $(shell pwd)

    modules:
    	$(MAKE) -C $(KERNELDIR) M=$(PWD) modules

    modules_install:
    	$(MAKE) -C $(KERNELDIR) M=$(PWD) modules_install

    clean:
    	rm -rf *.o *~ core .depend .*.cmd *.ko *.mod.c .tmp_versions modules* Module*

    .PHONY: modules modules_install clean

    else
    obj-m += kprobe_.o
    kprobe_-objs := kprobe.o
    endif

My environment, as reported by uname -a:

Host:

Linux AutoCloud 3.10.0-1127.el7.x86_64 #1 SMP Tue Mar 31 23:36:51 UTC 2020 x86_64 x86_64 x86_64 GNU/Linux

VM:

Linux vm 2.6.32-696.el6.x86_64 #1 SMP Tue Feb 21 00:53:17 EST 2017 x86_64 x86_64 x86_64 GNU/Linux

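The distribution release inside the VM is: Red Hat Enterprise Linux Server release 6.9 (Santiago)

So I downloaded the matching package, kernel-devel-2.6.32-696.el6.x86_64.rpm, and unpacked it:

    rpm2cpio kernel-devel-2.6.32-696.el6.x86_64.rpm | cpio -div

Extract it into some directory and change the KERNELDIR variable in the Makefile to point at the extracted location.

Then build with make, copy the module into the VM, insmod it, start the program, and here is what showed up: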

    Feb 6 00:04:16 vm kernel: ------ process agent_ctl dump stack begin!!!-----
    Feb 6 00:04:16 vm kernel: Pid: 1451, comm: agent_ctl Not tainted 2.6.32-696.el6.x86_64 #1
    Feb 6 00:04:16 vm kernel: Call Trace:
    Feb 6 00:04:16 vm kernel: <#DB> [<ffffffffa01f7102>] ? handler_pre+0x72/0x85 [kprobe_]
    Feb 6 00:04:16 vm kernel: [<ffffffff8155119b>] ? aggr_pre_handler+0x5b/0xb0
    Feb 6 00:04:16 vm kernel: [<ffffffff81465bc0>] ? sys_socket+0x0/0x80
    Feb 6 00:04:16 vm kernel: [<ffffffff81465bc1>] ? sys_socket+0x1/0x80
    Feb 6 00:04:16 vm kernel: [<ffffffff81550bb5>] ? kprobe_exceptions_notify+0x3d5/0x430
    Feb 6 00:04:16 vm kernel: [<ffffffff81550e25>] ? notifier_call_chain+0x55/0x80
    Feb 6 00:04:16 vm kernel: [<ffffffff81550e8a>] ? atomic_notifier_call_chain+0x1a/0x20
    Feb 6 00:04:16 vm kernel: [<ffffffff810acc1e>] ? notify_die+0x2e/0x30
    Feb 6 00:04:16 vm kernel: [<ffffffff8154e6f5>] ? do_int3+0x35/0xb0
    Feb 6 00:04:16 vm kernel: [<ffffffff8154df63>] ? int3+0x33/0x40
    Feb 6 00:04:16 vm kernel: [<ffffffff81465bc1>] ? sys_socket+0x1/0x80
    Feb 6 00:04:16 vm kernel: <<EOE>> [<ffffffff8100b0d2>] ? system_call_fastpath+0x16/0x1b
    Feb 6 00:04:16 vm kernel: ------ process agent_ctl dump stack end!!!-----
    Feb 6 00:04:16 vm kernel: process : agent_ctl, pid: 1451 do :sys_socket
    Feb 6 00:04:16 vm agent_ctl: vport name : /dev/vport0p2
    Feb 6 00:04:16 vm agent_ctl: set coredump pattern :echo 'core.0.000000e+00.(nil)_%' > /proc/sys/kernel/core_pattern
    Feb 6 00:04:16 vm kernel: ------ process agent_ctl dump stack begin!!!-----
    Feb 6 00:04:16 vm kernel: Pid: 1451, comm: agent_ctl Not tainted 2.6.32-696.el6.x86_64 #1
    Feb 6 00:04:16 vm kernel: Call Trace:
    Feb 6 00:04:16 vm kernel: <#DB> [<ffffffffa01f7102>] ? handler_pre+0x72/0x85 [kprobe_]
    Feb 6 00:04:16 vm kernel: [<ffffffff8155119b>] ? aggr_pre_handler+0x5b/0xb0
    Feb 6 00:04:16 vm kernel: [<ffffffff81465bc0>] ? sys_socket+0x0/0x80
    Feb 6 00:04:16 vm kernel: [<ffffffff81465bc1>] ? sys_socket+0x1/0x80
    Feb 6 00:04:16 vm kernel: [<ffffffff81550bb5>] ? kprobe_exceptions_notify+0x3d5/0x430
    Feb 6 00:04:16 vm kernel: [<ffffffff81550e25>] ? notifier_call_chain+0x55/0x80
    Feb 6 00:04:16 vm kernel: [<ffffffff81550e8a>] ? atomic_notifier_call_chain+0x1a/0x20
    Feb 6 00:04:16 vm kernel: [<ffffffff810acc1e>] ? notify_die+0x2e/0x30
    Feb 6 00:04:16 vm kernel: [<ffffffff8154e6f5>] ? do_int3+0x35/0xb0
    Feb 6 00:04:16 vm kernel: [<ffffffff8154df63>] ? int3+0x33/0x40
    Feb 6 00:04:16 vm kernel: [<ffffffff81465bc1>] ? sys_socket+0x1/0x80
    Feb 6 00:04:16 vm kernel: <<EOE>> [<ffffffff8100b0d2>] ? system_call_fastpath+0x16/0x1b
    Feb 6 00:04:16 vm kernel: ------ process agent_ctl dump stack end!!!-----
    Feb 6 00:04:16 vm kernel: process : agent_ctl, pid: 1451 do :sys_socket
    Feb 6 00:04:16 vm agent_ctl: agent_monitor supervisor thread start, cmd: ./agent_monitor
    Feb 6 00:04:16 vm agent_ctl: find agent_monitor not running, exec cmd : ./agent_monitor
    Feb 6 00:04:16 vm kernel: ------ process agent_monitor dump stack begin!!!-----
    Feb 6 00:04:16 vm kernel: Pid: 1455, comm: agent_monitor Not tainted 2.6.32-696.el6.x86_64 #1
    Feb 6 00:04:16 vm kernel: Call Trace:
    Feb 6 00:04:16 vm kernel: <#DB> [<ffffffffa01f7102>] ? handler_pre+0x72/0x85 [kprobe_]
    Feb 6 00:04:16 vm kernel: [<ffffffff8155119b>] ? aggr_pre_handler+0x5b/0xb0
    Feb 6 00:04:16 vm kernel: [<ffffffff81465bc0>] ? sys_socket+0x0/0x80
    Feb 6 00:04:16 vm kernel: [<ffffffff81465bc1>] ? sys_socket+0x1/0x80
    Feb 6 00:04:16 vm kernel: [<ffffffff81550bb5>] ? kprobe_exceptions_notify+0x3d5/0x430
    Feb 6 00:04:16 vm kernel: [<ffffffff81550e25>] ? notifier_call_chain+0x55/0x80
    Feb 6 00:04:16 vm kernel: [<ffffffff81550e8a>] ? atomic_notifier_call_chain+0x1a/0x20
    Feb 6 00:04:16 vm kernel: [<ffffffff810acc1e>] ? notify_die+0x2e/0x30
    Feb 6 00:04:16 vm kernel: [<ffffffff8154e6f5>] ? do_int3+0x35/0xb0
    Feb 6 00:04:16 vm kernel: [<ffffffff8154df63>] ? int3+0x33/0x40
    Feb 6 00:04:16 vm kernel: [<ffffffff81465bc1>] ? sys_socket+0x1/0x80
    Feb 6 00:04:16 vm kernel: <<EOE>> [<ffffffff8100b0d2>] ? system_call_fastpath+0x16/0x1b
    Feb 6 00:04:16 vm kernel: ------ process agent_monitor dump stack end!!!-----
    Feb 6 00:04:16 vm kernel: process : agent_monitor, pid: 1455 do :sys_socket
    Feb 6 00:04:16 vm agent_monitor: vport name : /dev/vport0p1
    Feb 6 00:04:16 vm kernel: ------ process agent_monitor dump stack begin!!!-----
    Feb 6 00:04:16 vm agent_monitor: set coredump pattern :echo 'core.0.000000e+00.(nil)_%' > /proc/sys/kernel/core_pattern
    Feb 6 00:04:16 vm agent_monitor: agent monitor send start ok ret: 16
    Feb 6 00:04:16 vm agent_monitor: agent_ctl supervisor thread start, cmd: ./agent_ctl
    Feb 6 00:04:16 vm agent_monitor: event thread start
    Feb 6 00:04:16 vm kernel: Pid: 1455, comm: agent_monitor Not tainted 2.6.32-696.el6.x86_64 #1
    Feb 6 00:04:16 vm kernel: Call Trace:
    Feb 6 00:04:16 vm kernel: <#DB> [<ffffffffa01f7102>] ? handler_pre+0x72/0x85 [kprobe_]
    Feb 6 00:04:16 vm kernel: [<ffffffff8155119b>] ? aggr_pre_handler+0x5b/0xb0
    Feb 6 00:04:16 vm kernel: [<ffffffff81465bc0>] ? sys_socket+0x0/0x80
    Feb 6 00:04:16 vm kernel: [<ffffffff81465bc1>] ? sys_socket+0x1/0x80
    Feb 6 00:04:16 vm kernel: [<ffffffff81550bb5>] ? kprobe_exceptions_notify+0x3d5/0x430
    Feb 6 00:04:16 vm kernel: [<ffffffff81550e25>] ? notifier_call_chain+0x55/0x80
    Feb 6 00:04:16 vm kernel: [<ffffffff81550e8a>] ? atomic_notifier_call_chain+0x1a/0x20
    Feb 6 00:04:16 vm kernel: [<ffffffff810acc1e>] ? notify_die+0x2e/0x30
    Feb 6 00:04:16 vm kernel: [<ffffffff8154e6f5>] ? do_int3+0x35/0xb0
    Feb 6 00:04:16 vm kernel: [<ffffffff8154df63>] ? int3+0x33/0x40
    Feb 6 00:04:16 vm kernel: [<ffffffff81465bc1>] ? sys_socket+0x1/0x80
    Feb 6 00:04:16 vm kernel: <<EOE>> [<ffffffff8100b0d2>] ? system_call_fastpath+0x16/0x1b
    Feb 6 00:04:16 vm kernel: ------ process agent_monitor dump stack end!!!-----
    Feb 6 00:04:16 vm kernel: process : agent_monitor, pid: 1455 do :sys_socket

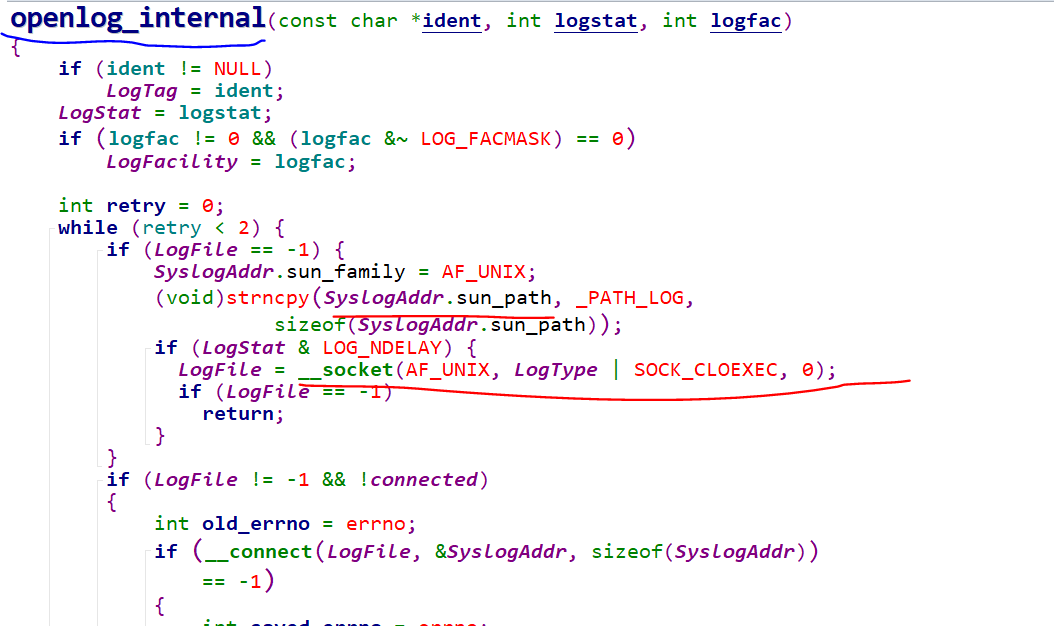

And that is how I found out that syslog needs to connect to the /dev/log Unix socket.
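In hindsight the same thing can be seen from user space without any kernel module. Here is a rough sketch, assuming Linux with /proc mounted; `count_open_fds` and `fds_added_by_openlog` are names I made up for this illustration. It counts the entries in /proc/self/fd before and after `openlog()` with `LOG_NDELAY`, which asks the C library to open the log connection immediately.

```c
#define _GNU_SOURCE
#include <dirent.h>
#include <stdlib.h>
#include <syslog.h>

/* Count this process's open descriptors by listing /proc/self/fd.
 * The descriptor used for the directory listing itself is excluded.
 * Returns -1 if /proc/self/fd cannot be opened. */
int count_open_fds(void)
{
    DIR *d = opendir("/proc/self/fd");
    if (!d)
        return -1;
    int self = dirfd(d);
    int n = 0;
    struct dirent *e;
    while ((e = readdir(d)) != NULL) {
        if (e->d_name[0] == '.')
            continue;               /* skip "." and ".." */
        if (atoi(e->d_name) == self)
            continue;               /* skip the listing's own fd */
        n++;
    }
    closedir(d);
    return n;
}

/* How many descriptors does openlog(..., LOG_NDELAY, ...) add?
 * Returns the difference, or -1 if the count could not be taken.
 * closelog() is called before returning, so the count is restored. */
int fds_added_by_openlog(void)
{
    int before = count_open_fds();
    openlog("fd_demo", LOG_NDELAY, LOG_USER);
    int after = count_open_fds();
    closelog();
    if (before < 0 || after < 0)
        return -1;
    return after - before;
}
```

On a machine where a syslog daemon is listening on /dev/log I would expect one extra descriptor. Where /dev/log is missing the result may differ, because the C library may close the socket again when the connect fails.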

So next I went to look at the syslog source code.