Redis Master-Slave Replication

1. Master-Slave Replication

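After data on the master is updated, it is automatically synchronized to the backup machines according to the configuration and policy. This is the master/slave mechanism: the master mainly handles writes, and the slaves mainly handle reads.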

Advantages of master-slave replication:

Read/write splitting;

Fast recovery from failures (disaster recovery);

2. Setting Up Master-Slave Replication

[root@localhost ~]# mkdir /myredis

[root@localhost ~]# cd /myredis/

Copy the configuration file:

    [root@localhost myredis]# cp /root/Redis/redis-6.2.1/redis.conf /myredis
    [root@localhost myredis]# ls
    redis.conf

Edit the configuration file you just copied and turn off AOF persistence:

appendonly no

Create three configuration files for the experiment.

The first:

[root@localhost myredis]# vi redis6379.conf

include /myredis/redis.conf

pidfile /var/run/redis_6379.pid

port 6379

dbfilename dump6379.rdb

The second (redis6380.conf):

    include /myredis/redis.conf
    pidfile /var/run/redis_6380.pid
    port 6380
    dbfilename dump6380.rdb

The third (redis6381.conf):

    include /myredis/redis.conf
    pidfile /var/run/redis_6381.pid
    port 6381
    dbfilename dump6381.rdb

Start the three Redis instances:

    [root@localhost myredis]# redis-server redis6379.conf
    [root@localhost myredis]# redis-server redis6380.conf
    [root@localhost myredis]# redis-server redis6381.conf

Connect to 6379 and check its replication status:

    [root@localhost myredis]# redis-cli -p 6379
    127.0.0.1:6379> info replication
    # Replication
    role:master
    connected_slaves:0
    master_failover_state:no-failover
    master_replid:8ed9add3abb3b1002330bcaa6e85726554034a06
    master_replid2:0000000000000000000000000000000000000000
    master_repl_offset:0
    second_repl_offset:-1
    repl_backlog_active:0
    repl_backlog_size:1048576
    repl_backlog_first_byte_offset:0
    repl_backlog_histlen:0

Log in to 6380 and run the following command to make it a slave of 6379:

    [root@localhost myredis]# redis-cli -p 6380
    127.0.0.1:6380> slaveof 127.0.0.1 6379
    OK

Log in to 6381, make it a slave of 6379 as well, and check its status:

    [root@localhost myredis]# redis-cli -p 6381
    127.0.0.1:6381> slaveof 127.0.0.1 6379
    OK
    127.0.0.1:6381> info replication
    # Replication
    role:slave
    master_host:127.0.0.1
    master_port:6379
    master_link_status:up
    master_last_io_seconds_ago:3
    master_sync_in_progress:0
    slave_repl_offset:154
    slave_priority:100
    slave_read_only:1
    connected_slaves:0
    master_failover_state:no-failover
    master_replid:c01e043a63bbca57acc6039f7cd7744ed5fd3285
    master_replid2:0000000000000000000000000000000000000000
    master_repl_offset:154
    second_repl_offset:-1
    repl_backlog_active:1
    repl_backlog_size:1048576
    repl_backlog_first_byte_offset:99
    repl_backlog_histlen:56

Check the replication info on the master (before and after the two slaves joined):

    127.0.0.1:6379> info replication
    # Replication
    role:master
    connected_slaves:0
    master_failover_state:no-failover
    master_replid:8ed9add3abb3b1002330bcaa6e85726554034a06
    master_replid2:0000000000000000000000000000000000000000
    master_repl_offset:0
    second_repl_offset:-1
    repl_backlog_active:0
    repl_backlog_size:1048576
    repl_backlog_first_byte_offset:0
    repl_backlog_histlen:0
    127.0.0.1:6379> info replication
    # Replication
    role:master
    connected_slaves:2
    slave0:ip=127.0.0.1,port=6380,state=online,offset=210,lag=0
    slave1:ip=127.0.0.1,port=6381,state=online,offset=210,lag=0
    master_failover_state:no-failover
    master_replid:c01e043a63bbca57acc6039f7cd7744ed5fd3285
    master_replid2:0000000000000000000000000000000000000000
    master_repl_offset:210
    second_repl_offset:-1
    repl_backlog_active:1
    repl_backlog_size:1048576
    repl_backlog_first_byte_offset:1
    repl_backlog_histlen:210
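To check the replication state from a script instead of by eye, you can parse the INFO output directly. Each line has the form field:value, and lines starting with # are section headers. Below is a minimal Python sketch. It assumes you already have the raw text, for example copied from redis-cli or returned by a client library. parse_info and parse_slave_entry are hypothetical helper names, not part of any Redis client.

```python
def parse_info(text: str) -> dict:
    """Turn INFO output into a {field: value} dict (values stay strings)."""
    fields = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):  # skip blank lines and "# Section" headers
            continue
        key, sep, value = line.partition(":")  # split on the first colon only
        if sep:
            fields[key] = value
    return fields


def parse_slave_entry(value: str) -> dict:
    """Split 'ip=...,port=...,state=...' (the slaveN fields) into a dict."""
    return dict(item.split("=", 1) for item in value.split(","))


# Abridged sample copied from the master's output above.
sample = """# Replication
role:master
connected_slaves:2
slave0:ip=127.0.0.1,port=6380,state=online,offset=210,lag=0
slave1:ip=127.0.0.1,port=6381,state=online,offset=210,lag=0
master_repl_offset:210
"""

info = parse_info(sample)
slaves = [parse_slave_entry(info[f"slave{i}"]) for i in range(int(info["connected_slaves"]))]
print(info["role"], [s["port"] for s in slaves])  # master ['6380', '6381']
```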

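Characteristics of one master with two slaves:

If a slave goes down and is restarted, it is no longer a slave and has to be added again (slaveof only changes the running instance; it is not saved to the configuration file);

When a restarted slave rejoins the master, its data is copied over from the beginning;

After the master restarts, it is still the master;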

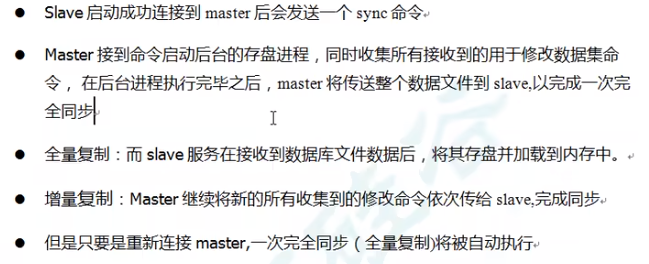

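3. How Master-Slave Replication Works

Chained replication ("passing the torch"): a slave can itself act as the master of the next slave in a chain, which takes synchronization work off the original master.

Promoting a slave ("the guest becomes the host")

When the master goes down, a slave behind it can be promoted to master right away by running slaveof no one on it.

Shut down the master: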

127.0.0.1:6379> shutdown

On the slave (6380), run:

    127.0.0.1:6380> slaveof no one
    OK
    127.0.0.1:6380> info replication
    # Replication
    role:master
    connected_slaves:0
    master_failover_state:no-failover
    master_replid:9ad670f17804fb62e0b74c0c663abf59b406914d
    master_replid2:c01e043a63bbca57acc6039f7cd7744ed5fd3285
    master_repl_offset:7376
    second_repl_offset:7377
    repl_backlog_active:1
    repl_backlog_size:1048576
    repl_backlog_first_byte_offset:1
    repl_backlog_histlen:7376

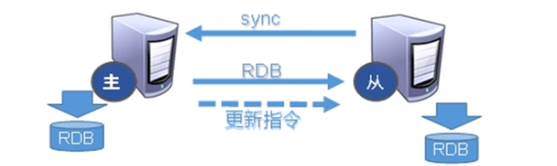

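4. Sentinel Mode

Sentinel mode is the automatic version of promoting a slave. Sentinels monitor the master in the background and, if it fails, automatically turn a slave into the master based on a vote.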

First, set up the one-master, two-slave environment:

    [root@localhost myredis]# redis-server redis6379.conf
    [root@localhost myredis]# redis-server redis6380.conf
    [root@localhost myredis]# redis-server redis6381.conf

Attach the slaves to the master:

    127.0.0.1:6380> slaveof 127.0.0.1 6379
    127.0.0.1:6381> slaveof 127.0.0.1 6379

Create the sentinel configuration file:

    [root@localhost myredis]# vi sentinel.conf
    sentinel monitor mymaster 127.0.0.1 6379 1

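Here mymaster is the name given to the monitored master. The 1 is the quorum: the minimum number of sentinels that must agree the master is down before a failover is started.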

Start the sentinel:

    [root@localhost myredis]# redis-sentinel sentinel.conf
    1708:X 18 Nov 2023 23:18:03.377 # oO0OoO0OoO0Oo Redis is starting oO0OoO0OoO0Oo
    1708:X 18 Nov 2023 23:18:03.377 # Redis version=6.2.1, bits=64, commit=00000000, modified=0, pid=1708, just started
    1708:X 18 Nov 2023 23:18:03.377 # Configuration loaded
    1708:X 18 Nov 2023 23:18:03.379 * Increased maximum number of open files to 10032 (it was originally set to 1024).
    1708:X 18 Nov 2023 23:18:03.379 * monotonic clock: POSIX clock_gettime
    (ASCII-art logo: Redis 6.2.1 (00000000/0) 64 bit, Running in sentinel mode, Port: 26379, PID: 1708)
    1708:X 18 Nov 2023 23:18:03.381 # WARNING: The TCP backlog setting of 511 cannot be enforced because /proc/sys/net/core/somaxconn is set to the lower value of 128.
    1708:X 18 Nov 2023 23:18:03.399 # Sentinel ID is 71abd4781fad8a34b1c5bce88ee68e2af8baabc6
    1708:X 18 Nov 2023 23:18:03.399 # +monitor master mymaster 127.0.0.1 6379 quorum 1
    1708:X 18 Nov 2023 23:18:03.402 * +slave slave 127.0.0.1:6380 127.0.0.1 6380 @ mymaster 127.0.0.1 6379
    1708:X 18 Nov 2023 23:18:03.410 * +slave slave 127.0.0.1:6381 127.0.0.1 6381 @ mymaster 127.0.0.1 6379

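Rules of sentinel mode:

After the master goes down, a slave takes over as the new master;

When the old master is restarted, it becomes a slave of the new master;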
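Which slave takes over? The Redis Sentinel documentation describes the order used to pick the replica to promote:

1. The replica with the lower replica-priority value wins. A priority of 0 means the replica is never promoted.
2. On a tie, the replica with the larger replication offset wins, because it has more of the master's data.
3. On a further tie, the replica with the lexicographically smaller run ID wins.

The sketch below models only this ordering. The Replica class and pick_new_master are hypothetical names. The link_ok flag is a simplification of Sentinel's disconnection-time check, and the offsets and run IDs are illustrative values.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Replica:
    address: str
    priority: int          # replica-priority, shown as slave_priority in INFO; 0 = never promote
    offset: int            # replication offset: how much of the master's data it has received
    run_id: str            # the instance's run ID
    link_ok: bool = True   # simplification of "not disconnected from the master for too long"


def pick_new_master(replicas: List[Replica]) -> Optional[Replica]:
    """Pick the replica to promote, following Sentinel's documented ordering (simplified)."""
    candidates = [r for r in replicas if r.link_ok and r.priority > 0]
    if not candidates:
        return None
    # Lower priority value first, then larger offset, then lexicographically smaller run ID.
    return min(candidates, key=lambda r: (r.priority, -r.offset, r.run_id))


# Illustrative values only, not taken from a real failover.
replicas = [
    Replica("127.0.0.1:6380", priority=100, offset=7376, run_id="b" * 40),
    Replica("127.0.0.1:6381", priority=100, offset=7300, run_id="a" * 40),
]
print(pick_new_master(replicas).address)  # 127.0.0.1:6380
```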

5. Cluster

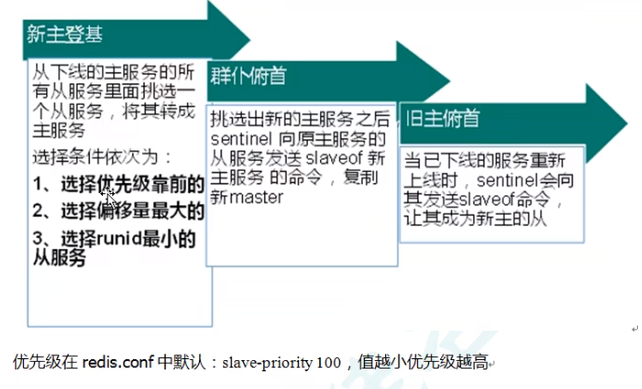

Decentralized cluster

Redis Cluster scales Redis out horizontally. You start N Redis nodes, and the whole dataset is distributed across them, so each node stores roughly 1/N of the data.

Build a Redis cluster with six nodes in total:

    [root@localhost myredis]# vi redis6379.conf
    include /myredis/redis.conf
    pidfile /var/run/redis_6379.pid
    port 6379
    dbfilename dump6379.rdb
    cluster-enabled yes
    cluster-config-file nodes-6379.conf
    cluster-node-timeout 15000

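Create the configuration files for the other five nodes the same way, changing the port-specific values (pidfile, port, dbfilename and cluster-config-file).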
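The six files differ only in the port, so you can also generate them instead of editing each one by hand. Here is a small Python sketch. It assumes the same /myredis layout and the redis6379.conf settings shown above. render_cluster_configs and write_configs are hypothetical helpers.

```python
from pathlib import Path

# Same settings as redis6379.conf above, with the port-specific values templated.
TEMPLATE = """include /myredis/redis.conf
pidfile /var/run/redis_{port}.pid
port {port}
dbfilename dump{port}.rdb
cluster-enabled yes
cluster-config-file nodes-{port}.conf
cluster-node-timeout 15000
"""


def render_cluster_configs(ports) -> dict:
    """Return {file name: file content} for each port."""
    return {f"redis{port}.conf": TEMPLATE.format(port=port) for port in ports}


def write_configs(directory: Path, configs: dict) -> None:
    """Write the rendered files into directory (e.g. /myredis on the Redis host)."""
    for name, text in configs.items():
        (directory / name).write_text(text)


configs = render_cluster_configs(range(6379, 6385))
print(sorted(configs))
```

On the Redis host, write_configs(Path("/myredis"), configs) would then write redis6379.conf through redis6384.conf. Rendering and writing are kept separate so you can inspect the files before touching the disk.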

    [root@localhost myredis]# ls
    redis6379.conf redis6381.conf redis6383.conf redis.conf
    redis6380.conf redis6382.conf redis6384.conf sentinel.conf

Start the six Redis instances:

    [root@localhost myredis]# redis-server redis6379.conf
    [root@localhost myredis]# redis-server redis6380.conf
    [root@localhost myredis]# redis-server redis6381.conf
    [root@localhost myredis]# redis-server redis6382.conf
    [root@localhost myredis]# redis-server redis6383.conf
    [root@localhost myredis]# redis-server redis6384.conf

Check the running Redis processes:

    [root@localhost myredis]# ps -ef | grep redis
    root 2199 1 0 07:31 ? 00:00:00 redis-server *:6379 [cluster]
    root 2207 1 1 07:32 ? 00:00:00 redis-server *:6380 [cluster]
    root 2213 1 0 07:32 ? 00:00:00 redis-server *:6381 [cluster]
    root 2219 1 0 07:32 ? 00:00:00 redis-server *:6382 [cluster]
    root 2225 1 0 07:32 ? 00:00:00 redis-server *:6383 [cluster]
    root 2231 1 0 07:32 ? 00:00:00 redis-server *:6384 [cluster]

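Combine the six nodes into one cluster. The option --cluster-replicas 1 configures the cluster in the simplest way, with one slave for each master, which gives exactly three master/slave groups: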

    [root@localhost ~]# cd /root/Redis/redis-6.2.1/src/
    [root@localhost src]# redis-cli --cluster create --cluster-replicas 1 192.168.43.25:6379 192.168.43.25:6380 192.168.43.25:6381 192.168.43.25:6382 192.168.43.25:6383 192.168.43.25:6384
    >>> Performing hash slots allocation on 6 nodes...
    Master[0] -> Slots 0 - 5460
    Master[1] -> Slots 5461 - 10922
    Master[2] -> Slots 10923 - 16383
    Adding replica 192.168.43.25:6383 to 192.168.43.25:6379
    Adding replica 192.168.43.25:6384 to 192.168.43.25:6380
    Adding replica 192.168.43.25:6382 to 192.168.43.25:6381
    >>> Trying to optimize slaves allocation for anti-affinity
    [WARNING] Some slaves are in the same host as their master
    M: 39cf1445c509d34d91648078a2b064496fa59475 192.168.43.25:6379
       slots:[0-5460] (5461 slots) master
    M: 5c16c6d9b3b1da8a77bed8177d58facc66a7361d 192.168.43.25:6380
       slots:[5461-10922] (5462 slots) master
    M: 66b3edfcb7777de287e8fc4db18cc3956c39bbbc 192.168.43.25:6381
       slots:[10923-16383] (5461 slots) master
    S: 383a6c5aaa9a8e35051b829cc2581fa922187ce5 192.168.43.25:6382
       replicates 39cf1445c509d34d91648078a2b064496fa59475
    S: 0963660e5053e89373418faaee7cb2d6d99d5174 192.168.43.25:6383
       replicates 5c16c6d9b3b1da8a77bed8177d58facc66a7361d
    S: b9253fb2e810c87f8a2877ba1359ce4b01a6b38f 192.168.43.25:6384
       replicates 66b3edfcb7777de287e8fc4db18cc3956c39bbbc
    Can I set the above configuration? (type 'yes' to accept): yes
    >>> Nodes configuration updated
    >>> Assign a different config epoch to each node
    >>> Sending CLUSTER MEET messages to join the cluster
    Waiting for the cluster to join
    .
    >>> Performing Cluster Check (using node 192.168.43.25:6379)
    M: 39cf1445c509d34d91648078a2b064496fa59475 192.168.43.25:6379
       slots:[0-5460] (5461 slots) master
       1 additional replica(s)
    S: 383a6c5aaa9a8e35051b829cc2581fa922187ce5 192.168.43.25:6382
       slots: (0 slots) slave
       replicates 39cf1445c509d34d91648078a2b064496fa59475
    S: b9253fb2e810c87f8a2877ba1359ce4b01a6b38f 192.168.43.25:6384
       slots: (0 slots) slave
       replicates 66b3edfcb7777de287e8fc4db18cc3956c39bbbc
    M: 5c16c6d9b3b1da8a77bed8177d58facc66a7361d 192.168.43.25:6380
       slots:[5461-10922] (5462 slots) master
       1 additional replica(s)
    S: 0963660e5053e89373418faaee7cb2d6d99d5174 192.168.43.25:6383
       slots: (0 slots) slave
       replicates 5c16c6d9b3b1da8a77bed8177d58facc66a7361d
    M: 66b3edfcb7777de287e8fc4db18cc3956c39bbbc 192.168.43.25:6381
       slots:[10923-16383] (5461 slots) master
       1 additional replica(s)
    [OK] All nodes agree about slots configuration.
    >>> Check for open slots...
    >>> Check slots coverage...
    [OK] All 16384 slots covered.
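redis-cli printed three slot ranges: 0 - 5460, 5461 - 10922 and 10923 - 16383. They come from dividing the 16384 slots as evenly as possible among the three masters. The Python sketch below reproduces those ranges by advancing a fractional cursor of 16384 / masters and rounding. It is modeled on the output above and on a reading of redis-cli's behavior. Treat split_slots (a hypothetical name) as an approximation, not as redis-cli's actual code.

```python
import math

CLUSTER_SLOTS = 16384


def split_slots(masters: int) -> list:
    """Divide the 16384 slots into contiguous (first, last) ranges, one per master."""
    per_node = CLUSTER_SLOTS / masters
    ranges = []
    first = 0
    cursor = 0.0
    for i in range(masters):
        # End of this range: cursor + per_node - 1, rounded half up.
        last = math.floor(cursor + per_node - 1 + 0.5)
        if last > CLUSTER_SLOTS - 1 or i == masters - 1:
            last = CLUSTER_SLOTS - 1      # the last master takes whatever is left
        last = max(last, first)
        ranges.append((first, last))
        first = last + 1
        cursor += per_node
    return ranges


print(split_slots(3))  # [(0, 5460), (5461, 10922), (10923, 16383)]
```

The fractional cursor explains why the middle master ends up with 5462 slots while the other two get 5461.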

To view cluster information, connect with -c (cluster mode, so redis-cli follows redirections to the node that owns a key) and -p (the port):

    [root@localhost src]# redis-cli -c -p 6379
    127.0.0.1:6379> cluster nodes
    383a6c5aaa9a8e35051b829cc2581fa922187ce5 192.168.43.25:6382@16382 slave 39cf1445c509d34d91648078a2b064496fa59475 0 1700398976351 1 connected
    b9253fb2e810c87f8a2877ba1359ce4b01a6b38f 192.168.43.25:6384@16384 slave 66b3edfcb7777de287e8fc4db18cc3956c39bbbc 0 1700398975000 3 connected
    5c16c6d9b3b1da8a77bed8177d58facc66a7361d 192.168.43.25:6380@16380 master - 0 1700398976000 2 connected 5461-10922
    39cf1445c509d34d91648078a2b064496fa59475 192.168.43.25:6379@16379 myself,master - 0 1700398973000 1 connected 0-5460
    0963660e5053e89373418faaee7cb2d6d99d5174 192.168.43.25:6383@16383 slave 5c16c6d9b3b1da8a77bed8177d58facc66a7361d 0 1700398975000 2 connected
    66b3edfcb7777de287e8fc4db18cc3956c39bbbc 192.168.43.25:6381@16381 master - 0 1700398976000 3 connected 10923-16383
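Each line of cluster nodes follows a fixed layout. The fields are, in order: node ID, ip:port@cluster-bus-port, flags, the master's ID (- for a master), ping-sent, pong-received, config epoch, link state, and finally the slot ranges served. The Python sketch below parses that layout to list which replica follows which master. The helper names are hypothetical, and slot entries are kept as raw strings.

```python
def parse_cluster_nodes(text: str) -> dict:
    """Parse CLUSTER NODES output into {node_id: info}."""
    nodes = {}
    for line in text.strip().splitlines():
        parts = line.split()
        # Fields: id, ip:port@busport, flags, master id ('-' for masters),
        # ping-sent, pong-recv, config-epoch, link-state, then slot ranges.
        nodes[parts[0]] = {
            "addr": parts[1].split("@")[0],          # drop the cluster bus port
            "flags": parts[2].split(","),
            "master": None if parts[3] == "-" else parts[3],
            "link": parts[7],
            "slots": parts[8:],                      # empty for replicas
        }
    return nodes


def replica_to_master(nodes: dict) -> dict:
    """Map each replica's address to the address of the master it replicates."""
    return {n["addr"]: nodes[n["master"]]["addr"] for n in nodes.values() if n["master"]}


# Four of the six lines printed above.
sample = """\
383a6c5aaa9a8e35051b829cc2581fa922187ce5 192.168.43.25:6382@16382 slave 39cf1445c509d34d91648078a2b064496fa59475 0 1700398976351 1 connected
5c16c6d9b3b1da8a77bed8177d58facc66a7361d 192.168.43.25:6380@16380 master - 0 1700398976000 2 connected 5461-10922
39cf1445c509d34d91648078a2b064496fa59475 192.168.43.25:6379@16379 myself,master - 0 1700398973000 1 connected 0-5460
0963660e5053e89373418faaee7cb2d6d99d5174 192.168.43.25:6383@16383 slave 5c16c6d9b3b1da8a77bed8177d58facc66a7361d 0 1700398975000 2 connected
"""

nodes = parse_cluster_nodes(sample)
print(replica_to_master(nodes))
```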

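Points to watch in the master/slave layout just built:

Where possible, run each master on a different server. In this experiment all six nodes run on the same host, which is why redis-cli warned "Some slaves are in the same host as their master".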

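Slots determine how data is distributed across the nodes. A Redis cluster has 16384 hash slots, every key maps to exactly one slot, and each master serves one range of slots (0-5460, 5461-10922 and 10923-16383 in the cluster above).

When you insert data, the reply shows which slot the key maps to and which node stores it:

    127.0.0.1:6379> set k1 v1
    -> Redirected to slot [12706] located at 192.168.43.25:6381
    OK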
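The slot number comes from the key itself: Redis Cluster computes CRC16(key) mod 16384, using the CRC16 variant with polynomial 0x1021 (XMODEM). The sketch below reproduces the redirect above for k1 and looks the slot up in the ranges of the cluster created earlier. For brevity it ignores {hash tags}. key_slot and owner_of are hypothetical names.

```python
def crc16_xmodem(data: bytes) -> int:
    """CRC16 with polynomial 0x1021 and initial value 0 (the XMODEM variant)."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def key_slot(key: str) -> int:
    """Slot of a key that contains no {hash tag}: CRC16(key) mod 16384."""
    return crc16_xmodem(key.encode("utf-8")) % 16384


# Slot ranges of the cluster created above.
SLOT_OWNERS = [
    (0, 5460, "192.168.43.25:6379"),
    (5461, 10922, "192.168.43.25:6380"),
    (10923, 16383, "192.168.43.25:6381"),
]


def owner_of(slot: int) -> str:
    """Return the address of the master whose range contains the slot."""
    for first, last, addr in SLOT_OWNERS:
        if first <= slot <= last:
            return addr
    raise ValueError(f"slot {slot} is not assigned")


slot = key_slot("k1")
print(slot, owner_of(slot))  # 12706 192.168.43.25:6381
```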

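Failover in the cluster

If a master in the cluster goes down, its slave becomes the new master. When the old master starts again, it rejoins as a slave.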

Advantages of a cluster:

Scales out capacity;

Spreads the load;

Decentralized, so configuration is relatively simple;

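Disadvantages of a cluster:

Multi-key operations are not supported when the keys live in different slots;

Multi-key Redis transactions and Lua scripts are likewise only supported when all the keys involved are in the same slot;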
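You can force keys into the same slot with a hash tag. If a key contains a non-empty {...}, only the part between the first { and the next } is hashed. This is what lets multi-key commands, transactions and scripts work in a cluster. Below is a Python sketch of that rule. It repeats the CRC16 function so the block stands alone. same_slot is a hypothetical helper, not a Redis command.

```python
def crc16_xmodem(data: bytes) -> int:
    """CRC16 with polynomial 0x1021 and initial value 0 (the XMODEM variant)."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            crc = ((crc << 1) ^ 0x1021) & 0xFFFF if crc & 0x8000 else (crc << 1) & 0xFFFF
    return crc


def hash_tag(key: str) -> str:
    """Return the part of the key that is hashed, per the cluster hash-tag rule."""
    start = key.find("{")
    if start != -1:
        end = key.find("}", start + 1)
        if end > start + 1:          # only a non-empty {...} counts as a tag
            return key[start + 1:end]
    return key


def key_slot(key: str) -> int:
    return crc16_xmodem(hash_tag(key).encode("utf-8")) % 16384


def same_slot(keys) -> bool:
    """True if a multi-key command on these keys could run on one cluster node."""
    return len({key_slot(k) for k in keys}) == 1


print(same_slot(["{user:1}:name", "{user:1}:age"]))  # True
```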

6. Problems Encountered When Using Redis

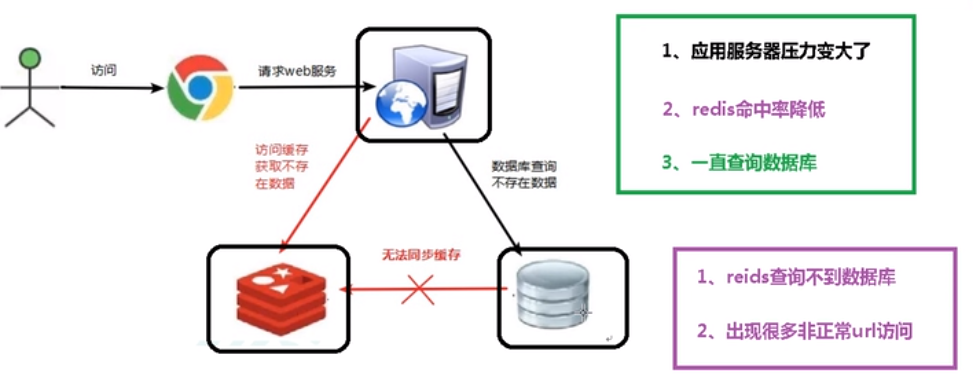

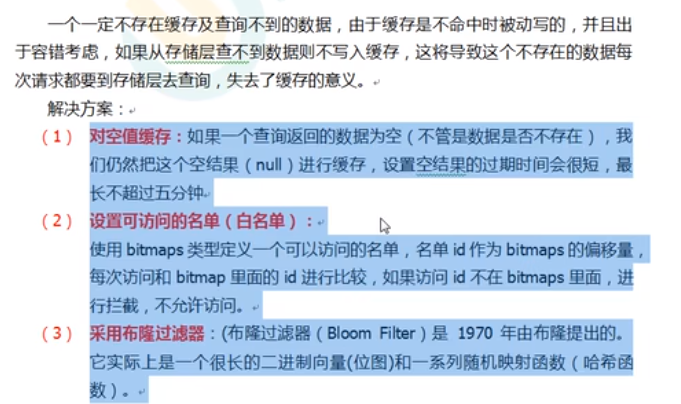

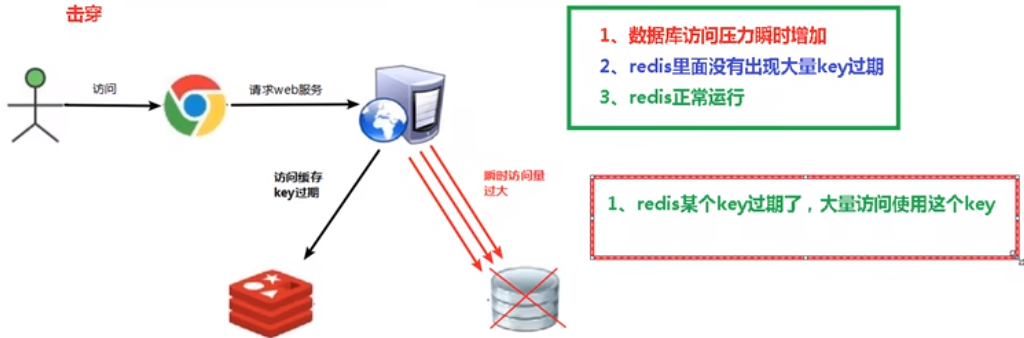

6.1 Cache Penetration

Solution:

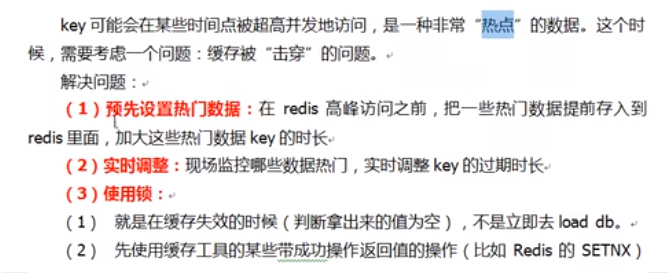
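Cache penetration happens when requests ask for keys that exist neither in the cache nor in the database, so every request goes straight to the database. One common mitigation is to cache the "not found" result for a short time. Another is to put a Bloom filter in front of the cache. The Python sketch below shows only the null-caching idea. A dict stands in for Redis, and a hypothetical load_user function stands in for the database.

```python
import time


class NullCachingReader:
    """Read-through cache that also remembers 'not found' for a short time.

    A plain dict stands in for Redis here; in Redis you would store a
    placeholder value with a short EX instead.
    """

    def __init__(self, load_from_db, ttl=300.0, null_ttl=30.0, clock=time.monotonic):
        self._load = load_from_db
        self._ttl = ttl
        self._null_ttl = null_ttl
        self._clock = clock
        self._cache = {}  # key -> (value or None, expires_at)

    def get(self, key):
        now = self._clock()
        entry = self._cache.get(key)
        if entry is not None and entry[1] > now:
            return entry[0]                  # may be None: a cached "does not exist"
        value = self._load(key)              # cache miss: go to the database
        ttl = self._ttl if value is not None else self._null_ttl
        self._cache[key] = (value, now + ttl)
        return value


db_calls = []


def load_user(key):
    """Hypothetical database lookup; only user:1 exists."""
    db_calls.append(key)
    return {"user:1": "alice"}.get(key)


reader = NullCachingReader(load_user)
for _ in range(3):
    reader.get("user:999")   # a key that exists nowhere
print(db_calls)  # ['user:999']  (only the first request reached the database)
```

The short null_ttl limits how long a key that is created later keeps looking "missing".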

6.2 Cache Breakdown

Solution:

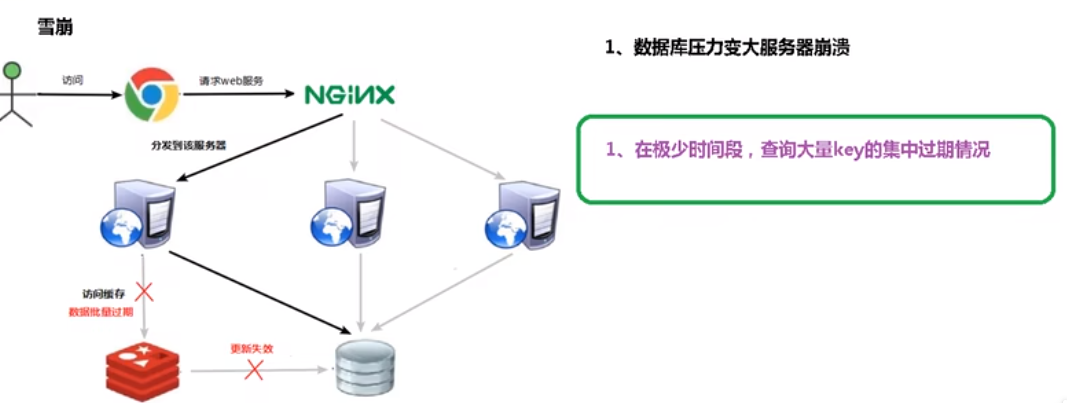
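Cache breakdown happens when a single heavily requested key expires. Many concurrent requests then miss the cache at once and all query the database. A common mitigation is to let only one request rebuild the value while the others wait and reuse it. In the Python sketch below, a threading.Lock stands in for a distributed lock, such as the SET NX EX lock in section 7. slow_load is a hypothetical database call.

```python
import threading
import time


class MutexRebuildCache:
    """When a hot key is missing, only one caller rebuilds it; the rest wait and reuse it.

    Single process only: threading.Lock stands in for a Redis-based lock.
    """

    def __init__(self, load_from_db):
        self._load = load_from_db
        self._data = {}
        self._lock = threading.Lock()

    def get(self, key):
        value = self._data.get(key)
        if value is not None:
            return value
        with self._lock:
            value = self._data.get(key)   # re-check: another thread may have rebuilt it
            if value is None:
                value = self._load(key)
                self._data[key] = value
            return value


load_calls = []


def slow_load(key):
    """Hypothetical slow database query."""
    load_calls.append(key)
    time.sleep(0.05)
    return f"value-of-{key}"


cache = MutexRebuildCache(slow_load)
results = []
start = threading.Barrier(20)


def request():
    start.wait()                 # release all 20 "requests" at the same moment
    results.append(cache.get("hot"))


threads = [threading.Thread(target=request) for _ in range(20)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(len(load_calls), len(results))  # 1 20
```

Using one lock for every key keeps the sketch short. A real implementation would more likely lock per key, so rebuilding one key does not block reads of another.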

6.3 Cache Avalanche

Solution:
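Cache avalanche happens when a large number of keys expire at the same time, or when the cache becomes unavailable. Either way, a sudden flood of requests hits the database. One simple mitigation for the mass-expiry case is to add random jitter to each key's expiration time, so keys written together do not expire together. Below is a Python sketch. jittered_ttl is a hypothetical helper, and the 600 s base and 120 s jitter are illustrative values.

```python
import random


def jittered_ttl(base_seconds: int, max_jitter_seconds: int, rng=None) -> int:
    """Base TTL plus a random 0..max_jitter_seconds, so keys written together expire apart."""
    r = rng if rng is not None else random
    return base_seconds + r.randint(0, max_jitter_seconds)


rng = random.Random(2023)  # seeded only to make the demo repeatable
ttls = [jittered_ttl(600, 120, rng) for _ in range(1000)]
print(min(ttls), max(ttls))
```

Each key would then be written with its own TTL, for example set key value ex 683, instead of a shared fixed value.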

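7. Distributed Locks with Redis

Acquire the lock and set its expiration time in the same command (nx: only set the key if it does not exist yet; ex 12: the key expires after 12 seconds):

    127.0.0.1:6381> set users 10 nx ex 12
    OK
    127.0.0.1:6381> ttl users
    (integer) 8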
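The command above takes the lock only if the key does not exist (nx) and makes it expire automatically (ex 12), so a crashed client cannot hold it forever. The Python sketch below models those semantics in memory. FakeRedis, acquire and release are hypothetical names. The example above stores the value 10; this sketch instead stores a random token per client, so that release only deletes a lock the caller still owns. With a real server, that check-and-delete has to be atomic, which is commonly done with a Lua script.

```python
import time
import uuid


class FakeRedis:
    """In-memory stand-in for the SET key value NX EX seconds behaviour used above."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock
        self._data = {}  # key -> (value, expires_at)

    def _live_value(self, key):
        entry = self._data.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if expires_at <= self._clock():      # expired keys behave as if absent
            del self._data[key]
            return None
        return value

    def set_nx_ex(self, key, value, seconds):
        if self._live_value(key) is not None:
            return False                     # redis-cli would print (nil)
        self._data[key] = (value, self._clock() + seconds)
        return True                          # redis-cli would print OK

    def get(self, key):
        return self._live_value(key)

    def delete(self, key):
        self._data.pop(key, None)


def acquire(r, name, ttl_seconds):
    """Try to take the lock; return an owner token, or None if someone else holds it."""
    token = uuid.uuid4().hex
    return token if r.set_nx_ex(name, token, ttl_seconds) else None


def release(r, name, token):
    """Delete the lock only if we still own it.

    Against a real Redis server the check and the delete must be one atomic step;
    in this single-threaded demo they cannot interleave with another client.
    """
    if r.get(name) == token:
        r.delete(name)
        return True
    return False


now = [0.0]
r = FakeRedis(clock=lambda: now[0])
first = acquire(r, "users", 12)
second = acquire(r, "users", 12)    # still held, so this fails
now[0] = 13.0                       # 13 seconds later the lock has expired
third = acquire(r, "users", 12)
print(first is not None, second, third is not None, release(r, "users", first))  # True None True False
```

The last call shows why the token matters. The first client's lock expired and was taken by another client, so the first client must not be able to delete it.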

浙公网安备 33010602011771号
