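Kubernetes Knowledge Summary

I. Kubernetes features

1. Automatic bin packing

2. Self-healing

3. Horizontal scaling

4. Service discovery

5. Rolling updates

6. Rollback

7. Secret and configuration management

8. Storage orchestration

9. Batch execution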

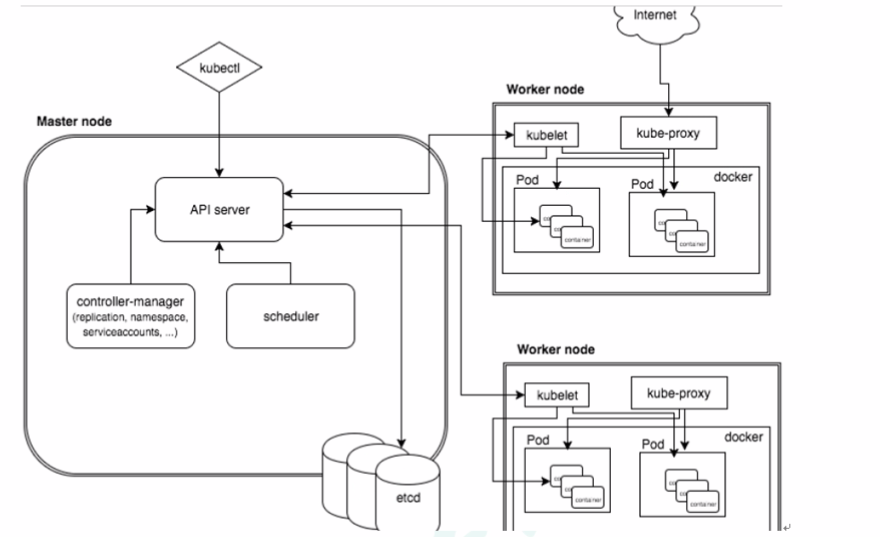

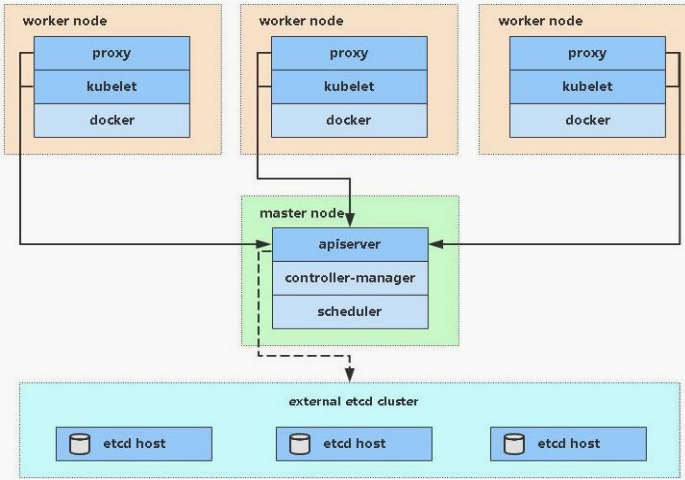

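II. Cluster components

1. Master node

API server: the Kubernetes API and the single entry point to the cluster. It coordinates the other components and exposes a RESTful API. Every create, read, update, delete, and watch operation on a resource object is handled by the API server, which then persists the result to etcd.

scheduler: selects a Node for each newly created Pod according to the scheduling algorithm. It can be deployed flexibly, either on the same node as the other components or on a different node.

controller-manager: handles routine background tasks in the cluster. Each resource type has a corresponding controller, and the controller manager is responsible for managing these controllers.

etcd: the cluster's database. It is a distributed key-value store that holds cluster state, such as Pod and Service objects.

2. Node

kubelet: the master's agent on each Node. It manages the lifecycle of the containers running on the local machine: creating containers, mounting volumes for Pods, downloading Secrets, and collecting container and node status.

kube-proxy: implements the Pod network proxy on each Node, maintaining network rules and layer-4 load balancing.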

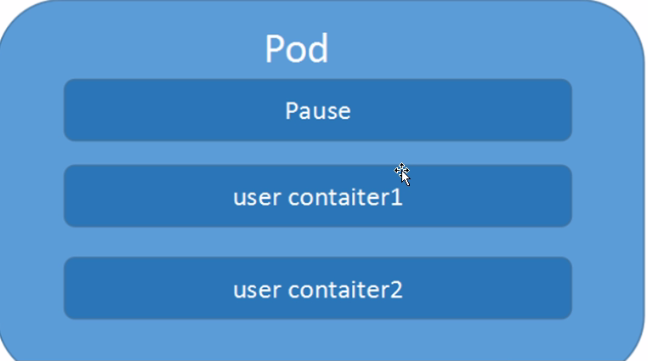

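3. Pod

The smallest deployable unit: a group of containers that share a network and have a lifecycle.

4. Controller

Controllers maintain the desired number of Pod replicas, deploy stateless and stateful applications, ensure that every node runs a copy of a given Pod, and run one-off and scheduled tasks.

5. Service

Defines the access rules for a group of Pods.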

III. kubectl commands

kubectl api-versions lists the API versions the cluster supports.

Dry run (print the manifest without creating the resource):

    [root@master01 ~]# kubectl create deployment web --image=nginx -o yaml --dry-run
    W0528 16:05:40.362387 100364 helpers.go:535] --dry-run is deprecated and can be replaced with --dry-run=client.
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      creationTimestamp: null
      labels:
        app: web
      name: web
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: web
      strategy: {}
      template:
        metadata:
          creationTimestamp: null
          labels:
            app: web
        spec:
          containers:
          - image: nginx
            name: nginx
            resources: {}
    status: {}

    kubectl get deployment -o yaml > web2.yaml

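IV. Pod

A Pod is the smallest unit that can be created and managed in Kubernetes, and the smallest resource object that users create or deploy. Kubernetes does not manage containers directly; it manages Pods, and each Pod consists of one or more containers.

The containers in a Pod share one network namespace.

Every Pod has a special "root container" called the Pause container. The Pause container image is part of the Kubernetes platform. Besides the Pause container, each Pod contains one or more closely related application containers.

The application containers in a Pod share the Pause container's IP.

Kubernetes assigns each Pod a unique IP address, called the Pod IP, which all containers in the Pod share.

A Pod is a multi-process design for running several applications: one Pod holds several containers, and each container runs one application.

Pods exist for applications that interact closely, for example two applications that call each other over the network frequently.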

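4.1 How Pods are implemented

(1) Shared network

Containers are isolated from one another by namespaces and cgroups.

How the containers in a Pod share the network:

The Pause container (also called the infra container) is created first;

The application containers then join the Pause container's network namespace. Because they all sit in the same namespace and use its IP, MAC address, and ports, they share the network.

(2) Shared storage

Pods introduce the Volume concept; volumes are used to persist data.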
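Both sharing mechanisms can be seen in one manifest. The following is a minimal sketch and not part of the original notes: the Pod name `share-demo`, the container names, and the choice of an `emptyDir` volume are assumptions made for illustration.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: share-demo              # hypothetical name
spec:
  containers:
  - name: writer                # hypothetical helper container
    image: busybox
    # Appends a timestamp to a file on the shared volume every 5 seconds.
    command: ["/bin/sh", "-c", "while true; do date >> /data/index.html; sleep 5; done"]
    volumeMounts:
    - name: shared
      mountPath: /data
  - name: web
    image: nginx
    # Mounts the same volume, so nginx serves the file the writer produces.
    volumeMounts:
    - name: shared
      mountPath: /usr/share/nginx/html
  volumes:
  - name: shared
    emptyDir: {}                # lives only as long as the Pod; not persistent storage
```

Because both containers share the Pod's network namespace, the `writer` container could also reach nginx at `localhost:80`. For data that must outlive the Pod, a persistent volume type would be needed instead of `emptyDir`.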

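4.2 Image pull policy

    apiVersion: v1
    kind: Pod
    metadata:
      name: web002
    spec:
      containers:
      - name: nginx2
        image: nginx
        imagePullPolicy: Always

imagePullPolicy values:

IfNotPresent: pull the image only when it is not already present on the host. This is the default, except when the image tag is :latest or omitted, in which case the default is Always;

Always: pull the image every time the Pod is created;

Never: the image is never pulled; it must already exist on the host.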

4.3 Resource limits in a Pod

    apiVersion: v1
    kind: Pod
    metadata:
      name: web003
    spec:
      containers:
      - name: nginx2
        image: nginx
        imagePullPolicy: Always
        resources:
          requests:
            memory: "64Mi"
            cpu: "250m"
          limits:
            memory: "128Mi"
            cpu: "500m"
      restartPolicy: Never

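4.4 Restart policy

    restartPolicy: Never

Never: never restart the container when it exits

OnFailure: restart the container only when it exits with a non-zero status

Always: always restart the container when it exits; this is the default policy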

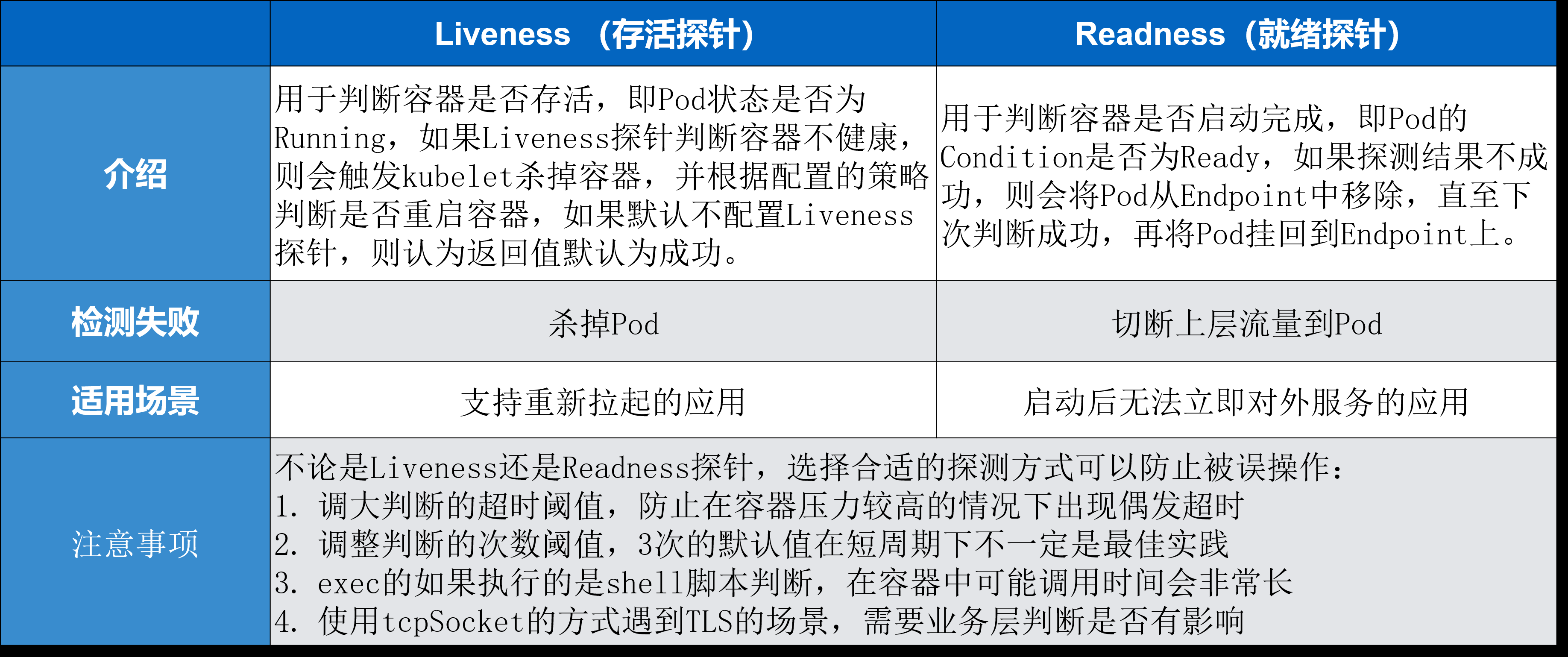

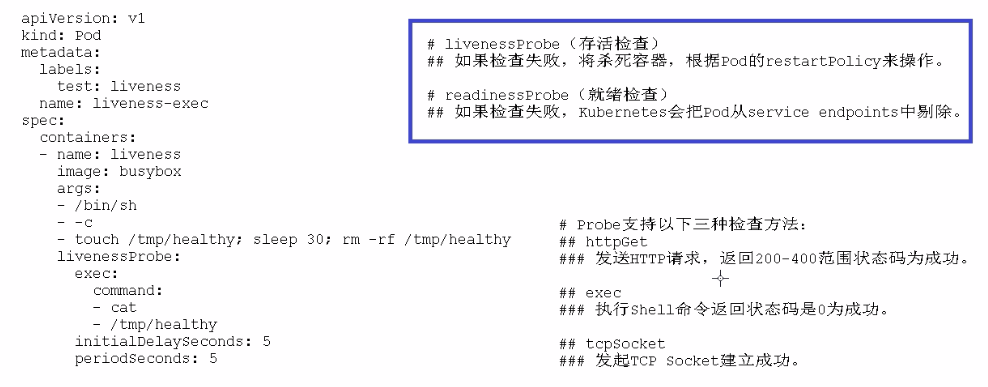

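4.5 Health checks

Differences between the two probes

The liveness probe determines whether the container is alive, that is, whether the Pod is running properly. If the liveness probe finds the container unhealthy, the kubelet kills that container and decides whether to restart it according to the restart policy. If no liveness probe is configured, the check is treated as always succeeding.

The readiness probe determines whether the container has finished starting, that is, whether the Pod's Ready condition is true. If the probe fails, the Pod is removed from the Service's Endpoints, so the access layer stops sending it traffic. Once a later probe succeeds, the Pod is added back to the Endpoints.

In short, on failure the liveness probe kills the container, whereas the readiness probe cuts the link between the Endpoints and the Pod so that traffic no longer reaches it.

A probe supports the following three check methods:

httpGet: sends an HTTP request; a status code of at least 200 and below 400 counts as success

exec: runs a command inside the container; exit code 0 counts as success

tcpSocket: opens a TCP connection; success means the connection was established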
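As an illustration of the two probe types, here is a minimal sketch of a Pod that uses an `httpGet` liveness probe and a `tcpSocket` readiness probe. The Pod name `probe-demo` and all timing values are assumptions chosen for the example, not settings taken from these notes.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: probe-demo              # hypothetical name
spec:
  containers:
  - name: nginx
    image: nginx
    ports:
    - containerPort: 80
    livenessProbe:              # failure: the kubelet kills and (per restartPolicy) restarts the container
      httpGet:
        path: /
        port: 80
      initialDelaySeconds: 5    # wait before the first check
      periodSeconds: 10         # check interval
      failureThreshold: 3       # consecutive failures before the container is considered dead
    readinessProbe:             # failure: the Pod is removed from Service Endpoints
      tcpSocket:
        port: 80
      periodSeconds: 5
```

An `exec` probe would replace the `httpGet` or `tcpSocket` block with `exec: {command: [...]}`.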

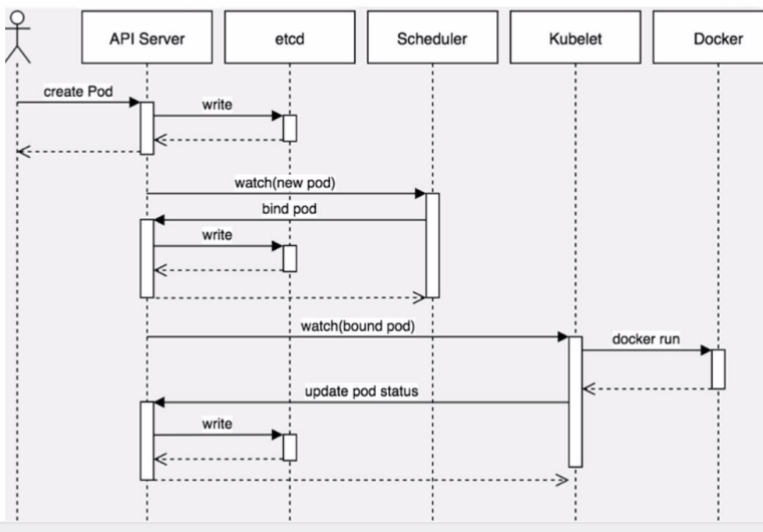

4.6 Pod creation process

4.7 Pod scheduling

Attributes that affect scheduling:

(1) A Pod's resource requests influence where it can be scheduled:

resources, requests

(2) Node selector labels

First add a label to the node:

    [root@master01 ~]# kubectl label node work01 env_role=dev
    node/work01 labeled

Then add a node selector to the Pod spec:

    apiVersion: v1
    kind: Pod
    metadata:
      name: web005
    spec:
      nodeSelector:
        env_role: dev
      containers:
      - name: nginx2
        image: nginx
        imagePullPolicy: Always
        resources:
          requests:
            memory: "64Mi"
            cpu: "250m"
          limits:
            memory: "128Mi"
            cpu: "500m"
      restartPolicy: Never

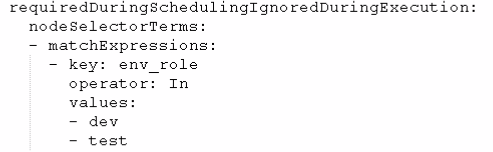

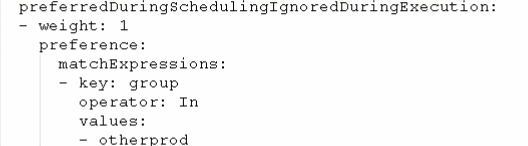

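(3) Node affinity

Hard affinity:

the constraint must be satisfied.

Soft affinity:

the scheduler tries to satisfy the constraint but does not guarantee it.

Supported operator values: In, NotIn, Exists, Gt (greater than), Lt (less than), DoesNotExist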
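Hard and soft affinity correspond to two fields under `nodeAffinity`. The sketch below is illustrative only: it reuses the `env_role` label from the node selector example, while the Pod name, the `group` label, and the values `test` and `otherprod` are hypothetical.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: affinity-demo           # hypothetical name
spec:
  affinity:
    nodeAffinity:
      # Hard affinity: the Pod stays Pending unless some node matches.
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        - matchExpressions:
          - key: env_role
            operator: In
            values: ["dev", "test"]
      # Soft affinity: matching nodes are preferred but not required.
      preferredDuringSchedulingIgnoredDuringExecution:
      - weight: 1
        preference:
          matchExpressions:
          - key: group          # hypothetical label key
            operator: In
            values: ["otherprod"]
  containers:
  - name: nginx
    image: nginx
```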

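(4) Taints and tolerations

nodeSelector and nodeAffinity are Pod attributes: they steer a Pod toward certain nodes at scheduling time.

A taint is a node attribute: a tainted node is excluded from normal scheduling.

Use cases:

Dedicated nodes; nodes with special hardware; taint-based eviction.

View a node's taints:

    [root@master01 ~]# kubectl describe node master01 | grep Taint
    Taints: node-role.kubernetes.io/master:NoSchedule

A taint has one of three effects:

NoSchedule: Pods are never scheduled onto the node

PreferNoSchedule: the scheduler tries to avoid the node

NoExecute: no new Pods are scheduled, and Pods already running on the node are evicted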

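Add a taint to a node and check the result:

    [root@master01 ~]# kubectl taint node work01 key1=value1:NoSchedule
    [root@master01 ~]# kubectl describe node work01 | grep Taints
    Taints: key1=value1:NoSchedule

Explanation: this adds a taint to work01 with key key1, value value1, and effect NoSchedule. Unless a Pod explicitly declares that it tolerates this taint, it will not be scheduled onto work01.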

Create the Pods

    [root@master01 ~]# kubectl create deployment web --image=nginx
    [root@master01 ~]# kubectl scale deployment web --replicas=5

Check where they were scheduled (all of them land on work02, none on the tainted work01)

    [root@master01 ~]# kubectl get pod -owide
    NAME                   READY   STATUS    RESTARTS   AGE     IP             NODE     NOMINATED NODE   READINESS GATES
    web-5dcb957ccc-jvkdw   1/1     Running   0          2m14s   10.224.75.76   work02   <none>           <none>
    web-5dcb957ccc-km78z   1/1     Running   0          2m14s   10.224.75.74   work02   <none>           <none>
    web-5dcb957ccc-mqb62   1/1     Running   0          2m14s   10.224.75.75   work02   <none>           <none>
    web-5dcb957ccc-t5nx5   1/1     Running   0          2m14s   10.224.75.73   work02   <none>           <none>
    web-5dcb957ccc-twc2k   1/1     Running   0          3m32s   10.224.75.72   work02   <none>           <none>

Remove the taint

    [root@master01 ~]# kubectl taint node work01 key1=value1:NoSchedule-
    node/work01 untainted

Tolerations are declared in the Pod spec (not on the node):

    tolerations:
    - key: "key1"
      operator: "Equal"
      value: "value1"
      effect: "NoSchedule"
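To show where the toleration fragment sits, here is a minimal sketch of a complete Pod manifest that tolerates the `key1=value1:NoSchedule` taint used above. The Pod name `toleration-demo` is an assumption.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: toleration-demo         # hypothetical name
spec:
  tolerations:                  # sibling of "containers" under spec
  - key: "key1"
    operator: "Equal"           # key, value, and effect must all match the taint
    value: "value1"
    effect: "NoSchedule"
  containers:
  - name: nginx
    image: nginx
```

A toleration only permits scheduling onto the tainted node; it does not force the Pod there. Combining it with a node selector or node affinity is the usual way to pin a Pod to a dedicated node.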

V. Controller

Controller: an object that manages and runs containers on the cluster;

Controllers implement rolling upgrades and elastic scaling of Pods;

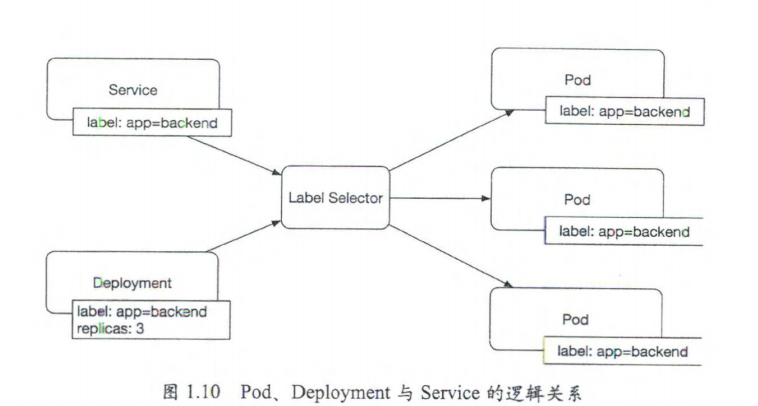

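Pods are associated with a controller through labels and label selectors;

5.1 Deployment controller

Use cases:

1. Deploying stateless applications;

2. Managing Pods and ReplicaSets;

3. Deployment, rolling upgrades, and so on.

(Stateful application: the client's relevant information must already exist on the server before the request is made, otherwise the request cannot be completed; in other words, the client's state is stored by the responding side. Stateless application: each request carries the client's own state and nothing is kept on the server; in other words, the client's state is stored by the client itself.)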

5.2 Writing the YAML file

Generate a template:

    [root@master01 ~]# kubectl create deployment myweb --image=nginx --dry-run -o yaml>myweb.yaml

Or write it by hand:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: myweb01
      namespace: default
      labels:
        app: myweb01
    spec:
      replicas: 2
      selector:
        matchLabels:
          app: myweb
      template:
        metadata:
          labels:
            app: myweb
        spec:
          containers:
          - image: nginx
            name: nginx

5.3 Upgrade

    [root@master01 ~]# kubectl set image deployment myweb01 nginx=nginx:1.15
    deployment.apps/myweb01 image updated

Check the upgrade result

    [root@master01 ~]# kubectl rollout status deployment myweb01
    deployment "myweb01" successfully rolled out

View the revision history

    [root@master01 ~]# kubectl rollout history deployment myweb01
    deployment.apps/myweb01
    REVISION  CHANGE-CAUSE
    1         <none>
    2         <none>
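How aggressively a rolling upgrade replaces Pods can be tuned through the Deployment's `strategy` field. The following sketch extends the `myweb01` Deployment; the `maxSurge` and `maxUnavailable` values are illustrative assumptions, not settings used in these notes.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: myweb01
spec:
  replicas: 2
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1               # at most 1 extra Pod above "replicas" during the rollout
      maxUnavailable: 0         # never drop below "replicas" available Pods
  selector:
    matchLabels:
      app: myweb
  template:
    metadata:
      labels:
        app: myweb
    spec:
      containers:
      - name: nginx
        image: nginx:1.15
```

With these values, each new Pod must become ready before an old one is removed, which trades rollout speed for availability.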

5.4 Rollback

    [root@master01 ~]# kubectl rollout undo deployment myweb01
    deployment.apps/myweb01 rolled back

Roll back to a specific revision

    [root@master01 ~]# kubectl rollout history deployment myweb01
    deployment.apps/myweb01
    REVISION  CHANGE-CAUSE
    2         <none>
    3         <none>
    [root@master01 ~]# kubectl rollout undo deployment myweb01 --to-revision=2
    deployment.apps/myweb01 rolled back

5.5 Elastic scaling

    [root@master01 ~]# kubectl scale deployment myweb01 --replicas=3
    deployment.apps/myweb01 scaled

VI. Service

6.1 Why Services exist

(1) Keeping Pods reachable (service discovery)

(2) Load balancing

    apiVersion: v1
    kind: Service
    metadata:
      name: mywebservice
    spec:
      selector:
        app: myweb
      ports:
      - protocol: TCP
        port: 80
        targetPort: 8080
      type: NodePort

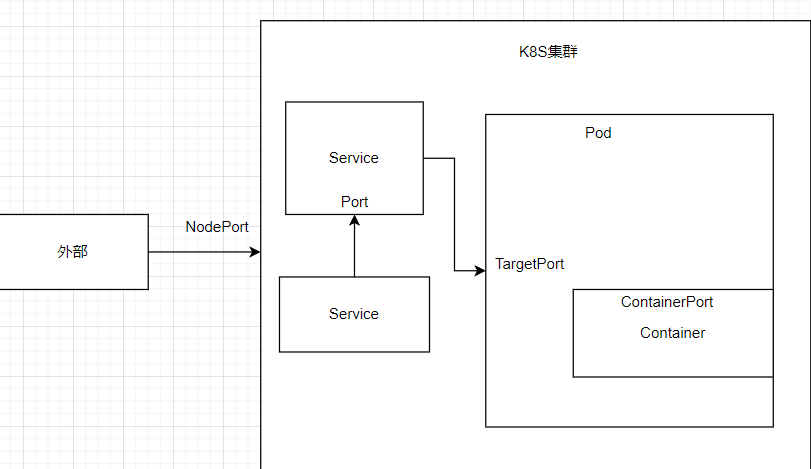

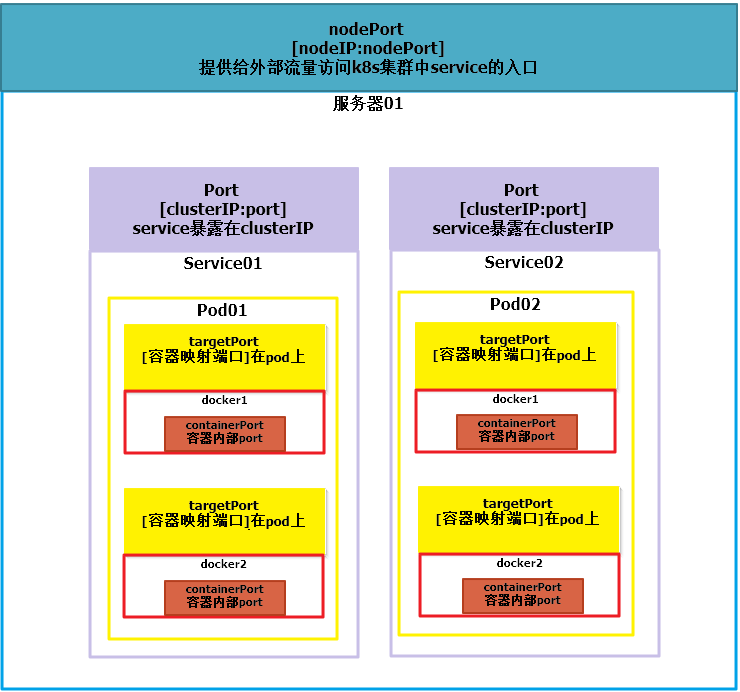

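6.2 The three Service types

A Service provides network access to an application. It gives client applications a stable access address (a domain name or IP address) and load balancing, and it hides changes in the backend Endpoints. It is the core resource for implementing microservices in Kubernetes.

(1) ClusterIP: for use inside the cluster

(2) NodePort: exposes an application outside the cluster

(3) LoadBalancer: external access through a public cloud load balancer

ClusterIP

    apiVersion: v1
    kind: Service
    metadata:
      name: mywebservice
    spec:
      selector:
        app: myweb
      ports:
      - protocol: TCP
        port: 80
        targetPort: 8080
      type: ClusterIP

NodePort

    apiVersion: v1
    kind: Service
    metadata:
      name: mywebservice
    spec:
      selector:
        app: myweb
      ports:
      - protocol: TCP
        port: 80
        targetPort: 8080
      type: NodePort

Headless Service: concept and use

In some scenarios the client application does not need the load balancing that a built-in Kubernetes Service provides.

A headless Service has no entry address (no ClusterIP), and kube-proxy does not create load-balancing forwarding rules for it.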
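A headless Service is declared by setting `clusterIP: None`. The sketch below is a minimal example under assumed names: `myweb-headless` is hypothetical, and the selector reuses the `app: myweb` label from the Deployment section.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: myweb-headless          # hypothetical name
spec:
  clusterIP: None               # this line is what makes the Service headless
  selector:
    app: myweb
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
```

A DNS lookup of such a Service returns the IP addresses of the matching Pods directly, so the client chooses which Pod to contact.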

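VII. StatefulSet

7.1 Stateful and stateless

Stateless: all Pods are treated as identical; there is no startup order; it does not matter which node a Pod runs on; Pods can be scaled up and down freely.

Stateful: everything a stateless application can ignore must be taken into account. Each Pod is independent and keeps its order and unique identity: a unique network identifier, persistent storage, and ordered startup. A MySQL primary/replica setup is an example.

7.2 Differences between Deployment and StatefulSet:

A StatefulSet Pod has a unique identity: each Pod has a unique hostname and a unique domain name of the form <hostname>.<service-name>.<namespace>.svc.cluster.local.

    apiVersion: v1
    kind: Service
    metadata:
      name: nginx002
      labels:
        app: mynginx
    spec:
      ports:
      - port: 80
        name: web
      clusterIP: None
      selector:
        app: nginx
    ---
    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: nginx-statfulset
      namespace: default
    spec:
      serviceName: nginx002   # must match the name of the headless Service above
      replicas: 3
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx
            ports:
            - containerPort: 80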

VIII. DaemonSet

Runs one Pod on every node.

    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: ds-test
      labels:
        app: filebeat
    spec:
      selector:
        matchLabels:
          app: fileb
      template:
        metadata:
          labels:
            app: fileb
        spec:
          containers:
          - name: logs
            image: nginx
            ports:
            - containerPort: 80
            volumeMounts:
            - name: varlog
              mountPath: /tmp/log
          volumes:
          - name: varlog
            hostPath:
              path: /var/log

IX. Job

    apiVersion: batch/v1
    kind: Job
    metadata:
      name: pi
    spec:
      template:
        spec:
          containers:
          - name: pi
            image: perl
            command: ["perl", "-Mbignum=bpi", "-wle", "print bpi(2000)"]
          restartPolicy: Never
      backoffLimit: 4

View the Job

    [root@master01 ~]# kubectl get job
    NAME   COMPLETIONS   DURATION   AGE
    pi     1/1           7m44s      9m23s

CronJob

    apiVersion: batch/v1beta1
    kind: CronJob
    metadata:
      name: hello
    spec:
      schedule: "*/1 * * * *"
      jobTemplate:
        spec:
          template:
            spec:
              containers:
              - name: hello
                image: busybox
                args:
                - /bin/sh
                - -c
                - date; echo Hello from the Kubernetes
              restartPolicy: OnFailure

View the CronJob

    [root@master01 ~]# kubectl get cronjob
    NAME    SCHEDULE      SUSPEND   ACTIVE   LAST SCHEDULE   AGE
    hello   */1 * * * *   False     1        10s             3m46s

View the logs:

    [root@master01 ~]# kubectl logs hello-1686012420-gd76m
    Tue Jun 6 00:47:24 UTC 2023
    Hello from the Kubernetes
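The `batch/v1beta1` CronJob API used above was removed in Kubernetes 1.25; on newer clusters the same resource is written against `batch/v1`. The sketch below shows that form. The `concurrencyPolicy` and `successfulJobsHistoryLimit` settings are optional additions chosen for illustration and are not part of the original manifest.

```yaml
apiVersion: batch/v1            # replaces batch/v1beta1 on newer clusters
kind: CronJob
metadata:
  name: hello
spec:
  schedule: "*/1 * * * *"       # every minute
  concurrencyPolicy: Forbid     # assumption: skip a run if the previous one is still active
  successfulJobsHistoryLimit: 3 # assumption: keep the last 3 finished Jobs
  jobTemplate:
    spec:
      template:
        spec:
          containers:
          - name: hello
            image: busybox
            args:
            - /bin/sh
            - -c
            - date; echo Hello from the Kubernetes
          restartPolicy: OnFailure
```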

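X. Secret

Purpose: store sensitive data in etcd and let Pod containers access it, for example through a mounted Volume. Note that the values are base64-encoded, which is an encoding rather than encryption.

Use case: credentials

    [root@master01 ~]# echo -n 'admin' | base64
    YWRtaW4=

Create a Secret that holds the encoded data

    apiVersion: v1
    kind: Secret
    metadata:
      name: mysecret
    type: Opaque
    data:
      username: YWRtaW4=
      password: YWRtaW4xMjM0

View the Secret

    [root@master01 ~]# kubectl get secret
    NAME                  TYPE                                  DATA   AGE
    default-token-mbnxd   kubernetes.io/service-account-token   3      11d
    mysecret              Opaque                                2      3m16s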
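Encoding each value by hand can be avoided with the `stringData` field, which accepts plain text that the API server encodes when the object is stored. This is a minimal sketch; the Secret name `mysecret-plain` is hypothetical, and the values are the same credentials as in `mysecret`.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: mysecret-plain          # hypothetical name
type: Opaque
stringData:                     # plain text here; stored base64-encoded under "data"
  username: admin
  password: admin1234
```

Reading the Secret back with `kubectl get secret mysecret-plain -o yaml` shows the values under `data` in base64 form, as with the hand-encoded version.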

10.1 Using a Secret as environment variables:

    apiVersion: v1
    kind: Pod
    metadata:
      name: mypod
    spec:
      containers:
      - name: nginxsecret
        image: nginx
        env:
        - name: SECRET_USERNAME
          valueFrom:
            secretKeyRef:
              name: mysecret
              key: username
        - name: SECRET_PASSWORD
          valueFrom:
            secretKeyRef:
              name: mysecret
              key: password

Enter the Pod and check

    [root@master01 ~]# kubectl exec -it mypod bash
    kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl kubectl exec [POD] -- [COMMAND] instead.
    root@mypod:/# echo $SECRET_USERNAME
    admin
    root@mypod:/# echo $SECRET_PASSWORD
    admin1234

10.2 Using a Secret as a mounted volume

    apiVersion: v1
    kind: Pod
    metadata:
      name: mypod02
    spec:
      containers:
      - name: nginx002
        image: nginx
        volumeMounts:
        - name: foo
          mountPath: "/etc/foo"
          readOnly: true
      volumes:
      - name: foo
        secret:
          secretName: mysecret

Check the mount:

    [root@master01 ~]# kubectl exec -it mypod02 bash
    kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl kubectl exec [POD] -- [COMMAND] instead.
    root@mypod02:/# cd /etc/f
    fonts/ foo/ fstab
    root@mypod02:/# cd /etc/foo
    root@mypod02:/etc/foo# ls
    password username
    root@mypod02:/etc/foo# cat password
    admin1234root@mypod02:/etc/foo# cat username

XI. ConfigMap

Purpose: store unencrypted data in etcd and let Pods use it as environment variables or as a mounted volume;

Use case: configuration files

11.1 Creating a ConfigMap

First create a properties file

    vi redis.properties

    redis.host=127.0.0.1
    redis.port=6379
    redis.password=123456

Create the ConfigMap

    [root@master01 ~]# kubectl create configmap redis-config --from-file=redis.properties
    configmap/redis-config created

View the ConfigMap

    [root@master01 ~]# kubectl get configmap
    NAME           DATA   AGE
    redis-config   1      55s

View the ConfigMap details

    [root@master01 ~]# kubectl describe configmap redis-config
    Name: redis-config
    Namespace: default
    Labels: <none>
    Annotations: <none>
    Data
    ====
    redis.properties:
    ----
    redis.host=127.0.0.1
    redis.port=6379
    redis.password=123456
    Events: <none>

11.2 Mounting a ConfigMap as a volume

    apiVersion: v1
    kind: Pod
    metadata:
      name: mypod007
    spec:
      containers:
      - name: busybox
        image: busybox
        command: [ "/bin/sh","-c","cat /etc/config/redis.properties" ]
        volumeMounts:
        - name: config-volume
          mountPath: /etc/config
      volumes:
      - name: config-volume
        configMap:
          name: redis-config
      restartPolicy: Never

View the logs

    [root@master01 ~]# kubectl logs mypod007
    redis.host=127.0.0.1
    redis.port=6379
    redis.password=123456

11.3 Using a ConfigMap as environment variables

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: myconfig
      namespace: default
    data:
      special.level: info
      special.type: hello

Create a Pod (each key must match a key in the ConfigMap exactly)

    apiVersion: v1
    kind: Pod
    metadata:
      name: mypod009
    spec:
      containers:
      - name: busybox009
        image: busybox
        command: [ "/bin/sh", "-c", "echo $(LEVEL) $(TYPE)" ]
        env:
        - name: LEVEL
          valueFrom:
            configMapKeyRef:
              name: myconfig
              key: special.level
        - name: TYPE
          valueFrom:
            configMapKeyRef:
              name: myconfig
              key: special.type
      restartPolicy: Never
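When every key of a ConfigMap should become an environment variable, `envFrom` avoids listing the keys one by one. The sketch below is illustrative: the ConfigMap `app-env`, its keys, and the Pod name `mypod-envfrom` are all hypothetical. The keys are written as plain environment variable names because keys such as `special.level` may be rejected as variable names on some cluster versions.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: app-env                 # hypothetical name
data:
  LOG_LEVEL: info               # keys double as environment variable names
  GREETING: hello
---
apiVersion: v1
kind: Pod
metadata:
  name: mypod-envfrom           # hypothetical name
spec:
  containers:
  - name: busybox
    image: busybox
    # The shell expands the variables from the container environment.
    command: ["/bin/sh", "-c", "echo $LOG_LEVEL $GREETING"]
    envFrom:
    - configMapRef:
        name: app-env           # imports every key in the ConfigMap
  restartPolicy: Never
```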

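XII. Cluster security mechanisms

12.1 A request to Kubernetes passes through three stages:

(1) Authentication

(2) Authorization

(3) Admission control

Every request goes through the API server, which coordinates all access. The request must present a credential: a certificate, a token, or a username and password. Access from inside a Pod requires a ServiceAccount.

Step 1: authentication and transport security

* Transport security: port 8080 is not exposed externally and is reachable only from inside; external access uses port 6443.

* Authentication: common ways to authenticate a client are HTTPS certificate authentication based on a CA certificate; HTTP token authentication, where the token identifies the user; and HTTP basic authentication with a username and password.

Step 2: * Authorization: performed with RBAC, role-based access control.

Step 3: admission control, where the request passes through the list of admission controllers.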

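12.2 RBAC: role-based access control

* Roles

** Role: access permissions within a specific namespace

** ClusterRole: access permissions across all namespaces

* Role bindings

** RoleBinding: binds a role to subjects

** ClusterRoleBinding: binds a cluster role to subjects

* Subjects

user: a user

group: a user group

serviceAccount: a service account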
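To make the cluster-wide variants concrete, here is a minimal sketch of a ClusterRole bound to a group through a ClusterRoleBinding. All names (`pod-reader-global`, `read-pods-global`, `ops-team`) are hypothetical and serve only to illustrate the object layout.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: pod-reader-global       # hypothetical; ClusterRoles have no namespace
rules:
- apiGroups: [""]               # "" is the core API group
  resources: ["pods"]
  verbs: ["get", "watch", "list"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: read-pods-global        # hypothetical
subjects:
- kind: Group                   # subject kinds: User, Group, ServiceAccount
  name: ops-team                # hypothetical group name
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: pod-reader-global
  apiGroup: rbac.authorization.k8s.io
```

Members of the bound group can read Pods in every namespace; a Role with a RoleBinding would grant the same verbs in one namespace only.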

12.3 Implementing authorization

Create a namespace

    [root@master01 ~]# kubectl create namespace roledemo
    namespace/roledemo created

Create a Pod in the namespace

    [root@master01 ~]# kubectl run nginx --image=nginx -n roledemo
    pod/nginx created
    [root@master01 ~]# kubectl get pod -n roledemo
    NAME    READY   STATUS    RESTARTS   AGE
    nginx   1/1     Running   0          78s

Create a Role

    kind: Role
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      namespace: roledemo
      name: pod-reader
    rules:
    - apiGroups: [""] # "" indicates the core API group
      resources: ["pods"]
      verbs: ["get", "watch", "list"]

Create it and check

    [root@master01 ~]# kubectl create -f role.yaml
    role.rbac.authorization.k8s.io/pod-reader created
    [root@master01 ~]# kubectl get role -n roledemo
    NAME         CREATED AT
    pod-reader   2023-06-06T08:04:17Z

Create the RoleBinding

    kind: RoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: read-pods
      namespace: roledemo
    subjects:
    - kind: User
      name: lucy
      apiGroup: rbac.authorization.k8s.io
    roleRef:
      kind: Role
      name: pod-reader
      apiGroup: rbac.authorization.k8s.io

    [root@master01 ~]# kubectl apply -f rolebinding.yaml
    rolebinding.rbac.authorization.k8s.io/read-pods created

Check

    [root@master01 ~]# kubectl get role,rolebinding -n roledemo
    NAME                                        CREATED AT
    role.rbac.authorization.k8s.io/pod-reader   2023-06-06T08:04:17Z
    NAME                                              ROLE              AGE
    rolebinding.rbac.authorization.k8s.io/read-pods   Role/pod-reader   115s

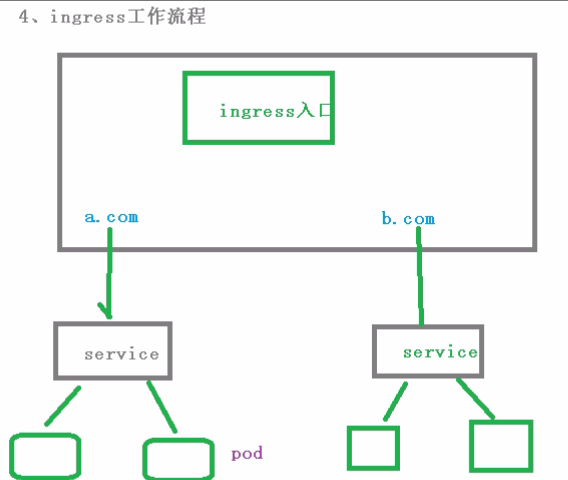

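XIII. Ingress

A NodePort Service exposes a port externally so that the application can be reached at IP + port.

Drawbacks of NodePort: the port is opened on every node, and the application is reachable through any node's IP plus that port;

this means each port can be used only once, one port per application;

in practice, applications are reached through domain names, with different domain names routed to different applications.

13.1 Relationship between Ingress and Pods

Pods and Ingress are linked through Services;

Ingress acts as the single entry point, and each Service behind it fronts a group of Pods.

13.2 Ingress workflow

13.3 Exposing an application with Ingress

Create a Deployment and a Service

    [root@master01 ~]# kubectl create deployment web --image=nginx
    deployment.apps/web created
    [root@master01 ~]# kubectl expose deployment web --port=80 --target-port=80 --type=NodePort
    service/web exposed

Create the Ingress controller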

apiVersion: v1 kind: Namespace metadata: name: ingress-nginx labels: app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx --- kind: ConfigMap apiVersion: v1 metadata: name: nginx-configuration namespace: ingress-nginx labels: app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx --- kind: ConfigMap apiVersion: v1 metadata: name: tcp-services namespace: ingress-nginx labels: app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx --- kind: ConfigMap apiVersion: v1 metadata: name: udp-services namespace: ingress-nginx labels: app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx --- apiVersion: v1 kind: ServiceAccount metadata: name: nginx-ingress-serviceaccount namespace: ingress-nginx labels: app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx --- apiVersion: rbac.authorization.k8s.io/v1beta1 kind: ClusterRole metadata: name: nginx-ingress-clusterrole labels: app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx rules: - apiGroups: - "" resources: - configmaps - endpoints - nodes - pods - secrets verbs: - list - watch - apiGroups: - "" resources: - nodes verbs: - get - apiGroups: - "" resources: - services verbs: - get - list - watch - apiGroups: - "" resources: - events verbs: - create - patch - apiGroups: - "extensions" - "networking.k8s.io" resources: - ingresses verbs: - get - list - watch - apiGroups: - "extensions" - "networking.k8s.io" resources: - ingresses/status verbs: - update --- apiVersion: rbac.authorization.k8s.io/v1beta1 kind: Role metadata: name: nginx-ingress-role namespace: ingress-nginx labels: app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx rules: - apiGroups: - "" resources: - configmaps - pods - secrets - namespaces verbs: - get - apiGroups: - "" resources: - configmaps resourceNames: # Defaults to "<election-id>-<ingress-class>" # Here: 
"<ingress-controller-leader>-<nginx>" # This has to be adapted if you change either parameter # when launching the nginx-ingress-controller. - "ingress-controller-leader-nginx" verbs: - get - update - apiGroups: - "" resources: - configmaps verbs: - create - apiGroups: - "" resources: - endpoints verbs: - get --- apiVersion: rbac.authorization.k8s.io/v1beta1 kind: RoleBinding metadata: name: nginx-ingress-role-nisa-binding namespace: ingress-nginx labels: app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx roleRef: apiGroup: rbac.authorization.k8s.io kind: Role name: nginx-ingress-role subjects: - kind: ServiceAccount name: nginx-ingress-serviceaccount namespace: ingress-nginx --- apiVersion: rbac.authorization.k8s.io/v1beta1 kind: ClusterRoleBinding metadata: name: nginx-ingress-clusterrole-nisa-binding labels: app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx roleRef: apiGroup: rbac.authorization.k8s.io kind: ClusterRole name: nginx-ingress-clusterrole subjects: - kind: ServiceAccount name: nginx-ingress-serviceaccount namespace: ingress-nginx --- apiVersion: apps/v1 kind: Deployment metadata: name: nginx-ingress-controller namespace: ingress-nginx labels: app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx spec: replicas: 1 selector: matchLabels: app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx template: metadata: labels: app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx annotations: prometheus.io/port: "10254" prometheus.io/scrape: "true" spec: hostNetwork: true # wait up to five minutes for the drain of connections terminationGracePeriodSeconds: 300 serviceAccountName: nginx-ingress-serviceaccount nodeSelector: kubernetes.io/os: linux containers: - name: nginx-ingress-controller image: lizhenliang/nginx-ingress-controller:0.30.0 args: - /nginx-ingress-controller - --configmap=$(POD_NAMESPACE)/nginx-configuration - 
--tcp-services-configmap=$(POD_NAMESPACE)/tcp-services - --udp-services-configmap=$(POD_NAMESPACE)/udp-services - --publish-service=$(POD_NAMESPACE)/ingress-nginx - --annotations-prefix=nginx.ingress.kubernetes.io securityContext: allowPrivilegeEscalation: true capabilities: drop: - ALL add: - NET_BIND_SERVICE # www-data -> 101 runAsUser: 101 env: - name: POD_NAME valueFrom: fieldRef: fieldPath: metadata.name - name: POD_NAMESPACE valueFrom: fieldRef: fieldPath: metadata.namespace ports: - name: http containerPort: 80 protocol: TCP - name: https containerPort: 443 protocol: TCP livenessProbe: failureThreshold: 3 httpGet: path: /healthz port: 10254 scheme: HTTP initialDelaySeconds: 10 periodSeconds: 10 successThreshold: 1 timeoutSeconds: 10 readinessProbe: failureThreshold: 3 httpGet: path: /healthz port: 10254 scheme: HTTP periodSeconds: 10 successThreshold: 1 timeoutSeconds: 10 lifecycle: preStop: exec: command: - /wait-shutdown --- apiVersion: v1 kind: LimitRange metadata: name: ingress-nginx namespace: ingress-nginx labels: app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx spec: limits: - min: memory: 90Mi cpu: 100m type: Container

Save the manifest as ingress.yaml and create the resources

    [root@master01 ~]# vi ingress.yaml
    [root@master01 ~]# kubectl create -f ingress.yaml
    namespace/ingress-nginx created
    configmap/nginx-configuration created
    configmap/tcp-services created
    configmap/udp-services created
    serviceaccount/nginx-ingress-serviceaccount created
    clusterrole.rbac.authorization.k8s.io/nginx-ingress-clusterrole created
    role.rbac.authorization.k8s.io/nginx-ingress-role created
    rolebinding.rbac.authorization.k8s.io/nginx-ingress-role-nisa-binding created
    clusterrolebinding.rbac.authorization.k8s.io/nginx-ingress-clusterrole-nisa-binding created
    deployment.apps/nginx-ingress-controller created
    limitrange/ingress-nginx created

View the Ingress controller Pod

    [root@master01 ~]# kubectl get pod -n ingress-nginx
    NAME                                       READY   STATUS    RESTARTS   AGE
    nginx-ingress-controller-766fb9f77-2mm5d   1/1     Running   0          2m44s

Configure the Ingress rule

    apiVersion: networking.k8s.io/v1beta1
    kind: Ingress
    metadata:
      name: example-ingress
    spec:
      rules:
      - host: example.ingredemo.com
        http:
          paths:
          - path: /
            backend:
              serviceName: web
              servicePort: 80

Create it

    [root@master01 ~]# kubectl create -f ingress01.yaml
    ingress.networking.k8s.io/example-ingress created
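The `networking.k8s.io/v1beta1` Ingress API used above was removed in Kubernetes 1.22. On newer clusters the same rule would be written against `networking.k8s.io/v1`, roughly as sketched below. The `ingressClassName: nginx` line is an assumption about how the controller is registered in the cluster, and a controller release that supports the v1 API would be needed.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: example-ingress
spec:
  ingressClassName: nginx       # assumption: an IngressClass named "nginx" exists
  rules:
  - host: example.ingredemo.com
    http:
      paths:
      - path: /
        pathType: Prefix        # required in v1
        backend:
          service:              # v1 nests the former serviceName/servicePort fields
            name: web
            port:
              number: 80
```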

Find the node the controller is running on

    [root@master01 ~]# kubectl get pod -n ingress-nginx -owide
    NAME                                       READY   STATUS    RESTARTS   AGE   IP              NODE     NOMINATED NODE   READINESS GATES
    nginx-ingress-controller-766fb9f77-2mm5d   1/1     Running   0          10m   192.168.43.92   work02   <none>           <none>

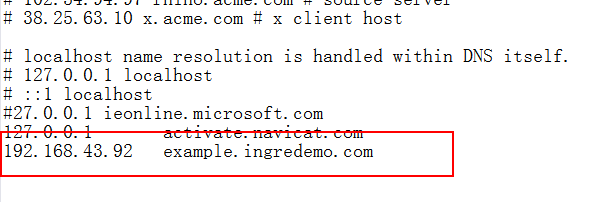

Edit the Windows hosts file so that the Ingress host name resolves to that node's IP

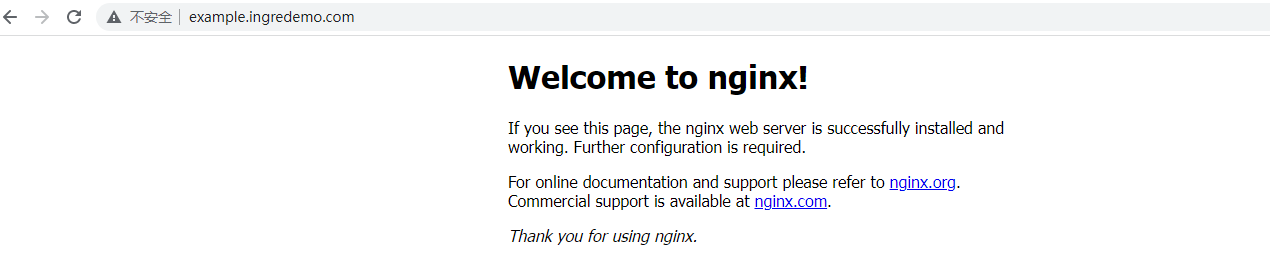

Test locally

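XIV. Helm

Helm is the package manager for Kubernetes. Like a Linux package manager, it makes it easy to deploy packaged YAML to Kubernetes.

14.1 Problems it solves:

(1) Helm manages a set of YAML files as a whole;

(2) YAML files can be reused efficiently;

(3) Application-level version management.

14.2 Three key concepts

(1) Helm

The command-line tool.

(2) Chart

The application description: a packaged collection of YAML files.

(3) Release

A deployed instance of a Chart. Each time Helm installs a Chart, it creates a corresponding Release.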
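Every Chart carries a `Chart.yaml` file that describes it. The sketch below shows the typical shape of that file for Helm 3; the chart name and version numbers are placeholder assumptions.

```yaml
apiVersion: v2                  # chart API version used by Helm 3
name: mychart                   # hypothetical chart name
description: A Helm chart for Kubernetes
type: application
version: 0.1.0                  # version of the chart itself
appVersion: "1.16.0"            # version of the application the chart deploys
```

The `version` field is what changes when the packaged YAML changes, which is the basis for the application-level version management mentioned above.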

14.3 Installation

Download, upload, and unpack

    [root@master01 helm]# tar -zxvf helm-v3.0.0-linux-amd64.tar.gz
    linux-amd64/
    linux-amd64/helm
    linux-amd64/README.md
    linux-amd64/LICENSE

Copy the binary into the PATH

    [root@master01 helm]# ls
    helm-v3.0.0-linux-amd64.tar.gz linux-amd64
    [root@master01 helm]# cd linux-amd64
    [root@master01 linux-amd64]# ls
    helm LICENSE README.md
    [root@master01 linux-amd64]# cp helm /usr/bin

14.4 Configuring repositories

Add the Azure (Microsoft) mirror repository:

    [root@master01 linux-amd64]# helm repo add stable http://mirror.azure.cn/kubernetes/charts
    "stable" has been added to your repositories

Add the Alibaba Cloud repository:

    [root@master01 ~]# helm repo add aliyun https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts
    "aliyun" has been added to your repositories

List the repositories

    [root@master01 ~]# helm repo list
    NAME    URL
    stable  http://mirror.azure.cn/kubernetes/charts
    aliyun  https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts

Update the repositories

    [root@master01 ~]# helm repo update
    Hang tight while we grab the latest from your chart repositories...
    ...Successfully got an update from the "aliyun" chart repository
    ...Successfully got an update from the "stable" chart repository
    Update Complete. ⎈ Happy Helming!⎈

Remove a repository

    [root@master01 ~]# helm repo remove stable
    "stable" has been removed from your repositories

14.5 Deploying an application quickly with Helm

Search for the application

    [root@master01 ~]# helm search repo weave
    NAME                CHART VERSION  APP VERSION  DESCRIPTION
    aliyun/weave-cloud  0.1.2                       Weave Cloud is a add-on to Kubernetes which pro...
    aliyun/weave-scope  0.9.2          1.6.5        A Helm chart for the Weave Scope cluster visual...

Install the chart that was found

    [root@master01 ~]# helm install myui aliyun/weave-scope
    Error: unable to build kubernetes objects from release manifest: [unable to recognize no matches for kind "Deployment" in version "apps/v1beta1", unable to recognize no matches for kind "DaemonSet" in version "extensions/v1beta1"]

The install fails because the chart in this repository uses API versions the cluster no longer serves. The fix:

switch to another repository

    [root@master01 ~]# helm repo remove aliyun
    "aliyun" has been removed from your repositories
    [root@master01 ~]# helm repo add stable http://mirror.azure.cn/kubernetes/charts
    "stable" has been added to your repositories

Install again

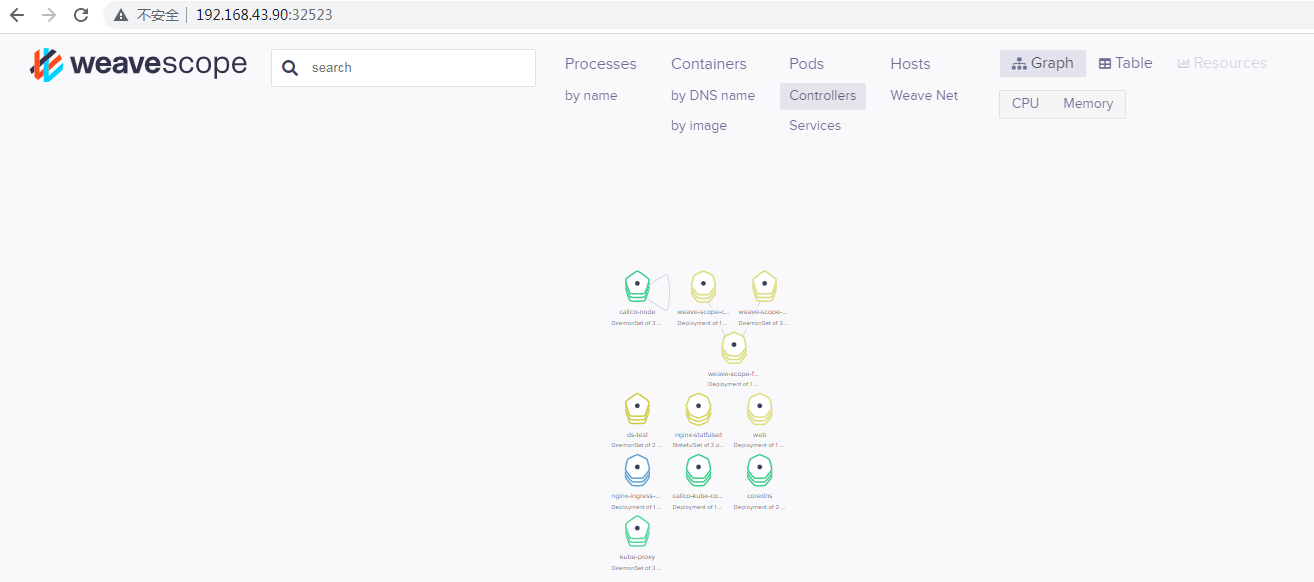

    [root@master01 ~]# helm install myui stable/weave-scope
    NAME: myui
    LAST DEPLOYED: Wed Jun 7 10:27:14 2023
    NAMESPACE: default
    STATUS: deployed
    REVISION: 1
    NOTES:
    You should now be able to access the Scope frontend in your web browser, by
    using kubectl port-forward:
    kubectl -n default port-forward $(kubectl -n default get endpoints \
    myui-weave-scope -o jsonpath='{.subsets[0].addresses[0].targetRef.name}') 8080:4040
    then browsing to http://localhost:8080/.
    For more details on using Weave Scope, see the Weave Scope documentation:
    https://www.weave.works/docs/scope/latest/introducing/

Check the release

    [root@master01 ~]# helm list
    NAME  NAMESPACE  REVISION  UPDATED                                  STATUS    CHART               APP VERSION
    myui  default    1         2023-06-07 10:27:14.579747015 +0800 CST  deployed  weave-scope-1.1.12  1.12.0

    [root@master01 ~]# helm status myui
    NAME: myui
    LAST DEPLOYED: Wed Jun 7 10:27:14 2023
    NAMESPACE: default
    STATUS: deployed
    REVISION: 1
    NOTES:
    You should now be able to access the Scope frontend in your web browser, by
    using kubectl port-forward:
    kubectl -n default port-forward $(kubectl -n default get endpoints \
    myui-weave-scope -o jsonpath='{.subsets[0].addresses[0].targetRef.name}') 8080:4040
    then browsing to http://localhost:8080/.
    For more details on using Weave Scope, see the Weave Scope documentation:
    https://www.weave.works/docs/scope/latest/introducing/

Change the Service type

    [root@master01 ~]# kubectl edit service myui-weave-scope

Change the Service's type to NodePort, then confirm:

    [root@master01 ~]# kubectl get service
    NAME               TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)        AGE
    kubernetes         ClusterIP   10.96.0.1      <none>        443/TCP        13d
    myui-weave-scope   NodePort    10.97.121.55   <none>        80:32523/TCP   11m

Access the deployed application:

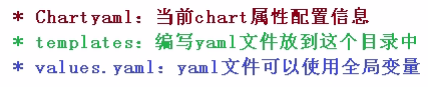

14.6 Creating your own Chart

    [root@master01 ~]# helm create mychart
    Creating mychart
    [root@master01 ~]# cd mychart/
    [root@master01 mychart]# ls
    charts Chart.yaml templates values.yaml

Go into templates and create your own YAML files. This step only serves to generate the two YAML files.

    [root@master01 templates]# kubectl create deployment web1 --image=nginx --dry-run -o yaml>deployment.yaml
    [root@master01 templates]# kubectl create -f deployment.yaml
    deployment.apps/web1 created
    [root@master01 templates]# kubectl expose deployment web1 --port=80 --target-port=80 --dry-run -o yaml>service.yaml
    W0607 11:00:06.512588 32868 helpers.go:535] --dry-run is deprecated and can be replaced with --dry-run=clien
    [root@master01 templates]# kubectl delete -f deployment.yaml
    deployment.apps "web1" deleted

Install mychart (mychart in the command is the name of the directory created earlier)

    [root@master01 ~]# helm install web1 mychart
    NAME: web1
    LAST DEPLOYED: Wed Jun 7 11:04:32 2023
    NAMESPACE: default
    STATUS: deployed
    REVISION: 1

Upgrade the application

    [root@master01 ~]# helm upgrade web1 mychart
    Release "web1" has been upgraded. Happy Helming!
    NAME: web1
    LAST DEPLOYED: Wed Jun 7 11:15:50 2023
    NAMESPACE: default
    STATUS: deployed
    REVISION: 2

14.7 Reusing Helm files efficiently

    [root@master01 ~]# cd mychart/
    [root@master01 mychart]# ls
    charts Chart.yaml templates values.yaml
    [root@master01 mychart]# vi values.yaml

    replicas: 1
    image: nginx
    tag: 1.16
    label: nginx
    port: 80

Edit the YAML files under templates so that parameters reference these variables

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      creationTimestamp: null
      labels:
        app: web1
      name: {{ .Release.Name}}-deploy
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: {{ .Values.label}}
      strategy: {}
      template:
        metadata:
          creationTimestamp: null
          labels:
            app: {{ .Values.label}}
        spec:
          containers:
          - image: {{ .Values.image}}
            name: nginx
            resources: {}
    status: {}

Edit the Service in the same way (the object is .Values, with a trailing s)

    apiVersion: v1
    kind: Service
    metadata:
      creationTimestamp: null
      labels:
        app: web1
      name: {{ .Release.Name}}-svc
    spec:
      ports:
      - port: {{ .Values.port}}
        protocol: TCP
        targetPort: 80
      selector:
        app: {{ .Values.label}}
    status:
      loadBalancer: {}

Test with a dry run

    [root@master01 ~]# helm install --dry-run web2 mychart
    NAME: web2
    LAST DEPLOYED: Wed Jun 7 12:00:51 2023
    NAMESPACE: default
    STATUS: pending-install
    REVISION: 1
    TEST SUITE: None
    HOOKS:
    MANIFEST:
    ---
    # Source: mychart/templates/service.yaml
    apiVersion: v1
    kind: Service
    metadata:
      creationTimestamp: null
      labels:
        app: web1
      name: web2-svc
    spec:
      ports:
      - port: 80
        protocol: TCP
        targetPort: 80
      selector:
        app: nginx
    status:
      loadBalancer: {}
    ---
    # Source: mychart/templates/deployment.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      creationTimestamp: null
      labels:
        app: web1
      name: web2-deploy
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: nginx
      strategy: {}
      template:
        metadata:
          creationTimestamp: null
          labels:
            app: nginx
        spec:
          containers:
          - image: nginx
            name: nginx
            resources: {}
    status: {}

Create a new release

    [root@master01 ~]# helm install web2 mychart
    NAME: web2
    LAST DEPLOYED: Wed Jun 7 14:26:15 2023
    NAMESPACE: default
    STATUS: deployed
    REVISION: 1
    TEST SUITE: None
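The values file above also defines `replicas` and `tag`, which the Deployment template does not reference yet. A possible variant of the template that uses them is sketched below; it is an illustration of the templating mechanism rather than the file used in the session above.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: {{ .Release.Name }}-deploy
spec:
  replicas: {{ .Values.replicas }}          # from values.yaml instead of a hard-coded 1
  selector:
    matchLabels:
      app: {{ .Values.label }}
  template:
    metadata:
      labels:
        app: {{ .Values.label }}
    spec:
      containers:
      - name: nginx
        # Image name and tag are combined, e.g. nginx:1.16 with the values above.
        image: "{{ .Values.image }}:{{ .Values.tag }}"
        ports:
        - containerPort: {{ .Values.port }}
```

Individual values can then be overridden per release without editing the chart, for example with `helm install web3 mychart --set replicas=3`, where `web3` is a hypothetical release name.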

XV. Persistent storage

15.1 NFS

Pick a server to act as the NFS server

    yum install nfs-utils

Set up the export path

    mkdir -p /data/nfs
    vi /etc/exports

    /data/nfs *(rw,no_root_squash)

Once installation is complete, start the service

    systemctl start nfs

Install the NFS client on the Kubernetes nodes

    yum install -y nfs-utils

Create a Deployment that mounts the NFS export

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-nfs
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx
            volumeMounts:
            - name: wwwroot
              mountPath: /usr/share/nginx/html
            ports:
            - containerPort: 80
          volumes:
          - name: wwwroot
            nfs:
              server: 192.168.43.80
              path: /data/nfs

On the server, create an index.html under /data/nfs

    [root@master nfs]# ls
    [root@master nfs]# vi index.html

Then enter the Pod and confirm that the file is there

    [root@master01 pv]# kubectl exec -it nginx-nfs-7b4899bd85-ww6dn bash
    kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl kubectl exec [POD] -- [COMMAND] instead.
    root@nginx-nfs-7b4899bd85-ww6dn:/# cd /usr/share/nginx/html
    root@nginx-nfs-7b4899bd85-ww6dn:/usr/share/nginx/html# ls
    index.html

15.2 PV and PVC

PV (PersistentVolume): persistent storage. It abstracts the storage resource and exposes it for consumption;

PVC (PersistentVolumeClaim): a user's claim on storage. The user consumes storage through it without needing to know how the storage is implemented;

Create the PV

    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: my-pv
    spec:
      capacity:
        storage: 5Gi
      accessModes:
      - ReadWriteMany
      nfs:
        path: /k8s/nfs
        server: 192.168.43.80

Create the PVC

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: my-pvc
    spec:
      accessModes:
      - ReadWriteMany
      resources:
        requests:
          storage: 5Gi

Create an application that uses the claim

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-dep1
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx
            volumeMounts:
            - name: wwwroot
              mountPath: /usr/share/nginx/html
            ports:
            - containerPort: 80
          volumes:
          - name: wwwroot
            persistentVolumeClaim:
              claimName: my-pvc

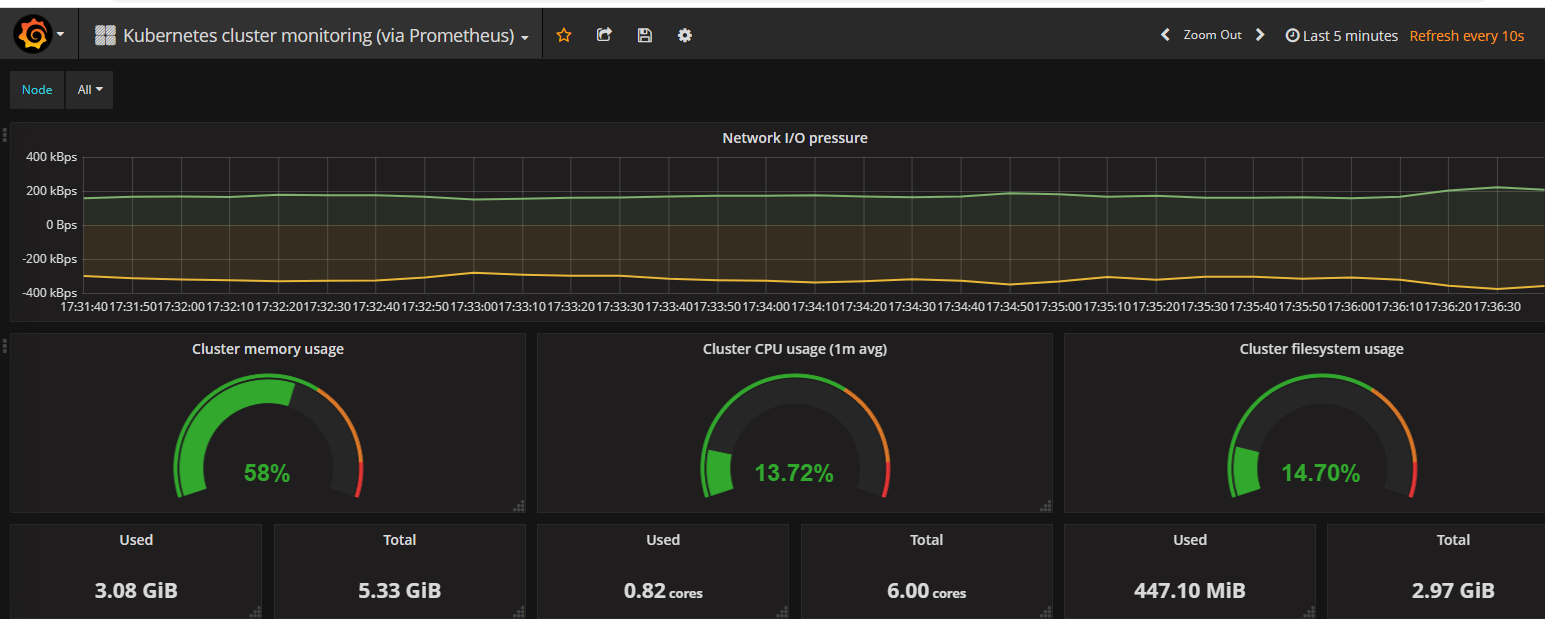

XVI. Resource monitoring

16.1 Deploying Prometheus

Deploy node-exporter as a DaemonSet

    ---
    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: node-exporter
      namespace: kube-system
      labels:
        k8s-app: node-exporter
    spec:
      selector:
        matchLabels:
          k8s-app: node-exporter
      template:
        metadata:
          labels:
            k8s-app: node-exporter
        spec:
          containers:
          - image: prom/node-exporter
            name: node-exporter
            ports:
            - containerPort: 9100
              protocol: TCP
              name: http
    ---
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        k8s-app: node-exporter
      name: node-exporter
      namespace: kube-system
    spec:
      ports:
      - name: http
        port: 9100
        nodePort: 31672
        protocol: TCP
      type: NodePort
      selector:
        k8s-app: node-exporter

RBAC

    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      name: prometheus
    rules:
    - apiGroups: [""]
      resources:
      - nodes
      - nodes/proxy
      - services
      - endpoints
      - pods
      verbs: ["get", "list", "watch"]
    - apiGroups:
      - extensions
      resources:
      - ingresses
      verbs: ["get", "list", "watch"]
    - nonResourceURLs: ["/metrics"]
      verbs: ["get"]
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: prometheus
      namespace: kube-system
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: prometheus
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: prometheus
    subjects:
    - kind: ServiceAccount
      name: prometheus
      namespace: kube-system

ConfigMap

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: prometheus-config
      namespace: kube-system
    data:
      prometheus.yml: |
        global:
          scrape_interval: 15s
          evaluation_interval: 15s
        scrape_configs:
        - job_name: 'kubernetes-apiservers'
          kubernetes_sd_configs:
          - role: endpoints
          scheme: https
          tls_config:
            ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
          bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
          relabel_configs:
          - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
            action: keep
            regex: default;kubernetes;https
        - job_name: 'kubernetes-nodes'
          kubernetes_sd_configs:
          - role: node
          scheme: https
          tls_config:
            ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
          bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
          relabel_configs:
          - action: labelmap
            regex: __meta_kubernetes_node_label_(.+)
          - target_label: __address__
            replacement: kubernetes.default.svc:443
          - source_labels: [__meta_kubernetes_node_name]
            regex: (.+)
            target_label: __metrics_path__
            replacement: /api/v1/nodes/${1}/proxy/metrics
        - job_name: 'kubernetes-cadvisor'
          kubernetes_sd_configs:
          - role: node
          scheme: https
          tls_config:
            ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
          bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
          relabel_configs:
          - action: labelmap
            regex: __meta_kubernetes_node_label_(.+)
          - target_label: __address__
            replacement: kubernetes.default.svc:443
          - source_labels: [__meta_kubernetes_node_name]
            regex: (.+)
            target_label: __metrics_path__
            replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor
        - job_name: 'kubernetes-service-endpoints'
          kubernetes_sd_configs:
          - role: endpoints
          relabel_configs:
          - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape]
            action: keep
            regex: true
          - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scheme]
            action: replace
            target_label: __scheme__
            regex: (https?)
          - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_path]
            action: replace
            target_label: __metrics_path__
            regex: (.+)
          - source_labels: [__address__, __meta_kubernetes_service_annotation_prometheus_io_port]
            action: replace
            target_label: __address__
            regex: ([^:]+)(?::\d+)?;(\d+)
            replacement: $1:$2
          - action: labelmap
            regex: __meta_kubernetes_service_label_(.+)
          - source_labels: [__meta_kubernetes_namespace]
            action: replace
            target_label: kubernetes_namespace
          - source_labels: [__meta_kubernetes_service_name]
            action: replace
            target_label: kubernetes_name
        - job_name: 'kubernetes-services'
          kubernetes_sd_configs:
          - role: service
          metrics_path: /probe
          params:
            module: [http_2xx]
          relabel_configs:
          - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_probe]
            action: keep
            regex: true
          - source_labels: [__address__]
            target_label: __param_target
          - target_label: __address__
            replacement: blackbox-exporter.example.com:9115
          - source_labels: [__param_target]
            target_label: instance
          - action: labelmap
            regex: __meta_kubernetes_service_label_(.+)
          - source_labels: [__meta_kubernetes_namespace]
            target_label: kubernetes_namespace
          - source_labels: [__meta_kubernetes_service_name]
            target_label: kubernetes_name
        - job_name: 'kubernetes-ingresses'
          kubernetes_sd_configs:
          - role: ingress
          relabel_configs:
          - source_labels: [__meta_kubernetes_ingress_annotation_prometheus_io_probe]
            action: keep
            regex: true
          - source_labels: [__meta_kubernetes_ingress_scheme,__address__,__meta_kubernetes_ingress_path]
            regex: (.+);(.+);(.+)
            replacement: ${1}://${2}${3}
            target_label: __param_target
          - target_label: __address__
            replacement: blackbox-exporter.example.com:9115
          - source_labels: [__param_target]
            target_label: instance
          - action: labelmap
            regex: __meta_kubernetes_ingress_label_(.+)
          - source_labels: [__meta_kubernetes_namespace]
            target_label: kubernetes_namespace
          - source_labels: [__meta_kubernetes_ingress_name]
            target_label: kubernetes_name
        - job_name: 'kubernetes-pods'
          kubernetes_sd_configs:
          - role: pod
          relabel_configs:
          - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]
            action: keep
            regex: true
          - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]
            action: replace
            target_label: __metrics_path__
            regex: (.+)
          - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
            action: replace
            regex: ([^:]+)(?::\d+)?;(\d+)
            replacement: $1:$2
            target_label: __address__
          - action: labelmap
            regex: __meta_kubernetes_pod_label_(.+)
          - source_labels: [__meta_kubernetes_namespace]
            action: replace
            target_label: kubernetes_namespace
          - source_labels: [__meta_kubernetes_pod_name]
            action: replace
            target_label: kubernetes_pod_name
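The relabel rules in this ConfigMap are easier to follow with a small simulation. The sketch below is not Prometheus code; it only imitates, in Python, how a `replace` rule joins its `source_labels` with `;`, matches the fully anchored regex, and rewrites `__address__`, and how a `labelmap` rule copies matching label names. The function names `relabel_address` and `labelmap` and the sample addresses are hypothetical, chosen for illustration.

```python
import re
from typing import Dict


def relabel_address(address: str, port_annotation: str) -> str:
    """Imitate the 'replace' rule that rewrites __address__.

    The source labels are joined with ';' and matched against the whole
    regex ([^:]+)(?::\\d+)?;(\\d+). On a match the result is "$1:$2";
    otherwise the label is left unchanged.
    """
    joined = ";".join([address, port_annotation])
    match = re.fullmatch(r"([^:]+)(?::\d+)?;(\d+)", joined)
    if match is None:
        return address
    return f"{match.group(1)}:{match.group(2)}"


def labelmap(labels: Dict[str, str], pattern: str) -> Dict[str, str]:
    """Imitate 'labelmap': copy every label whose name matches the
    pattern to a new label named after capture group 1."""
    result = dict(labels)
    for name, value in labels.items():
        match = re.fullmatch(pattern, name)
        if match is not None:
            result[match.group(1)] = value
    return result


# Hypothetical target: a pod at 10.244.1.5:8080 annotated with port 9102.
print(relabel_address("10.244.1.5:8080", "9102"))
print(labelmap({"__meta_kubernetes_pod_label_app": "web"},
               r"__meta_kubernetes_pod_label_(.+)"))
```

When the port annotation is missing, the joined string ends with `;`, the regex does not match, and the original address is kept, which is why unannotated targets are scraped on their discovered port.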

deployment

    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        name: prometheus-deployment
      name: prometheus
      namespace: kube-system
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: prometheus
      template:
        metadata:
          labels:
            app: prometheus
        spec:
          containers:
          - image: prom/prometheus:v2.0.0
            name: prometheus
            command:
            - "/bin/prometheus"
            args:
            - "--config.file=/etc/prometheus/prometheus.yml"
            - "--storage.tsdb.path=/prometheus"
            - "--storage.tsdb.retention=24h"
            ports:
            - containerPort: 9090
              protocol: TCP
            volumeMounts:
            - mountPath: "/prometheus"
              name: data
            - mountPath: "/etc/prometheus"
              name: config-volume
            resources:
              requests:
                cpu: 100m
                memory: 100Mi
              limits:
                cpu: 500m
                memory: 2500Mi
          serviceAccountName: prometheus
          volumes:
          - name: data
            emptyDir: {}
          - name: config-volume
            configMap:
              name: prometheus-config

service

    kind: Service
    apiVersion: v1
    metadata:
      labels:
        app: prometheus
      name: prometheus
      namespace: kube-system
    spec:
      type: NodePort
      ports:
      - port: 9090
        targetPort: 9090
        nodePort: 30003
      selector:
        app: prometheus

Check the pods

    [root@master01 prometheus]# kubectl get pod -n kube-system
    NAME                                       READY   STATUS    RESTARTS   AGE
    calico-kube-controllers-65f8bc95db-tlwqv   1/1     Running   9          13d
    calico-node-52rhs                          1/1     Running   9          13d
    calico-node-8cqp4                          1/1     Running   9          13d
    calico-node-b5dwh                          1/1     Running   9          13d
    coredns-7ff77c879f-b9nhr                   1/1     Running   9          13d
    coredns-7ff77c879f-pfhzm                   1/1     Running   9          13d
    etcd-master01                              1/1     Running   9          13d
    kube-apiserver-master01                    1/1     Running   9          13d
    kube-controller-manager-master01           1/1     Running   9          13d
    kube-proxy-gns5n                           1/1     Running   9          13d
    kube-proxy-kz9h4                           1/1     Running   9          13d
    kube-proxy-x6vqb                           1/1     Running   9          13d
    kube-scheduler-master01                    1/1     Running   9          13d
    node-exporter-58xnc                        1/1     Running   0          31m
    node-exporter-nsw56                        1/1     Running   0          31m
    prometheus-7486bf7f4b-hk8np                1/1     Running   0          4m9s

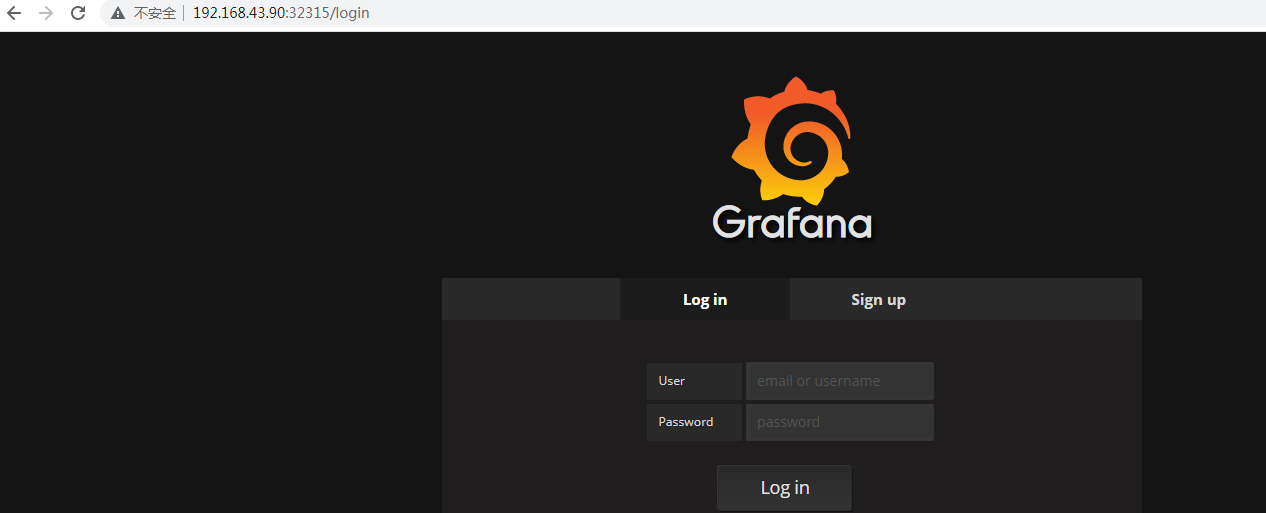

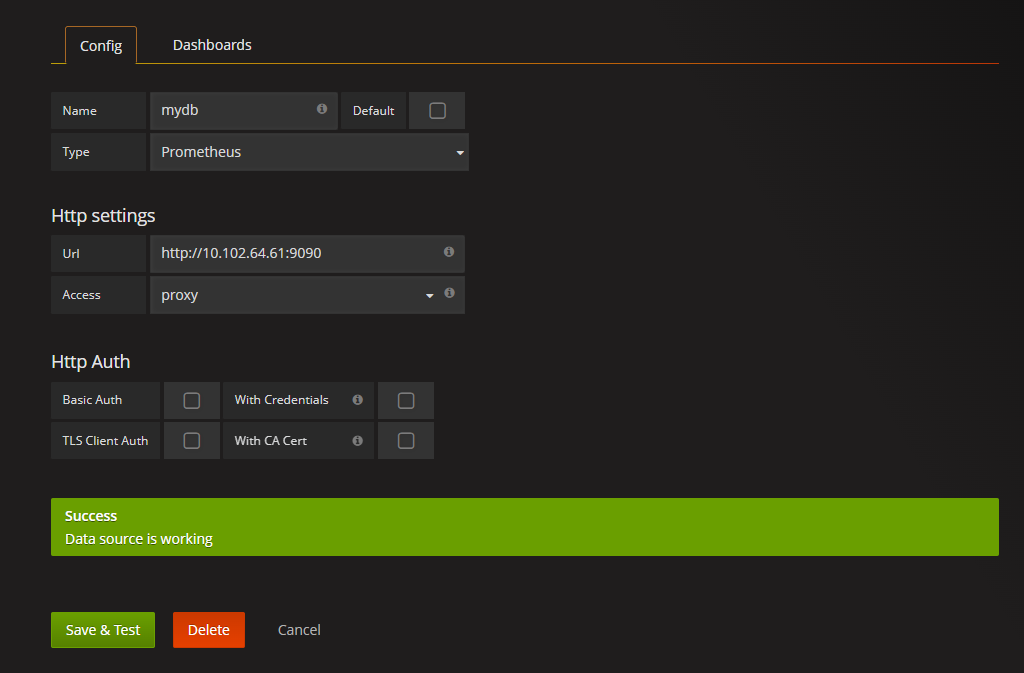

16.2 Deploy Grafana

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: grafana-core
      namespace: kube-system
      labels:
        app: grafana
        component: core
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: grafana
          component: core
      template:
        metadata:
          labels:
            app: grafana
            component: core
        spec:
          containers:
          - image: grafana/grafana:4.2.0
            name: grafana-core
            imagePullPolicy: IfNotPresent
            # env:
            resources:
              # keep request = limit to keep this container in guaranteed class
              limits:
                cpu: 100m
                memory: 100Mi
              requests:
                cpu: 100m
                memory: 100Mi
            env:
              # The following env variables set up basic auth with the default admin user and admin password.
              - name: GF_AUTH_BASIC_ENABLED
                value: "true"
              - name: GF_AUTH_ANONYMOUS_ENABLED
                value: "false"
              # - name: GF_AUTH_ANONYMOUS_ORG_ROLE
              #   value: Admin
              # does not really work, because of template variables in exported dashboards:
              # - name: GF_DASHBOARDS_JSON_ENABLED
              #   value: "true"
            readinessProbe:
              httpGet:
                path: /login
                port: 3000
              # initialDelaySeconds: 30
              # timeoutSeconds: 1
            volumeMounts:
            - name: grafana-persistent-storage
              mountPath: /var
          volumes:
          - name: grafana-persistent-storage
            emptyDir: {}

ingress

    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      name: grafana
      namespace: kube-system
    spec:
      rules:
      - host: k8s.grafana
        http:
          paths:
          - path: /
            backend:
              serviceName: grafana
              servicePort: 3000

service

    apiVersion: v1
    kind: Service
    metadata:
      name: grafana
      namespace: kube-system
      labels:
        app: grafana
        component: core
    spec:
      type: NodePort
      ports:
      - port: 3000
      selector:
        app: grafana
        component: core

Log in to Grafana

    [root@master01 grafana]# kubectl get service -owide -n kube-system
    NAME            TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)                  AGE     SELECTOR
    grafana         NodePort    10.107.69.160   <none>        3000:32315/TCP           3m13s   app=grafana,component=core
    kube-dns        ClusterIP   10.96.0.10      <none>        53/UDP,53/TCP,9153/TCP   13d     k8s-app=kube-dns
    node-exporter   NodePort    10.99.183.46    <none>        9100:31672/TCP           52m     k8s-app=node-exporter
    prometheus      NodePort    10.102.64.61    <none>        9090:30003/TCP           23m     app=prometheus

Default username and password: admin / admin
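In the service listing above, a NodePort service shows its ports as `port:nodePort/protocol`, so Grafana's `3000:32315/TCP` means the UI is reachable on port 32315 of any node. The sketch below parses that column and builds the browser URL; the helper name `node_port` and the node IP `192.168.1.10` are hypothetical placeholders, not values from this cluster.

```python
from typing import Optional


def node_port(ports_column: str, service_port: int) -> Optional[int]:
    """Return the NodePort mapped to service_port, or None if the
    entry has no 'port:nodePort' mapping (e.g. a ClusterIP service)."""
    for entry in ports_column.split(","):
        mapping, _, _protocol = entry.partition("/")
        port, separator, mapped = mapping.partition(":")
        if separator and int(port) == service_port:
            return int(mapped)
    return None


# PORT(S) values copied from the kubectl output above.
grafana_port = node_port("3000:32315/TCP", 3000)
prometheus_port = node_port("9090:30003/TCP", 9090)

# 192.168.1.10 is an assumed node IP; substitute a real node address.
print(f"http://192.168.1.10:{grafana_port}")
print(f"http://192.168.1.10:{prometheus_port}")
```

The Grafana Service manifest does not set `nodePort`, so Kubernetes picks one at random from the NodePort range; that is why it has to be read from `kubectl get service` rather than from the YAML.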

Add a data source

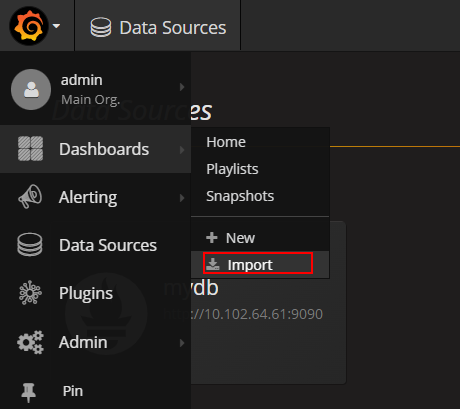

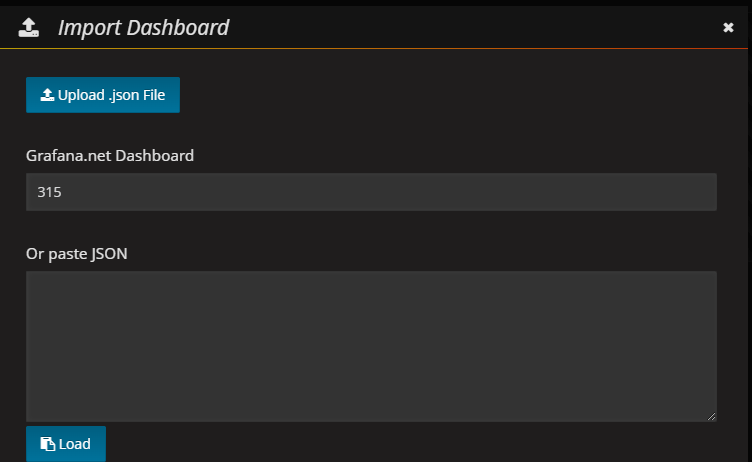
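The screenshots above add the data source through the Grafana UI. As a rough sketch of what the form captures, the snippet below builds the equivalent settings as a JSON document of the kind Grafana's data source HTTP API (`/api/datasources`) accepts. It only constructs and prints the payload and sends nothing. The URL `http://prometheus.kube-system.svc:9090` is an assumption derived from the Service name, namespace, and port in the manifests above, and the helper name `prometheus_datasource` is hypothetical.

```python
import json
from typing import Any, Dict


def prometheus_datasource(name: str, url: str) -> Dict[str, Any]:
    """Build data source settings mirroring the UI form fields."""
    return {
        "name": name,
        "type": "prometheus",
        "url": url,
        # "proxy" means the Grafana server, not the browser, queries Prometheus.
        "access": "proxy",
        "isDefault": True,
    }


# Assumed in-cluster address of the prometheus Service in kube-system.
payload = prometheus_datasource(
    "prometheus", "http://prometheus.kube-system.svc:9090"
)
print(json.dumps(payload, indent=2))
```

With proxy access the in-cluster Service address is enough, because the Grafana pod resolves it through cluster DNS; with direct browser access the NodePort address would be needed instead.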

Add a dashboard template

17. Project deployment

