Setting up Kubernetes

Use kubeadm to set up an environment with one master and two worker nodes:

1. Host planning

| Role | IP address | OS | Specs |
| --- | --- | --- | --- |
| MASTER | 192.168.1.10 | CentOS 7.5 | 2 CPU, 2 GB RAM, 50 GB disk |
| NODE1 | 192.168.1.11 | CentOS 7.5 | 2 CPU, 2 GB RAM, 50 GB disk |
| NODE2 | 192.168.1.12 | CentOS 7.5 | 2 CPU, 2 GB RAM, 50 GB disk |

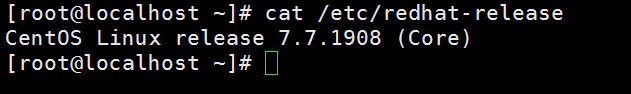

2. Check the OS version

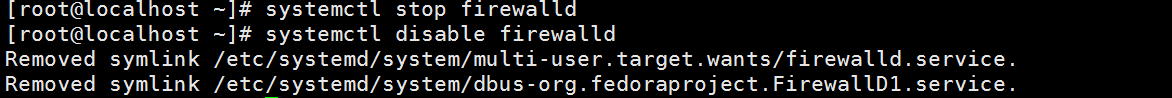

3. Disable the firewall
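The original gives no commands for this step. On CentOS 7 the default firewall service is firewalld, so a minimal sketch, assuming firewalld is what is running, might look like the following. The `run` helper, the `DRY_RUN` variable, and the function name are hypothetical, added only so the commands can be previewed before they are executed.

```shell
# Hypothetical helper: print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Stop firewalld now and keep it from starting at boot.
# Assumption: firewalld is the active firewall service on the host.
disable_firewalld() {
  run systemctl stop firewalld
  run systemctl disable firewalld
}

# Usage (as root, on all three hosts):
#   disable_firewalld
```

Setting `DRY_RUN=1` before calling the function only prints the two systemctl commands.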

4. Disable SELinux

[root@localhost ~]# vi /etc/selinux/config
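The edit made in vi is not shown. Assuming it is the usual one for a kubeadm setup on CentOS 7, namely changing the `SELINUX=` line to `SELINUX=disabled`, a non-interactive sketch is below. `disable_selinux_config` is a hypothetical helper name; the file path is a parameter so the edit can be tried on a copy first.

```shell
# Rewrite the SELINUX= line of the given config file to "disabled".
# The SELINUXTYPE= line is not touched. A backup is kept with a .bak suffix.
disable_selinux_config() {
  sed -i.bak 's/^SELINUX=.*/SELINUX=disabled/' "$1"
}

# Usage (as root, on all three hosts):
#   disable_selinux_config /etc/selinux/config
#   setenforce 0    # switch to permissive mode until the next reboot
```

The change in the config file is only read at boot, which is why `setenforce 0` is suggested for the running system.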

5. Set the hostname (run the matching command on each host)

[root@localhost ~]# hostnamectl set-hostname k8s-master

[root@localhost ~]# hostnamectl set-hostname k8s-node1

[root@localhost ~]# hostnamectl set-hostname k8s-node2

6. Hostname resolution

cat >> /etc/hosts << EOF

192.168.1.10 k8s-master

192.168.1.11 k8s-node1

192.168.1.12 k8s-node2

EOF
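Because `cat >>` appends unconditionally, running the block a second time leaves duplicate entries in /etc/hosts. An idempotent variant could look like this sketch; `add_host_entry` is a hypothetical helper, and the addresses in the usage comment are the ones from the host planning table.

```shell
# Append "IP NAME" to a hosts file only if NAME is not already listed
# as a hostname on a non-comment line.
add_host_entry() {
  hosts_file="$1"
  ip="$2"
  name="$3"
  if ! awk -v n="$name" '
      $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
      END { exit !found }' "$hosts_file"; then
    printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
  fi
}

# Usage (as root, on all three hosts):
#   add_host_entry /etc/hosts 192.168.1.10 k8s-master
#   add_host_entry /etc/hosts 192.168.1.11 k8s-node1
#   add_host_entry /etc/hosts 192.168.1.12 k8s-node2
```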

7. Configure an online yum repository

[root@localhost yum.repos.d]# vi /etc/yum.repos.d/centos.repo

[kub]

name=kub

baseurl=http://mirrors.aliyun.com/centos/7/os/x86_64/

enabled=1

gpgcheck=0

8. Install time synchronization

[root@localhost yum.repos.d]# yum install ntpdate -y

[root@localhost yum.repos.d]# ntpdate time.windows.com

9. Disable the swap partition (comment out the swap line in /etc/fstab)

[root@localhost yum.repos.d]# vi /etc/fstab

#/dev/mapper/centos-swap swap swap defaults 0 0

A reboot is required for this change to take effect.
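The /etc/fstab edit is only read at boot. As a sketch, the same edit can be scripted, and swap can also be turned off for the running system with `swapoff -a`. `comment_out_swap` is a hypothetical helper name, and the file path is a parameter so the edit can be tried on a copy first.

```shell
# Comment out every active swap entry in an fstab-style file.
# Lines that are already commented are left alone. A .bak backup is kept.
comment_out_swap() {
  sed -i.bak '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$1"
}

# Usage (as root, on all three hosts):
#   comment_out_swap /etc/fstab
#   swapoff -a      # turn swap off immediately for the running system
```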

10. Allow iptables to see bridged traffic (run on all three hosts)

cat > /etc/sysctl.d/k8s.conf << EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

vm.swappiness = 0

EOF

Load the br_netfilter module:

modprobe br_netfilter

Check that it loaded:

lsmod | grep br_netfilter

Apply the settings:

[root@k8s-node2 ~]# sudo sysctl --system

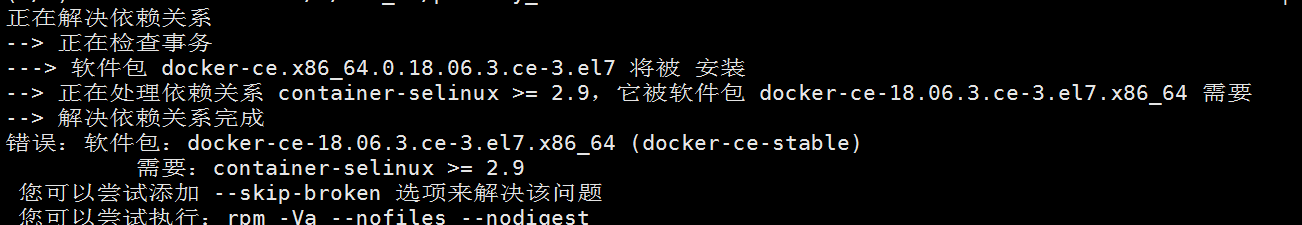
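`modprobe` loads the module only for the current boot. A minimal sketch for making it persistent, assuming the systemd modules-load.d mechanism that CentOS 7 ships with, is shown below. `persist_module` and the file name `k8s.conf` are illustrative choices, not part of the original steps.

```shell
# Write a modules-load.d entry so the named kernel module is loaded at every boot.
# The target directory defaults to /etc/modules-load.d; the second argument
# overrides it, which is useful for a trial run.
persist_module() {
  module="$1"
  conf_dir="${2:-/etc/modules-load.d}"
  printf '%s\n' "$module" > "${conf_dir}/k8s.conf"
}

# Usage (as root, on all three hosts):
#   persist_module br_netfilter
```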

11. Install Docker

yum -y install docker-ce-18.06.3.ce-3.el7

This produced the following error:

Error: Package: docker-ce-18.06.3.ce-3.el7.x86_64 (docker-ce-stable)

Requires: container-selinux >= 2.9

You could try using --skip-broken to work around the problem

You could try running: rpm -Va --nofiles --nodigest

Fix:

$ wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

$ yum install epel-release -y

$ yum install container-selinux -y

Then install again:

yum -y install docker-ce-18.06.3.ce-3.el7

Enable Docker at boot and start it:

systemctl enable docker && systemctl start docker

12. Configure a Docker registry mirror

sudo mkdir -p /etc/docker

sudo tee /etc/docker/daemon.json <<-'EOF'

{

"exec-opts": ["native.cgroupdriver=systemd"],

"registry-mirrors": ["https://du3ia00u.mirror.aliyuncs.com"],

"live-restore": true,

"log-driver":"json-file",

"log-opts": {"max-size":"500m", "max-file":"3"},

"storage-driver": "overlay2"

}

EOF

[root@k8s-node2 yum.repos.d]# sudo systemctl daemon-reload

[root@k8s-node2 yum.repos.d]# sudo systemctl restart docker

13. Add the Aliyun Kubernetes yum repository

cat > /etc/yum.repos.d/kubernetes.repo << EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

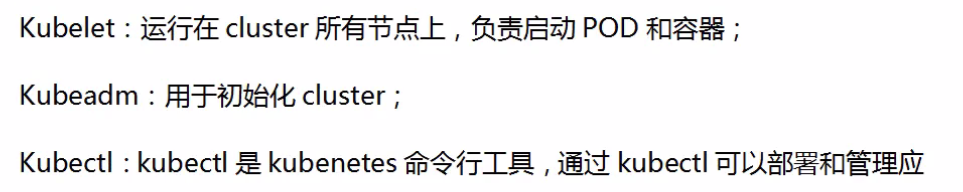

14. Install kubeadm, kubelet, and kubectl (all nodes)

yum install -y kubelet-1.20.0 kubeadm-1.20.0 kubectl-1.20.0

After installation, verify the packages:

[root@k8s-node2 ~]# yum list installed | grep kubelet

kubelet.x86_64 1.20.0-0 @kubernetes

[root@k8s-node2 ~]# yum list installed | grep kubeadm

kubeadm.x86_64 1.20.0-0 @kubernetes

[root@k8s-node2 ~]# yum list installed | grep kubectl

kubectl.x86_64 1.20.0-0

Enable kubelet at boot:

systemctl enable kubelet && systemctl start kubelet

15. Initialize the master node (run on the master only)

kubeadm init --apiserver-advertise-address=192.168.1.10 --image-repository registry.aliyuncs.com/google_containers --kubernetes-version v1.20.0 --service-cidr=10.1.0.0/16 --pod-network-cidr=10.244.0.0/16

Initialization succeeded:

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.1.10:6443 --token 7kdewg.3p8lbmf08ztc53q3 \

--discovery-token-ca-cert-hash sha256:943e5e468da78903324f3712d59dac1a7a274b4aabac41809a0303762f8902ad

16. Configure kubectl (run on the master only)

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

17. Join the worker nodes (run on both worker nodes)

[root@k8s-node1 yum.repos.d]# kubeadm join 192.168.1.10:6443 --token 7kdewg.3p8lbmf08ztc53q3 \

> --discovery-token-ca-cert-hash sha256:943e5e468da78903324f3712d59dac1a7a274b4aabac41809a0303762f8902ad

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

Node 2:

[root@k8s-node2 yum.repos.d]# kubeadm join 192.168.1.10:6443 --token 7kdewg.3p8lbmf08ztc53q3 \

> --discovery-token-ca-cert-hash sha256:943e5e468da78903324f3712d59dac1a7a274b4aabac41809a0303762f8902ad

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

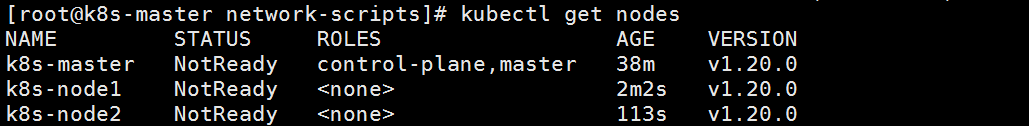

18. Check node status

kubectl get nodes

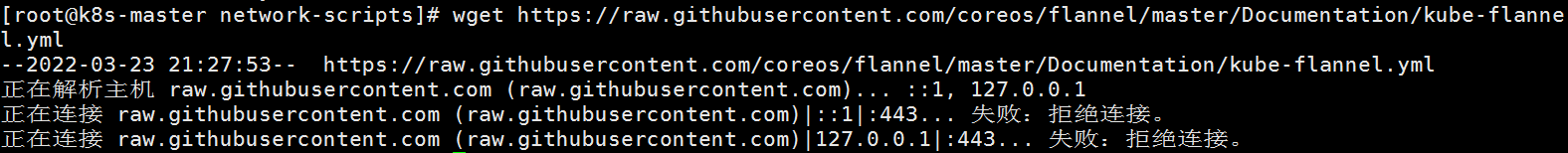

19. Install the CNI network plugin

wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

The download failed with an error.

I copied the manifest from the web instead and saved it as kube-flannel.yml:

---
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: psp.flannel.unprivileged
  annotations:
    seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default
    seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default
    apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default
    apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default
spec:
  privileged: false
  volumes:
  - configMap
  - secret
  - emptyDir
  - hostPath
  allowedHostPaths:
  - pathPrefix: "/etc/cni/net.d"
  - pathPrefix: "/etc/kube-flannel"
  - pathPrefix: "/run/flannel"
  readOnlyRootFilesystem: false
  # Users and groups
  runAsUser:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  fsGroup:
    rule: RunAsAny
  # Privilege Escalation
  allowPrivilegeEscalation: false
  defaultAllowPrivilegeEscalation: false
  # Capabilities
  allowedCapabilities: ['NET_ADMIN']
  defaultAddCapabilities: []
  requiredDropCapabilities: []
  # Host namespaces
  hostPID: false
  hostIPC: false
  hostNetwork: true
  hostPorts:
  - min: 0
    max: 65535
  # SELinux
  seLinux:
    # SELinux is unused in CaaSP
    rule: 'RunAsAny'
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: flannel
rules:
- apiGroups: ['extensions']
  resources: ['podsecuritypolicies']
  verbs: ['use']
  resourceNames: ['psp.flannel.unprivileged']
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes/status
  verbs:
  - patch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-system
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-system
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-amd64
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values:
                - linux
              - key: kubernetes.io/arch
                operator: In
                values:
                - amd64
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.12.0-amd64
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.12.0-amd64
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
      - name: run
        hostPath:
          path: /run/flannel
      - name: cni
        hostPath:
          path: /etc/cni/net.d
      - name: flannel-cfg
        configMap:
          name: kube-flannel-cfg
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-arm64
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values:
                - linux
              - key: kubernetes.io/arch
                operator: In
                values:
                - arm64
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.12.0-arm64
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.12.0-arm64
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
      - name: run
        hostPath:
          path: /run/flannel
      - name: cni
        hostPath:
          path: /etc/cni/net.d
      - name: flannel-cfg
        configMap:
          name: kube-flannel-cfg
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-arm
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values:
                - linux
              - key: kubernetes.io/arch
                operator: In
                values:
                - arm
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.12.0-arm
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.12.0-arm
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
      - name: run
        hostPath:
          path: /run/flannel
      - name: cni
        hostPath:
          path: /etc/cni/net.d
      - name: flannel-cfg
        configMap:
          name: kube-flannel-cfg
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-ppc64le
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values:
                - linux
              - key: kubernetes.io/arch
                operator: In
                values:
                - ppc64le
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.12.0-ppc64le
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.12.0-ppc64le
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
      - name: run
        hostPath:
          path: /run/flannel
      - name: cni
        hostPath:
          path: /etc/cni/net.d
      - name: flannel-cfg
        configMap:
          name: kube-flannel-cfg
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-s390x
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values:
                - linux
              - key: kubernetes.io/arch
                operator: In
                values:
                - s390x
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.12.0-s390x
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.12.0-s390x
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
      - name: run
        hostPath:
          path: /run/flannel
      - name: cni
        hostPath:
          path: /etc/cni/net.d
      - name: flannel-cfg
        configMap:
          name: kube-flannel-cfg

Continue with the installation:

[root@k8s-master ~]# kubectl apply -f kube-flannel.yml

podsecuritypolicy.policy/psp.flannel.unprivileged created

Warning: rbac.authorization.k8s.io/v1beta1 ClusterRole is deprecated in v1.17+, unavailable in v1.22+; use rbac.authorization.k8s.io/v1 ClusterRole

clusterrole.rbac.authorization.k8s.io/flannel created

Warning: rbac.authorization.k8s.io/v1beta1 ClusterRoleBinding is deprecated in v1.17+, unavailable in v1.22+; use rbac.authorization.k8s.io/v1 ClusterRoleBinding

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds-amd64 created

daemonset.apps/kube-flannel-ds-arm64 created

daemonset.apps/kube-flannel-ds-arm created

daemonset.apps/kube-flannel-ds-ppc64le created

daemonset.apps/kube-flannel-ds-s390x created

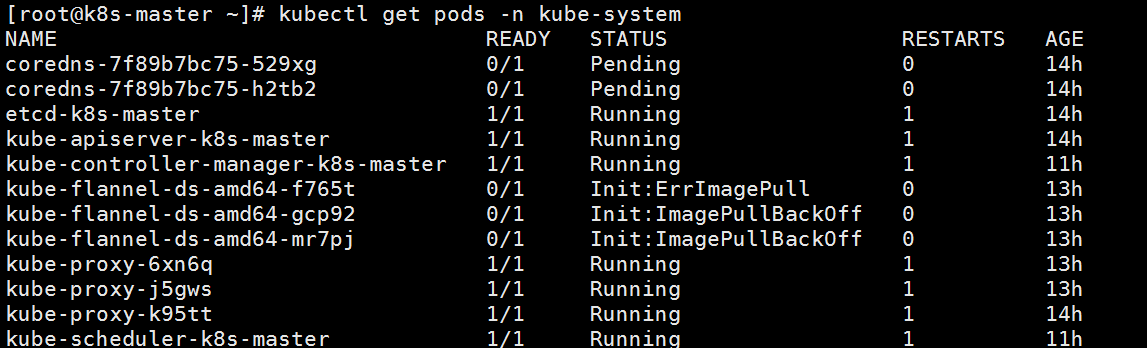

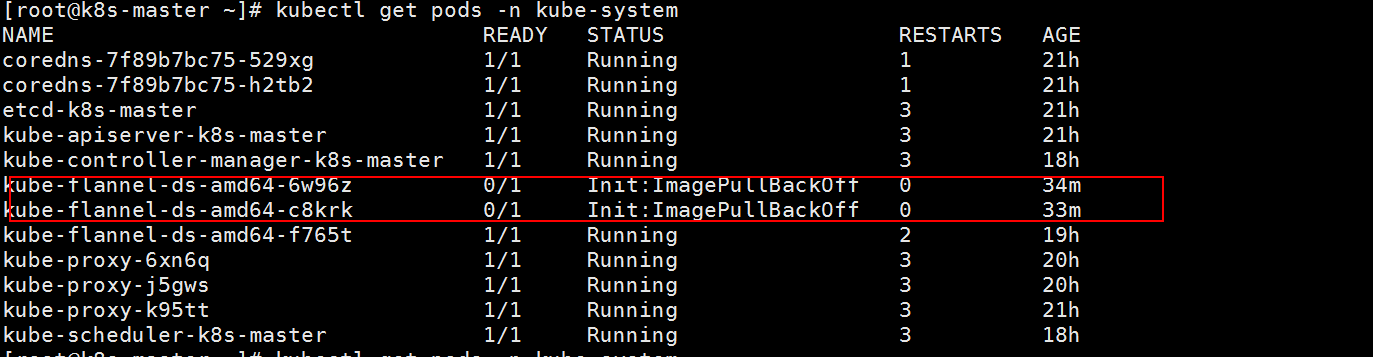

20. List all system pods from the master

[root@k8s-master ~]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-7f89b7bc75-529xg 0/1 Pending 0 100m

coredns-7f89b7bc75-h2tb2 0/1 Pending 0 100m

etcd-k8s-master 1/1 Running 0 100m

kube-apiserver-k8s-master 1/1 Running 0 100m

kube-controller-manager-k8s-master 1/1 Running 0 100m

kube-flannel-ds-amd64-f765t 0/1 Init:ImagePullBackOff 0 6m25s

kube-flannel-ds-amd64-gcp92 0/1 Init:ImagePullBackOff 0 6m25s

kube-flannel-ds-amd64-mr7pj 0/1 Init:0/1 0 6m25s

kube-proxy-6xn6q 1/1 Running 0 64m

kube-proxy-j5gws 1/1 Running 0 64m

kube-proxy-k95tt 1/1 Running 0 100m

kube-scheduler-k8s-master 1/1 Running 0 100m

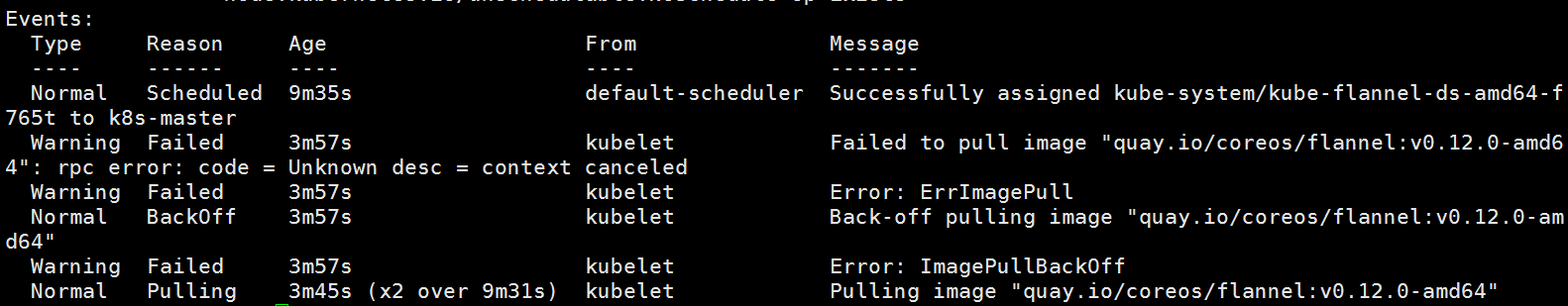

Some pods are in an abnormal state:

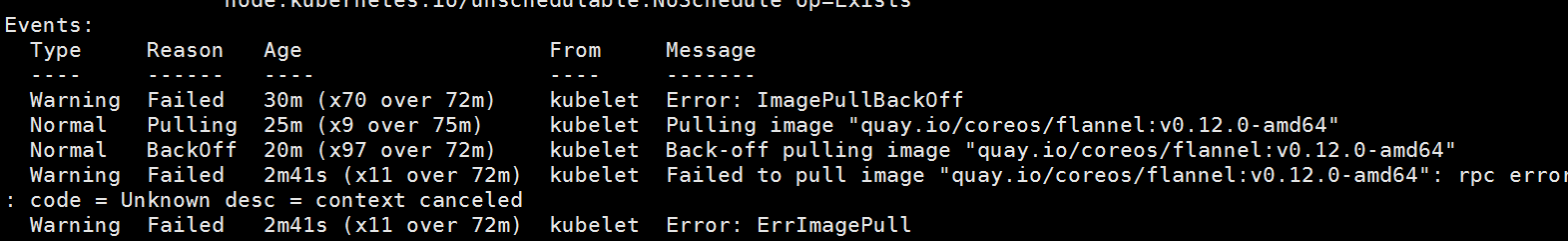

[root@k8s-master ~]# kubectl describe pod -n kube-system kube-flannel-ds-amd64-f765t

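The problem is the image, so pull it again. (Note: the pull below is wrong. The version to pull is v0.12.0; the exact tag has to match the one in kube-flannel.yml. I pulled v0.14.0 below, so the problem was not solved, and I went on to pull v0.12.0 later.)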

[root@k8s-master ~]# docker pull quay.io/coreos/flannel:v0.14.0

v0.14.0: Pulling from coreos/flannel

801bfaa63ef2: Downloading [===========> ] 658.5kB/2.799MB

801bfaa63ef2: Pull complete

e4264a7179f6: Pull complete

bc75ea45ad2e: Pull complete

78648579d12a: Downloading [==================================================>] 13.2MB/13.2MB

3393447261e4: Download complete

071b96dd834b: Download complete

4de2f0468a91: Download complete

read tcp 192.168.1.29:60040->13.225.94.35:443: read: connection reset by peer
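As noted above, the tag to pull has to match the one referenced in kube-flannel.yml. One way to list the image references in a manifest is sketched below. `list_manifest_images` is a hypothetical helper name, and the sketch assumes each `image:` key sits on its own line, as in a normally formatted manifest.

```shell
# Print the unique container images referenced by a Kubernetes manifest.
# Assumption: every image reference is on a line of the form "image: NAME:TAG".
list_manifest_images() {
  awk '$1 == "image:" { print $2 }' "$1" | sort -u
}

# Usage:
#   list_manifest_images kube-flannel.yml
```

Each line of output can then be passed to `docker pull` as-is.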

21. kubectl get cs reports errors

[root@k8s-master ~]# kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

scheduler Unhealthy Get "http://127.0.0.1:10251/healthz": dial tcp 127.0.0.1:10251: connect: connection refused

controller-manager Unhealthy Get "http://127.0.0.1:10252/healthz": dial tcp 127.0.0.1:10252: connect: connection refused

etcd-0 Healthy {"health":"true"}

Root cause analysis

This happens because kube-controller-manager.yaml and kube-scheduler.yaml under /etc/kubernetes/manifests/ set --port=0 by default. The fix is to comment out the --port line in both files, as follows:

[root@k8s-master ~]# cd /etc/kubernetes/manifests/

[root@k8s-master manifests]# ls

etcd.yaml kube-apiserver.yaml kube-controller-manager.yaml kube-scheduler.yaml

[root@k8s-master manifests]# vi kube-controller-manager.yaml

- --controllers=*,bootstrapsigner,tokencleaner

- --kubeconfig=/etc/kubernetes/controller-manager.conf

- --leader-elect=true

# - --port=0

- --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.crt

- --root-ca-file=/etc/kubernetes/pki/ca.crt

- --service-account-private-key-file=/etc/kubernetes/pki/sa.key

[root@k8s-master manifests]# vi kube-scheduler.yaml

- --kubeconfig=/etc/kubernetes/scheduler.conf

- --leader-elect=true

# - --port=0

image: registry.aliyuncs.com/google_containers/kube-scheduler:v1.20.0

imagePullPolicy: IfNotPresent
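The same edit can be made without opening vi. The sketch below comments out the `- --port=0` argument while keeping its indentation; `comment_out_port_zero` is a hypothetical helper name. It deliberately does not leave a backup copy next to the manifest, on the assumption that the kubelet may try to load any extra file it finds in the manifests directory.

```shell
# Comment out the "- --port=0" argument in a static pod manifest.
# The file is rewritten in place through a temporary file outside the
# manifests directory, so no stray copy is left beside the manifest.
comment_out_port_zero() {
  manifest="$1"
  scratch="$(mktemp)"
  sed 's/^\([[:space:]]*\)- --port=0[[:space:]]*$/\1# - --port=0/' "$manifest" > "$scratch" &&
    cat "$scratch" > "$manifest"
  rm -f "$scratch"
}

# Usage (as root, on the master):
#   comment_out_port_zero /etc/kubernetes/manifests/kube-controller-manager.yaml
#   comment_out_port_zero /etc/kubernetes/manifests/kube-scheduler.yaml
```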

Restart the service:

[root@k8s-master manifests]# systemctl restart kubelet.service

[root@k8s-master manifests]# kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-0 Healthy {"health":"true"}

22. Check cluster health

[root@k8s-master manifests]# kubectl cluster-info

Kubernetes control plane is running at https://192.168.1.10:6443

KubeDNS is running at https://192.168.1.10:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

23. Checking the cluster shows that all nodes are in NotReady status:

[root@k8s-master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master NotReady control-plane,master 14h v1.20.0

k8s-node1 NotReady <none> 13h v1.20.0

k8s-node2 NotReady <none> 13h v1.20.0

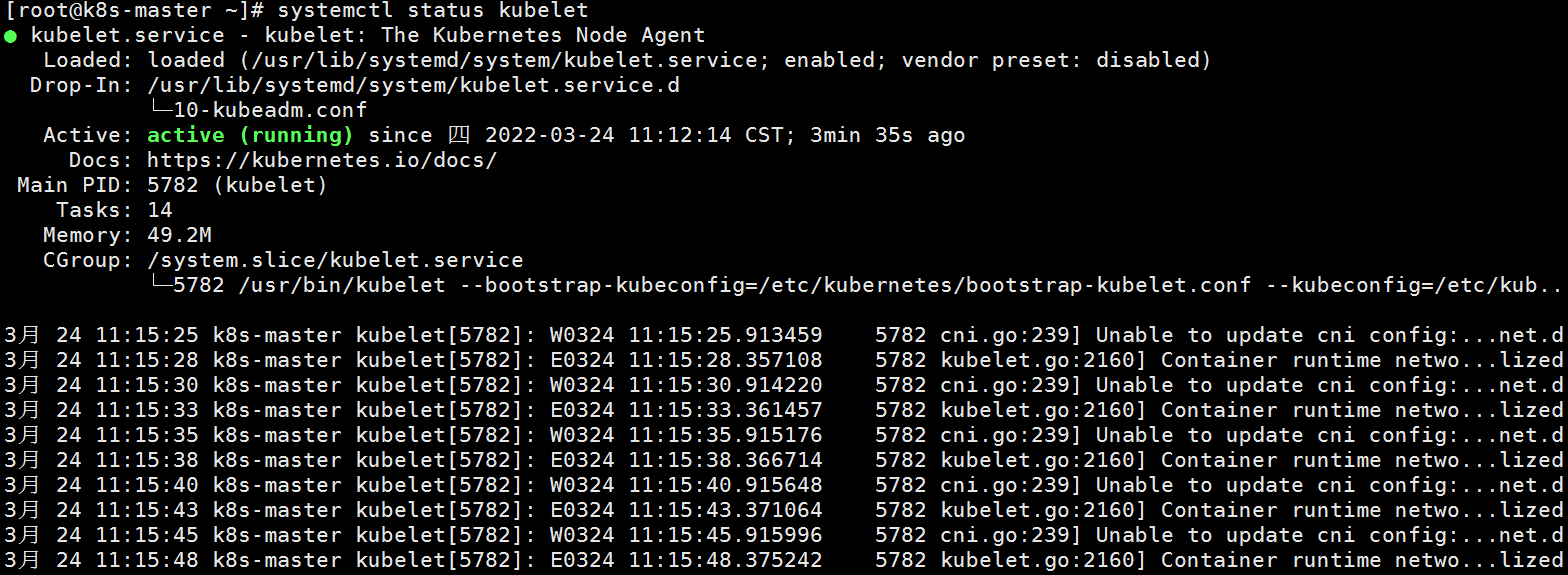

Check the kubelet with systemctl status kubelet.

Check the pod status:

[root@k8s-master ~]# kubectl get pods -n kube-system

[root@k8s-master ~]# kubectl describe pod -n kube-system kube-flannel-ds-amd64-f765t

Name: kube-flannel-ds-amd64-f765t

Namespace: kube-system

Priority: 0

Try pulling the image again. In the end, this pull still failed!

[root@k8s-master ~]# docker pull quay.io/coreos/flannel:v0.12.0-amd64

v0.12.0-amd64: Pulling from coreos/flannel

921b31ab772b: Downloading [============> ] 690.9kB/2.79MB

4882ae1d65d3: Downloading [=========> ] 621.1kB/3.132MB

ac6ef98d5d6d: Download complete

8ba0f465eea4: Downloading [========> ] 1.572MB/9.701MB

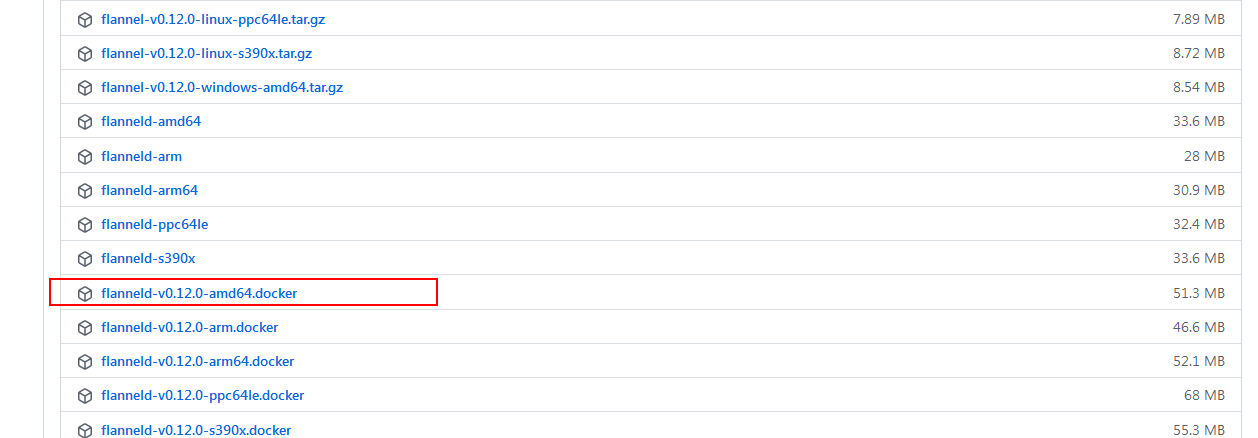

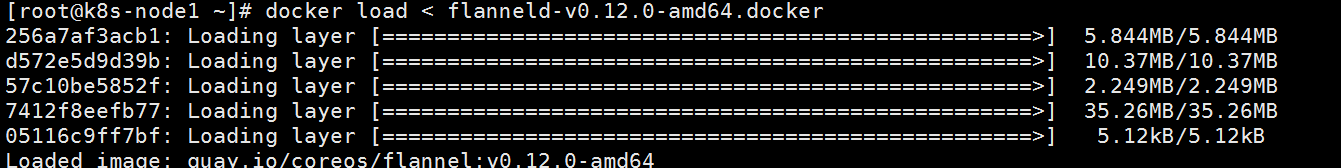

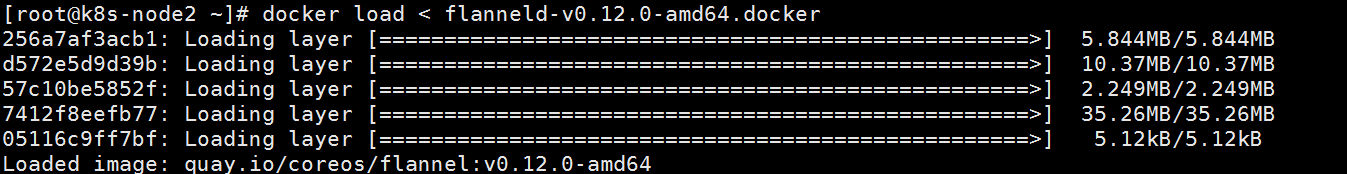

Download the image archive directly from the official releases page:

https://github.com/flannel-io/flannel/releases

Then load the image:

[root@k8s-master ~]# docker load < flanneld-v0.12.0-amd64.docker

256a7af3acb1: Loading layer [==================================================>] 5.844MB/5.844MB

d572e5d9d39b: Loading layer [==================================================>] 10.37MB/10.37MB

57c10be5852f: Loading layer [==================================================>] 2.249MB/2.249MB

7412f8eefb77: Loading layer [==================================================>] 35.26MB/35.26MB

05116c9ff7bf: Loading layer [==================================================>] 5.12kB/5.12kB

Loaded image: quay.io/coreos/flannel:v0.12.0-amd64

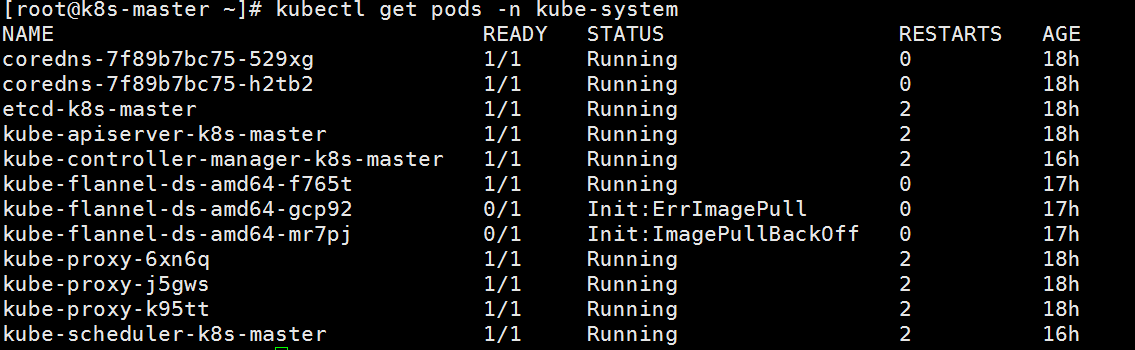

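After loading the image, check the pod status again: two pods still fail.

So even with the image problem solved on the master, the problem persists. The DaemonSet runs one flannel pod on every node, and each node uses its own local Docker images.

Fix: load the same image on the worker nodes as well.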
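The commands for this are not shown. A minimal sketch, assuming root SSH access from the master to the nodes and /tmp as the destination directory, could copy the downloaded archive to each node and load it there. The `run` helper, the `DRY_RUN` variable, and `load_image_on_node` are hypothetical names; the archive name is the one loaded on the master earlier.

```shell
# Hypothetical helper: print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Copy an image archive to a node and load it into that node's Docker.
# Assumptions: root SSH access to the node, and /tmp is writable there.
load_image_on_node() {
  archive="$1"
  node="$2"
  run scp "$archive" "root@${node}:/tmp/"
  run ssh "root@${node}" "docker load -i /tmp/$(basename "$archive")"
}

# Usage (from the master):
#   for n in k8s-node1 k8s-node2; do
#     load_image_on_node flanneld-v0.12.0-amd64.docker "$n"
#   done
```

With `DRY_RUN=1` set, the function only prints the scp and ssh commands it would run.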

Summary of common commands:

List the nodes: kubectl get nodes

List the system pods: kubectl get pods -n kube-system

Check cluster component health: kubectl get cs
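When the node list is long, the output of `kubectl get nodes` can be filtered down to the nodes that still need attention. The sketch below reads that output on standard input; `not_ready_nodes` is a hypothetical helper name, and it treats any STATUS other than exactly `Ready` (including `Ready,SchedulingDisabled`) as not ready.

```shell
# Read `kubectl get nodes` output on stdin and print the names of nodes
# whose STATUS column is anything other than exactly "Ready".
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Usage (on the master):
#   kubectl get nodes | not_ready_nodes
```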

