Thread Safety with the AutoResetEvent and ManualResetEvent Classes (.NET Synchronization)

AutoResetEvent

From MSDN:

"A thread waits for a signal by calling WaitOne on the AutoResetEvent. If the AutoResetEvent is in the non-signaled state, the thread blocks, waiting for the thread that currently controls the resource to signal that the resource is available by calling Set. Calling Set signals AutoResetEvent to release a waiting thread. AutoResetEvent remains signaled until a single waiting thread is released, and then automatically returns to the non-signaled state. If no threads are waiting, the state remains signaled indefinitely."

In layman's terms, when an AutoResetEvent is signaled, the first waiting thread to be released (to stop blocking) automatically puts the AutoResetEvent back into the non-signaled (reset) state, so any other thread waiting on it must wait until it is signaled again.

Let's consider a small example where two threads are started. The first thread runs for a period of time and then signals (by calling Set) an AutoResetEvent that started out in the non-signaled state; meanwhile, the second thread waits for that AutoResetEvent to be signaled. The second thread then also waits on a second AutoResetEvent; the only difference is that this second AutoResetEvent starts out in the signaled state, so the wait returns immediately.

Here is some code that illustrates this:

```csharp
using System;
using System.Threading;

namespace AutoResetEventTest
{
    class Program
    {
        public static Thread T1;
        public static Thread T2;

        //This AutoResetEvent starts out non-signalled
        public static AutoResetEvent ar1 = new AutoResetEvent(false);

        //This AutoResetEvent starts out signalled
        public static AutoResetEvent ar2 = new AutoResetEvent(true);

        static void Main(string[] args)
        {
            T1 = new Thread((ThreadStart)delegate
            {
                Console.WriteLine(
                    "T1 is simulating some work by sleeping for 5 secs");
                //calling sleep to simulate some work
                Thread.Sleep(5000);
                Console.WriteLine(
                    "T1 is just about to set AutoResetEvent ar1");
                //alert waiting thread(s)
                ar1.Set();
            });

            T2 = new Thread((ThreadStart)delegate
            {
                //wait for AutoResetEvent ar1, this will wait for ar1 to be signalled
                //from some other thread
                Console.WriteLine(
                    "T2 starting to wait for AutoResetEvent ar1, at time {0}",
                    DateTime.Now.ToLongTimeString());
                ar1.WaitOne(); // blocks until ar1 is signalled
                Console.WriteLine(
                    "T2 finished waiting for AutoResetEvent ar1, at time {0}",
                    DateTime.Now.ToLongTimeString());

                //wait for AutoResetEvent ar2, this will skip straight through
                //as AutoResetEvent ar2 started out in the signalled state
                Console.WriteLine(
                    "T2 starting to wait for AutoResetEvent ar2, at time {0}",
                    DateTime.Now.ToLongTimeString());
                ar2.WaitOne(); // ar2 was constructed signalled, so this does not block
                Console.WriteLine(
                    "T2 finished waiting for AutoResetEvent ar2, at time {0}",
                    DateTime.Now.ToLongTimeString());
            });

            T1.Name = "T1";
            T2.Name = "T2";

            T1.Start();
            T2.Start();

            Console.ReadLine();
        }
    }
}
```

Running this shows that T1 works for 5 seconds (simulated by sleeping) while T2 waits for AutoResetEvent "ar1" to be put into the signaled state; T2 does not have to wait for AutoResetEvent "ar2", because it is already in the signaled state when it is constructed.

Here is another way of explaining AutoResetEvent:

An AutoResetEvent is like a ticket turnstile: inserting a ticket lets exactly one person through. The “auto” in the class’s name refers to the fact that an open turnstile automatically closes or “resets” after someone steps through. A thread waits, or blocks, at the turnstile by calling WaitOne (wait at this “one” turnstile until it opens), and a ticket is inserted by calling the Set method. If a number of threads call WaitOne, a queue builds up behind the turnstile. (As with locks, the fairness of the queue can sometimes be violated due to nuances in the operating system.) A ticket can come from any thread; in other words, any (unblocked) thread with access to the AutoResetEvent object can call Set on it to release one blocked thread.
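To see the "exactly one person through" rule in action, here is a minimal sketch (the names `turnstile`, `passed` and `waiters` are illustrative, and it assumes C# 9 top-level statements rather than the class-with-Main style used elsewhere in this post). Two threads queue at the turnstile and a single Set is issued; each waiter uses a timeout so the one left behind does not block forever.

```csharp
using System;
using System.Threading;

var turnstile = new AutoResetEvent(false);
int passed = 0;

var waiters = new Thread[2];
for (int i = 0; i < waiters.Length; i++)
{
    waiters[i] = new Thread(() =>
    {
        // Wait up to 2 seconds for a "ticket"; count the thread only if it got through.
        if (turnstile.WaitOne(2000))
            Interlocked.Increment(ref passed);
    });
    waiters[i].Start();
}

Thread.Sleep(500);   // give both threads time to queue at the turnstile
turnstile.Set();     // insert ONE ticket

foreach (var t in waiters) t.Join();
Console.WriteLine($"Threads let through by one Set: {passed}");
```

Even if Set happened to run before either thread reached WaitOne, the single stored signal would still admit only one of them, so the count is 1 either way.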

You can create an AutoResetEvent in two ways. The first is via its constructor:

```csharp
var auto = new AutoResetEvent (false);
```

(Passing true into the constructor is equivalent to immediately calling Set upon it.) The second way to create an AutoResetEvent is as follows:

```csharp
var auto = new EventWaitHandle (false, EventResetMode.AutoReset);
```
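A quick way to check both claims (the `true` constructor argument behaves like an immediate Set, and `EventWaitHandle` with `EventResetMode.AutoReset` behaves like `AutoResetEvent`) is to poll with `WaitOne(0)`, which returns immediately instead of blocking. This is a small sketch with illustrative variable names, using top-level statements:

```csharp
using System;
using System.Threading;

// Constructed signaled: behaves like new AutoResetEvent(false) followed by Set().
var startsOpen = new AutoResetEvent(true);
// The EventWaitHandle form with EventResetMode.AutoReset should behave the same way.
var viaBase = new EventWaitHandle(true, EventResetMode.AutoReset);

// WaitOne(0) polls without blocking: true means the signal ("ticket") was taken.
bool first = startsOpen.WaitOne(0);    // consumes the initial signal
bool second = startsOpen.WaitOne(0);   // the event has auto-reset, so false
bool baseFirst = viaBase.WaitOne(0);
bool baseSecond = viaBase.WaitOne(0);

Console.WriteLine($"AutoResetEvent(true):  {first}, {second}");
Console.WriteLine($"EventWaitHandle(true): {baseFirst}, {baseSecond}");
```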

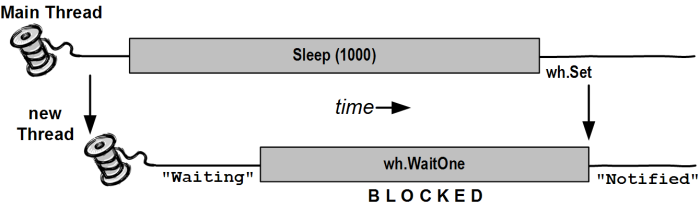

In the following example, a thread is started whose job is simply to wait until signaled by another thread:

```csharp
using System;
using System.Threading;

class BasicWaitHandle
{
    static EventWaitHandle _waitHandle = new AutoResetEvent (false);

    static void Main()
    {
        new Thread (Waiter).Start();
        Thread.Sleep (1000);       // Pause for a second...
        _waitHandle.Set();         // Wake up the Waiter.
    }

    static void Waiter()
    {
        Console.WriteLine ("Waiting...");
        _waitHandle.WaitOne();     // Wait for notification
        Console.WriteLine ("Notified");
    }
}
```

The console window shows the following:

Waiting... (pause) Notified

If Set is called when no thread is waiting, the handle stays open for as long as it takes until some thread calls WaitOne. This behavior helps avoid a race between a thread heading for the turnstile and a thread inserting a ticket (“Oops, inserted the ticket a microsecond too soon, bad luck, now you’ll have to wait indefinitely!”). However, calling Set repeatedly on a turnstile at which no one is waiting doesn’t allow a whole party through when they arrive: only the next single person is let through and the extra tickets are “wasted.” (Hehe, funny, right? But what if many threads call WaitOne and there is only one ticket: which one gets through the turnstile? Exactly one of them; the waiters form the queue described above, although the operating system does not strictly guarantee that queue's fairness.)
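The "wasted tickets" point can be checked deterministically: an AutoResetEvent is either signaled or not, so a second Set on an already-signaled event changes nothing. A minimal sketch with an illustrative `turnstile` variable, using `WaitOne(0)` to poll without blocking:

```csharp
using System;
using System.Threading;

var turnstile = new AutoResetEvent(false);

// Nobody is waiting yet, so the first signal is remembered...
turnstile.Set();
// ...but a second Set on an already-signaled event adds nothing: there is no ticket counter.
turnstile.Set();

// Two "people" arrive later and try the turnstile without blocking.
bool firstThrough = turnstile.WaitOne(0);    // uses the stored signal
bool secondThrough = turnstile.WaitOne(0);   // the extra ticket was wasted

Console.WriteLine($"first: {firstThrough}, second: {secondThrough}");
```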

Calling Reset on an AutoResetEvent closes the turnstile (should it be open) without waiting or blocking.

WaitOne accepts an optional timeout parameter, returning false if the wait ended because of a timeout rather than obtaining the signal.
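Reset and the timeout overload combine naturally: after Set followed by Reset the turnstile is closed again, so a timed WaitOne gives up and returns false. A sketch using top-level statements; the 300 ms timeout is an arbitrary illustrative value:

```csharp
using System;
using System.Diagnostics;
using System.Threading;

var turnstile = new AutoResetEvent(false);

turnstile.Set();     // open the turnstile...
turnstile.Reset();   // ...and close it again; Reset itself never blocks

var sw = Stopwatch.StartNew();
bool signaled = turnstile.WaitOne(300);   // wait at most 300 ms
sw.Stop();

// false: the wait ended because of the timeout, not because of a signal.
Console.WriteLine($"signaled: {signaled}, waited ~{sw.ElapsedMilliseconds} ms");
```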

Disposing Wait Handles

Once you’ve finished with a wait handle, you can call its Close method to release the operating system resource. Alternatively, you can simply drop all references to the wait handle and allow the garbage collector to do the job for you sometime later (wait handles implement the disposal pattern whereby the finalizer calls Close). This is one of the few scenarios where relying on this backup is (arguably) acceptable, because wait handles have a light OS burden (asynchronous delegates rely on exactly this mechanism to release their IAsyncResult’s wait handle).
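Because wait handles implement IDisposable, a `using` block is a convenient way to get the deterministic cleanup that Close provides. In this sketch (top-level statements; the worker thread is hypothetical and only signals), the worker is joined before the block ends so no thread touches the handle after it is disposed:

```csharp
using System;
using System.Threading;

bool result;
using (var ready = new AutoResetEvent(false))
{
    var worker = new Thread(() => ready.Set());   // hypothetical worker that just signals
    worker.Start();
    result = ready.WaitOne(2000);
    worker.Join();   // make sure nobody uses the handle after disposal
}   // Dispose() runs here and releases the OS handle, just as Close() would

Console.WriteLine($"Signal received before disposal: {result}");
```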

Wait handles are released automatically when an application domain unloads.

Two-way signaling

Let’s say we want the main thread to signal a worker thread three times in a row. If the main thread simply calls Set on a wait handle several times in rapid succession, the second or third signal may get lost, since the worker may take time to process each signal.

The solution is for the main thread to wait until the worker’s ready before signaling it. This can be done with another AutoResetEvent, as follows:

```csharp
using System;
using System.Threading;

class TwoWaySignaling
{
    static EventWaitHandle _ready = new AutoResetEvent (false);
    static EventWaitHandle _go = new AutoResetEvent (false);
    static readonly object _locker = new object();
    static string _message;

    static void Main()
    {
        new Thread (Work).Start();

        _ready.WaitOne();                    // First wait until worker is ready
        lock (_locker) _message = "ooo";
        _go.Set();                           // Tell worker to go

        _ready.WaitOne();
        lock (_locker) _message = "ahhh";    // Give the worker another message
        _go.Set();

        _ready.WaitOne();
        lock (_locker) _message = null;      // Signal the worker to exit
        _go.Set();
    }

    static void Work()
    {
        while (true)
        {
            _ready.Set();                    // Indicate that we're ready
            _go.WaitOne();                   // Wait to be kicked off...
            lock (_locker)
            {
                if (_message == null) return;   // Gracefully exit
                Console.WriteLine (_message);
            }
        }
    }
}
```

The console output is:

ooo

ahhh

Here, we’re using a null message to indicate that the worker should end. With threads that run indefinitely, it’s important to have an exit strategy!

Producer/consumer queue

A producer/consumer queue is a common requirement in threading. Here’s how it works:

- A queue is set up to describe work items — or data upon which work is performed.

- When a task needs executing, it’s enqueued, allowing the caller to get on with other things.

- One or more worker threads plug away in the background, picking off and executing queued items.

The advantage of this model is that you have precise control over how many worker threads execute at once. This can allow you to limit consumption of not only CPU time, but other resources as well. If the tasks perform intensive disk I/O, for instance, you might have just one worker thread to avoid starving the operating system and other applications. Another type of application may have 20. You can also dynamically add and remove workers throughout the queue’s life. The CLR’s thread pool itself is a kind of producer/consumer queue.

A producer/consumer queue typically holds items of data upon which (the same) task is performed. For example, the items of data may be filenames, and the task might be to encrypt those files.

In the example below, we use a single AutoResetEvent to signal a worker, which waits when it runs out of tasks (in other words, when the queue is empty). We end the worker by enqueuing a null task:

```csharp
using System;
using System.Threading;
using System.Collections.Generic;

class ProducerConsumerQueue : IDisposable
{
    EventWaitHandle _wh = new AutoResetEvent (false);
    Thread _worker;
    readonly object _locker = new object();
    Queue<string> _tasks = new Queue<string>();

    public ProducerConsumerQueue()
    {
        _worker = new Thread (Work);
        _worker.Start();
    }

    public void EnqueueTask (string task)
    {
        lock (_locker) _tasks.Enqueue (task);
        _wh.Set();
    }

    public void Dispose()
    {
        EnqueueTask (null);     // Signal the consumer to exit.
        _worker.Join();         // Wait for the consumer's thread to finish.
        _wh.Close();            // Release any OS resources.
    }

    void Work()
    {
        while (true)
        {
            string task = null;
            lock (_locker)
                if (_tasks.Count > 0)
                {
                    task = _tasks.Dequeue();
                    if (task == null) return;
                }
            if (task != null)
            {
                Console.WriteLine ("Performing task: " + task);
                Thread.Sleep (1000);    // simulate work...
            }
            else
                _wh.WaitOne();          // No more tasks - wait for a signal
        }
    }
}
```

To ensure thread safety, we used a lock to protect access to the Queue<string> collection. We also explicitly closed the wait handle in our Dispose method, since we could potentially create and destroy many instances of this class within the life of the application.

Here's a main method to test the queue:

```csharp
static void Main()
{
    using (ProducerConsumerQueue q = new ProducerConsumerQueue())
    {
        q.EnqueueTask ("Hello");
        for (int i = 0; i < 10; i++) q.EnqueueTask ("Say " + i);
        q.EnqueueTask ("Goodbye!");
    }
    // Exiting the using statement calls q's Dispose method, which
    // enqueues a null task and waits until the consumer finishes.
}
```

Performing task: Hello

Performing task: Say 0

Performing task: Say 1

...

Performing task: Say 9

Performing task: Goodbye!

ManualResetEvent

From MSDN:

"When a thread begins an activity that must complete before other threads proceed, it calls Reset to put ManualResetEvent in the non-signaled state. This thread can be thought of as controlling the ManualResetEvent. Threads that call WaitOne on the ManualResetEvent will block, awaiting the signal. When the controlling thread completes the activity, it calls Set to signal that the waiting threads can proceed. All waiting threads are released. Once it has been signaled, ManualResetEvent remains signaled until it is manually reset. That is, calls to WaitOne return immediately."

In layman's terms, when a ManualResetEvent is set to the signaled state, all threads that were blocking (waiting) on it are allowed to proceed, and any thread that calls WaitOne afterwards also proceeds immediately, until the ManualResetEvent is put back into the non-signaled (reset) state.
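The difference from AutoResetEvent shows up clearly when polling with `WaitOne(0)`: a signaled ManualResetEvent stays signaled across successive waits until Reset is called. A minimal sketch with an illustrative `gate` variable:

```csharp
using System;
using System.Threading;

var gate = new ManualResetEvent(false);

bool beforeSet = gate.WaitOne(0);    // closed: false
gate.Set();
bool afterSet1 = gate.WaitOne(0);    // open: true...
bool afterSet2 = gate.WaitOne(0);    // ...and still open, unlike AutoResetEvent
gate.Reset();
bool afterReset = gate.WaitOne(0);   // closed again: false

Console.WriteLine($"{beforeSet} {afterSet1} {afterSet2} {afterReset}");
```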

Consider the following code snippet:

```csharp
using System;
using System.Threading;

namespace ManualResetEventTest
{
    /// <summary>
    /// This simple class demonstrates the usage of a ManualResetEvent
    /// in 2 different scenarios, both in the non-signalled state and the
    /// signalled state
    /// </summary>
    class Program
    {
        public static Thread T1;
        public static Thread T2;
        public static Thread T3;

        //This ManualResetEvent starts out non-signalled
        public static ManualResetEvent mr1 = new ManualResetEvent(false);

        static void Main(string[] args)
        {
            T1 = new Thread((ThreadStart)delegate
            {
                Console.WriteLine(
                    "T1 is simulating some work by sleeping for 5 secs");
                //calling sleep to simulate some work
                Thread.Sleep(5000);
                Console.WriteLine(
                    "T1 is just about to set ManualResetEvent mr1");
                //alert waiting thread(s)
                mr1.Set();
            });

            T2 = new Thread((ThreadStart)delegate
            {
                //wait for ManualResetEvent mr1, this will wait for mr1
                //to be signalled from some other thread
                Console.WriteLine(
                    "T2 starting to wait for ManualResetEvent mr1, at time {0}",
                    DateTime.Now.ToLongTimeString());
                mr1.WaitOne();
                Console.WriteLine(
                    "T2 finished waiting for ManualResetEvent mr1, at time {0}",
                    DateTime.Now.ToLongTimeString());
            });

            T3 = new Thread((ThreadStart)delegate
            {
                //wait for ManualResetEvent mr1, this will wait for mr1
                //to be signalled from some other thread
                Console.WriteLine(
                    "T3 starting to wait for ManualResetEvent mr1, at time {0}",
                    DateTime.Now.ToLongTimeString());
                mr1.WaitOne();
                Console.WriteLine(
                    "T3 finished waiting for ManualResetEvent mr1, at time {0}",
                    DateTime.Now.ToLongTimeString());
            });

            T1.Name = "T1";
            T2.Name = "T2";
            T3.Name = "T3";

            T1.Start();
            T2.Start();
            T3.Start();

            Console.ReadLine();
        }
    }
}
```

Which results in the following screenshot:

It can be seen that three threads (T1-T3) are started. T2 and T3 are both waiting on the ManualResetEvent "mr1", which is only put into the signaled state within thread T1's code block. When T1 puts the ManualResetEvent "mr1" into the signaled state (by calling the Set() method), waiting threads are allowed to proceed. As T2 and T3 are both waiting on "mr1" and it is now signaled, both T2 and T3 proceed. "mr1" is never reset, so both T2 and T3 are free to run the rest of their respective code blocks.

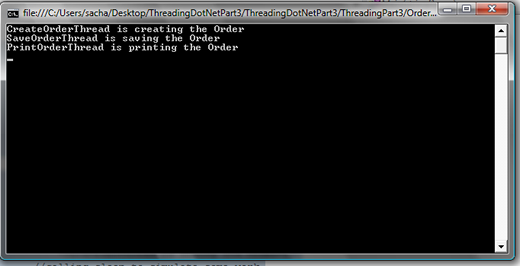

Back to the Original Problem

Recall the original problem:

- We need to create an order

- We need to save the order, but can't do this until we get an order number

- We need to print the order, but only once it's saved in the database

This should now be pretty easy to solve. All we need are some WaitHandles that control the execution order, whereby step 2 waits on a WaitHandle that step 1 signals, and step 3 waits on a WaitHandle that step 2 signals. Simple, huh? Shall we see some example code? Here is some; I simply chose to use AutoResetEvents:

```csharp
using System;
using System.Threading;

namespace OrderSystem
{
    /// <summary>
    /// This simple class demonstrates the usage of an AutoResetEvent
    /// to create some synchronized threads that will carry out the
    /// following
    /// -CreateOrder
    /// -SaveOrder
    /// -PrintOrder
    ///
    /// Where it is assumed that these 3 tasks MUST be executed in this
    /// order, and are interdependent
    /// </summary>
    public class Program
    {
        public static Thread CreateOrderThread;
        public static Thread SaveOrderThread;
        public static Thread PrintOrderThread;

        //These AutoResetEvents start out non-signalled
        public static AutoResetEvent ar1 = new AutoResetEvent(false);
        public static AutoResetEvent ar2 = new AutoResetEvent(false);

        static void Main(string[] args)
        {
            CreateOrderThread = new Thread((ThreadStart)delegate
            {
                Console.WriteLine(
                    "CreateOrderThread is creating the Order");
                //calling sleep to simulate some work
                Thread.Sleep(5000);
                //alert waiting thread(s)
                ar1.Set();
            });

            SaveOrderThread = new Thread((ThreadStart)delegate
            {
                //wait for AutoResetEvent ar1, this will wait for ar1
                //to be signalled from some other thread
                ar1.WaitOne();
                Console.WriteLine(
                    "SaveOrderThread is saving the Order");
                //calling sleep to simulate some work
                Thread.Sleep(5000);
                //alert waiting thread(s)
                ar2.Set();
            });

            PrintOrderThread = new Thread((ThreadStart)delegate
            {
                //wait for AutoResetEvent ar2, this will wait for ar2
                //to be signalled from some other thread
                ar2.WaitOne();
                Console.WriteLine(
                    "PrintOrderThread is printing the Order");
                //calling sleep to simulate some work
                Thread.Sleep(5000);
            });

            CreateOrderThread.Name = "CreateOrderThread";
            SaveOrderThread.Name = "SaveOrderThread";
            PrintOrderThread.Name = "PrintOrderThread";

            CreateOrderThread.Start();
            SaveOrderThread.Start();
            PrintOrderThread.Start();

            Console.ReadLine();
        }
    }
}
```

Which produces the following screenshot:

Here is another way of explaining ManualResetEvent:

A ManualResetEvent functions like an ordinary gate. Calling Set opens the gate, allowing any number of threads calling WaitOne to be let through. Calling Reset closes the gate. Threads that call WaitOne on a closed gate will block; when the gate is next opened, they will be released all at once. Apart from these differences, a ManualResetEvent functions like an AutoResetEvent.
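The gate analogy can be contrasted with the turnstile: here three threads block at a closed ManualResetEvent and one Set releases all of them. This is a sketch with illustrative names, using top-level statements; each waiter has a safety timeout so a bug would not hang the program:

```csharp
using System;
using System.Threading;

var gate = new ManualResetEvent(false);
int released = 0;

var waiters = new Thread[3];
for (int i = 0; i < waiters.Length; i++)
{
    waiters[i] = new Thread(() =>
    {
        // Each thread blocks at the closed gate (with a 2-second safety timeout).
        if (gate.WaitOne(2000))
            Interlocked.Increment(ref released);
    });
    waiters[i].Start();
}

Thread.Sleep(500);   // let the threads reach the gate
gate.Set();          // a single Set opens the gate for everyone

foreach (var t in waiters) t.Join();
Console.WriteLine($"Threads released by one Set: {released}");
```

Because the gate stays open after Set, any thread that arrives late is also let through, so all three are counted regardless of timing.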

As with AutoResetEvent, you can construct a ManualResetEvent in two ways:

```csharp
var manual1 = new ManualResetEvent (false);
var manual2 = new EventWaitHandle (false, EventResetMode.ManualReset);
```

Signaling Constructs and Performance

Waiting or signaling an AutoResetEvent or ManualResetEvent takes about one microsecond (assuming no blocking).

ManualResetEventSlim and CountdownEvent can be up to 50 times faster in short-wait scenarios, because of their nonreliance on the operating system and judicious use of spinning constructs.
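For comparison, here is a minimal sketch of ManualResetEventSlim, which keeps the gate semantics but exposes `Wait` and an `IsSet` property instead of `WaitOne`. It spins briefly before blocking, which is what makes it cheaper for short waits. The worker thread and the 100 ms delay are illustrative, and the code uses top-level statements with a C# 8 `using` declaration:

```csharp
using System;
using System.Threading;

using var gate = new ManualResetEventSlim(false);   // starts closed
bool workerReleased = false;

var worker = new Thread(() =>
{
    gate.Wait();              // blocks (after a short spin) until Set is called
    workerReleased = true;
});
worker.Start();

Thread.Sleep(100);            // simulate some work on the main thread
gate.Set();                   // open the gate; IsSet stays true until Reset
worker.Join();

Console.WriteLine($"IsSet: {gate.IsSet}, worker released: {workerReleased}");
```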

In most scenarios, however, the overhead of the signaling classes themselves doesn’t create a bottleneck, and so is rarely a consideration. An exception is with highly concurrent code, which we’ll discuss in Part 5.

A ManualResetEvent is useful in allowing one thread to unblock many other threads. The reverse scenario is covered by CountdownEvent.

CountdownEvent

CountdownEvent lets you wait on more than one thread. The class was introduced in .NET Framework 4.0 and has an efficient, fully managed implementation.

To use CountdownEvent, instantiate the class with the number of threads or “counts” that you want to wait on:

```csharp
var countdown = new CountdownEvent (3);   // Initialize with "count" of 3.
```

Calling Signal decrements the “count”; calling Wait blocks until the count goes down to zero. For example:

```csharp
using System;
using System.Threading;

class CountdownDemo   // hypothetical wrapper class so the snippet compiles on its own
{
    static CountdownEvent _countdown = new CountdownEvent (3);

    static void Main()
    {
        new Thread (SaySomething).Start ("I am thread 1");
        new Thread (SaySomething).Start ("I am thread 2");
        new Thread (SaySomething).Start ("I am thread 3");

        _countdown.Wait();   // Blocks until Signal has been called 3 times
        Console.WriteLine ("All threads have finished speaking!");
    }

    static void SaySomething (object thing)
    {
        Thread.Sleep (1000);
        Console.WriteLine (thing);
        _countdown.Signal();
    }
}
```

You can reincrement a CountdownEvent’s count by calling AddCount. However, if it has already reached zero, this throws an exception: you can’t “unsignal” a CountdownEvent by calling AddCount. To avoid the possibility of an exception being thrown, you can instead call TryAddCount, which returns false if the countdown is zero.

To unsignal a countdown event, call Reset: this both unsignals the construct and resets its count to the original value.
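The AddCount, TryAddCount and Reset rules above can be exercised in a few lines. In this sketch (top-level statements, illustrative values) the count goes 2 → 3 → 0, after which TryAddCount returns false, AddCount throws InvalidOperationException, and Reset restores the initial count passed to the constructor:

```csharp
using System;
using System.Threading;

var countdown = new CountdownEvent(2);

countdown.AddCount();                    // count 2 -> 3 (allowed while not yet zero)
countdown.Signal();
countdown.Signal();
bool reachedZero = countdown.Signal();   // Signal returns true when the count hits zero

bool tryAdd = countdown.TryAddCount();   // false: the count is already zero
bool addThrew = false;
try { countdown.AddCount(); }
catch (InvalidOperationException) { addThrew = true; }   // AddCount can't "unsignal"

countdown.Reset();                       // unsignal and restore the initial count (2)
Console.WriteLine($"zero: {reachedZero}, TryAddCount: {tryAdd}, AddCount threw: {addThrew}");
Console.WriteLine($"after Reset: IsSet={countdown.IsSet}, CurrentCount={countdown.CurrentCount}");
```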

Like ManualResetEventSlim, CountdownEvent exposes a WaitHandle property for scenarios where some other class or method expects an object based on WaitHandle.
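One place the WaitHandle property matters is `WaitHandle.WaitAny`, which only accepts WaitHandle objects. This sketch (top-level statements; the `cancelled` event and the 200 ms delay are hypothetical) waits for whichever finishes first: the countdown or a second event:

```csharp
using System;
using System.Threading;

var countdown = new CountdownEvent(1);
var cancelled = new ManualResetEvent(false);   // hypothetical second event, never set here

new Thread(() => { Thread.Sleep(200); countdown.Signal(); }).Start();

// WaitAny needs WaitHandle objects, so the countdown is passed via its WaitHandle property.
int index = WaitHandle.WaitAny(new WaitHandle[] { countdown.WaitHandle, cancelled }, 2000);

Console.WriteLine(index == 0 ? "countdown finished first"
                : index == WaitHandle.WaitTimeout ? "timed out"
                : "cancelled first");
```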

posted on 2012-06-02 21:24 malaikuangren