logistic回归学习

根据机器学习实战书的第五章,logistic回归,以下为具体步骤。

1 基于最优化方法的最佳回归系数确定

1.1 梯度上升法

1 #!/usr/bin/env python 2 # -*- coding: utf-8 -*- 3 4 from numpy import * 5 6 def loadDataSet(): 7 ''' 8 打开文件并逐行读取 9 :return: 修改后的数据集,标签集 10 ''' 11 dataMat = []; labelMat = [] 12 fr = open('testSet.txt') 13 for line in fr.readlines(): 14 lineArr = line.strip().split() 15 dataMat.append([1.0, float(lineArr[0]), float(lineArr[1])]) 16 labelMat.append(int(lineArr[2])) 17 return dataMat,labelMat 18 19 20 def sigmoid(inX): 21 return 1.0/(1+exp(-inX)) 22 23 24 def gradAscent(dataMatIn, classLabels): 25 ''' 26 logistic回归梯度上升优化算法 27 :param dataMatIn: 处理后的数据集 28 :param classLabels: 分类标签 29 :return: 权重值 30 ''' 31 dataMatrix = mat(dataMatIn) # 转化为numpy矩阵数据类型,第一列为1,100X3 32 labelMat = mat(classLabels).transpose() # 转化为numpy矩阵数据类型,100行 33 m,n = shape(dataMatrix) # m=100,n=3 34 alpha = 0.001 # 向目标移动的步长 35 maxCycles = 500 # 最大迭代次数 36 weights = ones((n,1)) # 返回一个已知大小的新的数组,用1填充,三行一列的数组 37 for k in range(maxCycles): #heavy on matrix operations 38 h = sigmoid(dataMatrix*weights) #matrix mult,h是一个列向量100x1维 39 error = (labelMat - h) #vector subtraction 40 weights = weights + alpha * dataMatrix.transpose()* error #matrix mult 41 return weights 42 43 44 def plotBestFit(weights): 45 ''' 46 画出数据集和logisitic回归最佳拟合直线的函数 47 :param weights: 48 :return: 49 ''' 50 import matplotlib.pyplot as plt 51 dataMat,labelMat=loadDataSet() 52 dataArr = array(dataMat) 53 n = shape(dataArr)[0] 54 xcord1 = []; ycord1 = [] 55 xcord2 = []; ycord2 = [] 56 for i in range(n): 57 if int(labelMat[i])== 1: 58 xcord1.append(dataArr[i,1]); ycord1.append(dataArr[i,2]) 59 else: 60 xcord2.append(dataArr[i,1]); ycord2.append(dataArr[i,2]) 61 fig = plt.figure() 62 ax = fig.add_subplot(111) 63 ax.scatter(xcord1, ycord1, s=30, c='red', marker='s') 64 ax.scatter(xcord2, ycord2, s=30, c='green') 65 x = arange(-3.0, 3.0, 0.1) 66 y = (-weights[0]-weights[1]*x)/weights[2] 67 ax.plot(x, y) 68 plt.xlabel('X1'); plt.ylabel('X2'); 69 plt.show() 70 71 if __name__ == '__main__': 72 dataArr,labelMat=loadDataSet() 73 weight=gradAscent(dataArr,labelMat) # weight: [[ 4.12414349][ 0.48007329] [-0.6168482 ]] 74 plotBestFit(weight.getA()) # getA,以 ndarray 形式

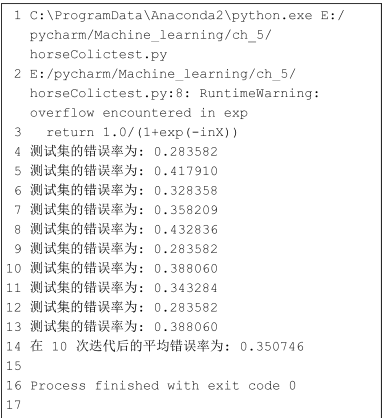

结果图如下:

1.2随机梯度上升

由于梯度上升算法在每次更新回归系数时都需要遍历整个数据集,计算复杂度高,改进的方法是一次只用一个样本点来更新回归系数,就是随机梯度上升算法。

1 #!/usr/bin/env python 2 # -*- coding: utf-8 -*- 3 4 from numpy import * 5 6 def loadDataSet(): 7 ''' 8 打开文件并逐行读取 9 :return: 修改后的数据集,标签集 10 ''' 11 dataMat = []; labelMat = [] 12 fr = open('testSet.txt') 13 for line in fr.readlines(): 14 lineArr = line.strip().split() 15 dataMat.append([1.0, float(lineArr[0]), float(lineArr[1])]) 16 labelMat.append(int(lineArr[2])) 17 return dataMat,labelMat 18 19 20 def sigmoid(inX): 21 return 1.0/(1+exp(-inX)) 22 23 24 def stocGradAscent0(dataMatrix, classLabels): 25 m,n = shape(dataMatrix) 26 alpha = 0.01 27 weights = ones(n) #ndarry,三行一列 28 for i in range(m): 29 h = sigmoid(sum(dataMatrix[i]*weights)) 30 error = classLabels[i] - h 31 weights = weights + alpha * error * dataMatrix[i] 32 return weights 33 34 def plotBestFit(weights): 35 ''' 36 画出数据集和logisitic回归最佳拟合直线的函数 37 :param weights: 38 :return: 39 ''' 40 import matplotlib.pyplot as plt 41 dataMat,labelMat=loadDataSet() 42 dataArr = array(dataMat) 43 n = shape(dataArr)[0] 44 xcord1 = []; ycord1 = [] 45 xcord2 = []; ycord2 = [] 46 for i in range(n): 47 if int(labelMat[i])== 1: 48 xcord1.append(dataArr[i,1]); ycord1.append(dataArr[i,2]) 49 else: 50 xcord2.append(dataArr[i,1]); ycord2.append(dataArr[i,2]) 51 fig = plt.figure() 52 ax = fig.add_subplot(111) 53 ax.scatter(xcord1, ycord1, s=30, c='red', marker='s') 54 ax.scatter(xcord2, ycord2, s=30, c='green') 55 x = arange(-3.0, 3.0, 0.1) 56 y = (-weights[0]-weights[1]*x)/weights[2] 57 ax.plot(x, y) 58 plt.xlabel('X1'); plt.ylabel('X2'); 59 plt.show() 60 61 if __name__ == '__main__': 62 dataArr,labelMat=loadDataSet() 63 #weight=stocGradAscent0(dataArr,labelMat) 64 weight = stocGradAscent0(array(dataArr), labelMat) 65 #plotBestFit(weight.getA()) # getA,以 ndarray 形式 66 ''' 67 ndarry是numpy中的一个N维数组对象,可以进行矢量算术运算, 68 它是一个通用的同构数据多维容器,即其中的所有元素必须是相同类型的。 69 可以使用array函数创建数组,每个数组都有一个shape(一个表示各维度大小的元组) 70 和一个dtype(一个用于说明数组数据类型的对象) 71 ''' 72 plotBestFit(weight)

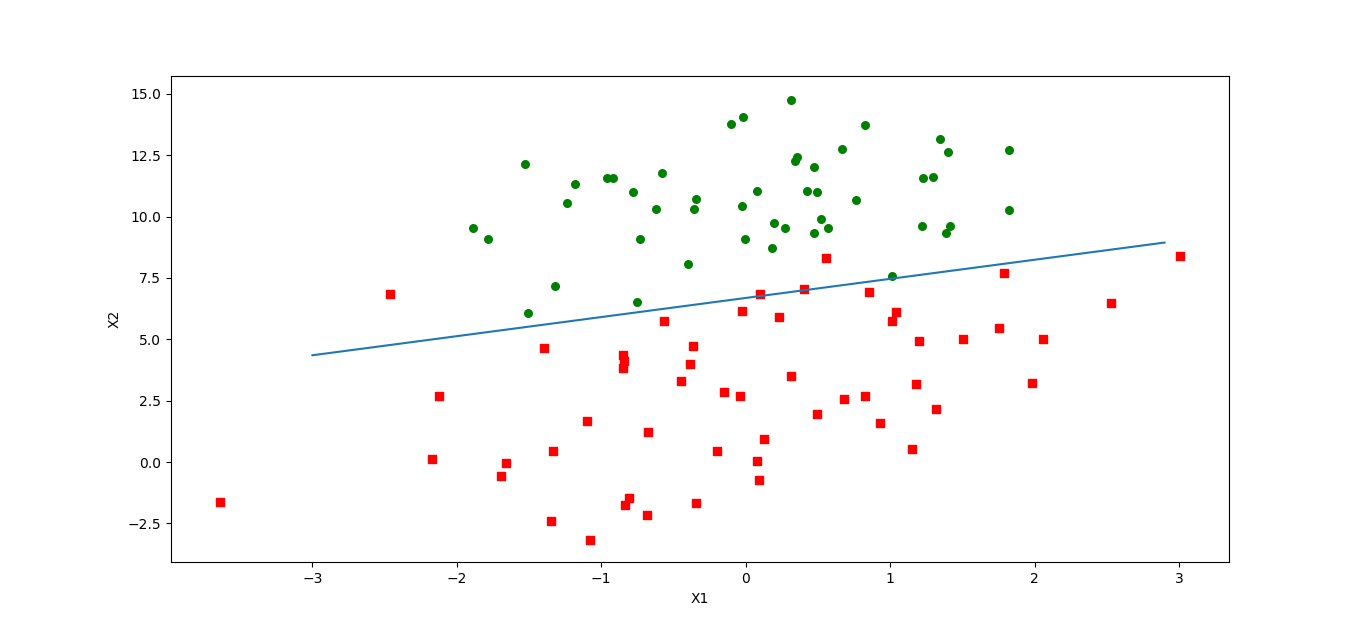

实验结果如下:

分类器错分了三分之一的样本。

1.3从疝气病症预测病马的死亡率

改进了随机梯度上升算法:(1)alpah每次迭代时都进行调整 (2)随机选取样本来更新回归系数

1 #!/usr/bin/env python 2 # -*- coding: utf-8 -*- 3 4 from numpy import * 5 6 7 def sigmoid(inX): 8 return 1.0/(1+exp(-inX)) 9 10 11 def stocGradAscent1(dataMatrix, classLabels, numIter=150): 12 m,n = shape(dataMatrix) 13 weights = ones(n) #initialize to all ones 14 for j in range(numIter): 15 dataIndex = range(m) 16 for i in range(m): 17 alpha = 4/(1.0+j+i)+0.0001 #apha decreases with iteration, does not 18 randIndex = int(random.uniform(0,len(dataIndex)))#go to 0 because of the constant 19 h = sigmoid(sum(dataMatrix[randIndex]*weights)) 20 error = classLabels[randIndex] - h 21 weights = weights + alpha * error * dataMatrix[randIndex] 22 del(dataIndex[randIndex]) 23 return weights 24 25 26 def classifyVector(inX, weights): 27 ''' 28 29 :param inX: 特征向量 30 :param weights: 回归系数 31 :return: 0或者1 32 ''' 33 prob = sigmoid(sum(inX*weights)) 34 if prob > 0.5: return 1.0 35 else: return 0.0 36 37 38 def colicTest(): 39 ''' 40 打开测试集或者训练集 41 :return: 42 ''' 43 frTrain = open('horseColicTraining.txt'); frTest = open('horseColicTest.txt') 44 trainingSet = []; trainingLabels = [] 45 for line in frTrain.readlines(): 46 currLine = line.strip().split('\t') 47 lineArr =[] 48 for i in range(21): 49 lineArr.append(float(currLine[i])) 50 trainingSet.append(lineArr) 51 trainingLabels.append(float(currLine[21])) 52 # 计算回归系数向量 53 trainWeights = stocGradAscent1(array(trainingSet), trainingLabels, 1000) 54 errorCount = 0; numTestVec = 0.0 55 for line in frTest.readlines(): 56 numTestVec += 1.0 57 currLine = line.strip().split('\t') 58 lineArr =[] 59 for i in range(21): 60 lineArr.append(float(currLine[i])) 61 if int(classifyVector(array(lineArr), trainWeights))!= int(currLine[21]): 62 errorCount += 1 63 errorRate = (float(errorCount)/numTestVec) 64 print "测试集的错误率为: %f" % errorRate 65 return errorRate 66 67 68 def multiTest(): 69 numTests = 10; errorSum=0.0 70 for k in range(numTests): 71 errorSum += colicTest() 72 print "在 %d 次迭代后的平均错误率为: %f" % (numTests, errorSum/float(numTests)) 73 74 if __name__ == '__main__': 75 multiTest()

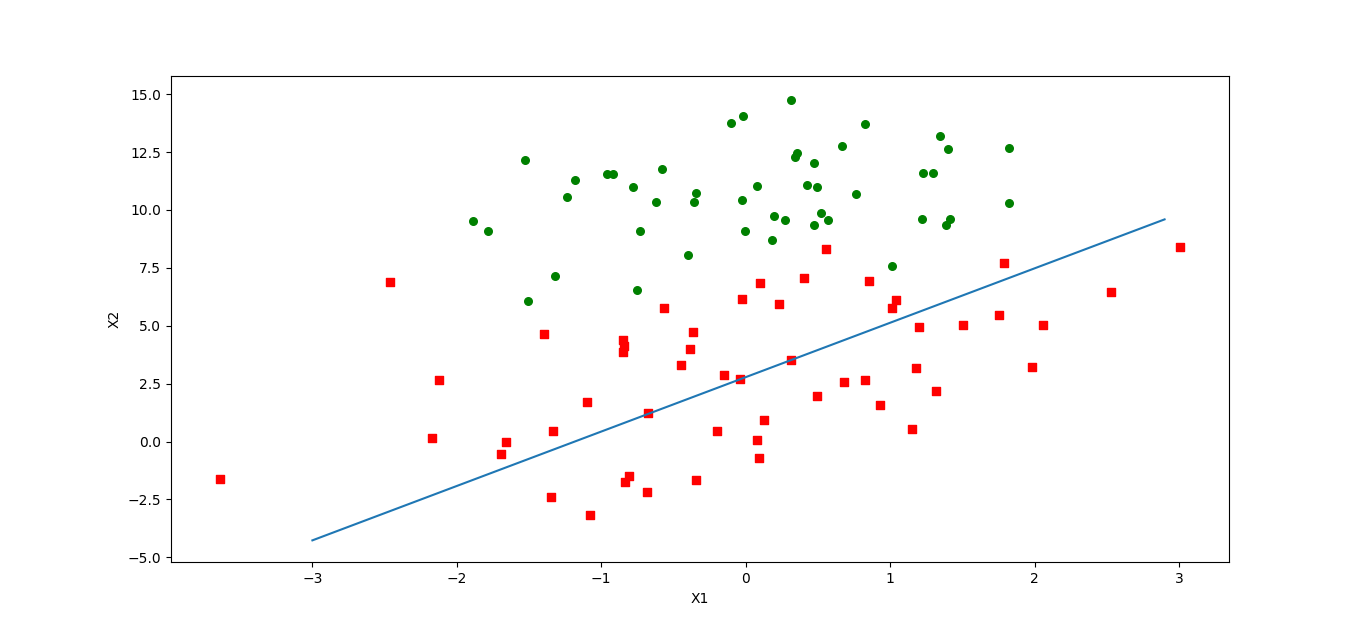

结果如下: