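Kubernetes 08 - Mastering Service in Depth

- Service is a core Kubernetes concept. Creating a Service gives a group of container applications that provide the same function a single, unified entry address, and requests are load-balanced across the backend container applications.

1. Service definition in detail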

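apiVersion: v1
kind: Service
metadata:                   # metadata
  name: string              # Service name; must conform to RFC 1035
  namespace: string         # namespace; if omitted, the "default" namespace is used
  labels:                   # list of custom labels
    - name: string
  annotations:              # list of custom annotations
    - name: string
spec:
  selector: []              # label selector; Pods carrying the given labels become the backends
  type: string              # Service type, i.e. how the Service is accessed. One of ClusterIP, NodePort, LoadBalancer, or ExternalName; the default is ClusterIP.
  clusterIP: string         # virtual IP of the Service. For type=ClusterIP, NodePort, or LoadBalancer, the system assigns one automatically when it is not specified.
  sessionAffinity: string   # session affinity. One of None or ClientIP; the default is None. ClientIP forwards all requests from the same client (identified by client IP address) to the same backend Pod.
  ports:                    # list of ports the Service exposes
    - name: string          # port name
      protocol: string      # port protocol; TCP and UDP are supported, the default is TCP
      port: int             # port the Service listens on
      targetPort: int       # port on the backend Pod
      nodePort: int         # when spec.type=NodePort, the port mapped on the physical host
status:                     # when spec.type=LoadBalancer, records the address of the external load balancer; used in public cloud environments
  loadBalancer:             # external load balancer
    ingress:                # entry points of the external load balancer
      ip: string            # IP address of the external load balancer
      hostname: string      # hostname of the external load balancer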

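2. Basic usage of Service

- Why use a Service?

  - Pods are ephemeral resources, and each Pod has its own IP address.

  - A Deployment may run several Pods at the same time and may destroy or create Pods at any moment, so Pod IP addresses change.

  - A Service uses a label selector to pick a group of Pods and exposes a stable, fixed port for them.

- A Service finds its backend Pods through Endpoints:

  - Endpoints is a resource object in the Kubernetes cluster, stored in etcd, that records the IP addresses of all Pods belonging to a Service.

  - The endpoints controller creates the Endpoints object (with the same name as the Service) automatically only when the Service has a selector; otherwise no Endpoints object is created.

  - List Endpoints resources with: kubectl get ep -o wide -A

  - When Pod addresses change, the Endpoints object changes with them. When the Service receives a request, it uses Endpoints to find the address to forward the request to.

- spec.type sets the Service type:

  - ClusterIP: a virtual service IP address that can be used only inside the Kubernetes cluster to reach Pods. On each Node, kube-proxy forwards the traffic through the iptables rules it sets up.

  - NodePort: uses a port on the host, so clients can reach the service in the Pods through <NodeIP>:<NodePort> on any node.

  - LoadBalancer: uses an external load balancer to distribute traffic to the service. The address of the external load balancer is recorded in the status.loadBalancer field, and a nodePort and clusterIP are defined as well. Used in public cloud environments.

  - ExternalName: maps the Service to the contents of the externalName field (for example, foo.bar.example.com) by returning a CNAME record with that value. No proxying of any kind is set up. (This lets Pods reach services outside the Kubernetes cluster.)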
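The relationship between a label selector and the Endpoints object can be sketched in a few lines of Python. This is only an illustration of the matching idea, not how the endpoints controller is implemented; the function names, the dictionary layout, and the sample Pod data are assumptions made for the example.

```python
from typing import Dict, List, Optional


def selector_matches(selector: Dict[str, str], labels: Dict[str, str]) -> bool:
    """True when every key/value pair of the selector appears in the Pod labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def build_endpoints(selector: Dict[str, str], pods: List[dict],
                    target_port: int) -> Optional[List[str]]:
    """Return the 'ip:port' list for a Service, or None when there is no selector."""
    if not selector:
        # Without a selector no Endpoints object is created automatically.
        return None
    return sorted(
        f"{pod['ip']}:{target_port}"
        for pod in pods
        if selector_matches(selector, pod["labels"])
    )


# Hypothetical Pods; the third one carries a different label and is ignored.
pods = [
    {"ip": "10.10.169.131", "labels": {"app": "svc-nginx-pod"}},
    {"ip": "10.10.36.67", "labels": {"app": "svc-nginx-pod"}},
    {"ip": "10.10.36.99", "labels": {"app": "other-pod"}},
]
endpoints = build_endpoints({"app": "svc-nginx-pod"}, pods, 80)
print(endpoints)
```

When a Pod is recreated with a new IP, rerunning `build_endpoints` yields the updated list, which mirrors how the Endpoints object follows Pod changes while the Service address stays fixed.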

Example: create the Deployment used by the Service examples

1. Create the Deployment YAML file

apiVersion: apps/v1
kind: Deployment
metadata:
  name: svc-nginx-deployment
  namespace: default
spec:
  selector:
    matchLabels:
      app: svc-nginx-pod
  replicas: 2
  template:
    metadata:
      labels:
        app: svc-nginx-pod
    spec:
      volumes:
        - name: html
          hostPath:
            path: /apps/html
      containers:
        - name: svc-nginx-container
          image: nginx:latest
          imagePullPolicy: Never
          command: ["/usr/sbin/nginx", "-g", "daemon off;"]
          ports:
            - containerPort: 80
          volumeMounts:
            - mountPath: /usr/share/nginx/html
              name: html

2. Create and view the Pods

]# kubectl apply -f svc-nginx-deployment.yaml
]# kubectl get pod -o wide -A
NAMESPACE   NAME                                    READY   STATUS    RESTARTS   AGE   IP              NODE        NOMINATED NODE   READINESS GATES
default     svc-nginx-deployment-58c7c77486-6x4c6   1/1     Running   0          10m   10.10.169.131   k8s-node2   <none>           <none>
default     svc-nginx-deployment-58c7c77486-phcqm   1/1     Running   0          10m   10.10.36.67     k8s-node1   <none>           <none>

3. Access nginx via PodIP:podPort

]# echo '<h1>12</h1>' > /apps/html/index.html
]# echo '<h1>13</h1>' > /apps/html/index.html
]# curl 10.10.169.131:80
]# curl 10.10.36.67:80

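2.1. Service type: ClusterIP

- Pods can be reached only from inside the Kubernetes cluster.

- Configuring clusterIP:

  - In the request that creates the Service, you can set the spec.clusterIP field to choose the cluster IP address yourself.

  - The chosen IP address must be valid and must fall within the service-cluster-ip-range CIDR, which is configured through a flag on the API server. If the IP address is invalid, the API server returns HTTP status code 422, indicating an invalid value.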
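The range rule above can be checked locally before a manifest is submitted. The sketch below uses Python's standard `ipaddress` module; the CIDR `10.20.0.0/16` is an assumed value for service-cluster-ip-range chosen for illustration, and the function name is hypothetical. The API server performs its own validation and is the one that returns 422.

```python
import ipaddress


def is_valid_cluster_ip(cluster_ip: str, service_cidr: str) -> bool:
    """True when cluster_ip parses as an IP address and lies inside service_cidr."""
    try:
        address = ipaddress.ip_address(cluster_ip)
    except ValueError:
        # Not an IP address at all.
        return False
    return address in ipaddress.ip_network(service_cidr)


SERVICE_CIDR = "10.20.0.0/16"  # assumption: value of service-cluster-ip-range

for candidate in ("10.20.20.20", "10.30.0.1", "not-an-ip"):
    print(candidate, is_valid_cluster_ip(candidate, SERVICE_CIDR))
```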

Example:

- Uses the Pods created by the svc-nginx-deployment controller.

1. Create the Service YAML file

apiVersion: v1
kind: Service
metadata:
  name: nginx-svc
  namespace: default
  labels:
    app: nginx-svc
spec:
  selector:
    app: svc-nginx-pod
  type: ClusterIP          # ClusterIP is the default type, so it may be omitted
  clusterIP: 10.20.20.20   # virtual service IP; assigned automatically if omitted
  ports:
    - name: nginx
      port: 8888
      targetPort: 80

2. Create the Service

]# kubectl apply -f nginx-svc.yaml

3. View the Service

]# kubectl get svc -o wide -A
NAMESPACE   NAME        TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)    AGE   SELECTOR
default     nginx-svc   ClusterIP   10.20.20.20   <none>        8888/TCP   31s   app=svc-nginx-pod

4. Access nginx

//Access via PodIP:podPort
]# curl 10.10.169.131:80
]# curl 10.10.36.67:80
//Access via ServiceIP:ServicePort
]# curl 10.20.20.20:8888

2.2. Service type: NodePort

- Clients can reach the service in the Pods through <NodeIP>:<NodePort> on any node.
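A nodePort value is accepted only if it falls inside the node port range configured on the API server, which defaults to 30000-32767. The following sketch shows that check; the function name is hypothetical, and the custom range in the second call is an assumed example of a cluster whose range has been widened.

```python
from typing import Tuple

DEFAULT_NODE_PORT_RANGE: Tuple[int, int] = (30000, 32767)  # Kubernetes default


def node_port_allowed(node_port: int,
                      port_range: Tuple[int, int] = DEFAULT_NODE_PORT_RANGE) -> bool:
    """True when node_port lies inside the inclusive port range."""
    low, high = port_range
    return low <= node_port <= high


print(node_port_allowed(30080))
print(node_port_allowed(8880))                # outside the default range
print(node_port_allowed(8880, (8000, 9000)))  # assumed custom range
```

A port such as 8880 is therefore usable only on clusters whose node port range was changed from the default.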

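Example:

- Uses the Pods created by the svc-nginx-deployment controller.

1. Create the Service YAML file

apiVersion: v1
kind: Service
metadata:
  name: nginx-svc
  namespace: default
  labels:
    app: nginx-svc
spec:
  selector:
    app: svc-nginx-pod
  type: NodePort           # type=NodePort
  clusterIP: 10.20.20.20   # virtual service IP; assigned automatically if omitted
  ports:
    - name: nginx
      port: 8888
      nodePort: 8880       # outside the default 30000-32767 range; accepted only if the API server's node port range allows it
      targetPort: 80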

2. Create the Service

]# kubectl apply -f nginx-svc.yaml

3. View the Service

]# kubectl get svc -o wide -A
NAMESPACE   NAME        TYPE       CLUSTER-IP    EXTERNAL-IP   PORT(S)         AGE   SELECTOR
default     nginx-svc   NodePort   10.20.20.20   <none>        8888:8880/TCP   14s   app=svc-nginx-pod

4. Access nginx

//Access via PodIP:podPort
]# curl 10.10.169.131:80
]# curl 10.10.36.67:80
//Access via ServiceIP:ServicePort
]# curl 10.20.20.20:8888
//Access via NodeIP:NodePort
]# curl 10.1.1.12:8880

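2.3. Multi-port Service

- If a Pod application exposes several ports, the Service definition can declare several ports as well, each mapped to the corresponding Pod port. When a Service has more than one port, every port must be given a name.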

Example 1:

apiVersion: v1
kind: Service
metadata:
  name: test-svc
  namespace: default
  labels:
    app: test-svc
spec:
  selector:
    app: test-pod
  ports:
    - name: web          #!
      port: 80
      targetPort: 80
    - name: management   #!
      port: 7676
      targetPort: 7676

Example 2:

- The two ports use different layer-4 protocols, TCP and UDP.

apiVersion: v1
kind: Service
metadata:
  name: test-svc
  namespace: default
  labels:
    app: test-svc
spec:
  selector:
    app: test-pod
  ports:
    - name: dns
      port: 53
      targetPort: 53
      protocol: UDP   #!
    - name: dns-tcp
      port: 53
      targetPort: 53
      protocol: TCP   #!

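2.4. Service for external services

- A Service for external services can use a process outside Kubernetes, or Pods in another Kubernetes cluster or Namespace, as its endpoints. Typical cases:

  - You want to use a database cluster outside Kubernetes in production, but a database inside Kubernetes in the test environment.

  - You want the Service to point to Pods in another Namespace or in another Kubernetes cluster.

  - You are migrating a workload to Kubernetes and, while evaluating the approach, run only part of the backends in Kubernetes.

- To implement such a Service, simply do not configure a label selector (leave service.spec.selector empty or omit it):

  - (1) Create a Service without a label selector. The Service selects no backend Pods, and the system does not create an Endpoints object automatically.

  - (2) Manually create an Endpoints object with the same name as the Service, pointing to the actual backend address.

- The IP addresses in Endpoints must not be Kubernetes Service IPs, because kube-proxy does not support a virtual IP as a destination.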
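The pairing rules for a selector-less Service and its hand-written Endpoints can be expressed as a small consistency check. This is a sketch over plain dictionaries shaped like the manifests, not a Kubernetes client call; the function name is hypothetical, the service CIDR `10.20.0.0/16` is an assumed value, and the port-name check reflects the rule that a named Service port needs an Endpoints port with the same name.

```python
import ipaddress
from typing import Dict, List


def check_external_service(service: Dict, endpoints: Dict, service_cidr: str) -> List[str]:
    """Return a list of problems found in a Service/Endpoints pair (empty if none)."""
    problems = []
    if service["metadata"]["name"] != endpoints["metadata"]["name"]:
        problems.append("Service and Endpoints must share the same name")

    service_port_names = {port.get("name") for port in service["spec"]["ports"]}
    service_network = ipaddress.ip_network(service_cidr)

    for subset in endpoints.get("subsets", []):
        for port in subset.get("ports", []):
            if port.get("name") not in service_port_names:
                problems.append(f"port name {port.get('name')!r} has no matching Service port")
        for address in subset.get("addresses", []):
            if ipaddress.ip_address(address["ip"]) in service_network:
                problems.append(f"{address['ip']} is a Service IP and cannot be an endpoint")
    return problems


service = {"metadata": {"name": "ep-nginx-svc"},
           "spec": {"ports": [{"name": "nginx", "port": 18888, "targetPort": 80}]}}
endpoints = {"metadata": {"name": "ep-nginx-svc"},
             "subsets": [{"addresses": [{"ip": "10.1.1.11"}],
                          "ports": [{"name": "nginx", "port": 80}]}]}
problems = check_external_service(service, endpoints, "10.20.0.0/16")
print(problems)
```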

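Example:

1. Install nginx

- Install nginx on 10.1.1.11

]# yum install nginx
]# systemctl start nginx
]# echo '<h1>nginx 10.1.1.11</h1>' > /usr/share/nginx/html/index.html
]# curl 10.1.1.11:80
<h1>nginx 10.1.1.11</h1>

2. Create the YAML file for the Service and Endpoints

- If the ServicePort defines a "name" field, the "name" field of the EndpointPort must have the same value; otherwise the service cannot be accessed correctly.

- The Service and the Endpoints object must have the same name.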

apiVersion: v1
kind: Service
metadata:
  name: ep-nginx-svc   #!
spec:
  ports:
    - port: 18888
      targetPort: 80
      name: nginx      #!
---
apiVersion: v1
kind: Endpoints
metadata:
  name: ep-nginx-svc   #!
subsets:
  - addresses:
      - ip: 10.1.1.11  # IP address of the machine running the external service
    ports:
      - port: 80       # port the external service listens on
        name: nginx    #!

3. Create and view the Service and Endpoints

//Create the Service and Endpoints
]# kubectl apply -f ep-nginx-svc.yaml
//View the Service
]# kubectl get svc -o wide -A
NAMESPACE   NAME           TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)     AGE   SELECTOR
default     ep-nginx-svc   ClusterIP   10.20.199.164   <none>        18888/TCP   91s   <none>
//View the Endpoints
]# kubectl get ep -o wide -A
NAMESPACE   NAME           ENDPOINTS      AGE
default     ep-nginx-svc   10.1.1.11:80   106s
//View the Service details
]# kubectl describe svc ep-nginx-svc
Name:              ep-nginx-svc
Namespace:         default
Labels:            <none>
Annotations:       <none>
Selector:          <none>
Type:              ClusterIP
IP Families:       <none>
IP:                10.20.199.164
IPs:               10.20.199.164
Port:              nginx  18888/TCP
TargetPort:        80/TCP
Endpoints:         10.1.1.11:80
Session Affinity:  None
Events:            <none>

4. Access nginx

//Access via ServiceIP:ServicePort
]# curl 10.20.199.164:18888
<h1>nginx 10.1.1.11</h1>

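2.5. Headless Service

- Headless Service: the Service is used only to select the backend Pods, and the Pod IPs are handed directly to the developer.

- When to use a Headless Service:

  - Developers want to control the load-balancing policy themselves instead of using the default load balancing provided by the Service.

  - The application needs to know the other instances that belong to the same service group.

- To create a Headless Service, set the cluster IP (spec.clusterIP) to "None". No ClusterIP (entry IP address) is assigned to the Service; the list of backend Pods chosen by the label selector is simply returned to the calling client.

- A headless Service is not allocated a cluster IP, kube-proxy does not handle it, and the platform performs no load balancing or routing for it. How DNS is configured automatically depends on whether the Service defines a label selector.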

Example:

1. Create the Service YAML file

apiVersion: v1
kind: Service
metadata:
  name: nginx-svc
  namespace: default
  labels:
    app: nginx-svc
spec:
  selector:
    app: svc-nginx-pod
  clusterIP: None   # clusterIP=None
  ports:
    - port: 8888    # must be set; otherwise no Pods are selected, i.e. Endpoints is empty in the Service details

2. Create the Service

]# kubectl apply -f nginx-svc.yaml

3. View the Service

]# kubectl get svc -o wide -A
NAMESPACE   NAME        TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)    AGE   SELECTOR
default     nginx-svc   ClusterIP   None         <none>        8888/TCP   4s    app=svc-nginx-pod
]# kubectl describe svc nginx-svc
Name:              nginx-svc
Namespace:         default
Labels:            app=nginx-svc
Annotations:       <none>
Selector:          app=svc-nginx-pod
Type:              ClusterIP
IP Families:       <none>
IP:                None
IPs:               None
Port:              <unset>  8888/TCP
TargetPort:        8888/TCP
Endpoints:         10.10.169.131:8888,10.10.36.67:8888
Session Affinity:  None
Events:            <none>

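- A headless Service has no ClusterIP of its own. To access it, the client first obtains the full list of Pods carrying the label "app=svc-nginx-pod" and then decides for itself how to use that list. StatefulSet, for example, relies on a Headless Service to return multiple service addresses to clients.

- Headless Services are very useful for "decentralized" application clusters.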
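Because a headless Service hands the client a list of Pod addresses, the balancing policy lives in the client. The sketch below shows one possible policy, simple round robin, over an address list that is passed in directly. In a real cluster the list would typically come from a DNS lookup of the Service name; the class name and the sample addresses here are assumptions for illustration.

```python
import itertools
from typing import Iterable


class RoundRobinClient:
    """Cycles through backend addresses in a fixed order."""

    def __init__(self, addresses: Iterable[str]):
        ordered = sorted(addresses)
        if not ordered:
            raise ValueError("at least one backend address is required")
        self._cycle = itertools.cycle(ordered)

    def next_backend(self) -> str:
        """Return the address the next request should be sent to."""
        return next(self._cycle)


# Hypothetical Pod IPs returned for the headless Service.
client = RoundRobinClient(["10.10.36.67", "10.10.169.131"])
picks = [client.next_backend() for _ in range(3)]
print(picks)
```

Any other policy (random choice, least connections, consistent hashing) can replace `next_backend` without touching the Service definition, which is the point of going headless.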

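3. Accessing Pods or Services from outside the cluster

- Pods and Services are virtual concepts that exist only within the Kubernetes cluster, so client systems outside the cluster cannot reach them through a Pod IP address or through a Service's virtual IP address and virtual port. To let external clients access these services, map the Pod or Service port to the host so that client applications can reach the container application through the physical machine.

3.1. Mapping container ports to the physical machine

3.1.1. Setting hostPort at the container level

- Setting hostPort at the container level maps the container application's port to the physical machine.

- The Pod can be reached only through the physical IP address of the host it runs on.

Example:

1. Create the Deployment YAML file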

apiVersion: apps/v1
kind: Deployment
metadata:
  name: network-nginx-deployment
  namespace: default
spec:
  selector:
    matchLabels:
      app: network-nginx-pod
  replicas: 2
  template:
    metadata:
      labels:
        app: network-nginx-pod
    spec:
      volumes:
        - name: html
          hostPath:
            path: /apps/html
      containers:
        - name: network-nginx-container
          image: nginx:latest
          imagePullPolicy: Never
          command: ["/usr/sbin/nginx", "-g", "daemon off;"]
          ports:
            - containerPort: 80
              hostPort: 18081   # hostPort to map on the physical machine
          volumeMounts:
            - mountPath: /usr/share/nginx/html
              name: html

2. Create and view the Pods

]# kubectl apply -f network-nginx-deployment.yaml
]# kubectl get pods -o wide -A
NAMESPACE   NAME                                        READY   STATUS    RESTARTS   AGE   IP              NODE        NOMINATED NODE   READINESS GATES
default     network-nginx-deployment-57c88d84d6-878jx   1/1     Running   0          19s   10.10.169.132   k8s-node2   <none>           <none>
default     network-nginx-deployment-57c88d84d6-r9xrg   1/1     Running   0          19s   10.10.36.68     k8s-node1   <none>           <none>

3. Access the Pods

]# echo '<h1>12</h1>' > /apps/html/index.html
]# echo '<h1>13</h1>' > /apps/html/index.html
//Access via NodeIP:hostPort
]# curl 10.1.1.12:18081
]# curl 10.1.1.13:18081

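3.1.2. Setting hostNetwork at the Pod level

- Setting hostNetwork=true at the Pod level maps the ports of all containers in the Pod directly onto the physical machine.

- The Pod can be reached only through the physical IP address of the host it runs on.

- Points to note when setting hostNetwork=true:

  - In the container's ports section, if hostPort is not specified, it defaults to the value of containerPort.

  - In the container's ports section, if hostPort is specified, it must equal containerPort.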
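The two hostPort rules for hostNetwork=true can be summarized as a small function. This is a sketch of the rule as stated above, not the validation code Kubernetes itself runs; the function name and its return convention (None meaning "not mapped") are assumptions.

```python
from typing import Optional


def effective_host_port(container_port: int,
                        host_port: Optional[int] = None,
                        host_network: bool = False) -> Optional[int]:
    """Return the port opened on the host, or None if the port is not mapped."""
    if not host_network:
        # Without hostNetwork, only an explicit hostPort maps the container port.
        return host_port
    if host_port is None:
        # With hostNetwork=true, hostPort defaults to containerPort.
        return container_port
    if host_port != container_port:
        raise ValueError("with hostNetwork=true, hostPort must equal containerPort")
    return host_port


print(effective_host_port(80, host_network=True))   # defaults to containerPort
print(effective_host_port(80, host_port=18081))     # explicit hostPort, no hostNetwork
```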

Example:

1. Create the Deployment YAML file

apiVersion: apps/v1
kind: Deployment
metadata:
  name: network-nginx-deployment
  namespace: default
spec:
  selector:
    matchLabels:
      app: network-nginx-pod
  replicas: 2
  template:
    metadata:
      labels:
        app: network-nginx-pod
    spec:
      volumes:
        - name: html
          hostPath:
            path: /apps/html
      containers:
        - name: network-nginx-container
          image: nginx:latest
          imagePullPolicy: Never
          command: ["/usr/sbin/nginx", "-g", "daemon off;"]
          ports:
            - containerPort: 80   #!
              hostPort: 80        #!
          volumeMounts:
            - mountPath: /usr/share/nginx/html
              name: html
      hostNetwork: true           #!

2. Create and view the Pods

]# kubectl apply -f network-nginx-deployment.yaml
]# kubectl get pods -o wide -A
NAMESPACE   NAME                                        READY   STATUS    RESTARTS   AGE   IP          NODE        NOMINATED NODE   READINESS GATES
default     network-nginx-deployment-6f8db995f9-cmvb7   1/1     Running   0          11s   10.1.1.12   k8s-node1   <none>           <none>
default     network-nginx-deployment-6f8db995f9-sdhps   1/1     Running   0          11s   10.1.1.13   k8s-node2   <none>           <none>

3. Access the Pods

]# echo '<h1>12</h1>' > /apps/html/index.html
]# echo '<h1>13</h1>' > /apps/html/index.html
//Access via NodeIP:hostPort
]# curl 10.1.1.12:80
]# curl 10.1.1.13:80

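3.2. Mapping Service ports to the physical machine

3.2.1. Setting the Service type to NodePort

- Set nodePort to map the port to the physical machine, and set the Service type to NodePort.

Example:

- See section 2.2 (Service type: NodePort) on this page.

3.2.2. Setting the Service type to LoadBalancer

- Setting the type to LoadBalancer maps the Service to a load balancer address provided by the cloud provider. This usage applies only when Services are set up on a public cloud provider's platform.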

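4. DNS service setup and configuration guide

- As a basic function of service discovery, services must be reachable by name inside the cluster. This requires a cluster-wide DNS service that resolves service names to ClusterIPs.

- The DNS service has gone through three stages over the course of Kubernetes' development.

  - In Kubernetes 1.2, DNS was provided by SkyDNS, which consisted of four containers: kube2sky, skydns, etcd, and healthz.

    - The kube2sky container watches Service resources in Kubernetes, generates DNS records from each Service's name and IP address, and saves them to etcd.

    - The skydns container reads the DNS records from etcd and answers DNS queries from client container applications.

    - The healthz container provides health checks for the skydns service.

  - Starting with Kubernetes 1.4, SkyDNS was replaced by KubeDNS, mainly because the SkyDNS components communicated with each other heavily and overall performance was low. KubeDNS consists of three containers: kubedns, dnsmasq, and sidecar. It drops the etcd store used by SkyDNS and keeps DNS records directly in memory to speed up queries.

    - The kubedns container watches Service resources in Kubernetes, generates DNS records from each Service's name and IP address, and keeps them in memory.

    - The dnsmasq container fetches DNS records from kubedns, caches them, and answers DNS queries from client container applications.

    - The sidecar container provides health checks for the kubedns and dnsmasq services.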

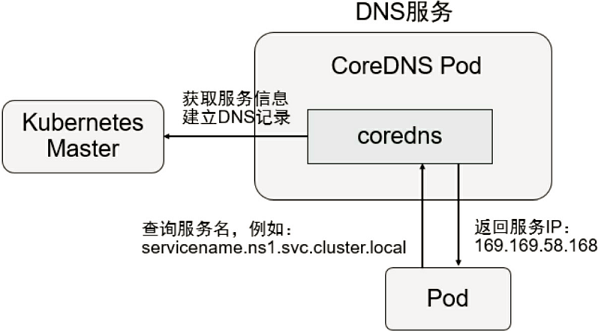

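  - Starting with Kubernetes 1.11, the cluster DNS service is provided by CoreDNS. CoreDNS is a CNCF project: a high-performance, plugin-based, easily extensible DNS server written in Go. It resolves several KubeDNS problems, such as the dnsmasq security vulnerabilities and the inability to use stubDomains with externalName.

    - CoreDNS supports custom DNS records and upstream DNS server configuration, so Kubernetes' service-based internal DNS and the data center's physical DNS can be managed together.

    - CoreDNS does not use a multi-container architecture; a single container provides everything the three KubeDNS containers did.

    - The overall architecture of CoreDNS is shown in the figure:

4.1. Installing CoreDNS

- See the notes on deploying Kubernetes.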

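4.2. Pod-level DNS configuration

- Pod-level DNS policy (spec.dnsPolicy, default "ClusterFirst"):

  - Default: inherit the DNS settings of the host the Pod runs on.

  - ClusterFirst: prefer the DNS service of the Kubernetes environment (such as the name resolution provided by CoreDNS); names it cannot resolve are forwarded to the DNS servers inherited from the host.

  - ClusterFirstWithHostNet: same as ClusterFirst; Pods running in hostNetwork mode should set this policy explicitly.

  - None: ignore the DNS configuration of the Kubernetes environment and define DNS settings through spec.dnsConfig. This option was introduced in Kubernetes 1.9, became Beta in 1.10, and became stable in 1.14.

- The DNS parameters given in dnsConfig are merged with the policy selected by dnsPolicy. To use cluster DNS together with hostNetwork, the DNS policy must be set explicitly to 'ClusterFirstWithHostNet'.

- Custom DNS configuration is set through the spec.dnsConfig field, which accepts the following:

  - nameservers: a list of DNS servers; at most 3 can be set.

  - searches: a list of DNS domain suffixes used for name lookup; at most 6 can be set.

  - options: other optional DNS parameters, such as ndots and timeout, expressed as a name or a name/value pair.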
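How dnsConfig is merged into a Pod's /etc/resolv.conf can be pictured with a small rendering function. This is a simplified sketch of the merge described above, assuming dnsPolicy contributes a base list of nameservers and search domains and dnsConfig entries are appended to it; the real kubelet logic may differ in details such as truncation order. All names and sample values are assumptions.

```python
from typing import Dict, List

MAX_NAMESERVERS = 3  # limit stated for dnsConfig.nameservers
MAX_SEARCHES = 6     # limit stated for dnsConfig.searches


def render_resolv_conf(base_nameservers: List[str], base_searches: List[str],
                       dns_config: Dict) -> str:
    """Merge policy-provided DNS settings with dnsConfig and render resolv.conf text."""
    nameservers = list(base_nameservers)
    for server in dns_config.get("nameservers", []):
        if server not in nameservers:
            nameservers.append(server)

    searches = list(base_searches)
    for suffix in dns_config.get("searches", []):
        if suffix not in searches:
            searches.append(suffix)

    # Options are either a bare name or a name:value pair.
    options = [
        f"{opt['name']}:{opt['value']}" if "value" in opt else opt["name"]
        for opt in dns_config.get("options", [])
    ]

    lines = [f"nameserver {server}" for server in nameservers[:MAX_NAMESERVERS]]
    if searches:
        lines.append("search " + " ".join(searches[:MAX_SEARCHES]))
    if options:
        lines.append("options " + " ".join(options))
    return "\n".join(lines)


# Sample values: one nameserver inherited from the host, plus a custom dnsConfig.
dns_config = {
    "nameservers": ["1.2.3.4"],
    "searches": ["nsl.svc.cluster.local", "my.dns.search.suffix"],
    "options": [{"name": "ndots", "value": "2"}, {"name": "edns0"}],
}
resolv_conf = render_resolv_conf(["114.114.114.114"], [], dns_config)
print(resolv_conf)
```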

Example:

1. Create the Deployment YAML file

apiVersion: apps/v1
kind: Deployment
metadata:
  name: dns-busybox-deployment
  namespace: default
spec:
  selector:
    matchLabels:
      app: dns-busybox-pod
  replicas: 1
  template:
    metadata:
      labels:
        app: dns-busybox-pod
    spec:
      containers:
        - name: dns-busybox-container
          image: busybox:1.28
          imagePullPolicy: IfNotPresent
          command: ["/bin/sleep", "3600"]
      dnsPolicy: Default   #!
      dnsConfig:           #!
        nameservers:
          - 1.2.3.4
        searches:
          - nsl.svc.cluster.local
          - my.dns.search.suffix
        options:
          - name: ndots
            value: "2"
          - name: edns0

2. Create and view the Pod

]# kubectl apply -f dns-busybox-deployment.yaml
]# kubectl get pods -o wide -A
NAMESPACE   NAME                                      READY   STATUS    RESTARTS   AGE   IP              NODE        NOMINATED NODE   READINESS GATES
default     dns-busybox-deployment-658df4ccdd-8drvl   1/1     Running   0          5s    10.10.169.134   k8s-node2   <none>           <none>

3. Enter the Pod and view /etc/resolv.conf

- "nameserver 114.114.114.114" comes from /etc/resolv.conf on the host.

]# kubectl exec -it dns-busybox-deployment-658df4ccdd-8drvl -- /bin/sh
/ # cat /etc/resolv.conf
nameserver 114.114.114.114
nameserver 1.2.3.4
search nsl.svc.cluster.local my.dns.search.suffix
options ndots:2 edns0

5. Ingress: HTTP layer-7 routing

- Ingress documentation: https://kubernetes.io/zh/docs/concepts/services-networking/ingress/

- Ingress controller documentation: https://kubernetes.io/zh/docs/concepts/services-networking/ingress-controllers/

- https://kubernetes.github.io/ingress-nginx/

5.1. Introduction to Ingress

5.1.1. What is Ingress?

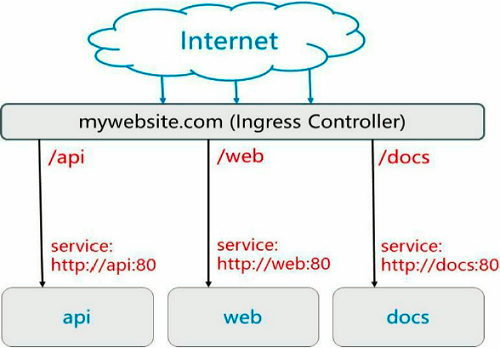

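- For HTTP-based services, different URLs often map to different backend services or virtual hosts. Application-layer forwarding of this kind cannot be achieved with the Kubernetes Service mechanism alone. Starting with Kubernetes 1.1, the Ingress resource object was added to forward requests for different URLs to different backend Services, providing routing at the HTTP layer.

- Ingress exposes HTTP and HTTPS routes from outside the cluster to Services inside the cluster. Traffic routing is controlled by the rules defined on the Ingress resource.

- Ingress does not expose arbitrary ports or protocols. To expose services other than HTTP and HTTPS to the Internet, a Service of type NodePort or LoadBalancer is typically used.

- An Ingress controller usually fulfills the Ingress with a load balancer, though it can also configure an edge router or other frontends to help handle the traffic.

- Ingress can give Services externally reachable URLs, load-balance traffic, terminate SSL/TLS, and provide name-based virtual hosting.

- A typical example of HTTP-layer routing, as shown in the figure:

  - Requests to http://mywebsite.com/api are routed to the backend Service named api;

  - Requests to http://mywebsite.com/web are routed to the backend Service named web;

  - Requests to http://mywebsite.com/docs are routed to the backend Service named docs.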

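5.1.2. Preparing the Ingress environment

- Building a complete layer-7 load balancer with Ingress takes two steps:

  - (1) Install an Ingress Controller.

  - (2) Define an Ingress resource object that ties in the Services of the Pods to be exposed.

- An Ingress Controller must be present for an Ingress to take effect. Creating only the Ingress resource has no effect.

- Many Ingress Controllers are available; choose one to install. Different Ingress controllers behave slightly differently.

  - HAProxy: the least favored option

  - Nginx: the default choice

  - Traefik: built for microservices

  - Envoy: service mesh; microservice deployments tend to favor Envoy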

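5.1.3. Ingress rules

- kubectl explain ingress.spec.rules (each rule contains the following information)

  - host:

    - host not specified: the rule applies to all inbound HTTP traffic arriving at the specified IP address.

    - host specified:

      - If host is precise, the request matches the rule when the HTTP Host header equals host.

      - If host is a wildcard, the request matches the rule when the HTTP Host header, with its first label removed, equals the suffix of the wildcard rule.

  - http:

    - paths:

      - backend: defines the Service the request is forwarded to.

      - path: matched against the path of the request. It must begin with "/". When unspecified, all paths of incoming requests match.

      - pathType: determines how path matching is interpreted. The values covered here are Exact and Prefix (the API also defines ImplementationSpecific).

        - Exact: matches the URL path exactly.

        - Prefix: matches on a URL path prefix split by '/'. Matching is done element by element, so the request matches when the elements of the Prefix value are a prefix of the elements of the request path (for example, /foo matches /foo/bar but not /foobar).

- HTTP (and HTTPS) requests to the Ingress that match both the host and the path are sent to the backend.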
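The host and path matching rules above can be sketched in Python. This is a minimal illustration of the semantics, not the code of any Ingress controller: the function names and the rule dictionaries are hypothetical, and the sketch picks the first matching rule, whereas real controllers generally prefer the most specific match.

```python
from typing import List, Optional


def host_matches(rule_host: Optional[str], request_host: str) -> bool:
    """Match an Ingress rule host (exact or '*.'-wildcard) against a Host header."""
    if not rule_host:
        return True  # no host on the rule: applies to every request
    if rule_host.startswith("*."):
        suffix = rule_host[1:]  # e.g. ".foo.com"
        if not request_host.endswith(suffix):
            return False
        first_label = request_host[: -len(suffix)]
        # The wildcard covers exactly one DNS label.
        return bool(first_label) and "." not in first_label
    return rule_host == request_host


def path_matches(rule_path: str, path_type: str, request_path: str) -> bool:
    """Match a request path using Exact or element-wise Prefix semantics."""
    if path_type == "Exact":
        return rule_path == request_path
    if path_type == "Prefix":
        rule_parts = [part for part in rule_path.split("/") if part]
        request_parts = [part for part in request_path.split("/") if part]
        return request_parts[: len(rule_parts)] == rule_parts
    raise ValueError(f"unsupported pathType: {path_type}")


def route(rules: List[dict], request_host: str, request_path: str,
          default_backend: Optional[str] = None) -> Optional[str]:
    """Return the Service name of the first matching rule, else the default backend."""
    for rule in rules:
        if host_matches(rule.get("host"), request_host) and \
                path_matches(rule["path"], rule["pathType"], request_path):
            return rule["service"]
    return default_backend


rules = [
    {"host": "mywebsite.com", "path": "/api", "pathType": "Prefix", "service": "api"},
    {"host": "mywebsite.com", "path": "/web", "pathType": "Prefix", "service": "web"},
    {"host": "mywebsite.com", "path": "/docs", "pathType": "Prefix", "service": "docs"},
]
print(route(rules, "mywebsite.com", "/api/v1/users"))
print(route(rules, "mywebsite.com", "/webhooks", default_backend="default-http-backend"))
```

The second call falls through to the default backend because `/webhooks` shares a string prefix with `/web` but not a path element, which is the difference between element-wise and substring prefix matching.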

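5.1.4. Default backend

- kubectl explain ingress.spec.defaultBackend

  - resource: a reference to another Kubernetes resource object in the Ingress object's namespace. Mutually exclusive with "service".

    - apiGroup: the group of the referenced resource. If apiGroup is not specified, the given kind must be in the core API group. It is required for any other third-party type.

    - kind: the type of the referenced resource.

    - name: the name of the referenced resource.

  - service: references a Service as the backend. Mutually exclusive with "resource".

    - name: the referenced Service. It must be in the same namespace as the Ingress object.

    - port: the port of the referenced Service. IngressServiceBackend requires either a port name or a port number.

- An Ingress with no rules sends all requests to the default backend defined by defaultBackend.

- defaultBackend is conventionally a configuration option of the Ingress controller and is not specified in the Ingress resource.

- If .spec.rules is not specified, .spec.defaultBackend must be. If defaultBackend is not set, how requests that match no rule are handled is up to the Ingress controller.

- If none of the hosts or paths in the Ingress object match the HTTP request, the traffic is routed to the default backend.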

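5.2. Installing the Ingress Controller

- Before defining Ingress policies, deploy an Ingress Controller so that all backend Services share one unified entry point.

- The Ingress Controller must forward requests to backends based on HTTP URLs and allow layer-7 load distribution policies to be configured flexibly.

- If the public cloud provider offers an HTTP-routing LoadBalancer of this kind, it can also be used as the Ingress Controller.

- In Kubernetes, the Ingress Controller runs as a Pod and watches the backend services behind the API Server's /ingress endpoint. When a Service changes, the Ingress Controller should update its forwarding rules automatically.

- An ingress controller is a Pod, or a group of Pods, running an application with layer-7 proxying or scheduling capability.

- The Ingress Controller installed here is ingress-nginx-controller.

1. Download ingress-nginx-controller

//Download ingress-nginx-controller-v1.0.4
Project page: https://github.com/kubernetes/ingress-nginx
Download: ingress-nginx-controller-v1.0.4.zip
//Unpack
ingress-nginx-controller-v1.0.4.zip
//Locate the YAML file for ingress-nginx-controller
ingress-nginx-controller-v1.0.4\deploy\static\provider\baremetal\deploy.yaml

- deploy.yaml before modification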

apiVersion: v1 kind: Namespace metadata: name: ingress-nginx labels: app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx --- # Source: ingress-nginx/templates/controller-serviceaccount.yaml apiVersion: v1 kind: ServiceAccount metadata: labels: helm.sh/chart: ingress-nginx-4.0.6 app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/version: 1.0.4 app.kubernetes.io/managed-by: Helm app.kubernetes.io/component: controller name: ingress-nginx namespace: ingress-nginx automountServiceAccountToken: true --- # Source: ingress-nginx/templates/controller-configmap.yaml apiVersion: v1 kind: ConfigMap metadata: labels: helm.sh/chart: ingress-nginx-4.0.6 app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/version: 1.0.4 app.kubernetes.io/managed-by: Helm app.kubernetes.io/component: controller name: ingress-nginx-controller namespace: ingress-nginx data: allow-snippet-annotations: 'true' --- # Source: ingress-nginx/templates/clusterrole.yaml apiVersion: rbac.authorization.k8s.io/v1 kind: ClusterRole metadata: labels: helm.sh/chart: ingress-nginx-4.0.6 app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/version: 1.0.4 app.kubernetes.io/managed-by: Helm name: ingress-nginx rules: - apiGroups: - '' resources: - configmaps - endpoints - nodes - pods - secrets verbs: - list - watch - apiGroups: - '' resources: - nodes verbs: - get - apiGroups: - '' resources: - services verbs: - get - list - watch - apiGroups: - networking.k8s.io resources: - ingresses verbs: - get - list - watch - apiGroups: - '' resources: - events verbs: - create - patch - apiGroups: - networking.k8s.io resources: - ingresses/status verbs: - update - apiGroups: - networking.k8s.io resources: - ingressclasses verbs: - get - list - watch --- # Source: ingress-nginx/templates/clusterrolebinding.yaml apiVersion: rbac.authorization.k8s.io/v1 kind: 
ClusterRoleBinding metadata: labels: helm.sh/chart: ingress-nginx-4.0.6 app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/version: 1.0.4 app.kubernetes.io/managed-by: Helm name: ingress-nginx roleRef: apiGroup: rbac.authorization.k8s.io kind: ClusterRole name: ingress-nginx subjects: - kind: ServiceAccount name: ingress-nginx namespace: ingress-nginx --- # Source: ingress-nginx/templates/controller-role.yaml apiVersion: rbac.authorization.k8s.io/v1 kind: Role metadata: labels: helm.sh/chart: ingress-nginx-4.0.6 app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/version: 1.0.4 app.kubernetes.io/managed-by: Helm app.kubernetes.io/component: controller name: ingress-nginx namespace: ingress-nginx rules: - apiGroups: - '' resources: - namespaces verbs: - get - apiGroups: - '' resources: - configmaps - pods - secrets - endpoints verbs: - get - list - watch - apiGroups: - '' resources: - services verbs: - get - list - watch - apiGroups: - networking.k8s.io resources: - ingresses verbs: - get - list - watch - apiGroups: - networking.k8s.io resources: - ingresses/status verbs: - update - apiGroups: - networking.k8s.io resources: - ingressclasses verbs: - get - list - watch - apiGroups: - '' resources: - configmaps resourceNames: - ingress-controller-leader verbs: - get - update - apiGroups: - '' resources: - configmaps verbs: - create - apiGroups: - '' resources: - events verbs: - create - patch --- # Source: ingress-nginx/templates/controller-rolebinding.yaml apiVersion: rbac.authorization.k8s.io/v1 kind: RoleBinding metadata: labels: helm.sh/chart: ingress-nginx-4.0.6 app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/version: 1.0.4 app.kubernetes.io/managed-by: Helm app.kubernetes.io/component: controller name: ingress-nginx namespace: ingress-nginx roleRef: apiGroup: rbac.authorization.k8s.io kind: Role name: ingress-nginx 
subjects: - kind: ServiceAccount name: ingress-nginx namespace: ingress-nginx --- # Source: ingress-nginx/templates/controller-service-webhook.yaml apiVersion: v1 kind: Service metadata: labels: helm.sh/chart: ingress-nginx-4.0.6 app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/version: 1.0.4 app.kubernetes.io/managed-by: Helm app.kubernetes.io/component: controller name: ingress-nginx-controller-admission namespace: ingress-nginx spec: type: ClusterIP ports: - name: https-webhook port: 443 targetPort: webhook appProtocol: https selector: app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/component: controller --- # Source: ingress-nginx/templates/controller-service.yaml apiVersion: v1 kind: Service metadata: annotations: labels: helm.sh/chart: ingress-nginx-4.0.6 app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/version: 1.0.4 app.kubernetes.io/managed-by: Helm app.kubernetes.io/component: controller name: ingress-nginx-controller namespace: ingress-nginx spec: type: NodePort ipFamilyPolicy: SingleStack ipFamilies: - IPv4 ports: - name: http port: 80 protocol: TCP targetPort: http appProtocol: http - name: https port: 443 protocol: TCP targetPort: https appProtocol: https selector: app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/component: controller --- # Source: ingress-nginx/templates/controller-deployment.yaml apiVersion: apps/v1 kind: Deployment metadata: labels: helm.sh/chart: ingress-nginx-4.0.6 app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/version: 1.0.4 app.kubernetes.io/managed-by: Helm app.kubernetes.io/component: controller name: ingress-nginx-controller namespace: ingress-nginx spec: selector: matchLabels: app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx 
app.kubernetes.io/component: controller revisionHistoryLimit: 10 minReadySeconds: 0 template: metadata: labels: app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/component: controller spec: dnsPolicy: ClusterFirst containers: - name: controller image: k8s.gcr.io/ingress-nginx/controller:v1.0.4@sha256:545cff00370f28363dad31e3b59a94ba377854d3a11f18988f5f9e56841ef9ef imagePullPolicy: IfNotPresent lifecycle: preStop: exec: command: - /wait-shutdown args: - /nginx-ingress-controller - --election-id=ingress-controller-leader - --controller-class=k8s.io/ingress-nginx - --configmap=$(POD_NAMESPACE)/ingress-nginx-controller - --validating-webhook=:8443 - --validating-webhook-certificate=/usr/local/certificates/cert - --validating-webhook-key=/usr/local/certificates/key securityContext: capabilities: drop: - ALL add: - NET_BIND_SERVICE runAsUser: 101 allowPrivilegeEscalation: true env: - name: POD_NAME valueFrom: fieldRef: fieldPath: metadata.name - name: POD_NAMESPACE valueFrom: fieldRef: fieldPath: metadata.namespace - name: LD_PRELOAD value: /usr/local/lib/libmimalloc.so livenessProbe: failureThreshold: 5 httpGet: path: /healthz port: 10254 scheme: HTTP initialDelaySeconds: 10 periodSeconds: 10 successThreshold: 1 timeoutSeconds: 1 readinessProbe: failureThreshold: 3 httpGet: path: /healthz port: 10254 scheme: HTTP initialDelaySeconds: 10 periodSeconds: 10 successThreshold: 1 timeoutSeconds: 1 ports: - name: http containerPort: 80 protocol: TCP - name: https containerPort: 443 protocol: TCP - name: webhook containerPort: 8443 protocol: TCP volumeMounts: - name: webhook-cert mountPath: /usr/local/certificates/ readOnly: true resources: requests: cpu: 100m memory: 90Mi nodeSelector: kubernetes.io/os: linux serviceAccountName: ingress-nginx terminationGracePeriodSeconds: 300 volumes: - name: webhook-cert secret: secretName: ingress-nginx-admission --- # Source: ingress-nginx/templates/controller-ingressclass.yaml # We don't 
support namespaced ingressClass yet # So a ClusterRole and a ClusterRoleBinding is required apiVersion: networking.k8s.io/v1 kind: IngressClass metadata: labels: helm.sh/chart: ingress-nginx-4.0.6 app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/version: 1.0.4 app.kubernetes.io/managed-by: Helm app.kubernetes.io/component: controller name: nginx namespace: ingress-nginx spec: controller: k8s.io/ingress-nginx --- # Source: ingress-nginx/templates/admission-webhooks/validating-webhook.yaml # before changing this value, check the required kubernetes version # https://kubernetes.io/docs/reference/access-authn-authz/extensible-admission-controllers/#prerequisites apiVersion: admissionregistration.k8s.io/v1 kind: ValidatingWebhookConfiguration metadata: labels: helm.sh/chart: ingress-nginx-4.0.6 app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/version: 1.0.4 app.kubernetes.io/managed-by: Helm app.kubernetes.io/component: admission-webhook name: ingress-nginx-admission webhooks: - name: validate.nginx.ingress.kubernetes.io matchPolicy: Equivalent rules: - apiGroups: - networking.k8s.io apiVersions: - v1 operations: - CREATE - UPDATE resources: - ingresses failurePolicy: Fail sideEffects: None admissionReviewVersions: - v1 clientConfig: service: namespace: ingress-nginx name: ingress-nginx-controller-admission path: /networking/v1/ingresses --- # Source: ingress-nginx/templates/admission-webhooks/job-patch/serviceaccount.yaml apiVersion: v1 kind: ServiceAccount metadata: name: ingress-nginx-admission namespace: ingress-nginx annotations: helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded labels: helm.sh/chart: ingress-nginx-4.0.6 app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/version: 1.0.4 app.kubernetes.io/managed-by: Helm 
app.kubernetes.io/component: admission-webhook --- # Source: ingress-nginx/templates/admission-webhooks/job-patch/clusterrole.yaml apiVersion: rbac.authorization.k8s.io/v1 kind: ClusterRole metadata: name: ingress-nginx-admission annotations: helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded labels: helm.sh/chart: ingress-nginx-4.0.6 app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/version: 1.0.4 app.kubernetes.io/managed-by: Helm app.kubernetes.io/component: admission-webhook rules: - apiGroups: - admissionregistration.k8s.io resources: - validatingwebhookconfigurations verbs: - get - update --- # Source: ingress-nginx/templates/admission-webhooks/job-patch/clusterrolebinding.yaml apiVersion: rbac.authorization.k8s.io/v1 kind: ClusterRoleBinding metadata: name: ingress-nginx-admission annotations: helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded labels: helm.sh/chart: ingress-nginx-4.0.6 app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/version: 1.0.4 app.kubernetes.io/managed-by: Helm app.kubernetes.io/component: admission-webhook roleRef: apiGroup: rbac.authorization.k8s.io kind: ClusterRole name: ingress-nginx-admission subjects: - kind: ServiceAccount name: ingress-nginx-admission namespace: ingress-nginx --- # Source: ingress-nginx/templates/admission-webhooks/job-patch/role.yaml apiVersion: rbac.authorization.k8s.io/v1 kind: Role metadata: name: ingress-nginx-admission namespace: ingress-nginx annotations: helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded labels: helm.sh/chart: ingress-nginx-4.0.6 app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/version: 1.0.4 
app.kubernetes.io/managed-by: Helm app.kubernetes.io/component: admission-webhook rules: - apiGroups: - '' resources: - secrets verbs: - get - create --- # Source: ingress-nginx/templates/admission-webhooks/job-patch/rolebinding.yaml apiVersion: rbac.authorization.k8s.io/v1 kind: RoleBinding metadata: name: ingress-nginx-admission namespace: ingress-nginx annotations: helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded labels: helm.sh/chart: ingress-nginx-4.0.6 app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/version: 1.0.4 app.kubernetes.io/managed-by: Helm app.kubernetes.io/component: admission-webhook roleRef: apiGroup: rbac.authorization.k8s.io kind: Role name: ingress-nginx-admission subjects: - kind: ServiceAccount name: ingress-nginx-admission namespace: ingress-nginx --- # Source: ingress-nginx/templates/admission-webhooks/job-patch/job-createSecret.yaml apiVersion: batch/v1 kind: Job metadata: name: ingress-nginx-admission-create namespace: ingress-nginx annotations: helm.sh/hook: pre-install,pre-upgrade helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded labels: helm.sh/chart: ingress-nginx-4.0.6 app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/version: 1.0.4 app.kubernetes.io/managed-by: Helm app.kubernetes.io/component: admission-webhook spec: template: metadata: name: ingress-nginx-admission-create labels: helm.sh/chart: ingress-nginx-4.0.6 app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/version: 1.0.4 app.kubernetes.io/managed-by: Helm app.kubernetes.io/component: admission-webhook spec: containers: - name: create image: k8s.gcr.io/ingress-nginx/kube-webhook-certgen:v1.1.1@sha256:64d8c73dca984af206adf9d6d7e46aa550362b1d7a01f3a0a91b20cc67868660 imagePullPolicy: IfNotPresent args: - create - 
--host=ingress-nginx-controller-admission,ingress-nginx-controller-admission.$(POD_NAMESPACE).svc - --namespace=$(POD_NAMESPACE) - --secret-name=ingress-nginx-admission env: - name: POD_NAMESPACE valueFrom: fieldRef: fieldPath: metadata.namespace restartPolicy: OnFailure serviceAccountName: ingress-nginx-admission nodeSelector: kubernetes.io/os: linux securityContext: runAsNonRoot: true runAsUser: 2000 --- # Source: ingress-nginx/templates/admission-webhooks/job-patch/job-patchWebhook.yaml apiVersion: batch/v1 kind: Job metadata: name: ingress-nginx-admission-patch namespace: ingress-nginx annotations: helm.sh/hook: post-install,post-upgrade helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded labels: helm.sh/chart: ingress-nginx-4.0.6 app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/version: 1.0.4 app.kubernetes.io/managed-by: Helm app.kubernetes.io/component: admission-webhook spec: template: metadata: name: ingress-nginx-admission-patch labels: helm.sh/chart: ingress-nginx-4.0.6 app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/version: 1.0.4 app.kubernetes.io/managed-by: Helm app.kubernetes.io/component: admission-webhook spec: containers: - name: patch image: k8s.gcr.io/ingress-nginx/kube-webhook-certgen:v1.1.1@sha256:64d8c73dca984af206adf9d6d7e46aa550362b1d7a01f3a0a91b20cc67868660 imagePullPolicy: IfNotPresent args: - patch - --webhook-name=ingress-nginx-admission - --namespace=$(POD_NAMESPACE) - --patch-mutating=false - --secret-name=ingress-nginx-admission - --patch-failure-policy=Fail env: - name: POD_NAMESPACE valueFrom: fieldRef: fieldPath: metadata.namespace restartPolicy: OnFailure serviceAccountName: ingress-nginx-admission nodeSelector: kubernetes.io/os: linux securityContext: runAsNonRoot: true runAsUser: 2000

- The modified deploy.yaml file

apiVersion: v1 kind: Namespace metadata: name: ingress-nginx labels: app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx --- # Source: ingress-nginx/templates/controller-serviceaccount.yaml apiVersion: v1 kind: ServiceAccount metadata: labels: helm.sh/chart: ingress-nginx-4.0.6 app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/version: 1.0.4 app.kubernetes.io/managed-by: Helm app.kubernetes.io/component: controller name: ingress-nginx namespace: ingress-nginx automountServiceAccountToken: true --- # Source: ingress-nginx/templates/controller-configmap.yaml apiVersion: v1 kind: ConfigMap metadata: labels: helm.sh/chart: ingress-nginx-4.0.6 app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/version: 1.0.4 app.kubernetes.io/managed-by: Helm app.kubernetes.io/component: controller name: ingress-nginx-controller namespace: ingress-nginx data: allow-snippet-annotations: 'true' --- # Source: ingress-nginx/templates/clusterrole.yaml apiVersion: rbac.authorization.k8s.io/v1 kind: ClusterRole metadata: labels: helm.sh/chart: ingress-nginx-4.0.6 app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/version: 1.0.4 app.kubernetes.io/managed-by: Helm name: ingress-nginx rules: - apiGroups: - '' resources: - configmaps - endpoints - nodes - pods - secrets verbs: - list - watch - apiGroups: - '' resources: - nodes verbs: - get - apiGroups: - '' resources: - services verbs: - get - list - watch - apiGroups: - networking.k8s.io resources: - ingresses verbs: - get - list - watch - apiGroups: - '' resources: - events verbs: - create - patch - apiGroups: - networking.k8s.io resources: - ingresses/status verbs: - update - apiGroups: - networking.k8s.io resources: - ingressclasses verbs: - get - list - watch --- # Source: ingress-nginx/templates/clusterrolebinding.yaml apiVersion: rbac.authorization.k8s.io/v1 kind: 
ClusterRoleBinding metadata: labels: helm.sh/chart: ingress-nginx-4.0.6 app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/version: 1.0.4 app.kubernetes.io/managed-by: Helm name: ingress-nginx roleRef: apiGroup: rbac.authorization.k8s.io kind: ClusterRole name: ingress-nginx subjects: - kind: ServiceAccount name: ingress-nginx namespace: ingress-nginx --- # Source: ingress-nginx/templates/controller-role.yaml apiVersion: rbac.authorization.k8s.io/v1 kind: Role metadata: labels: helm.sh/chart: ingress-nginx-4.0.6 app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/version: 1.0.4 app.kubernetes.io/managed-by: Helm app.kubernetes.io/component: controller name: ingress-nginx namespace: ingress-nginx rules: - apiGroups: - '' resources: - namespaces verbs: - get - apiGroups: - '' resources: - configmaps - pods - secrets - endpoints verbs: - get - list - watch - apiGroups: - '' resources: - services verbs: - get - list - watch - apiGroups: - networking.k8s.io resources: - ingresses verbs: - get - list - watch - apiGroups: - networking.k8s.io resources: - ingresses/status verbs: - update - apiGroups: - networking.k8s.io resources: - ingressclasses verbs: - get - list - watch - apiGroups: - '' resources: - configmaps resourceNames: - ingress-controller-leader verbs: - get - update - apiGroups: - '' resources: - configmaps verbs: - create - apiGroups: - '' resources: - events verbs: - create - patch --- # Source: ingress-nginx/templates/controller-rolebinding.yaml apiVersion: rbac.authorization.k8s.io/v1 kind: RoleBinding metadata: labels: helm.sh/chart: ingress-nginx-4.0.6 app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/version: 1.0.4 app.kubernetes.io/managed-by: Helm app.kubernetes.io/component: controller name: ingress-nginx namespace: ingress-nginx roleRef: apiGroup: rbac.authorization.k8s.io kind: Role name: ingress-nginx 
subjects: - kind: ServiceAccount name: ingress-nginx namespace: ingress-nginx --- # Source: ingress-nginx/templates/controller-service-webhook.yaml apiVersion: v1 kind: Service metadata: labels: helm.sh/chart: ingress-nginx-4.0.6 app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/version: 1.0.4 app.kubernetes.io/managed-by: Helm app.kubernetes.io/component: controller name: ingress-nginx-controller-admission namespace: ingress-nginx spec: type: ClusterIP ports: - name: https-webhook port: 443 targetPort: webhook appProtocol: https selector: app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/component: controller --- # Source: ingress-nginx/templates/controller-service.yaml apiVersion: v1 kind: Service metadata: annotations: labels: helm.sh/chart: ingress-nginx-4.0.6 app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/version: 1.0.4 app.kubernetes.io/managed-by: Helm app.kubernetes.io/component: controller name: ingress-nginx-controller namespace: ingress-nginx spec: type: NodePort ipFamilyPolicy: SingleStack ipFamilies: - IPv4 ports: - name: http port: 80 nodePort: 32080 protocol: TCP targetPort: http appProtocol: http - name: https port: 443 nodePort: 32443 protocol: TCP targetPort: https appProtocol: https selector: app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/component: controller --- # Source: ingress-nginx/templates/controller-deployment.yaml apiVersion: apps/v1 kind: Deployment metadata: labels: helm.sh/chart: ingress-nginx-4.0.6 app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/version: 1.0.4 app.kubernetes.io/managed-by: Helm app.kubernetes.io/component: controller name: ingress-nginx-controller namespace: ingress-nginx spec: selector: matchLabels: app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: 
ingress-nginx app.kubernetes.io/component: controller revisionHistoryLimit: 10 minReadySeconds: 0 template: metadata: labels: app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/component: controller spec: dnsPolicy: ClusterFirst containers: - name: controller image: registry.cn-hangzhou.aliyuncs.com/google_containers/nginx-ingress-controller:v1.0.4 imagePullPolicy: Never lifecycle: preStop: exec: command: - /wait-shutdown args: - /nginx-ingress-controller - --election-id=ingress-controller-leader - --controller-class=k8s.io/ingress-nginx - --configmap=$(POD_NAMESPACE)/ingress-nginx-controller - --validating-webhook=:8443 - --validating-webhook-certificate=/usr/local/certificates/cert - --validating-webhook-key=/usr/local/certificates/key securityContext: capabilities: drop: - ALL add: - NET_BIND_SERVICE runAsUser: 101 allowPrivilegeEscalation: true env: - name: POD_NAME valueFrom: fieldRef: fieldPath: metadata.name - name: POD_NAMESPACE valueFrom: fieldRef: fieldPath: metadata.namespace - name: LD_PRELOAD value: /usr/local/lib/libmimalloc.so - name: TZ value: Asia/Shanghai livenessProbe: failureThreshold: 5 httpGet: path: /healthz port: 10254 scheme: HTTP initialDelaySeconds: 10 periodSeconds: 10 successThreshold: 1 timeoutSeconds: 1 readinessProbe: failureThreshold: 3 httpGet: path: /healthz port: 10254 scheme: HTTP initialDelaySeconds: 10 periodSeconds: 10 successThreshold: 1 timeoutSeconds: 1 ports: - name: http containerPort: 80 protocol: TCP - name: https containerPort: 443 protocol: TCP - name: webhook containerPort: 8443 protocol: TCP volumeMounts: - name: webhook-cert mountPath: /usr/local/certificates/ readOnly: true resources: requests: cpu: 100m memory: 90Mi nodeSelector: kubernetes.io/os: linux serviceAccountName: ingress-nginx terminationGracePeriodSeconds: 300 volumes: - name: webhook-cert secret: secretName: ingress-nginx-admission --- # Source: ingress-nginx/templates/controller-ingressclass.yaml # We 
don't support namespaced ingressClass yet # So a ClusterRole and a ClusterRoleBinding is required apiVersion: networking.k8s.io/v1 kind: IngressClass metadata: labels: helm.sh/chart: ingress-nginx-4.0.6 app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/version: 1.0.4 app.kubernetes.io/managed-by: Helm app.kubernetes.io/component: controller name: nginx namespace: ingress-nginx spec: controller: k8s.io/ingress-nginx --- # Source: ingress-nginx/templates/admission-webhooks/validating-webhook.yaml # before changing this value, check the required kubernetes version # https://kubernetes.io/docs/reference/access-authn-authz/extensible-admission-controllers/#prerequisites apiVersion: admissionregistration.k8s.io/v1 kind: ValidatingWebhookConfiguration metadata: labels: helm.sh/chart: ingress-nginx-4.0.6 app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/version: 1.0.4 app.kubernetes.io/managed-by: Helm app.kubernetes.io/component: admission-webhook name: ingress-nginx-admission webhooks: - name: validate.nginx.ingress.kubernetes.io matchPolicy: Equivalent rules: - apiGroups: - networking.k8s.io apiVersions: - v1 operations: - CREATE - UPDATE resources: - ingresses failurePolicy: Fail sideEffects: None admissionReviewVersions: - v1 clientConfig: service: namespace: ingress-nginx name: ingress-nginx-controller-admission path: /networking/v1/ingresses --- # Source: ingress-nginx/templates/admission-webhooks/job-patch/serviceaccount.yaml apiVersion: v1 kind: ServiceAccount metadata: name: ingress-nginx-admission namespace: ingress-nginx annotations: helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded labels: helm.sh/chart: ingress-nginx-4.0.6 app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/version: 1.0.4 app.kubernetes.io/managed-by: Helm 
app.kubernetes.io/component: admission-webhook --- # Source: ingress-nginx/templates/admission-webhooks/job-patch/clusterrole.yaml apiVersion: rbac.authorization.k8s.io/v1 kind: ClusterRole metadata: name: ingress-nginx-admission annotations: helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded labels: helm.sh/chart: ingress-nginx-4.0.6 app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/version: 1.0.4 app.kubernetes.io/managed-by: Helm app.kubernetes.io/component: admission-webhook rules: - apiGroups: - admissionregistration.k8s.io resources: - validatingwebhookconfigurations verbs: - get - update --- # Source: ingress-nginx/templates/admission-webhooks/job-patch/clusterrolebinding.yaml apiVersion: rbac.authorization.k8s.io/v1 kind: ClusterRoleBinding metadata: name: ingress-nginx-admission annotations: helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded labels: helm.sh/chart: ingress-nginx-4.0.6 app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/version: 1.0.4 app.kubernetes.io/managed-by: Helm app.kubernetes.io/component: admission-webhook roleRef: apiGroup: rbac.authorization.k8s.io kind: ClusterRole name: ingress-nginx-admission subjects: - kind: ServiceAccount name: ingress-nginx-admission namespace: ingress-nginx --- # Source: ingress-nginx/templates/admission-webhooks/job-patch/role.yaml apiVersion: rbac.authorization.k8s.io/v1 kind: Role metadata: name: ingress-nginx-admission namespace: ingress-nginx annotations: helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded labels: helm.sh/chart: ingress-nginx-4.0.6 app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/version: 1.0.4 
app.kubernetes.io/managed-by: Helm app.kubernetes.io/component: admission-webhook rules: - apiGroups: - '' resources: - secrets verbs: - get - create --- # Source: ingress-nginx/templates/admission-webhooks/job-patch/rolebinding.yaml apiVersion: rbac.authorization.k8s.io/v1 kind: RoleBinding metadata: name: ingress-nginx-admission namespace: ingress-nginx annotations: helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded labels: helm.sh/chart: ingress-nginx-4.0.6 app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/version: 1.0.4 app.kubernetes.io/managed-by: Helm app.kubernetes.io/component: admission-webhook roleRef: apiGroup: rbac.authorization.k8s.io kind: Role name: ingress-nginx-admission subjects: - kind: ServiceAccount name: ingress-nginx-admission namespace: ingress-nginx --- # Source: ingress-nginx/templates/admission-webhooks/job-patch/job-createSecret.yaml apiVersion: batch/v1 kind: Job metadata: name: ingress-nginx-admission-create namespace: ingress-nginx annotations: helm.sh/hook: pre-install,pre-upgrade helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded labels: helm.sh/chart: ingress-nginx-4.0.6 app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/version: 1.0.4 app.kubernetes.io/managed-by: Helm app.kubernetes.io/component: admission-webhook spec: template: metadata: name: ingress-nginx-admission-create labels: helm.sh/chart: ingress-nginx-4.0.6 app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/version: 1.0.4 app.kubernetes.io/managed-by: Helm app.kubernetes.io/component: admission-webhook spec: containers: - name: create image: registry.cn-hangzhou.aliyuncs.com/google_containers/kube-webhook-certgen:v1.1.1 imagePullPolicy: Never args: - create - 
--host=ingress-nginx-controller-admission,ingress-nginx-controller-admission.$(POD_NAMESPACE).svc - --namespace=$(POD_NAMESPACE) - --secret-name=ingress-nginx-admission env: - name: POD_NAMESPACE valueFrom: fieldRef: fieldPath: metadata.namespace restartPolicy: OnFailure serviceAccountName: ingress-nginx-admission nodeSelector: kubernetes.io/os: linux securityContext: runAsNonRoot: true runAsUser: 2000 --- # Source: ingress-nginx/templates/admission-webhooks/job-patch/job-patchWebhook.yaml apiVersion: batch/v1 kind: Job metadata: name: ingress-nginx-admission-patch namespace: ingress-nginx annotations: helm.sh/hook: post-install,post-upgrade helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded labels: helm.sh/chart: ingress-nginx-4.0.6 app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/version: 1.0.4 app.kubernetes.io/managed-by: Helm app.kubernetes.io/component: admission-webhook spec: template: metadata: name: ingress-nginx-admission-patch labels: helm.sh/chart: ingress-nginx-4.0.6 app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/version: 1.0.4 app.kubernetes.io/managed-by: Helm app.kubernetes.io/component: admission-webhook spec: containers: - name: patch image: registry.cn-hangzhou.aliyuncs.com/google_containers/kube-webhook-certgen:v1.1.1 imagePullPolicy: Never args: - patch - --webhook-name=ingress-nginx-admission - --namespace=$(POD_NAMESPACE) - --patch-mutating=false - --secret-name=ingress-nginx-admission - --patch-failure-policy=Fail env: - name: POD_NAMESPACE valueFrom: fieldRef: fieldPath: metadata.namespace restartPolicy: OnFailure serviceAccountName: ingress-nginx-admission nodeSelector: kubernetes.io/os: linux securityContext: runAsNonRoot: true runAsUser: 2000

2. Install ingress-nginx-controller

//Rename deploy.yaml to ingress-nginx-controller-v1.0.4.yaml
//Install ingress-nginx-controller
]# kubectl apply -f ingress-nginx-controller-v1.0.4.yaml
//List the ingress-nginx Pods
]# kubectl get pods -o wide -A
NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
ingress-nginx ingress-nginx-admission-create-299sr 0/1 Completed 0 33m 10.10.36.70 k8s-node1 <none> <none>
ingress-nginx ingress-nginx-admission-patch-nsdmp 0/1 Completed 0 33m 10.10.169.133 k8s-node2 <none> <none>
ingress-nginx ingress-nginx-controller-8484d5d9f-gkjzt 1/1 Running 0 33m 10.10.169.134 k8s-node2 <none> <none>
//List the ingress-nginx Services
]# kubectl get svc -o wide -A
NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR
ingress-nginx ingress-nginx-controller NodePort 10.20.234.166 <none> 80:32080/TCP,443:32443/TCP 35m app.kubernetes.io/component=controller,app.kubernetes.io/instance=ingress-nginx,app.kubernetes.io/name=ingress-nginx
ingress-nginx ingress-nginx-controller-admission ClusterIP 10.20.227.2 <none> 443/TCP 35m app.kubernetes.io/component=controller,app.kubernetes.io/instance=ingress-nginx,app.kubernetes.io/name=ingress-nginx

3. Access ingress-nginx-controller

- All four requests return the same 404 response, because no backend service has been configured yet.

//Access via the Service IP
]# curl http://10.20.234.166:80
]# curl -k https://10.20.234.166:443
//Access via the node IP
]# curl http://10.1.1.11:32080
]# curl -k https://10.1.1.11:32443
<html>
<head><title>404 Not Found</title></head>
<body>
<center><h1>404 Not Found</h1></center>
<hr><center>nginx</center>
</body>
</html>

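5.3 Defining Ingress rules

- Because the ingress-nginx-controller Service is of type NodePort and exposes ports 32080 (HTTP) and 32443 (HTTPS) on every Node, clients can reach the Pods behind a Service through any Node.

- Some Ingress annotations

- https://kubernetes.github.io/ingress-nginx/user-guide/nginx-configuration/annotations/

//Selects the ingress controller class that handles this Ingress; important when several ingress controllers are deployed
kubernetes.io/ingress.class: "nginx"
//Allows regular expressions in the path of a rule; can be omitted when no regular expressions are used
nginx.ingress.kubernetes.io/use-regex: "true"
//Timeout for establishing a connection to the backend, default 5s
nginx.ingress.kubernetes.io/proxy-connect-timeout: "600"
//Timeout for sending a request to the backend, default 60s
nginx.ingress.kubernetes.io/proxy-send-timeout: "600"
//Timeout for reading a response from the backend, default 60s
nginx.ingress.kubernetes.io/proxy-read-timeout: "600"
//Maximum size of a client request body (for example an uploaded file), default 1m
nginx.ingress.kubernetes.io/proxy-body-size: "10m"
//Disables the forced redirect to HTTPS
nginx.ingress.kubernetes.io/ssl-redirect: "false"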

5.3.1 Forwarding to a single backend service

- Every request that reaches the Ingress Controller is forwarded to one and the same backend Service. In this case the Ingress needs no rules, only a defaultBackend.

Example:

1. Create a Service and its backend Pods

apiVersion: v1
kind: Service
metadata:
  name: my-service
spec:
  selector:
    app: ingress-nginx-pod
  ports:
  - port: 11180
    targetPort: 80
    protocol: TCP
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ingress-nginx-deployment
spec:
  selector:
    matchLabels:
      app: ingress-nginx-pod
  replicas: 2
  template:
    metadata:
      labels:
        app: ingress-nginx-pod
    spec:
      volumes:
      - name: html
        hostPath:
          path: /apps/html
      containers:
      - name: ingress-nginx-container
        image: nginx:latest
        imagePullPolicy: Never
        command: ["/usr/sbin/nginx", "-g", "daemon off;"]
        ports:
        - containerPort: 80
        volumeMounts:
        - mountPath: /usr/share/nginx/html
          name: html

- Create the index.html file on each node (one command per node, as the IP in the file content indicates)

]# echo 'ingress 10.1.1.12' > /apps/html/index.html
]# echo 'ingress 10.1.1.13' > /apps/html/index.html

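2. Create the Ingress rule

- The Service must be deployed before the Ingress can take effect.

- The path in the Ingress must match a path that the backend Service really serves; otherwise requests are forwarded to a path that does not exist and fail.

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: my-ingress
  annotations:
    kubernetes.io/ingress.class: "nginx" #Required; without it the Ingress may not work properly
spec:
  defaultBackend:
    service:
      name: my-service #Name of the Service
      port:
        number: 11180 #Port of the Service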

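- Create and inspect the Ingress

- After the Ingress has been created, check its ADDRESS column. If it lists the addresses of the nginx-ingress-controller instances, the controller has set up the Endpoints of the backend Service and the Ingress is working. If the ADDRESS column is empty, the controller usually failed to connect to the backend Service and troubleshooting is needed.

]# kubectl apply -f my-ingress.yaml
]# kubectl get ingress -o wide -A
NAMESPACE NAME CLASS HOSTS ADDRESS PORTS AGE
default my-ingress <none> * 10.1.1.13 80 41s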
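The ADDRESS check described above can also be scripted. The following is a minimal sketch, assuming the Ingress has been exported with `kubectl get ingress my-ingress -o json`; the `sample` document is a hypothetical, trimmed stand-in for that output, and `ingress_addresses` is an illustrative helper rather than part of any Kubernetes client library. It reads the `status.loadBalancer.ingress` list, which is where the value shown in the ADDRESS column comes from.

```python
import json
from typing import List


def ingress_addresses(ingress: dict) -> List[str]:
    """Return the IPs or hostnames listed in status.loadBalancer.ingress."""
    entries = ingress.get("status", {}).get("loadBalancer", {}).get("ingress") or []
    addresses = []
    for entry in entries:
        address = entry.get("ip") or entry.get("hostname")
        if address:
            addresses.append(address)
    return addresses


# Hypothetical, trimmed output of: kubectl get ingress my-ingress -o json
sample = json.loads("""
{
  "metadata": {"name": "my-ingress", "namespace": "default"},
  "status": {"loadBalancer": {"ingress": [{"ip": "10.1.1.13"}]}}
}
""")

addresses = ingress_addresses(sample)
if addresses:
    print("ADDRESS populated:", ", ".join(addresses))
else:
    print("ADDRESS empty: check the controller and the backend Service")
```

An empty list corresponds to the empty ADDRESS column, which is the case that calls for troubleshooting.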

3. Access the service

- Requests are forwarded to the backend Pods at random

]# curl 10.1.1.13:32080
ingress 10.1.1.13
]# curl -k https://10.1.1.13:32443
ingress 10.1.1.12

5.3.2 Same domain, different URL paths forwarded to different services

- This is common when one website offers different services under different paths, for example /web for web pages and /api for the API, each backed by its own Service. An Ingress makes such URL-path-based forwarding rules easy to define.

Example:

- Requests to "localhost/web/" are forwarded to the "myweb-service" Service; requests to "localhost/api/" are forwarded to the "myapi-service" Service.

1. Create the first Service and its backend Pods

apiVersion: v1
kind: Service
metadata:
  name: myweb-service
spec:
  selector:
    app: ingress-nginx-pod1
  ports:
  - port: 11180
    targetPort: 80
    protocol: TCP
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ingress-nginx-deployment1
spec:
  selector:
    matchLabels:
      app: ingress-nginx-pod1
  replicas: 2
  template:
    metadata:
      labels:
        app: ingress-nginx-pod1
    spec:
      volumes:
      - name: html
        hostPath:
          path: /apps/htmlweb
      containers:
      - name: ingress-nginx-container
        image: nginx:latest
        imagePullPolicy: Never
        command: ["/usr/sbin/nginx", "-g", "daemon off;"]
        ports:
        - containerPort: 80
        volumeMounts:
        - mountPath: /usr/share/nginx/html
          name: html

- Create the index.html file on each node (one command per node; the web/ directory must already exist)

]# echo 'web 10.1.1.12' > /apps/htmlweb/web/index.html
]# echo 'web 10.1.1.13' > /apps/htmlweb/web/index.html

2. Create the second Service and its backend Pods

apiVersion: v1
kind: Service
metadata:
  name: myapi-service
spec:
  selector:
    app: ingress-nginx-pod2
  ports:
  - port: 11180
    targetPort: 80
    protocol: TCP
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ingress-nginx-deployment2
spec:
  selector:
    matchLabels:
      app: ingress-nginx-pod2
  replicas: 2
  template:
    metadata:
      labels:
        app: ingress-nginx-pod2
    spec:
      volumes:
      - name: html
        hostPath:
          path: /apps/htmlapi
      containers:
      - name: ingress-nginx-container
        image: nginx:latest
        imagePullPolicy: Never
        command: ["/usr/sbin/nginx", "-g", "daemon off;"]
        ports:
        - containerPort: 80
        volumeMounts:
        - mountPath: /usr/share/nginx/html
          name: html

- Create the index.html file on each node (one command per node; the api/ directory must already exist)

]# echo 'api 10.1.1.12' > /apps/htmlapi/api/index.html
]# echo 'api 10.1.1.13' > /apps/htmlapi/api/index.html
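The index.html files above live in web/ and api/ subdirectories because, with no rewrite annotation set, the request path reaches the backend nginx unchanged, and nginx resolves it relative to its `root`. The sketch below approximates that mapping under simplifying assumptions (only the `root` and `index` directives, no rewrites); `served_file` and `host_path` are hypothetical helper names used only for illustration.

```python
def served_file(root: str, uri: str, index: str = "index.html") -> str:
    """Approximate how nginx's `root` directive maps a request URI to a file."""
    path = root.rstrip("/") + uri
    if uri.endswith("/"):
        # A directory request is answered with the index file.
        path += index
    return path


def host_path(container_file: str, mount_path: str, host_dir: str) -> str:
    """Translate a file inside the container to its location in the hostPath volume."""
    relative = container_file[len(mount_path.rstrip("/")):]
    return host_dir.rstrip("/") + relative


# Request /web/ hits the Pod whose /usr/share/nginx/html is backed by /apps/htmlweb.
in_container = served_file("/usr/share/nginx/html", "/web/")
print(in_container)
print(host_path(in_container, "/usr/share/nginx/html", "/apps/htmlweb"))
```

Under these assumptions a request for /web/ is served from /apps/htmlweb/web/index.html on the node, which is exactly where the echo commands write the file.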

3. Create the Ingress rule

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: my-ingress
  annotations:
    kubernetes.io/ingress.class: "nginx" #Required; without it the Ingress may not work properly
spec:
  rules:
  - host: localhost
    http:
      paths:
      - path: /web #!
        pathType: Prefix
        backend:
          service:
            name: myweb-service
            port:
              number: 11180
      - path: /api #!
        pathType: Prefix
        backend:
          service:
            name: myapi-service
            port:
              number: 11180

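- Create and inspect the Ingress

]# kubectl apply -f my-ingress.yaml
]# kubectl get ingress -o wide -A
NAMESPACE NAME CLASS HOSTS ADDRESS PORTS AGE
default my-ingress <none> localhost 10.1.1.13 80 42s

4. Access the service

- Clients can only reach the service through the domain name, so the client or DNS must resolve the domain name to the real IP addresses of the Nodes.

- Access the service with curl (either use --resolve to simulate DNS resolution and address the request to the domain name, or use -H 'Host:<domain>' to set the domain name in the HTTP header and address the request to the IP)

]# curl -H "Host:localhost" 10.1.1.11:32080/api/
api 10.1.1.13
]# curl -H "Host:localhost" 10.1.1.11:32080/web/
web 10.1.1.13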
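How the two `pathType: Prefix` rules above select a backend can be sketched as follows. This is a simplified model of the Prefix semantics in the Kubernetes Ingress specification (matching is done element by element on the path split by "/", and the longest matching path wins), not the code the NGINX controller actually runs; `prefix_matches` and `pick_backend` are hypothetical names.

```python
def prefix_matches(rule_path: str, request_path: str) -> bool:
    """Prefix match on whole path elements: /web matches /web/x but not /webapp."""
    rule = [part for part in rule_path.split("/") if part]
    request = [part for part in request_path.split("/") if part]
    return request[:len(rule)] == rule


def pick_backend(rules, request_path):
    """rules is a list of (path, service) pairs; the longest matching path wins."""
    matches = [(path, service) for path, service in rules
               if prefix_matches(path, request_path)]
    if not matches:
        return None
    return max(matches, key=lambda match: len(match[0]))[1]


rules = [("/web", "myweb-service"), ("/api", "myapi-service")]
for path in ("/web/", "/api/", "/webapp", "/other"):
    print(path, "->", pick_backend(rules, path))
```

A request that matches no rule returns None here; in a real cluster such a request would fall through to the controller's default backend.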

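5.3.3 Different domain names (virtual hosts) forwarded to different services

- This is common when one website offers different services under different domain names or virtual host names, for example foo.com served by foo-service and bar.com served by bar-service.

Example:

- Requests to "foo.com" are forwarded to "foo-service:80"; requests to "bar.com" are forwarded to "bar-service:80".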

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: my-ingress
  annotations:
    kubernetes.io/ingress.class: "nginx" #Required; without it the Ingress may not work properly
spec:
  rules:
  - host: foo.com #!
    http:
      paths:
      - path: / #pathType is required in networking.k8s.io/v1
        pathType: Prefix
        backend:
          service:
            name: foo-service
            port:
              number: 80
  - host: bar.com #!
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: bar-service
            port:
              number: 80
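The host-based dispatch configured above can be pictured as a lookup on the HTTP Host header. The sketch below is a simplified illustration that assumes exact host names only (no wildcards, no IPv6 literals); `route_by_host` is a hypothetical helper, not controller code.

```python
def route_by_host(rules: dict, host_header: str):
    """Return the (service, port) registered for the Host header, or None."""
    # Drop an optional :port suffix and compare case-insensitively.
    host = host_header.split(":")[0].lower()
    return rules.get(host)


rules = {
    "foo.com": ("foo-service", 80),
    "bar.com": ("bar-service", 80),
}

print(route_by_host(rules, "foo.com"))
print(route_by_host(rules, "bar.com:32080"))
print(route_by_host(rules, "baz.com"))
```

This is also why the curl examples in this section pass -H "Host:<domain>": the Host header, not the target IP, decides which rule applies.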

5.3.4、不使用域名的转发规则

- 用于一个网站不使用域名直接提供服务的场景,此时通过任意一台运行ingress-controller的Node都能访问到后端的服务。

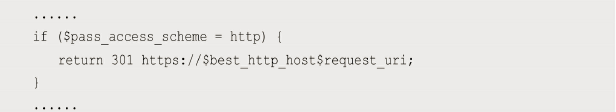

- 注意,使用无域名的Ingress转发规则时,将默认禁用非安全HTTP,强制启用HTTPS。例如,当使用Nginx作为Ingress Controller时,在其配置文件/etc/nginx/nginx.conf中将会自动设置下面的规则,将全部HTTP的访问请求直接返回301错误。

- 可以在Ingress的定义中设置一个annotation“ingress.kubernetes.io/sslredirect=false”来关闭强制启用HTTPS的设置

示例:

- 下面的配置将“<ingresscontroller-ip>/demo”的请求转发到“my-service:8080/demo”服务上

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: my-ingress
  annotations:
    kubernetes.io/ingress.class: "nginx" #Required; without it the Ingress may not work properly
spec:
  rules:
  - http:
      paths:
      - path: /demo
        pathType: Prefix
        backend:
          service:
            name: my-service
            port:
              number: 8080

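5.4 TLS settings for Ingress

- To serve HTTPS through an Ingress, configure a TLS certificate for the domain names in the Ingress.

- Once TLS is enabled on an Ingress, the controller permanently redirects client HTTP requests to HTTPS on port 443.

- Before TLS is enabled on the Ingress:

- http > http, https > https

- After TLS is enabled on the Ingress:

- http > https, https > https

- The steps to set up TLS for an Ingress are:

- (1) Generate the SSL key and certificate files for the Ingress.

- (2) Store the certificate in a Kubernetes Secret object.

- (3) Reference that Secret in the Ingress.

- The first two steps, creating the SSL certificate and the Secret, differ between two scenarios:

- The website has a single domain name

- The website has several domain names

- Step 3 is the same in both scenarios.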

5.4.1 Creating the SSL certificate and the Secret

1. The website has a single domain name

1. Generate the Ingress SSL key and certificate directly with OpenSSL, setting /CN in the -subj argument to the website's domain name.

]# openssl req -x509 -nodes -days 3650 -newkey rsa:2048 -keyout tls.key -out tls.crt -subj "/CN=hengha.com"
]# ls -l
-rw-r--r-- 1 root root 1099 Apr 11 02:26 tls.crt
-rw-r--r-- 1 root root 1704 Apr 11 02:26 tls.key

2. Create the Secret from tls.key and tls.crt. There are two ways to do this.

- (1) Use the kubectl create secret command

//Create the Secret
]# kubectl create secret tls hengha-ingress-secret --key tls.key --cert tls.crt
//List Secrets
]# kubectl get secret
NAME TYPE DATA AGE
hengha-ingress-secret kubernetes.io/tls 2 26s
//Show the Secret in detail
]# kubectl describe secret hengha-ingress-secret
Name: hengha-ingress-secret
Namespace: default
Labels: <none>
Annotations: <none>
Type: kubernetes.io/tls
Data
====
tls.crt: 1099 bytes
tls.key: 1704 bytes

- (2) Write a YAML file that carries the contents of tls.key and tls.crt and create it with kubectl create. The values under data must be the base64-encoded contents of the two files (for example, the output of base64 -w0 tls.crt).

apiVersion: v1 kind: Secret metadata: name: hengha-ingress-secret type: kubernetes.io/tls data: tls.crt: MIIC/TCCAeWgAwIBAgIJAI0pZcN8Kv6HMA0GCSqGSIb3DQEBCwUAMBUxEzARBgNVBAMMCmhlbmdoYS5jb20wHhcNMjIwNDEwMTgyNjE2WhcNMzIwNDA3MTgyNjE2WjAVMRMwEQYDVQQDDApoZW5naGEuY29tMIIBIjANBgkqhkiG9w0BAQEFAAOCAQ8AMIIBCgKCAQEA3xJpfkpbE/PMio9sWn/k67jPSDoj+0FFJ5SUUjqDvO26N02bgR74UWmhMN7mPicVS9Ec1VvQkK3kpjDSwOCF1DQIwhvmDLpicOMaPypYbJ0DE4JM3TM+yAJG7D/vtU8yg5xRILrXfSGMn7Cuxpob5hceK2fMsYZ3iw39GZQcuQ9UPSrU2JXYwM7WKlz4OdbxSVDhKah/zWbsY3RJyRB7Kn+GQZSbeO6ZIXU7Qk9wLaWLTO7b8Fm3CkVv5DsJctmx97K5aPUC06rYD/RMqaRgHbUqRpzVXYJQr7Qi11AG/+HVlWKorpoHpwjtKEOeWk09Isr74p+INuzJw0jzL/oVnwIDAQABo1AwTjAdBgNVHQ4EFgQUqrNxObPgtEWLFjswm64JaUTDo4cwHwYDVR0jBBgwFoAUqrNxObPgtEWLFjswm64JaUTDo4cwDAYDVR0TBAUwAwEB/zANBgkqhkiG9w0BAQsFAAOCAQEAB0ND6hekmvme20KVBsoTtlG6frNvgohOXUpiXvyqmIW/RZZJZb+PjATdO9/emMZLJSxX9G3lyYL4ZcNefg3ay0TUc4QSP7eMHKoiEhcUfQPV4qykr4sXA57BmKHIRey6BEyszVNu1Bal+vV2BA+Q6cz2g5DQ9/8BJe9ma9P5b66v1KfqDgvOn+rDxXv1VrLqET61J1nQu0/RoHfXp66b+cJhom4k3s2Rh1fDTdof/KnryM7Om7xYOvS1SGshD99vh7JBkf/ofaEXERZEGPHGZlSNYNA/zWNW1DGqe6atWLGgYCPjzdkCfIMiDvIeTpHW72hn+pR6liyBGJoLWsfnUg== tls.key: 
MIIEvQIBADANBgkqhkiG9w0BAQEFAASCBKcwggSjAgEAAoIBAQDfEml+SlsT88yKj2xaf+TruM9IOiP7QUUnlJRSOoO87bo3TZuBHvhRaaEw3uY+JxVL0RzVW9CQreSmMNLA4IXUNAjCG+YMumJw4xo/KlhsnQMTgkzdMz7IAkbsP++1TzKDnFEgutd9IYyfsK7GmhvmFx4rZ8yxhneLDf0ZlBy5D1Q9KtTYldjAztYqXPg51vFJUOEpqH/NZuxjdEnJEHsqf4ZBlJt47pkhdTtCT3AtpYtM7tvwWbcKRW/kOwly2bH3srlo9QLTqtgP9EyppGAdtSpGnNVdglCvtCLXUAb/4dWVYqiumgenCO0oQ55aTT0iyvvin4g27MnDSPMv+hWfAgMBAAECggEAZqFJ99it3pgkWvtxlpGQ+QKmG0zkkQyOjI9HTi2tvpaBPkBucxGUnaBDkQB8XcwNeDxVT7RWWLoooG2GCUdDS0ZFYUkEpoZ6FAXoZXdOB2qVp7wjMQIKuGqTxs4Dsx8k1nhsuuo7ik6NLEtVnfn5K/sm3kF7Y5HCHi/paoNCU8Fll8uKo0GVMDwmTaDjJR7xYXO2lI+r7nx0cLz8gA9H0mL6EkdHmdHMyXLmiXcYTVpONAFTI+obI9BQTSfILC5sc/F7e1qX1WhyN97y8uLp/Y9YwUx/rg1gKQxi8w6N8XOoTnXlKC9GaEPLFzRxTs4blEHEkg167pAYY+Q7Ue3TQQKBgQD6Sr4E/b2kMg81rIXOnTTacs5c2Fj9LgfWLqr3lSXZB+Xvb6ZU3NUuVY0IBjJSIgCUgIUuUifZ6Y14ba5v0eJFpsvG7bfMHXMvOa6t34hl5Fui/JvA/7nr06fu4fHVOzBnXR9rs/rYBcLzxxC/KO3nGyjhphjDVp5mXxf8vXMKFQKBgQDkKMDhHg1RIzdAOvGT7XF4KuTvs2fSg9wKEmfOzDwSzfHZvz2X51h2C0gJ+AHu7/pP/r9V9BJ92aRwHW8k+N7DCHJC3FEWhDlcWoHX9vTQCkSfZWZ6xZfpl/Nglppu5geKl+mvgz6LVExl9lxM3DPYa8YGxzTysIftcaspeQnR4wKBgQChyNJO850zl1ARh7TuOtvBIFiX1xiefrR+E6hbZMMUKHDOdkLzs9LwokgJGchJIsVxOCneKSitSLQzVeJdUTOLhidOLLaTb63Wpthsd4L5KcS588LR2/rXnvZ8CPyCskG1bpIy0iGgpQjA/rmqdtBghEPGp9B143V8ApfEvMixiQKBgA2Us25y+H3056wGFi0k/BUYEIqIFkz8llNvJwizNXw1EAlTDdqF5ckZAM+/GHZdiHvMgR0fqx4cn2IhDmWX/sKDNTHvpU/+zN9Hb+UoCQh9I/qM8Z2rN1CrP8xnCohBXv+L7VgKKuXmPanwESxuCxP9zkrG/srXYS/sDTEpyEDbAoGAUbyYjChc2twOEXyBoGLM66ad9iilBZ6Re20ec8O+Y38ZwGyUptK0EQ3RNhnXz+8qYeE5ojGKQnrf7Lhqm/NPuHMcLBMdEiZ0tQ5Q0zaYoabu/P1wgtIQAYbZcAJzg6nYWPcQVya8o8ok3YwzX5e3Ff1bjVgmhzWxd5NN7iJXodI=
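Because values under `data` in a Secret are base64-encoded, the PEM files cannot be pasted in verbatim. The following is a minimal sketch of producing such a manifest, assuming the certificate and key have already been read as bytes; `tls_secret_manifest` is a hypothetical helper, and the placeholder byte strings stand in for the real contents of tls.crt and tls.key.

```python
import base64


def tls_secret_manifest(name: str, cert_pem: bytes, key_pem: bytes) -> str:
    """Render a kubernetes.io/tls Secret manifest with base64-encoded data fields."""
    crt = base64.b64encode(cert_pem).decode("ascii")
    key = base64.b64encode(key_pem).decode("ascii")
    return (
        "apiVersion: v1\n"
        "kind: Secret\n"
        "metadata:\n"
        f"  name: {name}\n"
        "type: kubernetes.io/tls\n"
        "data:\n"
        f"  tls.crt: {crt}\n"
        f"  tls.key: {key}\n"
    )


# Placeholder contents; in practice read them with open("tls.crt", "rb").read() etc.
manifest = tls_secret_manifest("hengha-ingress-secret", b"CERT", b"KEY")
print(manifest)
```

The `kubectl create secret tls` command from method (1) performs this encoding automatically, which is why it is usually the less error-prone option.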

2. The website has several domain names

- If the website is served under several domain names, the SSL certificate needs an additional x509 v3 configuration file, in which the DNS names are listed in the [alt_names] section.

1. Create the openssl.cnf file

[req]
req_extensions = v3_req
distinguished_name = req_distinguished_name
[req_distinguished_name]
[v3_req]
basicConstraints = CA:FALSE
keyUsage = nonRepudiation, digitalSignature, keyEncipherment
subjectAltName = @alt_names
[alt_names]
DNS.1 = hengha.com
DNS.2 = hengha2.com
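Entries in [alt_names] are numbered DNS.1, DNS.2, and so on, so the section is easy to mistype by hand. As a small illustration, the section can be generated from a list of domain names; `alt_names_section` is a hypothetical helper and not part of OpenSSL.

```python
def alt_names_section(domains):
    """Render the [alt_names] section of an OpenSSL config, numbering from DNS.1."""
    lines = ["[alt_names]"]
    for index, domain in enumerate(domains, start=1):
        lines.append(f"DNS.{index} = {domain}")
    return "\n".join(lines)


print(alt_names_section(["hengha.com", "hengha2.com"]))
```

The output can be appended to the rest of openssl.cnf whenever the list of domains changes.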

2. Generate a self-signed CA certificate

]# openssl genrsa -out ca.key 2048
]# openssl req -x509 -new -nodes -key ca.key -days 36500 -out ca.crt -subj "/CN=hengha.com"

3. Generate the Ingress SSL key and certificate from openssl.cnf and the CA certificate

]# openssl genrsa -out ingress.key 2048
]# openssl req -new -key ingress.key -out ingress.csr -subj "/CN=hengha.com" -config openssl.cnf
]# openssl x509 -req -in ingress.csr -CA ca.crt -CAkey ca.key -CAcreateserial -out ingress.crt -days 3650 -extensions v3_req -extfile openssl.cnf

4. Create the Secret from ingress.key and ingress.crt. There are two ways to do this.

- (1) Create the Secret with the kubectl create secret tls command

- (2) Create the Secret from a YAML file.

//Create the Secret
]# kubectl create secret tls hengha-ingress-secret2 --key ingress.key --cert ingress.crt
//List Secrets
]# kubectl get secret
NAME TYPE DATA AGE
hengha-ingress-secret2 kubernetes.io/tls 2 4s
//Show the Secret in detail
]# kubectl describe secret hengha-ingress-secret2
Name: hengha-ingress-secret2
Namespace: default
Labels: <none>
Annotations: <none>
Type: kubernetes.io/tls
Data
====
tls.key: 1675 bytes
tls.crt: 1074 bytes

5.4.2 Referencing the Secret in the Ingress

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: tls-my-ingress
  annotations:
    kubernetes.io/ingress.class: "nginx" #Required; without it the Ingress may not work properly
spec:
  tls: #!
  - hosts:
    - hengha.com
    secretName: hengha-ingress-secret
  rules:
  - host: hengha.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: tls-my-service
            port:
              number: 8080

5.4.3 Putting Ingress TLS into practice

Example:

- (1) Use a ConfigMap to replace the configuration file of the nginx Pods, changing the server name to "hengha.com".

- (2) Create the Service and Pods used by the Ingress.

- (3) First test an Ingress without TLS.

- (4) Then test an Ingress with TLS.

1. Create the nginx configuration file for the ConfigMap (tls-cm-nginx80.conf)

- The tls-cm-nginx80.conf file

server {
    listen 80;
    server_name hengha.com;

    #access_log /var/log/nginx/host.access.log main;

    location / {
        root /usr/share/nginx/html;
        index index.html index.htm;
    }

    #error_page 404 /404.html;

    # redirect server error pages to the static page /50x.html
    #
    error_page 500 502 503 504 /50x.html;
    location = /50x.html {
        root /usr/share/nginx/html;
    }

    # proxy the PHP scripts to Apache listening on 127.0.0.1:80
    #
    #location ~ \.php$ {
    #    proxy_pass http://127.0.0.1;
    #}

    # pass the PHP scripts to FastCGI server listening on 127.0.0.1:9000
    #
    #location ~ \.php$ {
    #    root html;
    #    fastcgi_pass 127.0.0.1:9000;
    #    fastcgi_index index.php;
    #    fastcgi_param SCRIPT_FILENAME /scripts$fastcgi_script_name;
    #    include fastcgi_params;
    #}

    # deny access to .htaccess files, if Apache's document root
    # concurs with nginx's one
    #
    #location ~ /\.ht {
    #    deny all;
    #}
}

- Create and inspect the ConfigMap

//Create the ConfigMap
]# kubectl create configmap tls-cm-nginx80 --from-file=tls-cm-nginx80.conf
//List ConfigMaps
]# kubectl get cm
NAME DATA AGE
tls-cm-nginx80 1 10s
//Show the ConfigMap in detail
]# kubectl describe cm tls-cm-nginx80

2. Create the Service and Pods used by the Ingress

- The tls-my-service.yaml file

apiVersion: v1
kind: Service
metadata:
  name: tls-my-service
spec:
  selector:
    app: tls-nginx-pod
  ports:
  - port: 8080
    targetPort: 80
    protocol: TCP
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: tls-nginx-deployment
spec:
  selector:
    matchLabels:
      app: tls-nginx-pod
  replicas: 2
  template:
    metadata:
      labels:
        app: tls-nginx-pod
    spec:
      volumes:
      - name: html
        hostPath:
          path: /apps/html
      - name: cm-nginx #Name of the volume
        configMap:
          name: tls-cm-nginx80 #Use the "tls-cm-nginx80" ConfigMap
          items:
          - key: tls-cm-nginx80.conf #key=tls-cm-nginx80.conf
            path: default.conf #The value is mounted as a file named default.conf
      containers:
      - name: tls-nginx-container
        image: nginx:latest
        imagePullPolicy: Never
        command: ["/usr/sbin/nginx", "-g", "daemon off;"]
        ports:
        - containerPort: 80
        volumeMounts:
        - mountPath: /usr/share/nginx/html
          name: html
        - name: cm-nginx #References the volume by name
          mountPath: /etc/nginx/conf.d/ #Directory inside the container where the volume is mounted
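The `items` list in the configMap volume above decides under which file name each ConfigMap key appears inside the container. A small sketch of that mapping, assuming only the key and path fields are used; `mounted_files` is a hypothetical helper name.

```python
def mounted_files(mount_path: str, items):
    """Map each ConfigMap key to the file path it gets inside the container."""
    base = mount_path.rstrip("/")
    return {item["key"]: f"{base}/{item['path']}" for item in items}


items = [{"key": "tls-cm-nginx80.conf", "path": "default.conf"}]
print(mounted_files("/etc/nginx/conf.d/", items))
```

With the values from tls-my-service.yaml, the key tls-cm-nginx80.conf ends up as /etc/nginx/conf.d/default.conf, replacing the default server block that ships in the nginx image.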

- 创建,并查看Service和Pod

//创建Service和Pod ]# kubectl apply -f tls-my-service.yaml //查看Pod ]# kubectl get pod -o wide -A NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES default tls-nginx-deployment-7f755d8f57-bkzt9 1/1 Running 0 6s 10.10.36.69 k8s-node1 <none> <none> default tls-nginx-deployment-7f755d8f57-gkjzt 1/1 Running 0 5s 10.10.169.134 k8s-node2 <none> <none> //查看Service ]# kubectl get svc -o wide -A NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR default tls-my-service ClusterIP 10.20.227.2 <none> 8080/TCP 62s app=tls-nginx-pod

- Create the index.html file

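//Run one command per node, since the hostPath volume /apps/html is local to each node
//(the IPs are presumably those of k8s-node1 and k8s-node2)
]# echo 'tls 10.1.1.12' > /apps/html/index.html
]# echo 'tls 10.1.1.13' > /apps/html/index.html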

3. Create an Ingress without TLS

- Create the Ingress file (tls-my-ingress.yaml)

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: tls-my-ingress
  annotations:
    kubernetes.io/ingress.class: "nginx"    #Required; otherwise the created Ingress may not work properly
spec:
  rules:
  - host: hengha.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: tls-my-service
            port:
              number: 8080

- Create and view the Ingress

//Create the Ingress
]# kubectl apply -f tls-my-ingress.yaml
//View the Ingress
]# kubectl get ingress -o wide -A
NAMESPACE   NAME             CLASS    HOSTS        ADDRESS     PORTS   AGE
default     tls-my-ingress   <none>   hengha.com   10.1.1.13   80      19s
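The CLASS column shows `<none>` because the controller is selected through the `kubernetes.io/ingress.class` annotation. Since Kubernetes 1.18 that annotation is deprecated in favour of the `spec.ingressClassName` field. As a sketch, the script below writes a variant of the manifest that uses the field instead. The file name tls-my-ingress-classname.yaml is made up for this example, and the script assumes the cluster has an IngressClass named "nginx" (this can be checked with `kubectl get ingressclass`).

```shell
#!/bin/sh
# Write a variant of tls-my-ingress.yaml that selects the controller through
# spec.ingressClassName instead of the annotation.
# Assumption: an IngressClass named "nginx" exists in the cluster.
cat > tls-my-ingress-classname.yaml <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: tls-my-ingress
spec:
  ingressClassName: nginx
  rules:
  - host: hengha.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: tls-my-service
            port:
              number: 8080
EOF
```

After `kubectl apply -f tls-my-ingress-classname.yaml`, the CLASS column should show the class name rather than `<none>`.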

- Access the backend nginx Pods

- Both the HTTP and the HTTPS request return the expected content.

]# curl -H "HOST:hengha.com" http://10.1.1.13:32080
tls 10.1.1.12
]# curl -H "HOST:hengha.com" https://10.1.1.13:32443 -k
tls 10.1.1.13

- Delete the Ingress

]# kubectl delete -f tls-my-ingress.yaml

4. Create an Ingress with TLS

- Create the SSL certificate and the Secret object

//Generate the SSL key and self-signed certificate for the Ingress
]# openssl req -x509 -nodes -days 3650 -newkey rsa:2048 -keyout tls.key -out tls.crt -subj "/CN=hengha.com"
//Create the Secret object
]# kubectl create secret tls hengha-ingress-secret --key tls.key --cert tls.crt
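Before the files go into a Secret, it can help to check that the certificate names the right host and that the key and certificate actually belong together. The sketch below regenerates the pair in a temporary directory and inspects it. The `-addext` option, which adds a subjectAltName, is an addition not present in the original command and assumes OpenSSL 1.1.1 or newer; many current clients match the host against the subjectAltName rather than the CN.

```shell
#!/bin/sh
# Generate a self-signed pair in a scratch directory and inspect it.
workdir=$(mktemp -d)
cd "$workdir" || exit 1

# Same command as in the text, plus a subjectAltName (assumes OpenSSL >= 1.1.1).
openssl req -x509 -nodes -days 3650 -newkey rsa:2048 \
  -keyout tls.key -out tls.crt -subj "/CN=hengha.com" \
  -addext "subjectAltName=DNS:hengha.com" 2>/dev/null

# Subject and validity period of the certificate.
subject=$(openssl x509 -in tls.crt -noout -subject)
dates=$(openssl x509 -in tls.crt -noout -dates)
echo "$subject"
echo "$dates"

# Key and certificate match when both yield the same public key.
cert_pub=$(openssl x509 -in tls.crt -noout -pubkey | openssl sha256)
key_pub=$(openssl pkey -in tls.key -pubout | openssl sha256)
if [ "$cert_pub" = "$key_pub" ]; then
  echo "key and certificate match"
else
  echo "key and certificate do NOT match"
fi
```

If the check passes, tls.key and tls.crt can be passed to the `kubectl create secret tls` command exactly as in the step above.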

- Create the Ingress file (tls-my-ingress2.yaml)

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: tls-my-ingress2
  annotations:
    kubernetes.io/ingress.class: "nginx"    #Required; otherwise the created Ingress may not work properly
spec:
  tls:                                      #! The TLS section is the only difference from tls-my-ingress.yaml
  - hosts:
    - hengha.com
    secretName: hengha-ingress-secret
  rules:
  - host: hengha.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: tls-my-service
            port:
              number: 8080

- Create and view the Ingress

//Create the Ingress
]# kubectl apply -f tls-my-ingress2.yaml
//View the Ingress
]# kubectl get ingress -o wide -A
NAMESPACE   NAME              CLASS    HOSTS        ADDRESS   PORTS     AGE
default     tls-my-ingress2   <none>   hengha.com             80, 443   16s

- Access the backend nginx Pods

- The HTTPS request returns normally, while the HTTP request is answered with a permanent redirect (308).

]# curl -H "HOST:hengha.com" http://10.1.1.13:32080
<html>
<head><title>308 Permanent Redirect</title></head>
<body>
<center><h1>308 Permanent Redirect</h1></center>
<hr><center>nginx</center>
</body>
</html>
]# curl -H "HOST:hengha.com" https://10.1.1.13:32443 -k
tls 10.1.1.12
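To compare the two behaviours without reading HTML bodies, the status code and redirect target can be printed directly. The sketch below uses curl's `--resolve` option instead of a hand-written Host header, so that HTTPS requests also carry hengha.com as the TLS SNI name. `probe_ingress` and the `CURL` override are hypothetical names introduced for illustration; the IP and NodePorts are the ones used in this walkthrough.

```shell
#!/bin/sh
# Hypothetical helper: print "<status> <redirect-target>" for one request sent
# through the ingress controller's NodePort.
# CURL can be overridden (for example CURL=echo) to inspect the command line.
probe_ingress() {
  scheme=$1
  host=$2
  node_ip=$3
  node_port=$4
  # --resolve pins host:port to the node IP, so curl sends the Host header
  # and, for HTTPS, the matching SNI name without touching /etc/hosts.
  # -k skips certificate verification because the certificate is self-signed.
  ${CURL:-curl} -sk -o /dev/null \
    --resolve "$host:$node_port:$node_ip" \
    -w '%{http_code} %{redirect_url}\n' \
    "$scheme://$host:$node_port/"
}
```

For example, `probe_ingress http hengha.com 10.1.1.13 32080` and `probe_ingress https hengha.com 10.1.1.13 32443` issue the same two requests as the curl commands above; with the TLS Ingress in place, the first is expected to report status 308.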
