Reading and writing CSV and JSON files with PySpark

import os

from pyspark import SparkConf, SparkContext
from pyspark.sql.session import SparkSession

def CreateSparkContex():

sparkconf=SparkConf().setAppName("MYPRO").set("spark.ui.showConsoleProgress","false")

sc=SparkContext(conf=sparkconf)

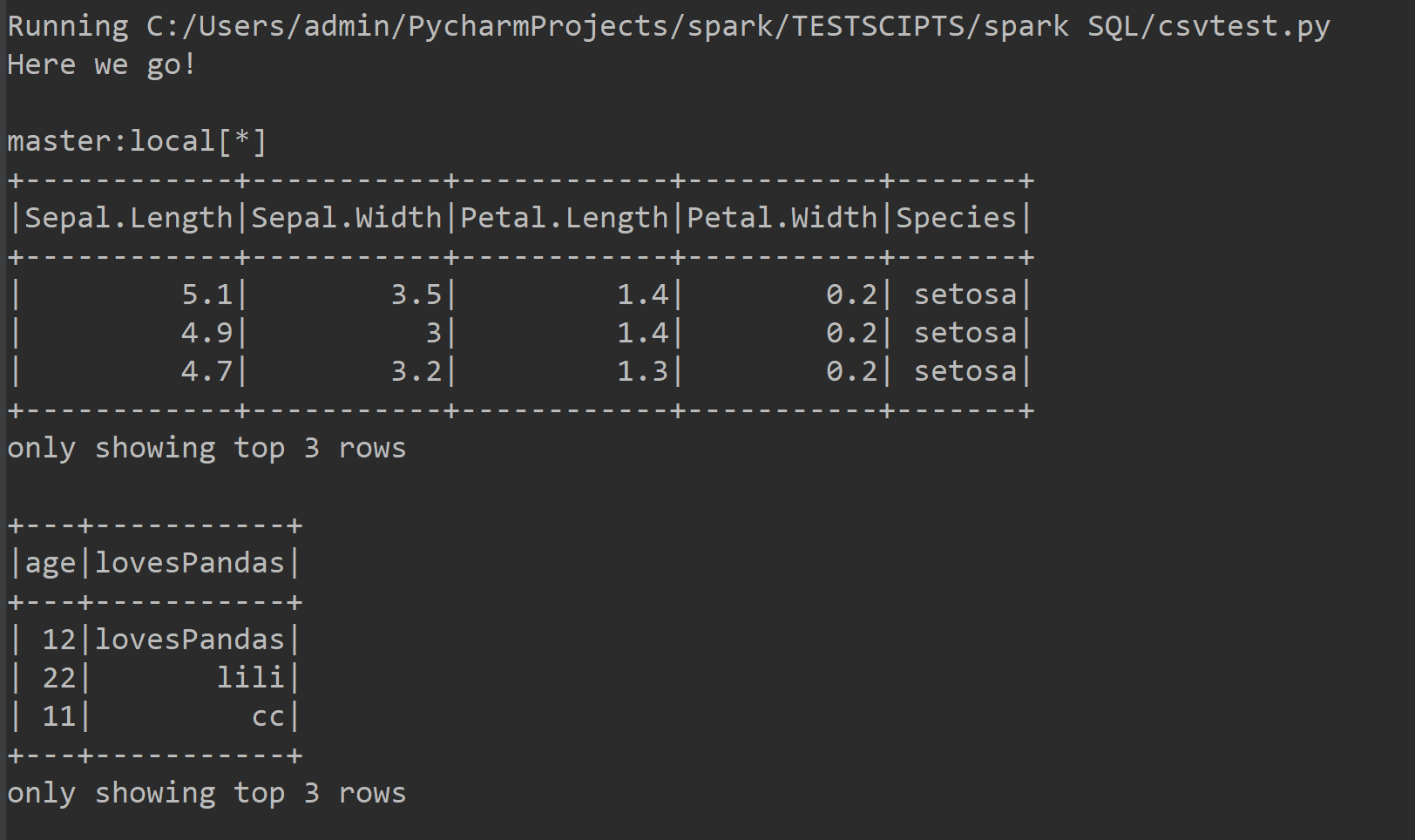

print("master:"+sc.master)

sc.setLogLevel("WARN")

Setpath(sc)

spark = SparkSession.builder.config(conf=sparkconf).getOrCreate()

return sc,spark

def Setpath(sc):
    # Store the base data path in the module-level global Path.
    global Path
    if sc.master[:5] == "local":
        Path = "file:/C:/spark/sparkworkspace"
    else:
        Path = "hdfs://test"

if __name__ == "__main__":
    print("Here we go!\n")
    sc, spark = CreateSparkContex()

    readcsvpath = os.path.join(Path, 'iris.csv')
    readjspath = os.path.join(Path, 'fd.json')
    outcsvpath = os.path.join(Path, 'write_iris.csv')
    outjspath = os.path.join(Path, 'write_js.json')

    # Read the CSV (first row as header) and the JSON file into DataFrames.
    dfcsv = spark.read.csv(readcsvpath, header=True)
    dfjs = spark.read.json(readjspath)

    # Uncomment to write the DataFrames back out.
    # dfcsv.write.csv(outcsvpath)
    # dfjs.write.json(outjspath)

    dfcsv.show(3)
    dfjs.show(3)

    # Stopping the session also stops the underlying SparkContext.
    spark.stop()
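
The write calls in the listing above are commented out, so only the read half is exercised. Below is a minimal sketch of how the write step might look. `write_outputs` is a hypothetical helper that is not part of the original script. The `overwrite` save mode, the `header=True` option on the CSV writer, and the use of `posixpath.join` in place of `os.path.join` are all assumptions made for illustration.

```python
import posixpath


def write_outputs(df_csv, df_json, base_path):
    """Write both DataFrames under base_path and return the output paths.

    Hypothetical helper for illustration; not part of the original script.
    """
    # posixpath.join always joins with "/", which keeps file:/ and hdfs://
    # URIs intact regardless of the operating system of the driver.
    out_csv = posixpath.join(base_path, "write_iris.csv")
    out_json = posixpath.join(base_path, "write_js.json")

    # Assumption: mode("overwrite") replaces existing output instead of
    # failing when the target path already exists.
    # Assumption: header=True so the column names are written to the CSV.
    df_csv.write.mode("overwrite").csv(out_csv, header=True)
    df_json.write.mode("overwrite").json(out_json)
    return out_csv, out_json
```

It could be called as `write_outputs(dfcsv, dfjs, Path)` before `spark.stop()`. Note that Spark writes each output as a directory of part files, so `write_iris.csv` and `write_js.json` end up as directories rather than single files.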
