Shell and Python Web Crawler Examples

1. Shell crawler example:

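```shell
[root@db01 ~]# vim pa.sh
#!/bin/bash
# Listing pages of the blog; the page number is appended inside the loop
www_link="http://www.cnblogs.com/clsn/default.html?page="
for i in {1..8}
do
    # "@" is a separator of my own choosing. This line extracts each post's URL and title.
    curl "${www_link}${i}" 2>/dev/null | grep homepage | grep -v "ImageLink" | awk -F "[><\"]" '{print $7"@"$9}' >> bb.txt
done
grep -v "pager" bb.txt > ma.txt   # keep only the "URL@title" lines in one file
# Remove all spaces: `for i in $b` splits on whitespace, so a title containing spaces
# would become several loop items instead of one line per item. This pitfall cost me a long time.
b=$(sed "s# ##g" ma.txt)
for i in $b
do
    c=$(echo "$i" | awk -F @ '{print $1}')   # c = post URL
    d=$(echo "$i" | awk -F @ '{print $2}')   # d = post title
    echo "<a href='${c}' target='_blank'>${d}</a> " >> cc.txt   # cc.txt collects the generated <a> tags
done
```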

Crawl results: see the crawl results for 惨绿少年's blog in the archive.

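Note: when putting the crawl results into a blog post, add a `&nbsp;` space (or another character) after each `<a>` tag, because cnblogs does not display plain space strings by default.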
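The shell loop above has to delete every space from the titles because `for i in $b` splits its input on whitespace. As a hedged alternative, the same `URL@title` to `<a>` conversion can be done one line at a time in Python, which keeps spaces inside titles. This is a minimal sketch: the function name `to_anchor_tags` and the sample URL are hypothetical, and it assumes input lines in the `URL@title` format that the awk step writes to `ma.txt`.

```python
import html


def to_anchor_tags(lines, sep="@"):
    """Convert 'URL@title' lines into <a> tags (hypothetical helper).

    Each line is handled as a whole, so spaces inside a title survive,
    unlike `for i in $b` in the shell version, which splits on whitespace.
    """
    tags = []
    for line in lines:
        line = line.rstrip("\n")
        if sep not in line:
            continue  # skip blank or malformed lines
        url, title = line.split(sep, 1)  # split only on the first separator
        tags.append("<a href='{}' target='_blank'>{}</a>".format(
            html.escape(url, quote=True),      # escapes &, <, >, quotes for the attribute
            html.escape(title, quote=False)))  # escapes &, <, > in the link text
    return tags


if __name__ == "__main__":
    # Hypothetical sample line; in practice, iterate over open("ma.txt", encoding="utf-8").
    sample = ["http://example.com/post/1.html@A title with spaces\n"]
    print("&nbsp;".join(to_anchor_tags(sample)))
```

Escaping with `html.escape` keeps a `&` in a URL or a `<` in a title from breaking the generated markup; joining with `&nbsp;` follows the note above about separating the links.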

2. Python crawler examples

2.1 Python crawler basics

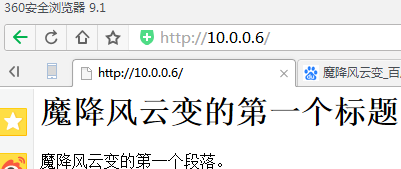

Crawl this page:

```python
import urllib.request

# Target URL
url = "http://10.0.0.6/"
# Build the request
request = urllib.request.Request(url)
# Send it and fetch the response
response = urllib.request.urlopen(request)
data = response.read()
# Decode the raw bytes (the page declares UTF-8)
data = data.decode('utf-8')
# Print the result
print(data)
```

Result:

```text
E:\python\python\python.exe C:/python/day2/test.py
<html>
<meta http-equiv="Content-Type" content="text/html;charset=utf-8">
<body>
<h1>魔降风云变的第一个标题</h1>
<p>魔降风云变的第一个段落。</p>
</body>
</html>
```

```python
print(type(response))
```

Result:

```text
<class 'http.client.HTTPResponse'>
```

```python
print(response.geturl())
```

Result:

http://10.0.0.6/

```python
print(response.info())
```

Result:

```text
Server: nginx/1.12.2
Date: Fri, 02 Mar 2018 07:45:11 GMT
Content-Type: text/html
Content-Length: 184
Last-Modified: Fri, 02 Mar 2018 07:38:00 GMT
Connection: close
ETag: "5a98ff58-b8"
Accept-Ranges: bytes
```

```python
print(response.getcode())
```

Result:

200
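The examples above hard-code `data.decode('utf-8')`, and the `response.info()` output shows `Content-Type: text/html` with no charset parameter, so UTF-8 is only known from the page's own `<meta>` tag. A hedged sketch of a more general approach: read the charset from the `Content-Type` header when the server sends one and fall back to UTF-8 otherwise. The helper name `decode_body` is hypothetical; it relies only on the standard `email.message.Message.get_content_charset` method.

```python
import email.message


def decode_body(body, content_type, default="utf-8"):
    """Decode response bytes using the charset in a Content-Type header value.

    Falls back to `default` when the header has no charset parameter (as in
    the nginx response above) or names a codec Python does not know.
    """
    msg = email.message.Message()
    if content_type:
        msg["Content-Type"] = content_type
    charset = msg.get_content_charset(default)  # lower-cased charset, or default
    try:
        return body.decode(charset, errors="replace")
    except LookupError:  # unknown charset name in the header
        return body.decode(default, errors="replace")


if __name__ == "__main__":
    print(decode_body("标题".encode("gbk"), "text/html; charset=GBK"))
    print(decode_body("标题".encode("utf-8"), "text/html"))
```

With a real response this would be called as `decode_body(response.read(), response.headers.get("Content-Type", ""))`. Since `response.headers` is an `http.client.HTTPMessage` (a subclass of `email.message.Message`), `response.headers.get_content_charset("utf-8")` performs the same lookup directly. This sketch does not parse the `<meta>` tag, so pages that declare their encoding only in HTML still rely on the fallback.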

2.2 Crawling the page source and saving it to a local file

```python
import urllib.request

# Target URL
url = "http://10.0.0.6/"
# Send a browser-like User-Agent instead of urllib's default one
headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) '
                         'Chrome/51.0.2704.63 Safari/537.36'}
req = urllib.request.Request(url=url, headers=headers)
res = urllib.request.urlopen(req)
data = res.read()
data = data.decode('utf-8')
# Print the crawled content
print(data)
```

Result:

```text
<html>
<meta http-equiv="Content-Type" content="text/html;charset=utf-8">
<body>
<h1>魔降风云变的第一个标题</h1>
<p>魔降风云变的第一个段落。</p>
</body>
</html>
```

```python
import urllib.request


# Save the crawled bytes to a local file
def saveFile(data):
    path = "E:\\content.txt"
    # 'wb': data is bytes, so write in binary mode; `with` closes the file
    with open(path, 'wb') as f:
        f.write(data)


# Target URL
url = "http://10.0.0.6/"
headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) '
                         'Chrome/51.0.2704.63 Safari/537.36'}
req = urllib.request.Request(url=url, headers=headers)
res = urllib.request.urlopen(req)
data = res.read()
# The crawled content can also be saved to a file
saveFile(data)
data = data.decode('utf-8')
# Print the crawled content
print(data)
```

Result of adding the file-saving function and running the script:

```python
# Print various information about the crawled page
print(type(res))
print(res.geturl())
print(res.info())
print(res.getcode())
```

Result:

```text
<class 'http.client.HTTPResponse'>
http://10.0.0.6/
Server: nginx/1.12.2
Date: Fri, 02 Mar 2018 08:09:56 GMT
Content-Type: text/html
Content-Length: 184
Last-Modified: Fri, 02 Mar 2018 07:38:00 GMT
Connection: close
ETag: "5a98ff58-b8"
Accept-Ranges: bytes

200
```

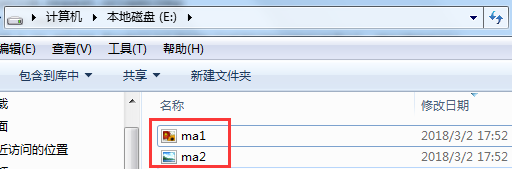
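The fetch-and-save steps above can be tried without the `10.0.0.6` host. Below is a hedged sketch that wraps them in one function, closes the connection with `with`, sets a timeout, and includes a throwaway local HTTP server that stands in for the real site. The names `fetch_and_save` and `start_demo_server` are hypothetical, and the short `User-Agent` value is a placeholder, not the full Chrome string used above.

```python
import tempfile
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer
from pathlib import Path

# Placeholder User-Agent (an assumption); the examples above send a full Chrome string.
HEADERS = {"User-Agent": "Mozilla/5.0"}


def fetch_and_save(url, path, timeout=10):
    """Download `url`, write the raw bytes to `path`, and return the bytes."""
    req = urllib.request.Request(url, headers=HEADERS)
    # `with` closes the connection even if read() raises; timeout avoids hanging forever
    with urllib.request.urlopen(req, timeout=timeout) as res:
        data = res.read()
    Path(path).write_bytes(data)  # binary write, like open(path, 'wb') in saveFile
    return data


def start_demo_server(body):
    """Serve `body` on a random localhost port (a stand-in for http://10.0.0.6/)."""
    class Handler(BaseHTTPRequestHandler):
        def do_GET(self):
            self.send_response(200)
            self.send_header("Content-Type", "text/html; charset=utf-8")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)

        def log_message(self, *args):
            pass  # silence per-request logging

    server = HTTPServer(("127.0.0.1", 0), Handler)  # port 0: let the OS pick a free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    host, port = server.server_address[:2]
    return server, "http://{}:{}/".format(host, port)


if __name__ == "__main__":
    server, url = start_demo_server("<h1>标题</h1>".encode("utf-8"))
    try:
        out = Path(tempfile.mkdtemp()) / "content.txt"
        print(fetch_and_save(url, out).decode("utf-8"))
    finally:
        server.shutdown()
        server.server_close()
```

Against the real page, `fetch_and_save("http://10.0.0.6/", "E:\\content.txt")` would do what `saveFile` does above, assuming that host is reachable.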

2.3 Crawling images

```python
import os
import re
import urllib.request

# Directory to save the images into
targetPath = "E:\\"


def saveFile(path):
    # Make sure the target directory exists
    if not os.path.isdir(targetPath):
        os.makedirs(targetPath)
    # Build each image's local path from the last segment of its URL
    pos = path.rindex('/')
    t = os.path.join(targetPath, path[pos + 1:])
    return t


# if __name__ == '__main__': the crawl only runs when this .py file is executed directly
if __name__ == '__main__':
    url = "http://10.0.0.6/"
    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) '
                      'Chrome/51.0.2704.63 Safari/537.36'}
    req = urllib.request.Request(url=url, headers=headers)
    res = urllib.request.urlopen(req)
    data = res.read()
    print(data)
    # The pattern has two groups, so findall yields (url, extension) tuples
    for link, t in set(re.findall(r'(http:[^\s]*?(jpg|png|gif))', str(data))):
        print(link)
        try:
            urllib.request.urlretrieve(link, saveFile(link))
        except Exception as e:
            print('Failed:', e)
```

2.3.1 Printing the request, the response, and the raw data

```python
import urllib.request

url = "http://10.0.0.6/"
headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) '
                  'Chrome/51.0.2704.63 Safari/537.36'}
req = urllib.request.Request(url=url, headers=headers)
res = urllib.request.urlopen(req)
data = res.read()
print(req)
print(res)
print(data)
print(str(data))
```

Result:

```text
<urllib.request.Request object at 0x0000000001ECD9E8>
<http.client.HTTPResponse object at 0x0000000002D1B128>
b'<html>\n<meta http-equiv="Content-Type" content="text/html;charset=utf-8">\n<body>\n<img src="http://10.0.0.6/ma1.png" />\n<img src="http://10.0.0.6/ma2.jpg" />\n<h1>\xe9\xad\x94\xe9\x99\x8d\xe9\xa3\x8e\xe4\xba\x91\xe5\x8f\x98\xe7\x9a\x84\xe7\xac\xac\xe4\xb8\x80\xe4\xb8\xaa\xe6\xa0\x87\xe9\xa2\x98</h1>\n<p>\xe9\xad\x94\xe9\x99\x8d\xe9\xa3\x8e\xe4\xba\x91\xe5\x8f\x98\xe7\x9a\x84\xe7\xac\xac\xe4\xb8\x80\xe4\xb8\xaa\xe6\xae\xb5\xe8\x90\xbd\xe3\x80\x82</p>\n</body>\n</html>\n'
b'<html>\n<meta http-equiv="Content-Type" content="text/html;charset=utf-8">\n<body>\n<img src="http://10.0.0.6/ma1.png" />\n<img src="http://10.0.0.6/ma2.jpg" />\n<h1>\xe9\xad\x94\xe9\x99\x8d\xe9\xa3\x8e\xe4\xba\x91\xe5\x8f\x98\xe7\x9a\x84\xe7\xac\xac\xe4\xb8\x80\xe4\xb8\xaa\xe6\xa0\x87\xe9\xa2\x98</h1>\n<p>\xe9\xad\x94\xe9\x99\x8d\xe9\xa3\x8e\xe4\xba\x91\xe5\x8f\x98\xe7\x9a\x84\xe7\xac\xac\xe4\xb8\x80\xe4\xb8\xaa\xe6\xae\xb5\xe8\x90\xbd\xe3\x80\x82</p>\n</body>\n</html>\n'
```
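`print(data)` and `print(str(data))` produce identical output because calling `str()` on a `bytes` object returns its repr, `b'...'` with `\xNN` escapes, not decoded text. The image regex in this section is therefore matched against that escaped form. The small sketch below uses a prefix of the bytes shown above (the UTF-8 encoding of 魔降) to contrast the two conversions; decoding with the page's declared charset gives readable characters.

```python
# A short prefix of the bytes printed above: the UTF-8 encoding of "魔降".
raw = b'<h1>\xe9\xad\x94\xe9\x99\x8d</h1>\n'

as_repr = str(raw)             # repr-style text: keeps the b'...' wrapper and \xNN escapes
as_text = raw.decode("utf-8")  # real characters, using the charset declared in the page

print(as_repr)
print(as_text)
```

Matching URLs against `as_text` rather than `as_repr` also means newline characters are real whitespace again, not the two characters `\` and `n`.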

2.3.2 Finding image links with a regular expression

```python
import re
import urllib.request

url = "http://10.0.0.6/"
headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) '
                  'Chrome/51.0.2704.63 Safari/537.36'}
req = urllib.request.Request(url=url, headers=headers)
res = urllib.request.urlopen(req)
data = res.read()
# Lazily match from "http:" up to the first jpg/png/gif; two groups, so each match is a tuple
for link in set(re.findall(r'(http:[^\s]*?(jpg|png|gif))', str(data))):
    print(link)
```

Result:

```text
('http://10.0.0.6/ma2.jpg', 'jpg')
('http://10.0.0.6/ma1.png', 'png')
```

2.3.3 Keeping only the URL from each match

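```python
# `data` comes from the request above; unpack each (url, extension) tuple and print only the URL
for link, t in set(re.findall(r'(http:[^\s]*?(jpg|png|gif))', str(data))):
    print(link)
```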

Result:

```text
http://10.0.0.6/ma1.png
http://10.0.0.6/ma2.jpg
```
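The two steps above show that `re.findall` returns one tuple per match whenever the pattern has more than one capturing group, which is why the loop has to unpack `link, t`. A hedged alternative is to make the extension group non-capturing with `(?:...)`, so `findall` returns plain strings. The sketch below also excludes quotes and whitespace with `[^\s"]` and de-duplicates with `dict.fromkeys`, which, unlike `set()`, preserves the order of first appearance. The names `SAMPLE` and `find_image_urls` are hypothetical; `SAMPLE` is copied from the `<img>` tags in the printed page source.

```python
import re

# Sample markup copied from the <img> tags in the printed page source above.
SAMPLE = ('<img src="http://10.0.0.6/ma1.png" />\n'
          '<img src="http://10.0.0.6/ma2.jpg" />')

# Two capturing groups: findall returns (whole_match, extension) tuples.
WITH_GROUPS = r'(http:[^\s"]*?(jpg|png|gif))'
# (?:...) does not capture, and there is no other group: findall returns plain strings.
NON_CAPTURING = r'http:[^\s"]*?(?:jpg|png|gif)'


def find_image_urls(text):
    """Return unique image URLs in order of first appearance."""
    # dict.fromkeys drops duplicates but keeps insertion order, unlike set()
    return list(dict.fromkeys(re.findall(NON_CAPTURING, text)))


if __name__ == "__main__":
    print(re.findall(WITH_GROUPS, SAMPLE))
    print(find_image_urls(SAMPLE))
```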

2.3.4 Downloading the images

```python
import os
import re
import urllib.request

# Directory to save the images into
targetPath = "E:\\"


def saveFile(path):
    # Make sure the target directory exists
    if not os.path.isdir(targetPath):
        os.makedirs(targetPath)
    # Build each image's local path from the last segment of its URL
    pos = path.rindex('/')
    t = os.path.join(targetPath, path[pos + 1:])
    return t


# if __name__ == '__main__': the crawl only runs when this .py file is executed directly
if __name__ == '__main__':
    url = "http://10.0.0.6/"
    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) '
                      'Chrome/51.0.2704.63 Safari/537.36'}
    req = urllib.request.Request(url=url, headers=headers)
    res = urllib.request.urlopen(req)
    data = res.read()
    for link, t in set(re.findall(r'(http:[^\s]*?(jpg|png|gif))', str(data))):
        print(link)
        try:
            # Download the image to the local path built by saveFile
            urllib.request.urlretrieve(link, saveFile(link))
        except Exception as e:
            print('Failed:', e)
```

Result:

```text
http://10.0.0.6/ma2.jpg
http://10.0.0.6/ma1.png
```
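The regex approach only finds absolute `http:` URLs, and `saveFile`'s `rindex('/')` keeps any query string (such as `?v=2`) in the file name. As a hedged alternative technique, not the method used above, the standard-library `html.parser.HTMLParser` can read the `src` attribute of each `<img>` tag, `urllib.parse.urljoin` can resolve relative paths against the page URL, and the file name can be taken from the URL path only. The names `ImgSrcParser`, `find_image_links`, and `local_filename` are hypothetical, and the extension list simply mirrors the regex's `jpg|png|gif`.

```python
import posixpath
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse

IMAGE_EXTS = (".jpg", ".png", ".gif")  # same extensions as the regex above


class ImgSrcParser(HTMLParser):
    """Collect absolute URLs from <img src="..."> attributes."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        # Self-closing <img ... /> also arrives here via the default handle_startendtag
        if tag != "img":
            return
        for name, value in attrs:
            if name == "src" and value:
                link = urljoin(self.base_url, value)  # resolve relative paths
                path = urlparse(link).path.lower()
                if path.endswith(IMAGE_EXTS) and link not in self.links:
                    self.links.append(link)


def find_image_links(html_text, base_url):
    parser = ImgSrcParser(base_url)
    parser.feed(html_text)
    parser.close()
    return parser.links


def local_filename(link):
    """File name for saving: the last path segment, ignoring any query string."""
    return posixpath.basename(urlparse(link).path) or "index"


if __name__ == "__main__":
    page = ('<body><img src="http://10.0.0.6/ma1.png" />'
            '<img src="ma2.jpg?v=2" /></body>')
    for link in find_image_links(page, "http://10.0.0.6/"):
        print(link, "->", local_filename(link))
```

With a decoded page (`data.decode('utf-8')`), each link could then be downloaded with `urllib.request.urlretrieve(link, os.path.join(targetPath, local_filename(link)))`.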

2.4 Logging in to Zhihu

Reference: http://blog.csdn.net/fly_yr/article/details/51535676
