Automated deployment, notifications, distributed builds, and parallel builds (Jenkins Pipeline)

Integrating Jenkins with Ansible

A simple example

    pipeline {
        agent any
        stages {
            stage('deploy') {
                steps {
                    ansiblePlaybook(
                        playbook: "${env.WORKSPACE}/playbook.yml",
                        inventory: "${env.WORKSPACE}/hosts",
                        credentialsId: 'vagrant'
                    )
                }
            }
        }
    }

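Ansible needs to be installed on the Jenkins machine:

    [root@mcw15 plugins]# yum install -y ansible

Open the Ansible configuration and disable host key checking:

    [root@mcw15 plugins]# vim /etc/ansible/ansible.cfg
    [root@mcw15 plugins]# grep host_key_checking /etc/ansible/ansible.cfg
    host_key_checking = False
    [root@mcw15 plugins]#

You can also register Ansible as a tool in Jenkins, add several Ansible versions, and let different projects use different versions.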

The host is not reachable yet:

    [root@mcw15 mcwansible]#
    [root@mcw15 mcwansible]# ansible -i hosts example -m ping
    10.0.0.13 | UNREACHABLE! => {
        "changed": false,
        "msg": "Failed to connect to the host via ssh: Permission denied (publickey,password).",
        "unreachable": true
    }
    [root@mcw15 mcwansible]#

Distribute the public key

    [root@mcw15 mcwansible]# ssh-copy-id -i /root/.ssh/id_rsa.pub 10.0.0.13
    /usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"
    /usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed
    /usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys
    root@10.0.0.13's password:
    Number of key(s) added: 1
    Now try logging into the machine, with: "ssh '10.0.0.13'"
    and check to make sure that only the key(s) you wanted were added.
    [root@mcw15 mcwansible]# ls
    fenfa.sh hosts
    [root@mcw15 mcwansible]# cat hosts
    [example]
    10.0.0.13
    [root@mcw15 mcwansible]# ansible -i hosts example -m ping
    10.0.0.13 | SUCCESS => {
        "ansible_facts": {
            "discovered_interpreter_python": "/usr/bin/python"
        },
        "changed": false,
        "ping": "pong"
    }
    [root@mcw15 mcwansible]#

Leave example2 unreachable for now

    [root@mcw15 mcwansible]# vim hosts
    [root@mcw15 mcwansible]# cat hosts
    [example]
    10.0.0.13
    [example2]
    10.0.0.22
    [root@mcw15 mcwansible]# ansible -i hosts example2 -m ping
    10.0.0.22 | UNREACHABLE! => {
        "changed": false,
        "msg": "Failed to connect to the host via ssh: Warning: Permanently added '10.0.0.22' (ECDSA) to the list of known hosts.\r\nPermission denied (publickey,password).",
        "unreachable": true
    }
    [root@mcw15 mcwansible]#

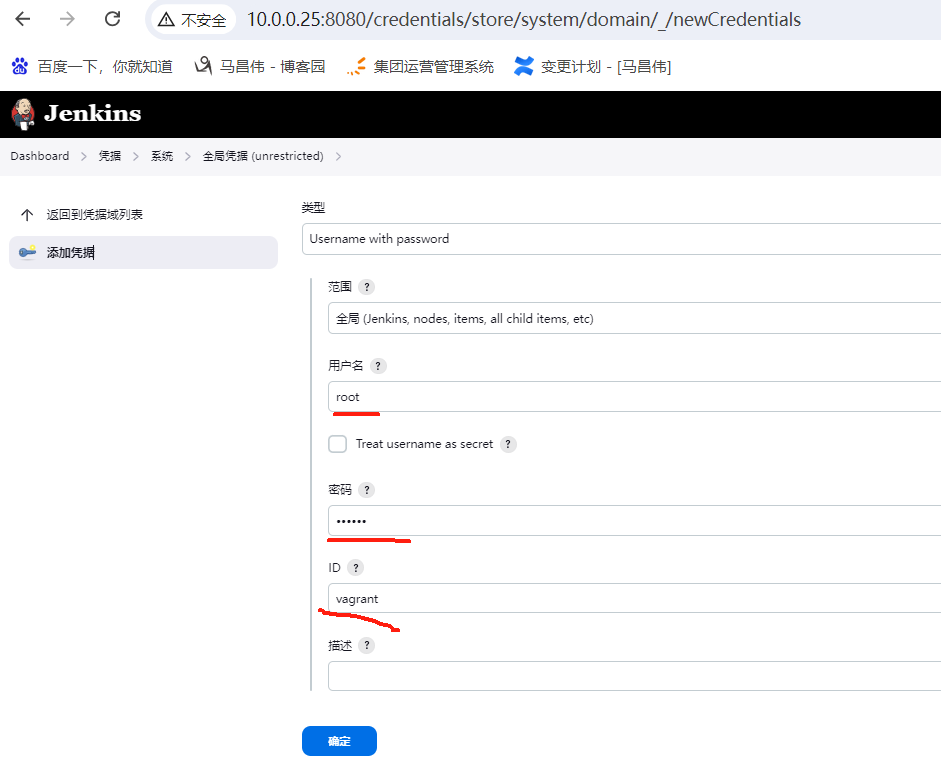

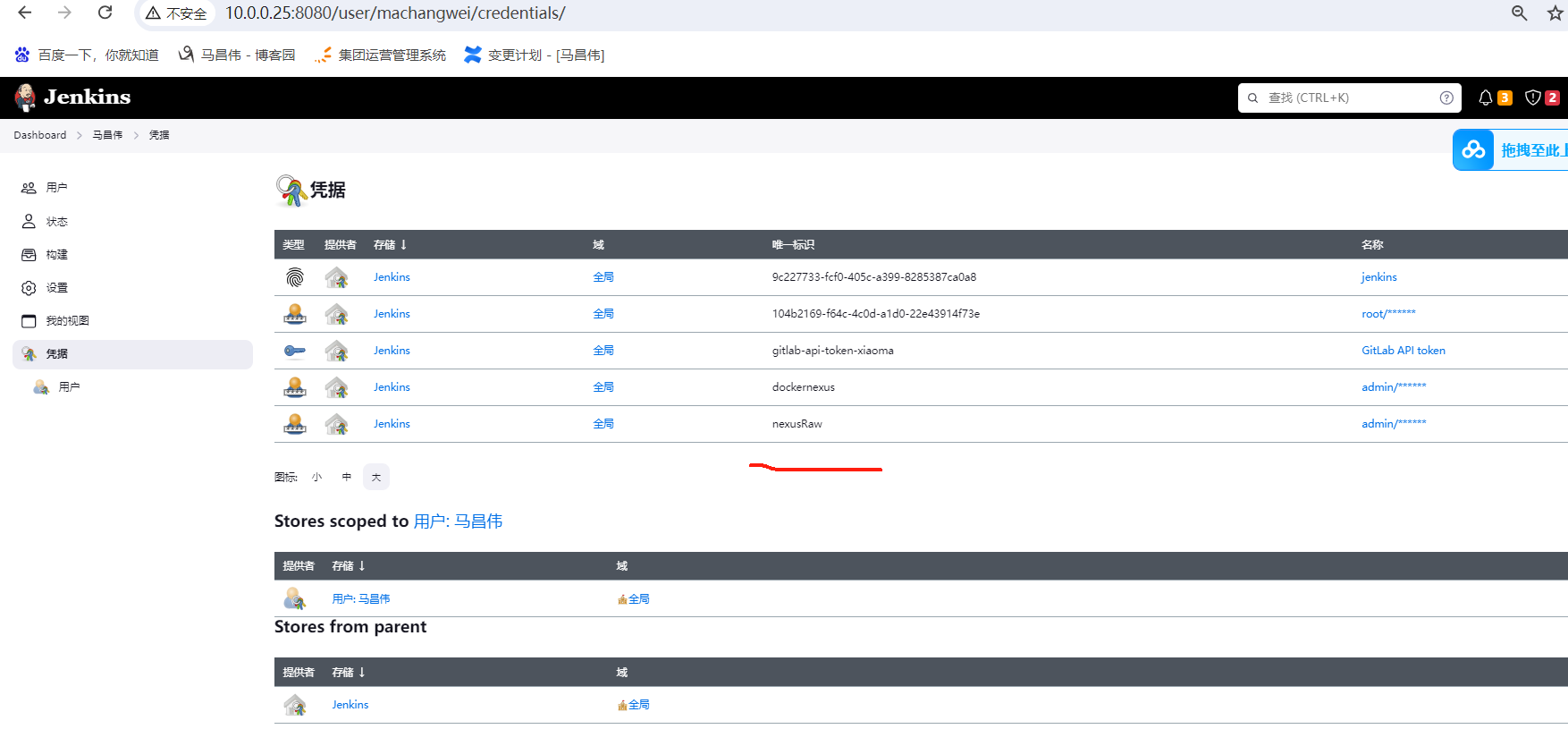

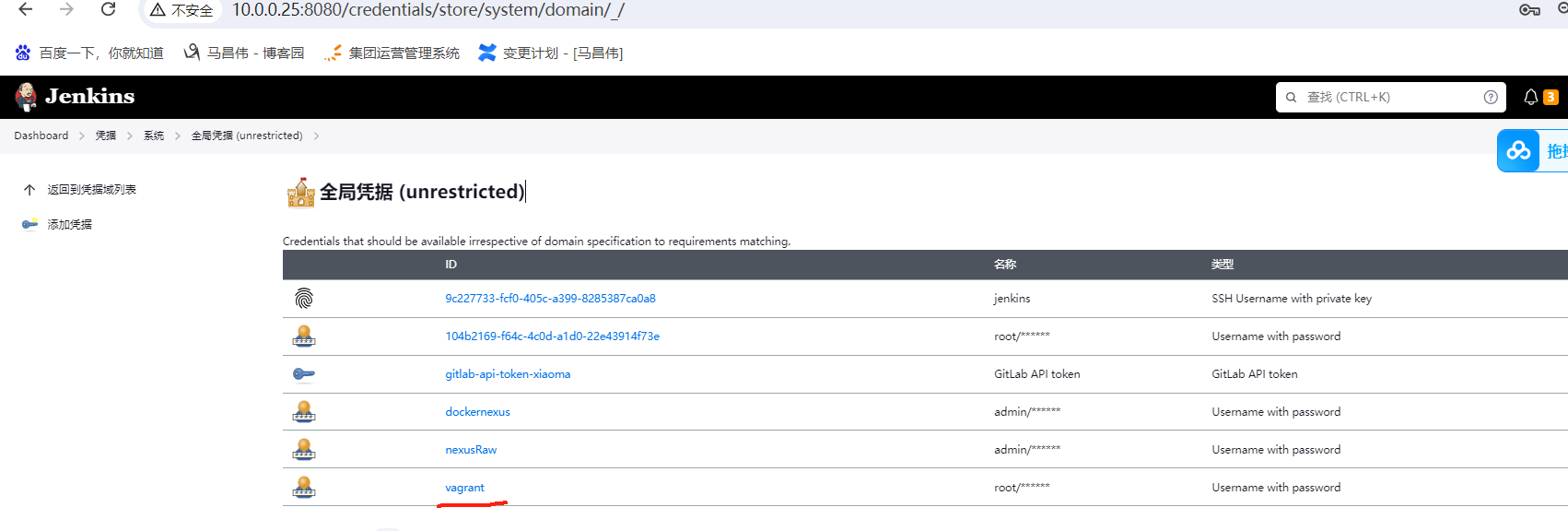

Add a credential for the root user

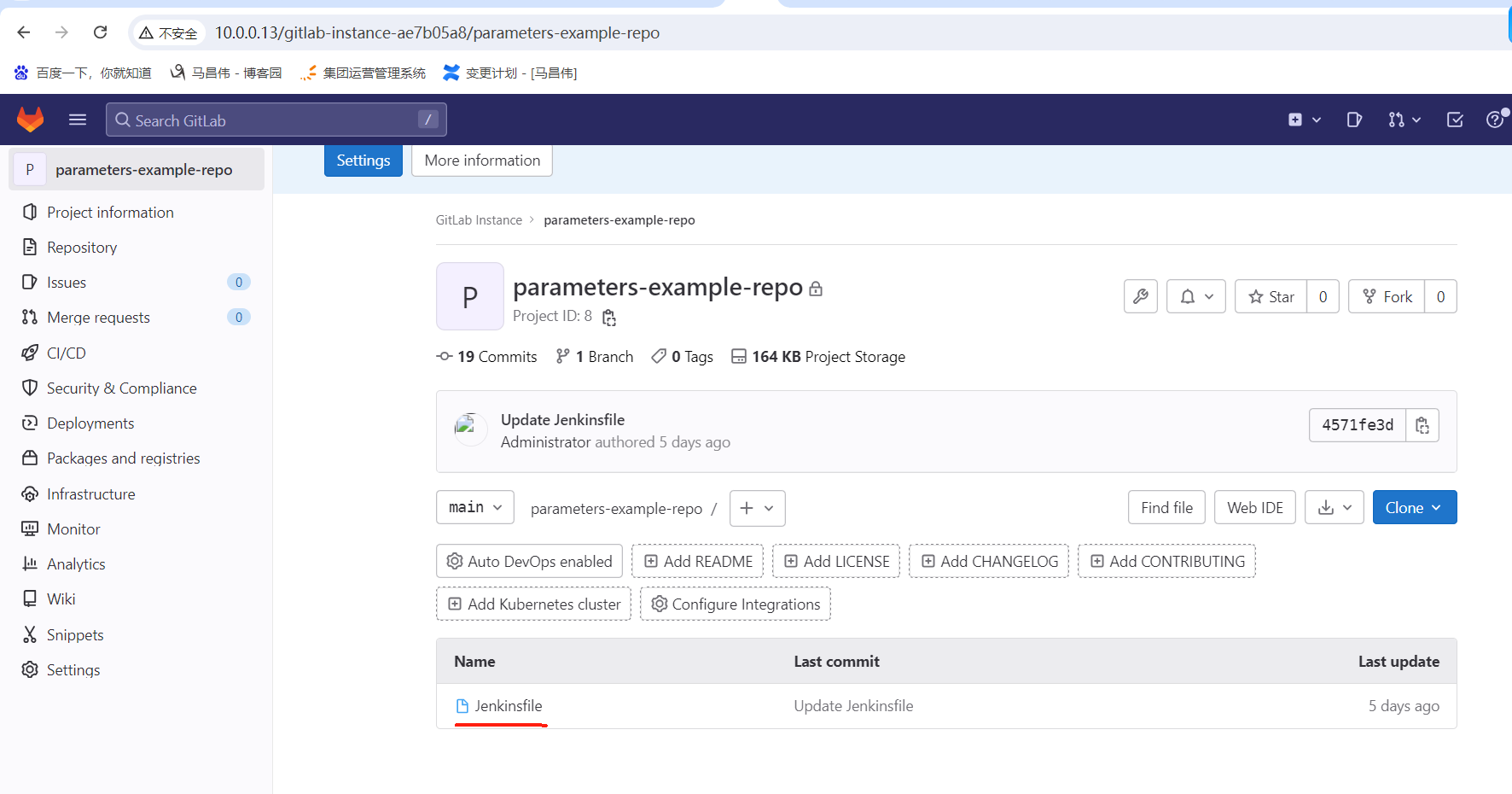

Use this project for the pipeline for now

Add two new files

hosts

    [example]
    10.0.0.13
    [example2]
    10.0.0.22

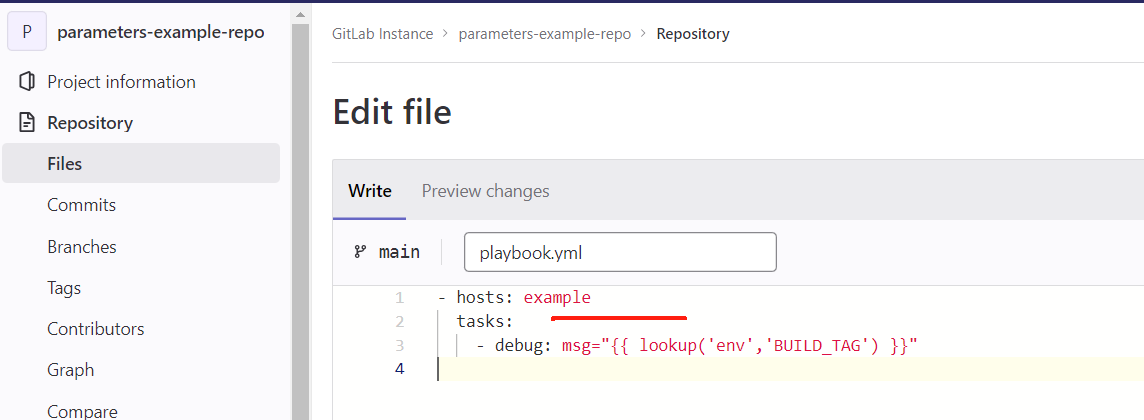

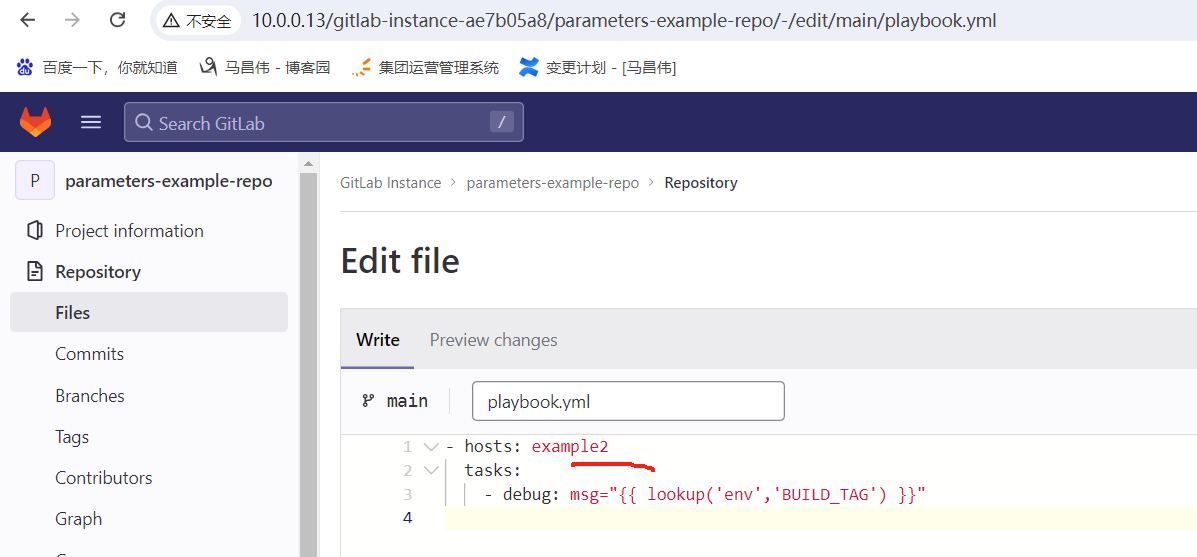

playbook.yml

    - hosts: example2
      tasks:
        - debug: msg="{{ lookup('env','BUILD_TAG') }}"

Jenkinsfile

    pipeline {
        agent any
        stages {
            stage('deploy') {
                steps {
                    ansiblePlaybook(
                        playbook: "${env.WORKSPACE}/playbook.yml",
                        inventory: "${env.WORKSPACE}/hosts",
                        credentialsId: 'vagrant'
                    )
                }
            }
        }
    }

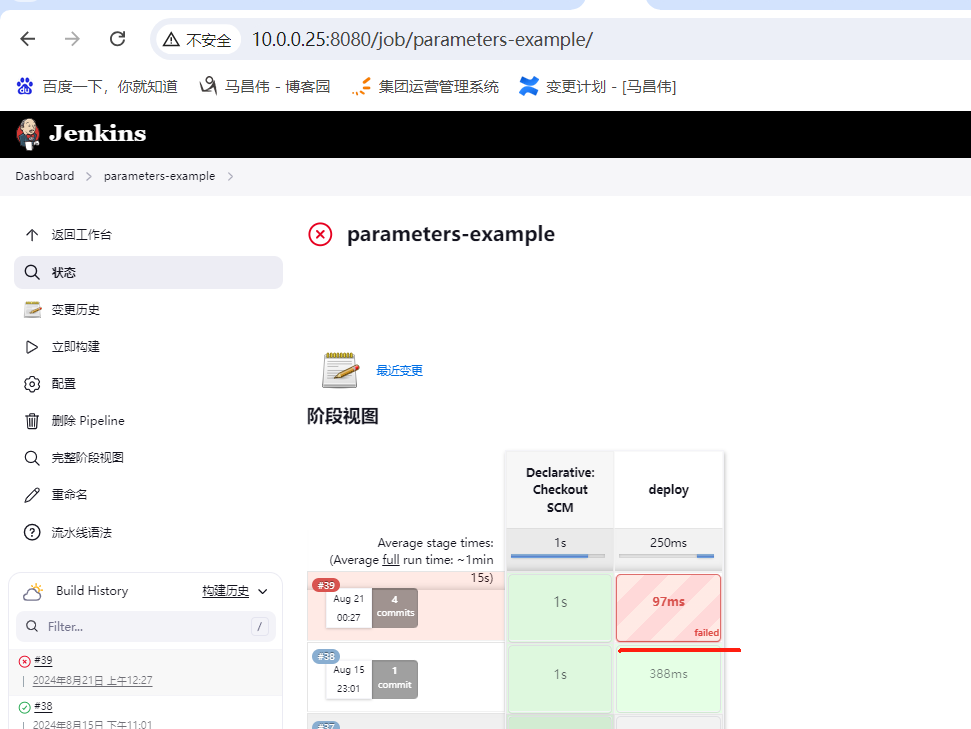

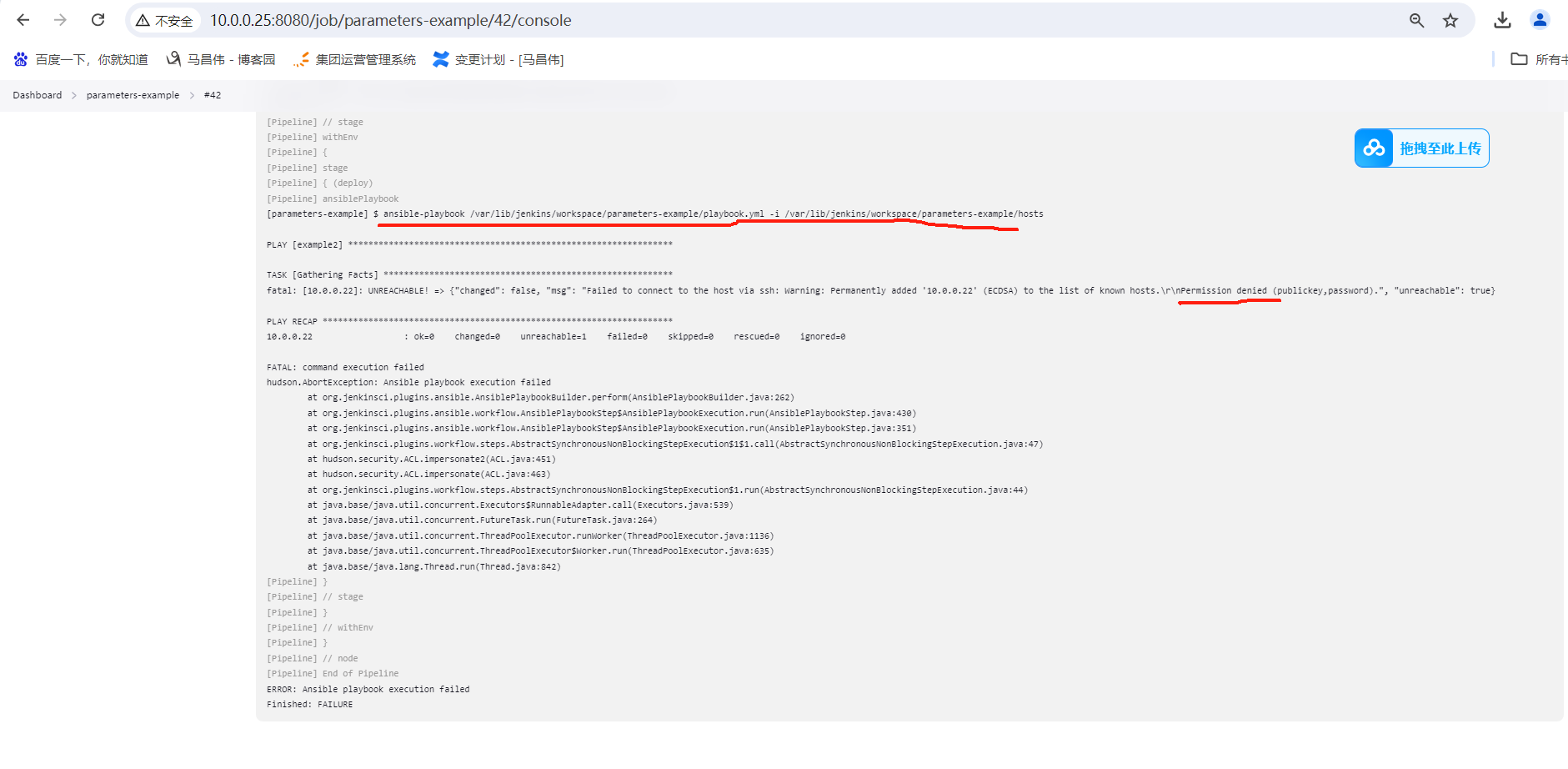

手动触发构建,失败

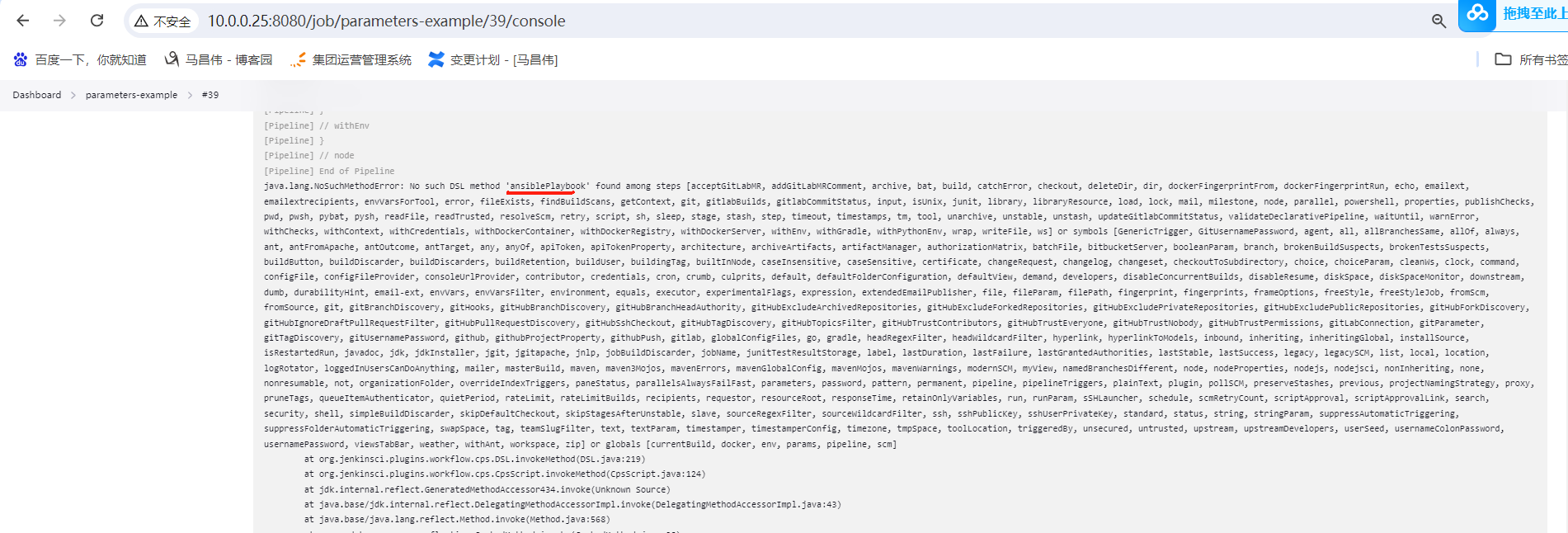

没这个步骤,步骤列表可以看到没有这个,现在有了docker了,之前安装了插件之后

java.lang.NoSuchMethodError: No such DSL method 'ansiblePlaybook' found among steps [

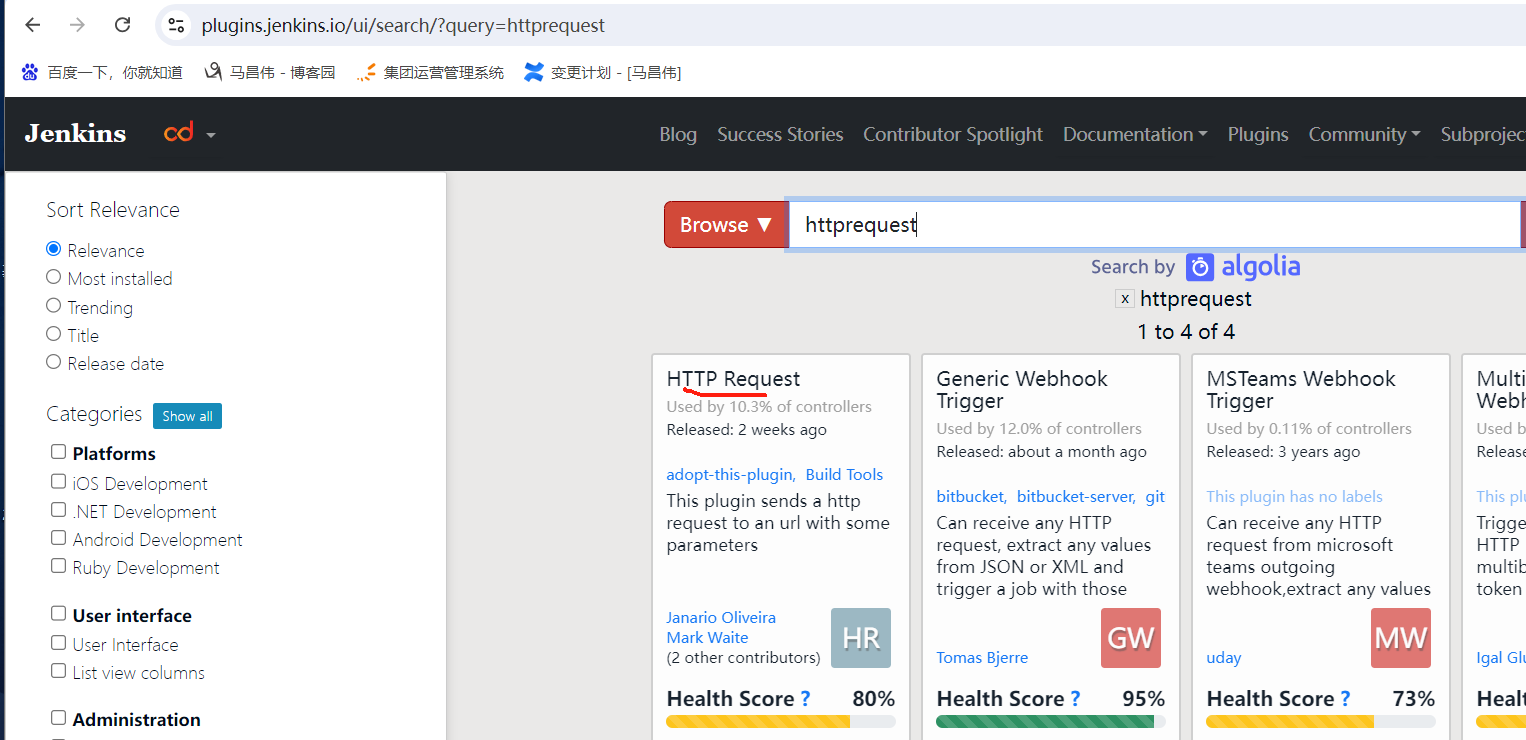

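Install the Ansible plugin: https://plugins.jenkins.io/ansible

After installing it, rebuild. The build fails with a permission error. Perhaps the vagrant credential added in Jenkins did not take effect.

Drop the 2 and target example instead, which already has passwordless key-based login set up.

The build result is the same.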

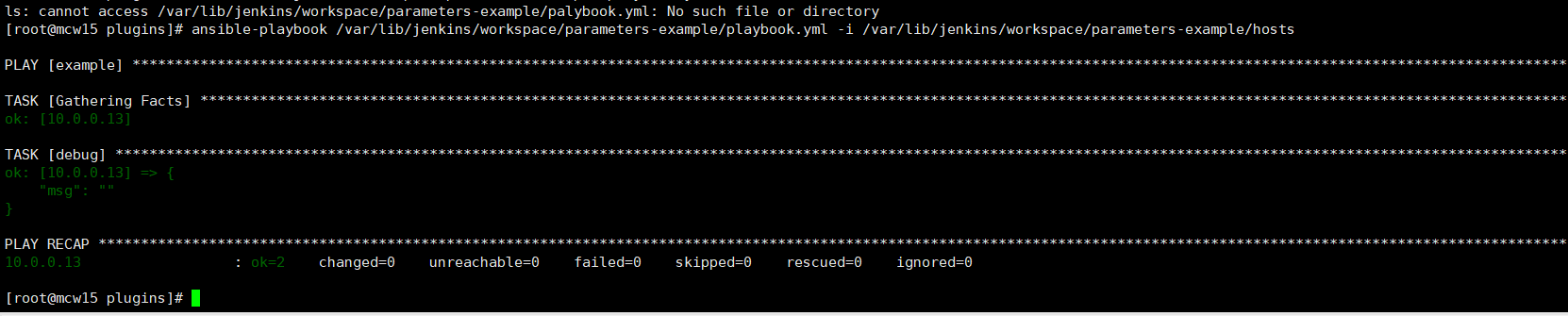

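At this point the same command runs fine when executed directly on the machine.

It turns out the credential had been filled in but never confirmed, so it was not saved.

Add it properly and trigger the build again.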

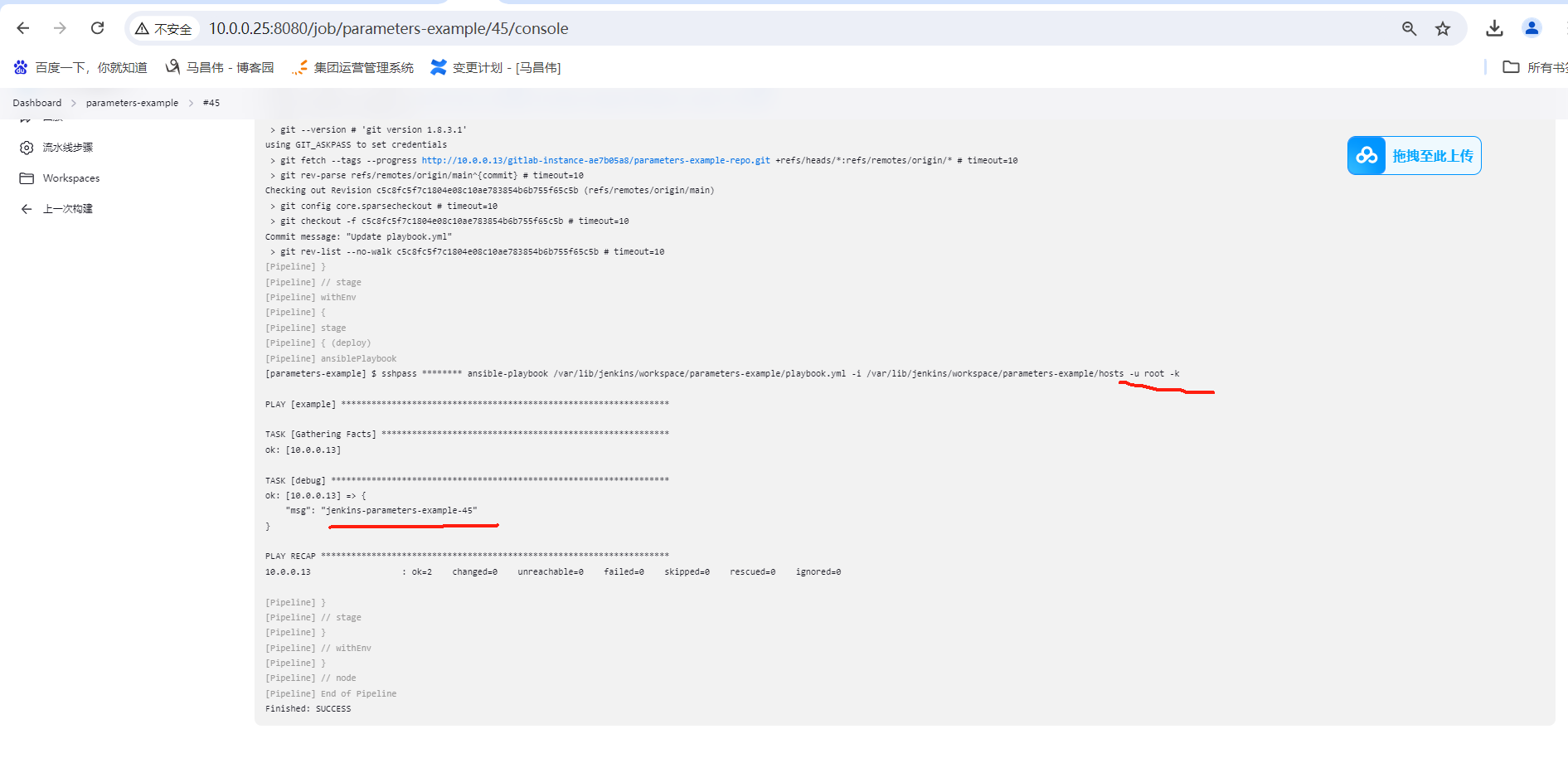

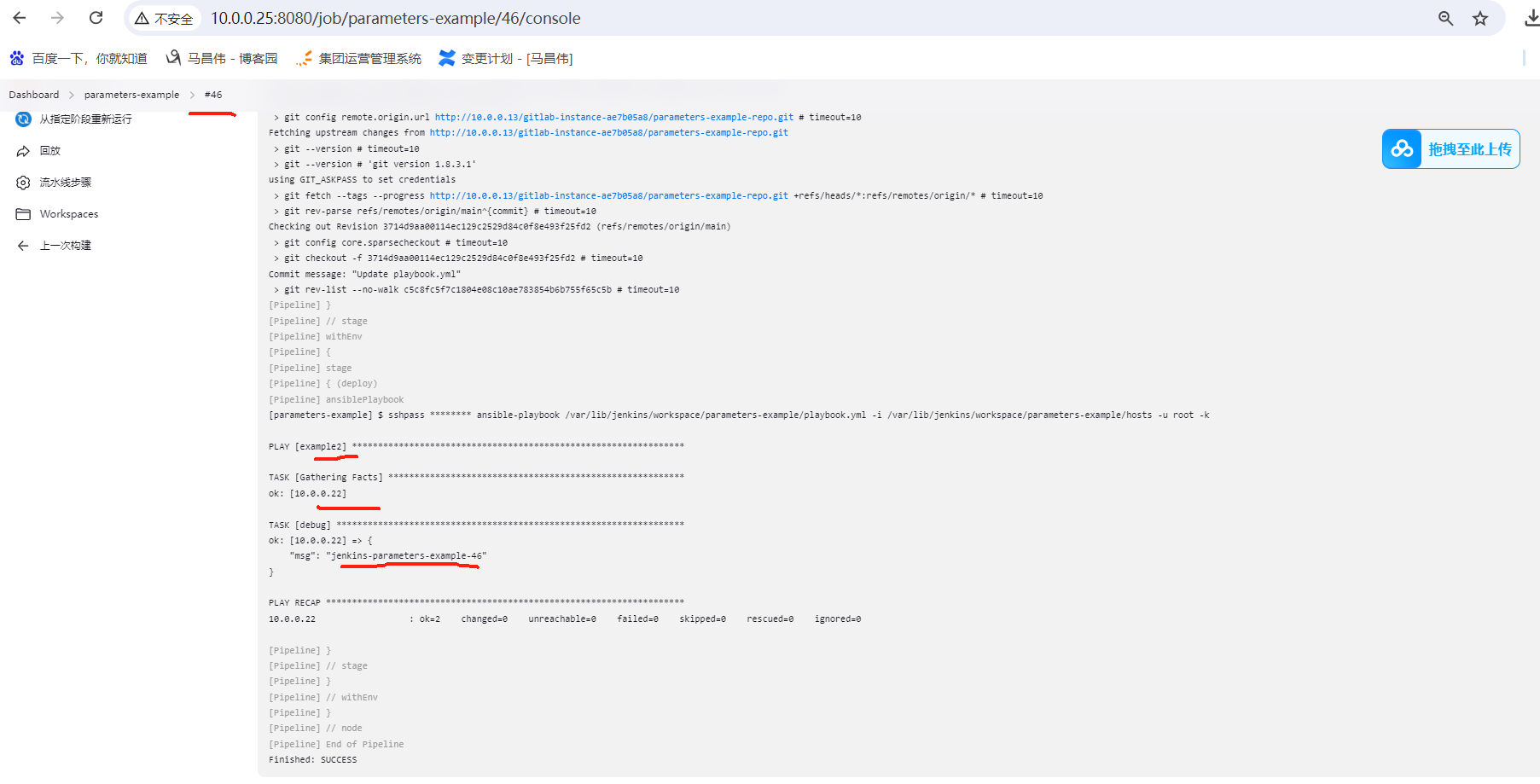

This time the build succeeds.

The playbook also read an environment variable of the running Jenkins job through lookup.

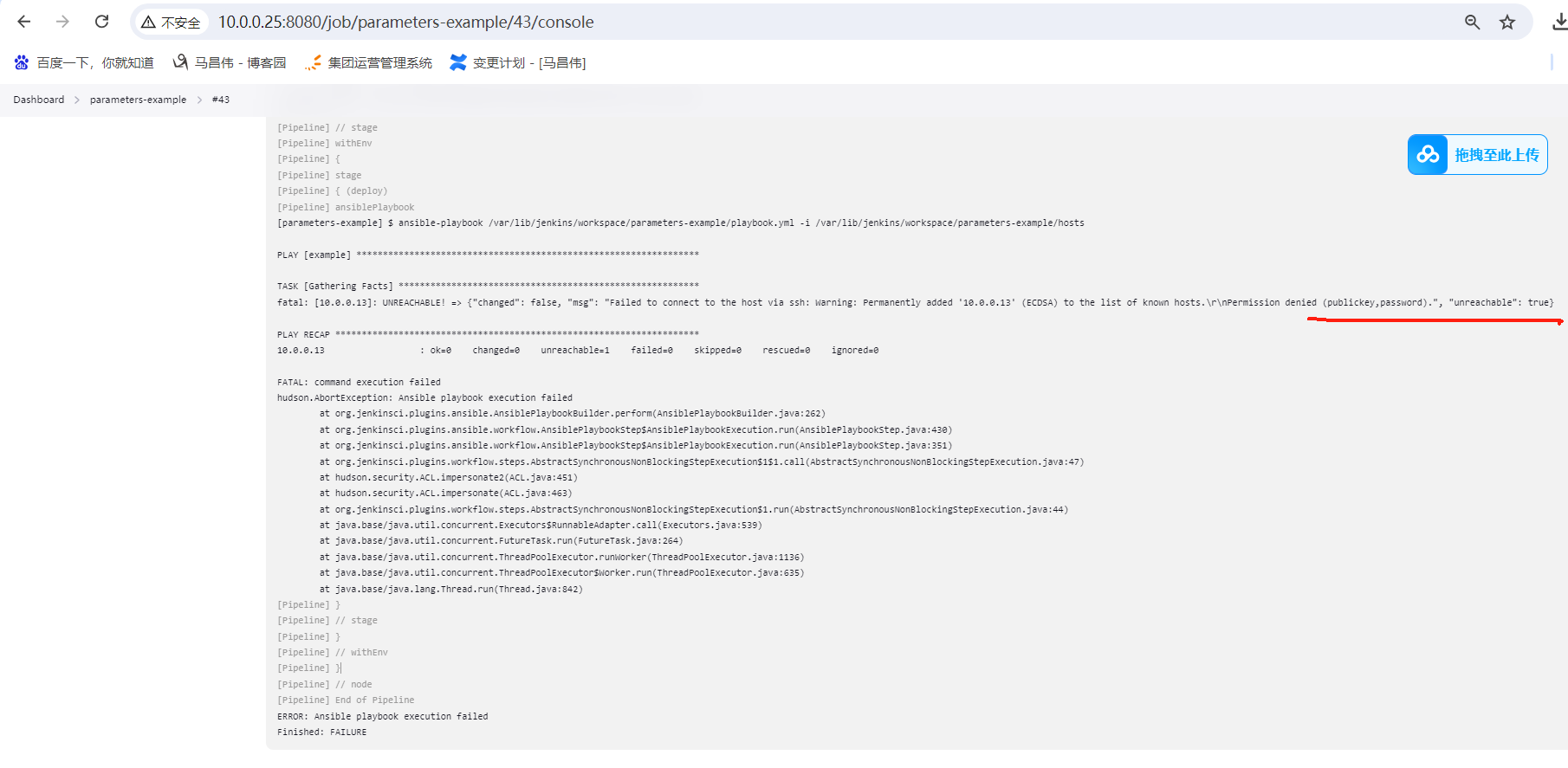

Now switch to example2. This machine has no passwordless login from the Jenkins host, so running the command by hand hits the permission error. Let's see whether it works through the Jenkins credential.

As the result shows, the playbook also runs fine against example2.

Another example

Jenkinsfile

    pipeline {
        agent any
        stages {
            stage('Syntax check ansible playbook') {
                steps {
                    ansiblePlaybook(
                        disableHostKeyChecking: true,
                        playbook: "${env.WORKSPACE}/playbook.yml",
                        inventory: "${env.WORKSPACE}/hosts",
                        credentialsId: 'vagrant',
                        extras: '--syntax-check'
                    )
                }
            }
            stage('deploy') {
                steps {
                    ansiblePlaybook(
                        disableHostKeyChecking: true,
                        playbook: "${env.WORKSPACE}/playbook.yml",
                        inventory: "${env.WORKSPACE}/hosts",
                        credentialsId: 'vagrant',
                        // skippedTags: 'debugtag',
                        forks: 2,
                        limit: 'example,example2',
                        tags: 'debugtag,testtag',
                        extraVars: [
                            login: 'mylogin',
                            secret_key: [value: 'g4dfKWENpeF6pY05', hidden: true]
                        ]
                        // startAtTask: 'task4'
                    )
                }
            }
        }
    }

hosts

    [example]
    10.0.0.13
    [example2]
    10.0.0.22

playbook.yml

    - hosts: example2
      tasks:
        - debug: msg="{{ lookup('env','BUILD_TAG') }}"

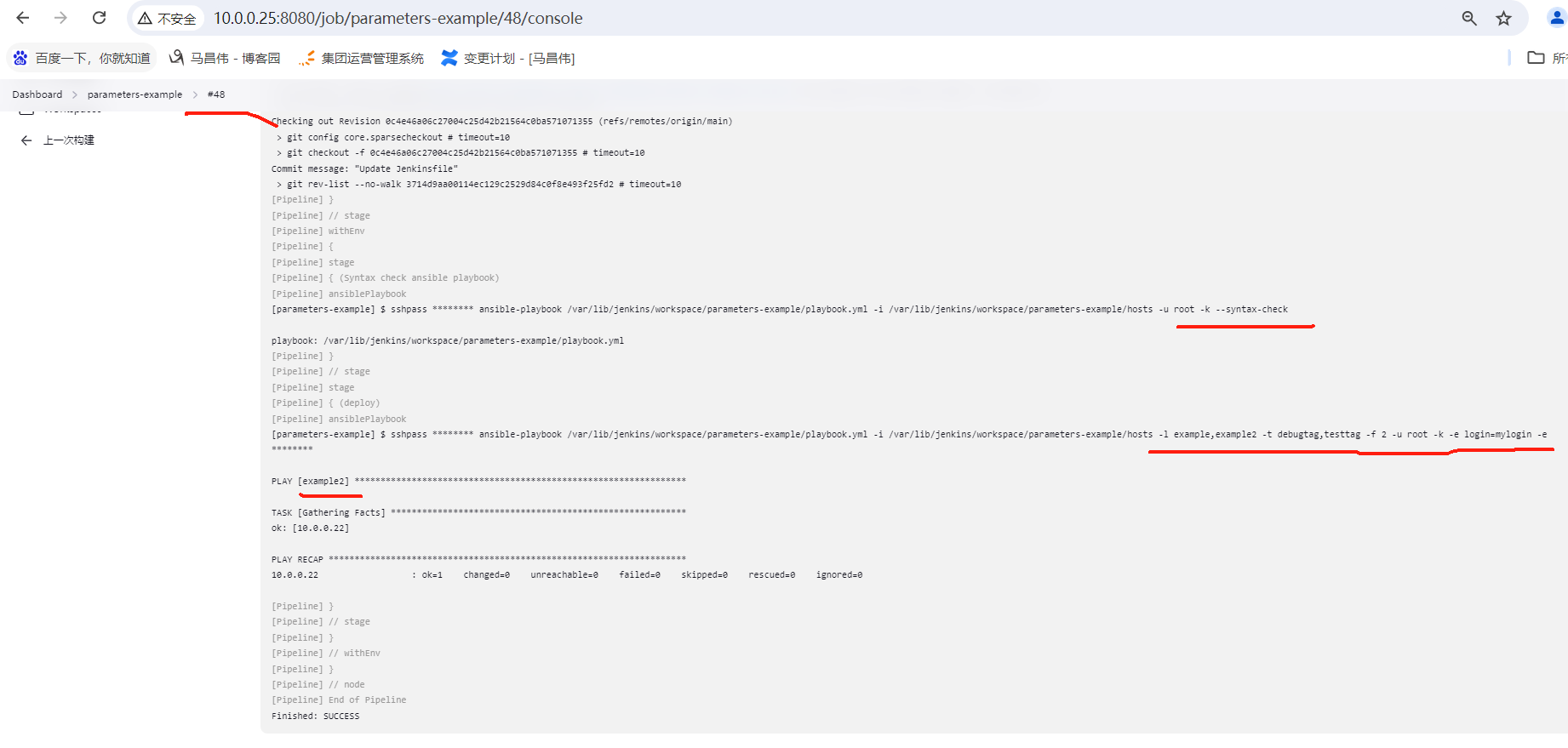

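Trigger a build manually; the job succeeds.

Only example2 was executed, not example. That is expected, because the playbook hard-codes example2 as its target hosts.

The ansibleVault step

Different Ansible login methods for different hosts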

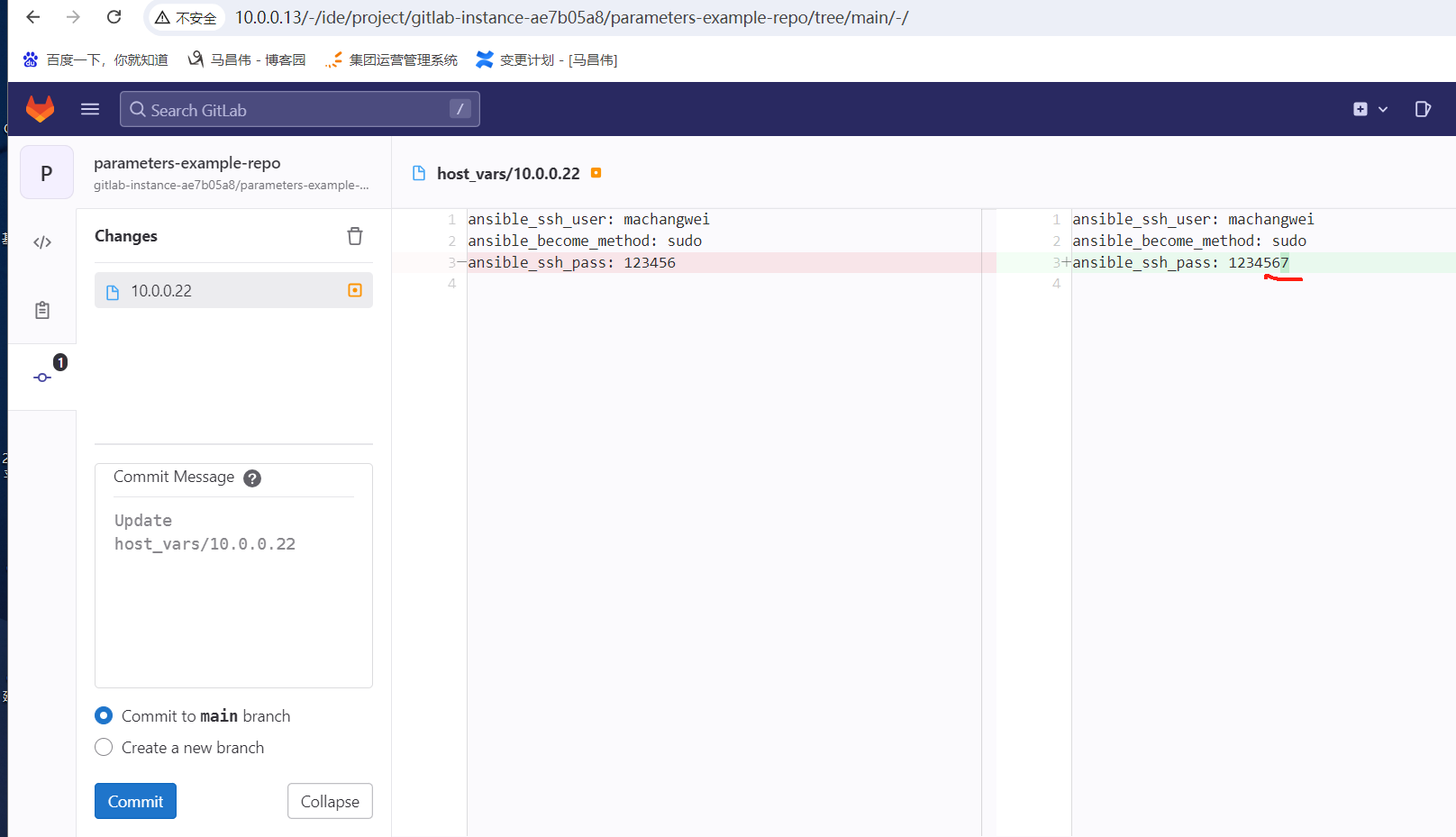

Make this machine log in as the machangwei user with a password, using a host_vars file named after the host:

10.0.0.22

    ansible_ssh_user: machangwei
    ansible_become_method: sudo
    ansible_ssh_pass: 123456

hosts

    [example]
    10.0.0.13
    [example2]
    10.0.0.22

playbook.yml

    - hosts: example2
      tasks:
        - debug: msg="{{ lookup('env','BUILD_TAG') }}"

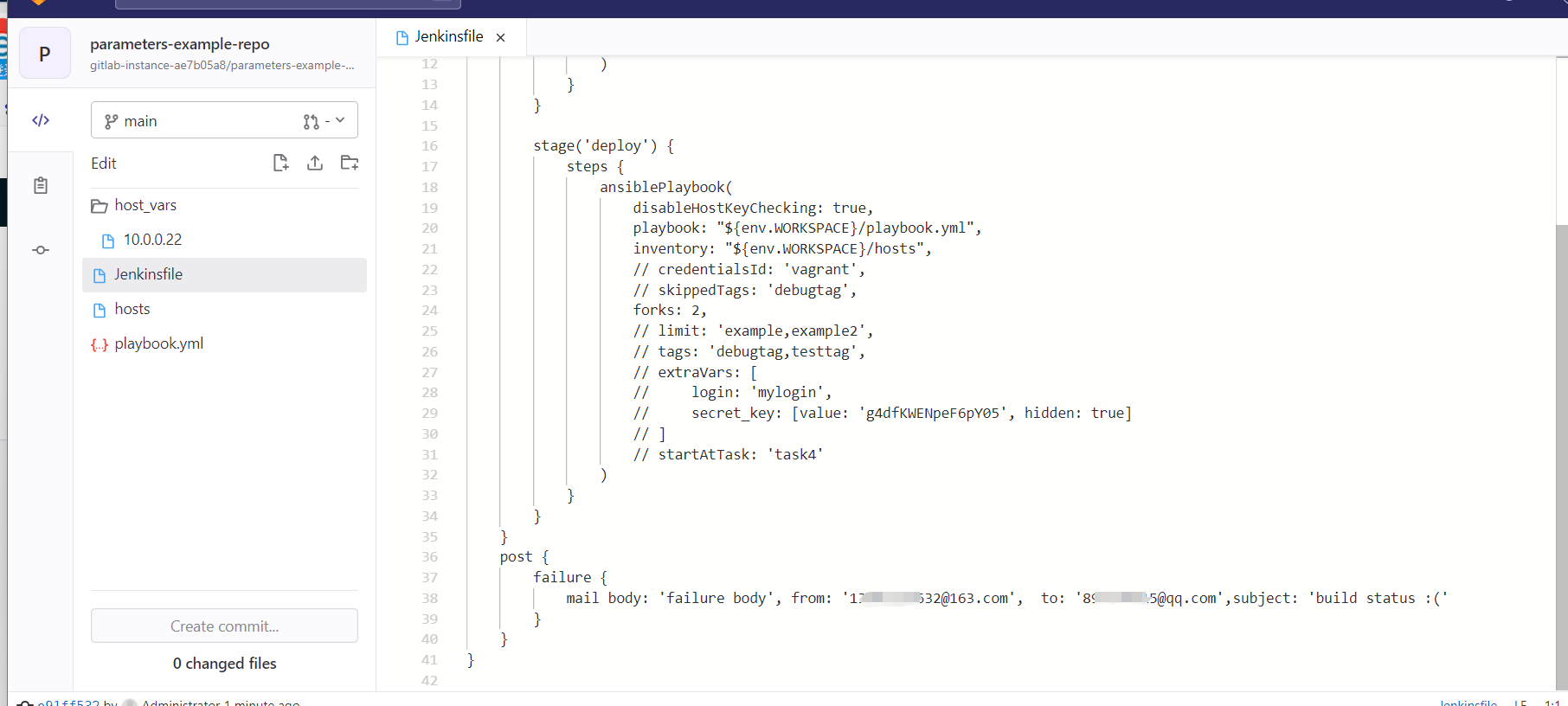

Jenkinsfile

The syntax check uses the Jenkins credential, but the deploy stage uses the machangwei user from host_vars. If that password is wrong, the deploy stage reports an error.

    pipeline {
        agent any
        stages {
            stage('Syntax check ansible playbook') {
                steps {
                    ansiblePlaybook(
                        disableHostKeyChecking: true,
                        playbook: "${env.WORKSPACE}/playbook.yml",
                        inventory: "${env.WORKSPACE}/hosts",
                        credentialsId: 'vagrant',
                        extras: '--syntax-check'
                    )
                }
            }
            stage('deploy') {
                steps {
                    ansiblePlaybook(
                        disableHostKeyChecking: true,
                        playbook: "${env.WORKSPACE}/playbook.yml",
                        inventory: "${env.WORKSPACE}/hosts",
                        // credentialsId: 'vagrant',
                        // skippedTags: 'debugtag',
                        forks: 2
                        // limit: 'example,example2',
                        // tags: 'debugtag,testtag',
                        // extraVars: [
                        //     login: 'mylogin',
                        //     secret_key: [value: 'g4dfKWENpeF6pY05', hidden: true]
                        // ]
                        // startAtTask: 'task4'
                    )
                }
            }
        }
    }

Click build. The syntax-check stage uses the Jenkins credential, that is, root. The deploy stage specifies no credential, so it ran successfully as the machangwei user from host_vars, through sudo. Both machines have a machangwei system user with sudo privileges.

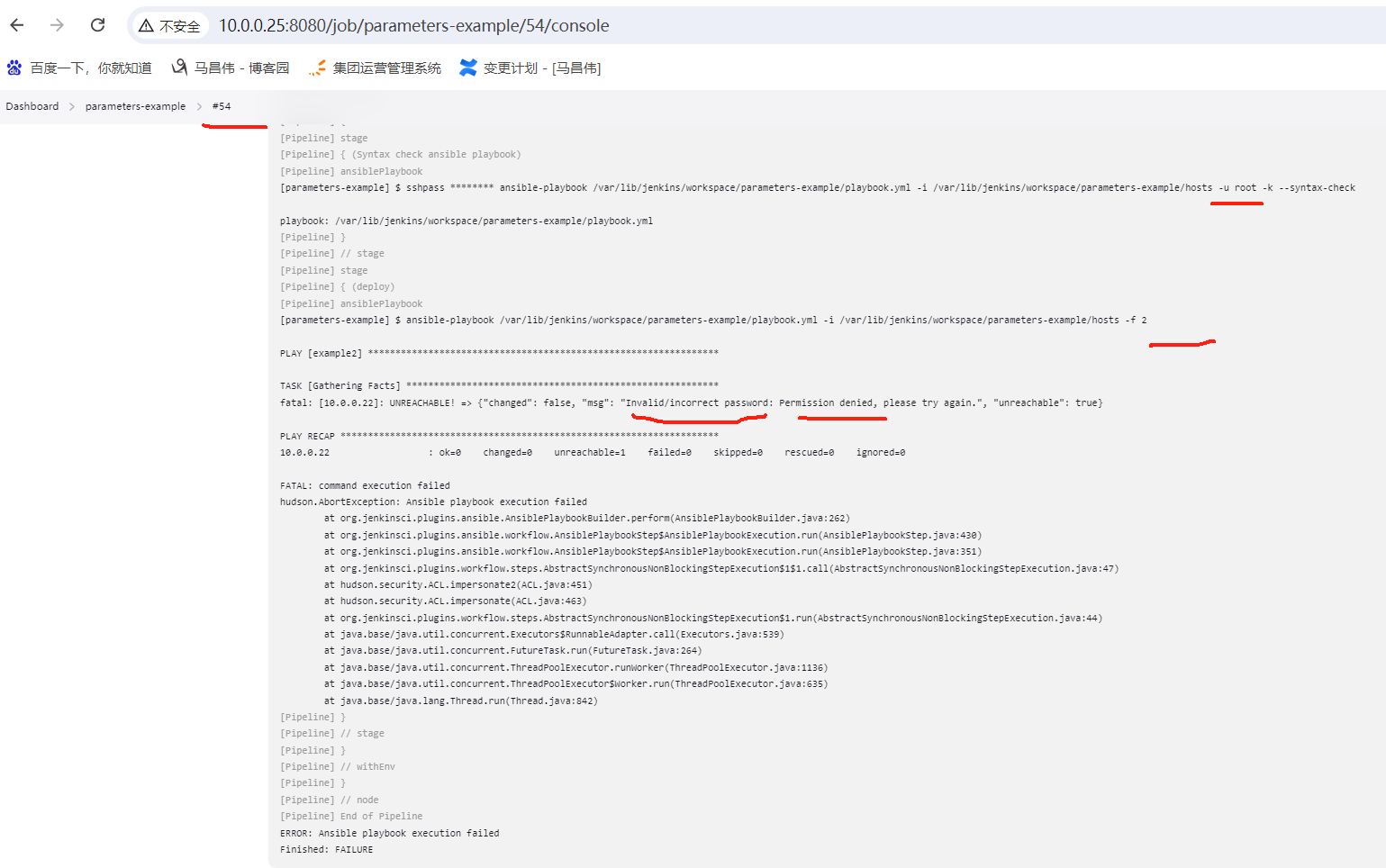

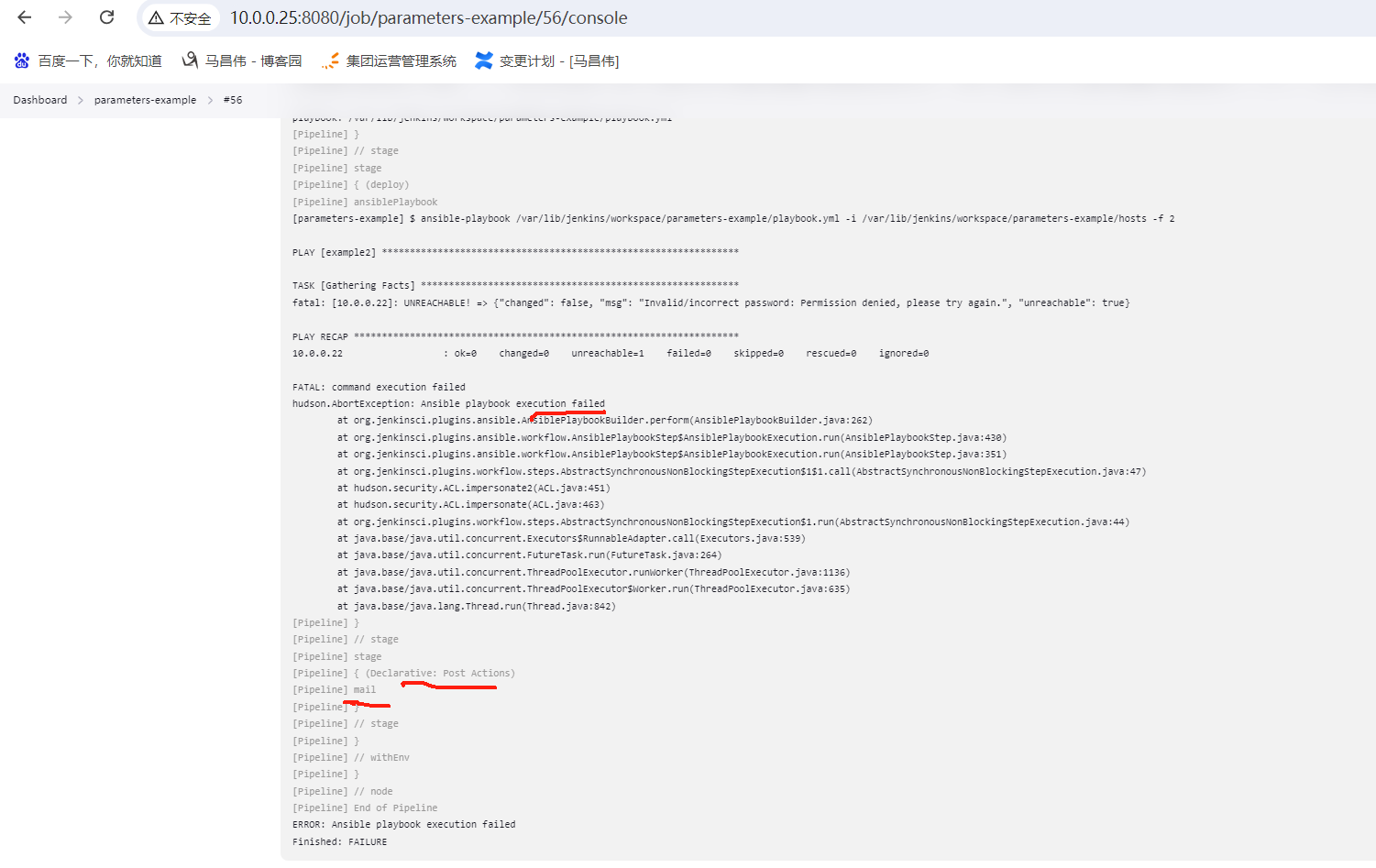

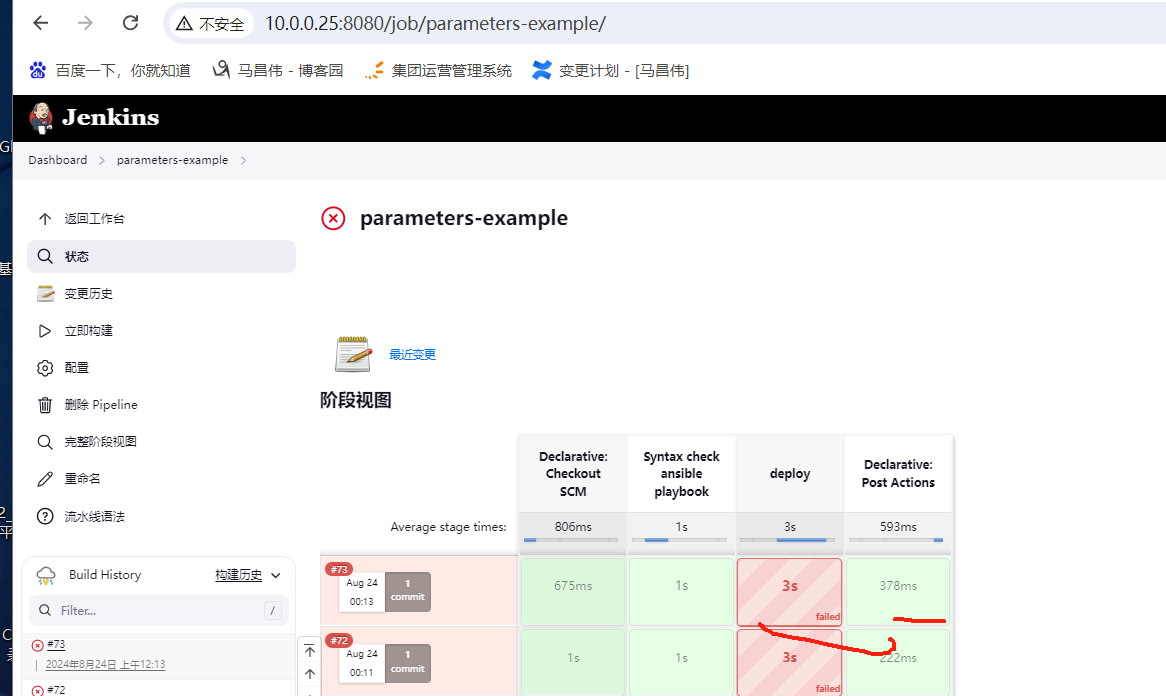

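To verify that the machangwei user from host_vars is really the one being used, change the password there from 123456 to 1234567. If build #54 then fails with a permission error, the behaviour is confirmed. In other words, different hosts can use different login methods.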

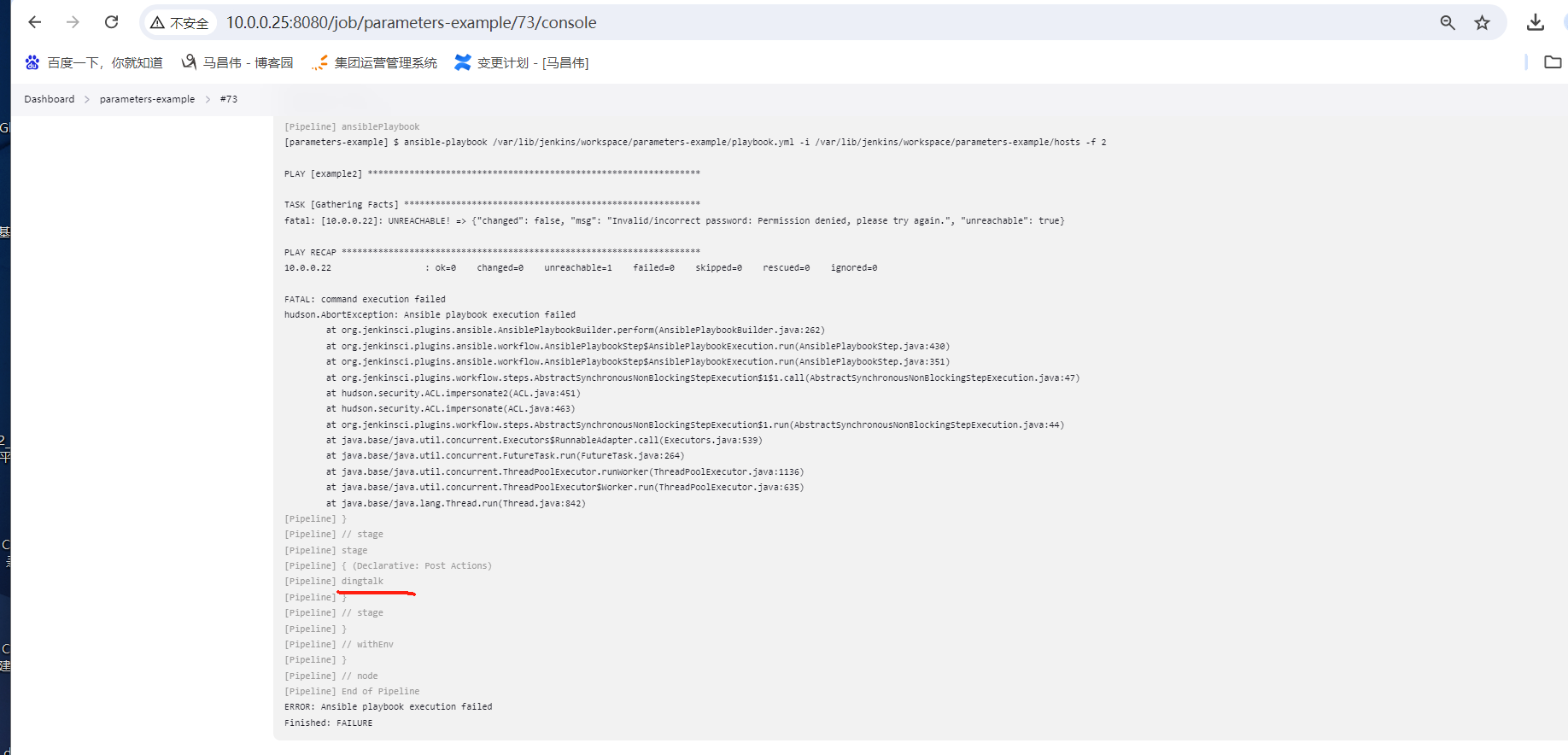

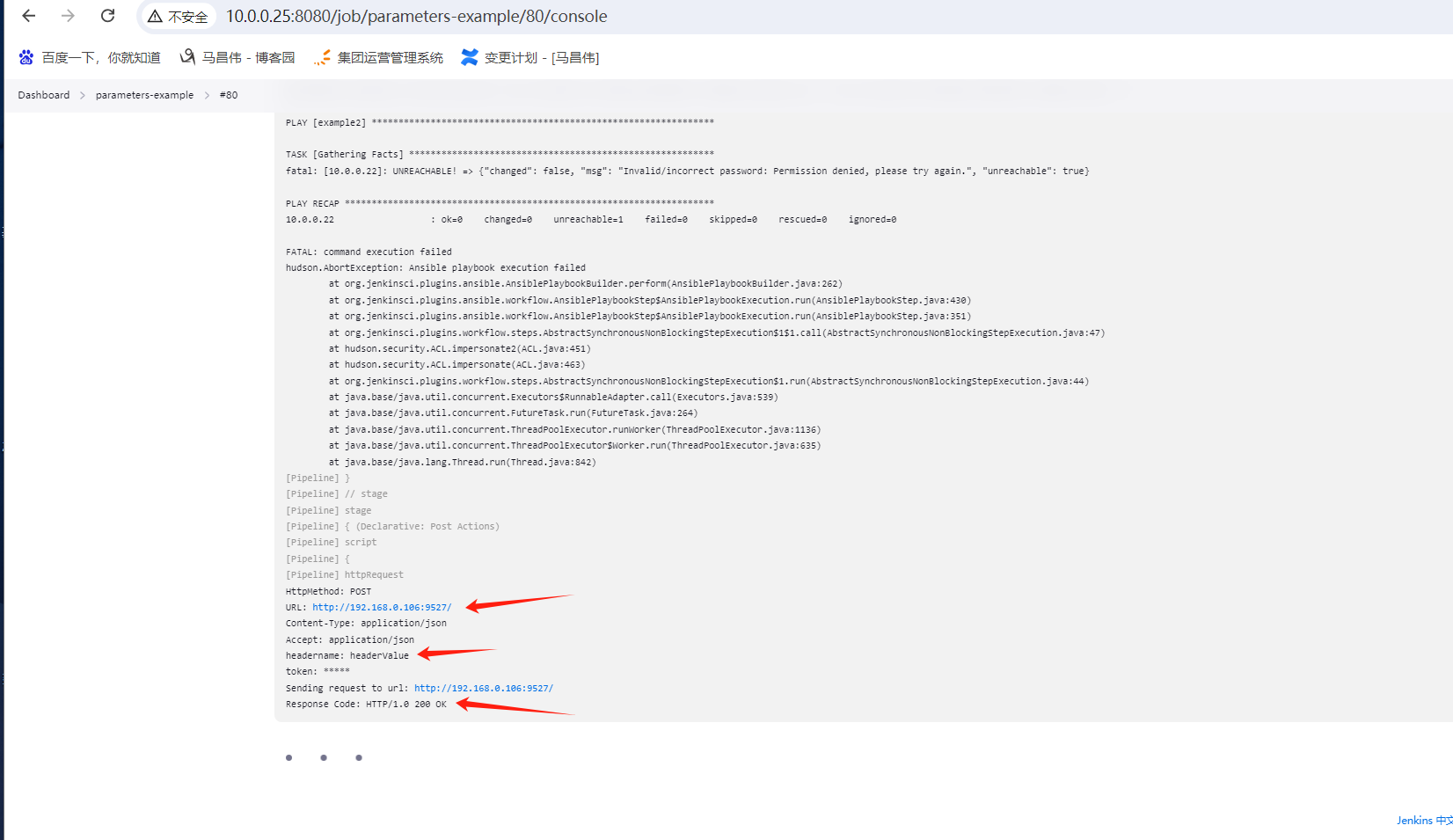

The result is as expected: an invalid-password error, permission denied.

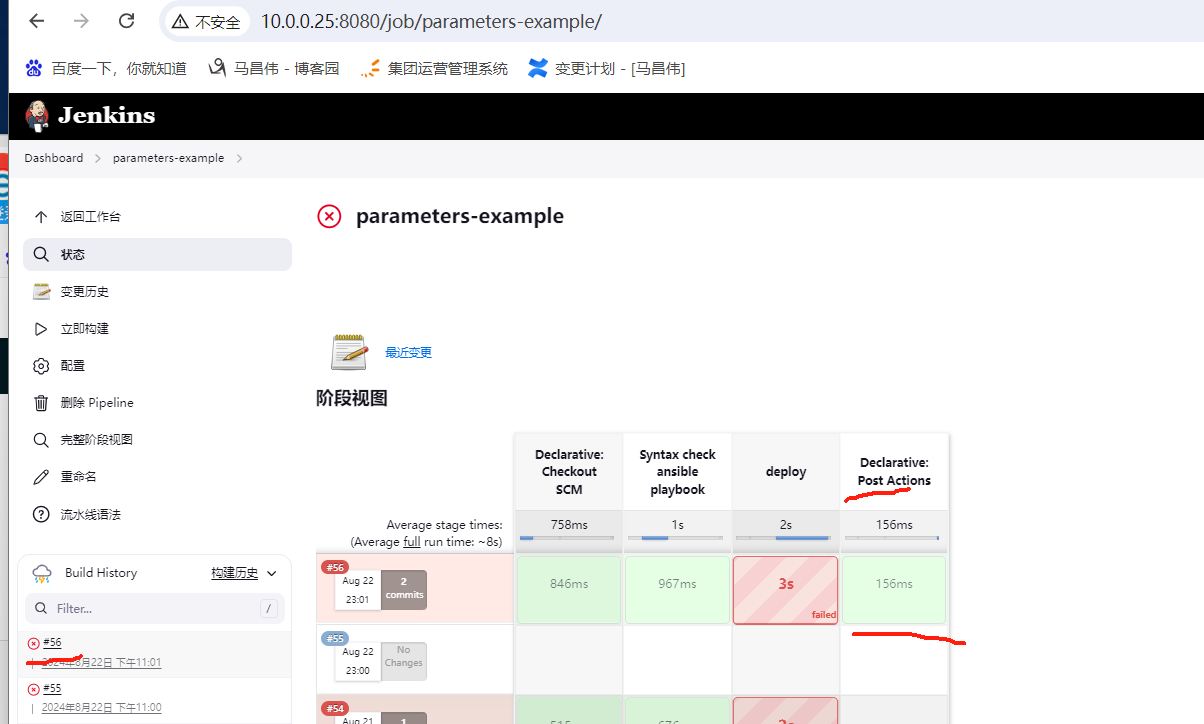

通知

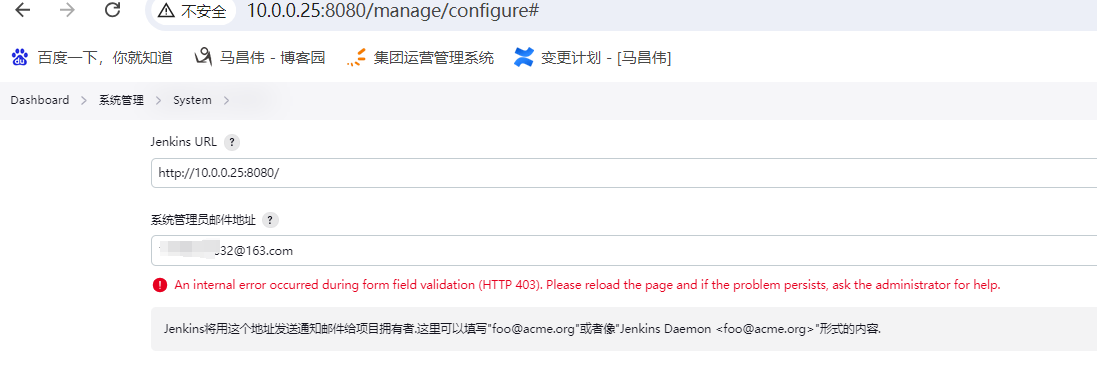

邮箱配置和邮件通知

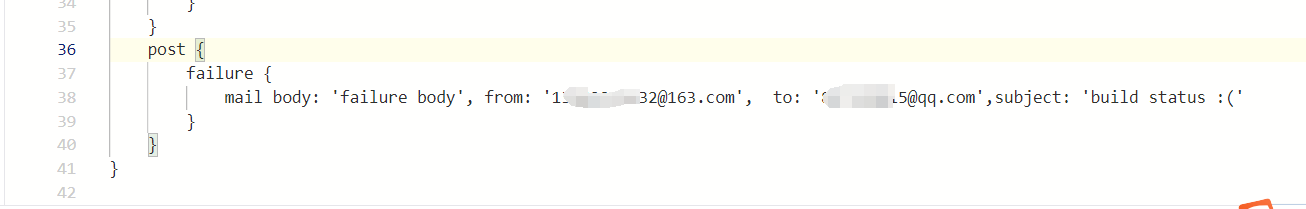

pipeline { agent any stages { stage('Syntax check ansible playbook') { steps { ansiblePlaybook( disableHostKeyChecking: true, playbook: "${env.WORKSPACE}/playbook.yml", inventory: "${env.WORKSPACE}/hosts", credentialsId: 'vagrant', extras: '--syntax-check' ) } } stage('deploy') { steps { ansiblePlaybook( disableHostKeyChecking: true, playbook: "${env.WORKSPACE}/playbook.yml", inventory: "${env.WORKSPACE}/hosts", // credentialsId: 'vagrant', // skippedTags: 'debugtag', forks: 2, // limit: 'example,example2', // tags: 'debugtag,testtag', // extraVars: [ // login: 'mylogin', // secret_key: [value: 'g4dfKWENpeF6pY05', hidden: true] // ] // startAtTask: 'task4' ) } } } post { failure { mail body: 'failure body', from: '1xxx32@163.com', to: '8xx15@qq.com',subject: 'build status :(' } } }

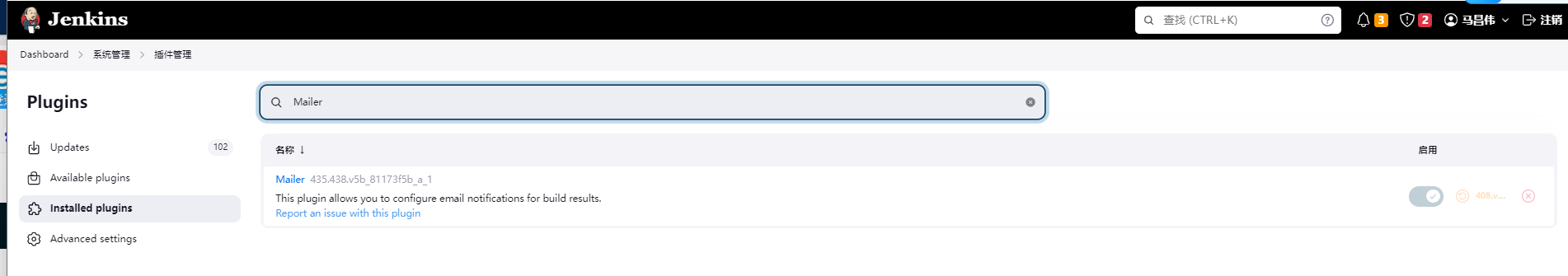

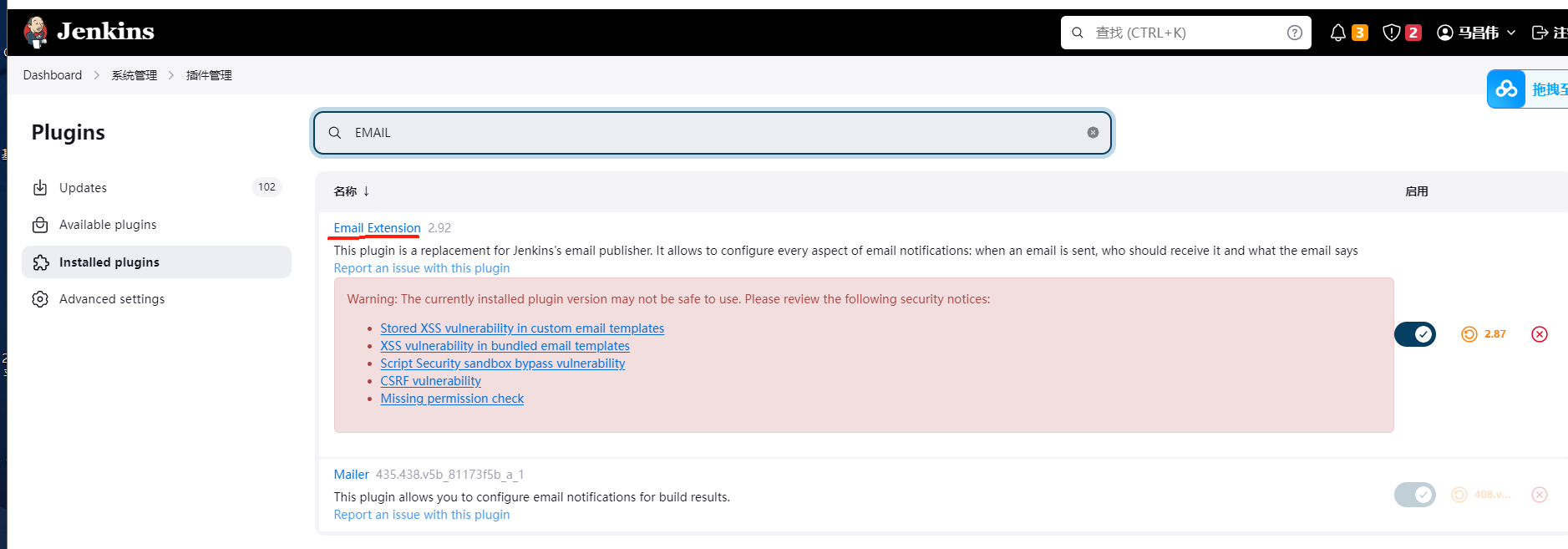

报错,没有安装邮件的插件Mailer Plugin吗?

查看是安装了的

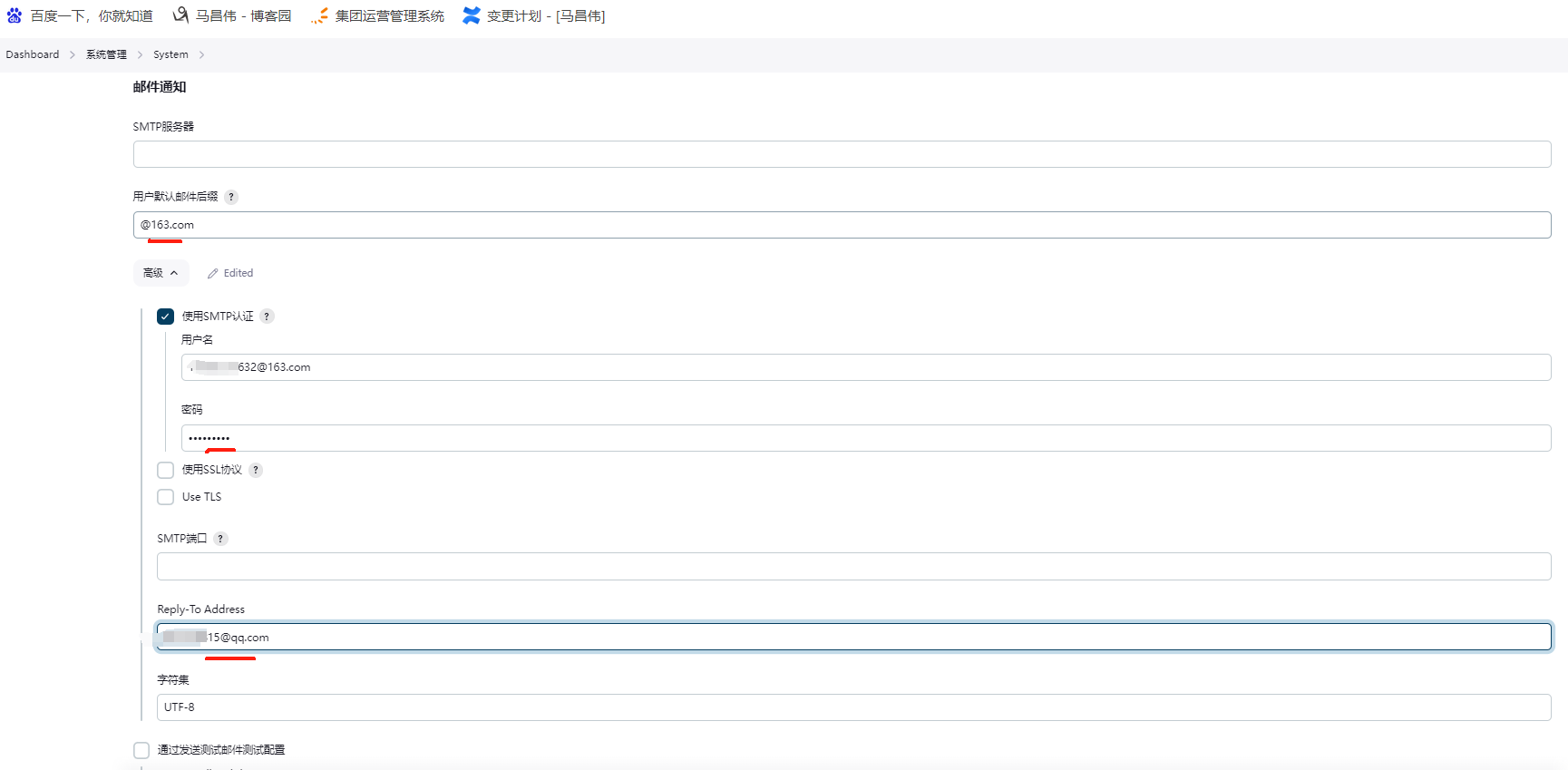

这次没有报错了,按照上面的例子,

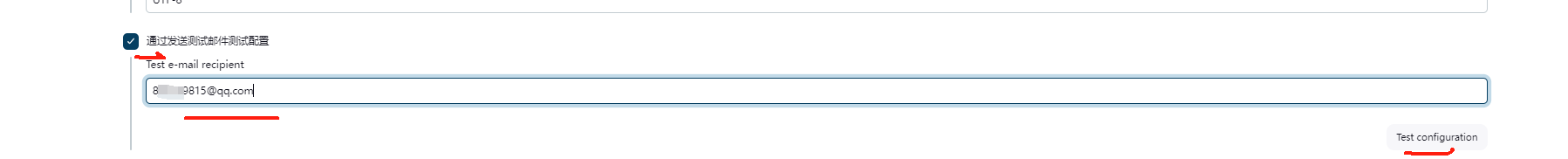

先测试一下,发给下面的测试接收邮箱

提示发送成功,但是却没看到发送以及接收邮件,先不管了,先保存一下

修改添加邮件发送

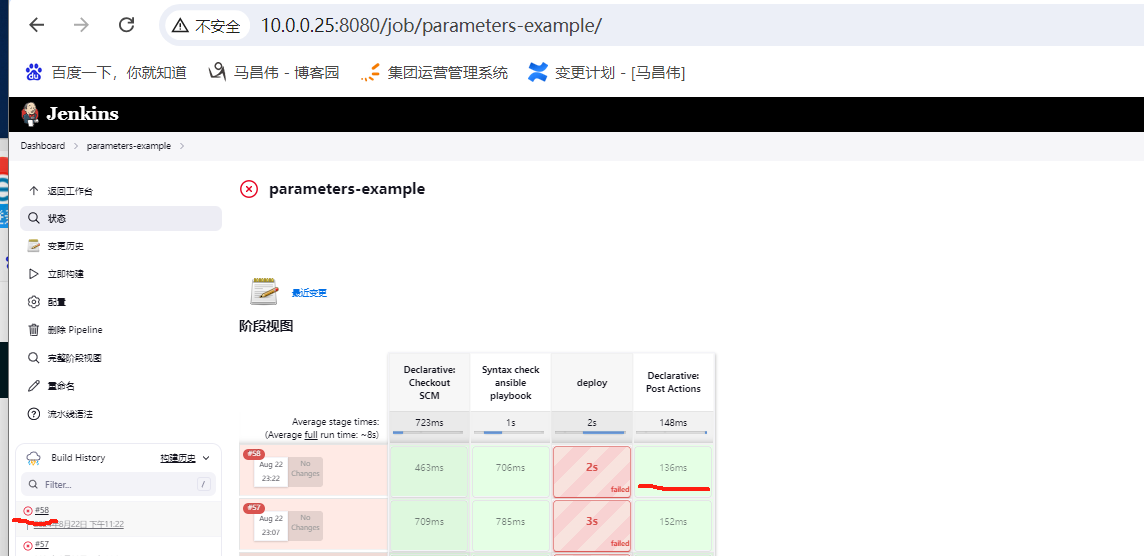

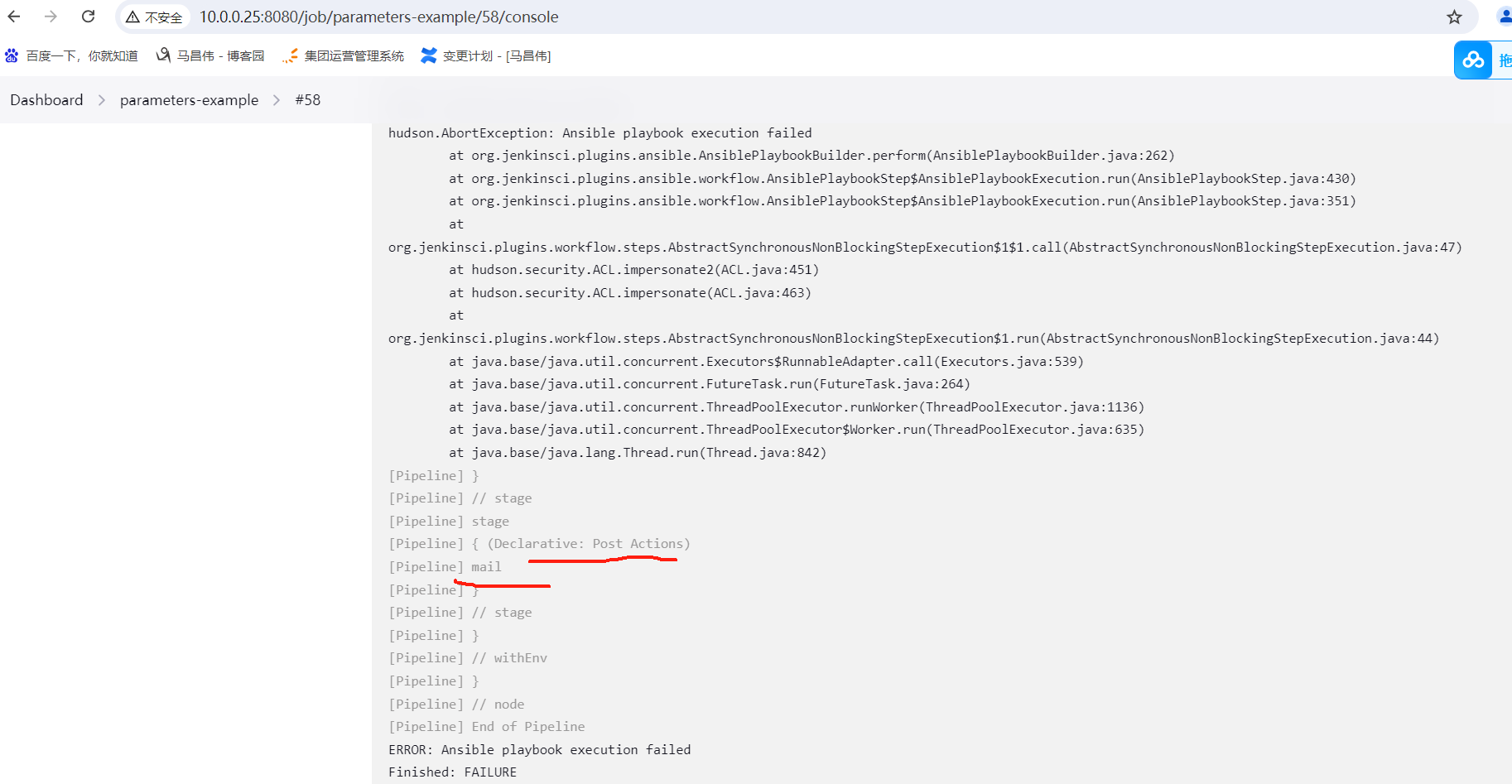

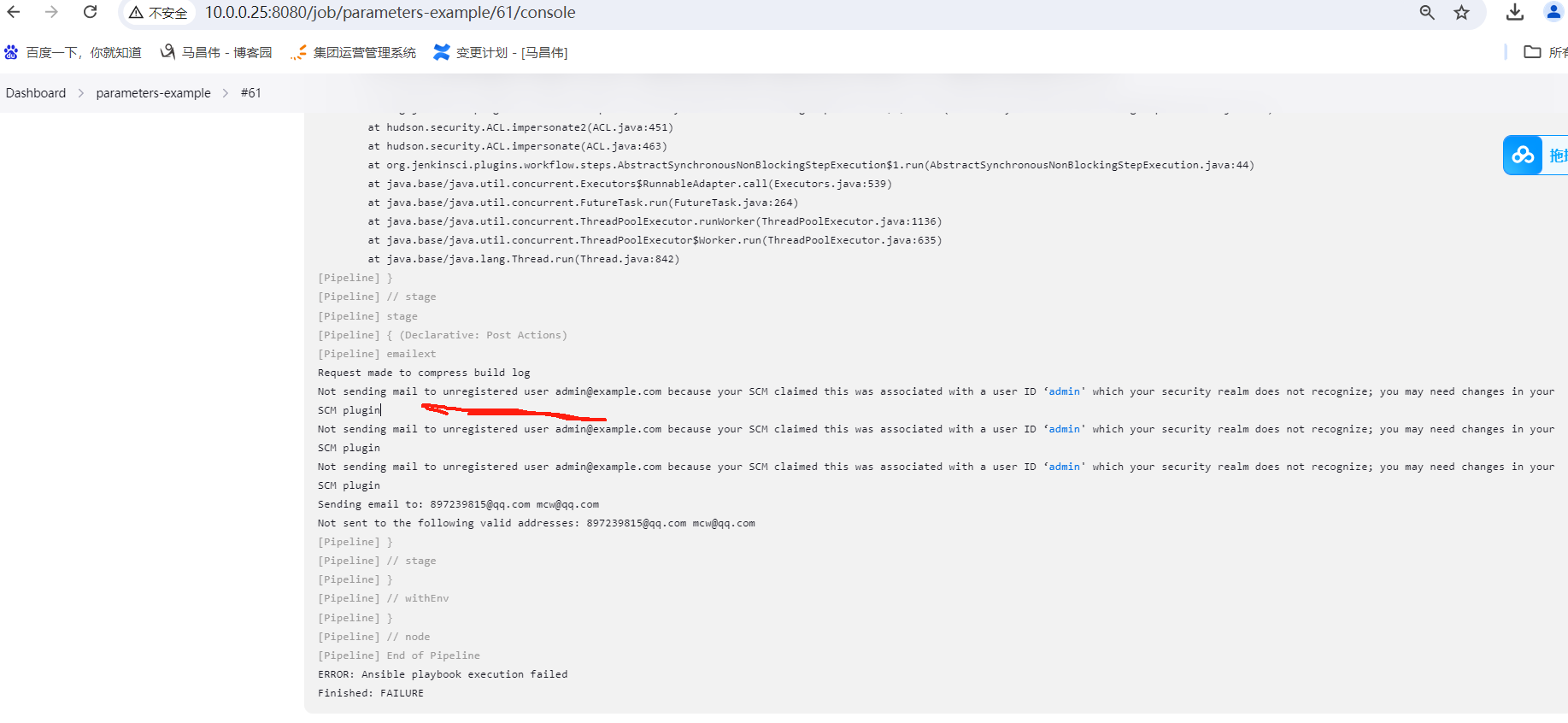

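Although the deploy stage failed, the post section that sends the mail is shown as successful.

The log shows that post was executed, but no mail was sent or received.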

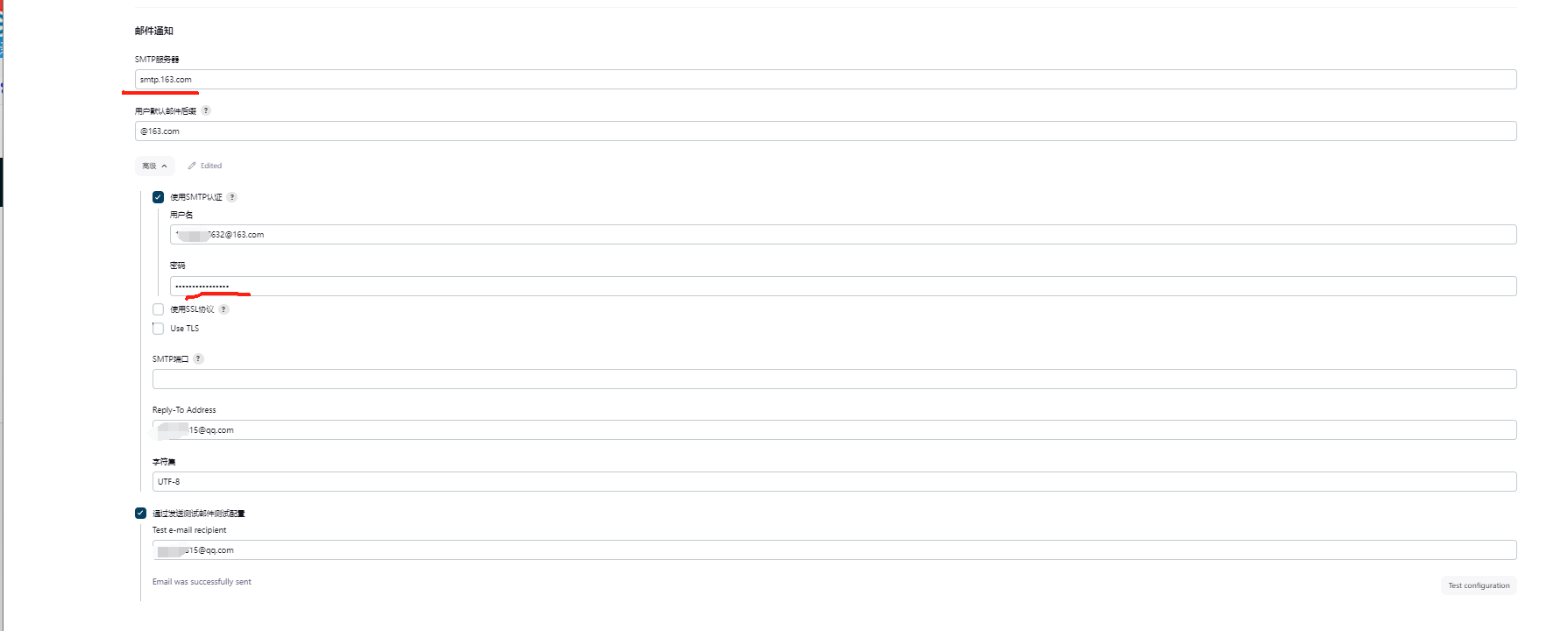

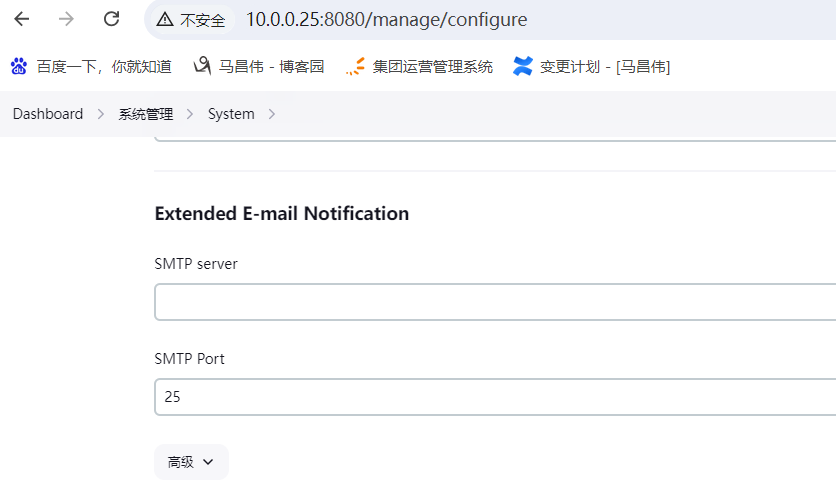

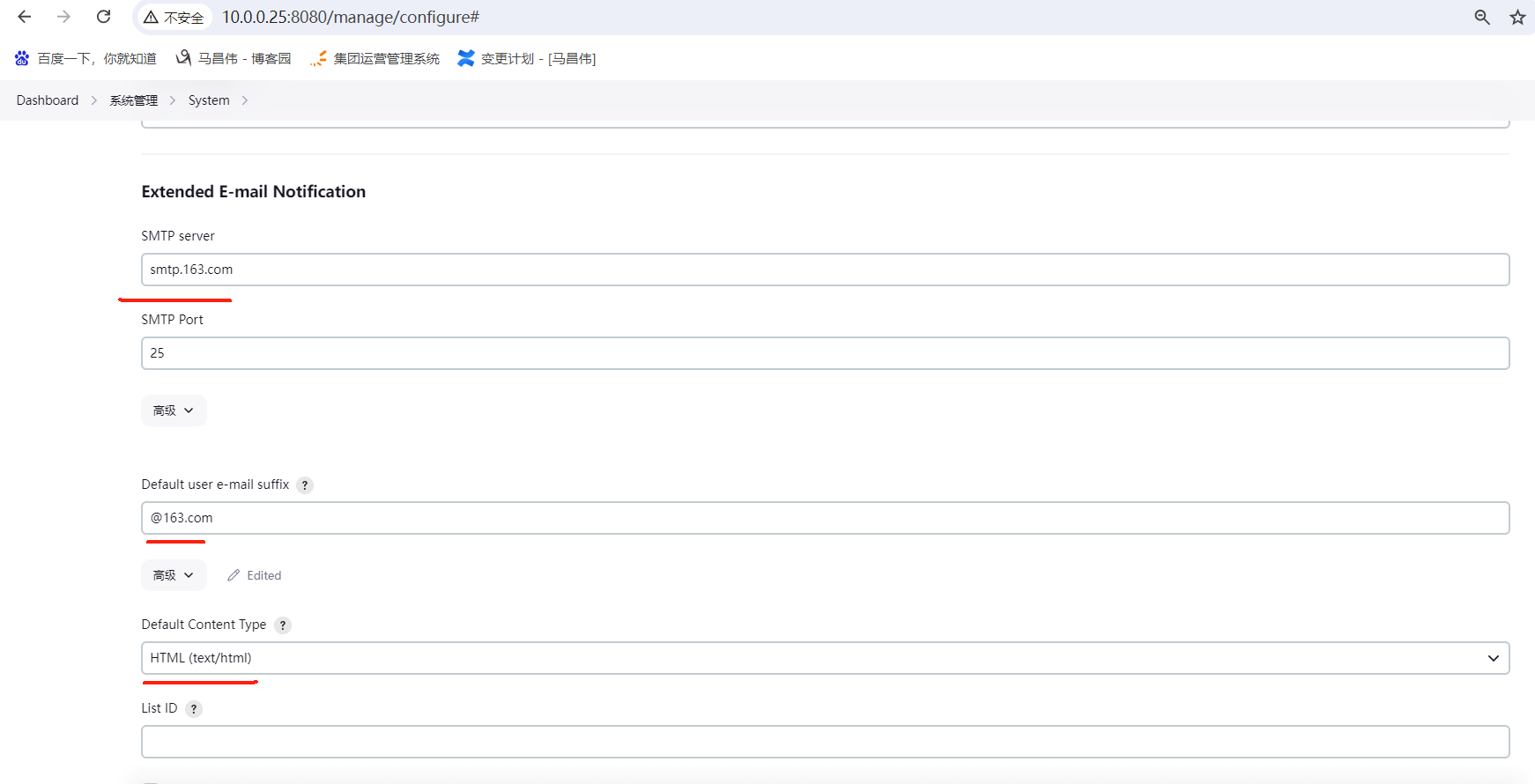

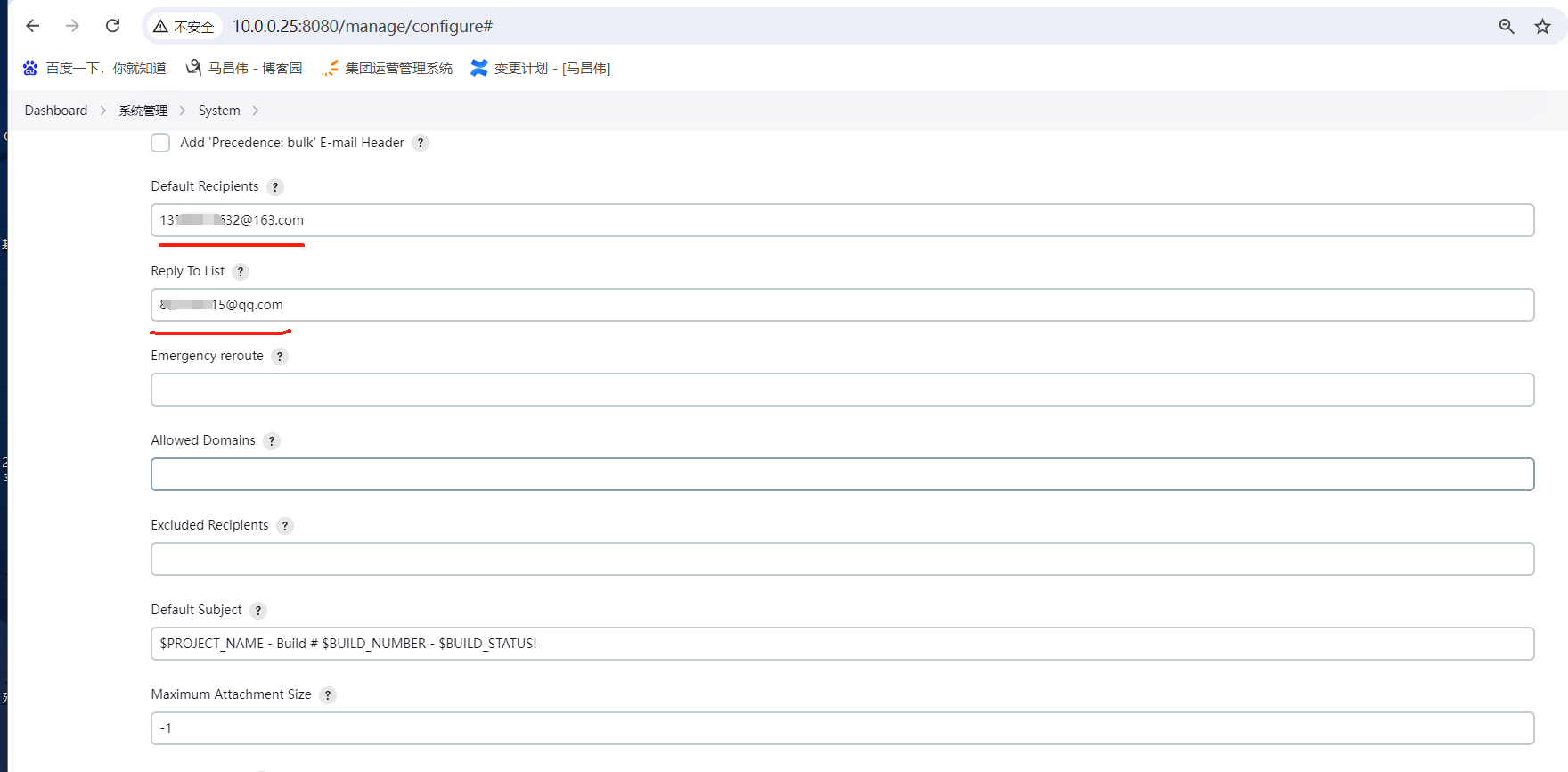

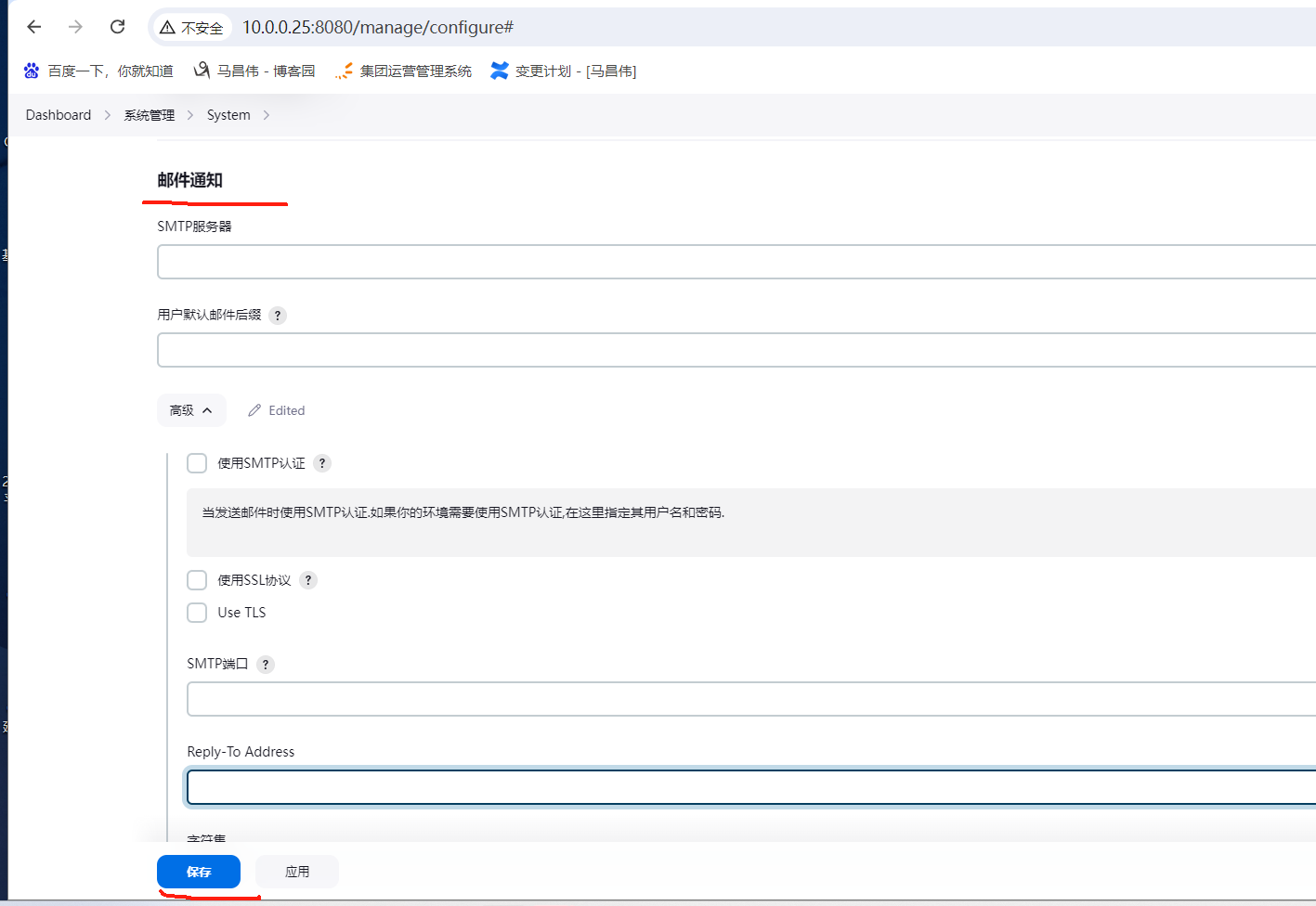

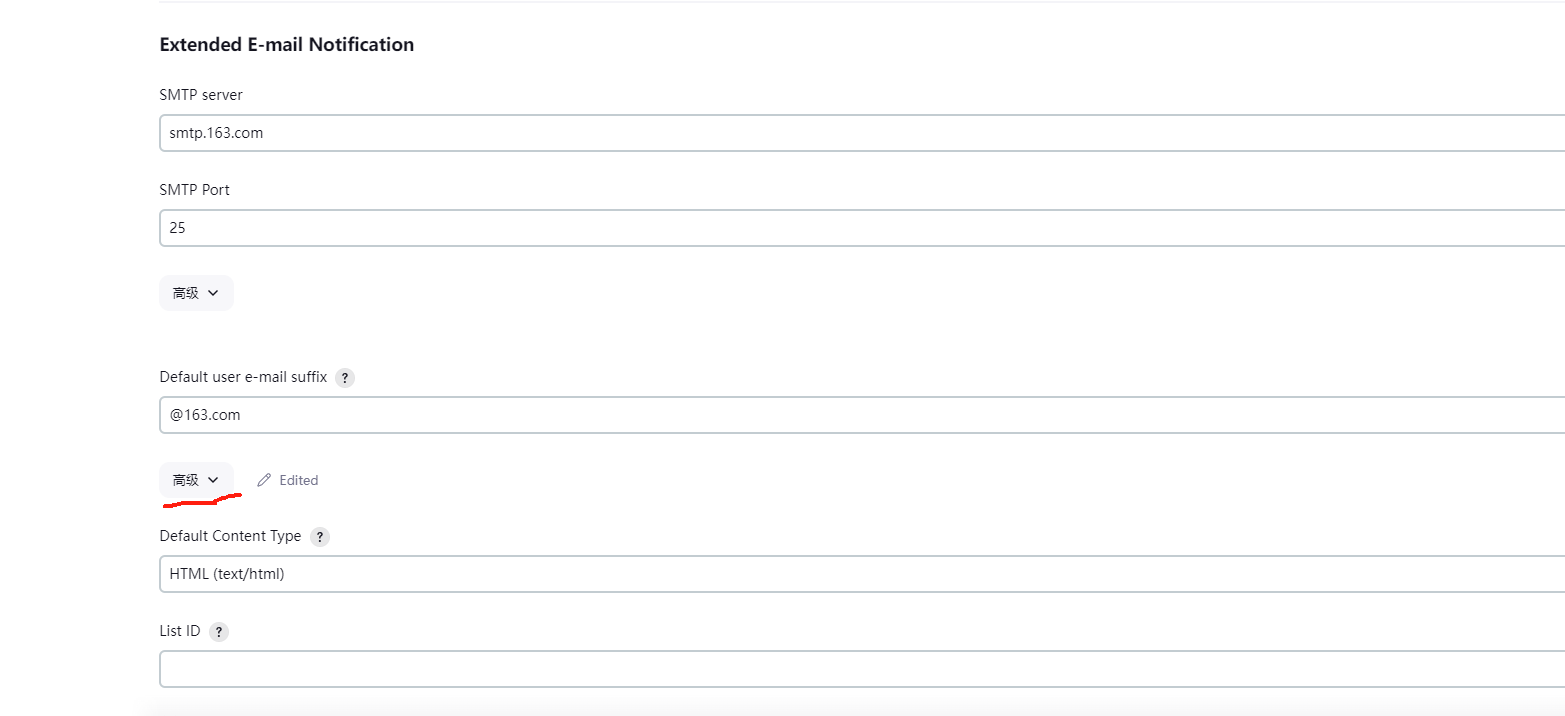

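Redo the mail settings in the system configuration.

Note: leaving the SMTP server empty, as before, does not work; it must be filled in. The password is also not the usual mailbox login password but an authorization code.

An authorization code is a dedicated password for signing in from third-party mail clients.

Click test again above; this time the mail is sent and received.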
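To rule Jenkins out when a test mail silently goes missing, the SMTP server and authorization code can be checked from any machine with Python. The following is a minimal sketch, not part of the original setup: the helper names are made up for illustration, and the host smtp.163.com with SSL port 465 in the usage comment is an assumption about the 163 mailbox used above.

```python
import smtplib
from email.message import EmailMessage


def build_message(sender, recipient, subject, body):
    """Build a plain-text mail equivalent to the pipeline's `mail` step."""
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = recipient
    msg["Subject"] = subject
    msg.set_content(body)
    return msg


def send_via_smtp(msg, host, port, user, auth_code):
    """Log in with the authorization code (not the web login password) and send."""
    with smtplib.SMTP_SSL(host, port, timeout=10) as server:
        server.login(user, auth_code)
        server.send_message(msg)


# Usage (assumed host/port; replace the placeholders with real values):
# msg = build_message("sender@163.com", "receiver@qq.com", "build status :(", "failure body")
# send_via_smtp(msg, "smtp.163.com", 465, "sender@163.com", "<authorization code>")
```

If the login call raises an authentication error here as well, the problem lies with the mailbox credentials rather than with the Jenkins configuration.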

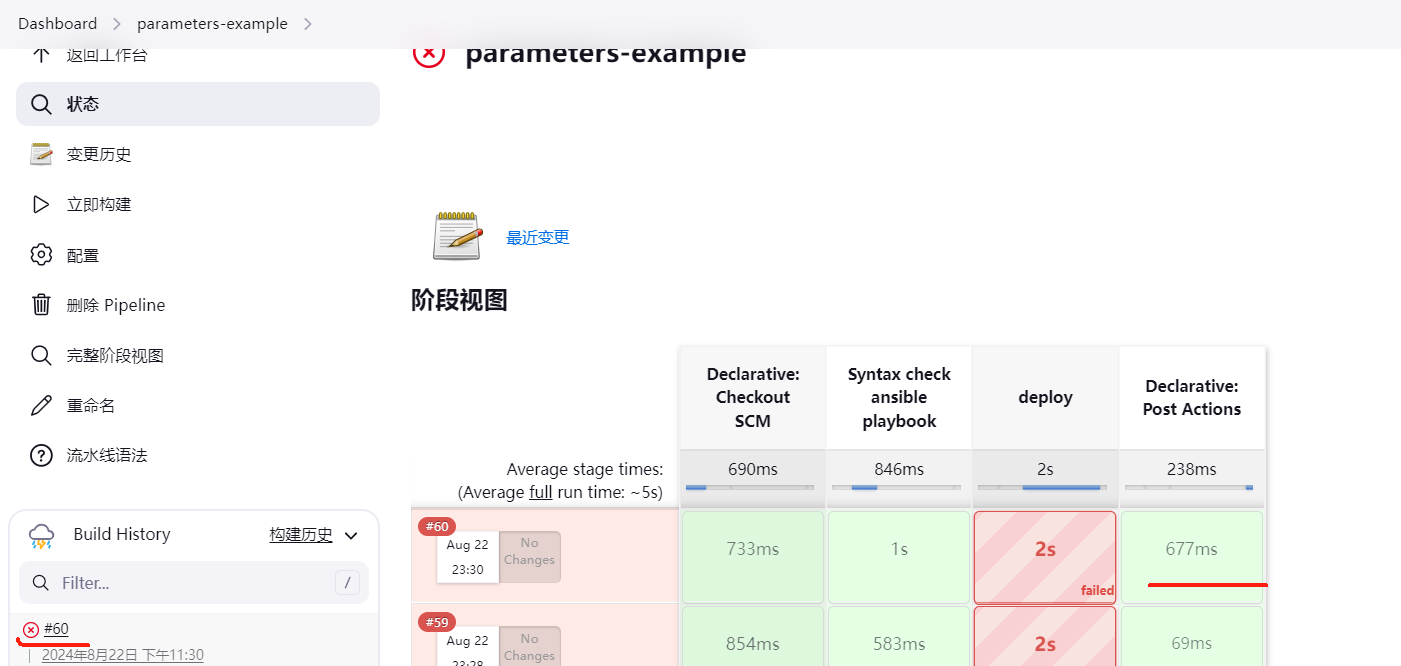

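Now click build again. The deploy fails, so the failure part of post, which sends the mail, is executed.

Failure log

Comparing against the pipeline configuration and checking the mailbox, no mail had been sent. It turned out that the changed mail settings in the system configuration had not been saved. After saving and clicking Build Now again, the build-failure mail arrives.

Compare: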

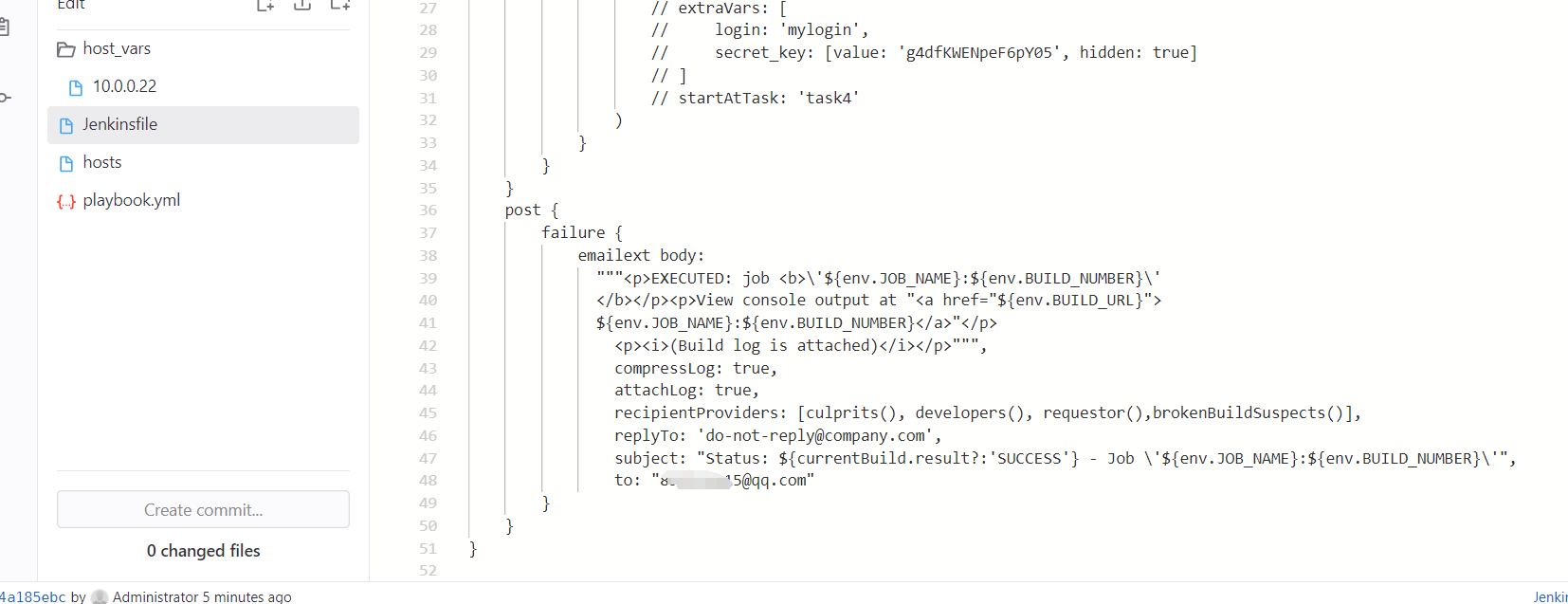

Sending notifications with the Email Extension plugin

Install the Email Extension plugin: https://plugins.jenkins.io/email-ext

Use this one

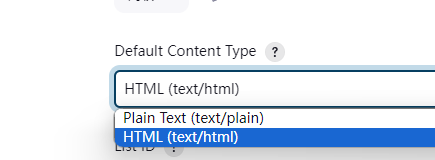

Configuration

Clear the mail notification settings configured earlier

Modify the Jenkinsfile, then click Build Now

Error:

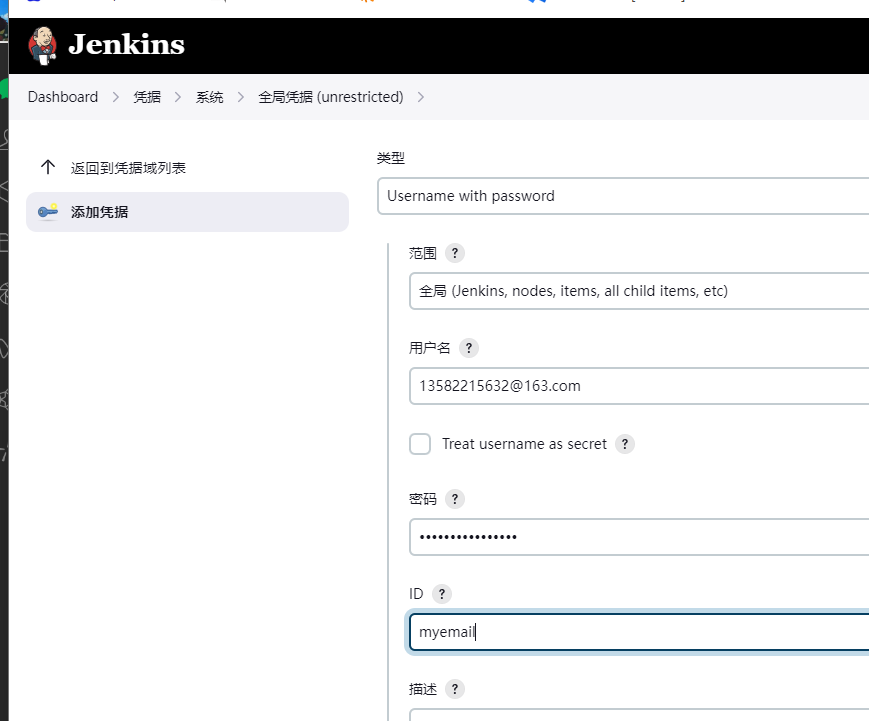

Add a credential; above we did not add the mailbox account and password.

It still fails and I do not know how to fix it; to be investigated when time permits.

    pipeline {
        agent any
        stages {
            stage('Syntax check ansible playbook') {
                steps {
                    ansiblePlaybook(
                        disableHostKeyChecking: true,
                        playbook: "${env.WORKSPACE}/playbook.yml",
                        inventory: "${env.WORKSPACE}/hosts",
                        credentialsId: 'vagrant',
                        extras: '--syntax-check'
                    )
                }
            }
            stage('deploy') {
                steps {
                    ansiblePlaybook(
                        disableHostKeyChecking: true,
                        playbook: "${env.WORKSPACE}/playbook.yml",
                        inventory: "${env.WORKSPACE}/hosts",
                        // credentialsId: 'vagrant',
                        // skippedTags: 'debugtag',
                        forks: 2
                        // limit: 'example,example2',
                        // tags: 'debugtag,testtag',
                        // extraVars: [
                        //     login: 'mylogin',
                        //     secret_key: [value: 'g4dfKWENpeF6pY05', hidden: true]
                        // ]
                        // startAtTask: 'task4'
                    )
                }
            }
        }
        post {
            failure {
                emailext body: """<p>EXECUTED: job <b>\'${env.JOB_NAME}:${env.BUILD_NUMBER}\' </b></p><p>View console output at "<a href="${env.BUILD_URL}"> ${env.JOB_NAME}:${env.BUILD_NUMBER}</a>"</p> <p><i>(Build log is attached)</i></p>""",
                    compressLog: true,
                    attachLog: true,
                    recipientProviders: [culprits(), developers(), requestor(), brokenBuildSuspects()],
                    replyTo: '1xx2@163.com',
                    subject: "Status: ${currentBuild.result?:'SUCCESS'} - Job \'${env.JOB_NAME}:${env.BUILD_NUMBER}\'",
                    to: "13xx2@163.com"
            }
        }
    }

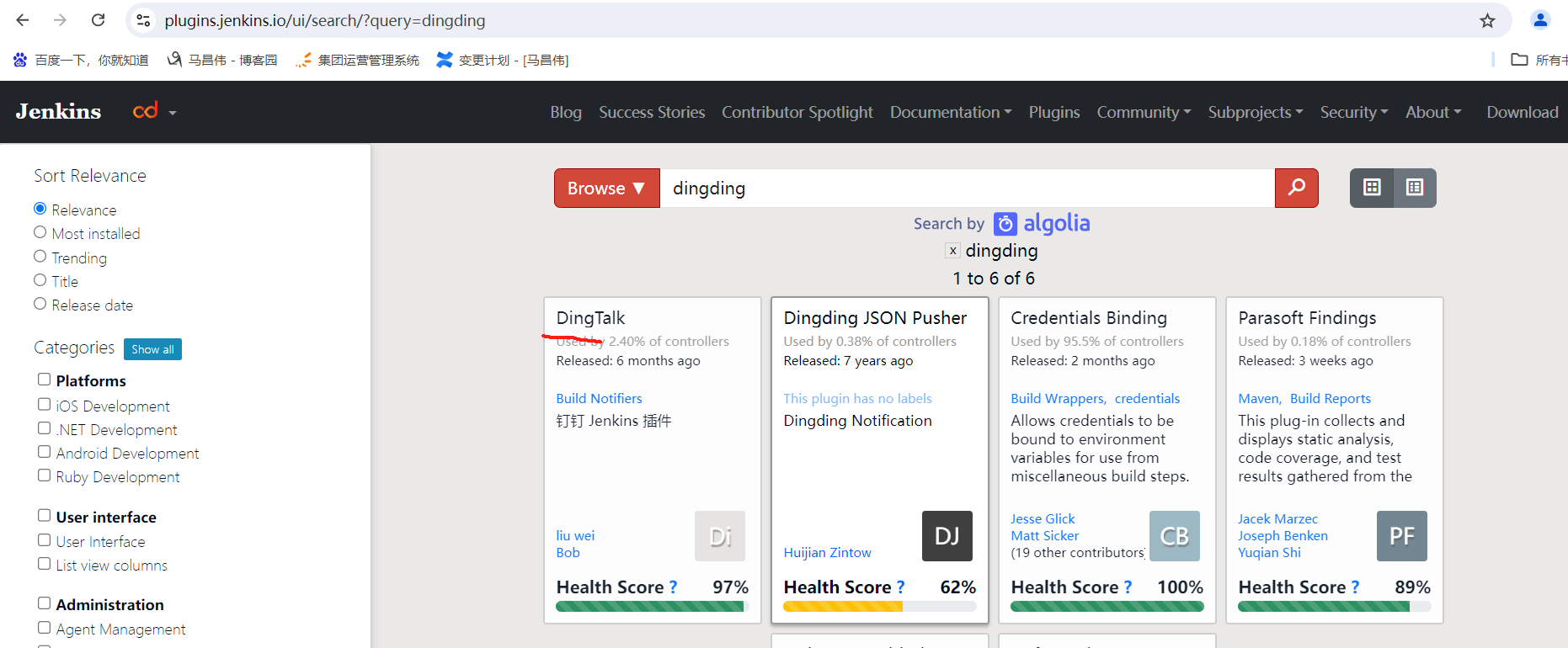

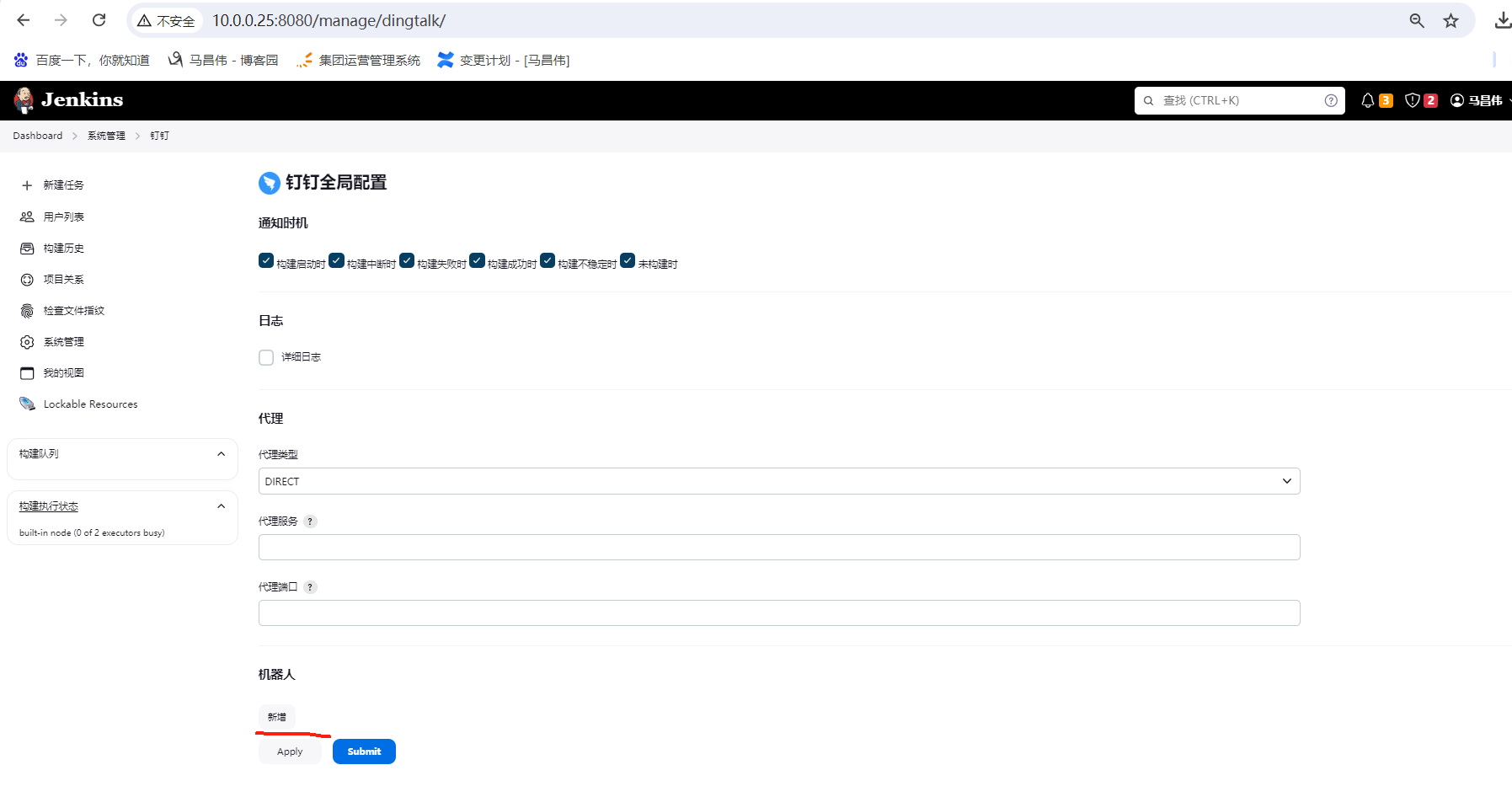

钉钉通知

参考:https://blog.csdn.net/qq_42259469/article/details/136813262

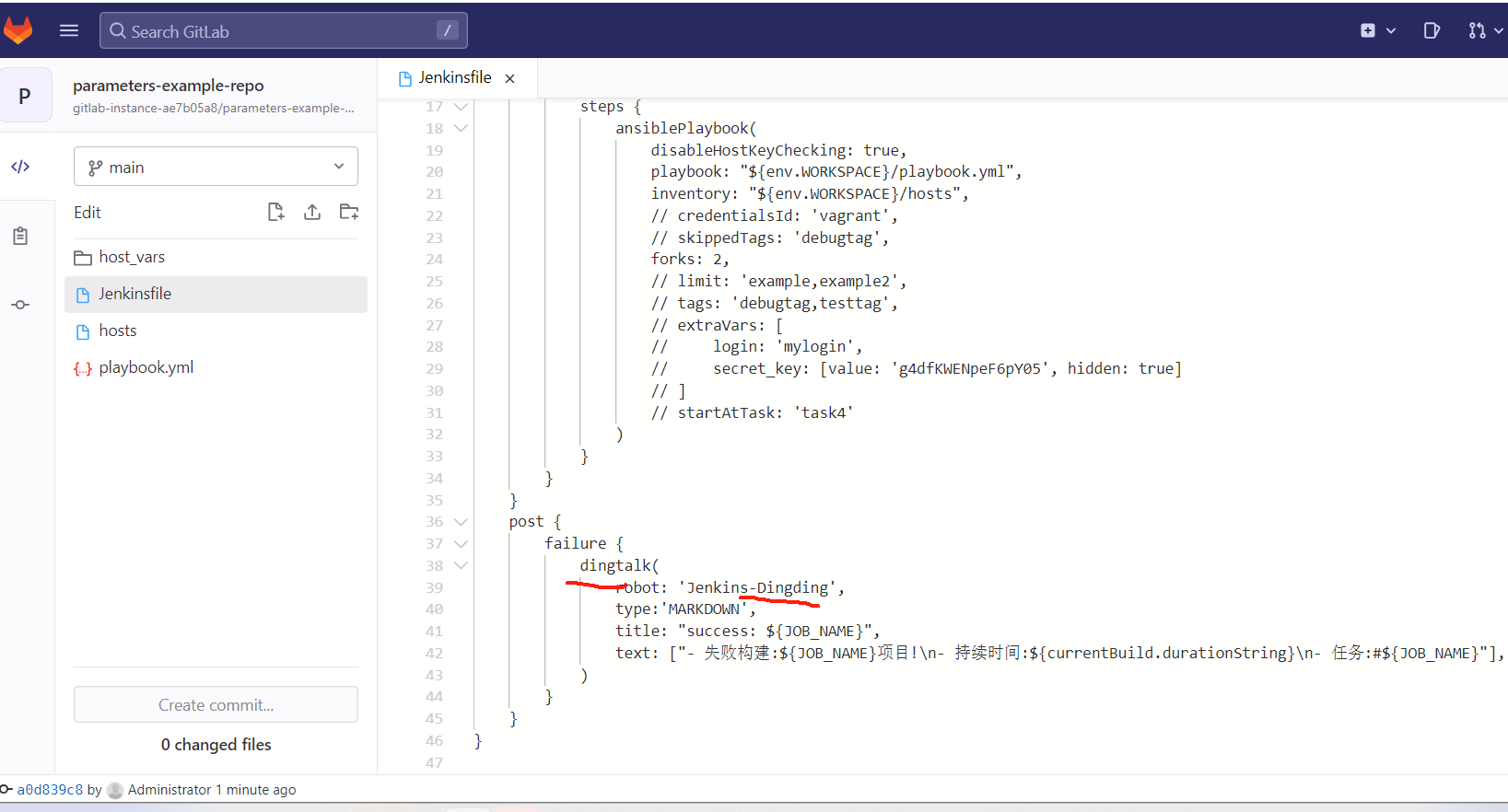

pipeline { agent any stages { stage('Syntax check ansible playbook') { steps { ansiblePlaybook( disableHostKeyChecking: true, playbook: "${env.WORKSPACE}/playbook.yml", inventory: "${env.WORKSPACE}/hosts", credentialsId: 'vagrant', extras: '--syntax-check' ) } } stage('deploy') { steps { ansiblePlaybook( disableHostKeyChecking: true, playbook: "${env.WORKSPACE}/playbook.yml", inventory: "${env.WORKSPACE}/hosts", // credentialsId: 'vagrant', // skippedTags: 'debugtag', forks: 2, // limit: 'example,example2', // tags: 'debugtag,testtag', // extraVars: [ // login: 'mylogin', // secret_key: [value: 'g4dfKWENpeF6pY05', hidden: true] // ] // startAtTask: 'task4' ) } } } post { failure { dingtalk( robot: 'Jenkins-Dingding', type:'MARKDOWN', title: "success: ${JOB_NAME}", text: ["- 失败构建:${JOB_NAME}项目!\n- 持续时间:${currentBuild.durationString}\n- 任务:#${JOB_NAME}\n- 请注意: @庞xx,@史xx 需要检查构建失败原因"], ) } } }

钉钉插件:dingding-notifications

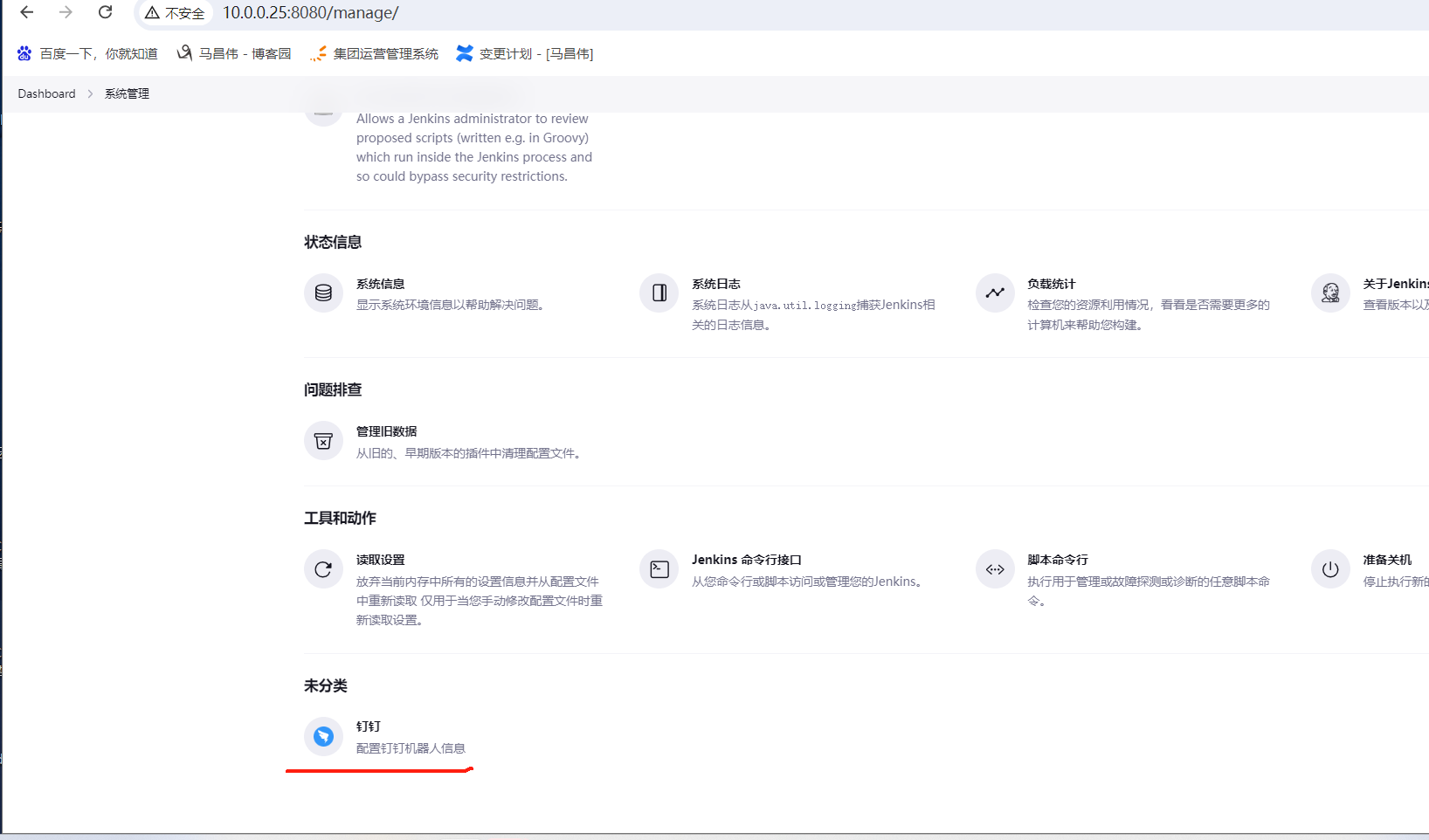

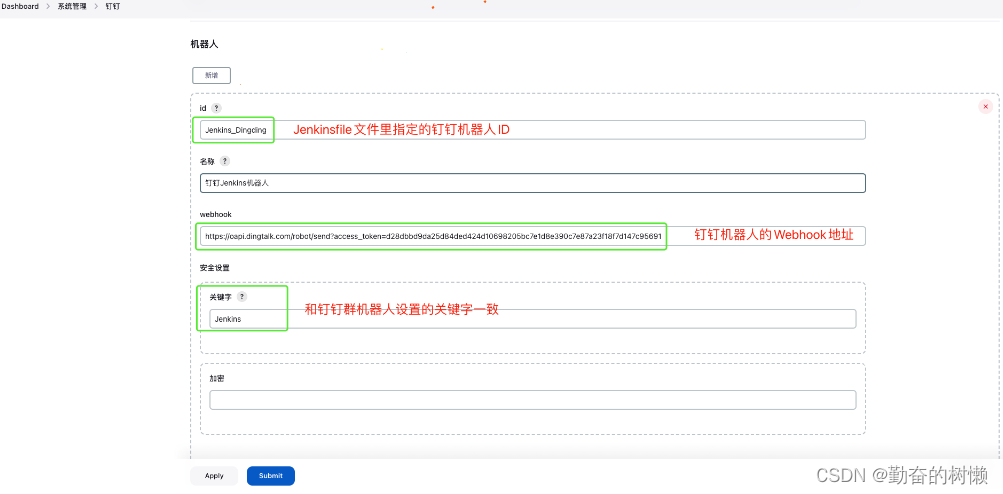

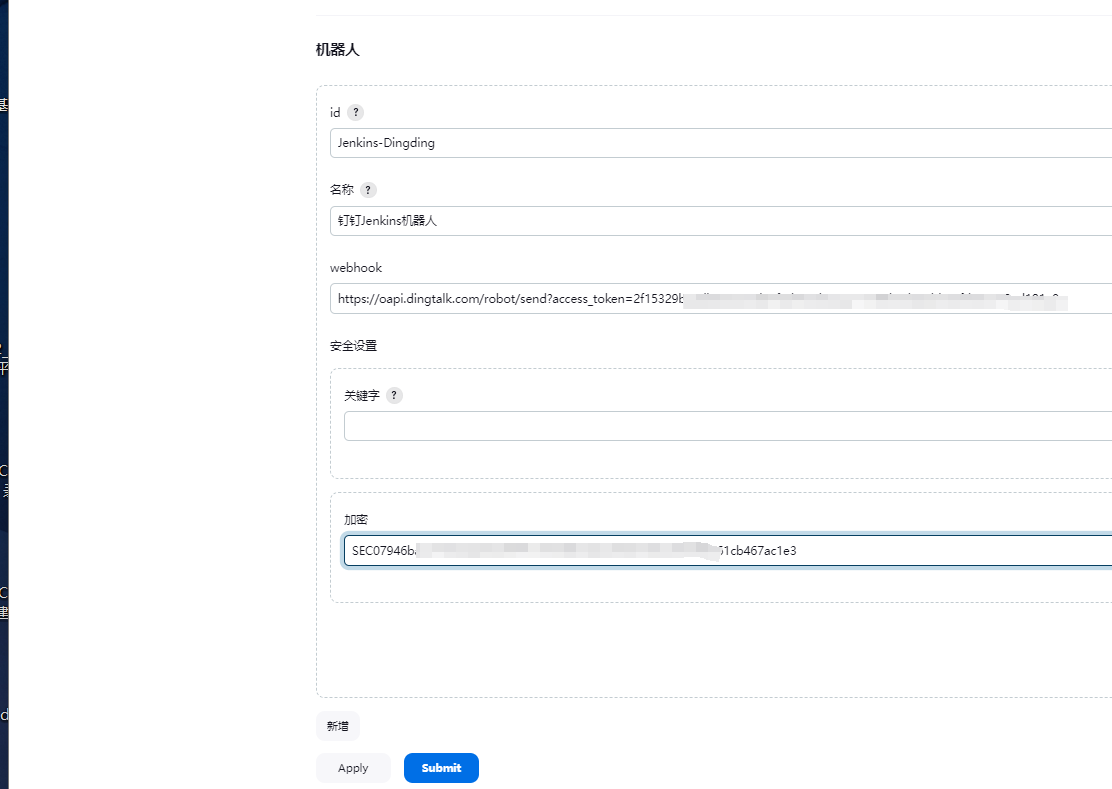

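Configure it

For security, use the signature option ("加签") of the DingTalk robot.

In the pipeline only robot: 'Jenkins-Dingding' needs changing; use the id from the Jenkins system configuration.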
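The plugin computes the signature itself, but it can help to see what the signing option does when debugging a rejected message. As far as the DingTalk custom-robot documentation describes it, the signature is an HMAC-SHA256 over the timestamp and the secret, Base64-encoded and then URL-encoded. The sketch below follows that description; the function name and the sample secret are hypothetical.

```python
import base64
import hashlib
import hmac
import time
import urllib.parse


def dingtalk_sign(secret, timestamp_ms):
    """Return the URL-encoded signature for a DingTalk robot that uses signing."""
    # The string to sign is "<timestamp>\n<secret>", keyed with the secret itself.
    string_to_sign = f"{timestamp_ms}\n{secret}"
    digest = hmac.new(
        secret.encode("utf-8"),
        string_to_sign.encode("utf-8"),
        hashlib.sha256,
    ).digest()
    return urllib.parse.quote_plus(base64.b64encode(digest))


# Usage: the timestamp (milliseconds) and sign are appended to the webhook URL.
timestamp = int(time.time() * 1000)
sign = dingtalk_sign("SEC-example-secret", timestamp)  # hypothetical secret
query = f"&timestamp={timestamp}&sign={sign}"
```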

构建失败

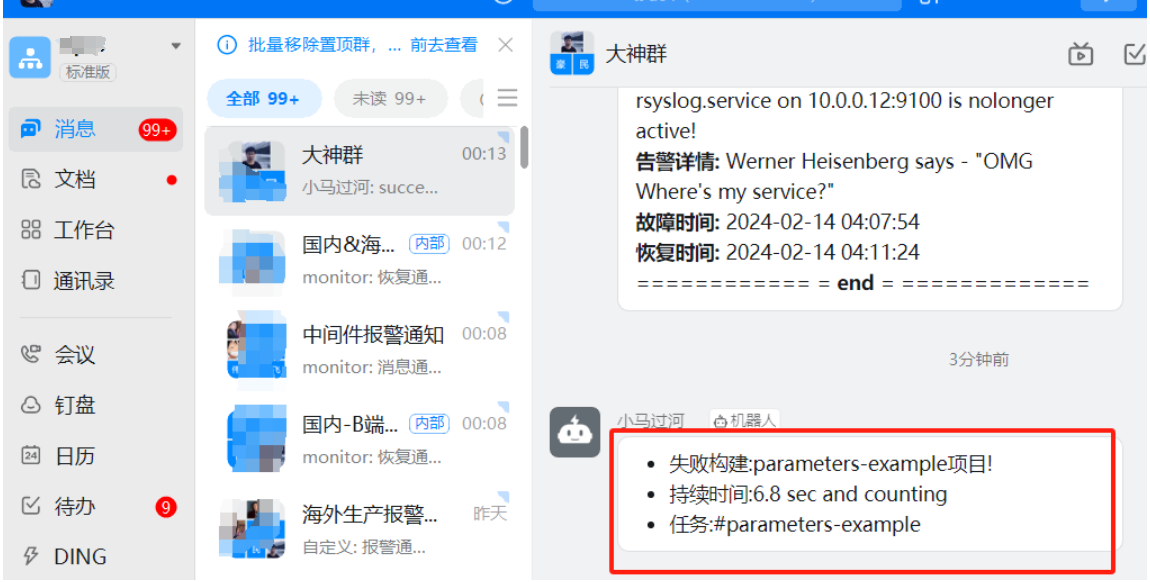

执行失败钉钉通知

发出通知

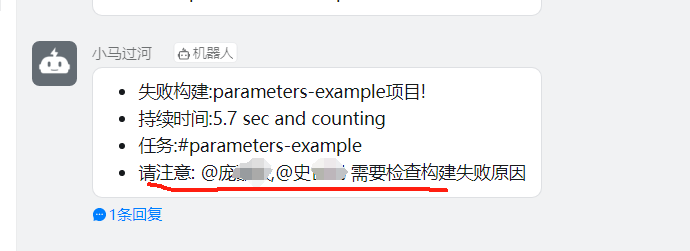

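The message has an extra line asking specific people to pay attention, but it is plain text only and does not actually @-mention anyone.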
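The reason a bare "@name" in the text does nothing is that, at the level of the DingTalk robot webhook, mentions are carried in a separate at field keyed by mobile number. The sketch below builds such a payload as I understand the custom-robot message format; the helper name and the phone number are made up. The Jenkins plugin's dingtalk step appears to expose matching at and atAll parameters, but check the plugin documentation before relying on that.

```python
import json


def build_markdown_message(title, text, at_mobiles=(), at_all=False):
    """Build a markdown robot message that really mentions the given mobile numbers."""
    # The mentioned numbers must also appear in the text as "@<mobile>".
    mentions = " ".join(f"@{mobile}" for mobile in at_mobiles)
    full_text = f"{text}\n\n{mentions}" if mentions else text
    return {
        "msgtype": "markdown",
        "markdown": {"title": title, "text": full_text},
        "at": {"atMobiles": list(at_mobiles), "isAtAll": at_all},
    }


payload = build_markdown_message(
    "failure: parameters-example",
    "- Failed build: please check the cause",
    at_mobiles=["13800000000"],  # hypothetical number
)
body = json.dumps(payload, ensure_ascii=False)
```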

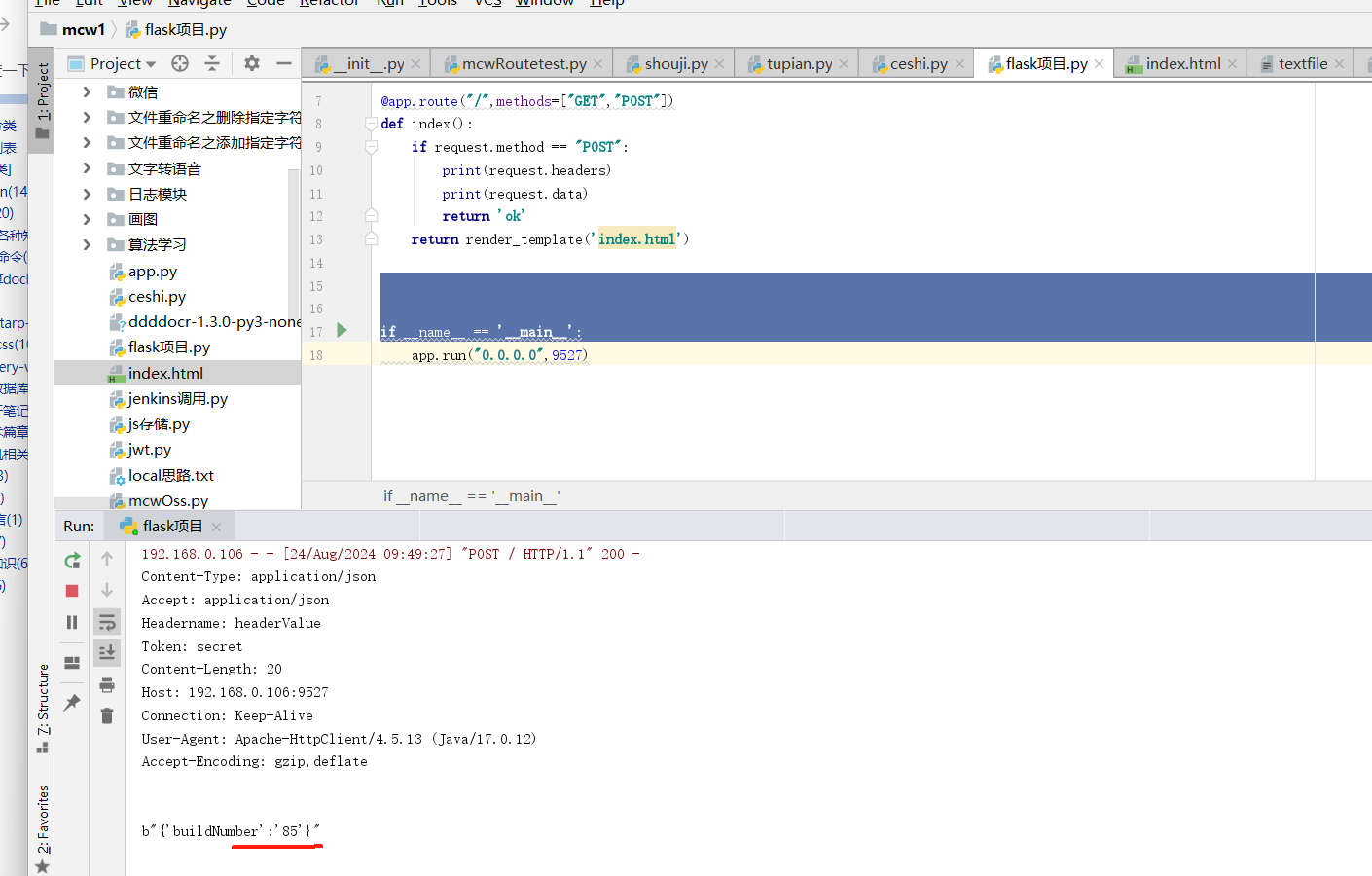

HTTP request notification

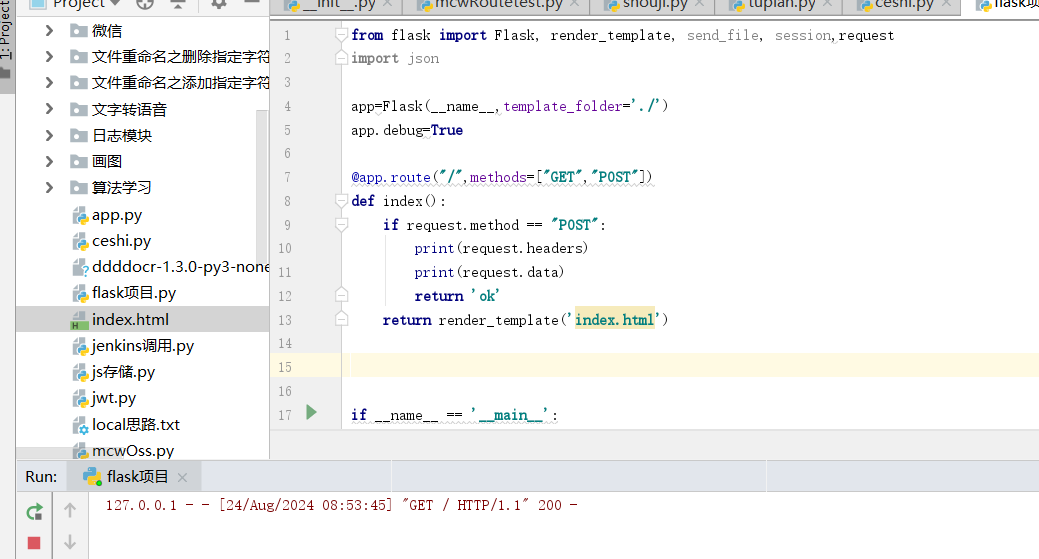

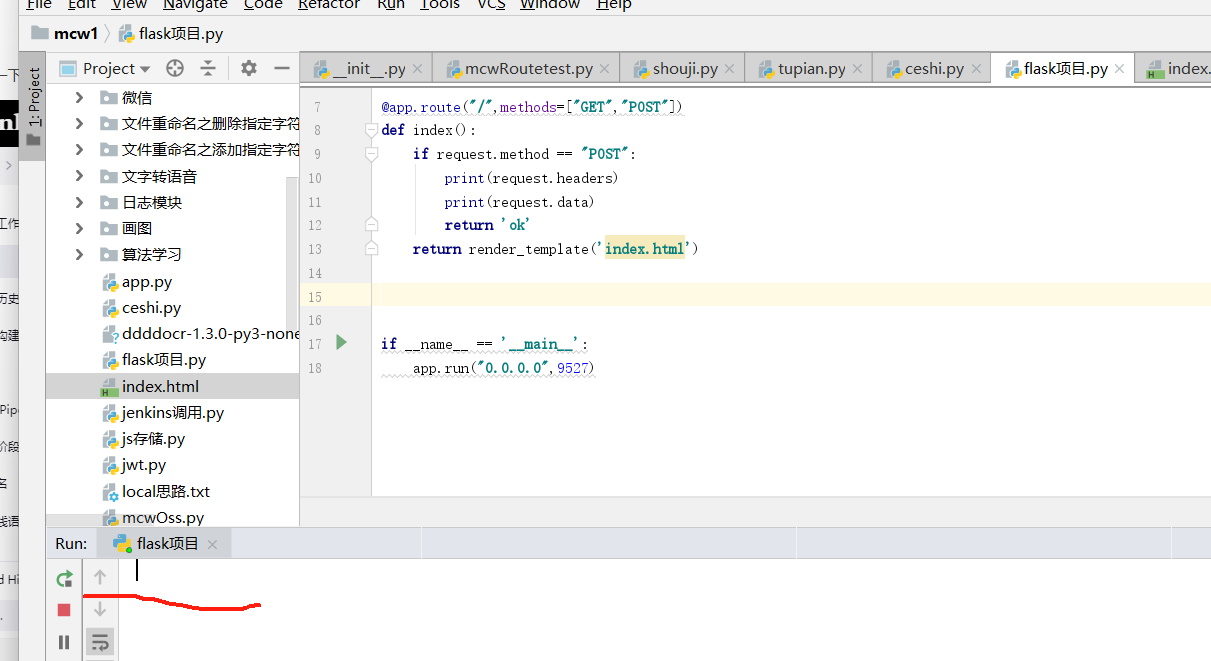

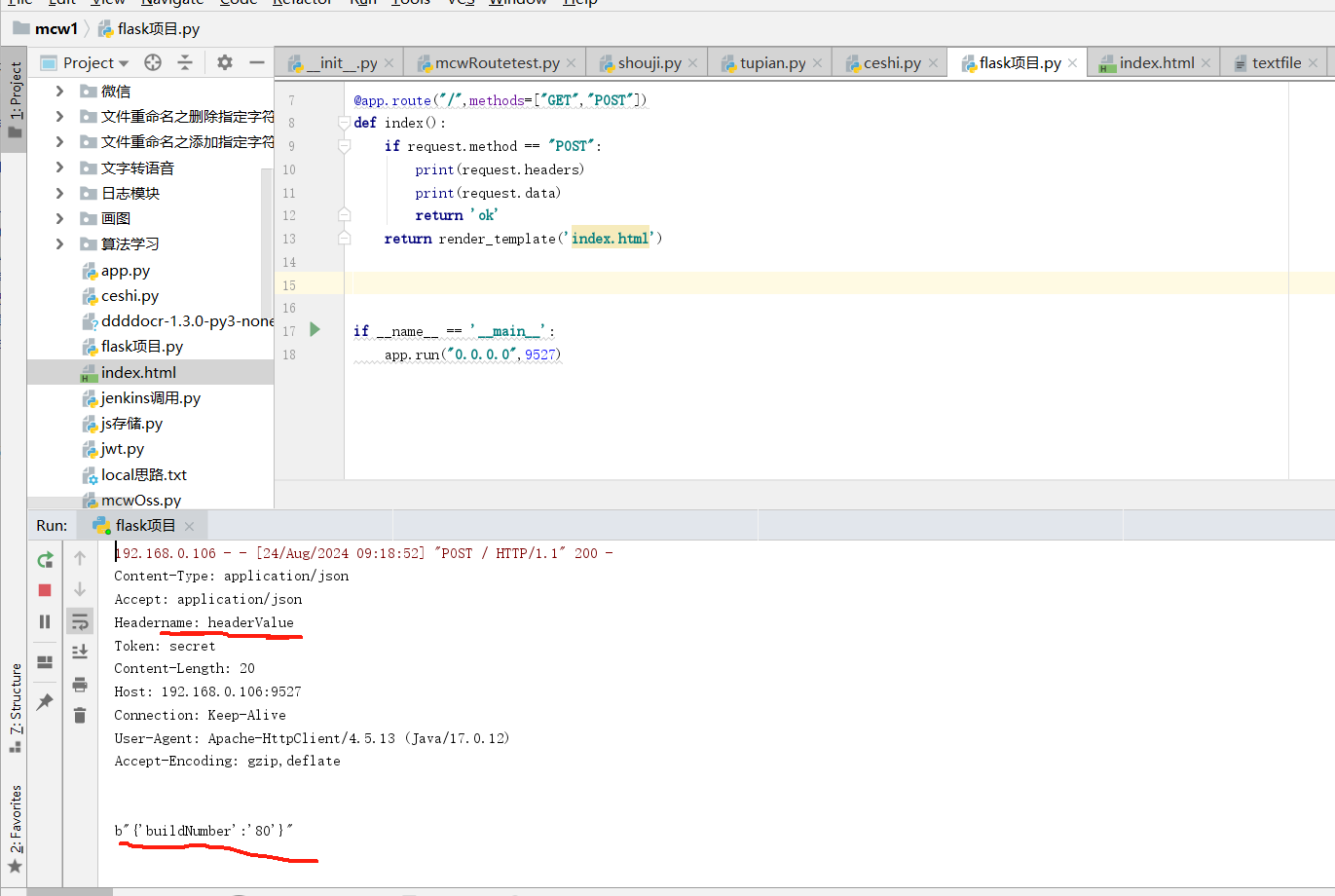

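First write a small third-party system

    from flask import Flask, render_template, request

    # Minimal "third-party system": it prints whatever Jenkins posts to it.
    app = Flask(__name__, template_folder='./')
    app.debug = True

    @app.route("/", methods=["GET", "POST"])
    def index():
        if request.method == "POST":
            # Print the headers and raw body so the Jenkins request can be inspected.
            print(request.headers)
            print(request.data)
            return 'ok'
        # A GET renders index.html from the current directory.
        return render_template('index.html')

    if __name__ == '__main__':
        app.run("0.0.0.0", 9527)

Start the project and visit it

Submit a request

Install the plugin

Start the project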

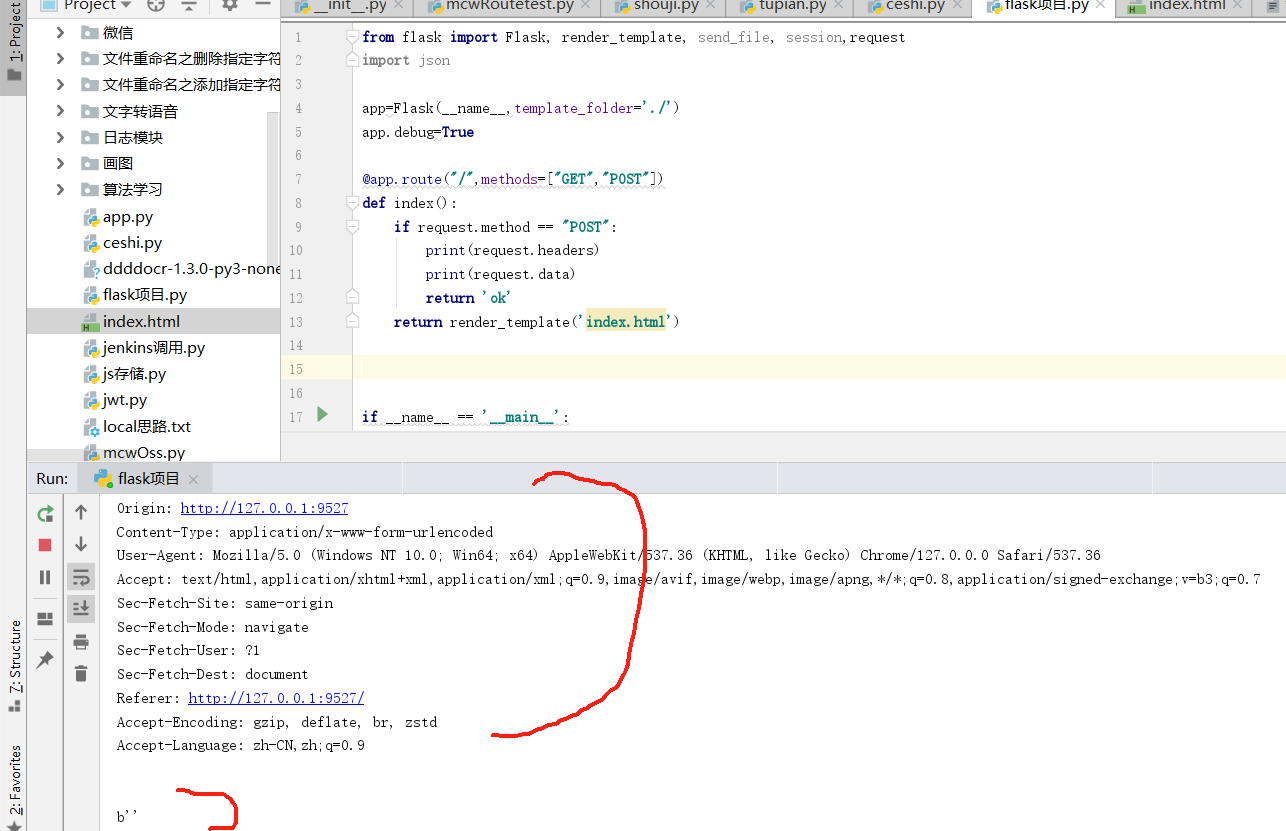

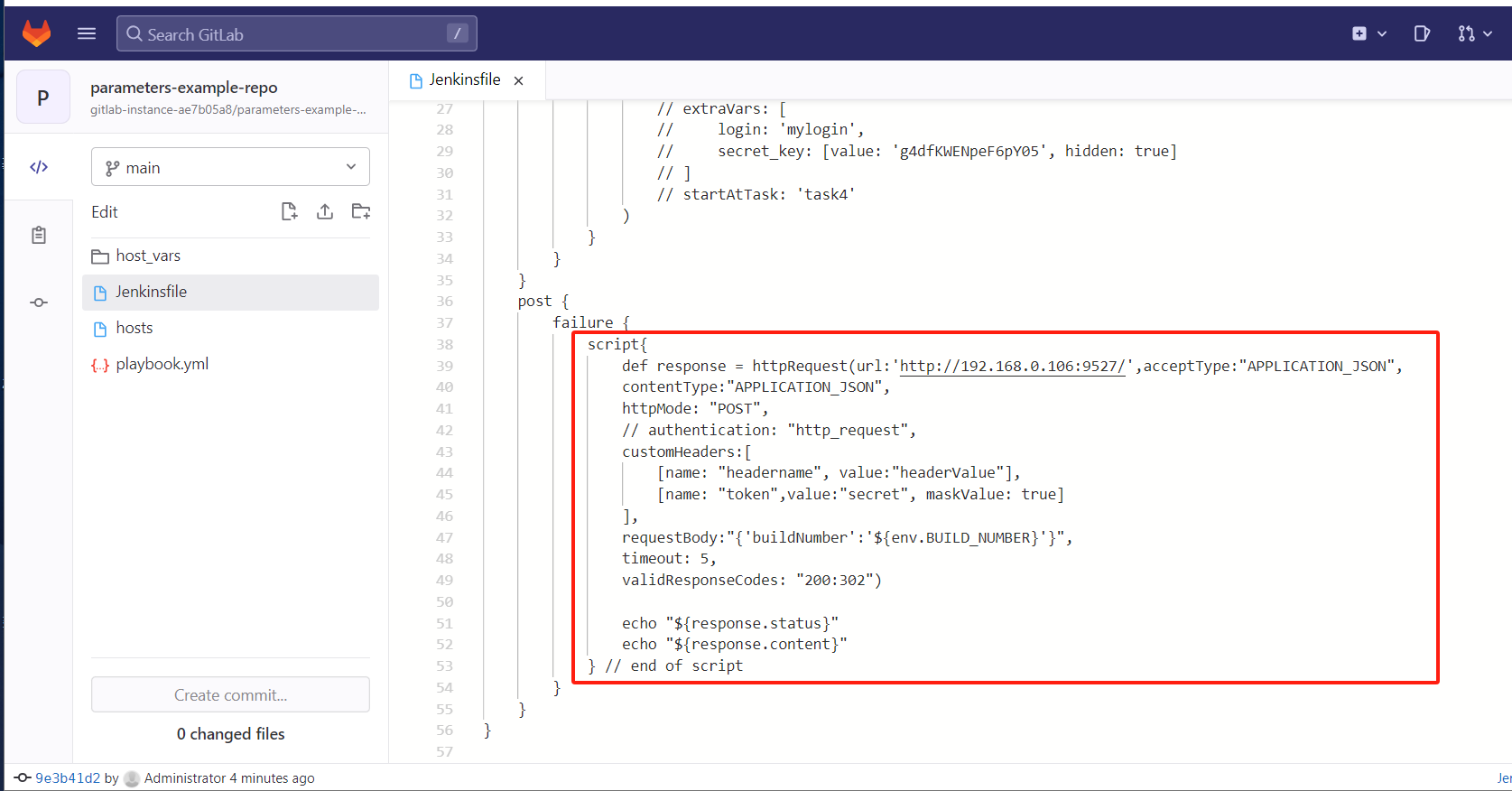

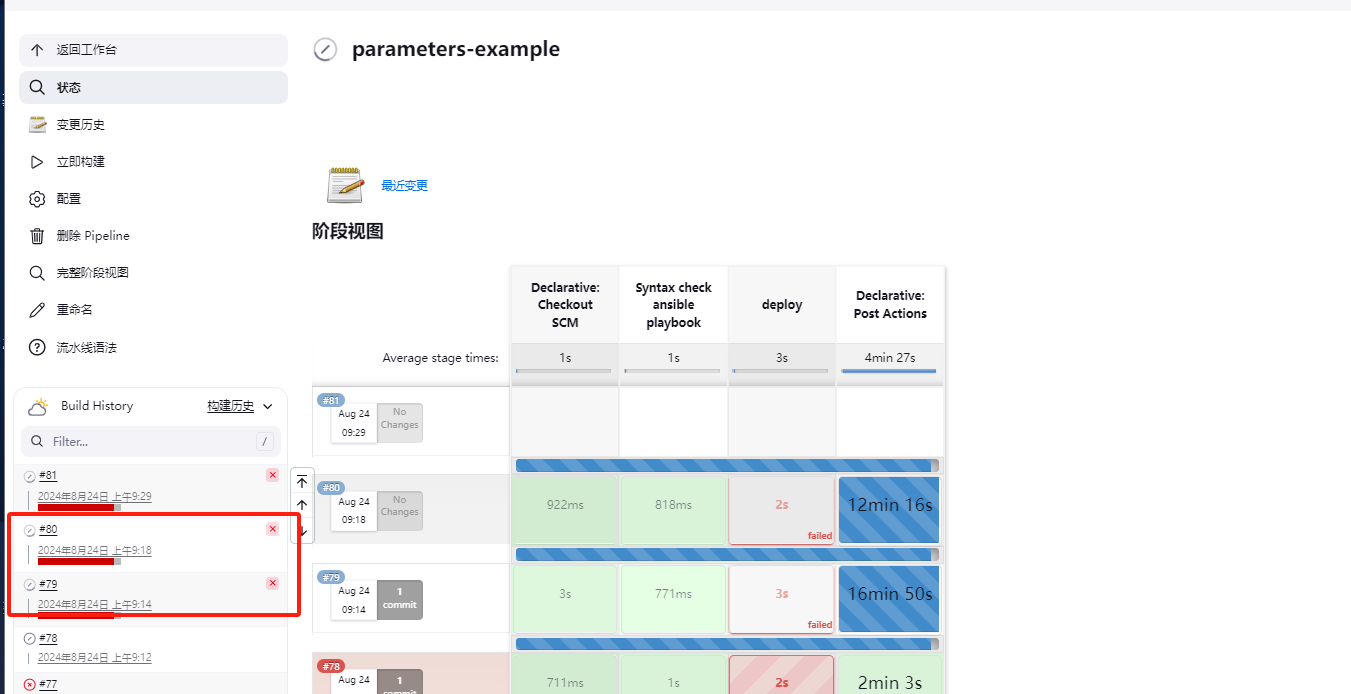

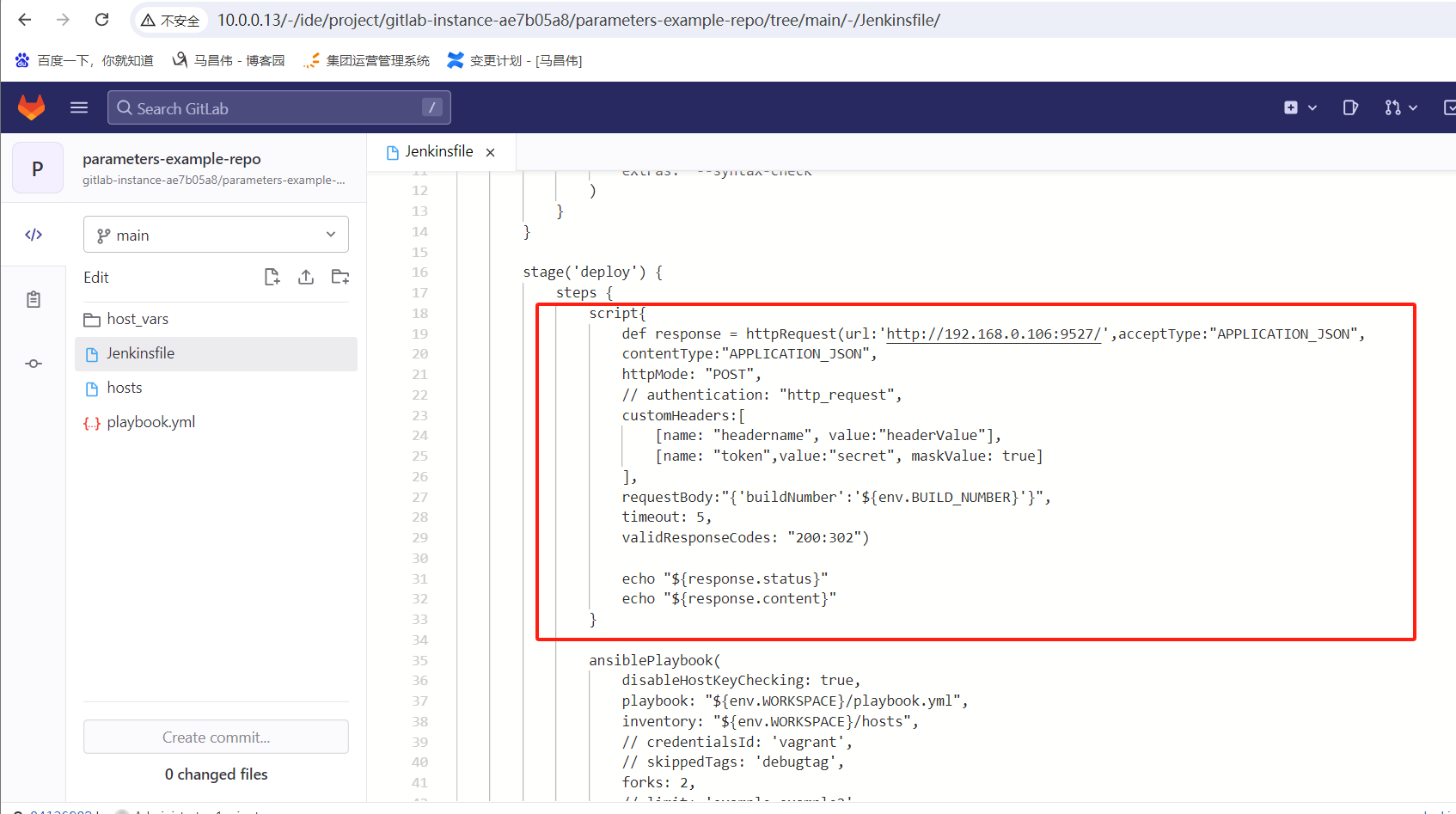

Modify the Jenkinsfile. Our third-party system has no authentication, so that step is skipped here, that is, authentication is commented out.

    pipeline {
        agent any
        stages {
            stage('Syntax check ansible playbook') {
                steps {
                    ansiblePlaybook(
                        disableHostKeyChecking: true,
                        playbook: "${env.WORKSPACE}/playbook.yml",
                        inventory: "${env.WORKSPACE}/hosts",
                        credentialsId: 'vagrant',
                        extras: '--syntax-check'
                    )
                }
            }
            stage('deploy') {
                steps {
                    ansiblePlaybook(
                        disableHostKeyChecking: true,
                        playbook: "${env.WORKSPACE}/playbook.yml",
                        inventory: "${env.WORKSPACE}/hosts",
                        // credentialsId: 'vagrant',
                        // skippedTags: 'debugtag',
                        forks: 2
                        // limit: 'example,example2',
                        // tags: 'debugtag,testtag',
                        // extraVars: [
                        //     login: 'mylogin',
                        //     secret_key: [value: 'g4dfKWENpeF6pY05', hidden: true]
                        // ]
                        // startAtTask: 'task4'
                    )
                }
            }
        }
        post {
            failure {
                script {
                    def response = httpRequest(
                        url: 'http://192.168.0.106:9527/',
                        acceptType: "APPLICATION_JSON",
                        contentType: "APPLICATION_JSON",
                        httpMode: "POST",
                        // authentication: "http_request",
                        customHeaders: [
                            [name: "headername", value: "headerValue"],
                            [name: "token", value: "secret", maskValue: true]
                        ],
                        requestBody: "{'buildNumber':'${env.BUILD_NUMBER}'}",
                        timeout: 5,
                        validResponseCodes: "200:302"
                    )
                    echo "${response.status}"
                    echo "${response.content}"
                }
            }
        }
    }
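One detail in the requestBody above is worth noting: the body uses single quotes, which is not valid JSON even though the content type is declared as APPLICATION_JSON. The Flask receiver in this article only prints the raw bytes, so it does not notice, but a receiver that parses the body would reject it. A small sketch of the difference (helper names are illustrative only):

```python
import json


def is_valid_json(text):
    """Return True if text parses as JSON."""
    try:
        json.loads(text)
        return True
    except json.JSONDecodeError:
        return False


def build_request_body(build_number):
    """Produce a body that a JSON-parsing receiver would accept."""
    return json.dumps({"buildNumber": str(build_number)})


print(is_valid_json("{'buildNumber':'85'}"))   # single quotes, as sent by the pipeline
print(is_valid_json(build_request_body(85)))   # double quotes, valid JSON
```

In the Jenkinsfile the equivalent fix would be to escape double quotes inside the Groovy string instead of using single quotes.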

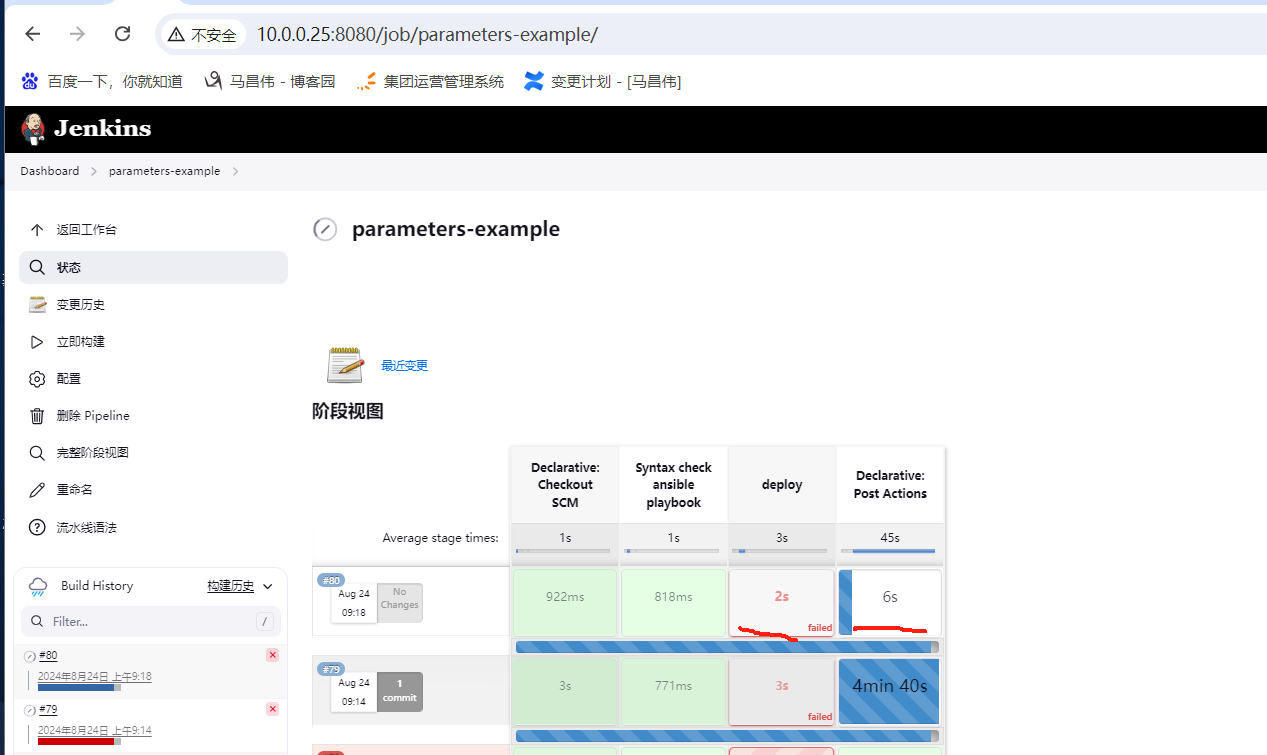

点击立即构建

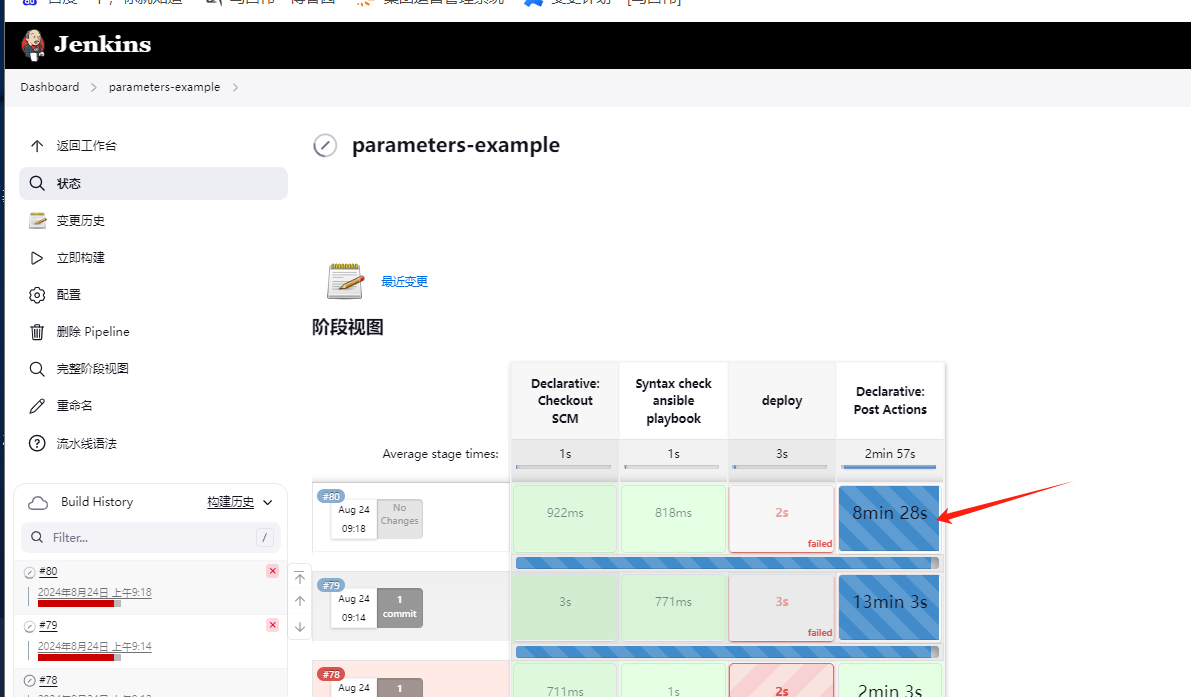

查看日志,pipeline里面已经开启了请求,并且一直卡在这里不动

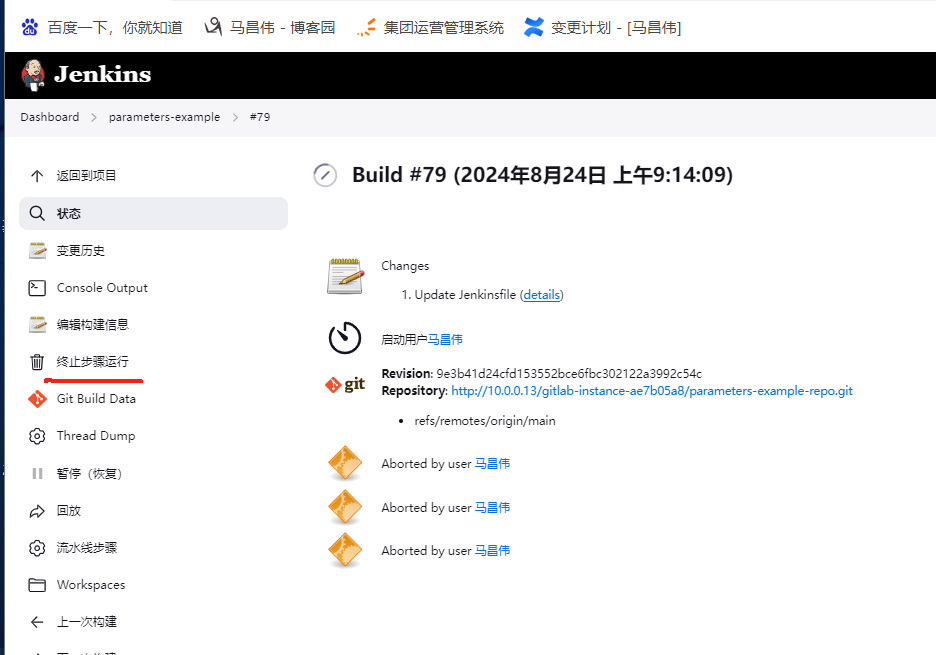

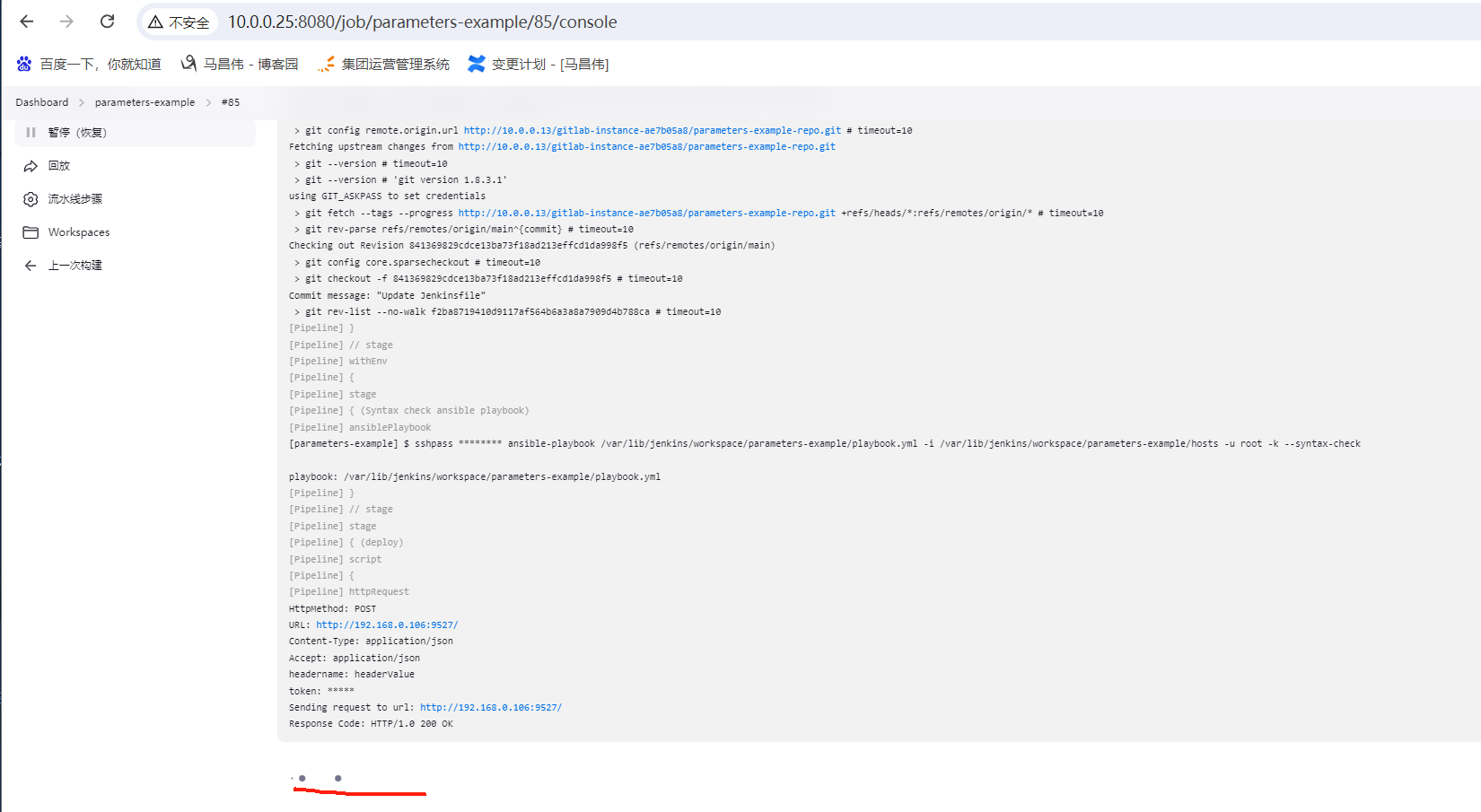

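On the server side of the third-party system, the request sent by the pipeline has been received and handled. It contains the two custom headers, token and headername, as well as the content we sent, namely the Jenkins build number.

According to the server's timestamps the request arrived at minute 18, but almost ten minutes later the pipeline is still stuck after sending the request and never finishes. The cause is unclear.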

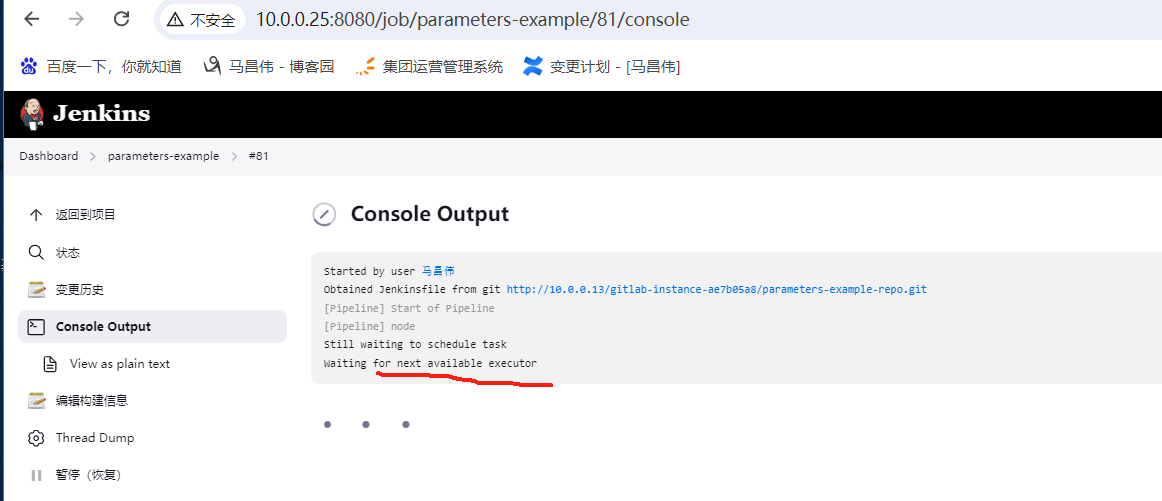

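Because the previous build has not stopped, the next build is still waiting for an available executor; the resource has not been released.

The first two builds simply never got cancelled successfully.

The abort option did not appear when the build first hung. Now that it has appeared, abort the build.

The request can also be moved from post into a stage.

In that case it runs before Ansible and hangs there as well; this is build #85.

The server side shows that the request was handled.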

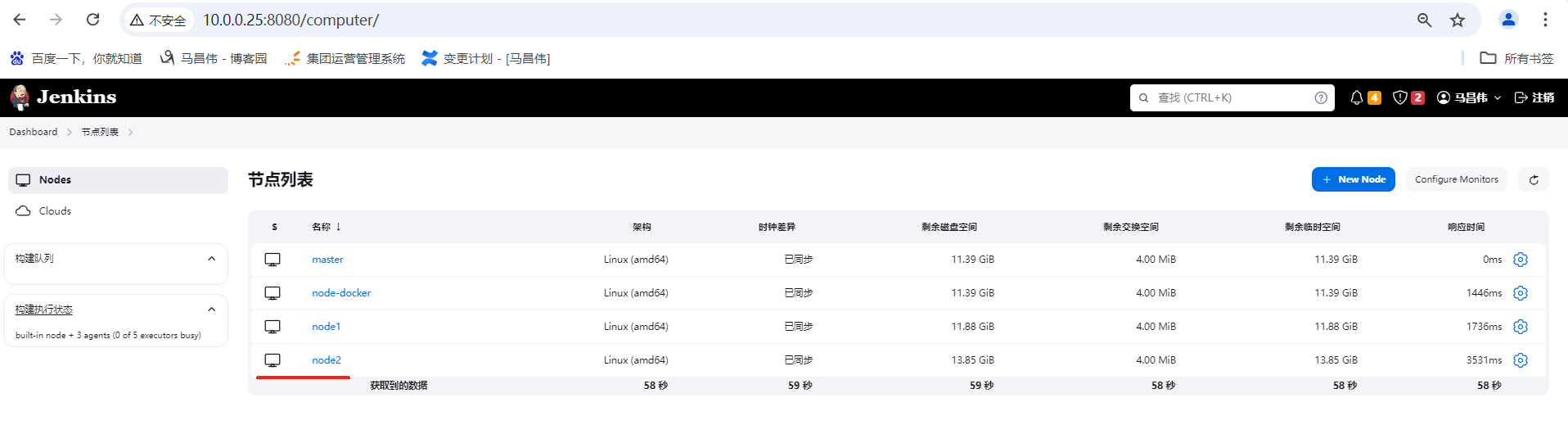

Distributed builds and parallel builds

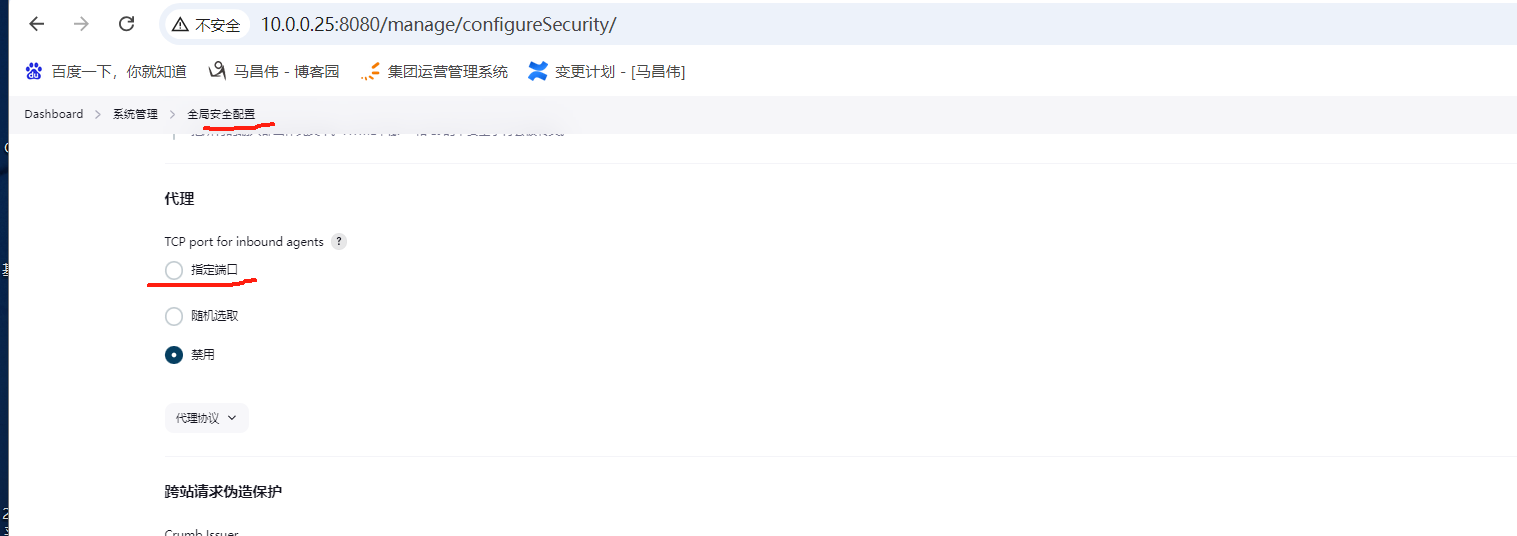

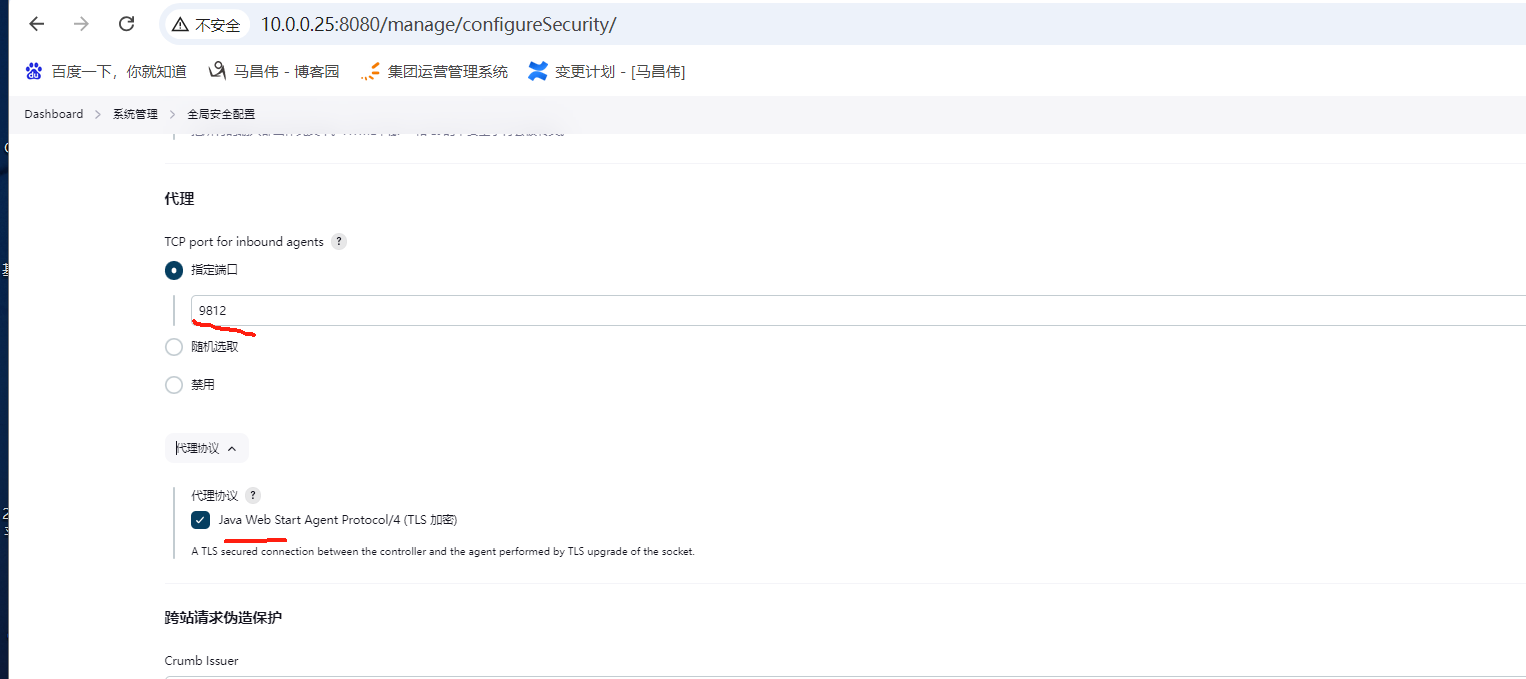

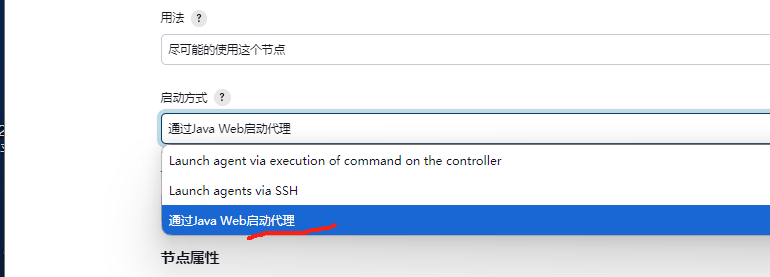

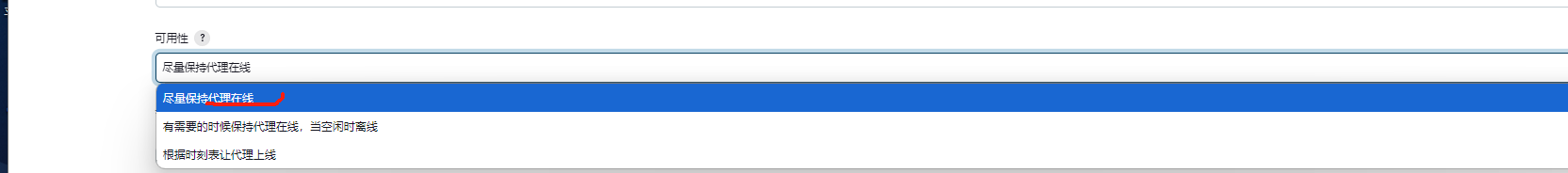

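Adding an agent via the JNLP protocol

Specify the port

Unlike the example in the book, far fewer protocol options are listed.

How the agent connects to the master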

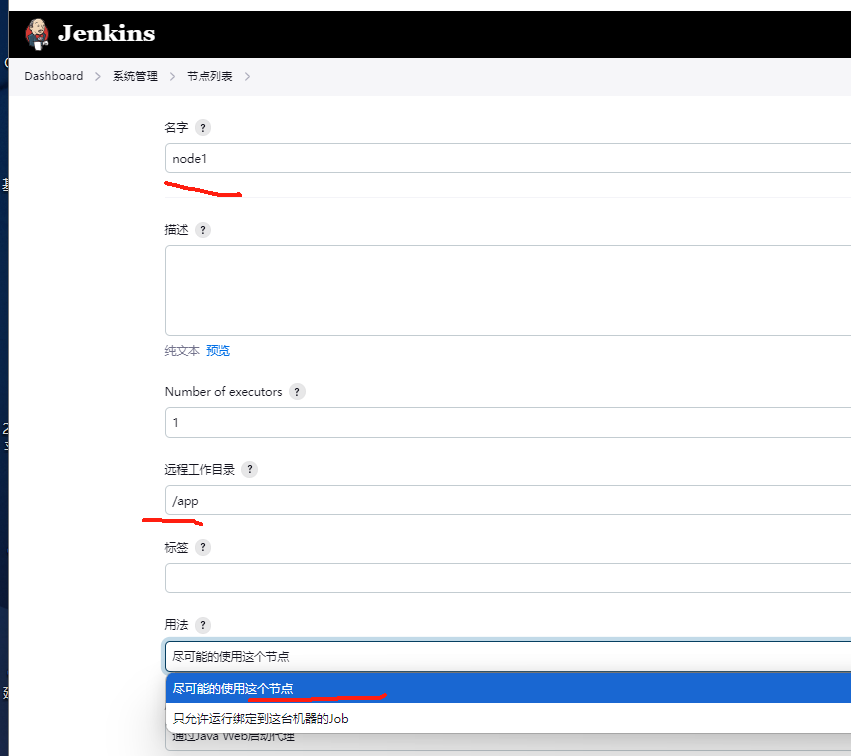

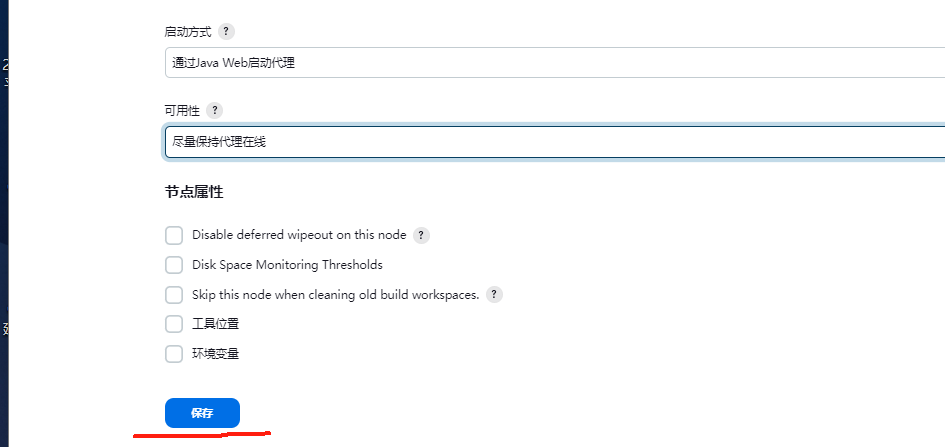

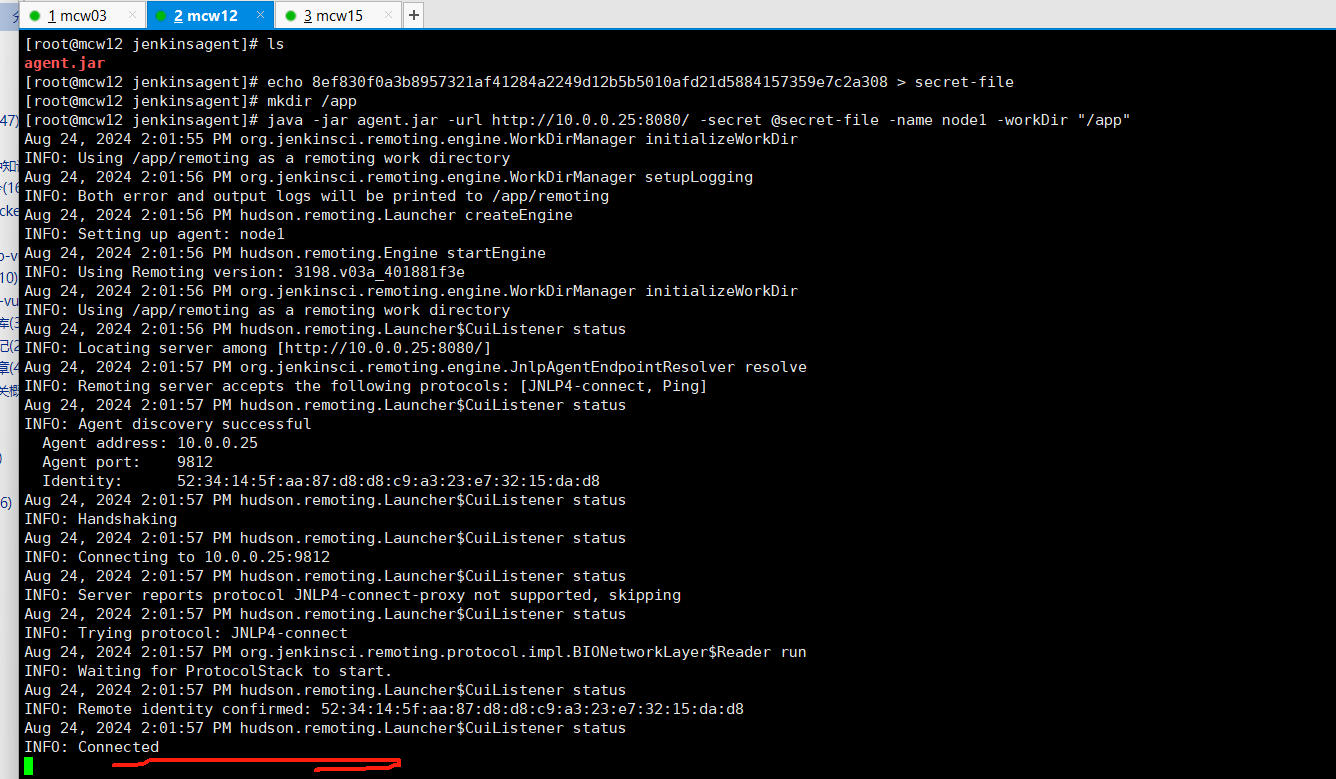

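Log in to the Jenkins agent machine; here we use mcw12. Run the add-agent commands shown above, that is, download the jar and start it. We start with the Unix variant, without a password.

Once connected, the process stays in the foreground. It can be switched to run in the background later.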

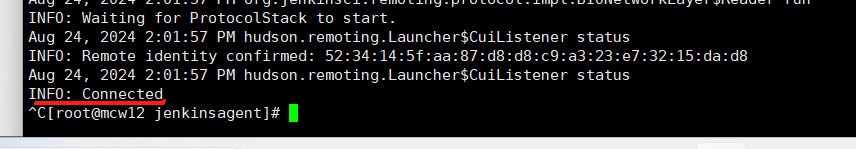

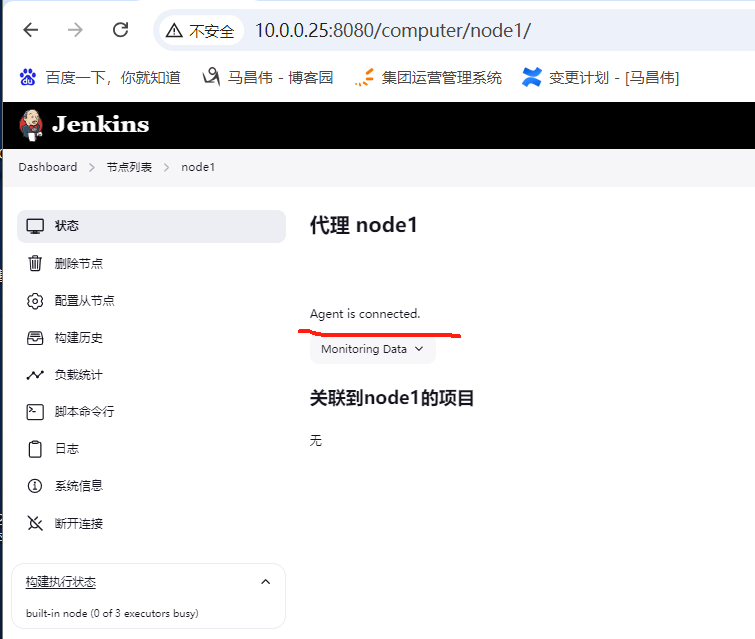

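Back in the Jenkins node list, node1 now shows information: it has changed from an unavailable node with no data to an available node.

Press Ctrl+C to stop the foreground process.

Check node1 again: it is an unavailable node once more.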

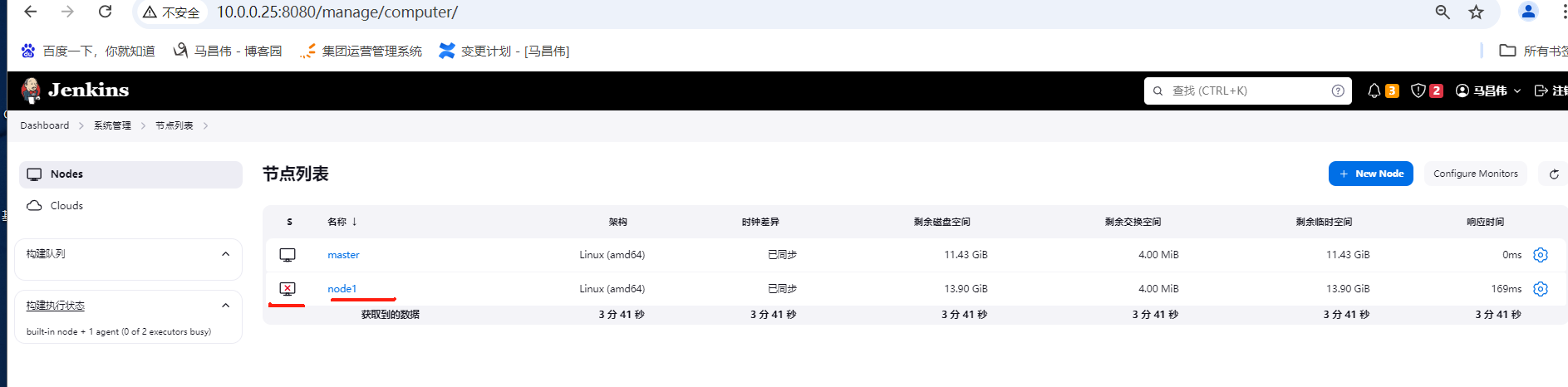

点进去看状态提示,连接中断

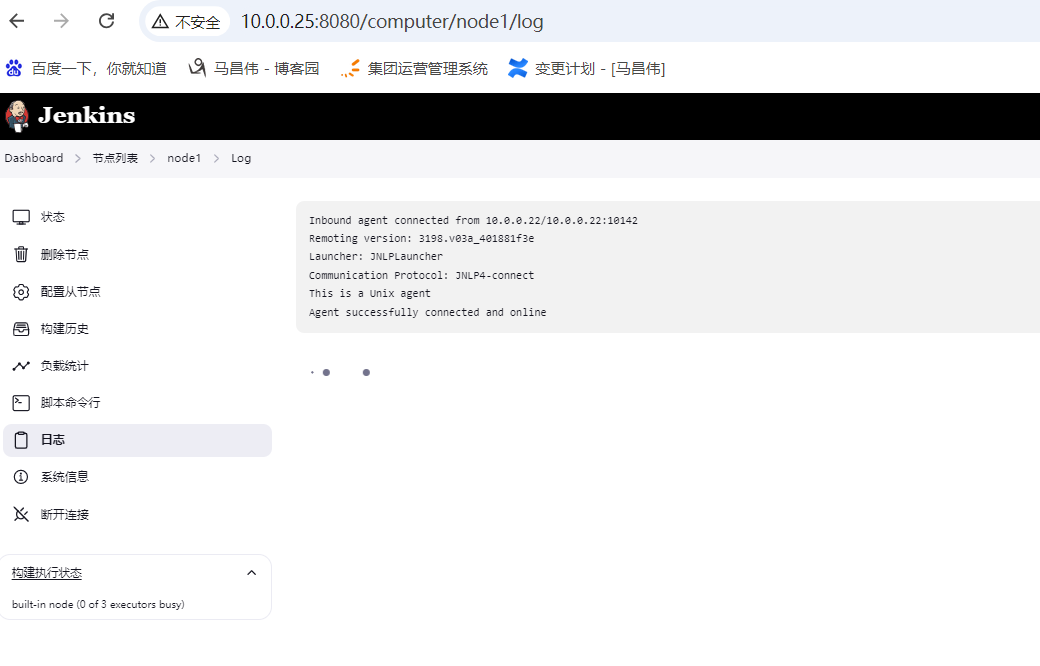

查看日志,连接成功以及连接中断都有记录

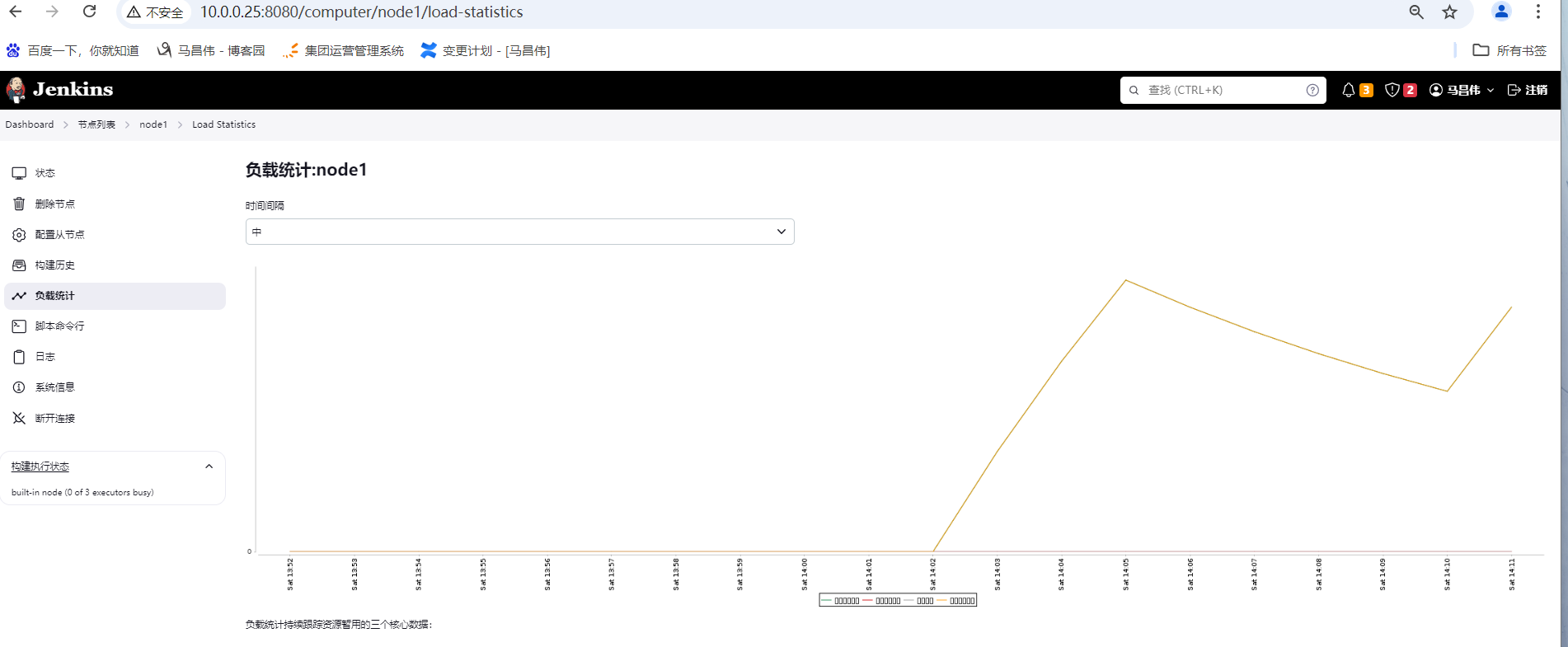

查看负载情况

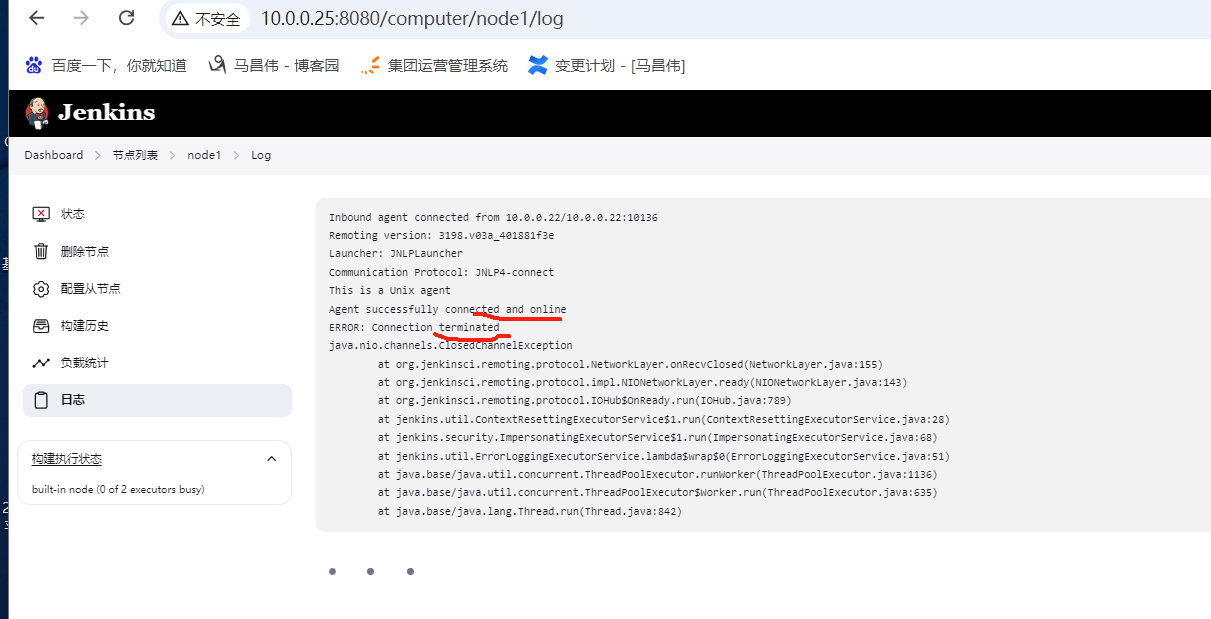

Jenkins agent机器上查看信息,以及日志

^C[root@mcw12 jenkinsagent]# ls agent.jar secret-file [root@mcw12 jenkinsagent]# ls /app/ remoting [root@mcw12 jenkinsagent]# ls /app/remoting/ jarCache logs [root@mcw12 jenkinsagent]# ls /app/remoting/jarCache/ 0F 1D 34 7F AD B2 CE DC EF [root@mcw12 jenkinsagent]# ls /app/remoting/logs/ remoting.log.0 [root@mcw12 jenkinsagent]# less /app/remoting/logs/remoting.log.0 [root@mcw12 jenkinsagent]# [root@mcw12 jenkinsagent]# cat /app/remoting/logs/remoting.log.0 Aug 24, 2024 2:01:56 PM hudson.remoting.Launcher createEngine INFO: Setting up agent: node1 Aug 24, 2024 2:01:56 PM hudson.remoting.Engine startEngine INFO: Using Remoting version: 3198.v03a_401881f3e Aug 24, 2024 2:01:56 PM org.jenkinsci.remoting.engine.WorkDirManager initializeWorkDir INFO: Using /app/remoting as a remoting work directory Aug 24, 2024 2:01:56 PM hudson.remoting.Launcher$CuiListener status INFO: Locating server among [http://10.0.0.25:8080/] Aug 24, 2024 2:01:57 PM org.jenkinsci.remoting.engine.JnlpAgentEndpointResolver resolve INFO: Remoting server accepts the following protocols: [JNLP4-connect, Ping] Aug 24, 2024 2:01:57 PM hudson.remoting.Launcher$CuiListener status INFO: Agent discovery successful Agent address: 10.0.0.25 Agent port: 9812 Identity: 52:34:14:5f:aa:87:d8:d8:c9:a3:23:e7:32:15:da:d8 Aug 24, 2024 2:01:57 PM hudson.remoting.Launcher$CuiListener status INFO: Handshaking Aug 24, 2024 2:01:57 PM hudson.remoting.Launcher$CuiListener status INFO: Connecting to 10.0.0.25:9812 Aug 24, 2024 2:01:57 PM hudson.remoting.Launcher$CuiListener status INFO: Server reports protocol JNLP4-connect-proxy not supported, skipping Aug 24, 2024 2:01:57 PM hudson.remoting.Launcher$CuiListener status INFO: Trying protocol: JNLP4-connect Aug 24, 2024 2:01:57 PM org.jenkinsci.remoting.protocol.impl.BIONetworkLayer$Reader run INFO: Waiting for ProtocolStack to start. 
Aug 24, 2024 2:01:57 PM hudson.remoting.Launcher$CuiListener status INFO: Remote identity confirmed: 52:34:14:5f:aa:87:d8:d8:c9:a3:23:e7:32:15:da:d8 Aug 24, 2024 2:01:57 PM hudson.remoting.Launcher$CuiListener status INFO: Connected [root@mcw12 jenkinsagent]#

Run the jar in the background

    [root@mcw12 jenkinsagent]# ls
    agent.jar secret-file
    [root@mcw12 jenkinsagent]# java -jar agent.jar -url http://10.0.0.25:8080/ -secret @secret-file -name node1 -workDir "/app" &
    [1] 4121
    [root@mcw12 jenkinsagent]# Aug 24, 2024 2:09:39 PM org.jenkinsci.remoting.engine.WorkDirManager initializeWorkDir
    INFO: Using /app/remoting as a remoting work directory
    Aug 24, 2024 2:09:39 PM org.jenkinsci.remoting.engine.WorkDirManager setupLogging
    INFO: Both error and output logs will be printed to /app/remoting
    Aug 24, 2024 2:09:39 PM hudson.remoting.Launcher createEngine
    INFO: Setting up agent: node1
    Aug 24, 2024 2:09:39 PM hudson.remoting.Engine startEngine
    INFO: Using Remoting version: 3198.v03a_401881f3e
    Aug 24, 2024 2:09:39 PM org.jenkinsci.remoting.engine.WorkDirManager initializeWorkDir
    INFO: Using /app/remoting as a remoting work directory
    Aug 24, 2024 2:09:39 PM hudson.remoting.Launcher$CuiListener status
    INFO: Locating server among [http://10.0.0.25:8080/]
    Aug 24, 2024 2:09:39 PM org.jenkinsci.remoting.engine.JnlpAgentEndpointResolver resolve
    INFO: Remoting server accepts the following protocols: [JNLP4-connect, Ping]
    Aug 24, 2024 2:09:39 PM hudson.remoting.Launcher$CuiListener status
    INFO: Agent discovery successful
      Agent address: 10.0.0.25
      Agent port: 9812
      Identity: 52:34:14:5f:aa:87:d8:d8:c9:a3:23:e7:32:15:da:d8
    Aug 24, 2024 2:09:39 PM hudson.remoting.Launcher$CuiListener status
    INFO: Handshaking
    Aug 24, 2024 2:09:39 PM hudson.remoting.Launcher$CuiListener status
    INFO: Connecting to 10.0.0.25:9812
    Aug 24, 2024 2:09:39 PM hudson.remoting.Launcher$CuiListener status
    INFO: Server reports protocol JNLP4-connect-proxy not supported, skipping
    Aug 24, 2024 2:09:39 PM hudson.remoting.Launcher$CuiListener status
    INFO: Trying protocol: JNLP4-connect
    Aug 24, 2024 2:09:39 PM org.jenkinsci.remoting.protocol.impl.BIONetworkLayer$Reader run
    INFO: Waiting for ProtocolStack to start.
    Aug 24, 2024 2:09:39 PM hudson.remoting.Launcher$CuiListener status
    INFO: Remote identity confirmed: 52:34:14:5f:aa:87:d8:d8:c9:a3:23:e7:32:15:da:d8
    Aug 24, 2024 2:09:39 PM hudson.remoting.Launcher$CuiListener status
    INFO: Connected
    [root@mcw12 jenkinsagent]#

Check again: node1's status is back to normal

Connected

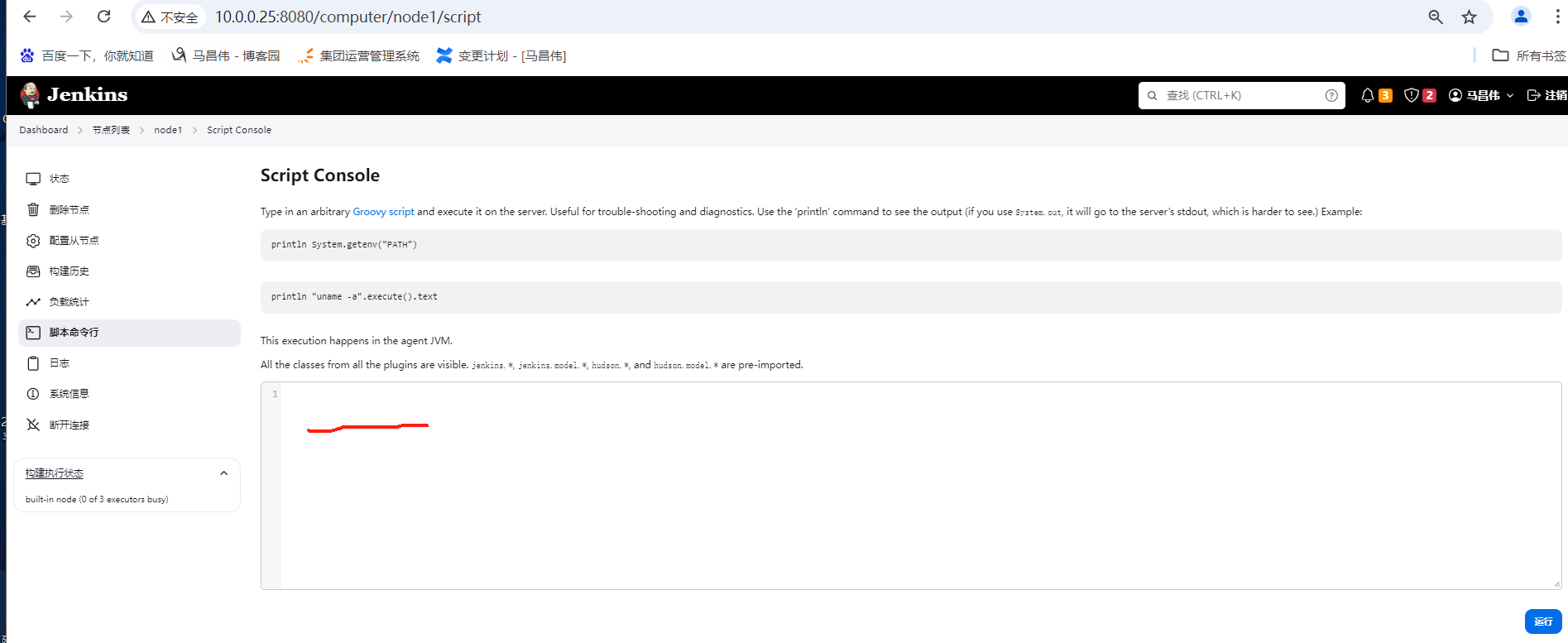

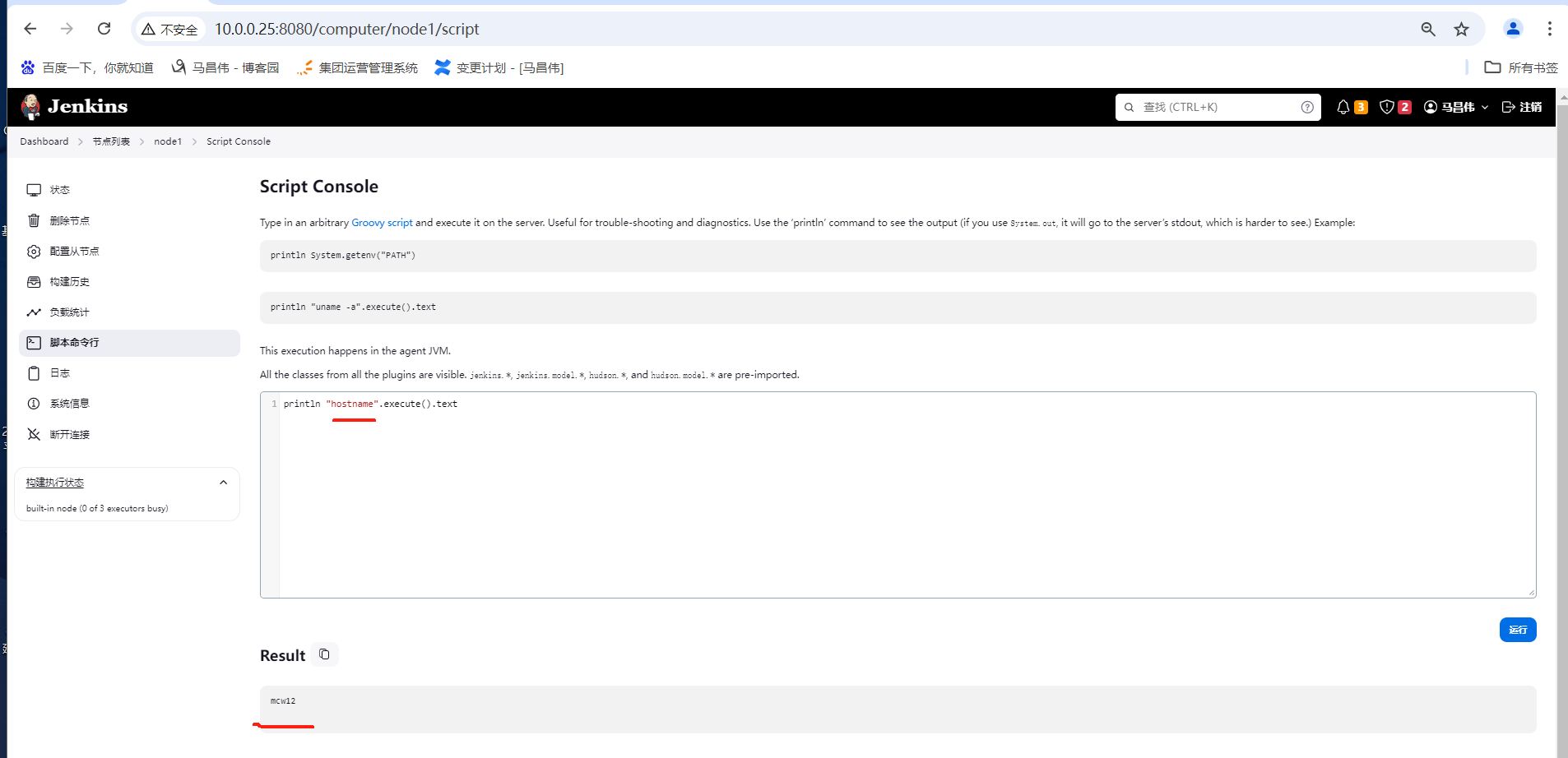

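Let's try the script console

Running the command from the example it provides shows that the command was indeed executed on node1, that is, mcw12, and returned a result.

Log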

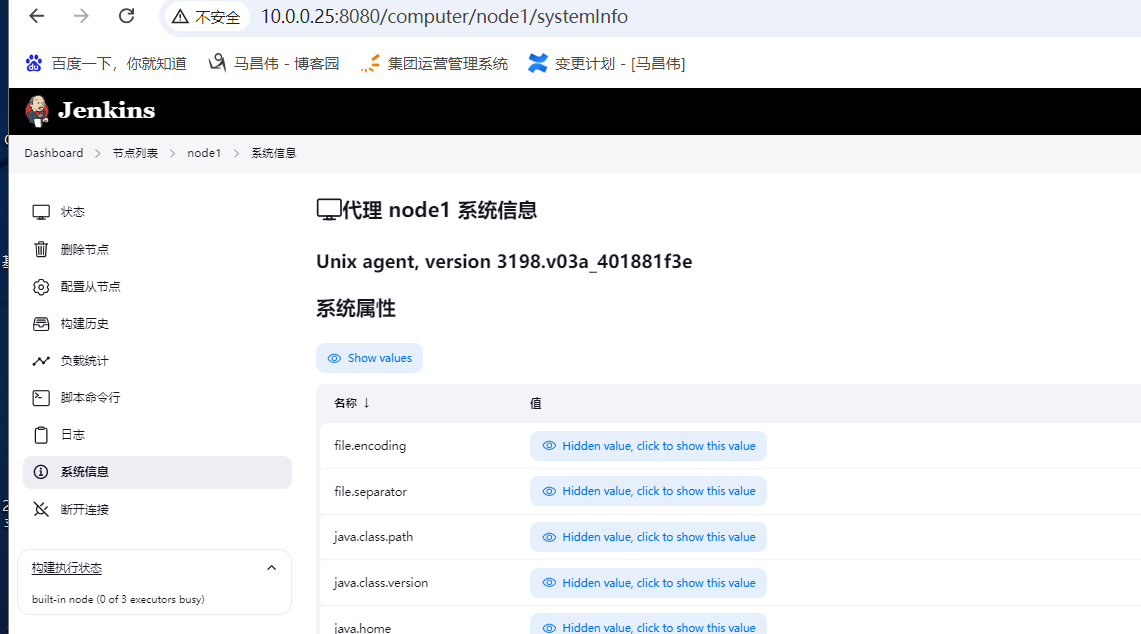

一些系统信息

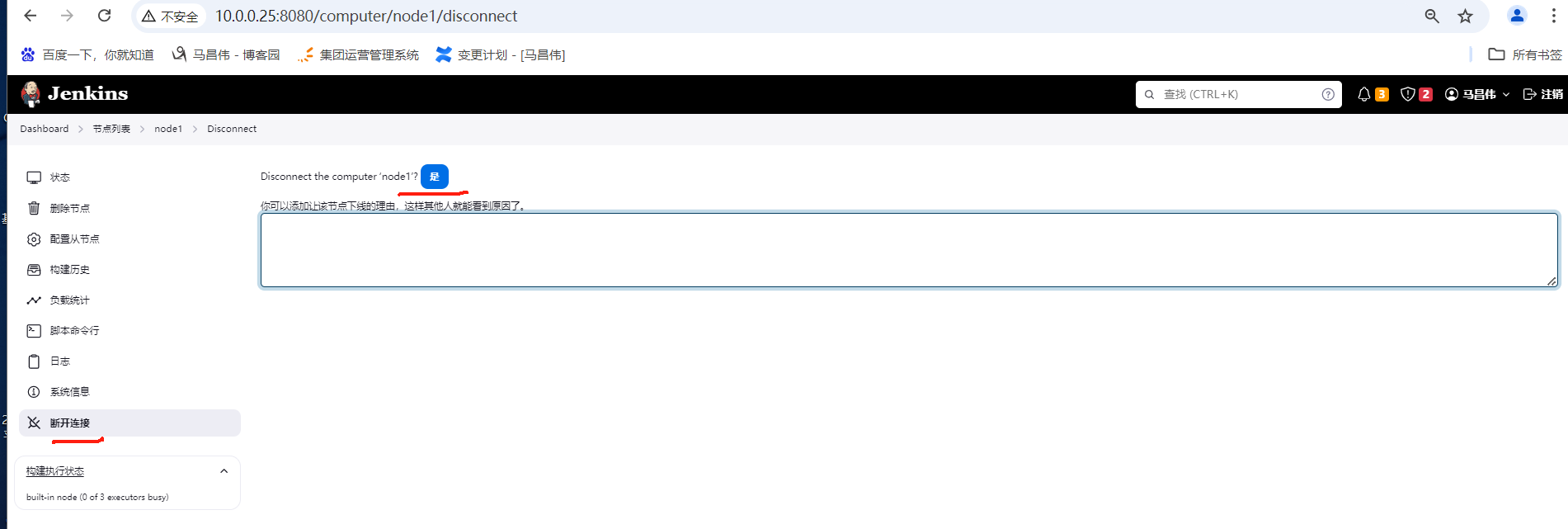

也可以点击断开连接

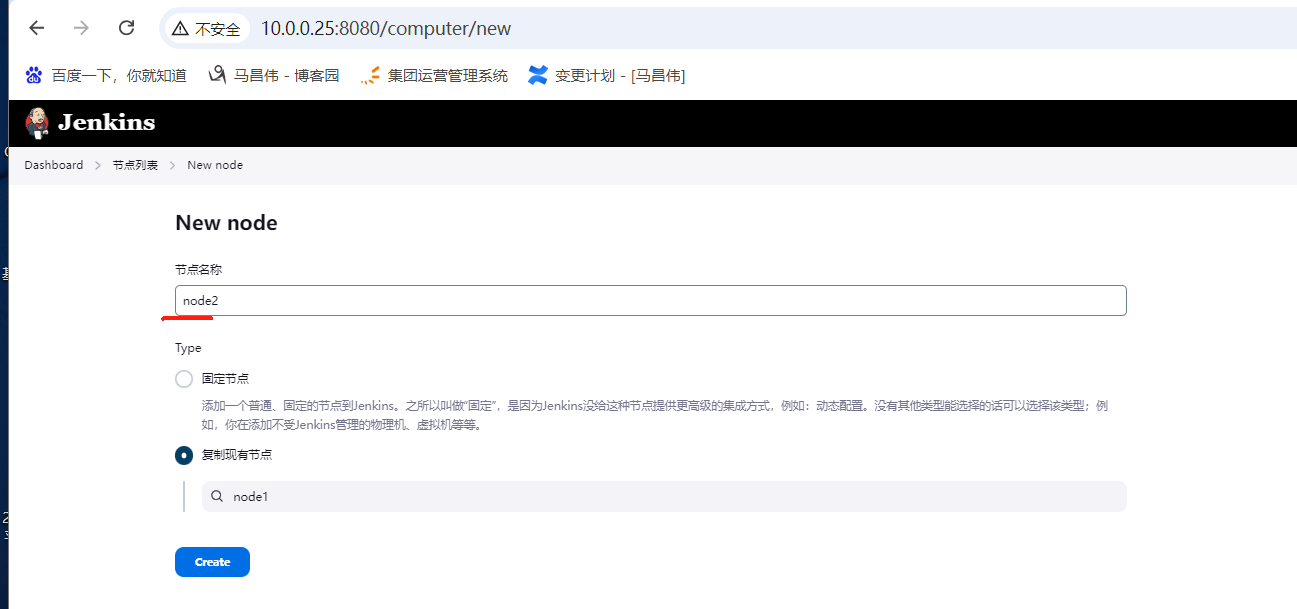

Copy the existing node1 to create node2

Create it, then save without changing the configuration.

The JDK version turns out to be too old

    [root@mcw14 opt]# echo 2ebe228de99679c32e9a2a8ea459f450264b71454db97da0bdffbd6161e6e1f9 > secret-file
    [root@mcw14 opt]# curl -sO http://10.0.0.25:8080/jnlpJars/agent.jar
    [root@mcw14 opt]# java -version
    openjdk version "1.8.0_372"
    OpenJDK Runtime Environment (build 1.8.0_372-b07)
    OpenJDK 64-Bit Server VM (build 25.372-b07, mixed mode)
    [root@mcw14 opt]# mkdir /app
    [root@mcw14 opt]# java -jar agent.jar -url http://10.0.0.25:8080/ -secret @secret-file -name node2 -workDir "/app"
    Error: A JNI error has occurred, please check your installation and try again
    Exception in thread "main" java.lang.UnsupportedClassVersionError: hudson/remoting/Launcher has been compiled by a more recent version of the Java Runtime (class file version 55.0), this version of the Java Runtime only recognizes class file versions up to 52.0
        at java.lang.ClassLoader.defineClass1(Native Method)
        at java.lang.ClassLoader.defineClass(ClassLoader.java:756)
        at java.security.SecureClassLoader.defineClass(SecureClassLoader.java:142)
        at java.net.URLClassLoader.defineClass(URLClassLoader.java:473)
        at java.net.URLClassLoader.access$100(URLClassLoader.java:74)
        at java.net.URLClassLoader$1.run(URLClassLoader.java:369)
        at java.net.URLClassLoader$1.run(URLClassLoader.java:363)
        at java.security.AccessController.doPrivileged(Native Method)
        at java.net.URLClassLoader.findClass(URLClassLoader.java:362)
        at java.lang.ClassLoader.loadClass(ClassLoader.java:418)
        at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:352)
        at java.lang.ClassLoader.loadClass(ClassLoader.java:351)
        at sun.launcher.LauncherHelper.checkAndLoadMain(LauncherHelper.java:621)
    [root@mcw14 opt]#

After upgrading the JDK to the same version as the master and running the command again, the node joins the cluster. JDK 1.8 fails because agent.jar was compiled to class file version 55.0, which corresponds to Java 11, while Java 8 only reads class files up to version 52.0. The master runs Java 17; whether the node must match that version exactly, I am not sure.
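The class file versions in that error message translate directly into Java releases: the major version is the Java release number plus 44. A short sketch of the mapping, with a helper that reads the version from the first bytes of a .class file (both function names are illustrative, not from any Jenkins tool):

```python
import struct

JAVA_CLASS_MAGIC = 0xCAFEBABE


def java_release_for_major(major):
    """Map a class file major version to the Java release that produces it."""
    if major < 45:
        raise ValueError("not a valid class file major version")
    return major - 44


def read_class_major(header):
    """Return the major version from the first 8 bytes of a .class file."""
    # Layout: u4 magic, u2 minor_version, u2 major_version (big-endian).
    magic, _minor, major = struct.unpack(">IHH", header[:8])
    if magic != JAVA_CLASS_MAGIC:
        raise ValueError("not a Java class file")
    return major


print(java_release_for_major(55))  # version required by agent.jar in the error above
print(java_release_for_major(52))  # highest version the installed JDK 1.8 understands
```

So the error only says that the agent needs at least Java 11; it does not by itself show that the agent must run the same release as the master.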

    [root@mcw14 opt]# java -jar agent.jar -url http://10.0.0.25:8080/ -secret @secret-file -name node2 -workDir "/app" &
    [1] 2304
    [root@mcw14 opt]# Aug 25, 2024 12:05:35 AM org.jenkinsci.remoting.engine.WorkDirManager initializeWorkDir
    INFO: Using /app/remoting as a remoting work directory
    Aug 25, 2024 12:05:35 AM org.jenkinsci.remoting.engine.WorkDirManager setupLogging
    INFO: Both error and output logs will be printed to /app/remoting
    Aug 25, 2024 12:05:35 AM hudson.remoting.Launcher createEngine
    INFO: Setting up agent: node2
    Aug 25, 2024 12:05:35 AM hudson.remoting.Engine startEngine
    INFO: Using Remoting version: 3198.v03a_401881f3e
    Aug 25, 2024 12:05:35 AM org.jenkinsci.remoting.engine.WorkDirManager initializeWorkDir
    INFO: Using /app/remoting as a remoting work directory
    Aug 25, 2024 12:05:36 AM hudson.remoting.Launcher$CuiListener status
    INFO: Locating server among [http://10.0.0.25:8080/]
    Aug 25, 2024 12:05:36 AM org.jenkinsci.remoting.engine.JnlpAgentEndpointResolver resolve
    INFO: Remoting server accepts the following protocols: [JNLP4-connect, Ping]
    Aug 25, 2024 12:05:36 AM hudson.remoting.Launcher$CuiListener status
    INFO: Agent discovery successful
      Agent address: 10.0.0.25
      Agent port: 9812
      Identity: 52:34:14:5f:aa:87:d8:d8:c9:a3:23:e7:32:15:da:d8
    Aug 25, 2024 12:05:36 AM hudson.remoting.Launcher$CuiListener status
    INFO: Handshaking
    Aug 25, 2024 12:05:36 AM hudson.remoting.Launcher$CuiListener status
    INFO: Connecting to 10.0.0.25:9812
    Aug 25, 2024 12:05:36 AM hudson.remoting.Launcher$CuiListener status
    INFO: Server reports protocol JNLP4-connect-proxy not supported, skipping
    Aug 25, 2024 12:05:36 AM hudson.remoting.Launcher$CuiListener status
    INFO: Trying protocol: JNLP4-connect
    Aug 25, 2024 12:05:36 AM org.jenkinsci.remoting.protocol.impl.BIONetworkLayer$Reader run
    INFO: Waiting for ProtocolStack to start.
    Aug 25, 2024 12:05:36 AM hudson.remoting.Launcher$CuiListener status
    INFO: Remote identity confirmed: 52:34:14:5f:aa:87:d8:d8:c9:a3:23:e7:32:15:da:d8
    Aug 25, 2024 12:05:36 AM hudson.remoting.Launcher$CuiListener status
    INFO: Connected
    [root@mcw14 opt]#

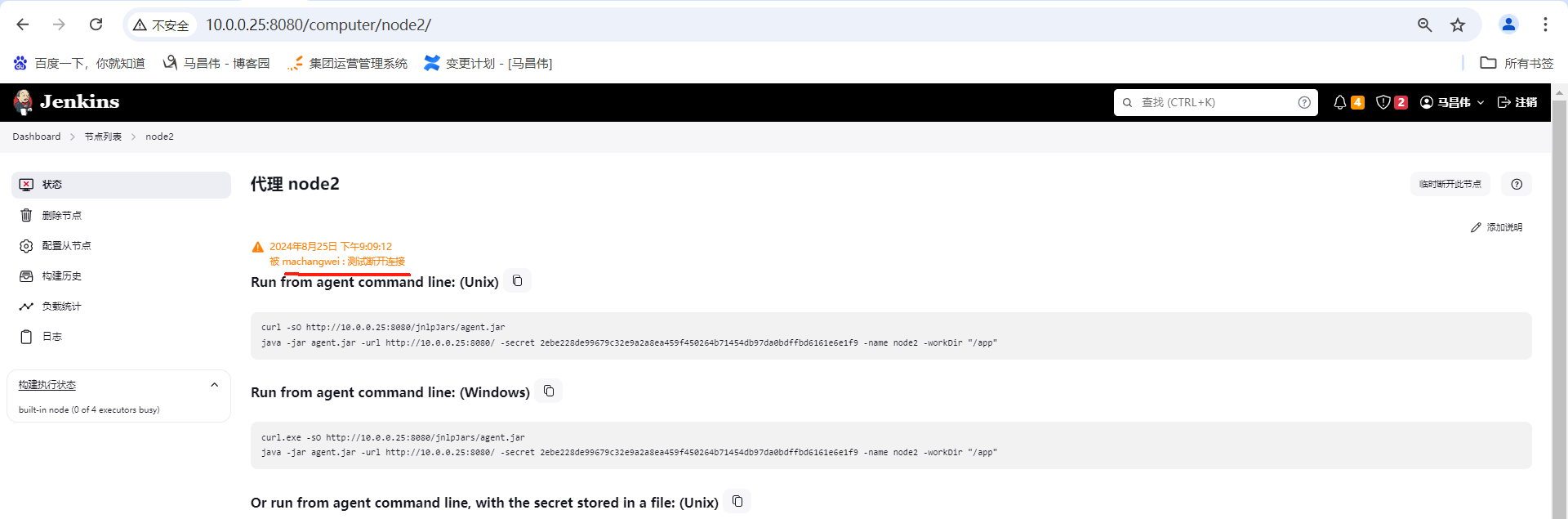

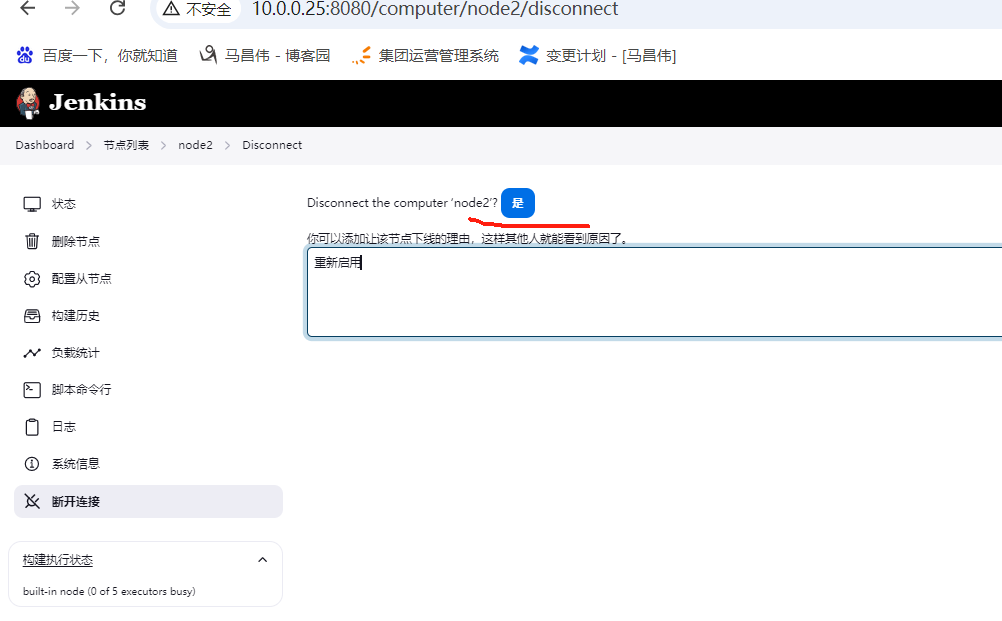

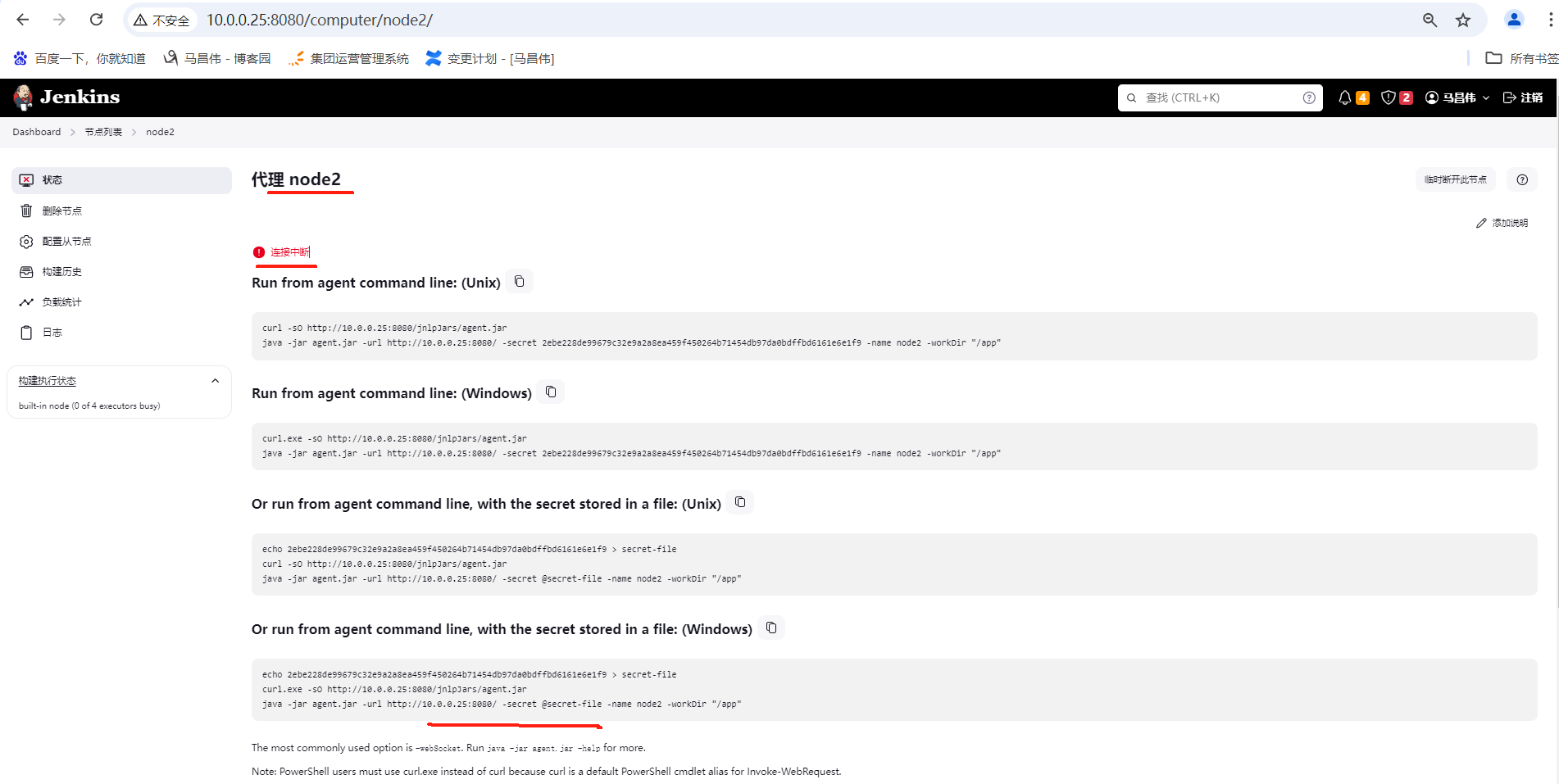

测试断开连接:

重新连接启用

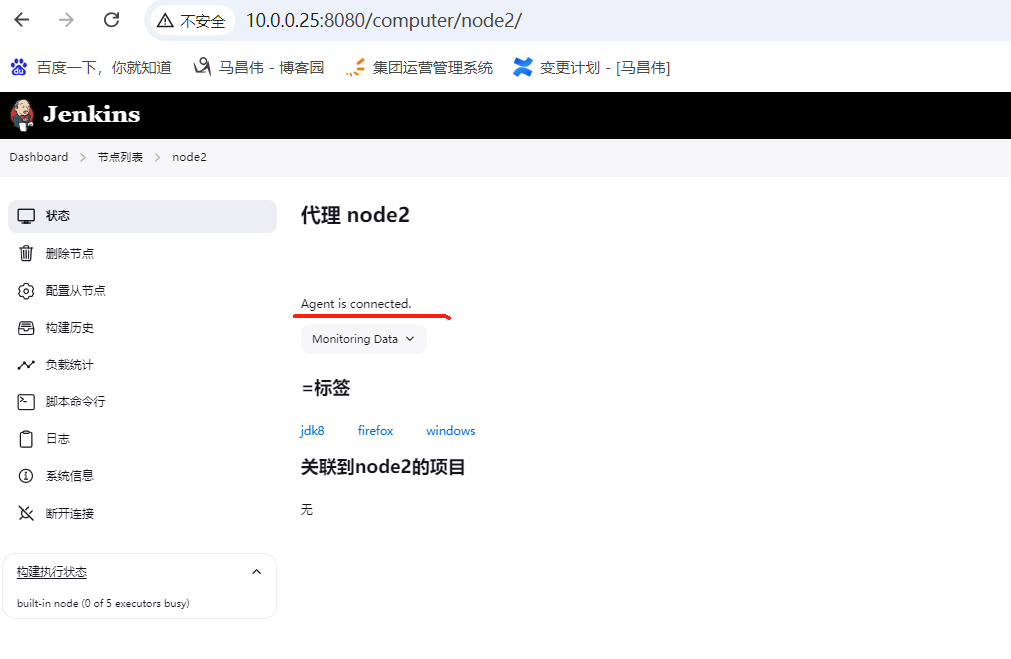

再过一会,可以看到,连接上了

已经恢复为正常可用节点

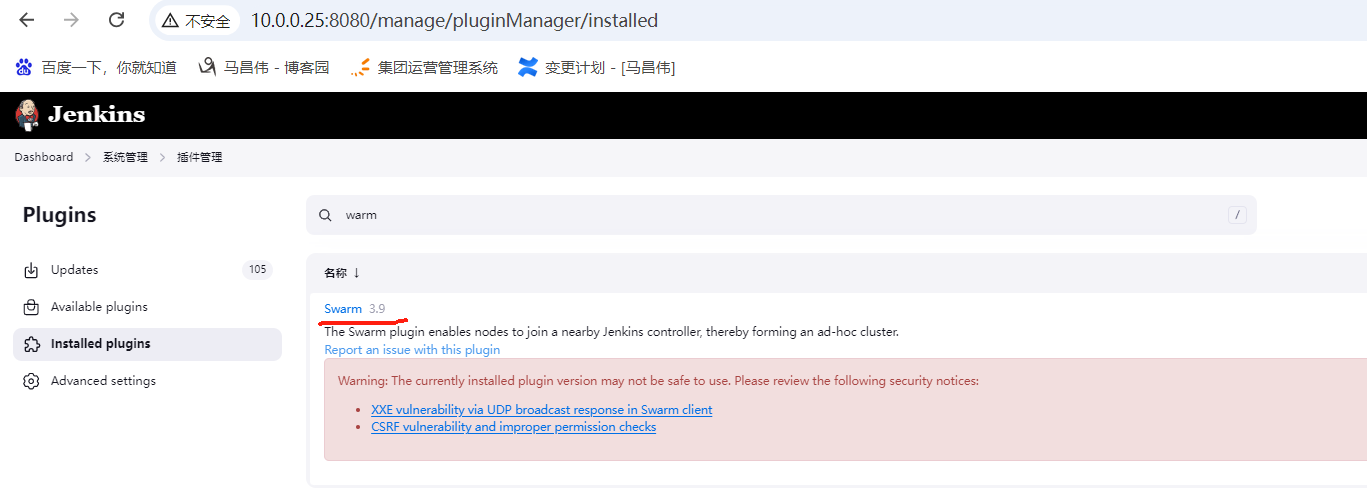

Adding an agent via the Swarm plugin

Swarm plugin: https://plugins.jenkins.io/swarm

Swarm client: https://repo.jenkins-ci.org/releases/org/jenkins-ci/plugins/swarm-client/3.9/swarm-client-3.9.jar

Install the plugin

Download the client on a machine that has a JDK installed

    [root@mcw14 ~]# java -version
    openjdk version "1.8.0_372"
    OpenJDK Runtime Environment (build 1.8.0_372-b07)
    OpenJDK 64-Bit Server VM (build 25.372-b07, mixed mode)
    [root@mcw14 ~]# cd /opt/
    [root@mcw14 opt]# ls
    otp_src_23.3 otp_src_23.3.tar.gz rabbitmq-server-3.8.16-1.el7.noarch.rpm sonarqube sonarqube-7.6.zip
    [root@mcw14 opt]# wget https://repo.jenkins-ci.org/releases/org/jenkins-ci/plugins/swarm-client/3.9/swarm-client-3.9.jar
    [root@mcw14 opt]# ls
    otp_src_23.3 otp_src_23.3.tar.gz rabbitmq-server-3.8.16-1.el7.noarch.rpm sonarqube sonarqube-7.6.zip swarm-client-3.9.jar
    [root@mcw14 opt]#

Start the jar. Adding the node failed and is unresolved for now; this approach will be revisited later. One thing to check: the other agent commands in this article use http://10.0.0.25:8080/ without a /jenkins path, so the URL passed to -master may be part of the problem.

    [root@mcw14 opt]# java -jar swarm-client-3.9.jar -username machangwei -password 123456 -master http://10.0.0.25:8080/jenkins -name swarm-node
    Aug 25, 2024 12:03:54 AM hudson.plugins.swarm.Client main
    INFO: Client.main invoked with: [-username machangwei -password 123456 -master http://10.0.0.25:8080/jenkins -name swarm-node]
    Aug 25, 2024 12:03:54 AM hudson.plugins.swarm.Client run
    INFO: Discovering Jenkins master
    SLF4J: Failed to load class "org.slf4j.impl.StaticLoggerBinder".
    SLF4J: Defaulting to no-operation (NOP) logger implementation
    SLF4J: See http://www.slf4j.org/codes.html#StaticLoggerBinder for further details.
    Aug 25, 2024 12:03:55 AM hudson.plugins.swarm.SwarmClient discoverFromMasterUrl
    SEVERE: Failed to fetch swarm information from Jenkins, plugin not installed?
    Aug 25, 2024 12:03:55 AM hudson.plugins.swarm.Client run
    SEVERE: RetryException occurred
    hudson.plugins.swarm.RetryException: Failed to fetch swarm information from Jenkins, plugin not installed?
        at hudson.plugins.swarm.SwarmClient.discoverFromMasterUrl(SwarmClient.java:229)
        at hudson.plugins.swarm.Client.run(Client.java:146)
        at hudson.plugins.swarm.Client.main(Client.java:119)
    Aug 25, 2024 12:03:55 AM hudson.plugins.swarm.Client run
    INFO: Retrying in 10 seconds

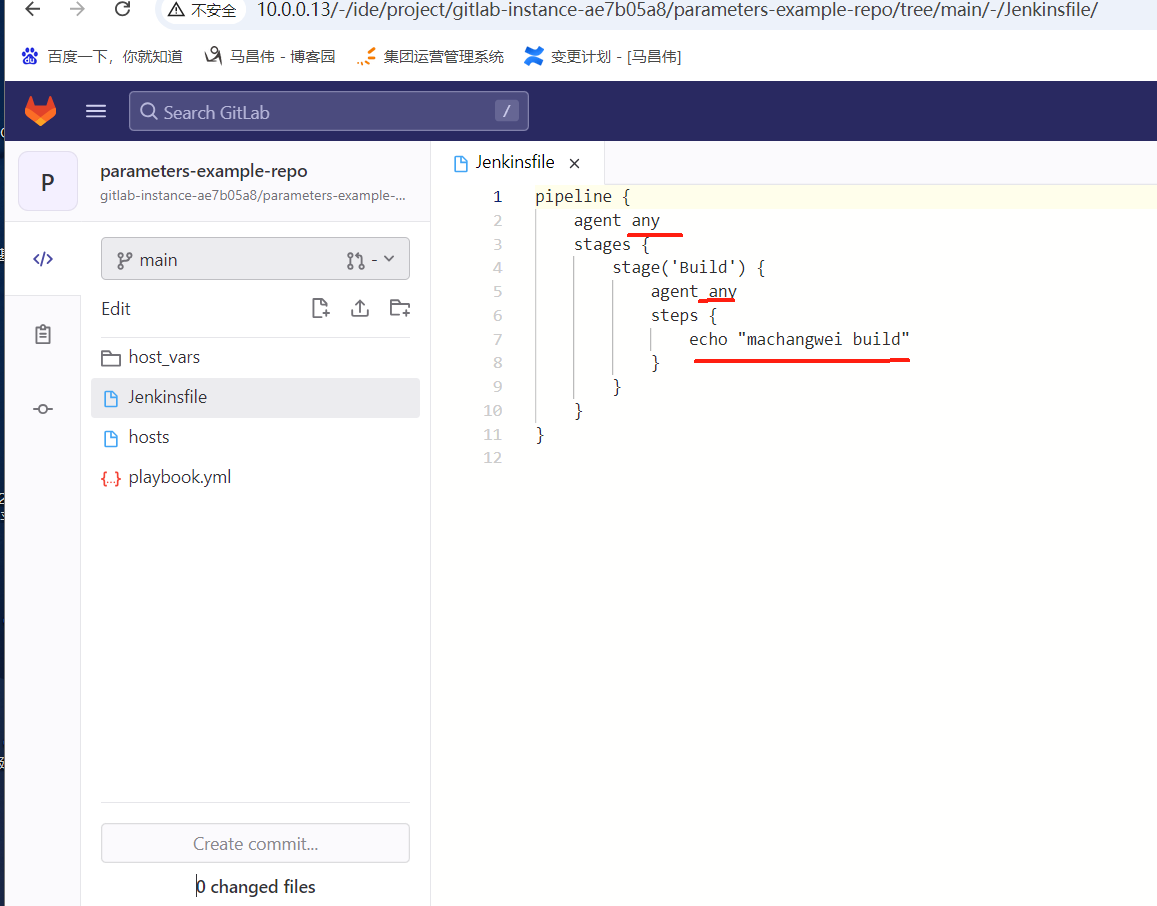

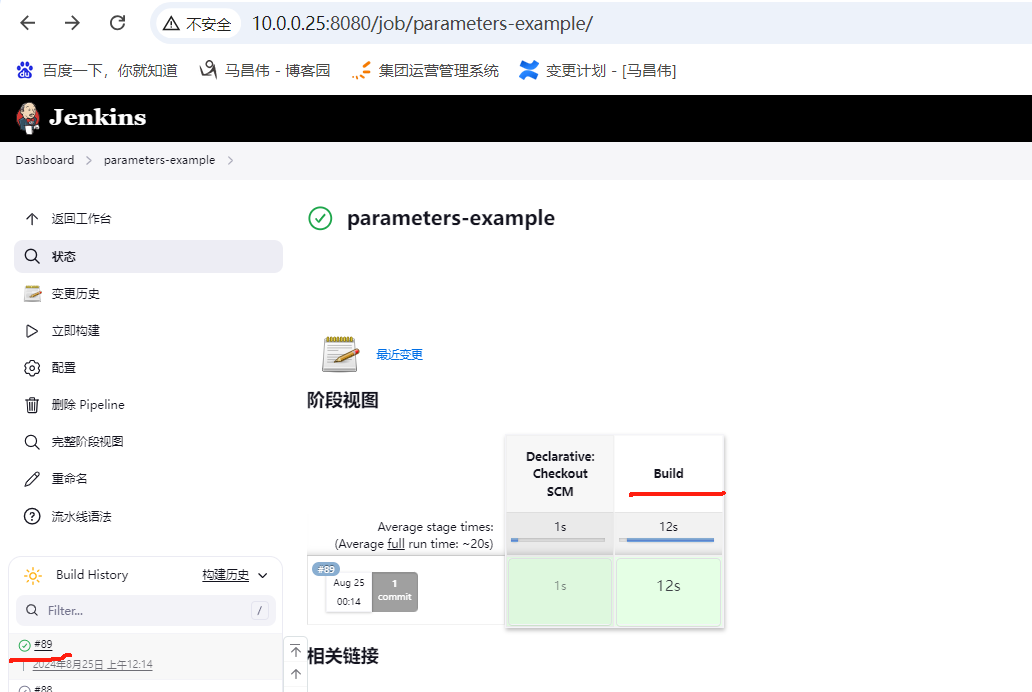

The agent section in detail

any

There are currently three nodes; node1 and node2 have not run any jobs yet.

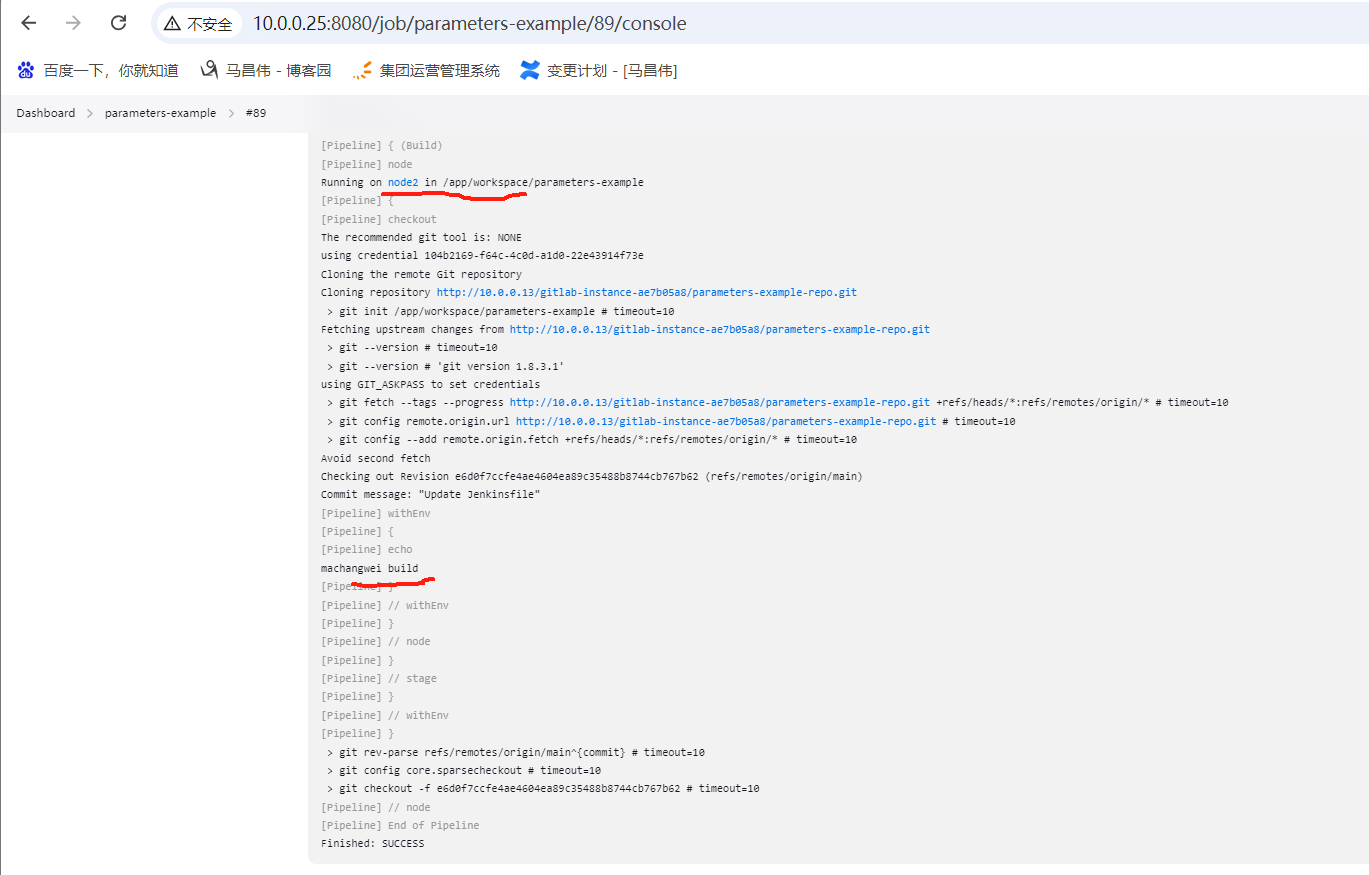

Modify the file and click Build Now

The build succeeds

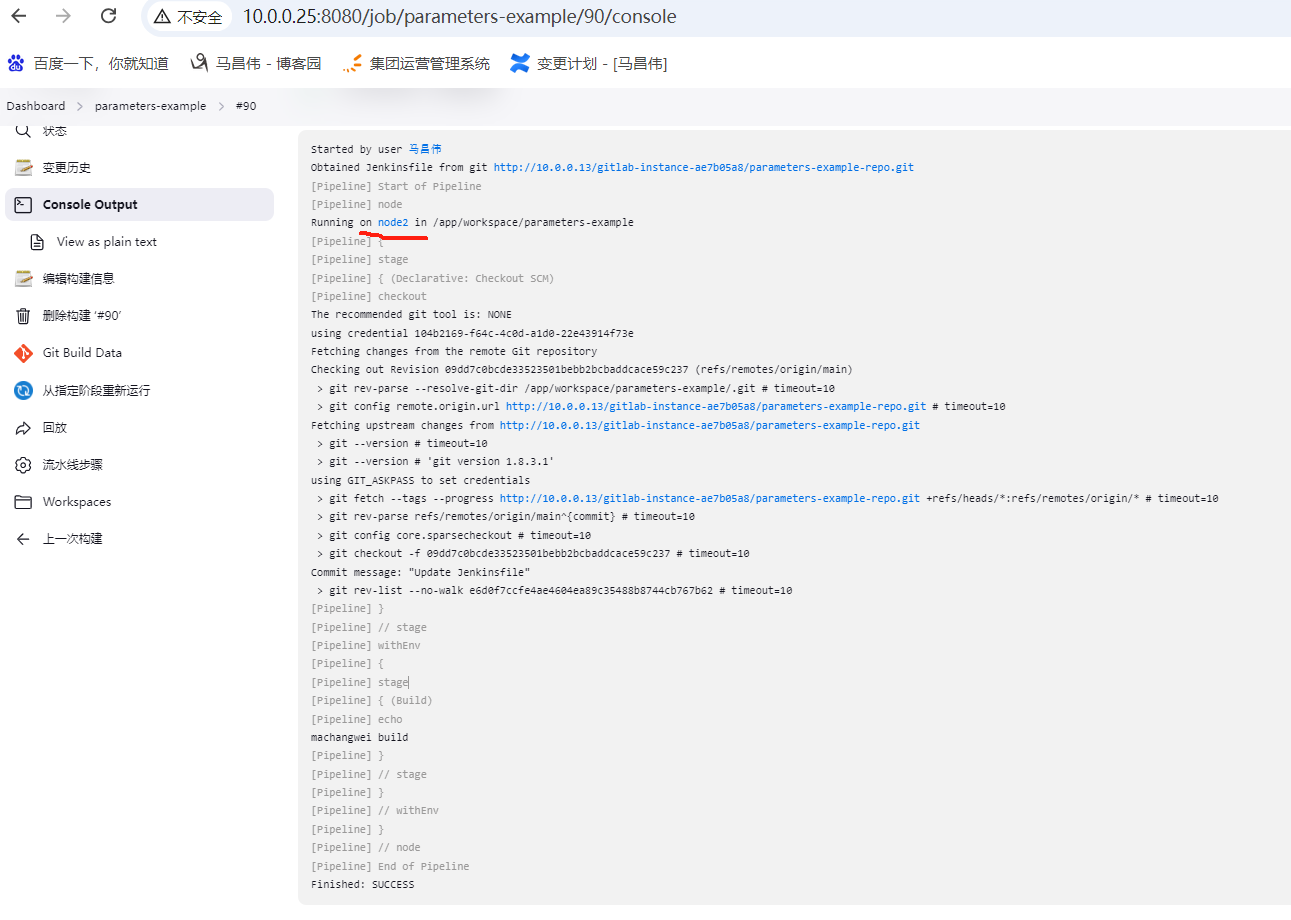

查看日志,运行在node2

查看node2的工作目录,workspace也是新建的目录,

[root@mcw14 opt]# ls /app/ remoting workspace [root@mcw14 opt]# ls /app/remoting/ jarCache logs [root@mcw14 opt]# ls /app/workspace/ parameters-example parameters-example@tmp [root@mcw14 opt]#

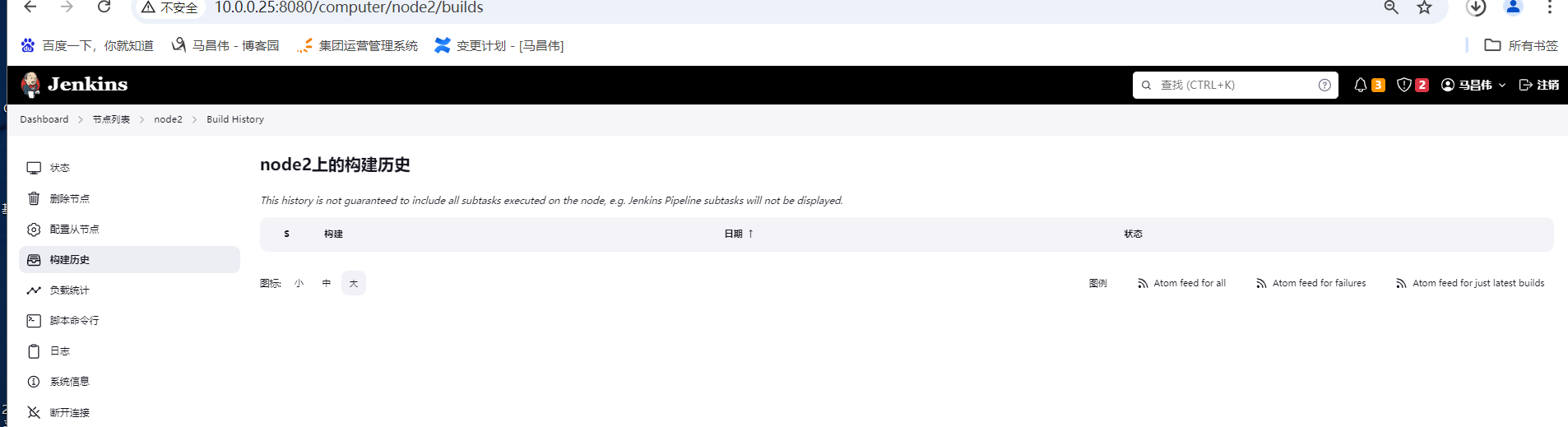

此时查看下node2构建历史,没看到

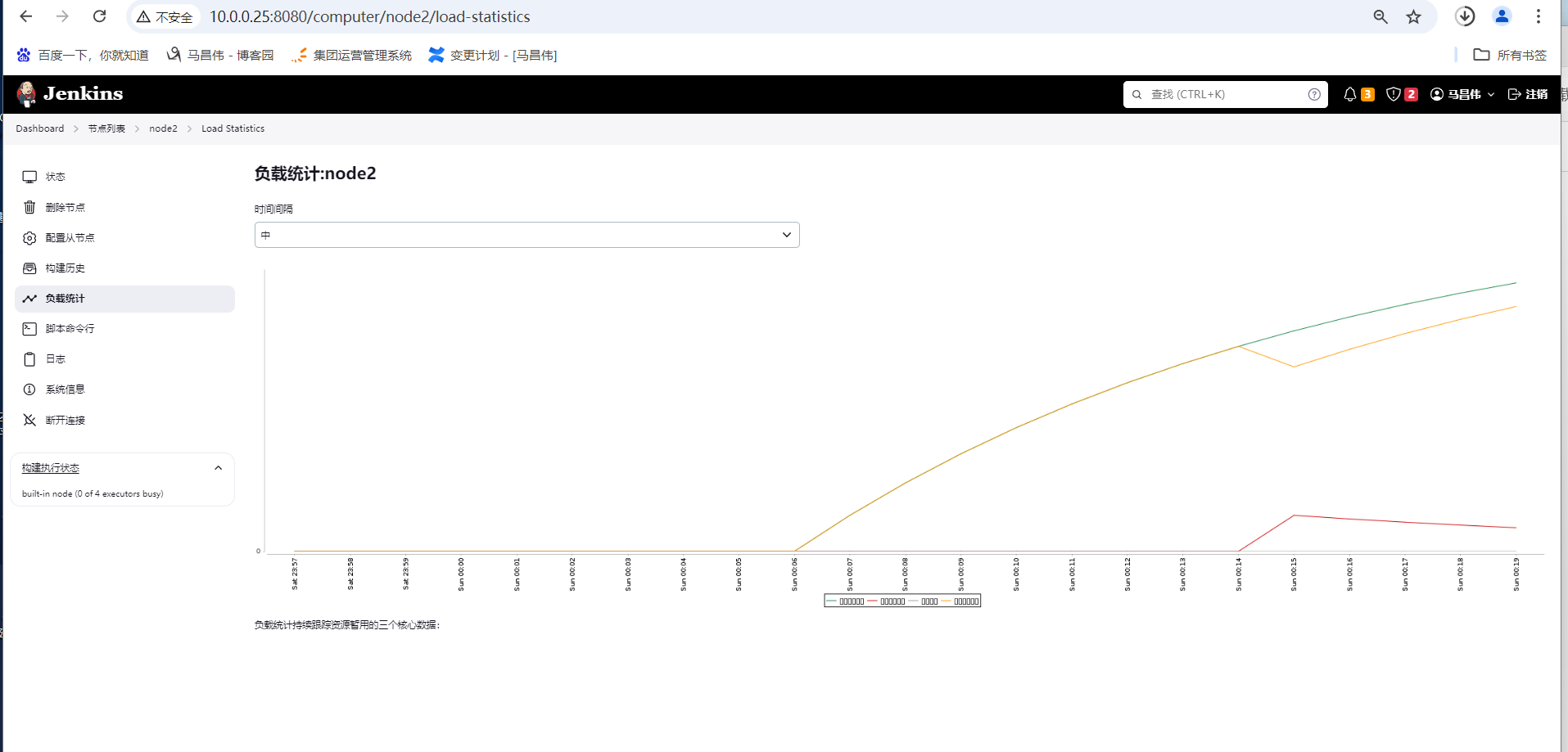

不过负载统计可以看到刚刚在猛增

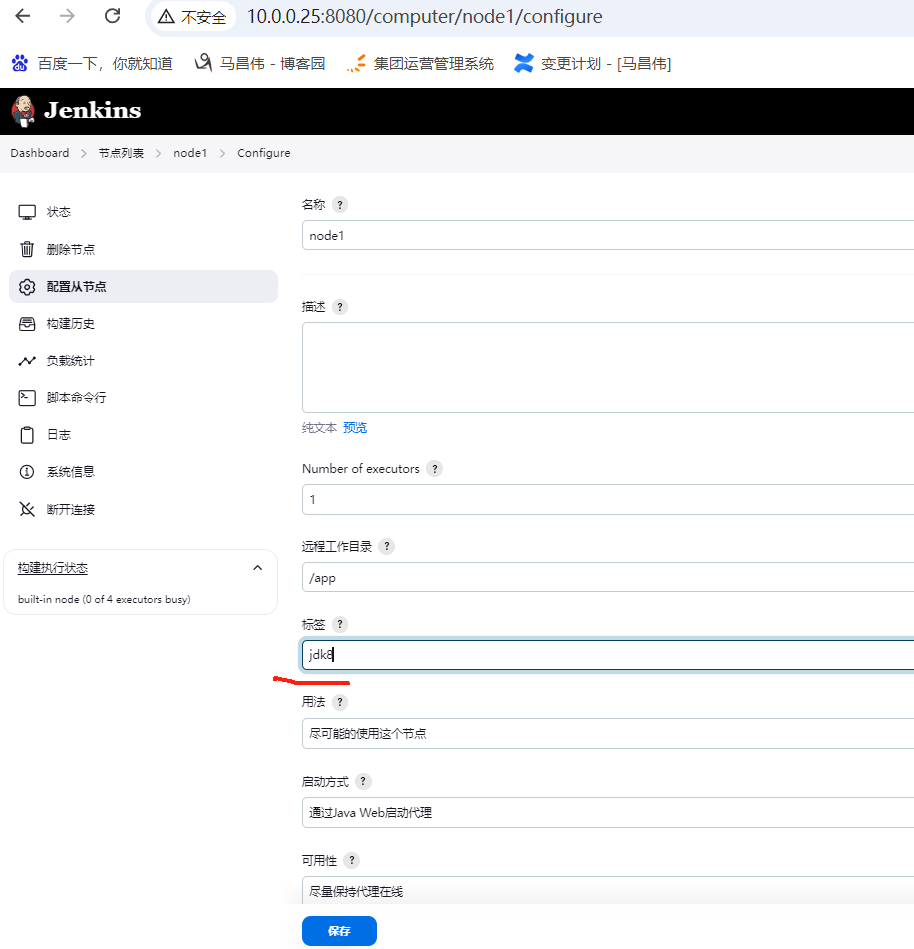

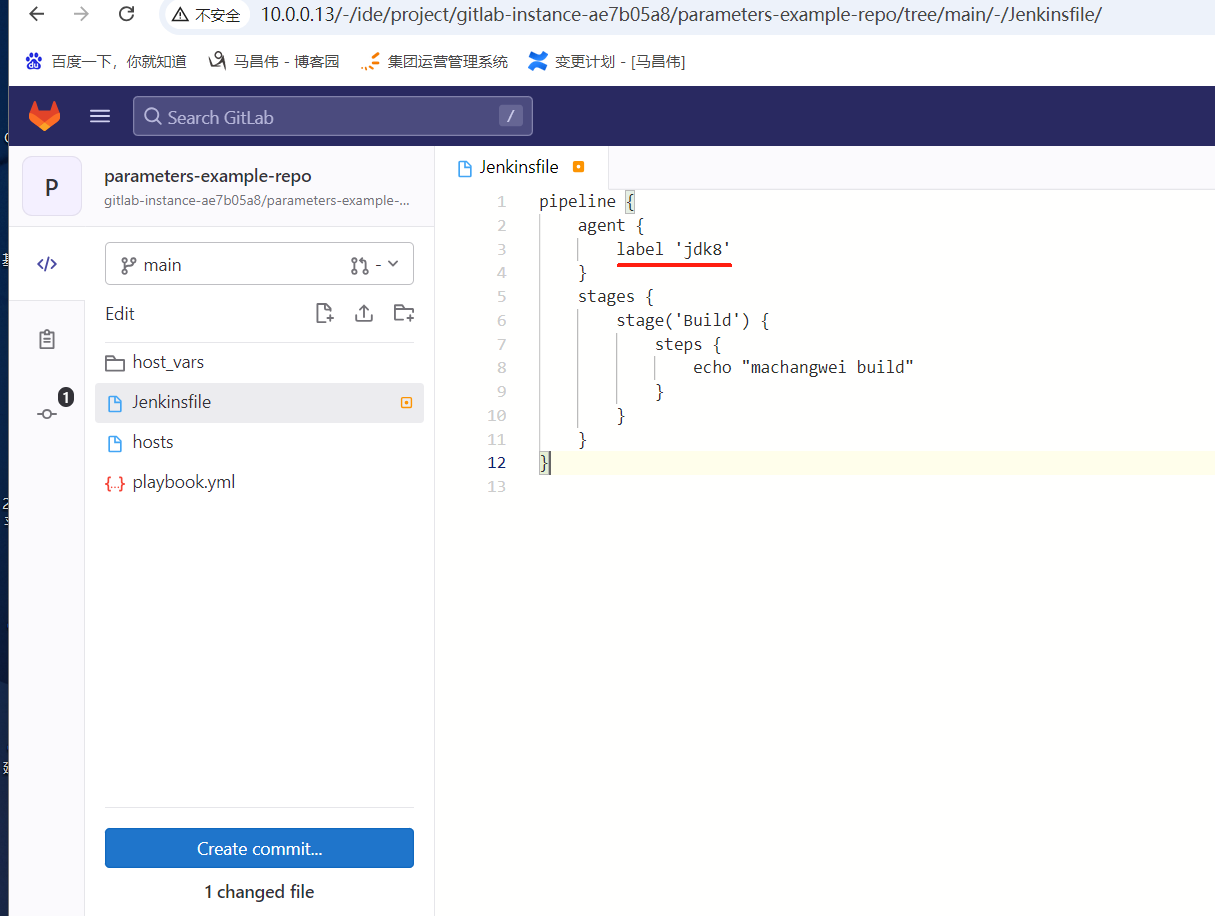

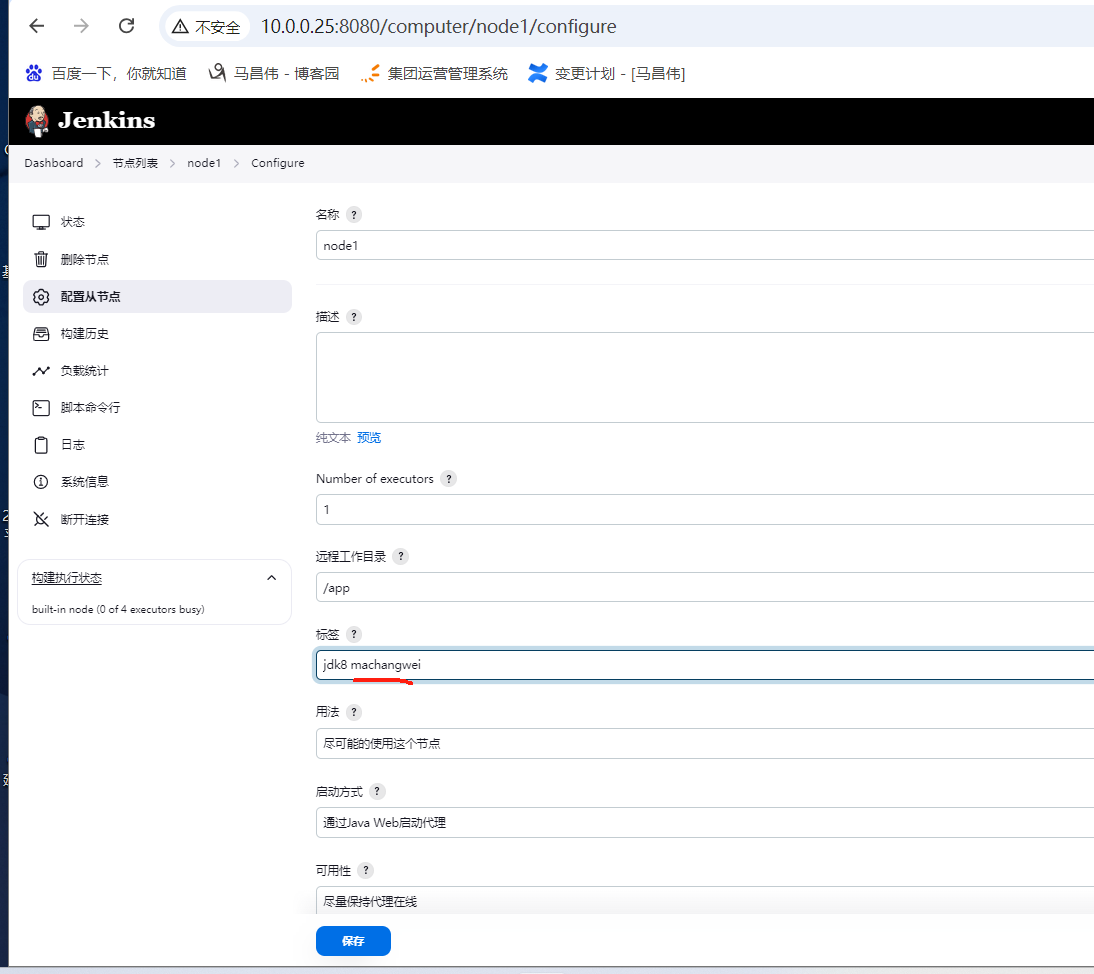

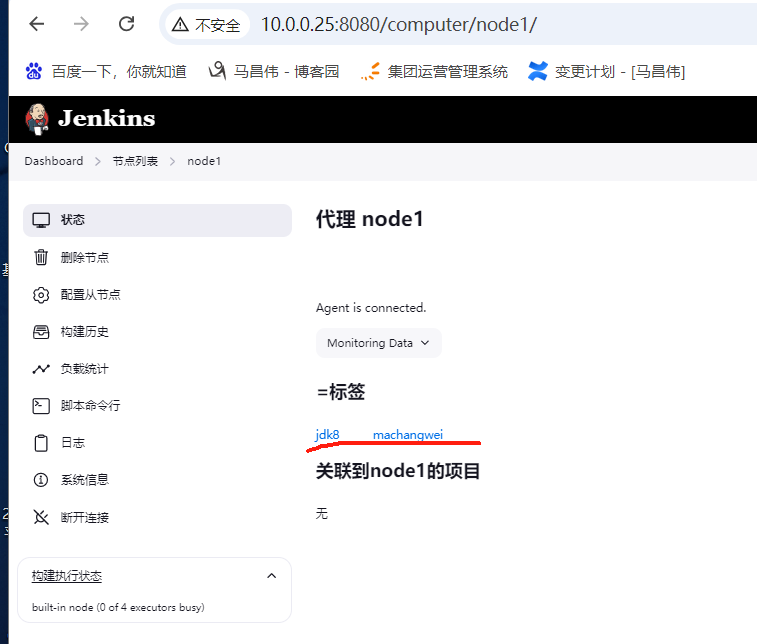

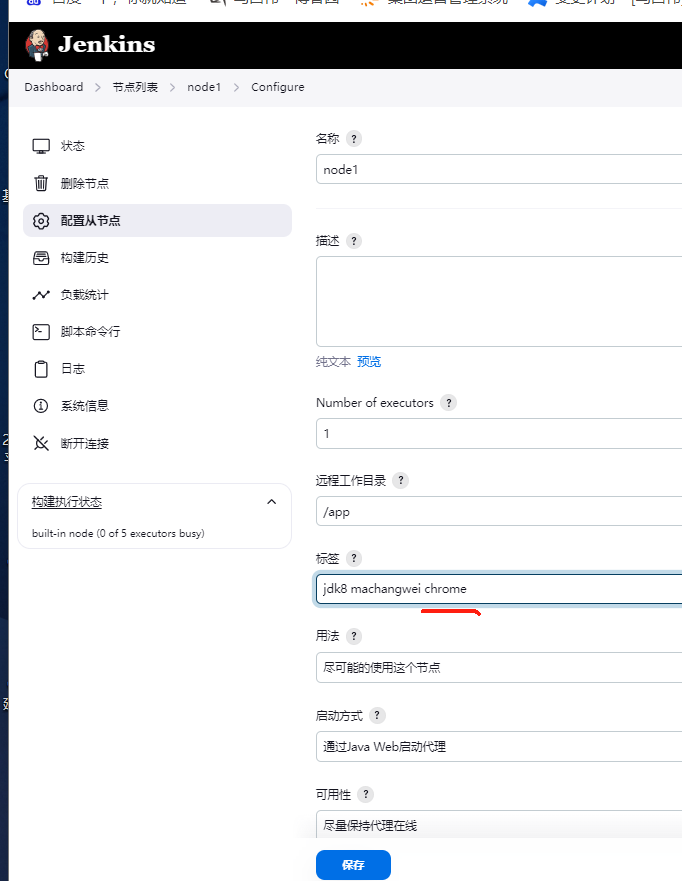

Selecting an agent by label

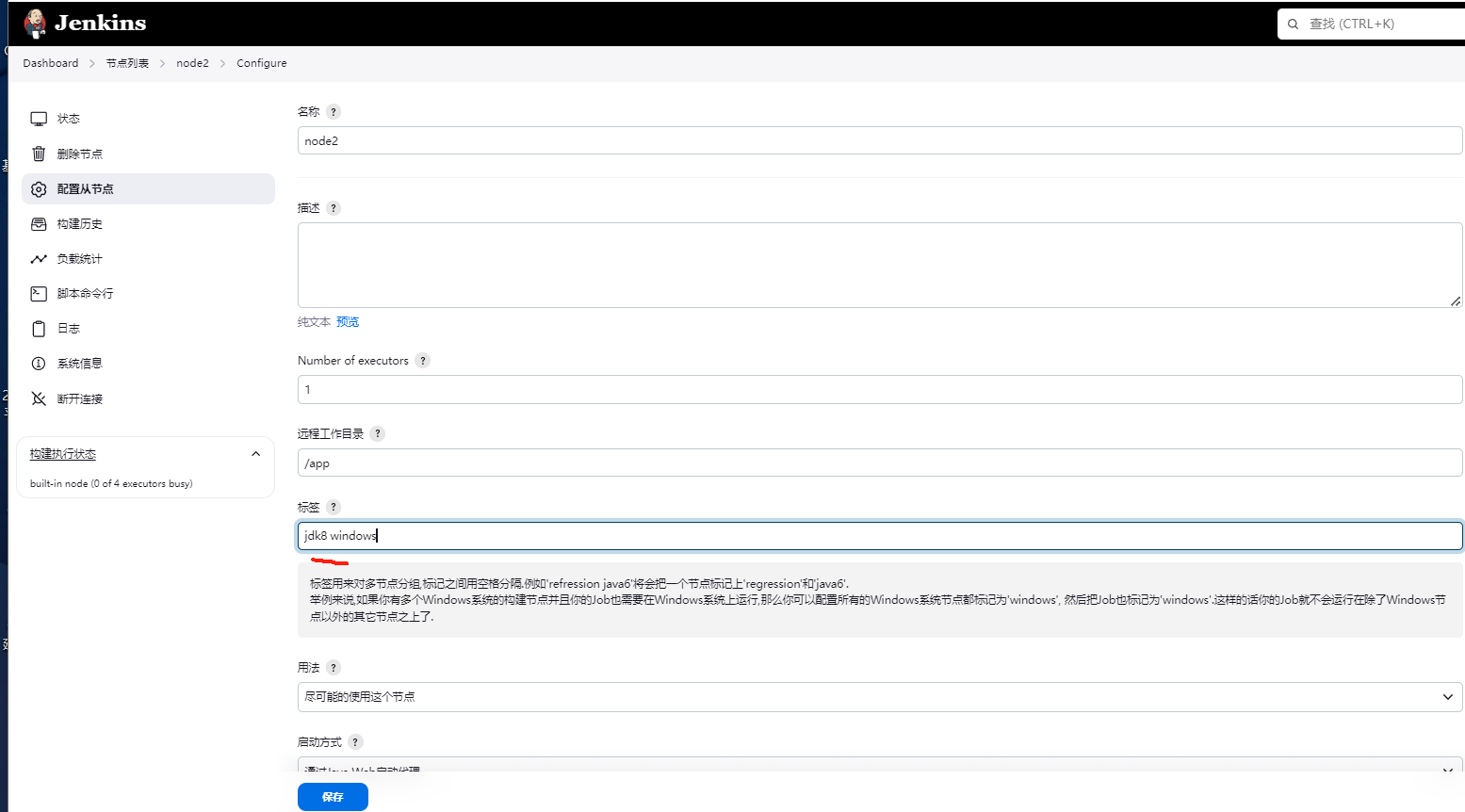

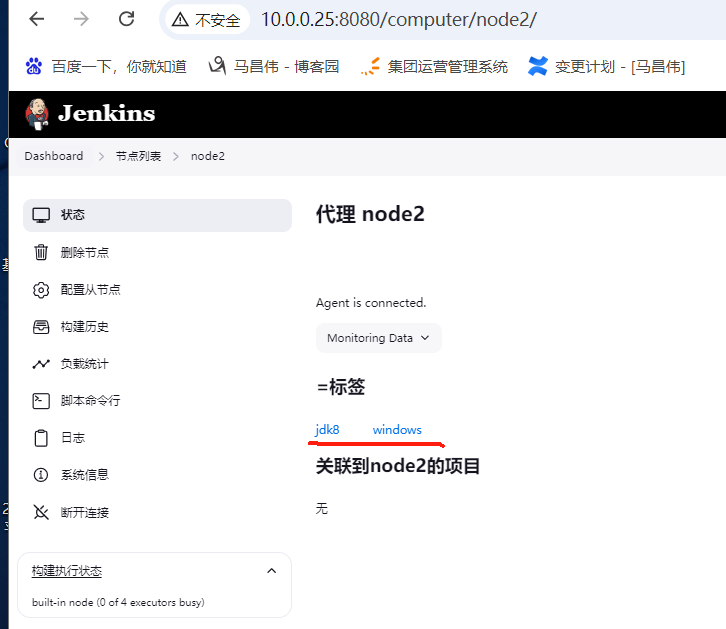

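Add labels to node2

node1 has only one label; node2 has two

Specify the jdk8 label, which matches node1 or node2

    pipeline {
        agent {
            label 'jdk8'
        }
        stages {
            stage('Build') {
                steps {
                    echo "machangwei build"
                }
            }
        }
    }

Click build; it ran on node2

Repeated runs are all scheduled to node2. To change that, add a label to node1.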

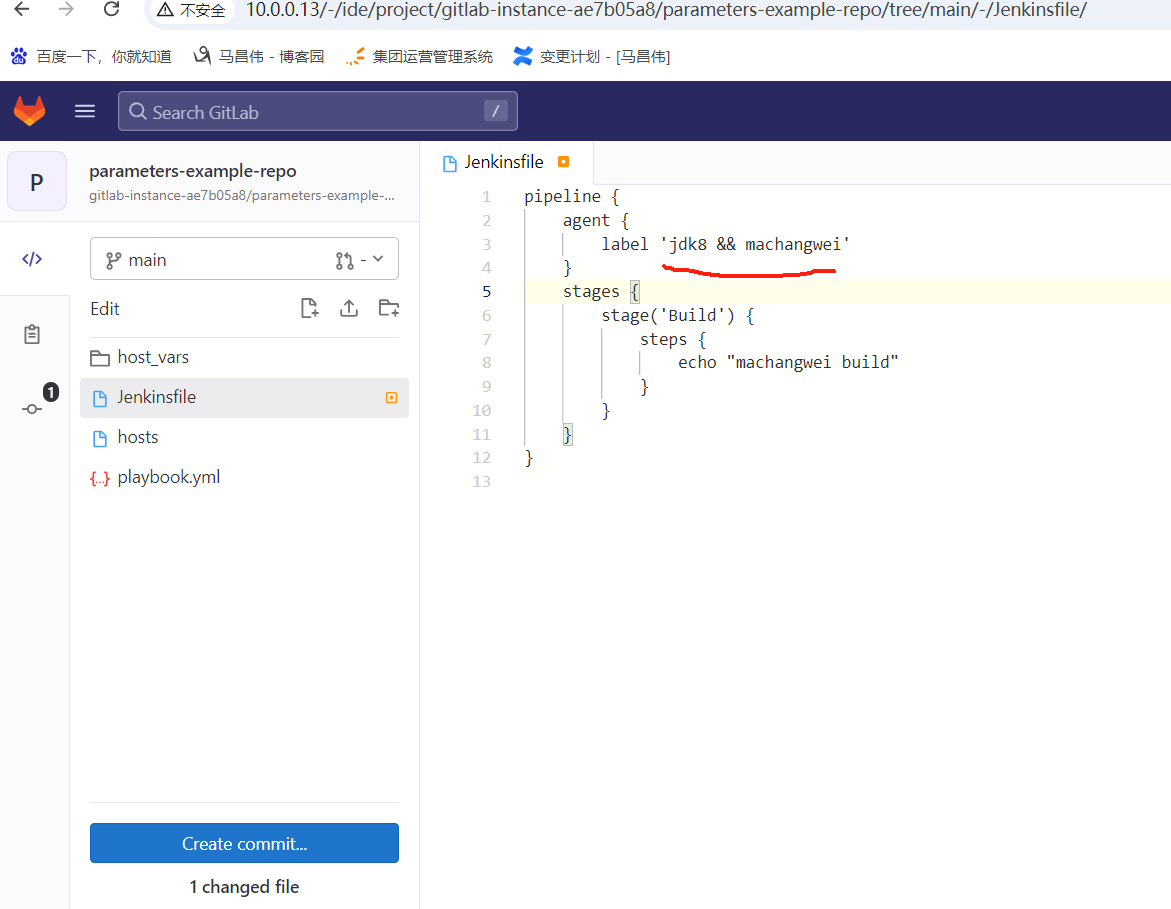

Add an AND condition to the label expression

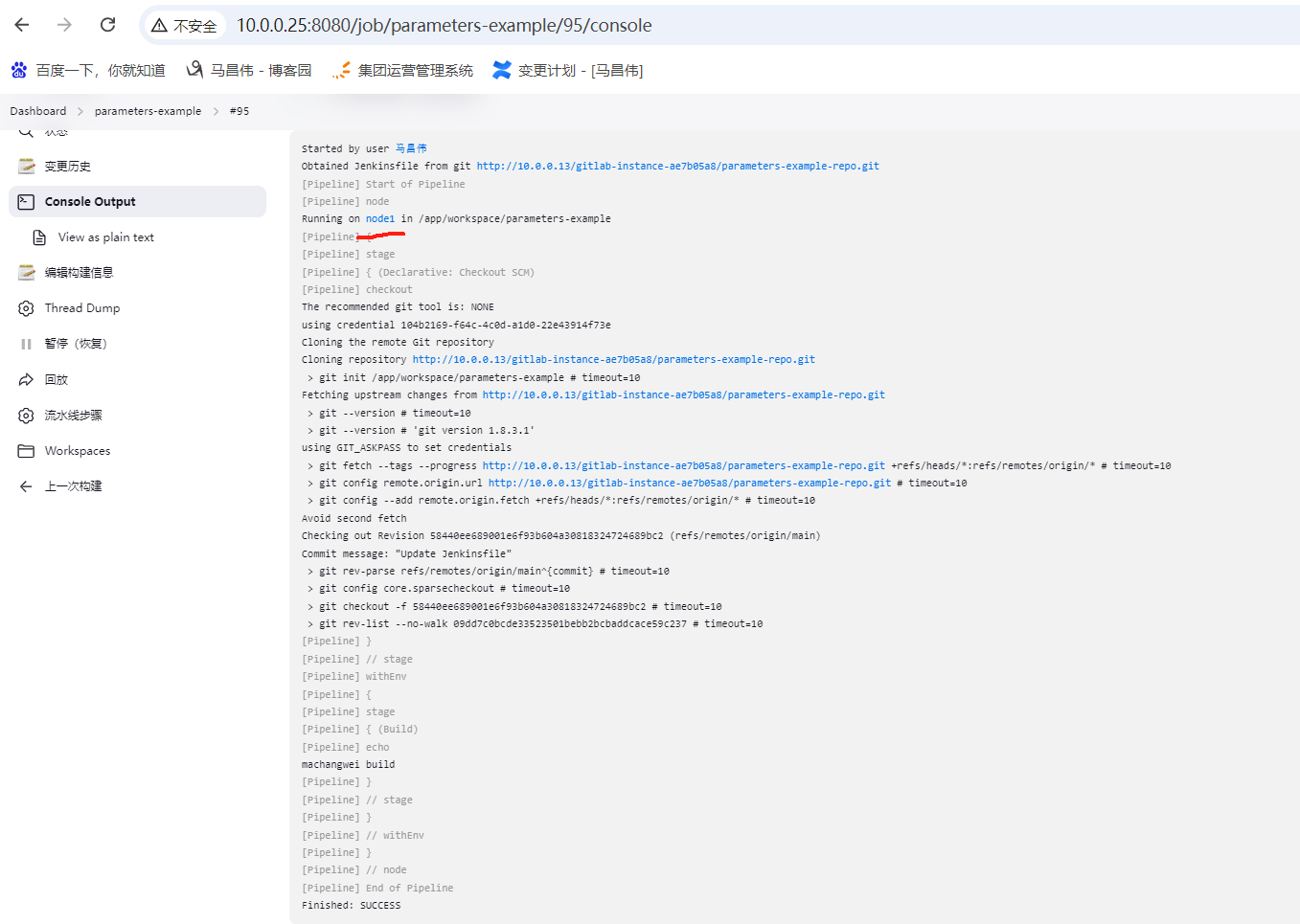

    pipeline {
        agent {
            label 'jdk8 && machangwei'
        }
        stages {
            stage('Build') {
                steps {
                    echo "machangwei build"
                }
            }
        }
    }

This time the build is scheduled to node1, because only node1 matches both labels.
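The scheduling result can be reasoned about with a toy model of label matching. The sketch below is not how Jenkins implements label expressions; it handles only the && operator, and the label sets are assumptions pieced together from this article (node1 carrying jdk8 and machangwei, node2 carrying jdk8 and windows).

```python
# Assumed label sets for the two agents used in this article.
NODES = {
    "node1": {"jdk8", "machangwei"},
    "node2": {"jdk8", "windows"},
}


def matches(label_expr, node_labels):
    """Return True if the node carries every label joined by && in the expression."""
    required = [part.strip() for part in label_expr.split("&&")]
    return all(label in node_labels for label in required)


def candidates(label_expr, nodes=NODES):
    """List the nodes on which a stage with this label expression may run."""
    return sorted(name for name, labels in nodes.items() if matches(label_expr, labels))


print(candidates("jdk8"))                 # both nodes qualify; Jenkins picks one
print(candidates("jdk8 && machangwei"))   # only node1 qualifies
```

With a single shared label both nodes are candidates and the choice is up to the scheduler, which is why repeated builds kept landing on node2 until the expression was narrowed.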

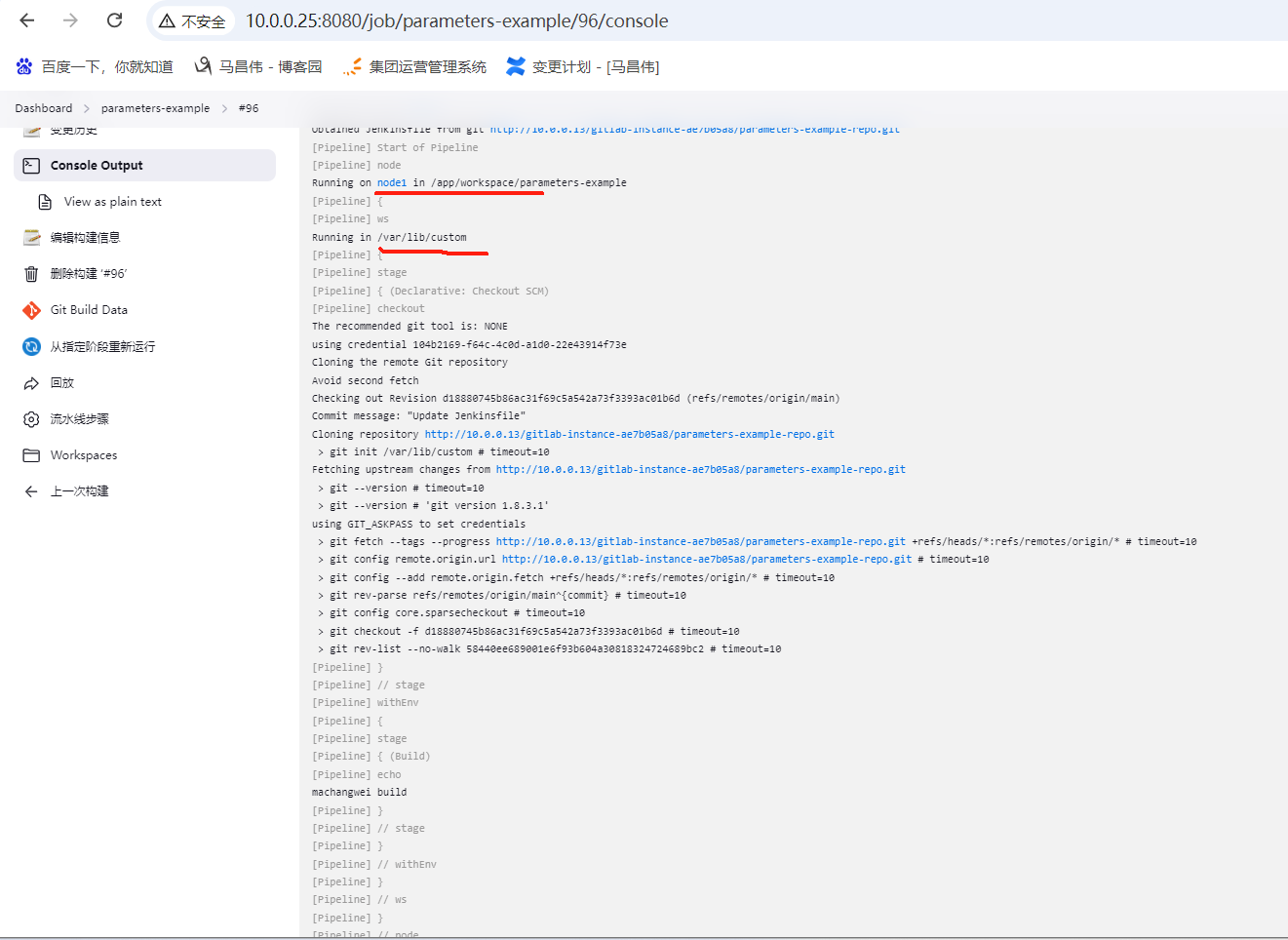

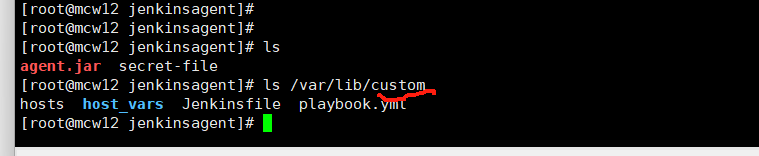

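Switch to using node under agent, which also allows a custom workspace directory

    pipeline {
        agent {
            node {
                label 'jdk8 && machangwei'
                customWorkspace '/var/lib/custom'
            }
        }
        stages {
            stage('Build') {
                steps {
                    echo "machangwei build"
                }
            }
        }
    }

Run the build. The workspace was originally under /app; the log now shows it running in custom, a directory that did not exist before.

The code is indeed checked out into the custom directory, and the directory was newly created.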

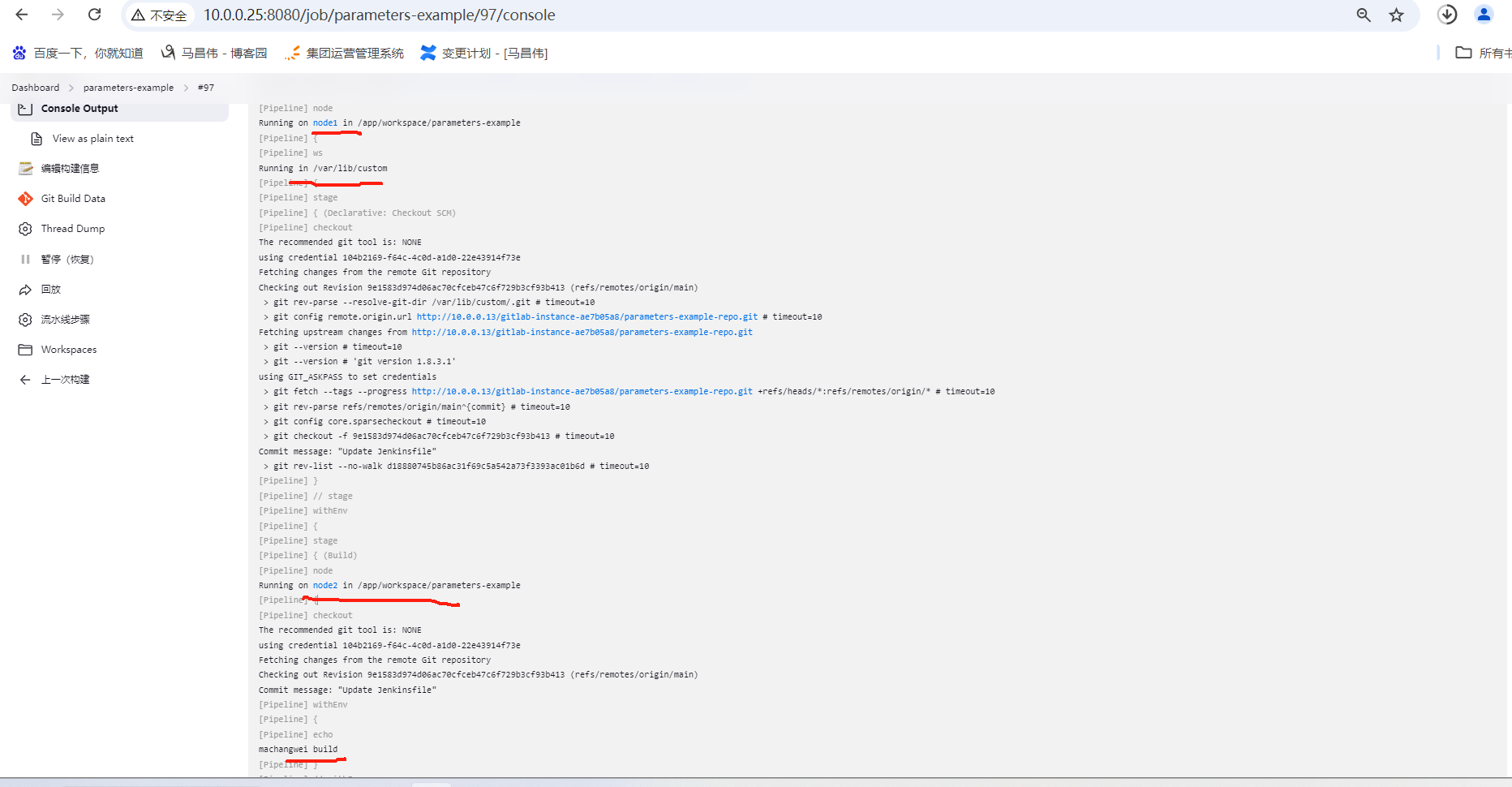

如果两个地方都写了,那么两个地方都有运行的一部分内容

pipeline { agent { node { label 'jdk8 && machangwei' customWorkspace '/var/lib/custom' } } stages { stage('Build') { agent { label 'jdk8 && windows' } steps { echo "machangwei build" } } } }

不分配节点

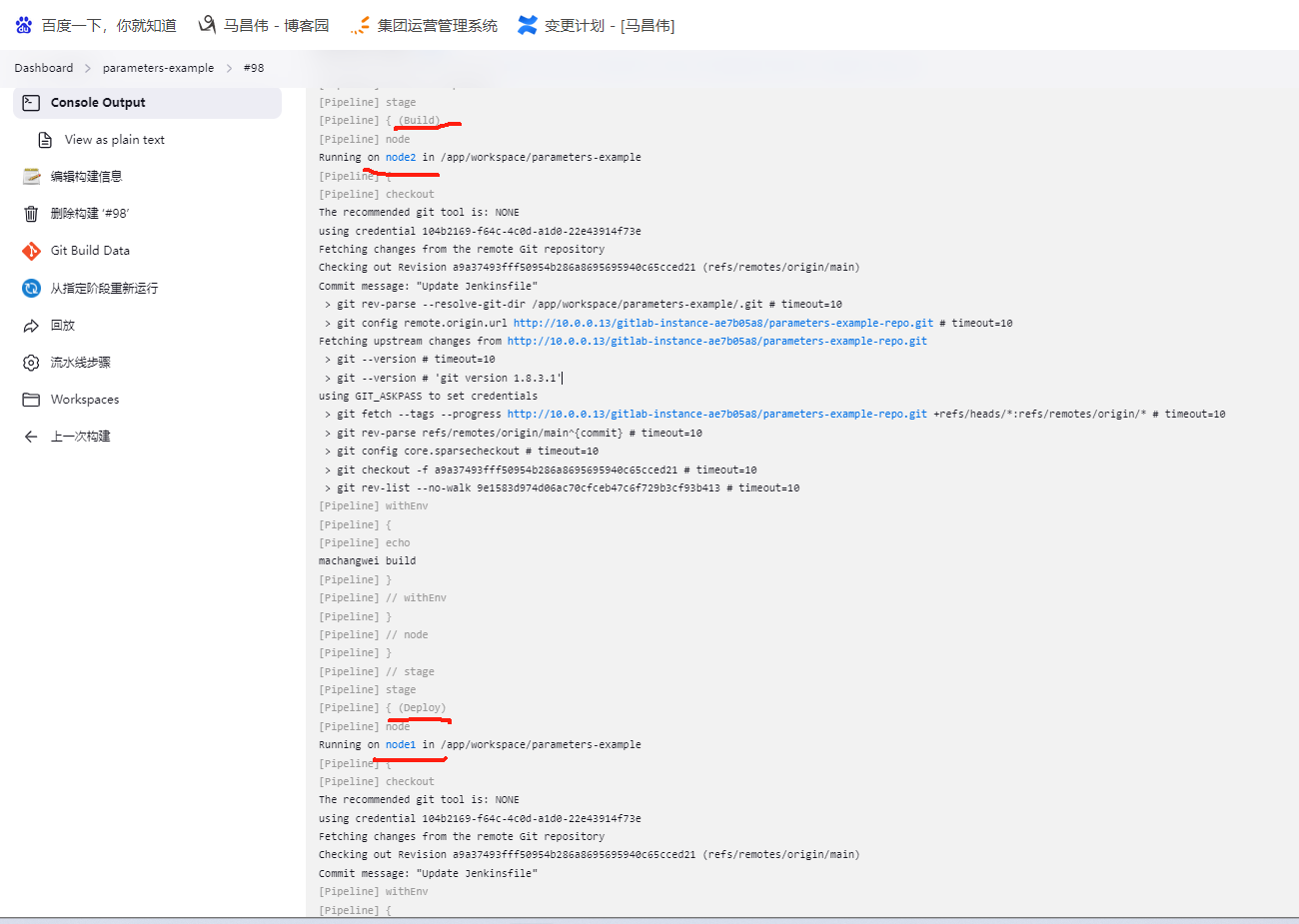

每个阶段都运行在指定的agent,那么最外层的那个agent就设置为none

pipeline { agent none stages { stage('Build') { agent { label 'jdk8 && windows' } steps { echo "machangwei build" } } stage('Deploy') { agent { label 'jdk8 && machangwei' } steps { echo "machangwei deploy" } } } }

带有windows标签的是node2,带有machangwei标签的是node1,如下,build在node2运行,deploy阶段在node1运行,符合我们pipeline的指定agent要求

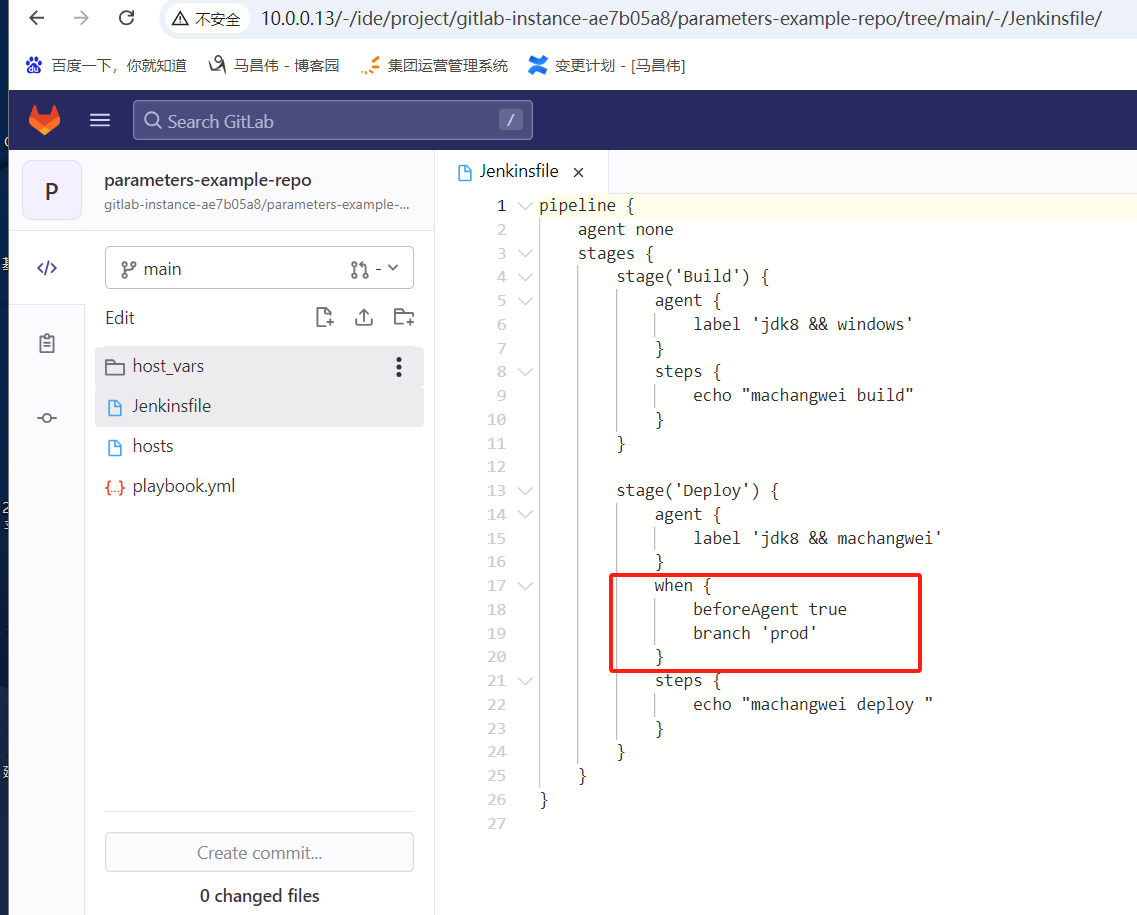

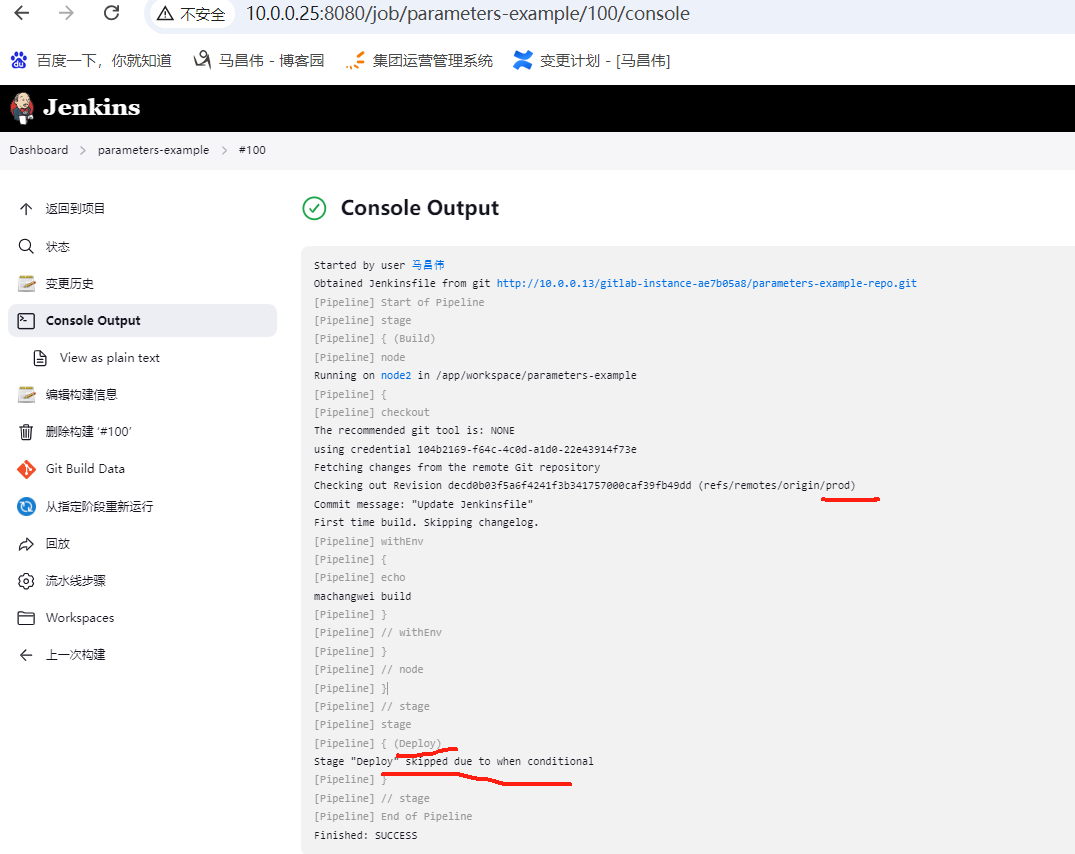

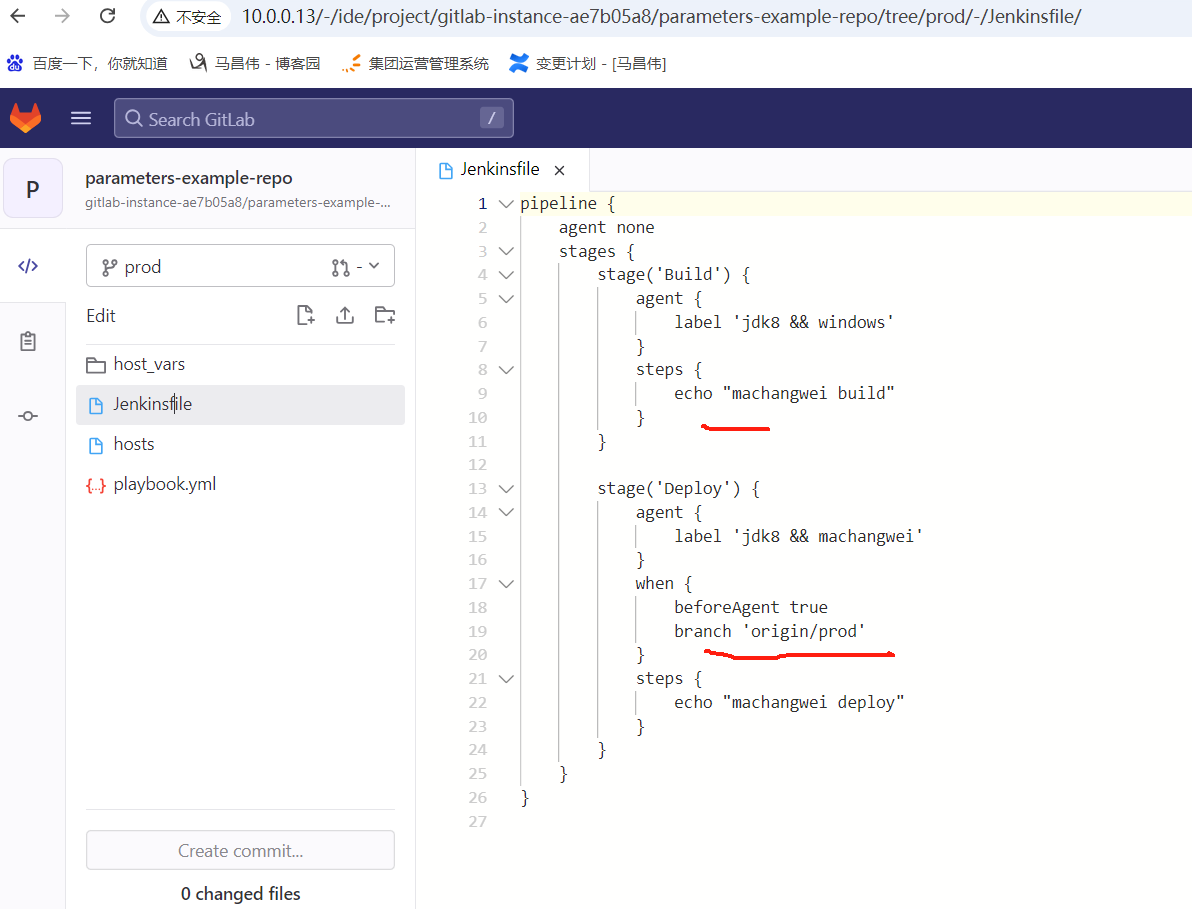

when指令的beforeAgent选项

下面的有点问题,测试没有符合预期

pipeline { agent none stages { stage('Build') { agent { label 'jdk8 && windows' } steps { echo "machangwei build" } } stage('Deploy') { agent { label 'jdk8 && machangwei' } when { beforeAgent true branch 'prod' } steps { echo "machangwei deploy" } } } }

我们把上面那节的jenkinsfile添加一个when判断,

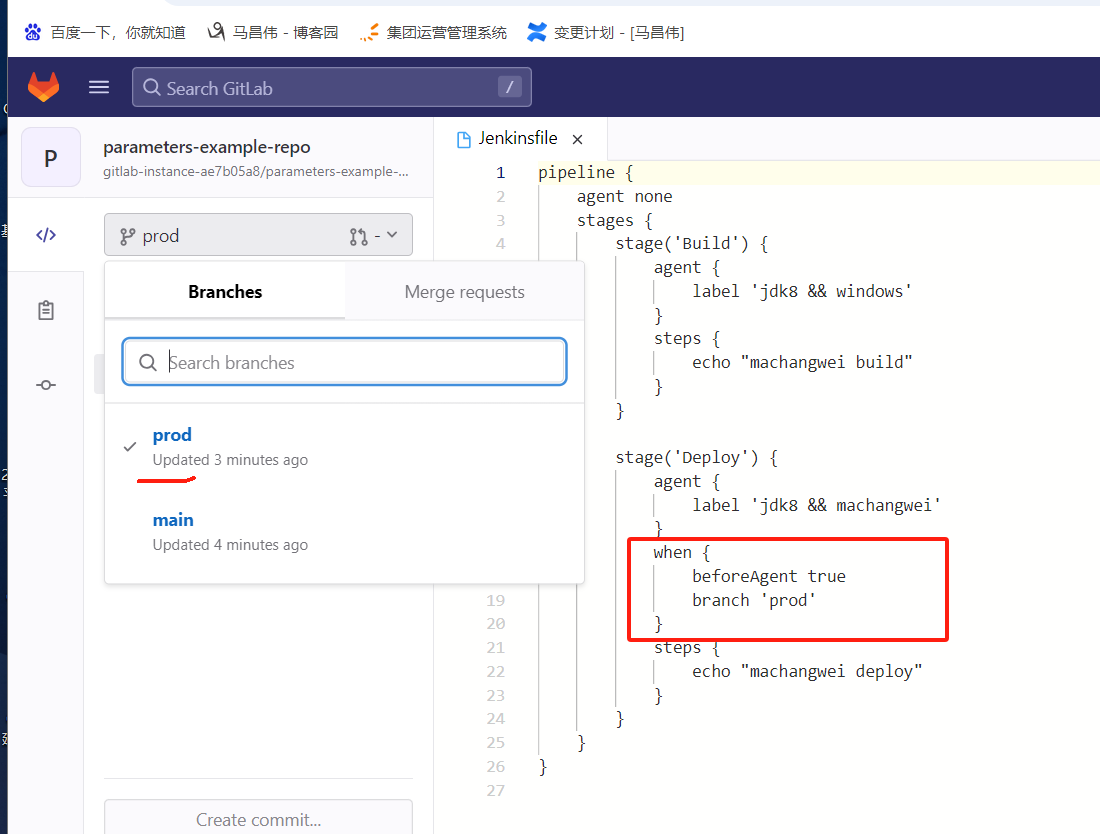

并且新增prod分支

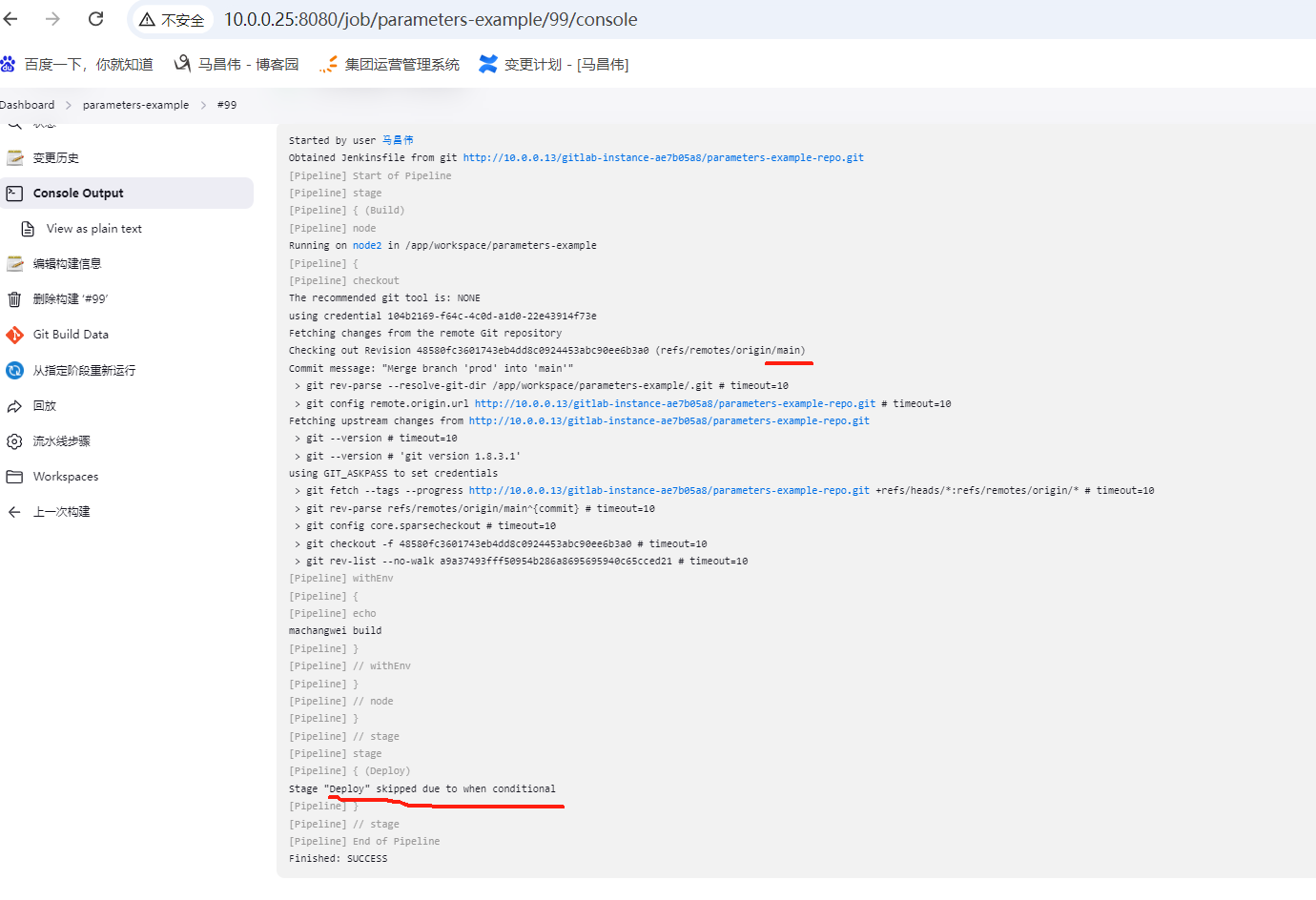

立即构建,因为目前默认拉取的是main分支,不符合when的条件 prod,分支是否是prod返回的不是true,所以这个阶段跳过没运行

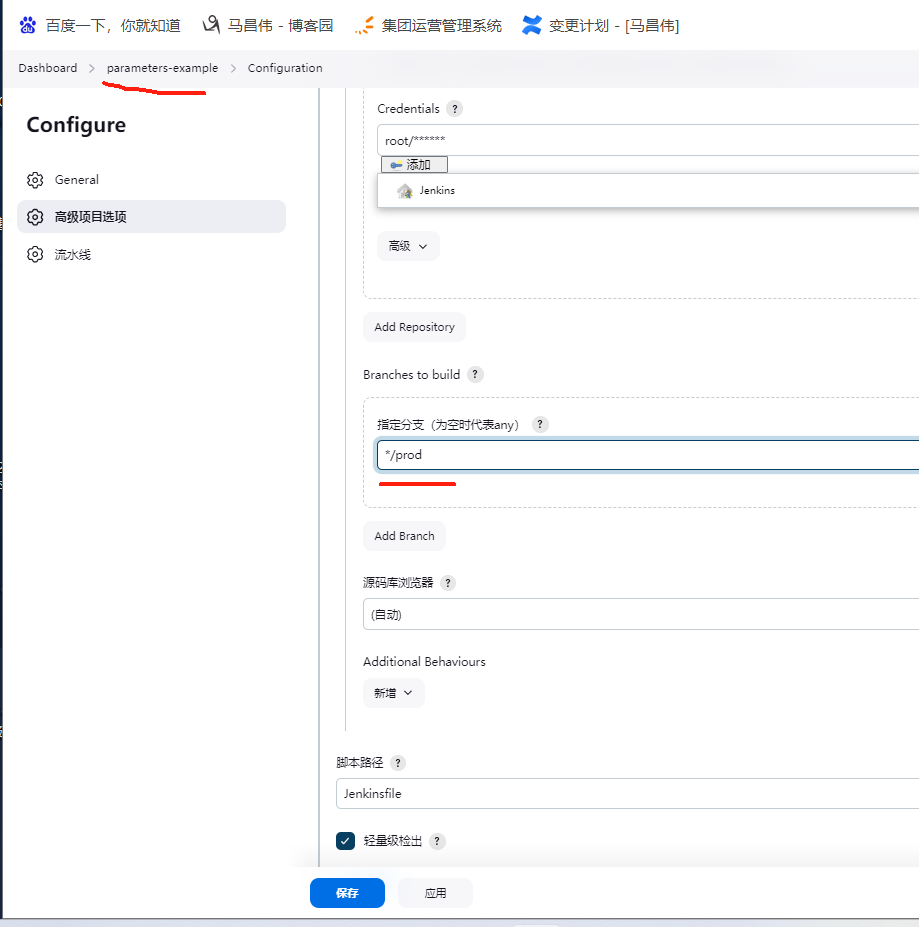

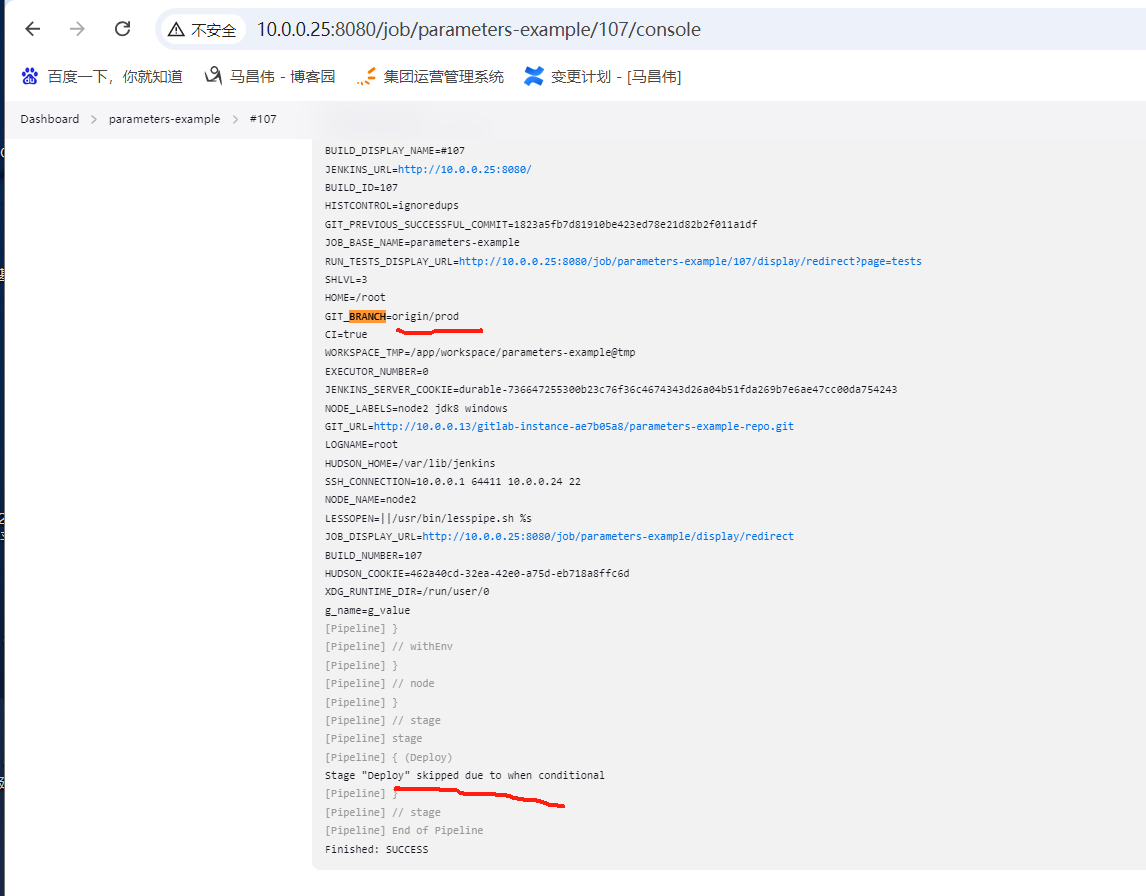

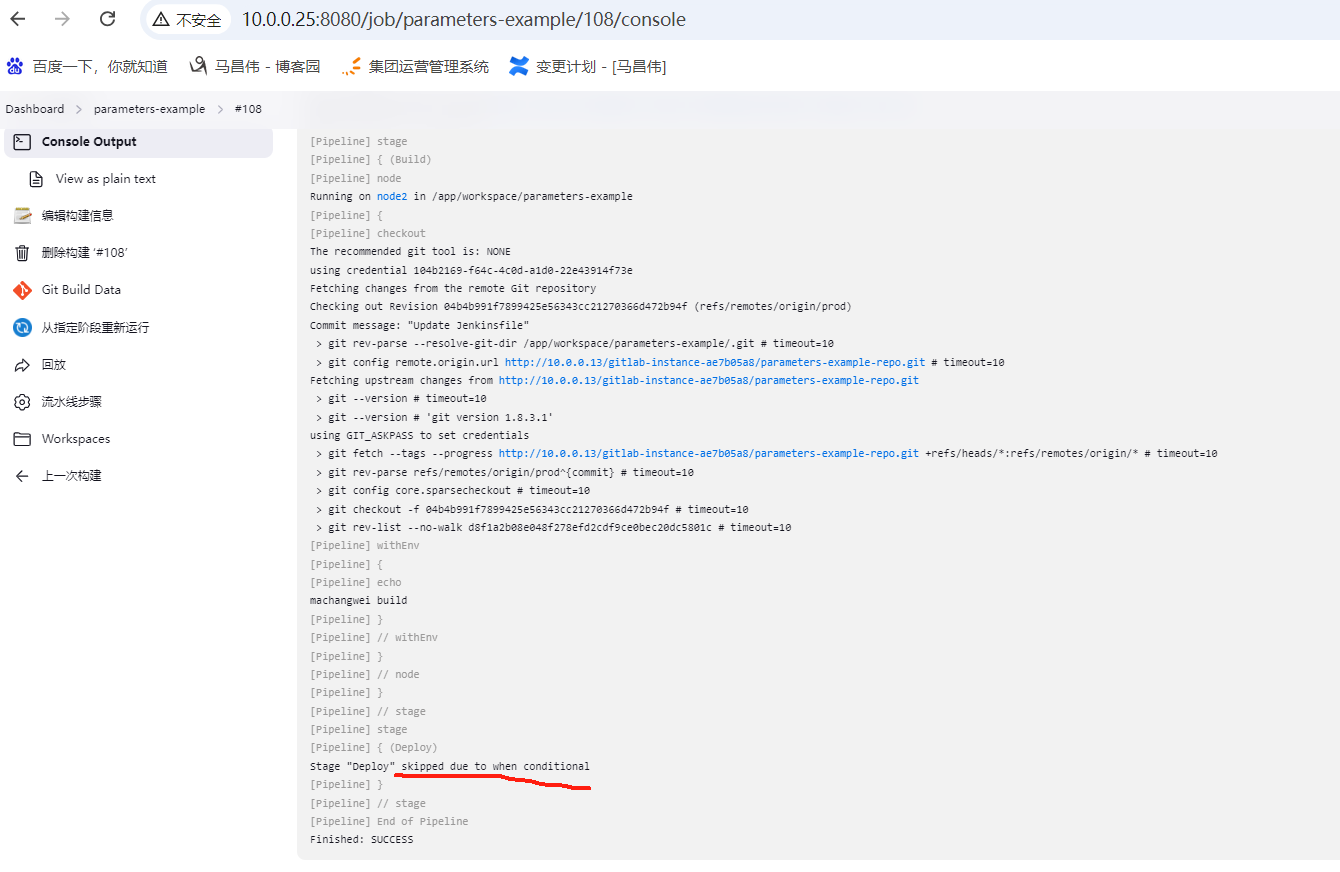

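Change the branch configured for this project to prod

The build now checks out the prod branch, but the Deploy stage still does not run.

Printing the environment variables shows that although the branch is prod, the variable holds origin/prod, so it does not match the prod written in the pipeline.

Remove the environment printing and change the branch name in the pipeline to origin/prod

The result is the same; it still does not match. According to the Jenkins documentation, the branch condition only works in a multibranch Pipeline, which would explain why it never matches in this regular Pipeline job.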

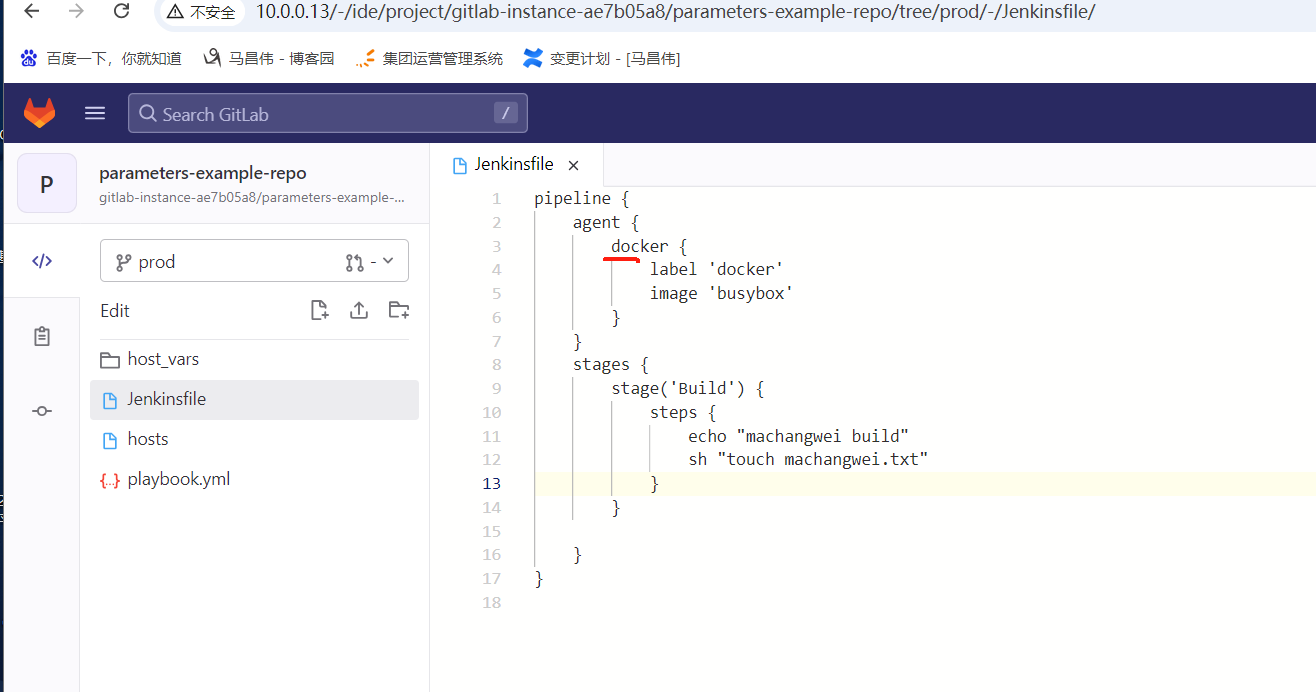

将构建任务交给docker

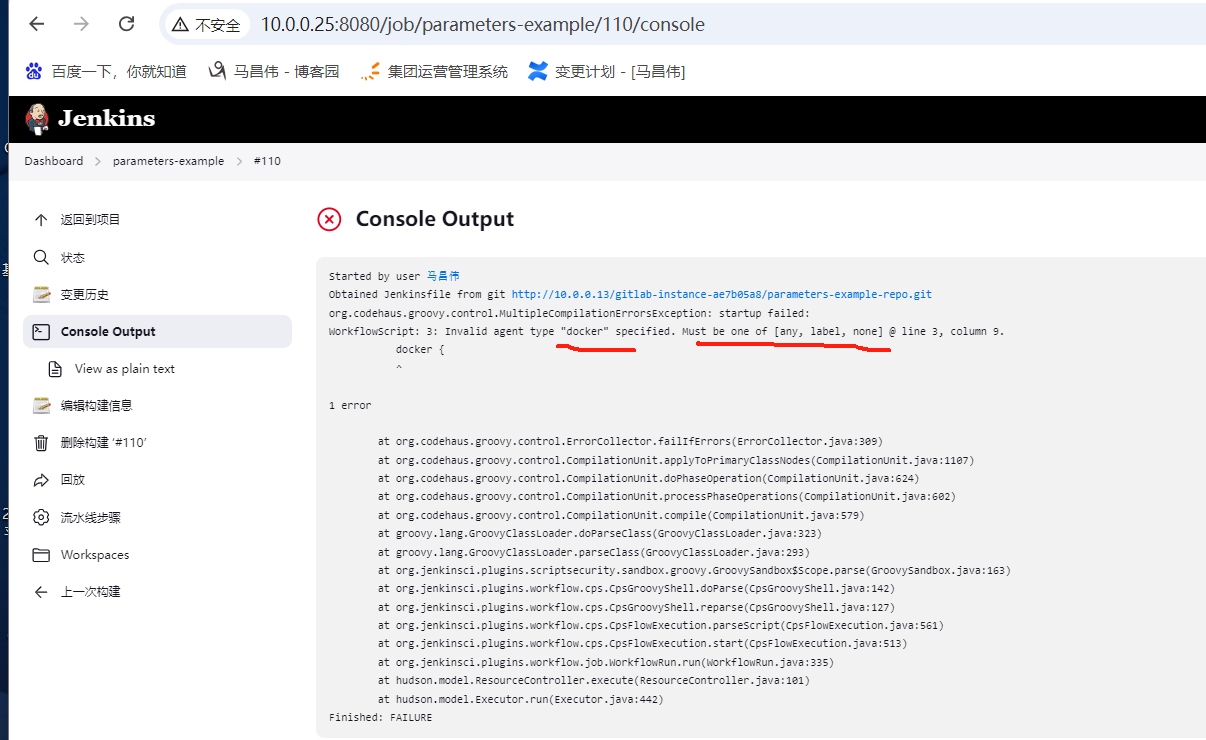

下面的有异常,实验未成功

pipeline { agent { docker { label 'docker' image 'busybox' } } stages { stage('Build') { steps { echo "machangwei build" sh "touch machangwei.txt" } } } }

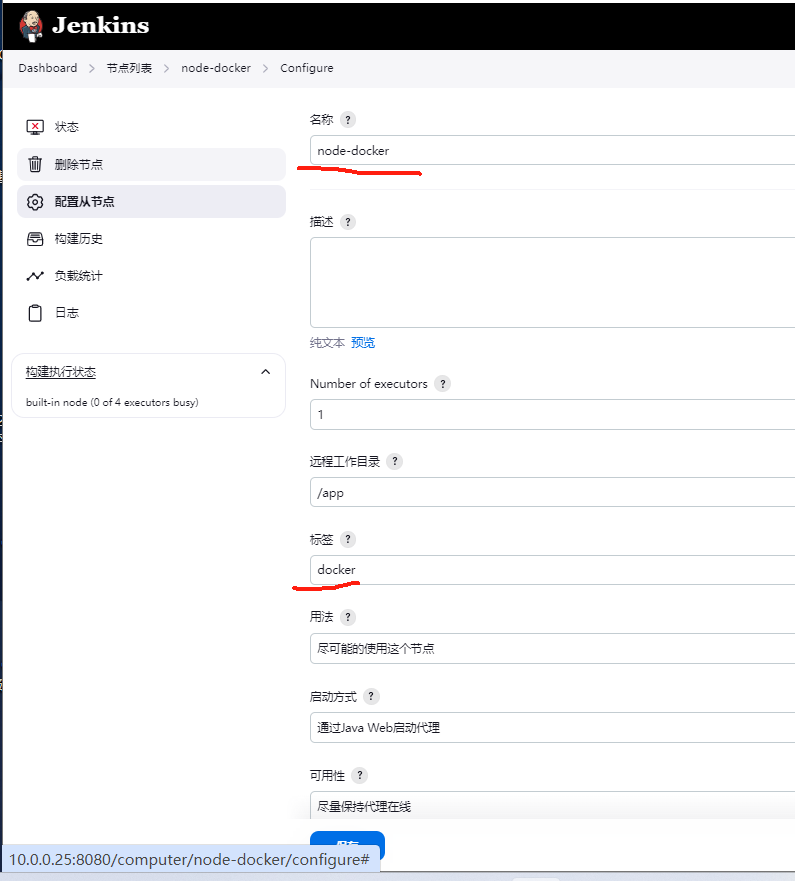

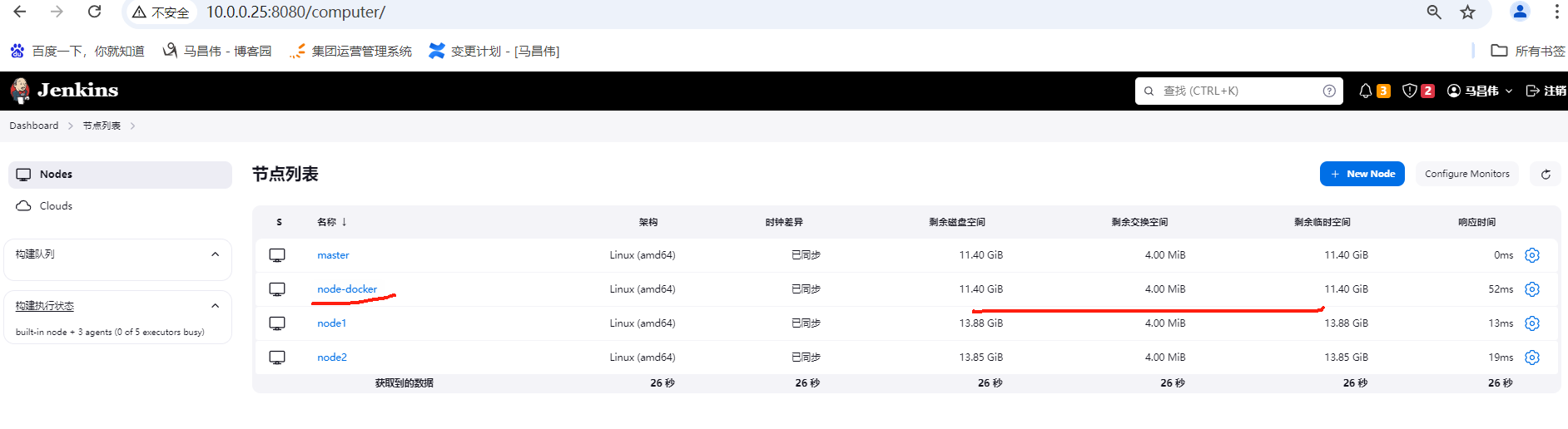

新增一个节点,加上docker标签

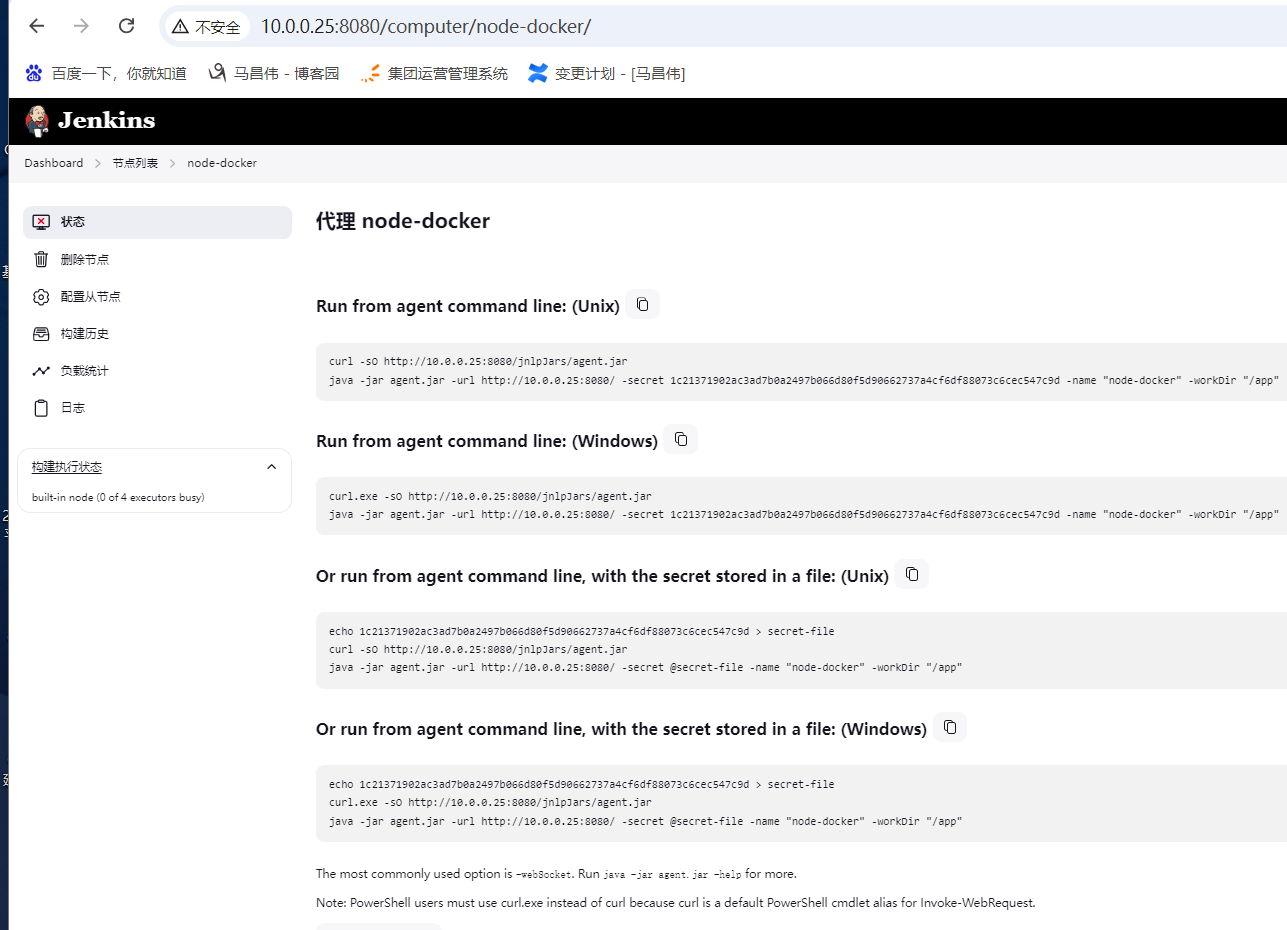

新增节点,直接将它加到jenkins master机器上运行

添加节点

[root@mcw15 opt]# ls containerd nexus-3.71.0-06 nexus-3.71.0-06-unix.tar.gz rakudo-pkg sonatype-work swarm-client-3.9.jar [root@mcw15 opt]# ps -ef|grep java jenkins 36131 1 0 Aug24 ? 00:05:33 /usr/bin/java -Djava.awt.headless=true -jar /usr/share/java/jenkins.war --webroot=%C/jenkins/war --httpPort=8080 root 86112 38851 0 12:01 pts/1 00:00:00 grep --color=auto java [root@mcw15 opt]# echo 1c21371902ac3ad7b0a2497b066d80f5d90662737a4cf6df88073c6cec547c9d > secret-file [root@mcw15 opt]# curl -sO http://10.0.0.25:8080/jnlpJars/agent.jar [root@mcw15 opt]# mkdir /app [root@mcw15 opt]# java -jar agent.jar -url http://10.0.0.25:8080/ -secret @secret-file -name "node-docker" -workDir "/app" & [1] 86161 [root@mcw15 opt]# Aug 25, 2024 12:02:40 PM org.jenkinsci.remoting.engine.WorkDirManager initializeWorkDir INFO: Using /app/remoting as a remoting work directory Aug 25, 2024 12:02:40 PM org.jenkinsci.remoting.engine.WorkDirManager setupLogging INFO: Both error and output logs will be printed to /app/remoting Aug 25, 2024 12:02:40 PM hudson.remoting.Launcher createEngine INFO: Setting up agent: node-docker Aug 25, 2024 12:02:40 PM hudson.remoting.Engine startEngine INFO: Using Remoting version: 3198.v03a_401881f3e Aug 25, 2024 12:02:40 PM org.jenkinsci.remoting.engine.WorkDirManager initializeWorkDir INFO: Using /app/remoting as a remoting work directory Aug 25, 2024 12:02:40 PM hudson.remoting.Launcher$CuiListener status INFO: Locating server among [http://10.0.0.25:8080/] Aug 25, 2024 12:02:40 PM org.jenkinsci.remoting.engine.JnlpAgentEndpointResolver resolve INFO: Remoting server accepts the following protocols: [JNLP4-connect, Ping] Aug 25, 2024 12:02:40 PM hudson.remoting.Launcher$CuiListener status INFO: Agent discovery successful Agent address: 10.0.0.25 Agent port: 9812 Identity: 52:34:14:5f:aa:87:d8:d8:c9:a3:23:e7:32:15:da:d8 Aug 25, 2024 12:02:40 PM hudson.remoting.Launcher$CuiListener status INFO: Handshaking Aug 25, 2024 12:02:40 PM 
hudson.remoting.Launcher$CuiListener status INFO: Connecting to 10.0.0.25:9812 Aug 25, 2024 12:02:40 PM hudson.remoting.Launcher$CuiListener status INFO: Server reports protocol JNLP4-connect-proxy not supported, skipping Aug 25, 2024 12:02:40 PM hudson.remoting.Launcher$CuiListener status INFO: Trying protocol: JNLP4-connect Aug 25, 2024 12:02:40 PM org.jenkinsci.remoting.protocol.impl.BIONetworkLayer$Reader run INFO: Waiting for ProtocolStack to start. Aug 25, 2024 12:02:41 PM hudson.remoting.Launcher$CuiListener status INFO: Remote identity confirmed: 52:34:14:5f:aa:87:d8:d8:c9:a3:23:e7:32:15:da:d8 Aug 25, 2024 12:02:41 PM hudson.remoting.Launcher$CuiListener status INFO: Connected

已经正常。这个应该一个服务器里面可以跑多个node程序的。这样应该可以提高并发处理能力。增加执行器。

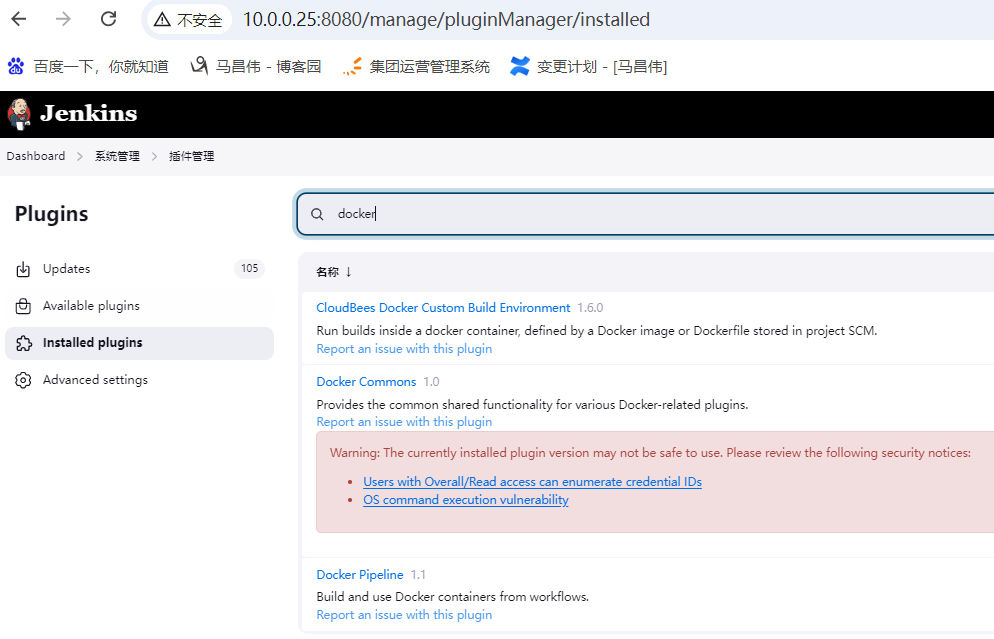

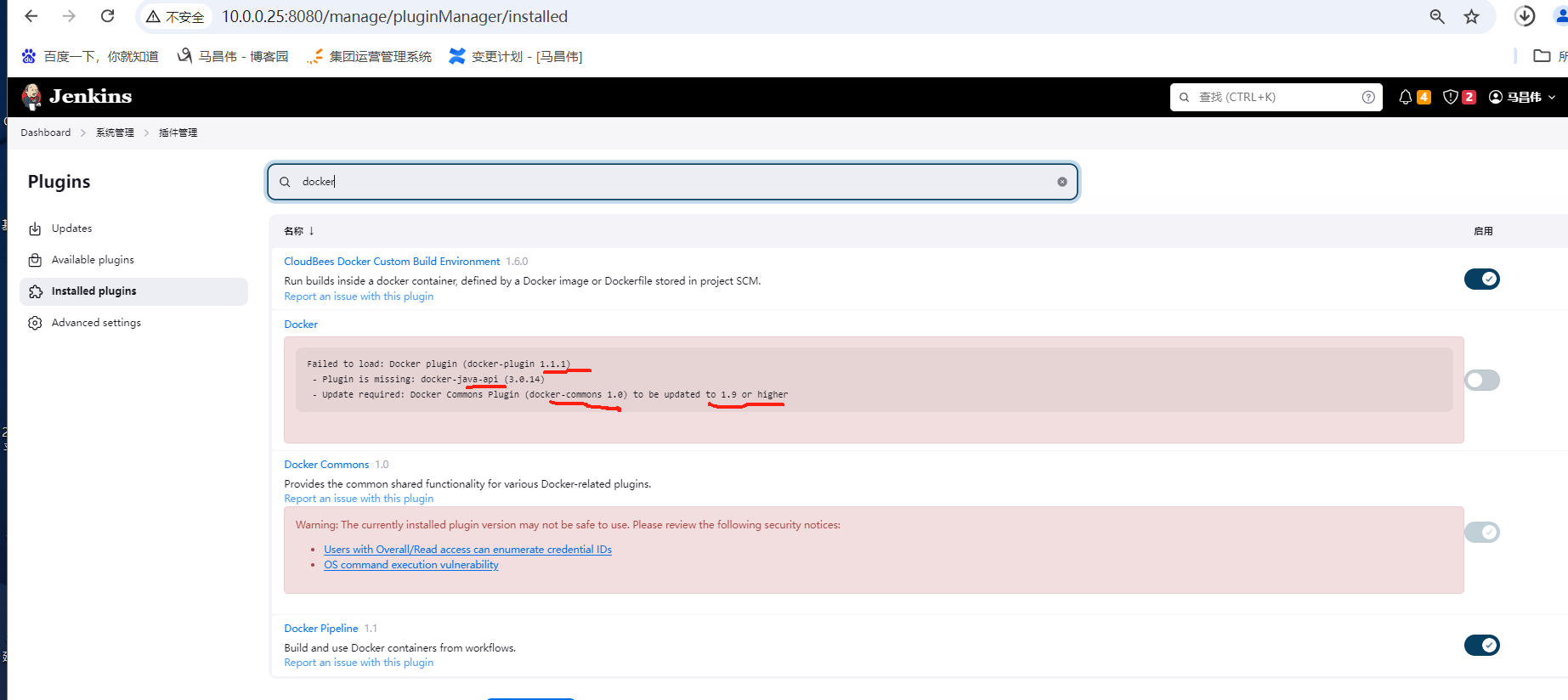

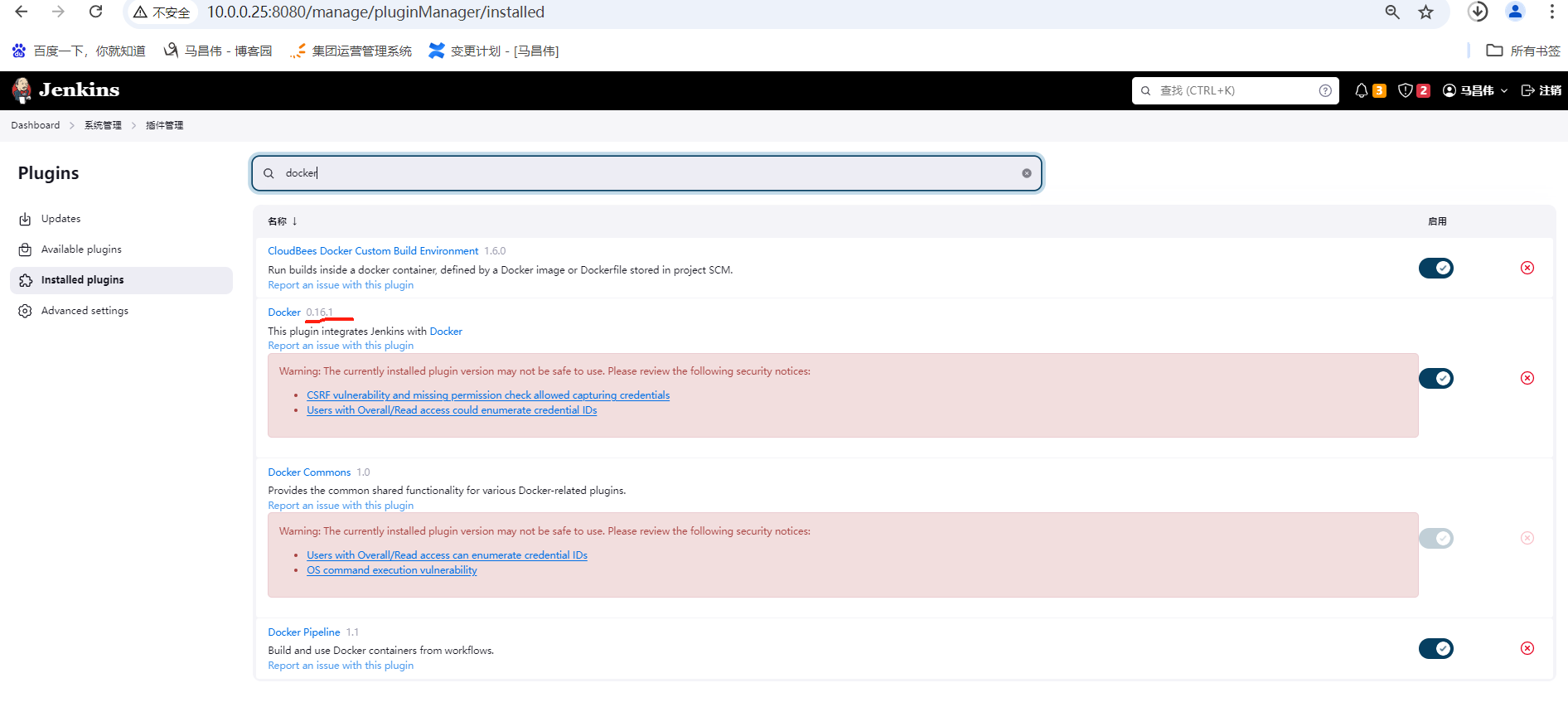

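A Docker plugin was already installed, but not the one I thought we needed.

I took this one to be the right plugin: https://plugins.jenkins.io/docker-plugin, rather than the workflow one listed below it.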

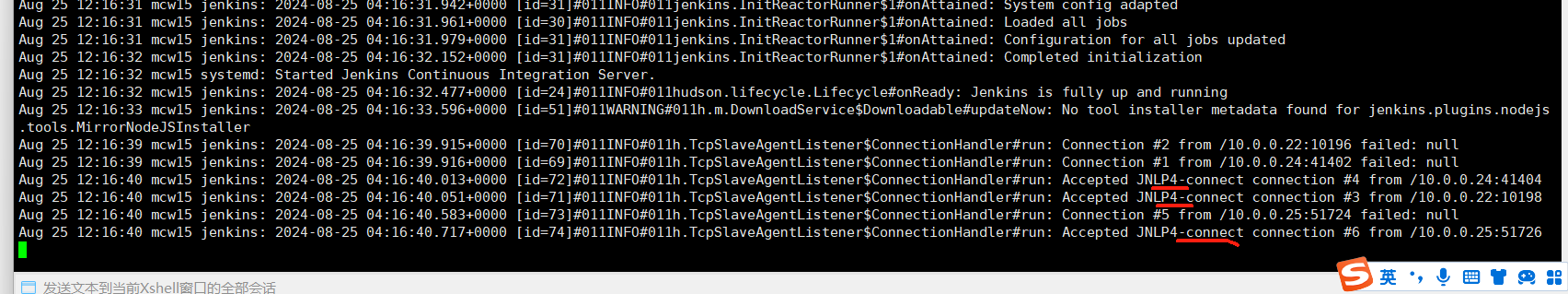

When the Jenkins service restarts after installing the plugin, every agent is terminated and then reconnects.

    [root@mcw14 opt]# Aug 25, 2024 12:16:15 PM hudson.remoting.Launcher$CuiListener status
    INFO: Terminated
    Aug 25, 2024 12:16:28 PM org.jenkinsci.remoting.engine.JnlpAgentEndpointResolver waitForReady
    INFO: Controller isn't ready to talk to us on http://10.0.0.25:8080/tcpSlaveAgentListener/. Will try again: response code=503
    Aug 25, 2024 12:16:38 PM hudson.remoting.Launcher$CuiListener status
    INFO: Performing onReconnect operation.
    Aug 25, 2024 12:16:39 PM jenkins.slaves.restarter.JnlpSlaveRestarterInstaller$EngineListenerAdapterImpl onReconnect
    INFO: Restarting agent via jenkins.slaves.restarter.UnixSlaveRestarter@7a46cfb1
    Aug 25, 2024 12:16:39 PM org.jenkinsci.remoting.engine.WorkDirManager initializeWorkDir
    INFO: Using /app/remoting as a remoting work directory
    Aug 25, 2024 12:16:39 PM org.jenkinsci.remoting.engine.WorkDirManager setupLogging
    INFO: Both error and output logs will be printed to /app/remoting
    Aug 25, 2024 12:16:39 PM hudson.remoting.Launcher createEngine
    INFO: Setting up agent: node2
    Aug 25, 2024 12:16:39 PM hudson.remoting.Engine startEngine
    INFO: Using Remoting version: 3198.v03a_401881f3e
    Aug 25, 2024 12:16:39 PM org.jenkinsci.remoting.engine.WorkDirManager initializeWorkDir
    INFO: Using /app/remoting as a remoting work directory
    Aug 25, 2024 12:16:39 PM hudson.remoting.Launcher$CuiListener status
    INFO: Locating server among [http://10.0.0.25:8080/]
    Aug 25, 2024 12:16:39 PM org.jenkinsci.remoting.engine.JnlpAgentEndpointResolver resolve
    INFO: Remoting server accepts the following protocols: [JNLP4-connect, Ping]
    Aug 25, 2024 12:16:39 PM hudson.remoting.Launcher$CuiListener status
    INFO: Agent discovery successful
      Agent address: 10.0.0.25
      Agent port: 9812
      Identity: 52:34:14:5f:aa:87:d8:d8:c9:a3:23:e7:32:15:da:d8
    Aug 25, 2024 12:16:39 PM hudson.remoting.Launcher$CuiListener status
    INFO: Handshaking
    Aug 25, 2024 12:16:39 PM hudson.remoting.Launcher$CuiListener status
    INFO: Connecting to 10.0.0.25:9812
    Aug 25, 2024 12:16:39 PM hudson.remoting.Launcher$CuiListener status
    INFO: Server reports protocol JNLP4-connect-proxy not supported, skipping
    Aug 25, 2024 12:16:39 PM hudson.remoting.Launcher$CuiListener status
    INFO: Trying protocol: JNLP4-connect
    Aug 25, 2024 12:16:39 PM org.jenkinsci.remoting.protocol.impl.BIONetworkLayer$Reader run
    INFO: Waiting for ProtocolStack to start.
    Aug 25, 2024 12:16:41 PM hudson.remoting.Launcher$CuiListener status
    INFO: Remote identity confirmed: 52:34:14:5f:aa:87:d8:d8:c9:a3:23:e7:32:15:da:d8
    Aug 25, 2024 12:16:41 PM hudson.remoting.Launcher$CuiListener status
    INFO: Connected

Jenkins log

One more plugin needs to be added, and the docker-plugin plugin has to be downgraded.

Once the versions match, the extra dependency is no longer needed.

Even after this it still does not work. I suspected the version was too old to support it. Note, however, that the docker agent type in declarative Pipeline is provided by the Docker Pipeline plugin (docker-workflow), so the error below usually means that plugin is missing, not docker-plugin.

    WorkflowScript: 3: Invalid agent type "docker" specified. Must be one of [any, label, none] @ line 3, column 9.

Configuring a private Docker registry

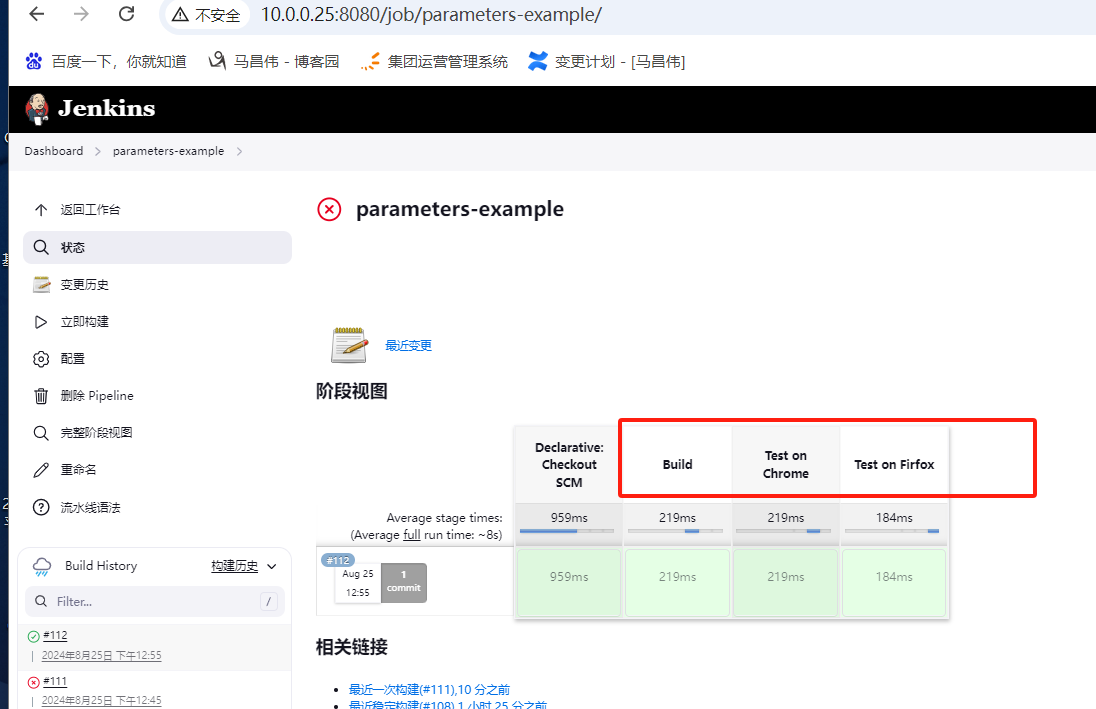

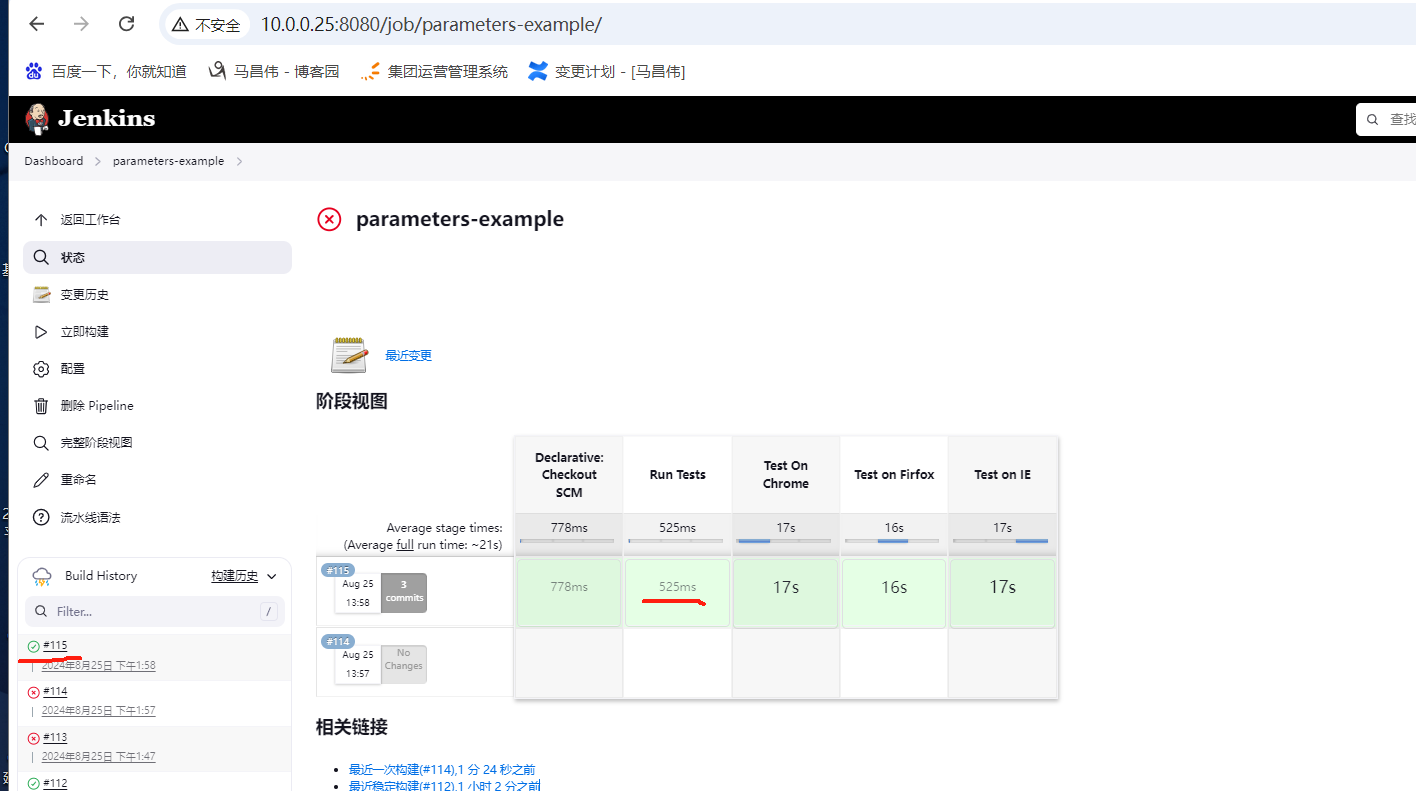

Parallel builds

Suppose we run UI tests of the same web application on different versions of Chrome, Firefox, IE, and other browsers. Running them one after another, as below, makes testing too slow.

    pipeline {
        agent any
        stages {
            stage('Build') {
                steps {
                    echo "Building..."
                }
            }
            stage('Test on Chrome') {
                steps {
                    echo "Testing..."
                }
            }
            stage('Test on Firefox') {
                steps {
                    echo "Testing..."
                }
            }
        }
    }

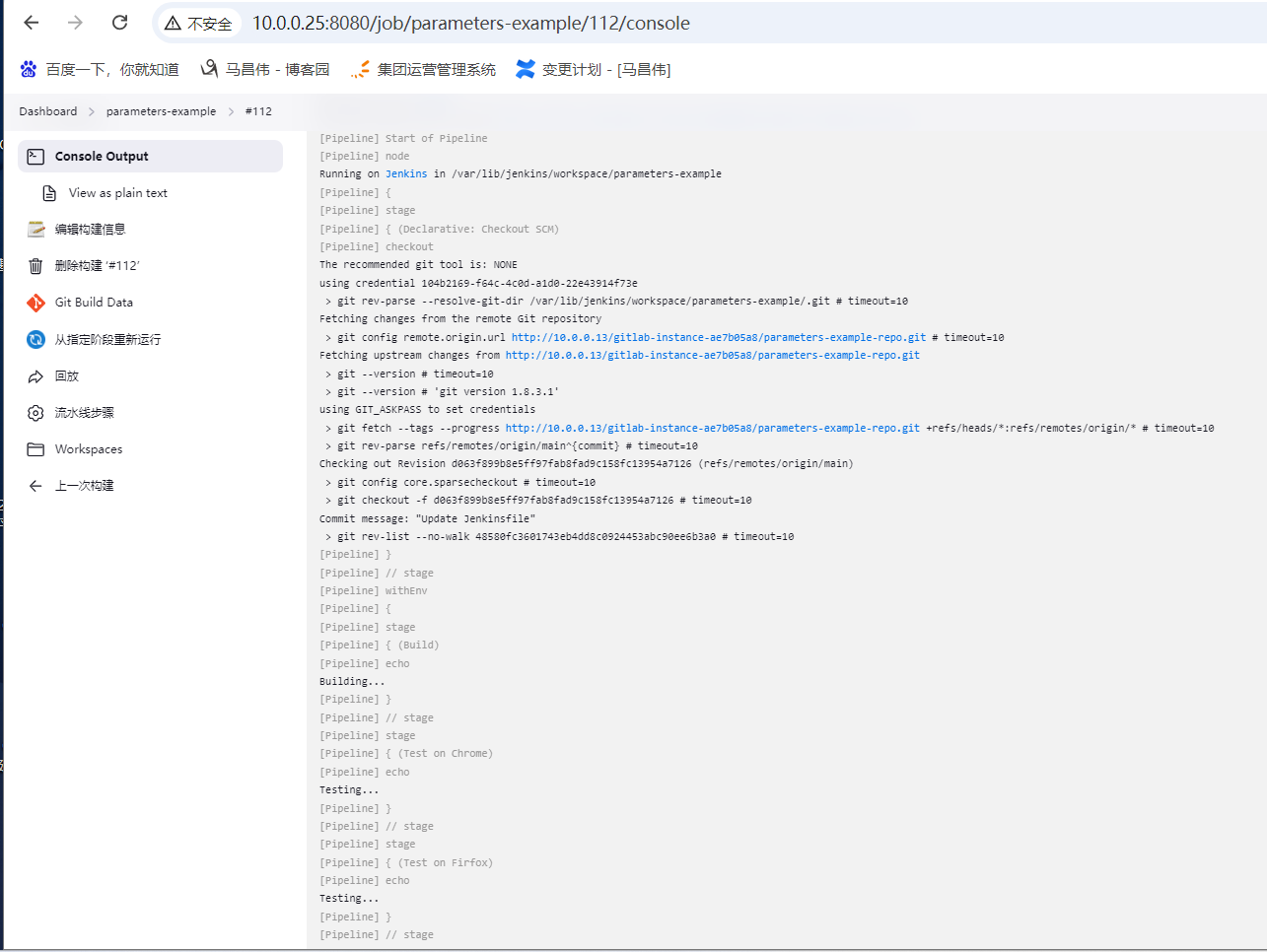

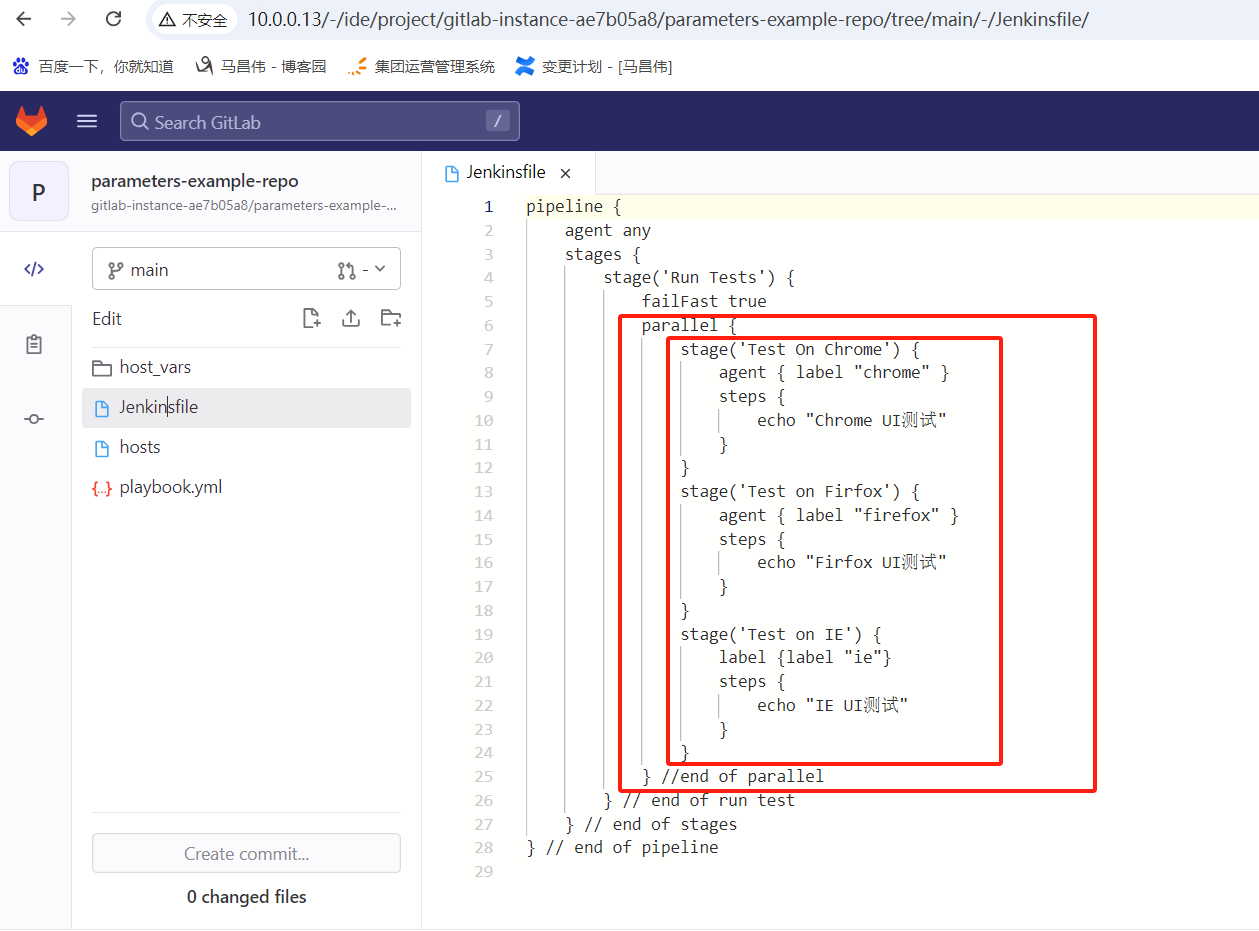

Modify it to use parallel builds

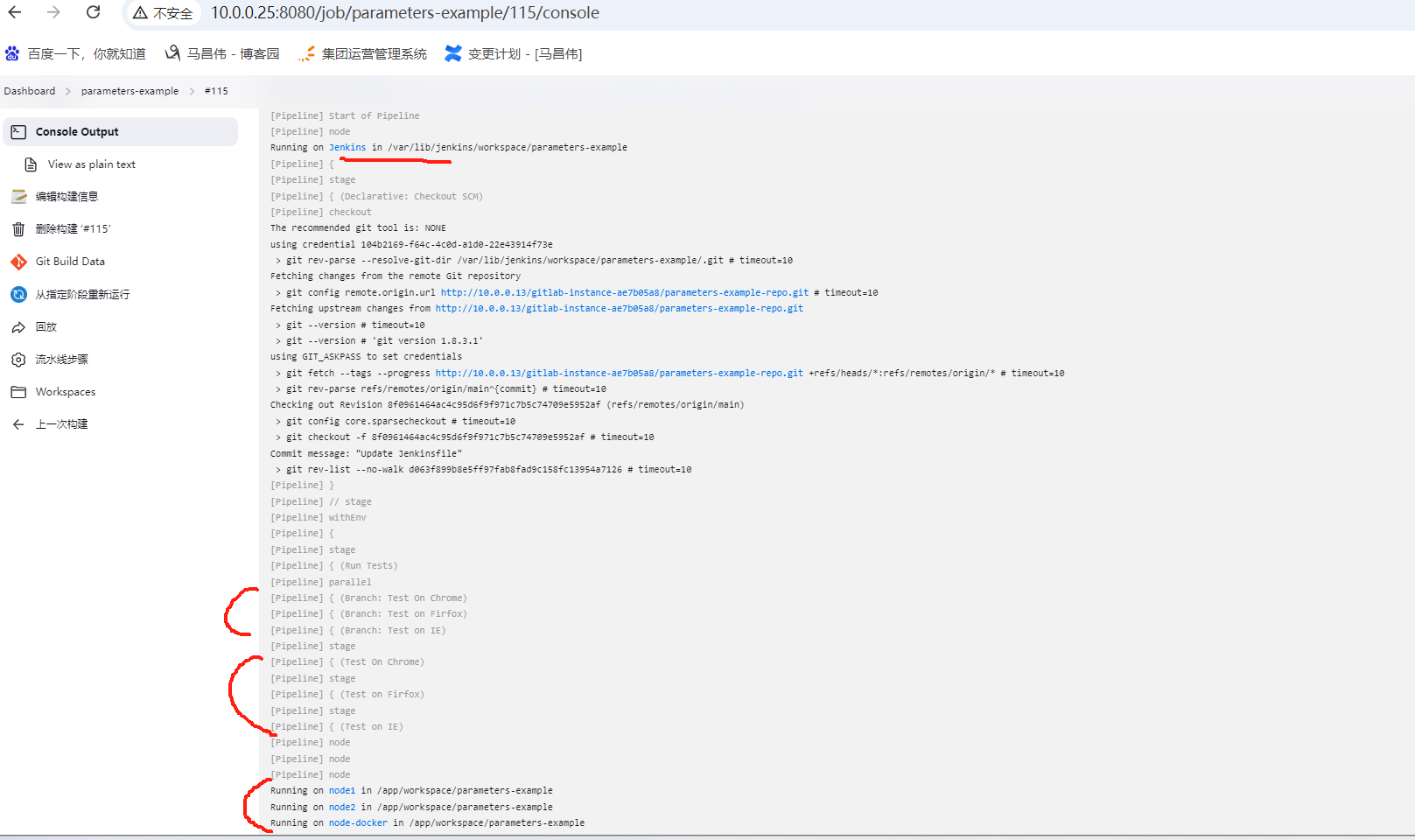

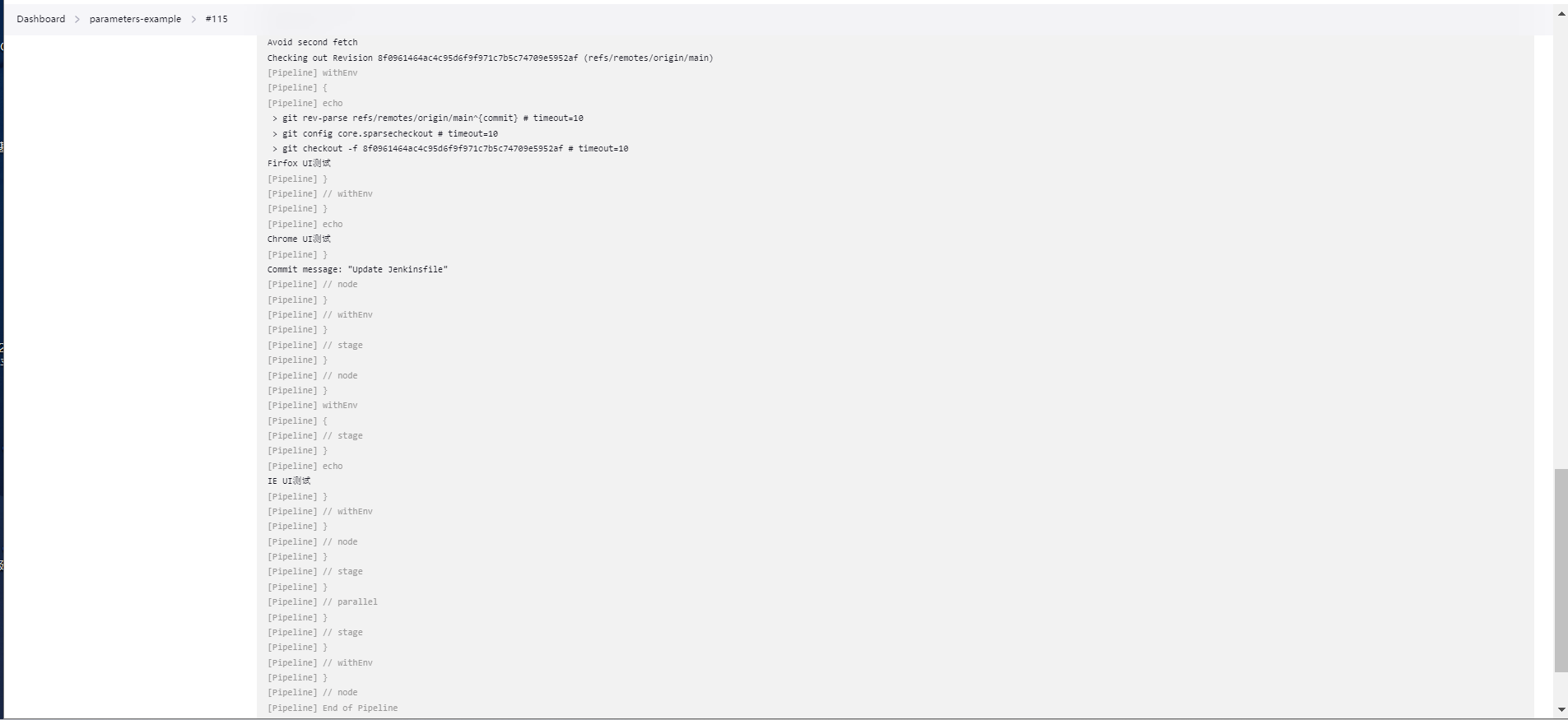

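    pipeline {
        agent any
        stages {
            stage('Run Tests') {
                failFast true
                parallel {
                    stage('Test On Chrome') {
                        agent { label "chrome" }
                        steps {
                            echo "Chrome UI test"
                        }
                    }
                    stage('Test on Firefox') {
                        agent { label "firefox" }
                        steps {
                            echo "Firefox UI test"
                        }
                    }
                    stage('Test on IE') {
                        agent { label "ie" }
                        steps {
                            echo "IE UI test"
                        }
                    }
                } // end of parallel
            } // end of run test
        } // end of stages
    } // end of pipeline

Add the three labels used above to the three agents, one each.

The run took 778 ms in total, compared with 959 ms for the previous pipeline, so it is faster.

The log output also shows that the pipeline as a whole runs on the master while the three stages run on three separate agents, and that they run in parallel instead of each stage starting only after the previous one has finished. With sequential execution the "Running on" lines would not appear together; they would be separated by many lines of other output, because the second stage's log would start only after all of the first stage's output had finished. So the stages really do run in parallel here, which improves efficiency.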
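With echo-only stages the timing difference is small, so the effect is easier to see in a toy model where each test takes a fixed amount of time. The sketch below is plain Python, not Jenkins: the 0.2 second duration and the function names are made up, and threads stand in for the three agents.

```python
import time
from concurrent.futures import ThreadPoolExecutor

BROWSERS = ["Chrome", "Firefox", "IE"]


def run_test(browser, duration=0.2):
    """Stand-in for one UI test stage; sleeps to simulate the work."""
    time.sleep(duration)
    return f"{browser} UI test done"


def run_sequential():
    """Run the stages one after another, like consecutive stage blocks."""
    start = time.perf_counter()
    results = [run_test(browser) for browser in BROWSERS]
    return results, time.perf_counter() - start


def run_parallel():
    """Run the stages at the same time, like stages inside a parallel block."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=len(BROWSERS)) as pool:
        results = list(pool.map(run_test, BROWSERS))
    return results, time.perf_counter() - start


seq_results, seq_seconds = run_sequential()
par_results, par_seconds = run_parallel()
print(f"sequential: {seq_seconds:.2f}s, parallel: {par_seconds:.2f}s")
```

The sequential total grows with the number of stages, while the parallel total stays close to the duration of the slowest stage, provided there are enough agents to run them all at once.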

Applying parallel builds on different branches

Parallel steps
