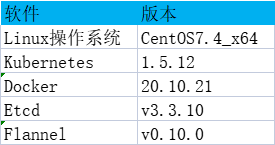

Deploying Kubernetes from binaries

My materials: https://pan.baidu.com/s/18g0sar1N-FMhzY-FCMqOog

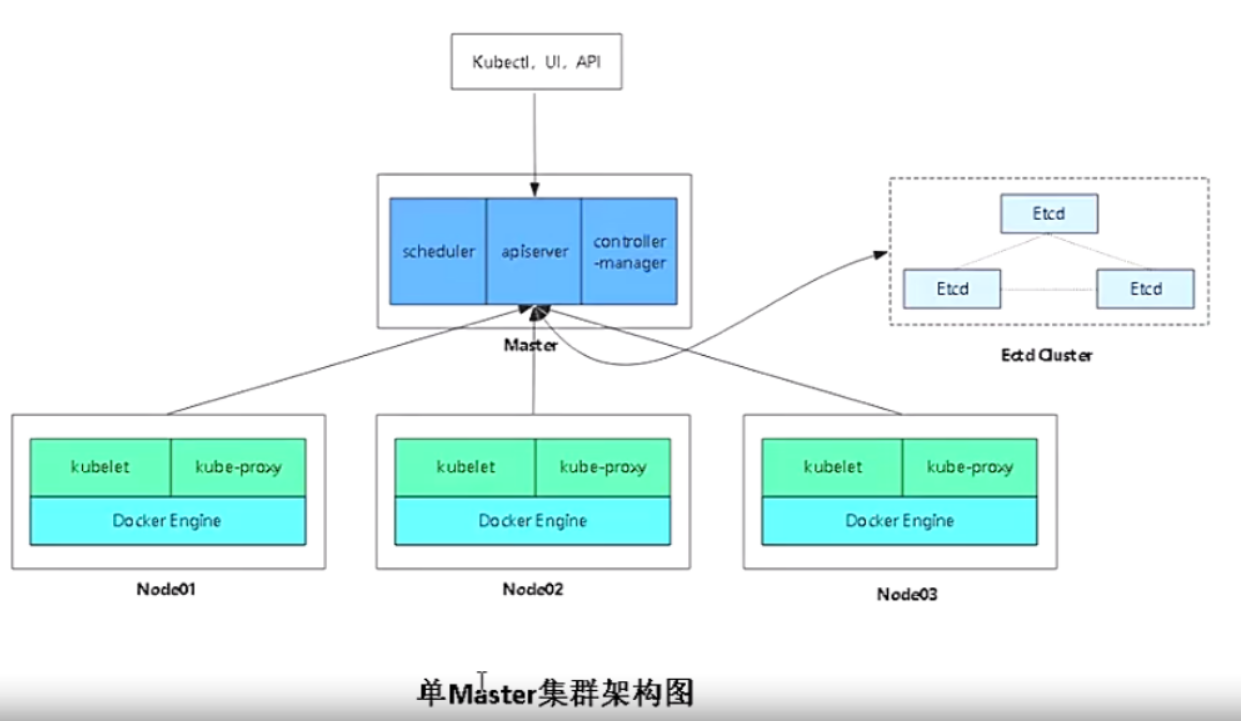

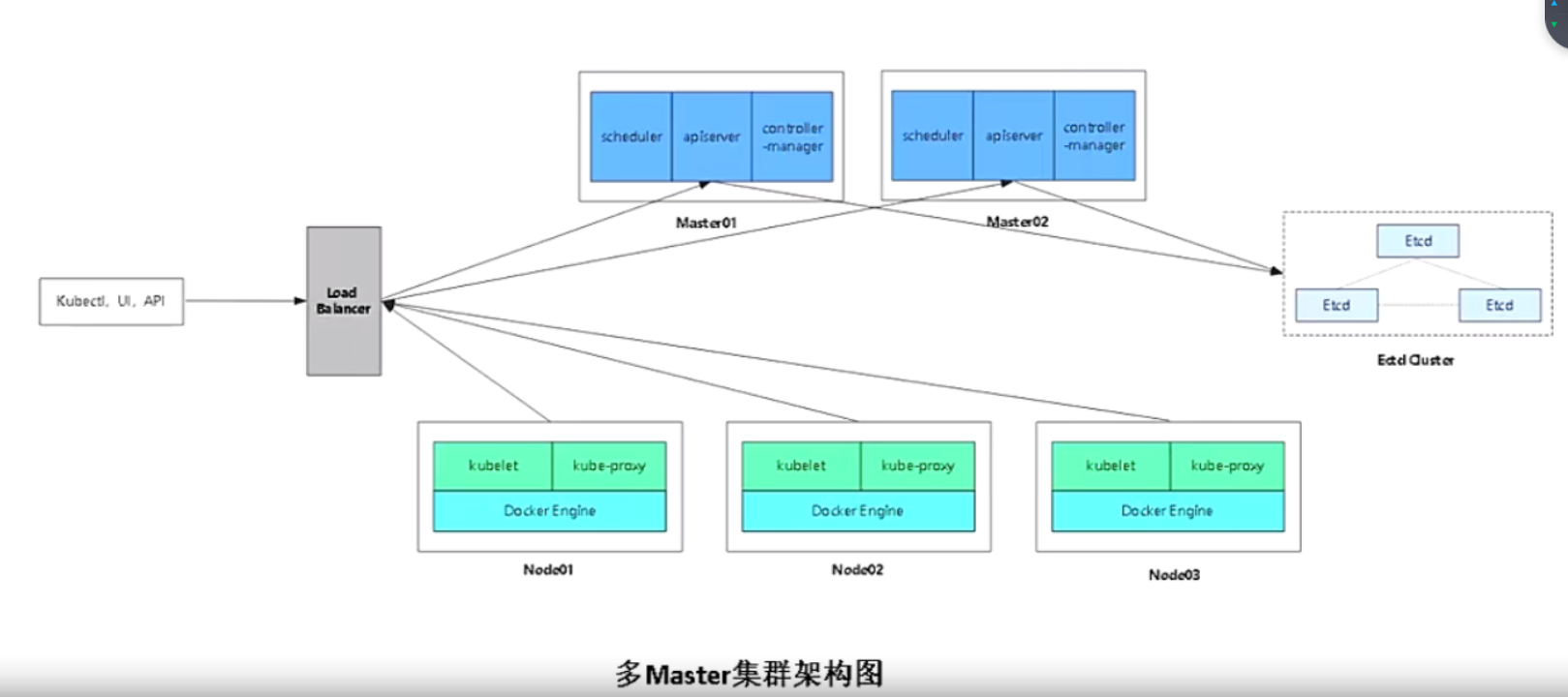

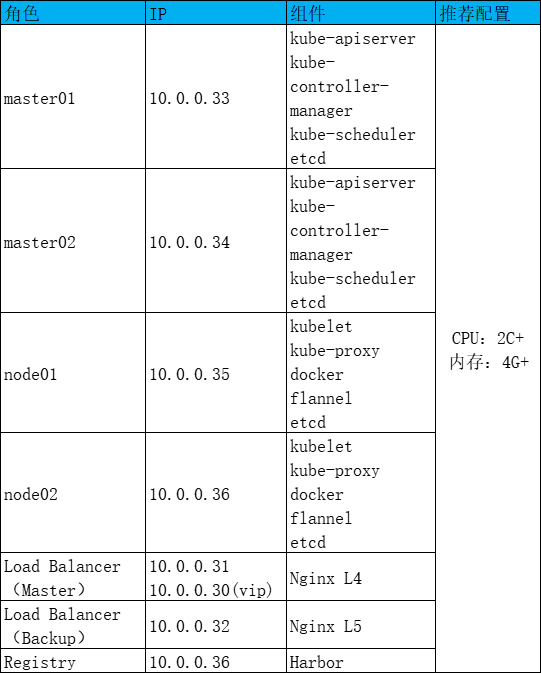

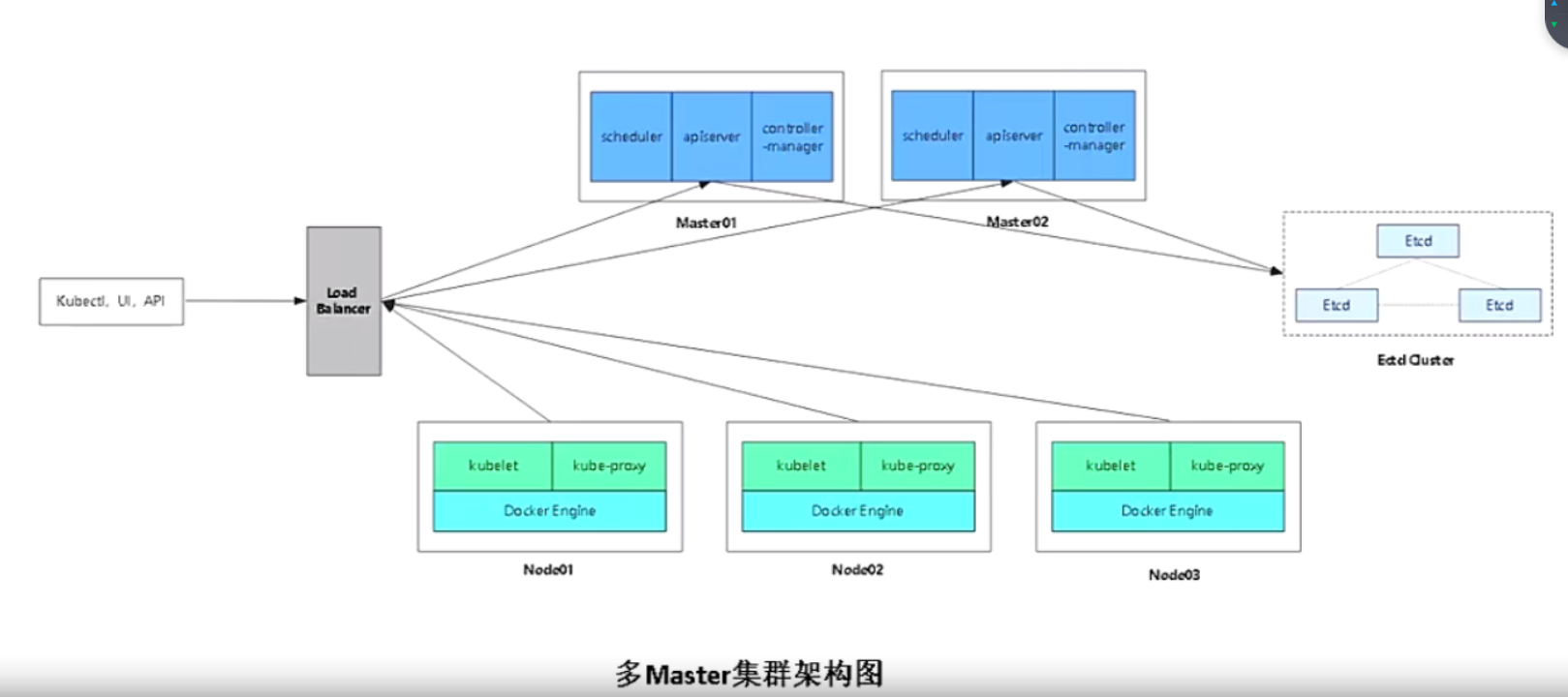

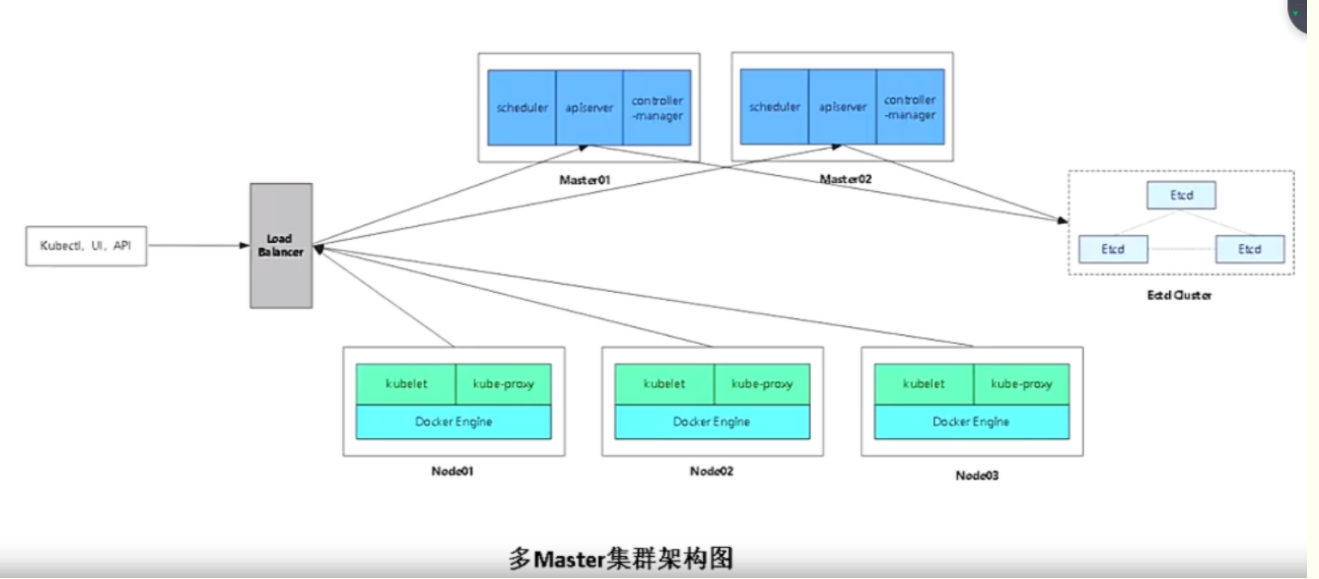

Architecture diagrams for the two cluster layouts

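With multiple masters, a load balancer (LB) has to sit in front of the cluster. All nodes connect to the LB, and the LB forwards their requests to an apiserver (the apiserver is an HTTP-based service). kubectl, the UI, API clients and so on also connect to the LB address. The LB forwards each request to an apiserver, which then carries out the operation.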
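For the multi-master layout, the LB only has to forward TCP traffic to the apiservers. Below is a minimal sketch that assumes nginx, built with the stream module, is the LB. The master IPs, the upstream name k8s-apiserver and the output path /tmp/k8s-lb.conf are hypothetical placeholders. Port 6443 is the apiserver secure port used later in this article.

```bash
#!/usr/bin/env bash
# Sketch: render an nginx "stream" (layer-4 TCP) config that spreads
# apiserver traffic across several masters. Assumes nginx with the stream
# module; master IPs, upstream name and output path are placeholders.
set -euo pipefail

render_lb_conf() {
  local out=$1; shift               # first argument: output file
  {
    echo "stream {"
    echo "    upstream k8s-apiserver {"
    local ip
    for ip in "$@"; do              # remaining arguments: master IPs
      echo "        server ${ip}:6443;"
    done
    echo "    }"
    echo "    server {"
    echo "        listen 6443;"
    echo "        proxy_pass k8s-apiserver;"
    echo "    }"
    echo "}"
  } > "$out"
}

# Usage with two hypothetical masters:
render_lb_conf /tmp/k8s-lb.conf 10.0.0.31 10.0.0.32
cat /tmp/k8s-lb.conf
```

The stream block belongs at the top level of nginx.conf, outside the http block. Nodes and kubectl then point at the LB address on 6443 instead of at a single apiserver.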

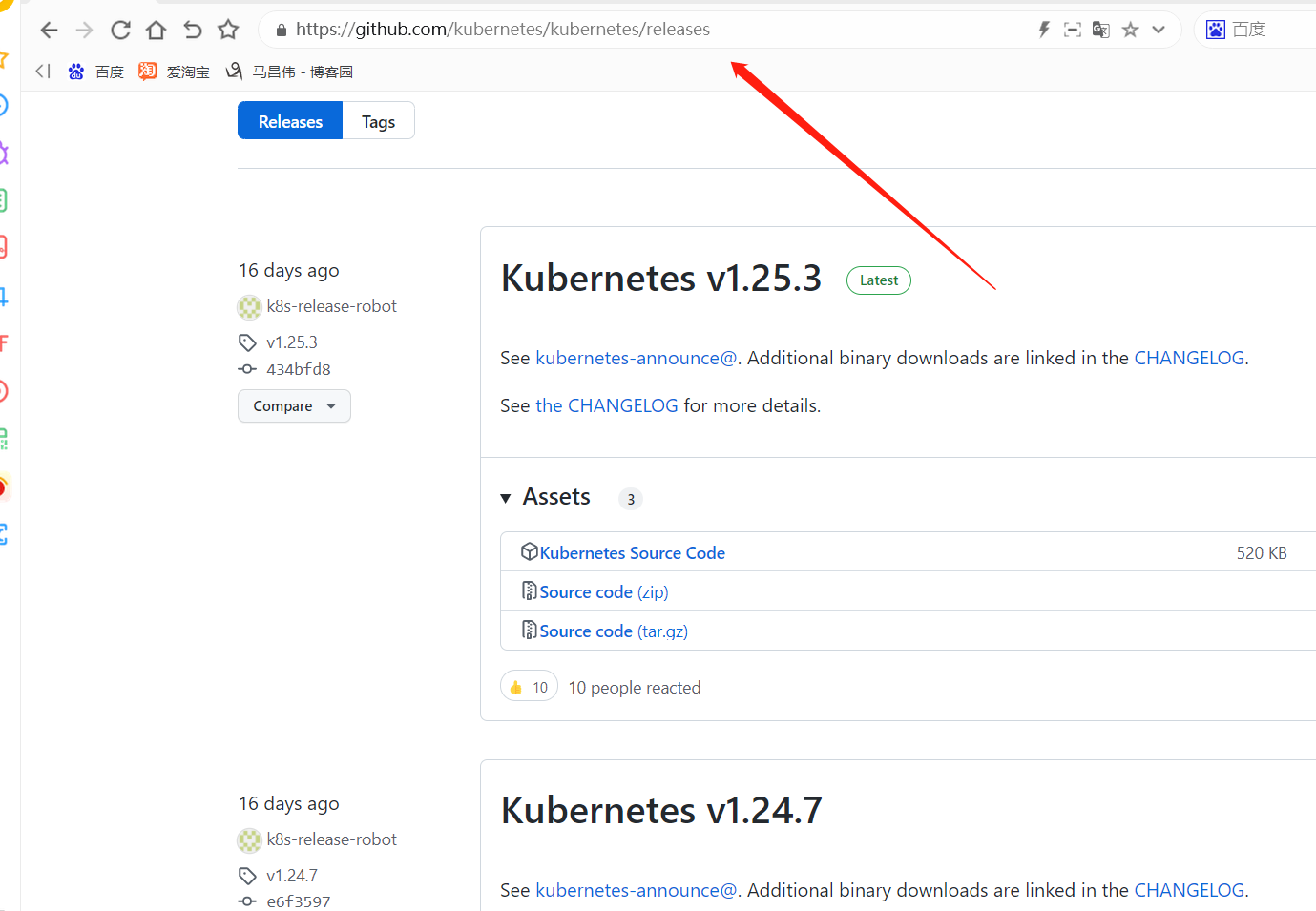

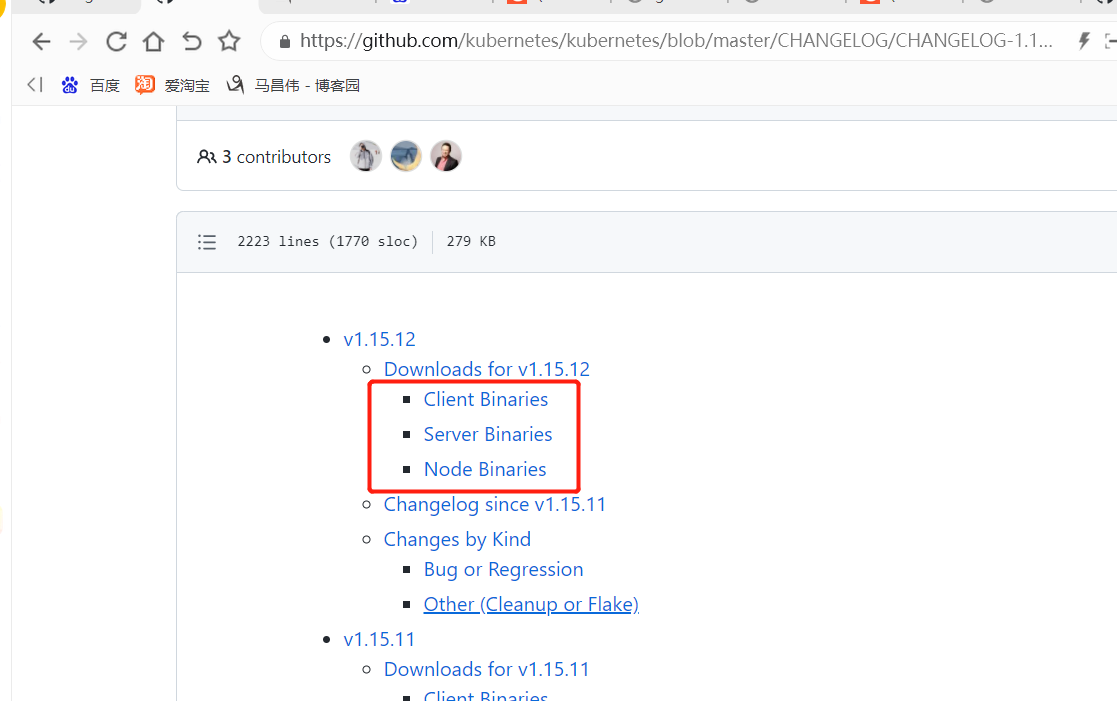

Downloading the packages

Download location: https://github.com/kubernetes

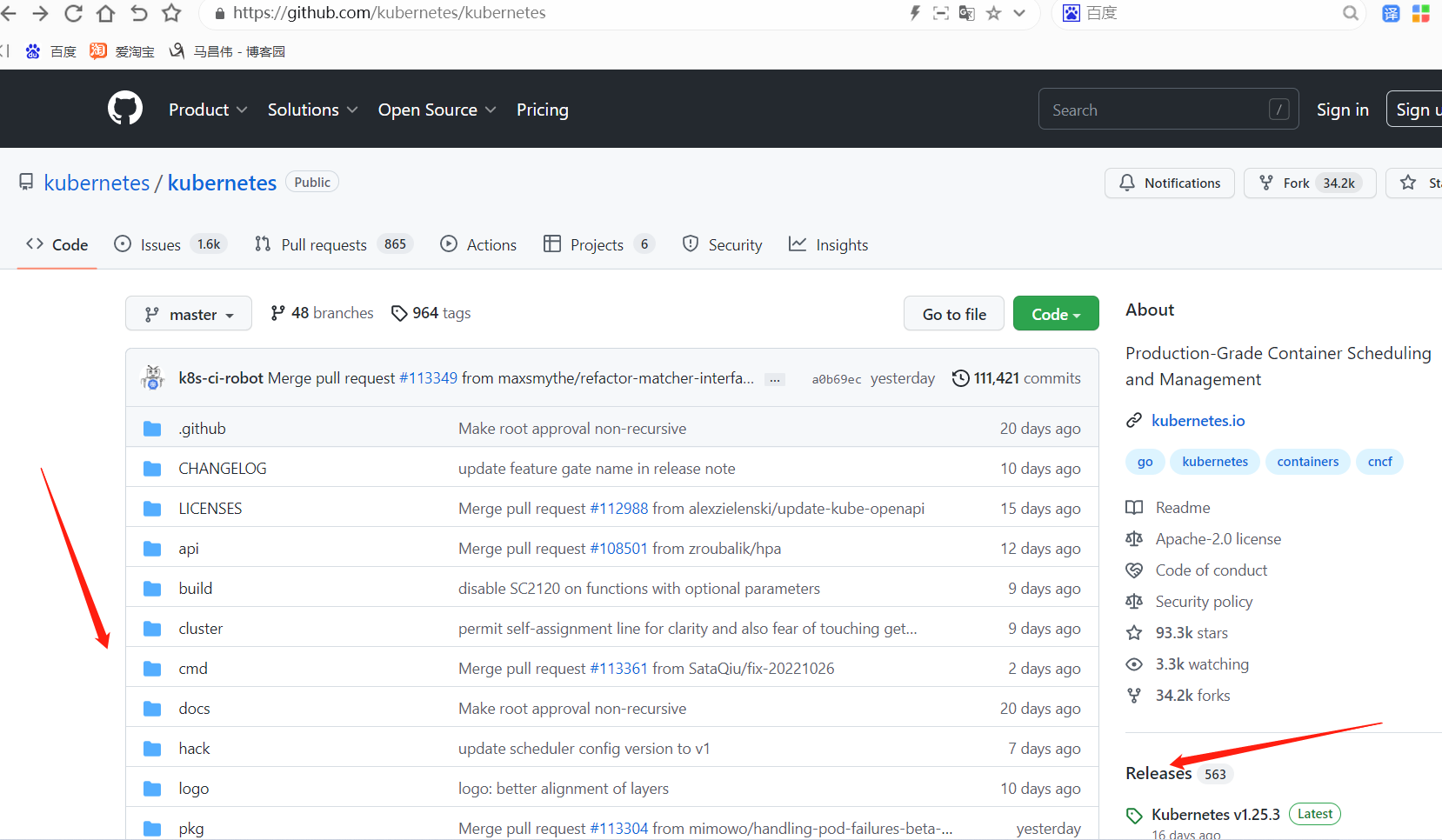

https://github.com/kubernetes/kubernetes/releases

https://storage.googleapis.com/kubernetes-release/release/v1.15.12/kubernetes-server-linux-amd64.tar.gz (the URL for one specific version)

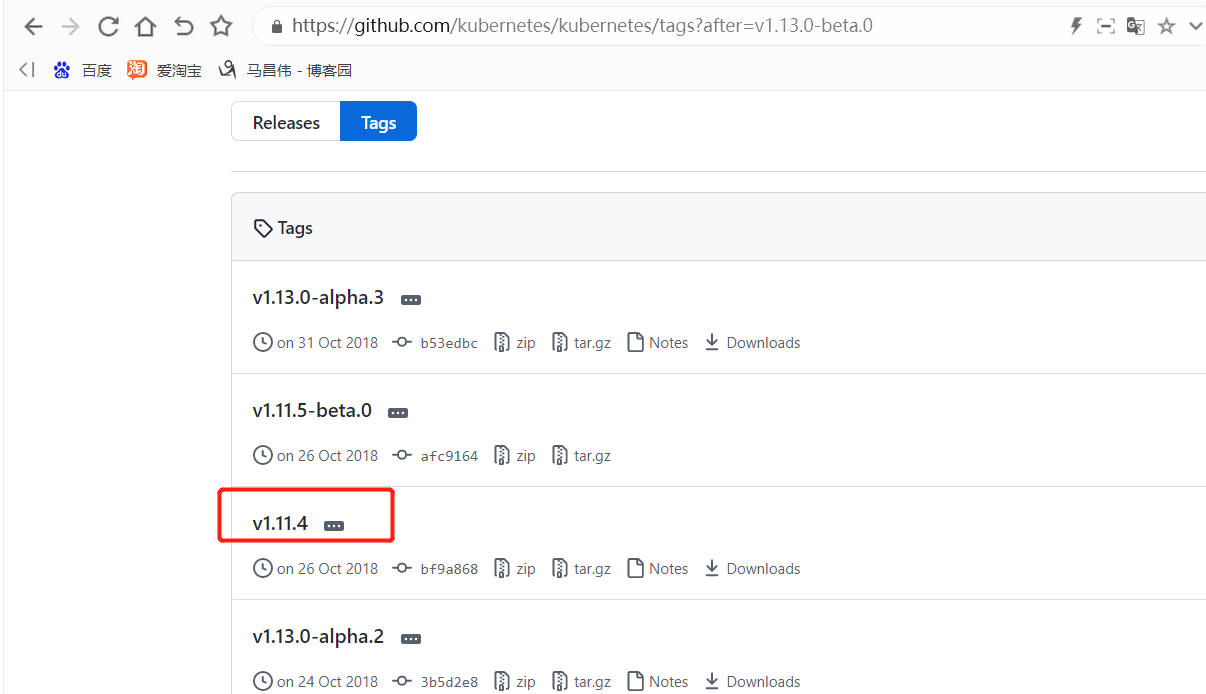

Where the source packages are listed by version

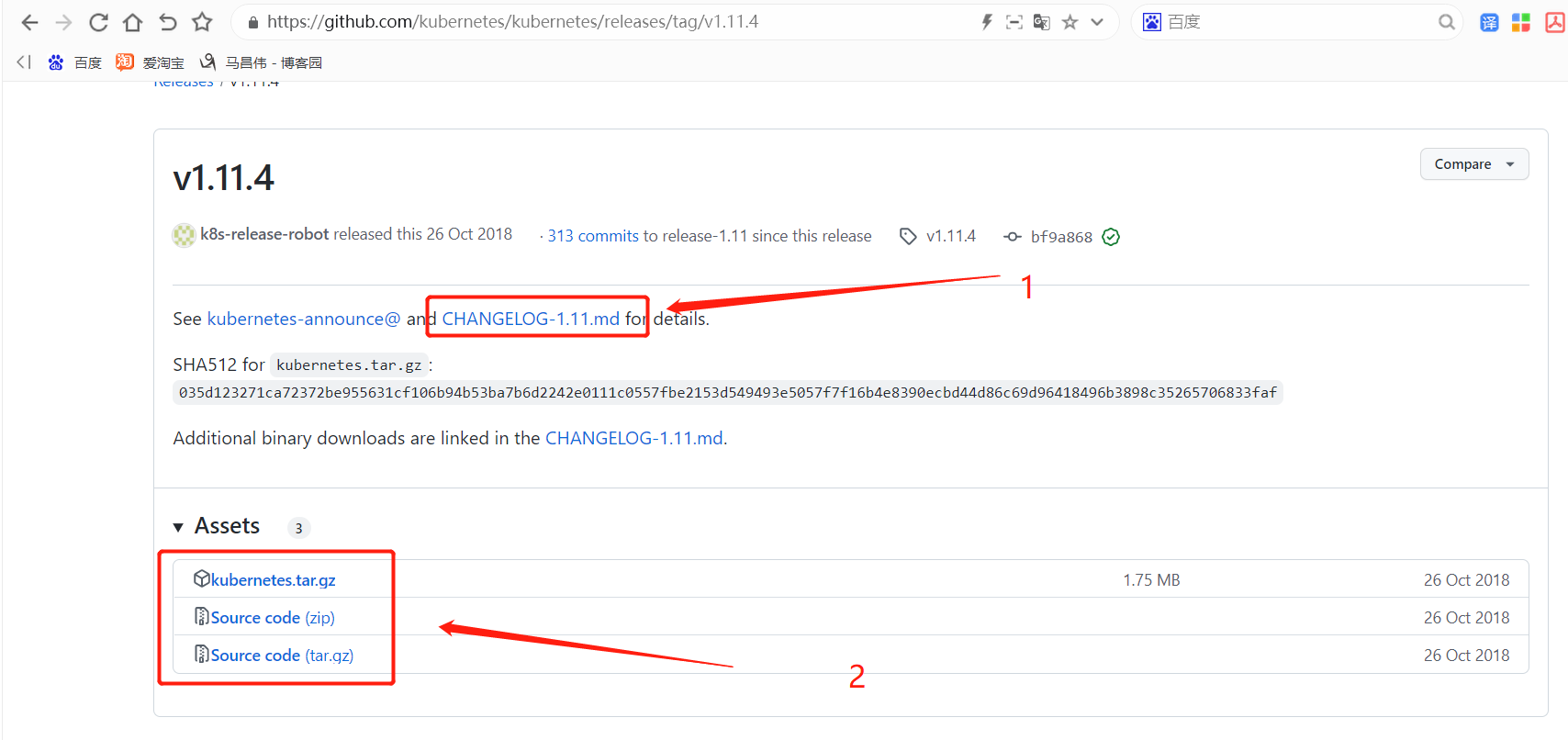

Here I picked version 1.12.14 by its tag.

Item 2 is the source package, which has to be compiled. Item 1 is the official prebuilt binary package.

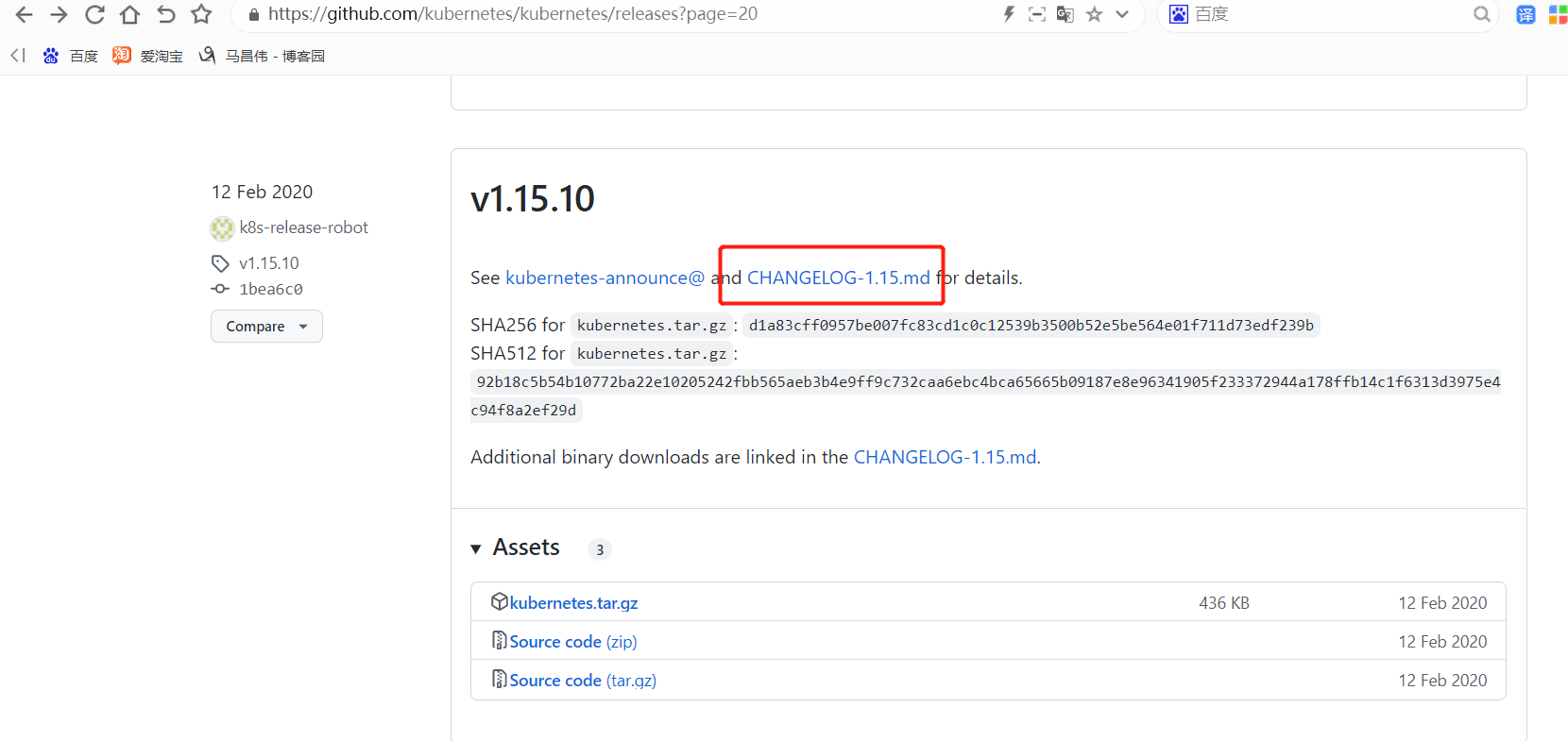

I switched to a different version because the page for the version above returns 404 and has nothing left on it.

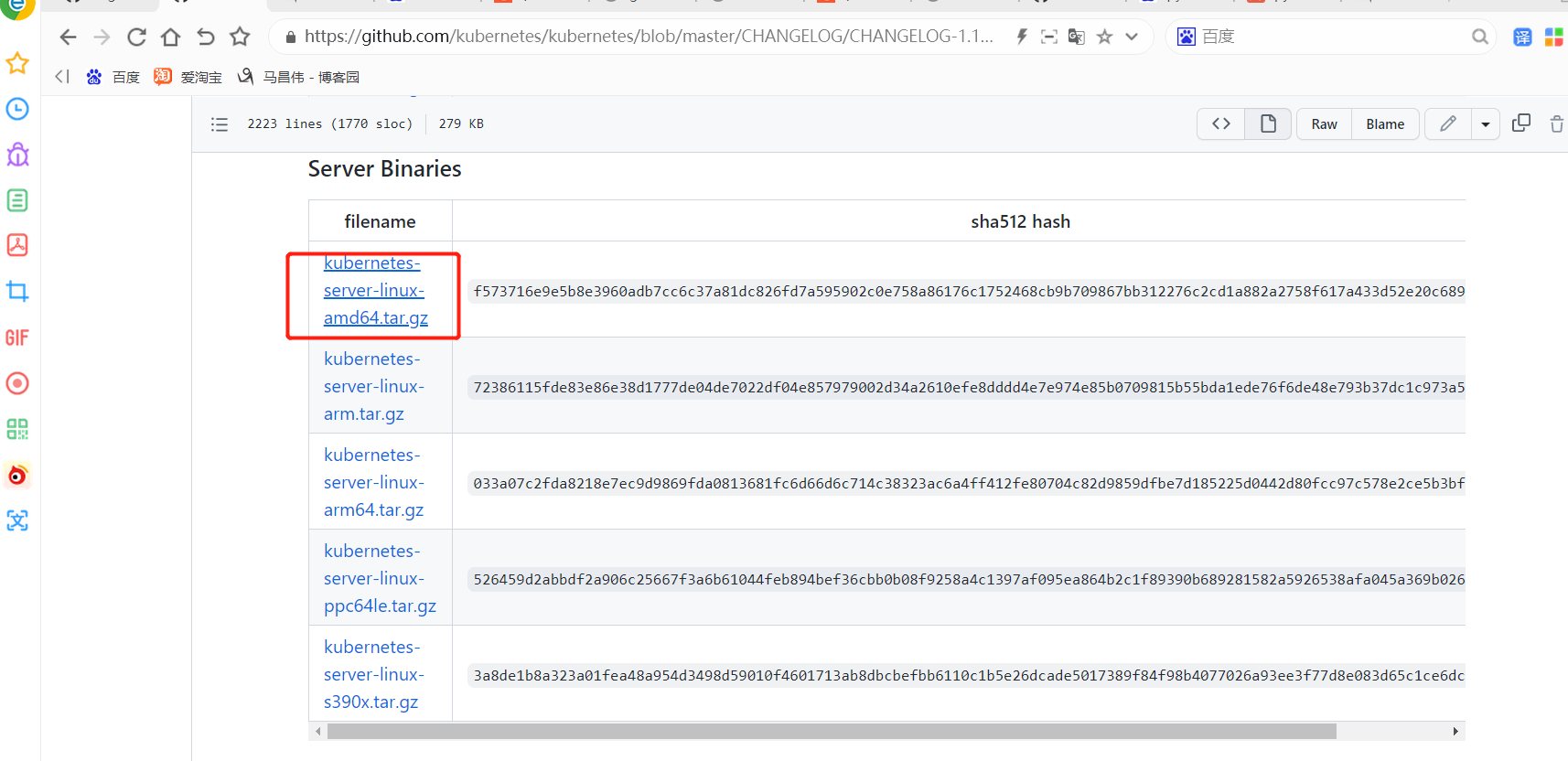

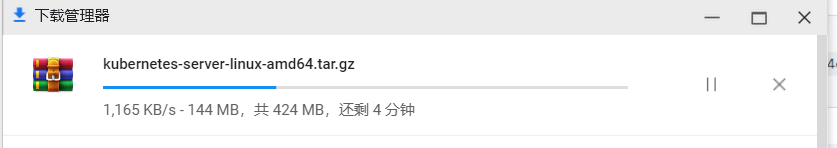

The binaries are split into client, server and node packages. You only need the server package, because it already contains the other two.

https://storage.googleapis.com/kubernetes-release/release/v1.15.12/kubernetes-server-linux-amd64.tar.gz
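A small helper can make the URL pattern above reusable for other versions. This sketch assumes only the storage.googleapis.com layout shown above. Newer releases are also published under dl.k8s.io, so check the release page before relying on either host. The function name k8s_server_url is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: build the server tarball URL for a release, following the pattern above.
set -euo pipefail

k8s_server_url() {
  local version=$1    # e.g. v1.15.12
  echo "https://storage.googleapis.com/kubernetes-release/release/${version}/kubernetes-server-linux-amd64.tar.gz"
}

k8s_server_url v1.15.12
# Download and unpack (needs network access); the binaries end up in
# kubernetes/server/bin/:
#   curl -fL -o kubernetes-server-linux-amd64.tar.gz "$(k8s_server_url v1.15.12)"
#   tar -xzf kubernetes-server-linux-amd64.tar.gz
```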

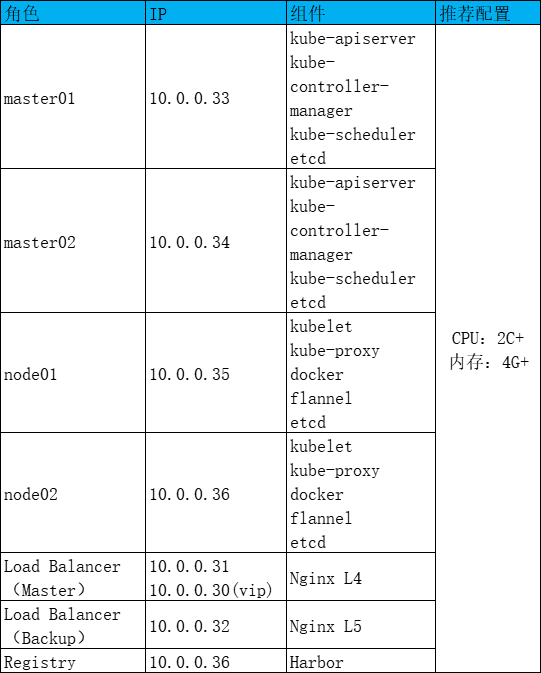

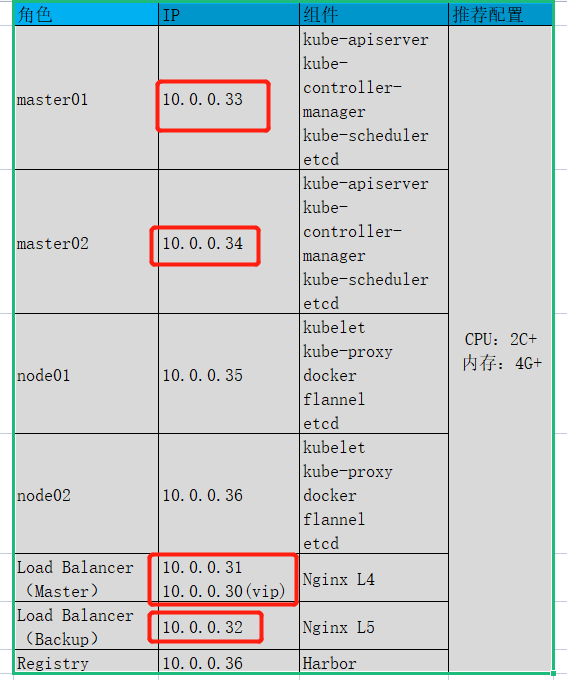

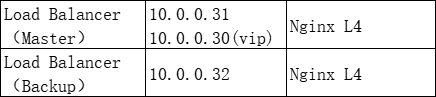

Deployment plan

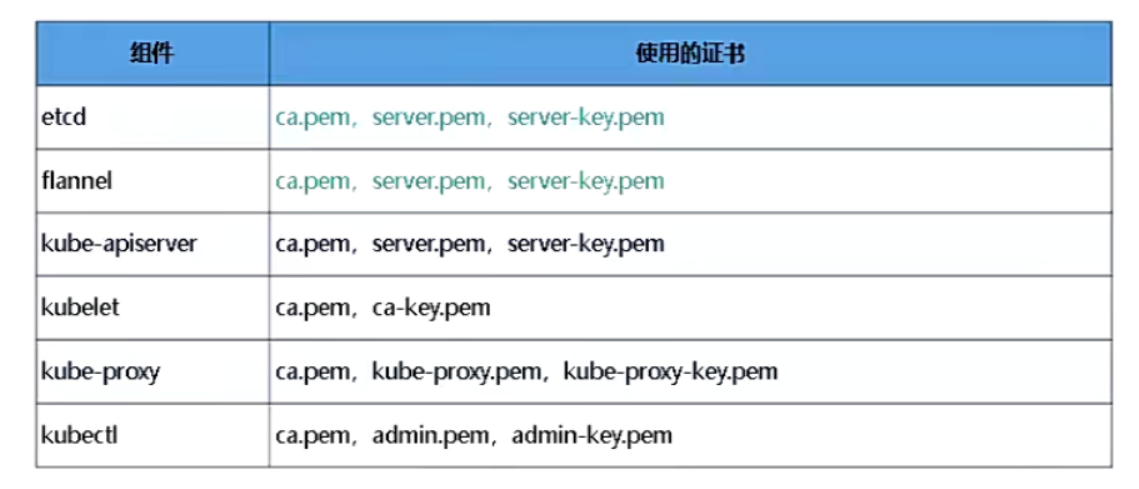

Self-signed SSL certificates

Note: incorrect or unsynchronized server clocks can make certificate validation fail.

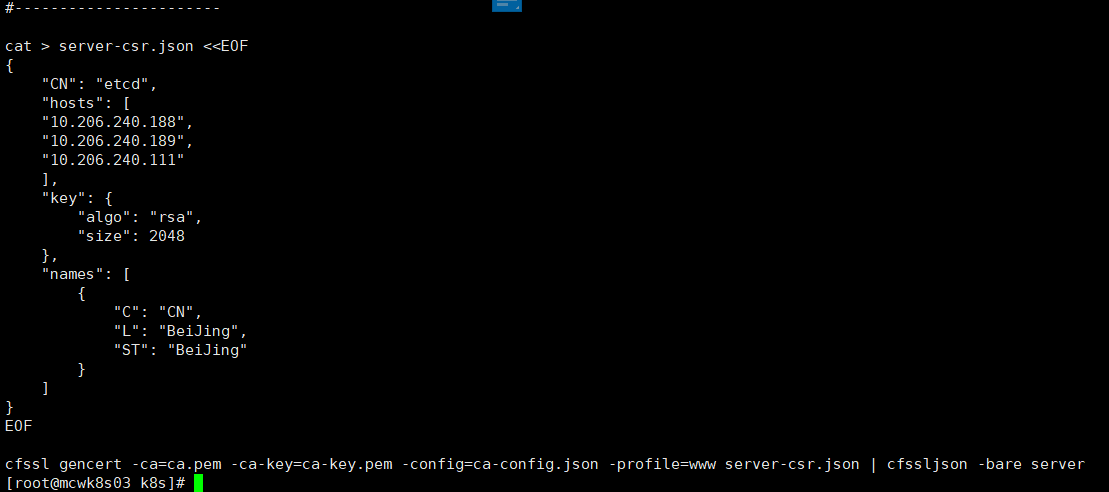

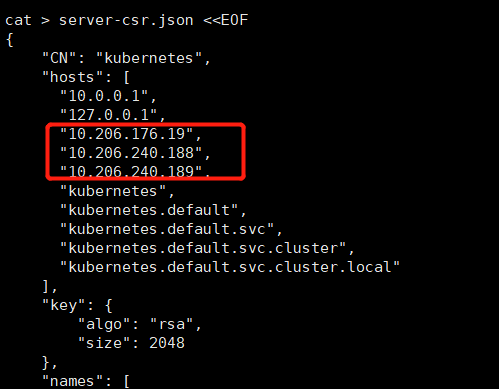

```bash
cat > ca-config.json <<EOF
{
  "signing": {
    "default": {
      "expiry": "87600h"
    },
    "profiles": {
      "www": {
        "expiry": "87600h",
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ]
      }
    }
  }
}
EOF

cat > ca-csr.json <<EOF
{
  "CN": "etcd CA",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "Beijing",
      "ST": "Beijing"
    }
  ]
}
EOF

cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

#-----------------------

cat > server-csr.json <<EOF
{
  "CN": "etcd",
  "hosts": [
    "10.206.240.188",
    "10.206.240.189",
    "10.206.240.111"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "BeiJing",
      "ST": "BeiJing"
    }
  ]
}
EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=www server-csr.json | cfssljson -bare server
```

```bash
curl -L https://pkg.cfssl.org/R1.2/cfssl_linux-amd64 -o /usr/local/bin/cfssl
curl -L https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64 -o /usr/local/bin/cfssljson
curl -L https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64 -o /usr/local/bin/cfssl-certinfo
chmod +x /usr/local/bin/cfssl /usr/local/bin/cfssljson /usr/local/bin/cfssl-certinfo
```

Self-signed SSL certificates are needed here.

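The self-signed certificates come in two sets: one for etcd and one for the apiserver (see the architecture diagram below). etcd is a key-value database cluster that also communicates over HTTP. For security we make it use HTTPS, which protects the data against tampering and leaks. The apiserver uses HTTPS as well. HTTPS provides an encrypted channel: when a client accesses the apiserver, the data between them is encrypted in transit. Even if someone intercepts it, they only get ciphertext and cannot read the actual content. The same holds for etcd.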

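etcd has its own set of certificates: a self-signed CA plus the certificate and private key it issues. flannel is the cluster network and provides cross-host networking between cluster nodes. Within a Kubernetes cluster the node networks are connected, so pods can be reached from every node and pods can talk to each other. flannel stores its subnet routing information in etcd, so it uses the etcd certificate set to talk to etcd.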

The apiserver has a separate set of certificates. Other components connect to the apiserver, so they use the apiserver's certificates.

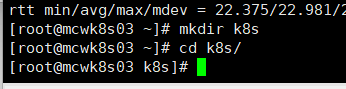

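First we issue an SSL certificate for etcd and deploy etcd. Then we install Docker on every node. We start with a single-master cluster and later evolve it into a multi-master one.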

Deploy the single-master cluster first, using the three hosts 33, 35 and 36.

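We start by self-signing the etcd certificates. etcd is a distributed key-value database cluster. At least three members are recommended, and the official recommendation is five. Three members tolerate one failure; five tolerate two.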
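The failure tolerance above follows from Raft's majority rule. A cluster of n members needs floor(n/2)+1 of them to agree, so it survives floor((n-1)/2) failures. The quick shell check below walks through that arithmetic; the function names are illustrative.

```bash
#!/usr/bin/env bash
# Majority (quorum) arithmetic behind etcd's failure tolerance.
set -euo pipefail

quorum()    { echo $(( $1 / 2 + 1 )); }    # members that must agree
tolerance() { echo $(( ($1 - 1) / 2 )); }  # members that may fail

for n in 1 2 3 4 5; do
  echo "members=$n quorum=$(quorum "$n") tolerated_failures=$(tolerance "$n")"
done
```

With four members the quorum is three, so a fourth member adds no tolerance over three. That is why odd member counts are recommended.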

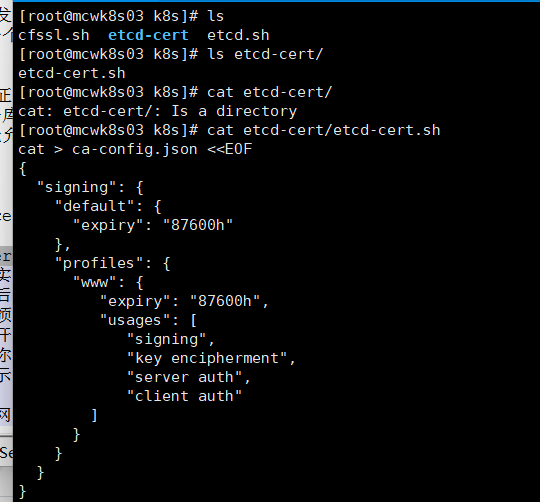

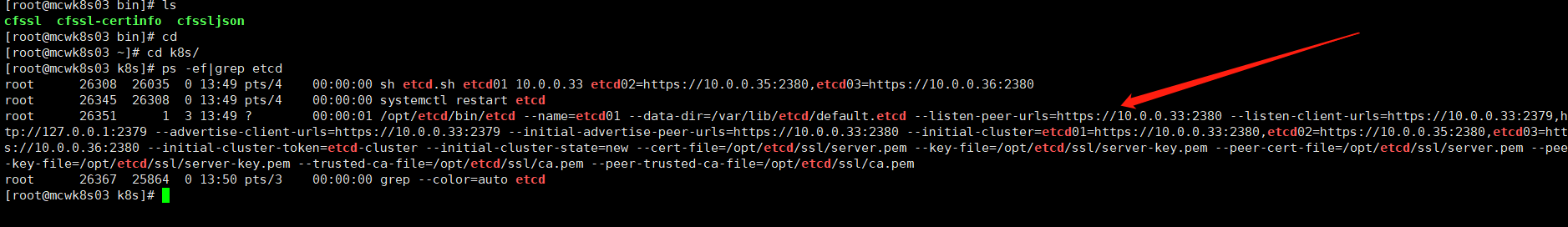

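We download three tools. cfssl generates certificates. cfssljson takes cfssl's JSON output and writes it out as certificate files. cfssl-certinfo shows information about a generated certificate.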

```
[root@mcwk8s03 k8s]# ls
cfssl.sh  etcd-cert  etcd.sh
[root@mcwk8s03 k8s]# cat cfssl.sh
curl -L https://pkg.cfssl.org/R1.2/cfssl_linux-amd64 -o /usr/local/bin/cfssl
curl -L https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64 -o /usr/local/bin/cfssljson
curl -L https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64 -o /usr/local/bin/cfssl-certinfo
chmod +x /usr/local/bin/cfssl /usr/local/bin/cfssljson /usr/local/bin/cfssl-certinfo
[root@mcwk8s03 k8s]# ls /usr/local/bin/
[root@mcwk8s03 k8s]# sh cfssl.sh
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
  0     0    0     0    0     0      0      0 --:--:--  0:00:03 --:--:--     0
  0     0    0     0    0     0      0      0 --:--:--  0:00:04 --:--:--     0
100 9.8M  100 9.8M    0     0   582k      0  0:00:17  0:00:17 --:--:--  817k
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
  0     0    0     0    0     0      0      0 --:--:--  0:00:05 --:--:--     0
  0     0    0     0    0     0      0      0 --:--:--  0:00:25 --:--:--     0
curl: (35) TCP connection reset by peer
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
  0     0    0     0    0     0      0      0 --:--:--  0:00:09 --:--:--     0
  0     0    0     0    0     0      0      0 --:--:--  0:00:29 --:--:--     0
curl: (35) TCP connection reset by peer
chmod: cannot access ‘/usr/local/bin/cfssljson’: No such file or directory
chmod: cannot access ‘/usr/local/bin/cfssl-certinfo’: No such file or directory
[root@mcwk8s03 k8s]# ls /usr/local/bin/
cfssl
[root@mcwk8s03 k8s]#
```

Two of the files failed to download. Instead, we upload the packages downloaded in a browser and rename them.

```
[root@mcwk8s03 bin]# ls /root/k8s/
cfssl.sh  etcd-cert/  etcd.sh
[root@mcwk8s03 bin]# cat /root/k8s/cfssl.sh
curl -L https://pkg.cfssl.org/R1.2/cfssl_linux-amd64 -o /usr/local/bin/cfssl
curl -L https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64 -o /usr/local/bin/cfssljson
curl -L https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64 -o /usr/local/bin/cfssl-certinfo
chmod +x /usr/local/bin/cfssl /usr/local/bin/cfssljson /usr/local/bin/cfssl-certinfo
[root@mcwk8s03 bin]# ls
cfssl  cfssl-certinfo_linux-amd64  cfssljson_linux-amd64
[root@mcwk8s03 bin]# mv cfssl-certinfo_linux-amd64 cfssl-certinfo
[root@mcwk8s03 bin]# mv cfssljson_linux-amd64 cfssljson
[root@mcwk8s03 bin]# chmod +x cfssl-certinfo cfssljson
[root@mcwk8s03 bin]# ll
total 18808
-rwxr-xr-x. 1 root root 10376657 Oct 30 11:09 cfssl
-rwxr-xr-x. 1 root root  6595195 Oct 30 10:48 cfssl-certinfo
-rwxr-xr-x. 1 root root  2277873 Oct 30 10:48 cfssljson
[root@mcwk8s03 bin]#
```
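The rename-and-chmod step can also be done in one pass with install, which copies, renames and sets the mode at the same time. This sketch assumes the browser downloads kept their original _linux-amd64 file names. The function name install_cfssl_tools is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: install cfssl binaries that were downloaded in a browser and still
# carry the "_linux-amd64" suffix. Source/destination dirs are parameters.
set -euo pipefail

install_cfssl_tools() {
  local src_dir=$1 dest_dir=$2 tool f
  for tool in cfssl cfssljson cfssl-certinfo; do
    f="${src_dir}/${tool}_linux-amd64"
    if [[ -f "$f" ]]; then
      # copy, drop the suffix and make executable (mode 0755) in one step
      install -m 0755 "$f" "${dest_dir}/${tool}"
    else
      echo "missing: $f" >&2
    fi
  done
}

# Usage, with this article's target directory:
#   install_cfssl_tools ~/Downloads /usr/local/bin
```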

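Let's look at the etcd-cert/etcd-cert.sh script. A certificate has to be issued by a certificate authority (CA). In practice you usually buy one from a CA vendor, which issues it for you, and then you can use it; but that costs money. These vendors' certificates come from authorities that are built into the list of trusted CAs. When you open a website, the browser checks the site's certificate against its built-in trusted CAs. If the certificate comes from one of them, the site is trusted and the HTTPS indicator shows green; otherwise it shows red. Self-signing means acting as the CA ourselves. The certificates are generated the same way, but they are not trusted on the public Internet. We set up our own CA, issue certificates with it, and give them to our services.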

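Now look at ca-config.json. It does the same job as generating certificates with openssl, but it is clearer. By default, certificates expire after ten years (87600h). If you select the www profile under profiles, its settings apply instead, and its expiry is also ten years. The other fields are not essential; the defaults are fine.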

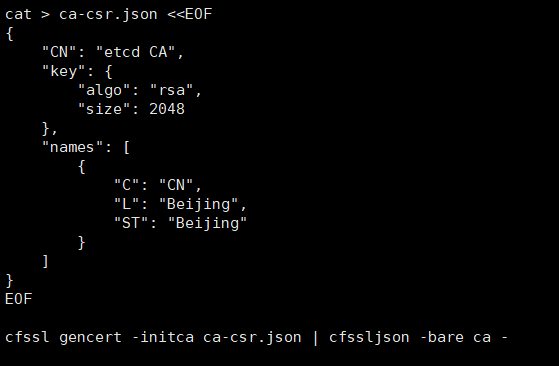

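Next is the CA's certificate signing request (CSR), that is, the request for the CA root certificate. It defines the key algorithm (algo) and some attributes for your location. cfssl then initializes the CA, and its output is piped into cfssljson.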

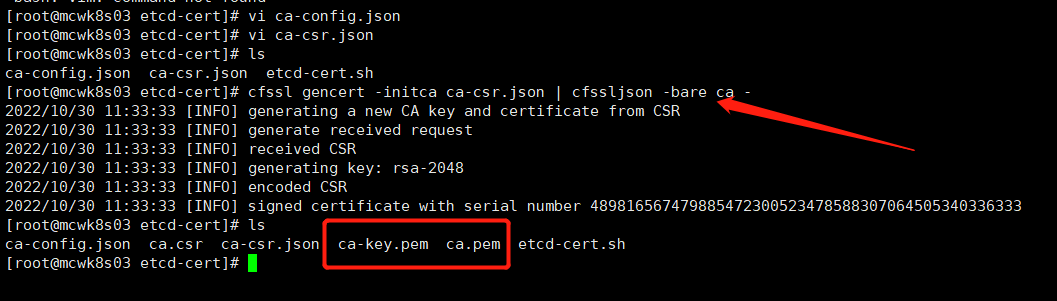

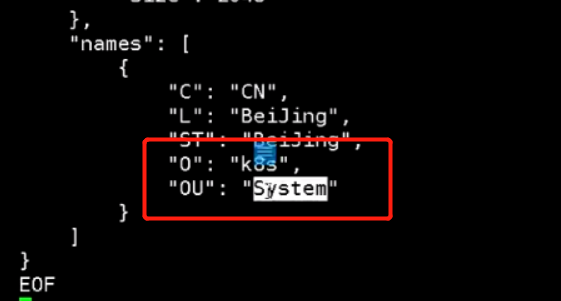

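Create these two files in the directory and run the command. It generates the root certificate ca.pem and its key ca-key.pem. Together they can be thought of as a CA that can issue certificates for other sites.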

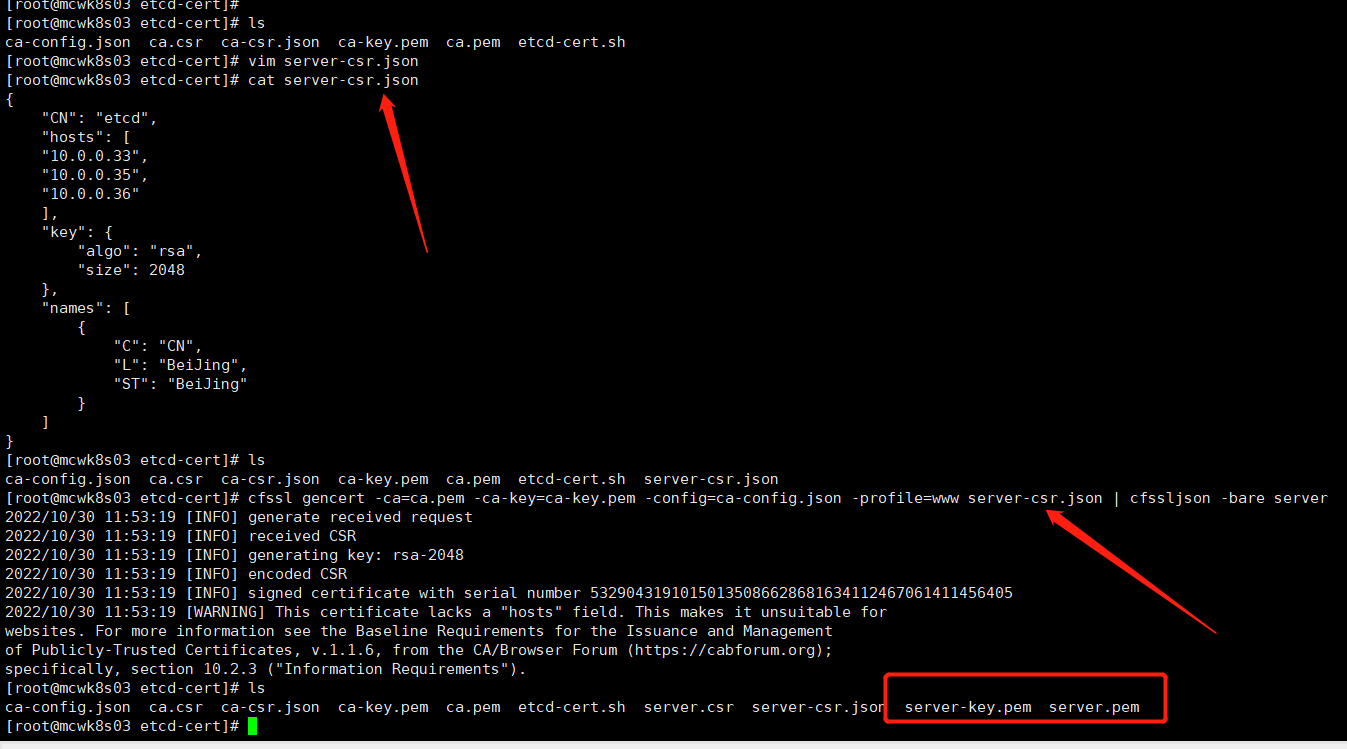

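Now we can issue an HTTPS certificate for etcd, using server-csr.json. CN can be any name. hosts is the critical field: it lists the IPs of the nodes where etcd is deployed, and for now that is nodes 33, 35 and 36. The location in names does not have to be real; it is not a key setting. Then come the key algorithm and size. In the signing command:

- -ca specifies your CA.
- -ca-key specifies the CA's private key.
- -config specifies the CA configuration file.
- -profile selects the www profile under profiles.
- The last argument, server-csr.json, is the configuration of the service that needs the certificate (the JSON file below).

The important part is the IPs of the three etcd nodes.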
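The hosts list decides which IPs the certificate is valid for. It can therefore help to generate server-csr.json from the member list rather than editing it by hand. This sketch uses this article's three etcd IPs and copies the names block from the file above. The helper name write_server_csr and the /tmp output path are illustrative.

```bash
#!/usr/bin/env bash
# Sketch: write server-csr.json with the etcd member IPs as "hosts" (SANs).
set -euo pipefail

write_server_csr() {
  local out=$1; shift
  local hosts="" ip
  for ip in "$@"; do
    hosts+="${hosts:+,}\"${ip}\""   # comma-separated, each IP quoted
  done
  cat > "$out" <<EOF
{
  "CN": "etcd",
  "hosts": [${hosts}],
  "key": { "algo": "rsa", "size": 2048 },
  "names": [ { "C": "CN", "L": "BeiJing", "ST": "BeiJing" } ]
}
EOF
}

write_server_csr /tmp/server-csr.json 10.0.0.33 10.0.0.35 10.0.0.36
cat /tmp/server-csr.json
# Then sign it as above (run where ca.pem, ca-key.pem and ca-config.json live):
#   cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json \
#     -profile=www /tmp/server-csr.json | cfssljson -bare server
```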

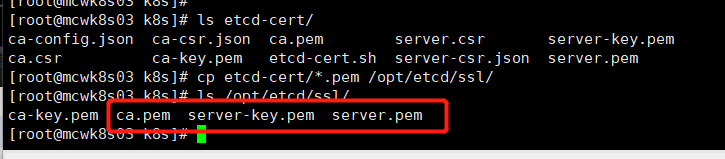

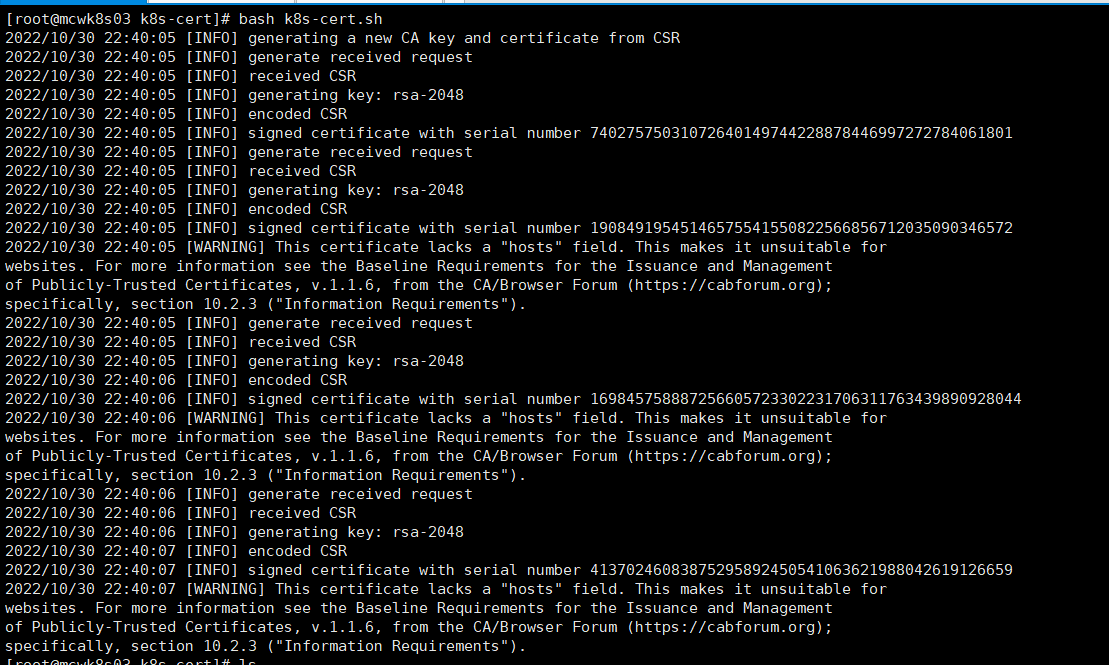

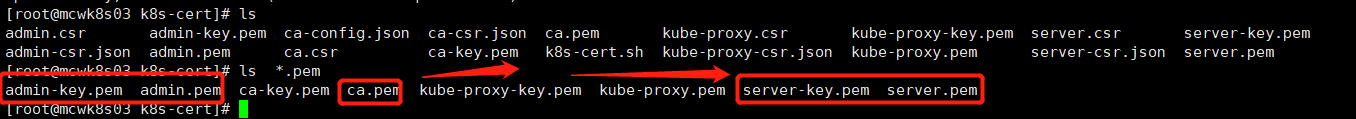

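Run the command to generate the certificate. It produces two files, server-key.pem and server.pem. For HTTPS, nginx uses a .cert file and a .key file; that is only a naming difference. The .cert file corresponds to server.pem and the .key file to server-key.pem. We use server-key.pem and server.pem, plus the CA certificate ca.pem: because the CA is self-signed, only this CA (ca.pem) can verify the certificates we issued. With that, the certificates are self-signed and ready to use.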

Deploying the etcd database cluster

Downloading the package

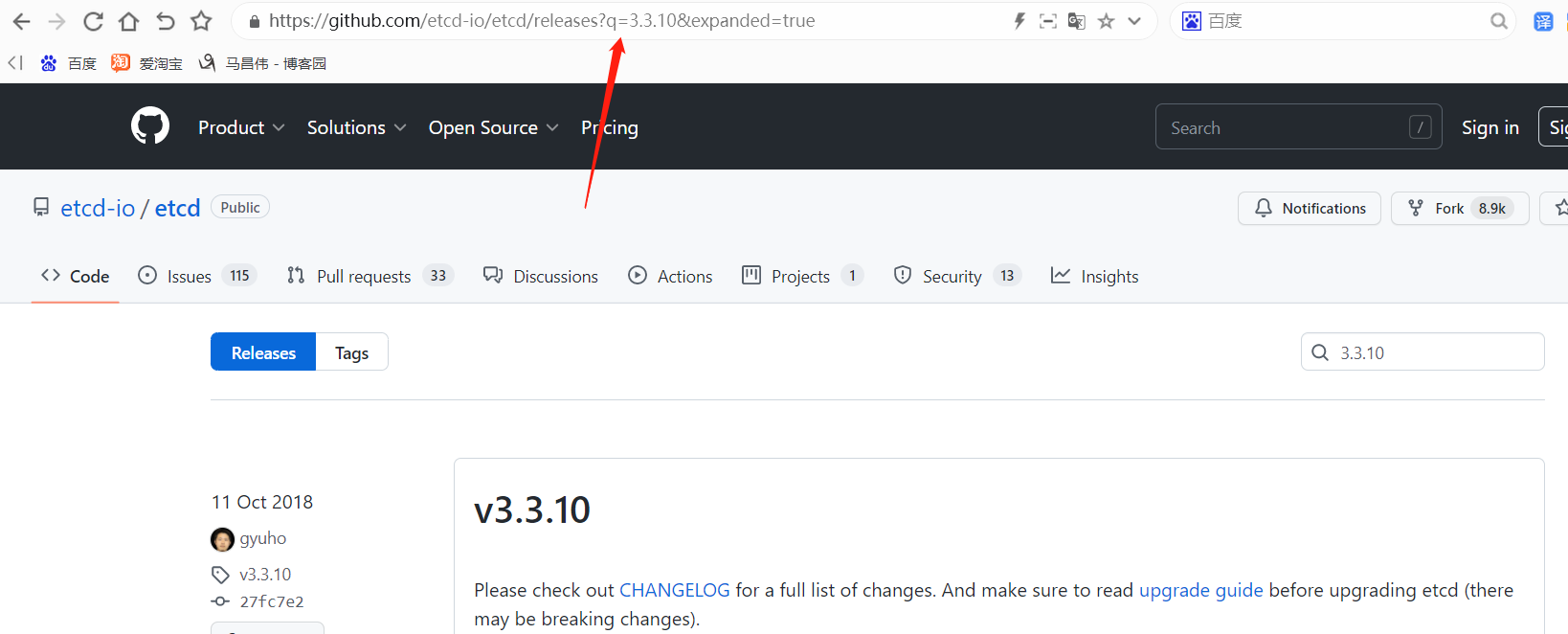

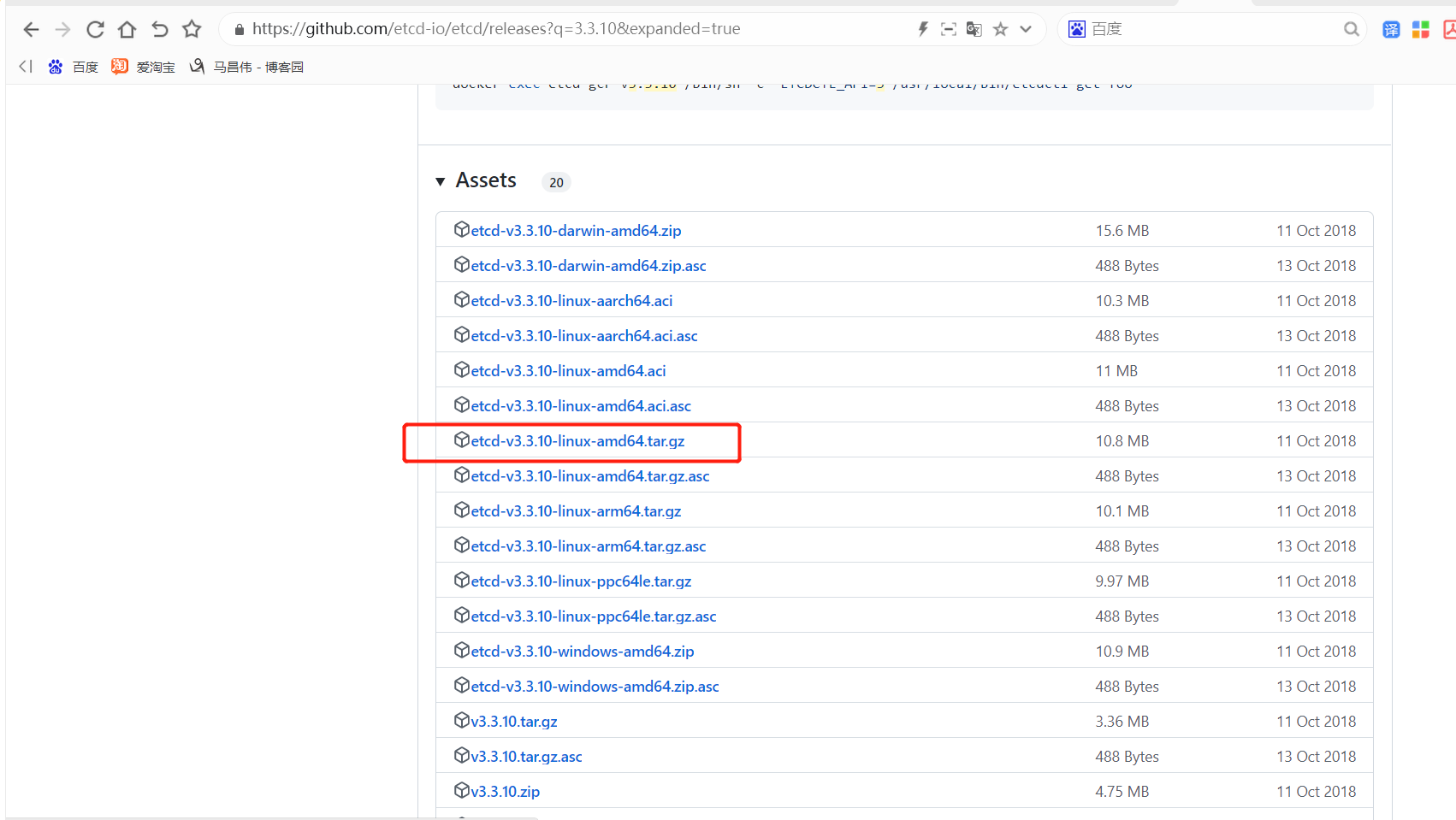

Here we search for and download 3.3.10.

Pick the amd64 build.

https://github.com/etcd-io/etcd/releases/download/v3.3.10/etcd-v3.3.10-linux-amd64.tar.gz

Deploying etcd

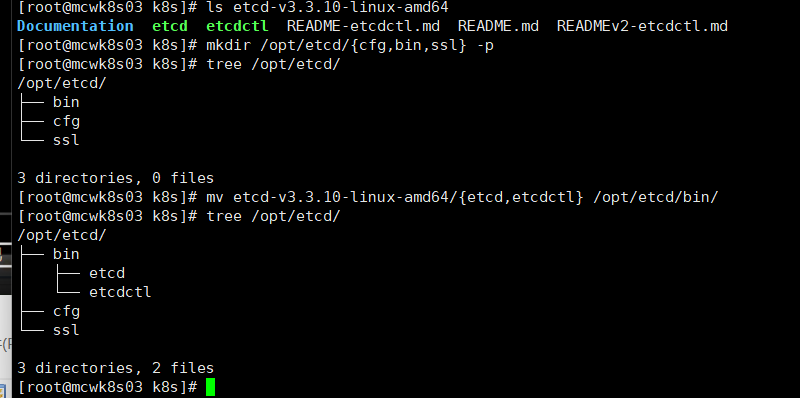

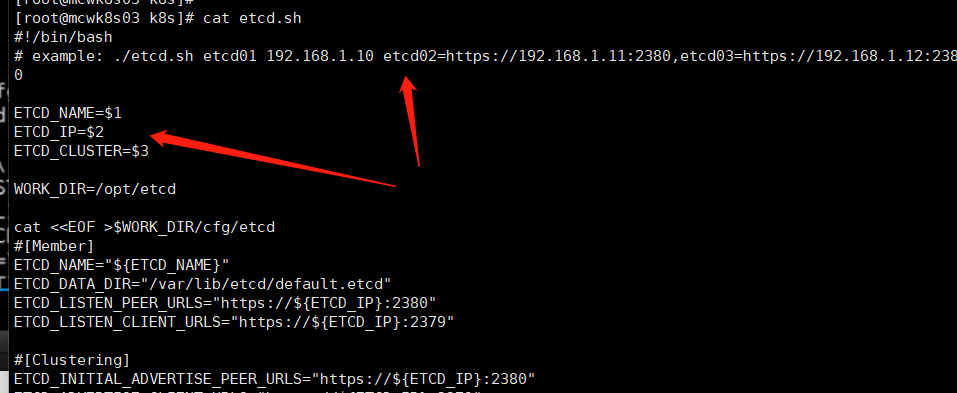

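Let's look at etcd.sh. Deploying an application generally comes down to two things: a configuration file, and using systemctl to manage the application.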

```
[root@mcwk8s03 k8s]# ls
cfssl.sh  etcd-cert  etcd.sh
[root@mcwk8s03 k8s]# cat etcd.sh
#!/bin/bash
# example: ./etcd.sh etcd01 192.168.1.10 etcd02=https://192.168.1.11:2380,etcd03=https://192.168.1.12:2380

ETCD_NAME=$1
ETCD_IP=$2
ETCD_CLUSTER=$3

WORK_DIR=/opt/etcd

cat <<EOF >$WORK_DIR/cfg/etcd
#[Member]
ETCD_NAME="${ETCD_NAME}"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://${ETCD_IP}:2380"
ETCD_LISTEN_CLIENT_URLS="https://${ETCD_IP}:2379"

#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ETCD_IP}:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://${ETCD_IP}:2379"
ETCD_INITIAL_CLUSTER="etcd01=https://${ETCD_IP}:2380,${ETCD_CLUSTER}"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF

cat <<EOF >/usr/lib/systemd/system/etcd.service
[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target

[Service]
Type=notify
EnvironmentFile=${WORK_DIR}/cfg/etcd
ExecStart=${WORK_DIR}/bin/etcd \
--name=\${ETCD_NAME} \
--data-dir=\${ETCD_DATA_DIR} \
--listen-peer-urls=\${ETCD_LISTEN_PEER_URLS} \
--listen-client-urls=\${ETCD_LISTEN_CLIENT_URLS},http://127.0.0.1:2379 \
--advertise-client-urls=\${ETCD_ADVERTISE_CLIENT_URLS} \
--initial-advertise-peer-urls=\${ETCD_INITIAL_ADVERTISE_PEER_URLS} \
--initial-cluster=\${ETCD_INITIAL_CLUSTER} \
--initial-cluster-token=\${ETCD_INITIAL_CLUSTER_TOKEN} \
--initial-cluster-state=new \
--cert-file=${WORK_DIR}/ssl/server.pem \
--key-file=${WORK_DIR}/ssl/server-key.pem \
--peer-cert-file=${WORK_DIR}/ssl/server.pem \
--peer-key-file=${WORK_DIR}/ssl/server-key.pem \
--trusted-ca-file=${WORK_DIR}/ssl/ca.pem \
--peer-trusted-ca-file=${WORK_DIR}/ssl/ca.pem
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload
systemctl enable etcd
systemctl restart etcd
[root@mcwk8s03 k8s]#
```

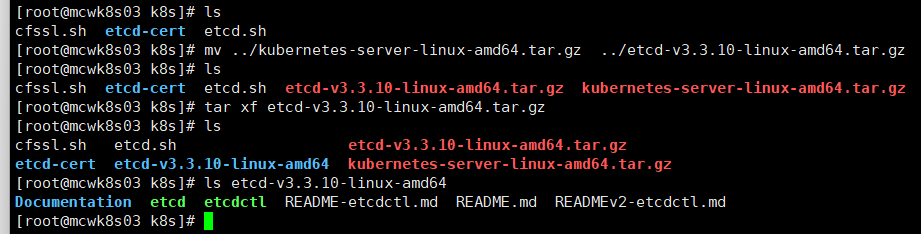

Extract the uploaded etcd package. It contains two binaries we need: etcd, which runs the service, and the etcdctl client tool.

Create the etcd directories and put the two binaries into the bin directory we created.

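Now look at the script. It takes the etcd member name, the member IP and the cluster addresses, and it uses a working directory (the one we just created).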

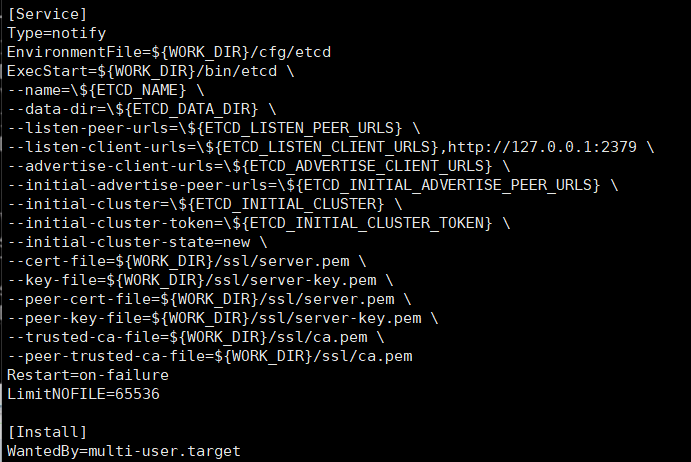

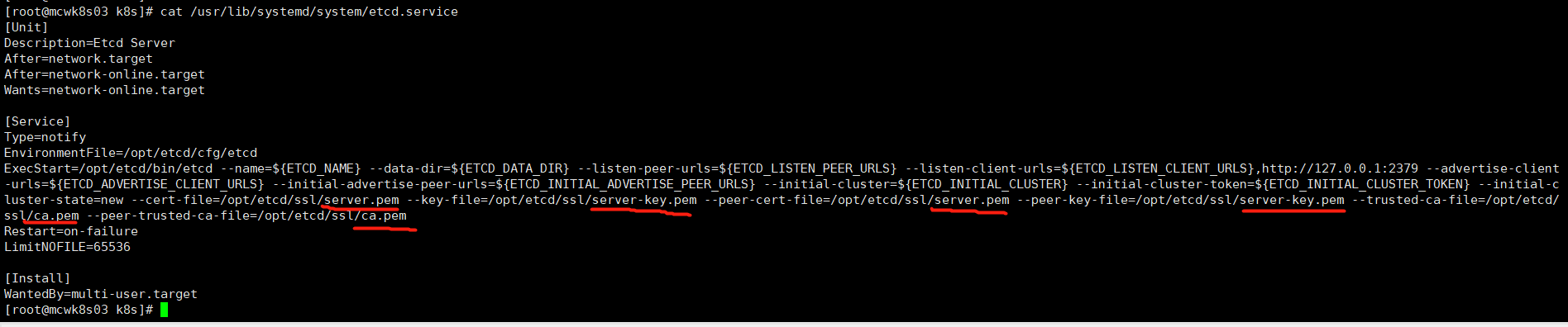

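Now look at the service configuration. The EnvironmentFile is etcd's configuration file, and ExecStart runs the etcd command with many options. A yum install would create a configuration file, but this kind of installation does not, so the script generates one.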

Copy the three certificate files into the ssl directory.

We can inspect the generated certificate with the tool. server.pem is the main one to check.

```
[root@mcwk8s03 k8s]# cfssl-certinfo -cert /opt/etcd/ssl/server.pem
{
  "subject": {
    "common_name": "etcd",
    "country": "CN",
    "locality": "BeiJing",
    "province": "BeiJing",
    "names": [
      "CN",
      "BeiJing",
      "BeiJing",
      "etcd"
    ]
  },
  "issuer": {
    "common_name": "etcd CA",
    "country": "CN",
    "locality": "Beijing",
    "province": "Beijing",
    "names": [
      "CN",
      "Beijing",
      "Beijing",
      "etcd CA"
    ]
  },
  "serial_number": "53290431910150135086628681634112467061411456405",
  "sans": [
    "10.0.0.33",
    "10.0.0.35",
    "10.0.0.36"
  ],
  "not_before": "2022-10-30T03:48:00Z",
  "not_after": "2032-10-27T03:48:00Z",
  "sigalg": "SHA256WithRSA",
  "authority_key_id": "CC:CF:3E:C1:C6:46:8A:B1:8E:23:10:90:31:DB:38:32:C7:7F:C5:92",
  "subject_key_id": "38:7:19:2C:3D:32:40:94:66:D1:46:51:81:4D:F4:29:6A:46:77:B4",
  "pem": "-----BEGIN CERTIFICATE-----\nMIIDrzCCApegAwIBAgIUCVWgKCh8uQ0WS6M9o01apLWkpZUwDQYJKoZIhvcNAQEL\nBQAwQzELMAkGA1UEBhMCQ04xEDAOBgNVBAgTB0JlaWppbmcxEDAOBgNVBAcTB0Jl\naWppbmcxEDAOBgNVBAMTB2V0Y2QgQ0EwHhcNMjIxMDMwMDM0ODAwWhcNMzIxMDI3\nMDM0ODAwWjBAMQswCQYDVQQGEwJDTjEQMA4GA1UECBMHQmVpSmluZzEQMA4GA1UE\nBxMHQmVpSmluZzENMAsGA1UEAxMEZXRjZDCCASIwDQYJKoZIhvcNAQEBBQADggEP\nADCCAQoCggEBANRfkTyo2kqYxQDL4veObu3wpEKT0puQvPYsIryxvQSOpHIHyT2T\nCHyM3lOnnmlxG719/XoYBf4I6/zaJEr37e3ALEbI7fcP2kyB1MxRxmJLdlkPUZre\nLyJ7lfUT+vBl51rz/kueokyUCM8fhB0OBqXwIrlQtvyi26edx98RO6ifSHtYB6SE\nHHrwFjWfAWnztIPfM2smO34H/PKUgdp/sOLiI2WotqpzmasKiaiSwkJWU//0acLU\nhfPm348GQgTEicFqjibpagZ3F6PfrWoKkqYdqoXBz7idHDtd2IqcCXfrHyPxUzUH\nHK4WLHk7GhPU5L1fba4dznHm+ikXTp3O/e0CAwEAAaOBnTCBmjAOBgNVHQ8BAf8E\nBAMCBaAwHQYDVR0lBBYwFAYIKwYBBQUHAwEGCCsGAQUFBwMCMAwGA1UdEwEB/wQC\nMAAwHQYDVR0OBBYEFDgHGSw9MkCUZtFGUYFN9ClqRne0MB8GA1UdIwQYMBaAFMzP\nPsHGRoqxjiMQkDHbODLHf8WSMBsGA1UdEQQUMBKHBAoAACGHBAoAACOHBAoAACQw\nDQYJKoZIhvcNAQELBQADggEBAEEoZbLAsGKWPvAdGmHN+qtl6Oy2pa+4MnJhA5VT\n/LDc4h7RZ1lt0dAs8fDvn99btj0OXtOuczAaYWLqj2dS6aB6zyWko7Ajar30GoWB\nInBvxr4tbG1Cgu7TYt2CmQe3SSeGh+QTeFnJyOGHT2OePj/ShxTn+LKS4Z9kB3Yy\neZnlsxG4UZxiYO4KjuuhkjMi7tozgU75PUWnWkVfHaUHnSW/2zWwZennfBcRZVKA\n6nBOtY1xaNhpP7l4NI2YkBeAVYngNCusg+I5NKqS740Mh1YE5n52ZrEkSiMotyHO\n+24C759OpGqpDmZNQq28D40pnDfhjqhpL82O/7jMzVS63f4=\n-----END CERTIFICATE-----\n"
}
[root@mcwk8s03 k8s]#
```

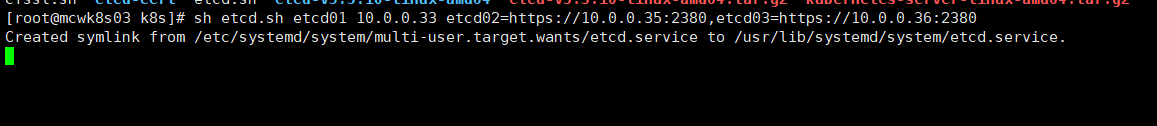

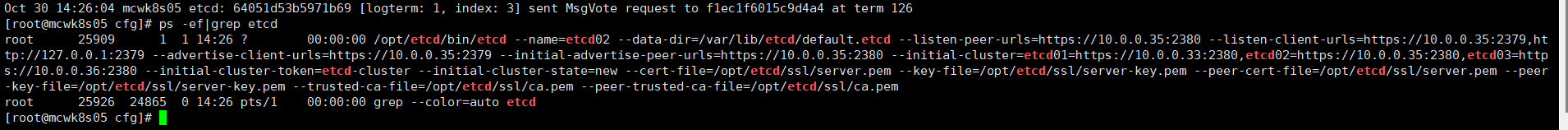

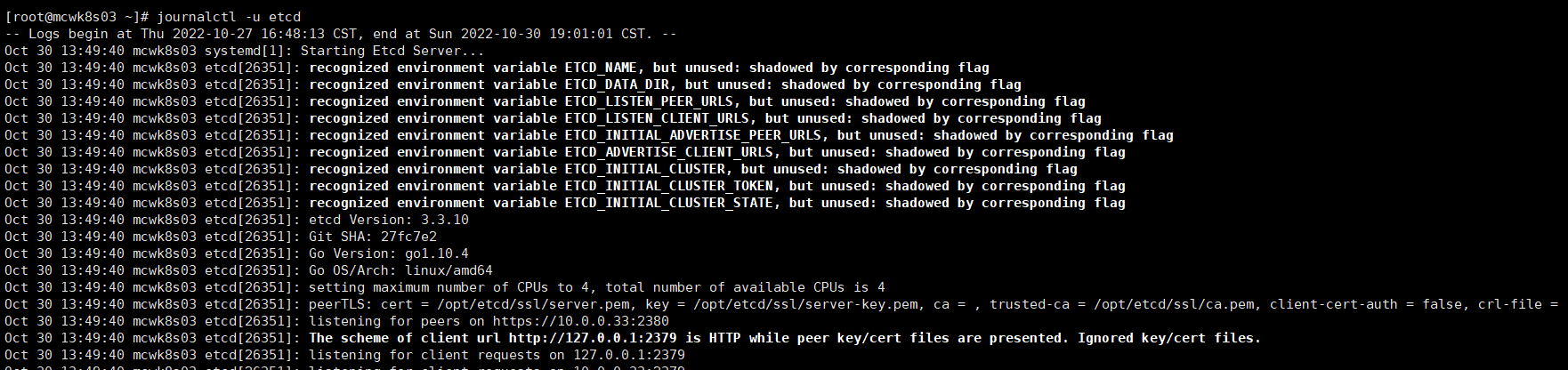

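Run the startup script. Pass this node's member name and IP, plus the other members' peer addresses, which use HTTPS. This member starts, but it keeps waiting for the other two members to join. Once the process is up, it uses all the options the script configured.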
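etcd.sh expects three arguments: the member name, the member IP, and a comma-separated list of the other members in the form name=https://ip:2380. The script hardcodes etcd01 in ETCD_INITIAL_CLUSTER, so its output is only correct when it is run for etcd01. That is why the other members are configured by copying and editing instead. The sketch below assembles the arguments from arrays that hold this article's names and IPs. The helper name etcd_args is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: assemble the etcd.sh command line for one member.
# Member names and IPs follow this article (etcd01..03 on 10.0.0.33/35/36).
set -euo pipefail

NAMES=(etcd01 etcd02 etcd03)
IPS=(10.0.0.33 10.0.0.35 10.0.0.36)

etcd_args() {
  local self=$1 others="" i
  for i in "${!NAMES[@]}"; do
    if (( i == self )); then continue; fi
    # each peer entry is name=https://ip:2380 (2380 = peer port)
    others+="${others:+,}${NAMES[$i]}=https://${IPS[$i]}:2380"
  done
  echo "./etcd.sh ${NAMES[$self]} ${IPS[$self]} ${others}"
}

etcd_args 0
```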

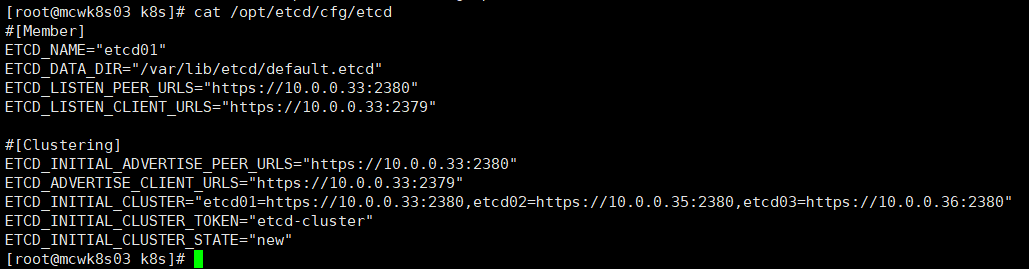

Now look at the generated configuration file.

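The [Member] section contains:

- this member's name in the cluster;
- the data directory;
- the address that listens for peer (cluster) traffic on port 2380;
- the address that listens for client access on port 2379.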

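The [Clustering] section contains:

- this member's advertised peer address (2380);
- its advertised client address;
- the addresses and peer ports of all members;
- the cluster token;
- the cluster state. "new" means a new cluster is being created. If the cluster already exists and you are only adding a member, change this state (to "existing").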

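Then look at the systemd unit, which has been generated. The command specifies the certificate, key and CA for both client traffic and peer traffic. Here clients and peers share the same set of certificates.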

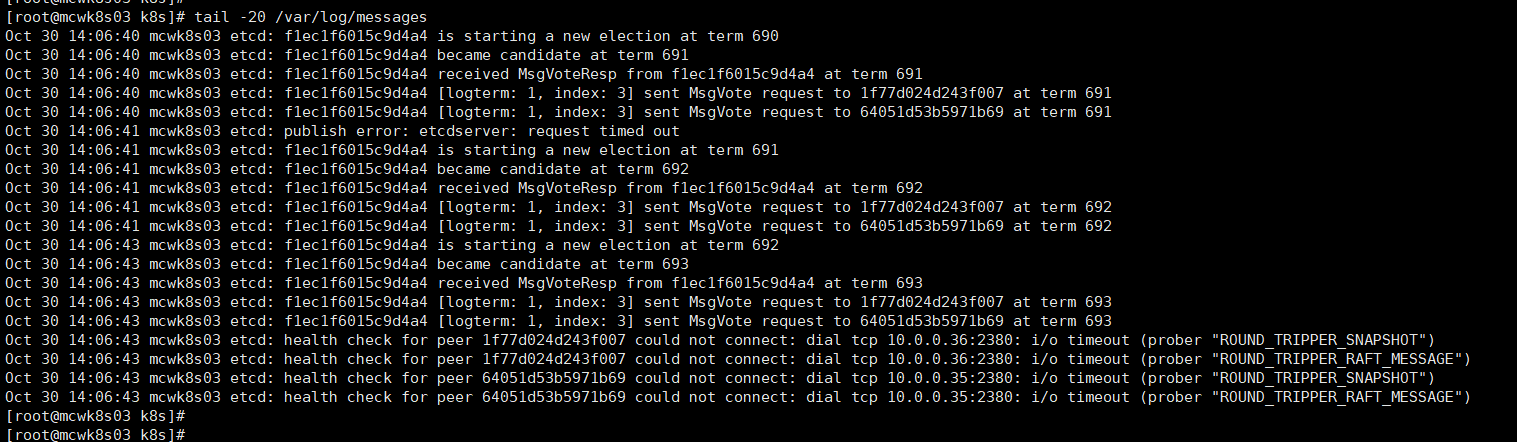

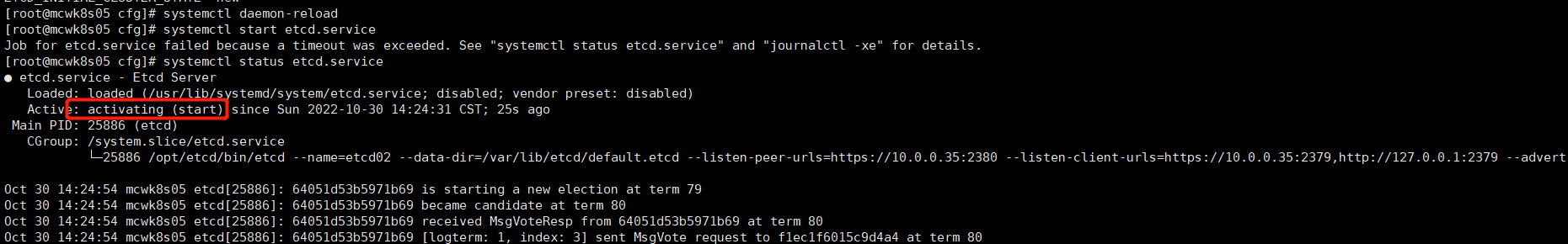

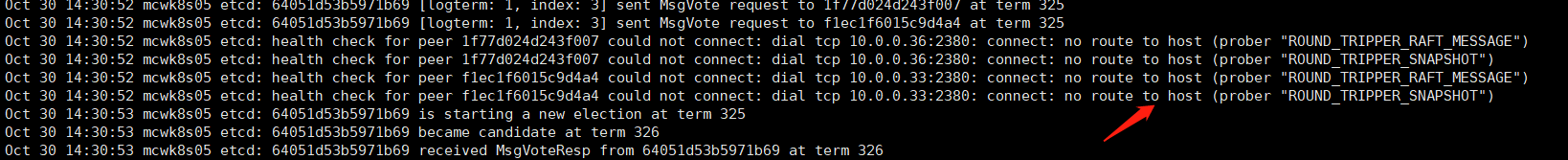

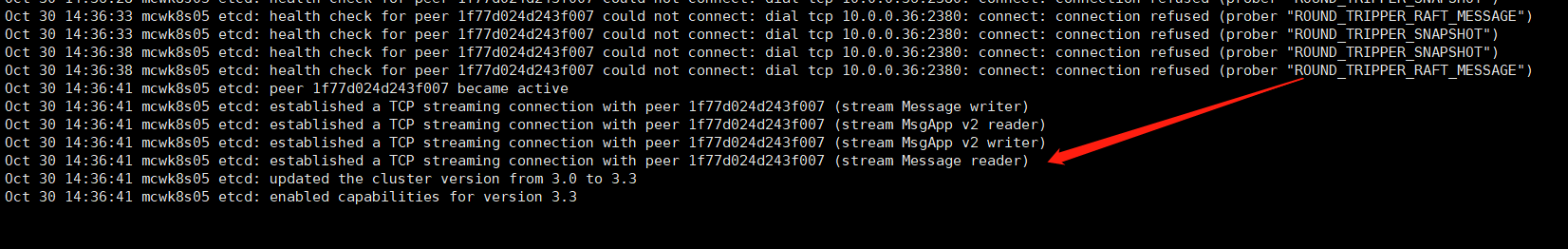

Only one member is up so far. Its log shows connection timeouts when it checks the other members.

Syncing the files to the other nodes

```
[root@mcwk8s03 k8s]# scp -rp /opt/etcd/ 10.0.0.35:/opt/
[root@mcwk8s03 k8s]# scp -rp /opt/etcd/ 10.0.0.36:/opt/
[root@mcwk8s03 k8s]# scp -rp /usr/lib/systemd/system/etcd.service 10.0.0.35:/usr/lib/systemd/system/
[root@mcwk8s03 k8s]# scp -rp /usr/lib/systemd/system/etcd.service 10.0.0.36:/usr/lib/systemd/system/
```

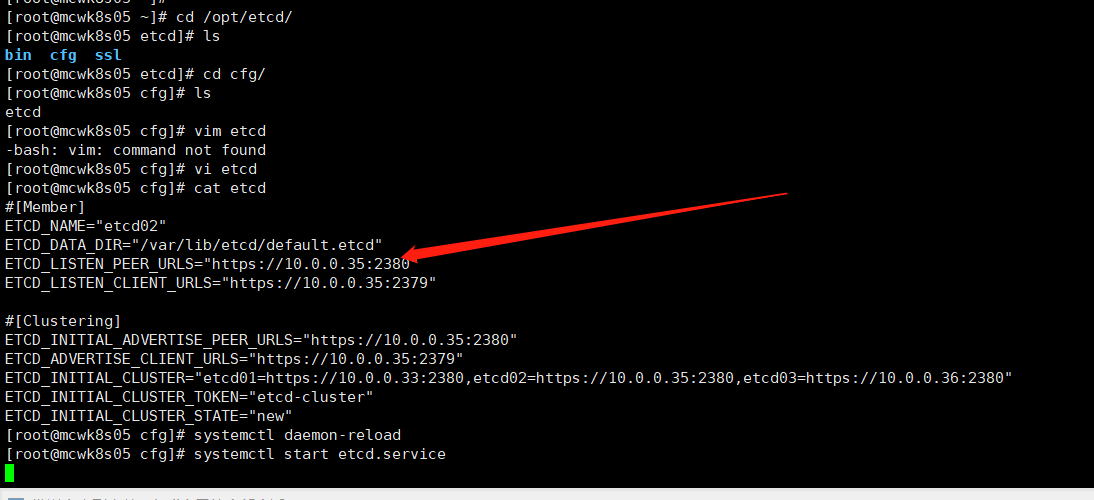

On each of the other nodes, change the member IP and name. Reload the systemd configuration so the copied unit takes effect, then start etcd.
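On each additional member, the edit only touches the member name and that member's own URLs. ETCD_INITIAL_CLUSTER must stay identical on all three members. The sketch below uses GNU sed and assumes the config was copied unchanged from etcd01 (10.0.0.33). The helper name adapt_etcd_cfg is hypothetical. Dots in the IP are matched as regex wildcards, which is harmless for these addresses.

```bash
#!/usr/bin/env bash
# Sketch: adapt the etcd config copied from etcd01 for another member.
# Only ETCD_NAME and this member's own URLs change; ETCD_INITIAL_CLUSTER is
# left untouched so it stays identical on every member. Requires GNU sed.
set -euo pipefail

adapt_etcd_cfg() {
  local cfg=$1 new_name=$2 old_ip=$3 new_ip=$4
  # 1st expression: rename the member
  # 2nd expression: point URLs at the new IP on every line except
  #                 ETCD_INITIAL_CLUSTER (the '!' negates the address)
  sed -i \
    -e "s/^ETCD_NAME=.*/ETCD_NAME=\"${new_name}\"/" \
    -e '/^ETCD_INITIAL_CLUSTER=/!'"s#https://${old_ip}:#https://${new_ip}:#g" \
    "$cfg"
}

# Usage on 10.0.0.35 (etcd02):
#   adapt_etcd_cfg /opt/etcd/cfg/etcd etcd02 10.0.0.33 10.0.0.35
#   systemctl daemon-reload && systemctl restart etcd
```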

Starting reports an error, but the process is running. The firewall is enabled.

The log shows the firewall is still blocking traffic, so stop the firewall.

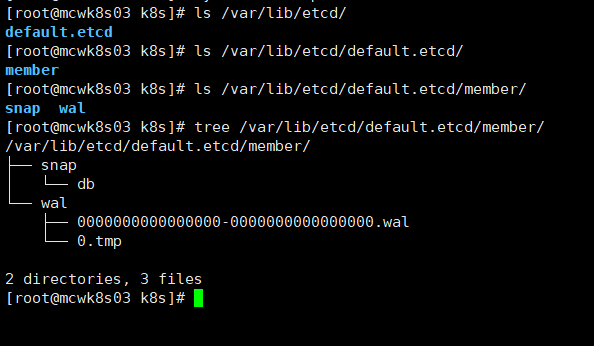

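Check the data directory on host 3. This is etcd's data directory. If you have just deployed and need to re-initialize the cluster, delete it so it is recreated.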

Once etcd on node 3 is started as well, the errors on node 2 stop. Node 2 connects normally, and the members can communicate.

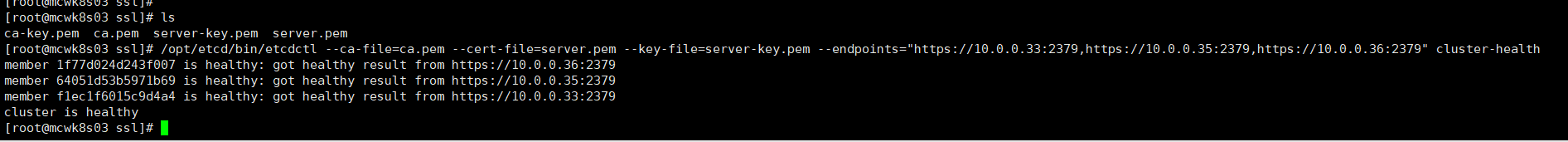

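Check the cluster's health with the client tool, connecting to the client port, and pass cluster-health. As shown below, the cluster is healthy. If you have time, verify that losing one member does not affect service.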

```
[root@mcwk8s03 ssl]# ls
ca-key.pem  ca.pem  server-key.pem  server.pem
[root@mcwk8s03 ssl]# /opt/etcd/bin/etcdctl --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem --endpoints="https://10.0.0.33:2379,https://10.0.0.35:2379,https://10.0.0.36:2379" cluster-health
member 1f77d024d243f007 is healthy: got healthy result from https://10.0.0.36:2379
member 64051d53b5971b69 is healthy: got healthy result from https://10.0.0.35:2379
member f1ec1f6015c9d4a4 is healthy: got healthy result from https://10.0.0.33:2379
cluster is healthy
[root@mcwk8s03 ssl]#
```

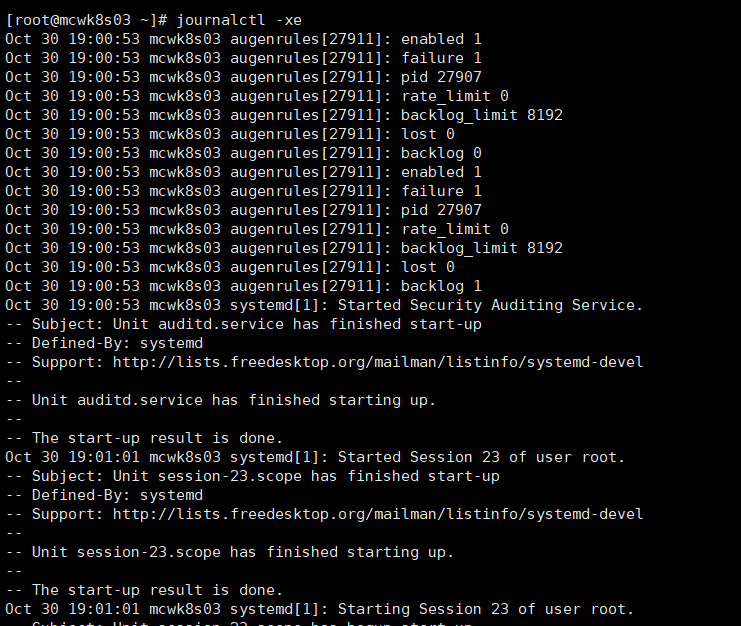

Use the following commands to view the logs:

journalctl -xe

journalctl -u etcd

Using etcdctl

As shown above, the etcd members run on nodes 33, 35 and 36.

```
[root@mcwk8s03 ssl]# cat /etc/hosts
127.0.0.1   localhost localhost.localdomain localhost4 localhost4.localdomain4
::1         localhost localhost.localdomain localhost6 localhost6.localdomain6
10.0.0.31 mcwk8s01
10.0.0.32 mcwk8s02
10.0.0.33 mcwk8s03
10.0.0.34 mcwk8s04
10.0.0.35 mcwk8s05
10.0.0.36 mcwk8s06
[root@mcwk8s03 ssl]# /opt/etcd/bin/etcdctl \
> --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem \
> --endpoints="https://10.0.0.33:2379,https://10.0.0.35:2379,https://10.0.0.36:2379" \
> cluster-health
member 1f77d024d243f007 is healthy: got healthy result from https://10.0.0.36:2379
member 64051d53b5971b69 is healthy: got healthy result from https://10.0.0.35:2379
member f1ec1f6015c9d4a4 is healthy: got healthy result from https://10.0.0.33:2379
cluster is healthy
[root@mcwk8s03 ssl]#
```

Add an alias so you don't have to type the long option list every time.

```
[root@mcwk8s03 ssl]# pwd
/opt/etcd/ssl
[root@mcwk8s03 ssl]# cd
[root@mcwk8s03 ~]# vim ~/.bashrc
[root@mcwk8s03 ~]# tail -1 ~/.bashrc
alias etcdctl='/opt/etcd/bin/etcdctl --ca-file=/opt/etcd/ssl/ca.pem --cert-file=/opt/etcd/ssl/server.pem --key-file=/opt/etcd/ssl/server-key.pem --endpoints="https://10.0.0.33:2379,https://10.0.0.35:2379,https://10.0.0.36:2379"'
[root@mcwk8s03 ~]# source ~/.bashrc
[root@mcwk8s03 ~]# etcdctl cluster-health
member 1f77d024d243f007 is healthy: got healthy result from https://10.0.0.36:2379
member 64051d53b5971b69 is healthy: got healthy result from https://10.0.0.35:2379
member f1ec1f6015c9d4a4 is healthy: got healthy result from https://10.0.0.33:2379
cluster is healthy
[root@mcwk8s03 ~]#
```

Viewing data in etcd

```
[root@mcwk8s03 ~]# etcdctl ls /coreos.com
[root@mcwk8s03 ~]# etcdctl ls /
/coreos.com
[root@mcwk8s03 ~]# etcdctl ls /coreos.com
/coreos.com/network
[root@mcwk8s03 ~]# etcdctl ls /coreos.com/network
/coreos.com/network/config
/coreos.com/network/subnets
[root@mcwk8s03 ~]# etcdctl ls /coreos.com/network/subnets
/coreos.com/network/subnets/172.17.59.0-24
/coreos.com/network/subnets/172.17.98.0-24
/coreos.com/network/subnets/172.17.61.0-24
[root@mcwk8s03 ~]# etcdctl ls /coreos.com/network/subnets/172.17.59.0-24/
/coreos.com/network/subnets/172.17.59.0-24
[root@mcwk8s03 ~]# etcdctl get /coreos.com/network/subnets/172.17.59.0-24
{"PublicIP":"10.0.0.33","BackendType":"vxlan","BackendData":{"VtepMAC":"b2:83:33:7b:fd:37"}}
[root@mcwk8s03 ~]#
```

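Using the information above, check the items below. The amount of data stored in etcd looked small to me, which seemed a bit off. The explanation: the etcdctl used here speaks the v2 API, so ls only lists v2 keys such as flannel's. Kubernetes writes its objects through the v3 API, and v2 ls does not show them.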

```
[root@mcwk8s03 ~]# ifconfig flannel.1
flannel.1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1450
        inet 172.17.59.0  netmask 255.255.255.255  broadcast 0.0.0.0
        inet6 fe80::b083:33ff:fe7b:fd37  prefixlen 64  scopeid 0x20<link>
        ether b2:83:33:7b:fd:37  txqueuelen 0  (Ethernet)
        RX packets 0  bytes 0 (0.0 B)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 0  bytes 0 (0.0 B)
        TX errors 0  dropped 8 overruns 0  carrier 0  collisions 0

[root@mcwk8s03 ~]# ifconfig docker
docker0: flags=4099<UP,BROADCAST,MULTICAST>  mtu 1500
        inet 172.17.59.1  netmask 255.255.255.0  broadcast 172.17.59.255
        ether 02:42:e9:a4:51:4f  txqueuelen 0  (Ethernet)
        RX packets 0  bytes 0 (0.0 B)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 0  bytes 0 (0.0 B)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

[root@mcwk8s03 ~]# cat /run/flannel/subnet.env
DOCKER_OPT_BIP="--bip=172.17.59.1/24"
DOCKER_OPT_IPMASQ="--ip-masq=false"
DOCKER_OPT_MTU="--mtu=1450"
DOCKER_NETWORK_OPTIONS=" --bip=172.17.59.1/24 --ip-masq=false --mtu=1450"
[root@mcwk8s03 ~]#
```
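Three values above are linked mechanically: the lease key in etcd (172.17.59.0-24), the docker0 address, and --bip in subnet.env. flannel leases a /24 subnet, and Docker takes the first host address in it as the bridge IP. The helper below derives the bridge IP from the lease key. It assumes /24 leases whose network address ends in .0, as in the output above. The name lease_to_bip is illustrative.

```bash
#!/usr/bin/env bash
# Sketch: derive docker0's --bip from a flannel lease key such as 172.17.59.0-24.
# Assumes the lease's network address ends in .0 (true for the /24 leases above).
set -euo pipefail

lease_to_bip() {
  local key=${1##*/}                  # drop any /coreos.com/network/subnets/ prefix
  local net=${key%-*} len=${key##*-}  # "172.17.59.0" and "24"
  echo "${net%.*}.$(( ${net##*.} + 1 ))/${len}"
}

lease_to_bip /coreos.com/network/subnets/172.17.59.0-24
# On a node, compare with what flannel wrote for Docker:
#   grep DOCKER_OPT_BIP /run/flannel/subnet.env
```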

Installing Docker

curl -sSL https://get.daocloud.io/docker | sh

Reference: https://www.cnblogs.com/machangwei-8/p/15721768.html

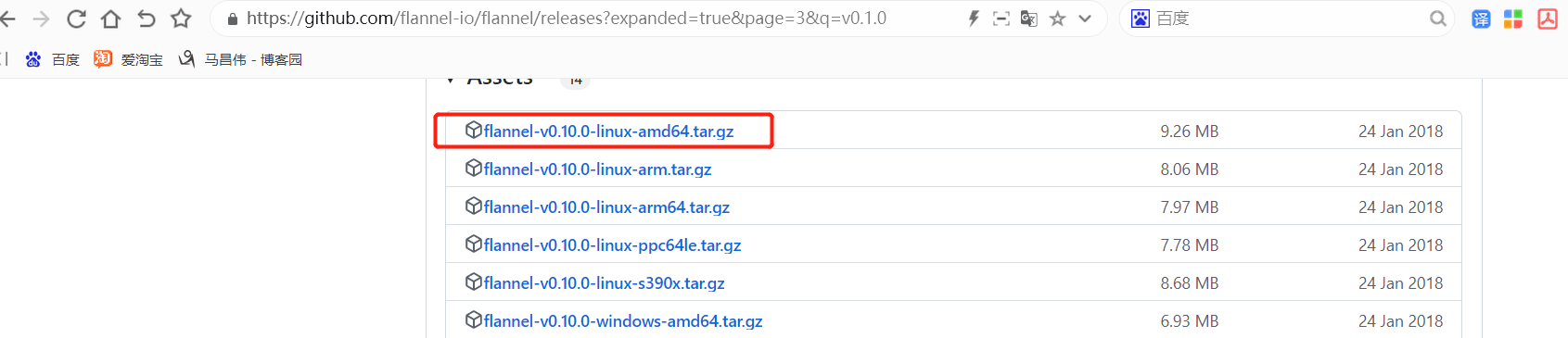

Deploying the Flannel container network

Downloading the package, then storing and viewing the network subnet config

Upload it to the server.

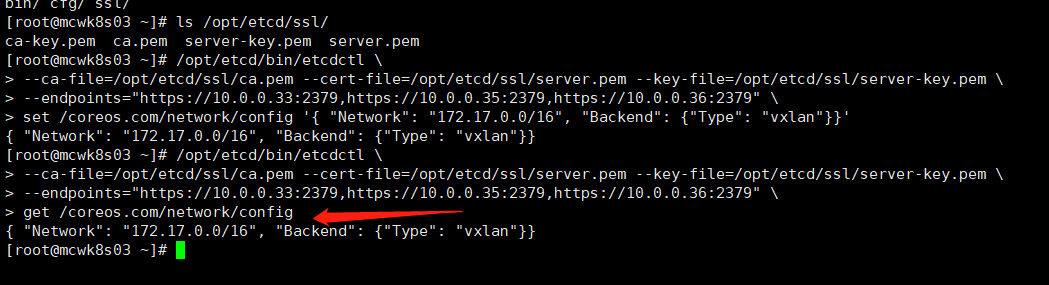

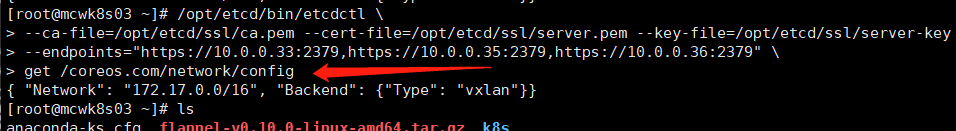

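Run the first command below to store the network's subnet allocation range, 172.17.0.0/16, in etcd with set. It is the earlier etcd health-check command with cluster-health replaced by set. The second command reads back the stored configuration.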

```bash
/opt/etcd/bin/etcdctl \
--ca-file=/opt/etcd/ssl/ca.pem --cert-file=/opt/etcd/ssl/server.pem --key-file=/opt/etcd/ssl/server-key.pem \
--endpoints="https://10.0.0.33:2379,https://10.0.0.35:2379,https://10.0.0.36:2379" \
set /coreos.com/network/config '{ "Network": "172.17.0.0/16", "Backend": {"Type": "vxlan"}}'

/opt/etcd/bin/etcdctl \
--ca-file=/opt/etcd/ssl/ca.pem --cert-file=/opt/etcd/ssl/server.pem --key-file=/opt/etcd/ssl/server-key.pem \
--endpoints="https://10.0.0.33:2379,https://10.0.0.35:2379,https://10.0.0.36:2379" \
get /coreos.com/network/config
```

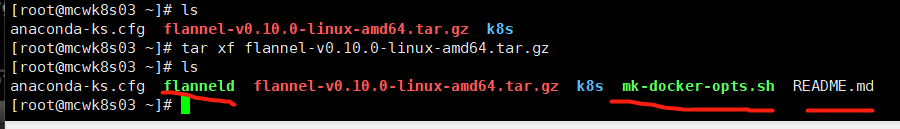

Deploying the components

Extract the package. It yields two executable files.

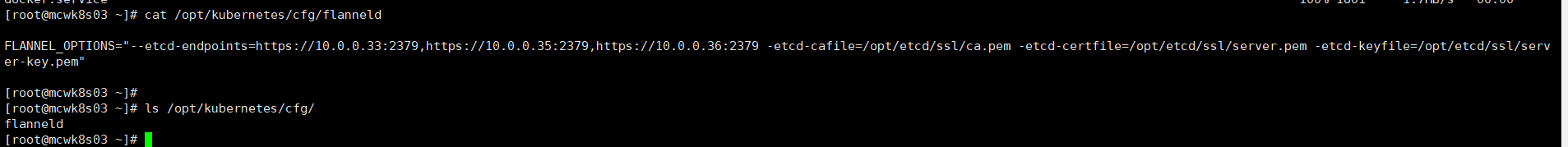

Create the directories and move the component's files into them. flannel stores its data in etcd, so it needs the etcd access certificates.

```
[root@mcwk8s03 ~]# ls
anaconda-ks.cfg  flanneld  flannel-v0.10.0-linux-amd64.tar.gz  k8s  mk-docker-opts.sh  README.md
[root@mcwk8s03 ~]# mkdir /opt/kubernetes/{cfg,bin,ssl} -p
[root@mcwk8s03 ~]# mv flanneld mk-docker-opts.sh /opt/kubernetes/bin/
[root@mcwk8s03 ~]#
```

Upload flannel's deployment script.

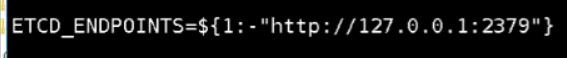

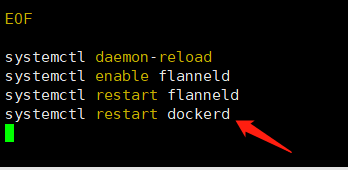

```bash
#!/bin/bash

ETCD_ENDPOINTS=${1:-"http://127.0.0.1:2379"}

cat <<EOF >/opt/kubernetes/cfg/flanneld

FLANNEL_OPTIONS="--etcd-endpoints=${ETCD_ENDPOINTS} \
-etcd-cafile=/opt/etcd/ssl/ca.pem \
-etcd-certfile=/opt/etcd/ssl/server.pem \
-etcd-keyfile=/opt/etcd/ssl/server-key.pem"

EOF

cat <<EOF >/usr/lib/systemd/system/flanneld.service
[Unit]
Description=Flanneld overlay address etcd agent
After=network-online.target network.target
Before=docker.service

[Service]
Type=notify
EnvironmentFile=/opt/kubernetes/cfg/flanneld
ExecStart=/opt/kubernetes/bin/flanneld --ip-masq \$FLANNEL_OPTIONS
ExecStartPost=/opt/kubernetes/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/subnet.env
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

cat <<EOF >/usr/lib/systemd/system/dockerd.service
[Unit]
Description=Docker Application Container Engine
Documentation=https://docs.docker.com
After=network-online.target firewalld.service
Wants=network-online.target

[Service]
Type=notify
EnvironmentFile=/run/flannel/subnet.env
ExecStart=/usr/bin/dockerd \$DOCKER_NETWORK_OPTIONS
ExecReload=/bin/kill -s HUP \$MAINPID
LimitNOFILE=infinity
LimitNPROC=infinity
LimitCORE=infinity
TimeoutStartSec=0
Delegate=yes
KillMode=process
Restart=on-failure
StartLimitBurst=3
StartLimitInterval=60s

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload
systemctl enable flanneld
systemctl restart flanneld
systemctl restart dockerd
```

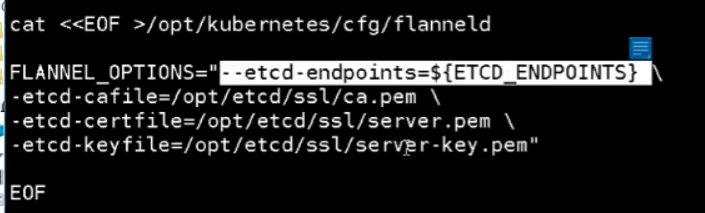

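Now let's go through the script. If no endpoint is passed on the command line, the default after :- is used; otherwise the value passed in is used.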

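The script writes the component's configuration file, specifying the endpoints and the etcd certificate files. We already have these three files, so we point to them directly. If the host has several network interfaces, you need to tell flannel which one to use (flanneld's --iface option).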

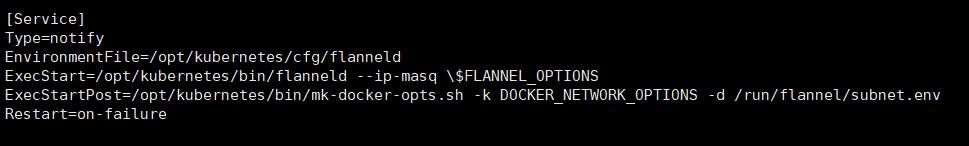

By default flannel reads its configuration from this key in etcd (/coreos.com/network/config).

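When flanneld starts, it runs this script (ExecStartPost), which generates the subnet environment file. Docker reads the subnet from that file and creates its network from it at startup.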

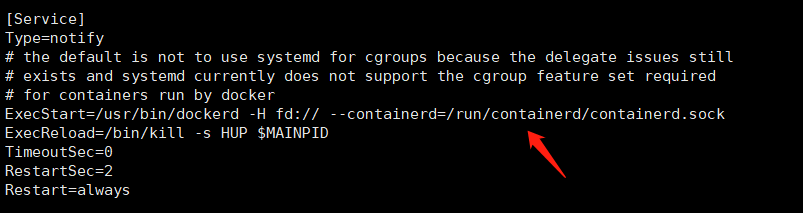

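First back up the Docker unit. The script actually generates a separate dockerd unit, so starting Docker from the dockerd file would leave the docker file untouched anyway.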

[root@mcwk8s03 ~]# cp /usr/lib/systemd/system/docker.service /usr/lib/systemd/system/docker.servicebak

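Run the script to deploy flannel. The Docker unit the script writes has an extra "d" in its name (dockerd.service), so remove that part first. Later, Docker's own unit is changed to use the settings from that dockerd unit.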

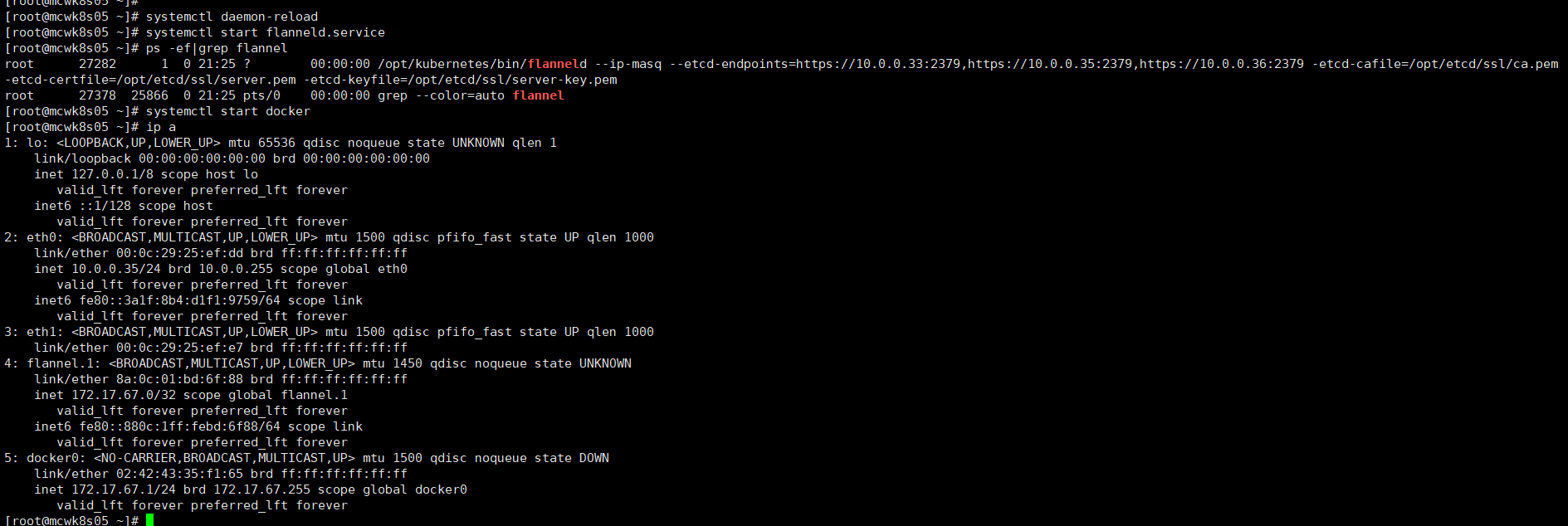

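Pass in the etcd endpoints as shown below; the process starts successfully. flannel uses mutual (two-way) TLS here: the server verifies the client's certificate, and the client verifies the server's. Ordinary HTTPS usually uses one-way authentication.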

```
[root@mcwk8s03 ~]# bash flannel.sh https://10.0.0.33:2379,https://10.0.0.35:2379,https://10.0.0.36:2379
Created symlink from /etc/systemd/system/multi-user.target.wants/flanneld.service to /usr/lib/systemd/system/flanneld.service.
[root@mcwk8s03 ~]# ps -ef|grep flannel
root      28220      1  0 20:59 ?        00:00:00 /opt/kubernetes/bin/flanneld --ip-masq --etcd-endpoints=https://10.0.0.33:2379,https://10.0.0.35:2379,https://10.0.0.36:2379 -etcd-cafile=/opt/etcd/ssl/ca.pem -etcd-certfile=/opt/etcd/ssl/server.pem -etcd-keyfile=/opt/etcd/ssl/server-key.pem
root      28324  25043  0 20:59 pts/2    00:00:00 grep --color=auto flannel
[root@mcwk8s03 ~]#
```

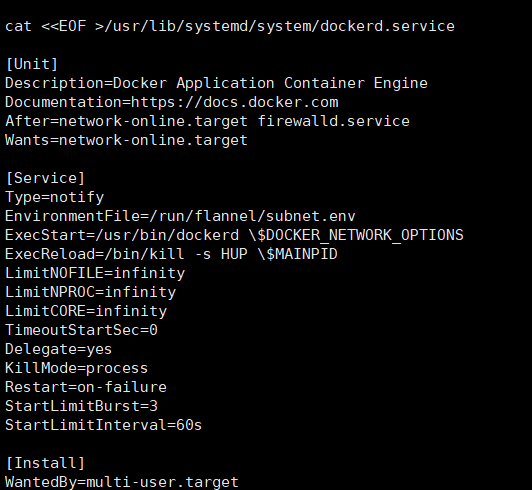

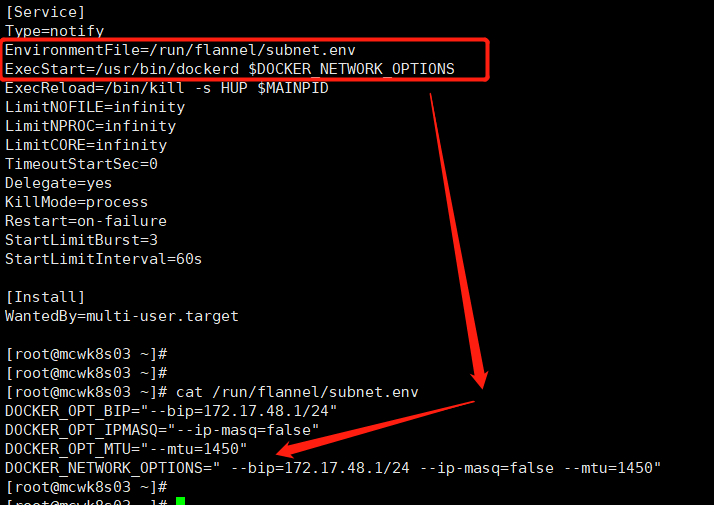

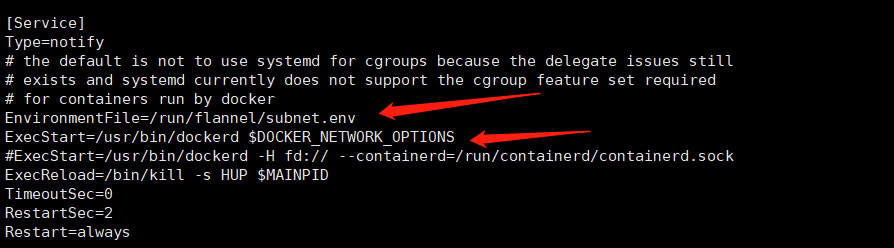

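Add the two options below to Docker's systemd unit. A fresh Docker installation does not have them; we add them ourselves. They make Docker read the subnet configuration file at startup and pick up bip, so containers get IPs from this subnet.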

Before adding them, the unit looked like this:

Add them as follows:

```
EnvironmentFile=/run/flannel/subnet.env
ExecStart=/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS
```
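Edits made directly to the packaged docker.service are overwritten when the Docker package is upgraded. An alternative, which is not the method used in this article, is a systemd drop-in that carries the same two lines. The empty ExecStart= clears the packaged command before the new one is set. The helper name write_docker_dropin and the file name flannel.conf are arbitrary choices for this sketch.

```bash
#!/usr/bin/env bash
# Sketch (alternative to editing docker.service in place): write the two
# flannel settings into a systemd drop-in for docker.service.
set -euo pipefail

write_docker_dropin() {
  local dir=${1:-/etc/systemd/system/docker.service.d}
  mkdir -p "$dir"
  # quoted 'EOF' keeps $DOCKER_NETWORK_OPTIONS literal for systemd to expand
  cat > "$dir/flannel.conf" <<'EOF'
[Service]
EnvironmentFile=/run/flannel/subnet.env
ExecStart=
ExecStart=/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS
EOF
}

# Usage (as root):
#   write_docker_dropin && systemctl daemon-reload && systemctl restart docker
```

The second ExecStart line mirrors the one added above. As with editing the unit directly, it replaces any other options in the packaged ExecStart.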

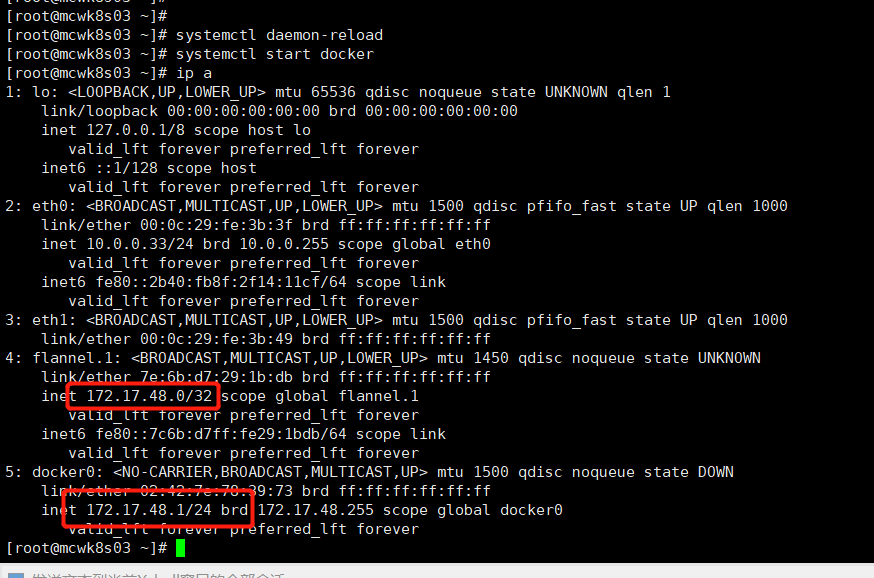

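Now start Docker. The two interfaces' address ranges appear to be nested (docker0's subnet lies within flannel's network), so traffic flows normally.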

Next, configure the other two nodes by copying the files to them.

```
[root@mcwk8s03 ~]# scp -rp /opt/kubernetes/ 10.0.0.35:/opt/
root@10.0.0.35's password:
flanneld                    100%  223   198.8KB/s   00:00
flanneld                    100%   35MB 105.1MB/s   00:00
mk-docker-opts.sh           100% 2139     2.6MB/s   00:00
[root@mcwk8s03 ~]# scp -rp /usr/lib/systemd/system/{flanneld,docker}.service 10.0.0.35:/usr/lib/systemd/system/
root@10.0.0.35's password:
flanneld.service            100%  417   237.6KB/s   00:00
docker.service              100% 1801     2.5MB/s   00:00
[root@mcwk8s03 ~]#
```

Deploy flannel on the second node. Its subnet differs from the first node's, and that is expected: each node gets its own subnet within 172.17.0.0/16.

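The command below is from the first run. The script generated the configuration for us, so the second node does not need the etcd endpoints passed again when it starts. They were written into the configuration file that the unit loads, which we copied over.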

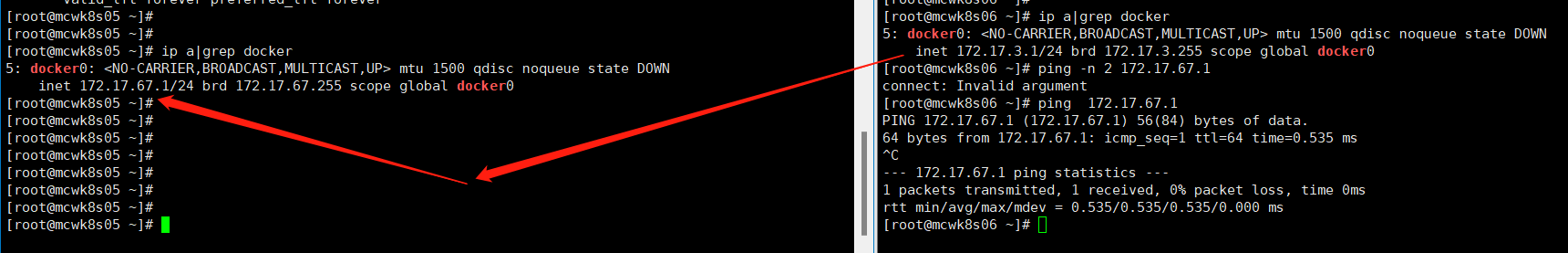

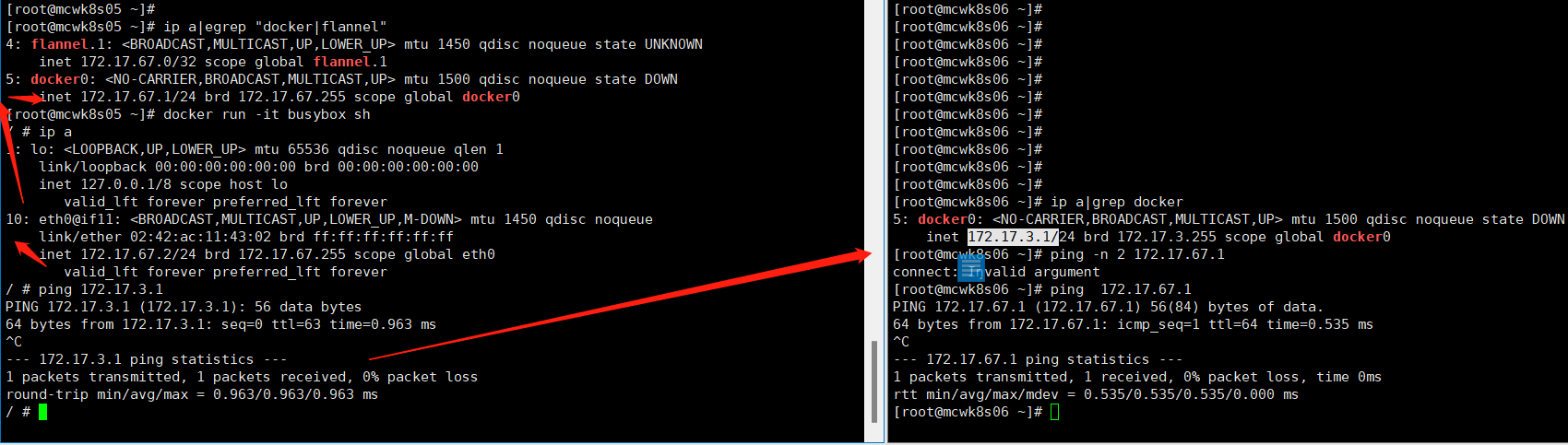

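As shown below, with flannel in place the two different subnets on the two nodes can reach each other; flannel acts like a router. A quick test with a container shows no problems, so the network is deployed.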

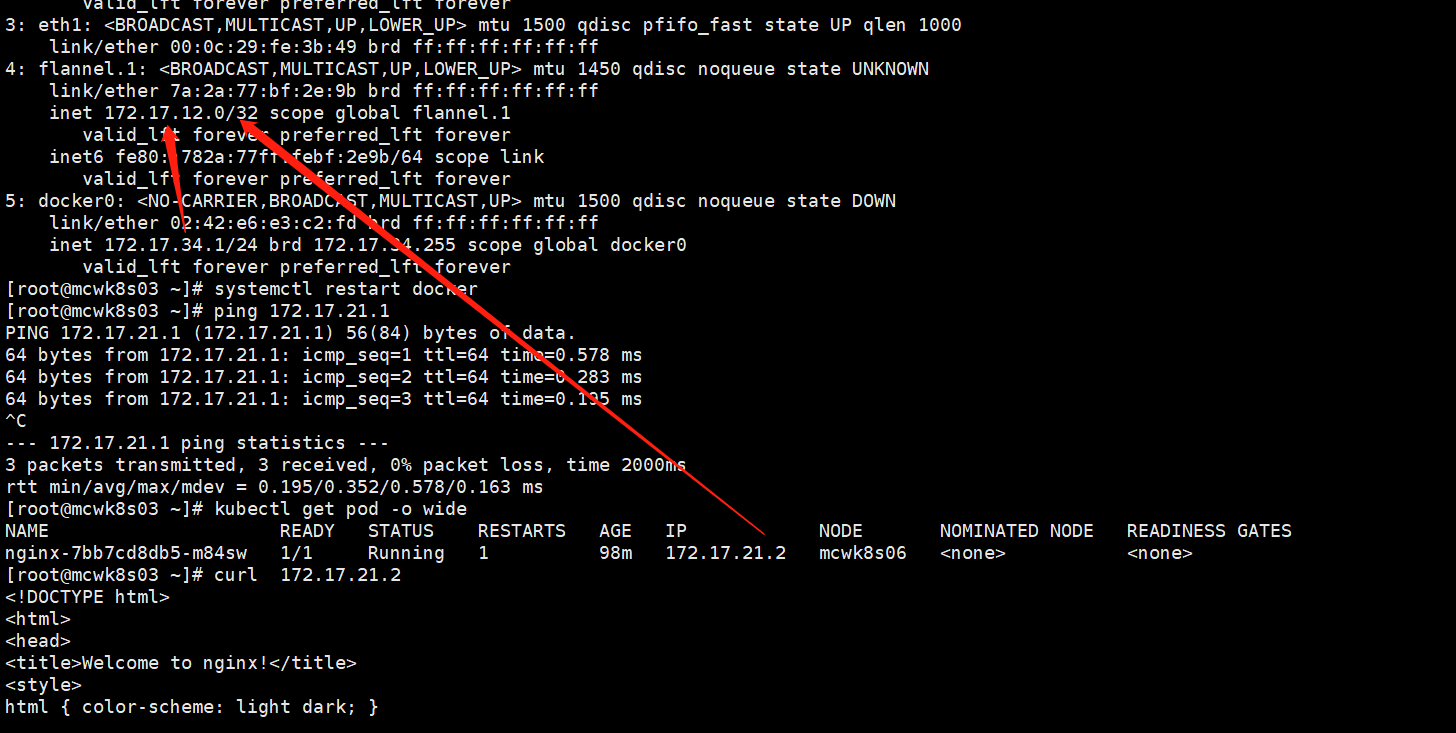

Fixing docker0 and flannel.1 ending up in different subnets, which breaks cross-host container traffic

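After I resumed the VM from suspend, flannel had stopped. I restarted it, but flannel was assigned a new subnet, different from the old one. I then restarted Docker. The Docker unit contains the two settings below, so Docker picked up the new subnet and docker0 got an IP in the same subnet as flannel.1 again. A retest showed that cross-host traffic worked. In production, be aware that restarting the Docker daemon stops the containers on that host by default (unless live-restore is enabled), so it does affect running applications.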

```
EnvironmentFile=/run/flannel/subnet.env
ExecStart=/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS
```

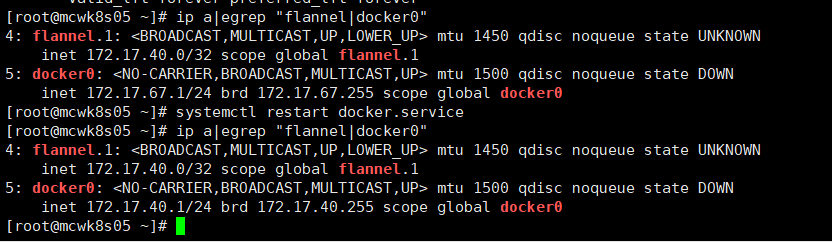

Deploying the master components

Preparing the environment and self-signing the certificates

The apiserver must be deployed first.

Extract the three deployment scripts.

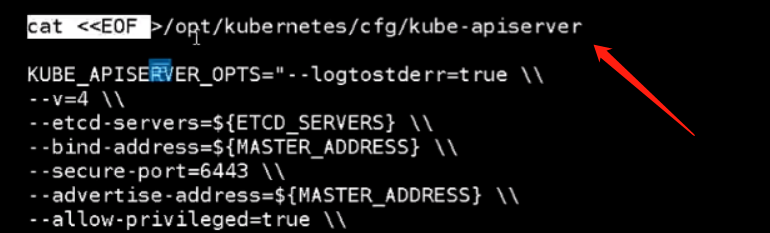

First, the apiserver deployment script.

The first argument is the master address; the second is the etcd addresses.

The script then generates the configuration file.

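The configuration file has to reference the certificates, which come in two groups. The upper ones are the apiserver's own certificates; the lower three are for connecting to etcd.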

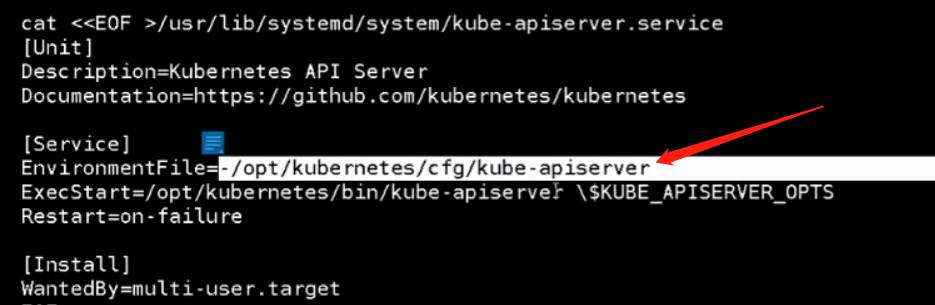

Next, the script generates the systemd unit, which loads the apiserver configuration file.

Because the unit loads that environment file, ExecStart can reference its KUBE_APISERVER_OPTS variable, which holds all the options passed to the apiserver command.

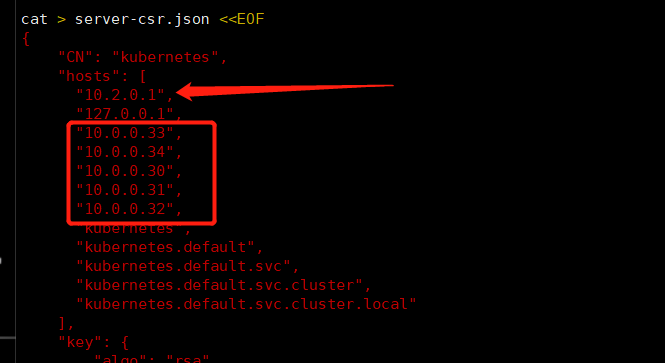

The default cluster IP range overlaps with our host network, so we change it to 10.2.0.0/24.

Next we generate the Kubernetes certificates. Only the three IPs shown below need changing; everything else is the same as for etcd.

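The service cluster IP range is now 10.2.0.0/24, so change this IP accordingly, keeping it as the first address of the range. Below it, list all the cluster IPs: the masters' and the load balancer's. If you leave some out, that is fine; you can regenerate the certificate later.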
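The IP changed here is the first address of --service-cluster-ip-range. The apiserver assigns that address to the built-in kubernetes Service, which is why it has to appear in the apiserver certificate. The sketch below computes the first address for any IPv4 CIDR. The helper names are illustrative.

```bash
#!/usr/bin/env bash
# Sketch: first usable address of an IPv4 CIDR, i.e. the ClusterIP of the
# built-in "kubernetes" Service for a given --service-cluster-ip-range.
set -euo pipefail

ip2int() { local IFS=.; set -- $1; echo $(( ($1<<24) | ($2<<16) | ($3<<8) | $4 )); }
int2ip() { local n=$1; echo "$(( (n>>24)&255 )).$(( (n>>16)&255 )).$(( (n>>8)&255 )).$(( n&255 ))"; }

first_service_ip() {
  local net=${1%/*} len=${1#*/}
  local mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  int2ip $(( ($(ip2int "$net") & mask) + 1 ))   # network address + 1
}

first_service_ip 10.2.0.0/24
```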

The Kubernetes reserved names below do not need changing; they are fixed by the project.

Run the certificate generation.

The admin certificate isn't needed yet. The master only needs ca and server; ignore proxy for now.

Deploying the apiserver

Copy the certificates into the Kubernetes certificate directory:

cp ca*pem server*pem /opt/kubernetes/ssl/

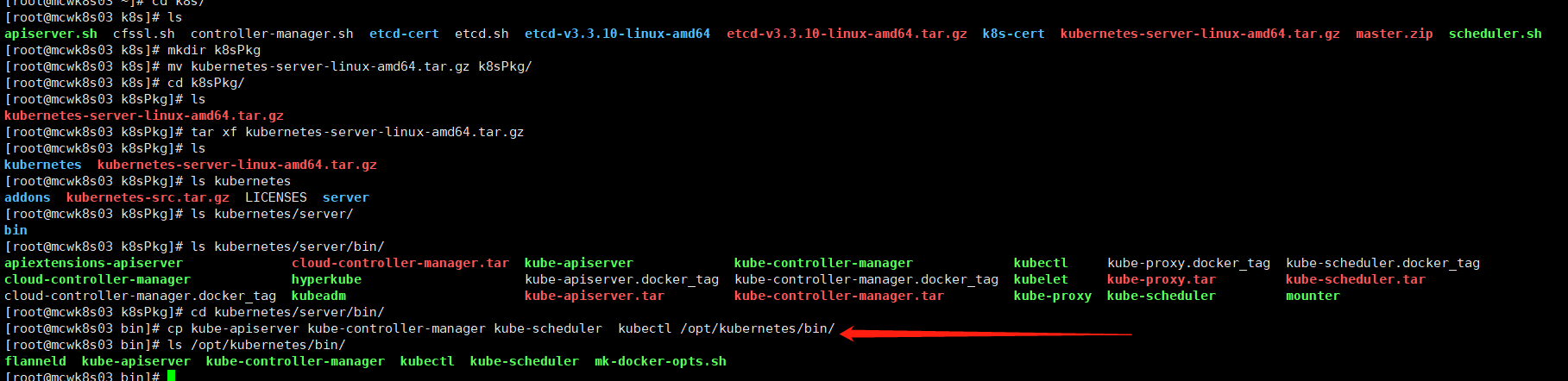

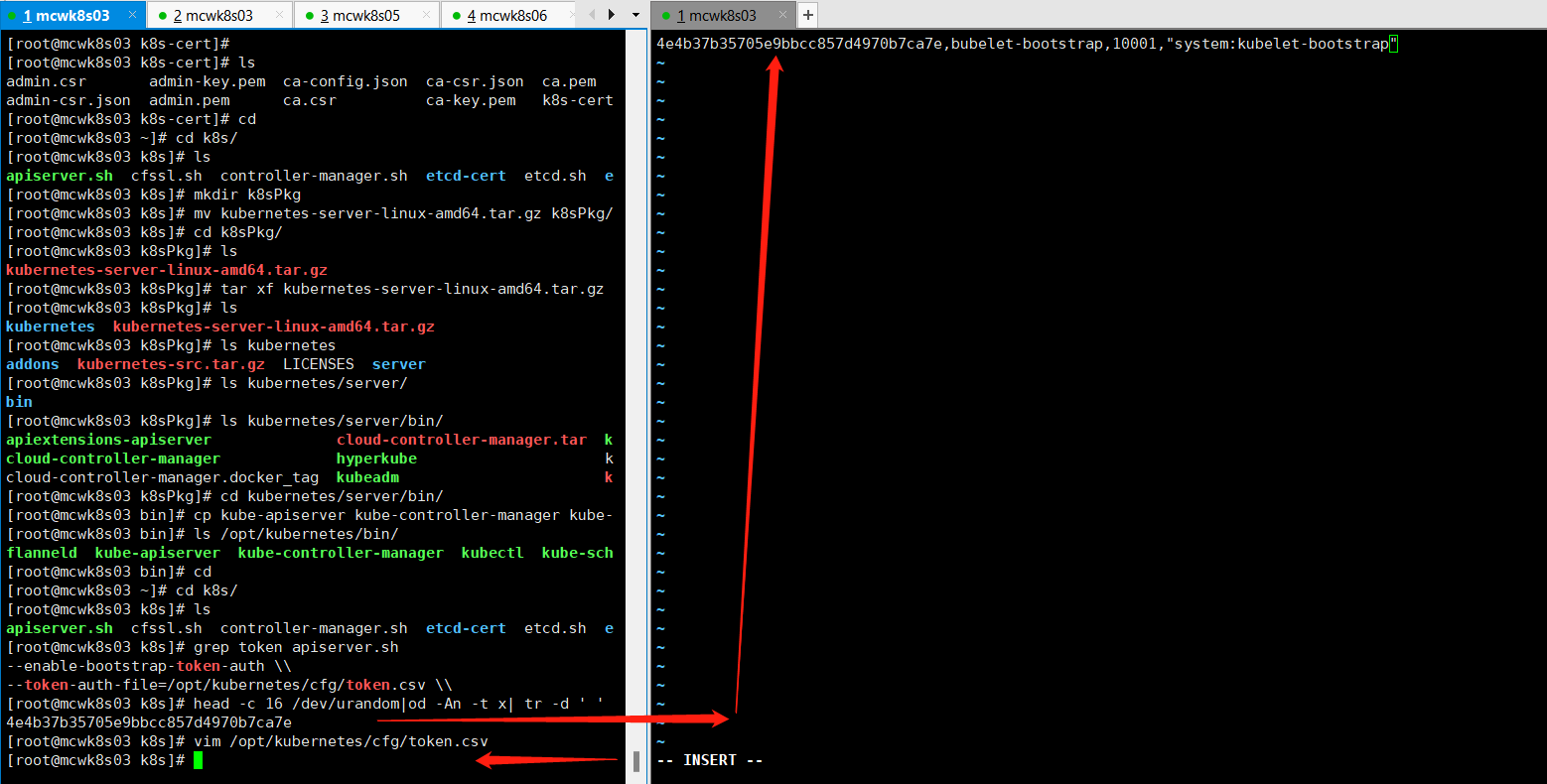

Extract the Kubernetes package and copy the four executables into the deployment's bin directory.

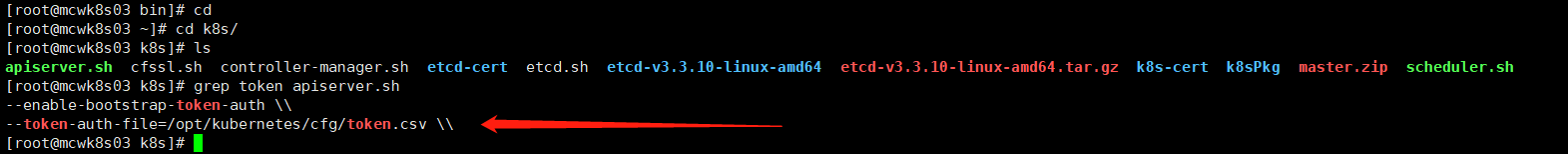

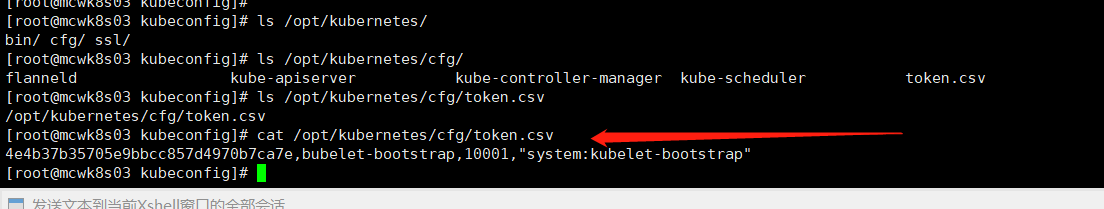

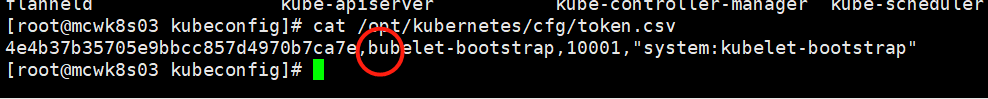

The apiserver options include a token setting, so we need to create a token.

Generate the token with the command from the official docs:

```
[root@mcwk8s03 k8s]# head -c 16 /dev/urandom|od -An -t x| tr -d ' '
4e4b37b35705e9bbcc857d4970b7ca7e
[root@mcwk8s03 k8s]# cat /opt/kubernetes/cfg/token.csv
4e4b37b35705e9bbcc857d4970b7ca7e,bubelet-bootstrap,10001,"system:kubelet-bootstrap"
[root@mcwk8s03 k8s]#
```

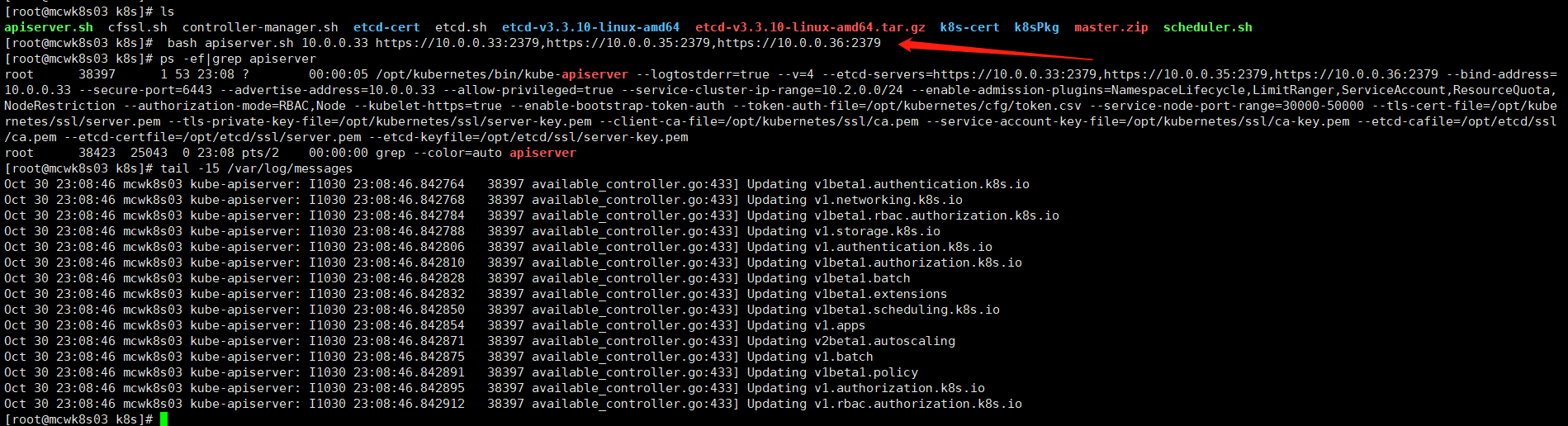

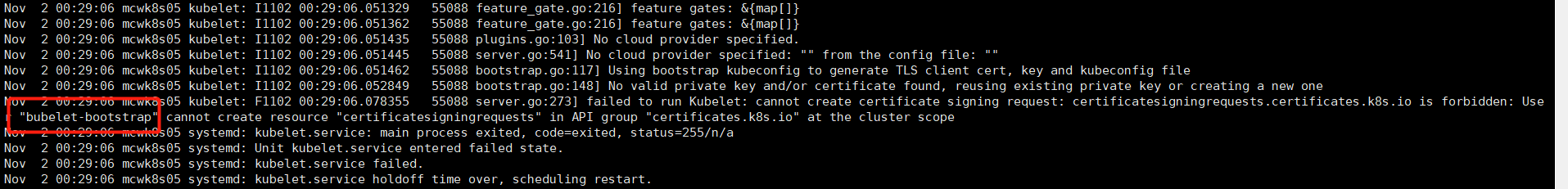

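Write the token file. Its fields are the token, the user name, the user ID, and the group the user belongs to (system:kubelet-bootstrap). The file below is wrong: kubelet-bootstrap was misspelled as bubelet-bootstrap. Because of that, nodes could not be added to the cluster later, and this step had to be redone.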

```
[root@mcwk8s03 k8s]# cat /opt/kubernetes/cfg/token.csv
4e4b37b35705e9bbcc857d4970b7ca7e,bubelet-bootstrap,10001,"system:kubelet-bootstrap"
[root@mcwk8s03 k8s]#
```
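The bubelet typo only surfaced when nodes failed to join. A small guard when writing token.csv catches it earlier. With a misspelled name, the token authenticates as a different user, which the clusterrolebinding for kubelet-bootstrap does not cover. The sketch below reuses the token command above and refuses any user name other than kubelet-bootstrap. The function name write_token_csv is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: write token.csv, refusing any user name other than kubelet-bootstrap.
set -euo pipefail

write_token_csv() {
  local out=$1 user=${2:-kubelet-bootstrap} token
  if [[ $user != kubelet-bootstrap ]]; then
    echo "refusing to write token.csv: unexpected user '$user'" >&2
    return 1
  fi
  # same generator as above: 16 random bytes printed as 32 hex characters
  token=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' ')
  # fields: token, user name, user ID, group
  echo "${token},${user},10001,\"system:kubelet-bootstrap\"" > "$out"
}

# Usage: write_token_csv /opt/kubernetes/cfg/token.csv
```

The same token must also go into bootstrap.kubeconfig on the nodes, so generate it once and reuse it.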

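Run the script again to initialize the apiserver. This time it starts successfully; it had failed earlier because something was missing from the environment. The log shows no obvious errors.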

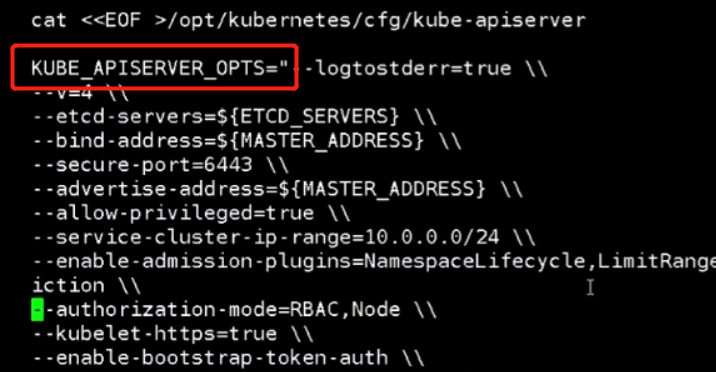

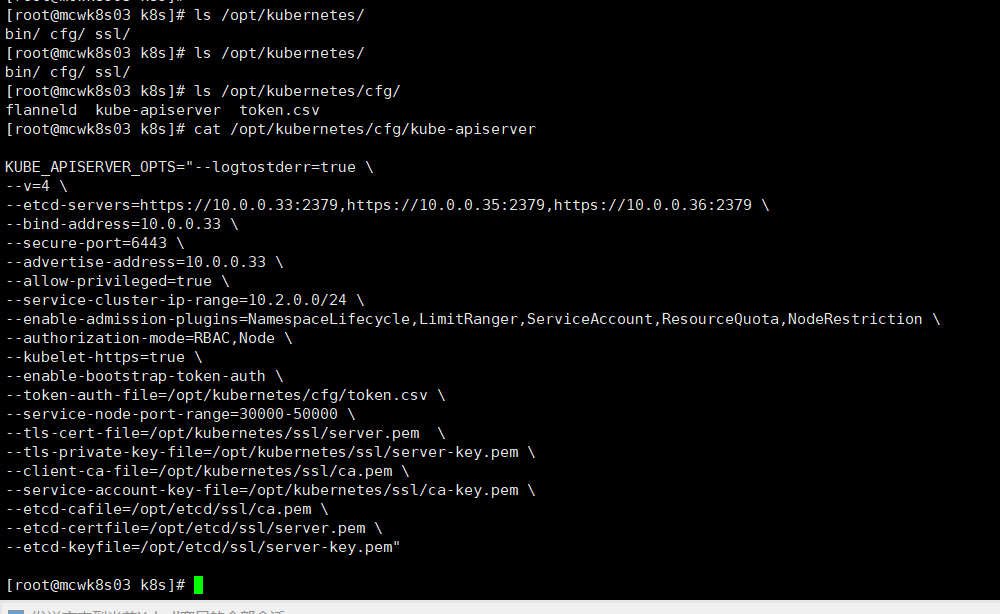

Now look at the main configuration file that was generated.

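--logtostderr=true: log to standard error instead of to files; under systemd this ends up in the system journal. --v=4: log verbosity; higher values give more detailed logs. --etcd-servers: the etcd addresses. --bind-address: the address to listen on, i.e. this host's IP.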

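--secure-port: the HTTPS port to listen on. --advertise-address: the address advertised to the rest of the cluster, the same as the bind address here. --service-cluster-ip-range: the range from which Service ClusterIPs are allocated. We also used this range when generating the certificate, and the script sets it so it does not clash with the host network.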

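--enable-admission-plugins: enables admission control plugins; the list configured here is fine for production. --authorization-mode: the authorization mode, RBAC here. --kubelet-https: the apiserver uses HTTPS when it connects to kubelets.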

--enable-bootstrap-token-auth: enables bootstrap token authentication, so nodes can obtain their certificates automatically.

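--token-auth-file: when kubelet starts and joins the cluster, it connects to the apiserver with an identity. That identity is the user configured earlier in /opt/kubernetes/cfg/token.csv, kubelet-bootstrap (misspelled as bubelet-bootstrap in the first attempt). Its role has minimal privileges, just enough to request a certificate signature.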

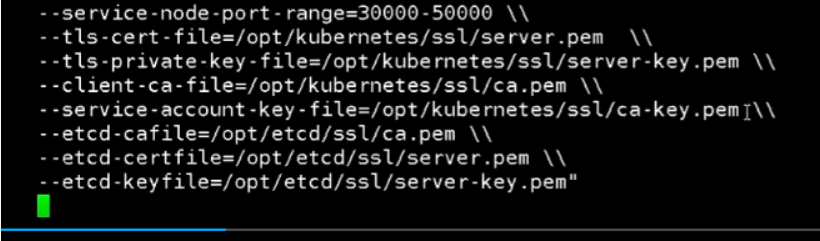

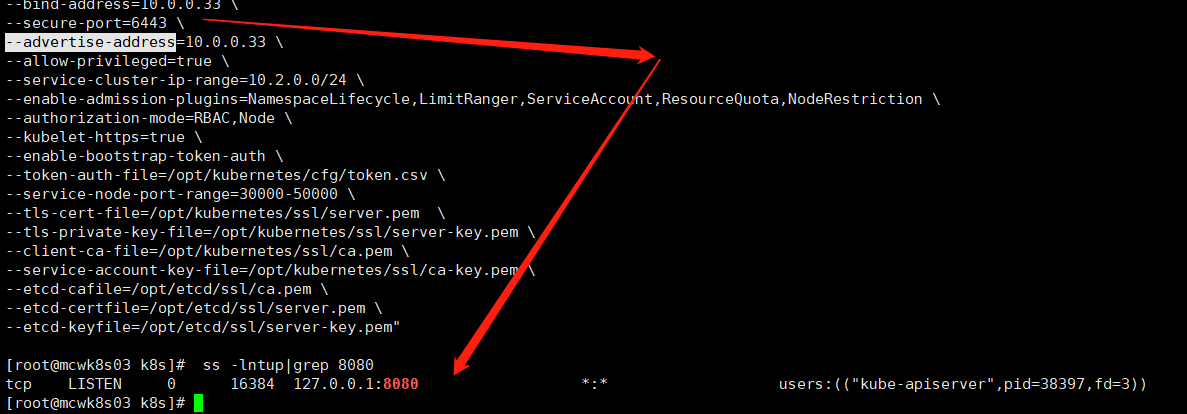

--service-node-port-range: the port range for NodePort Services. After that come the HTTPS certificates and the etcd certificates.

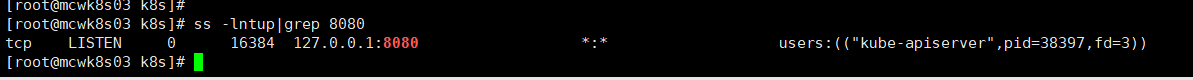

The apiserver also opens port 8080 (the insecure local port), which kube-controller-manager and kube-scheduler connect to.

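The apiserver's own certificates come from its own CA, ca.pem. The etcd CA belongs to the etcd service. The apiserver is only an etcd client, so it needs the etcd CA certificate plus a client certificate and key, but not the etcd CA's private key.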

The options are documented on the official site: https://kubernetes.io/docs/reference/command-line-tools-reference/kube-apiserver/

Component tools

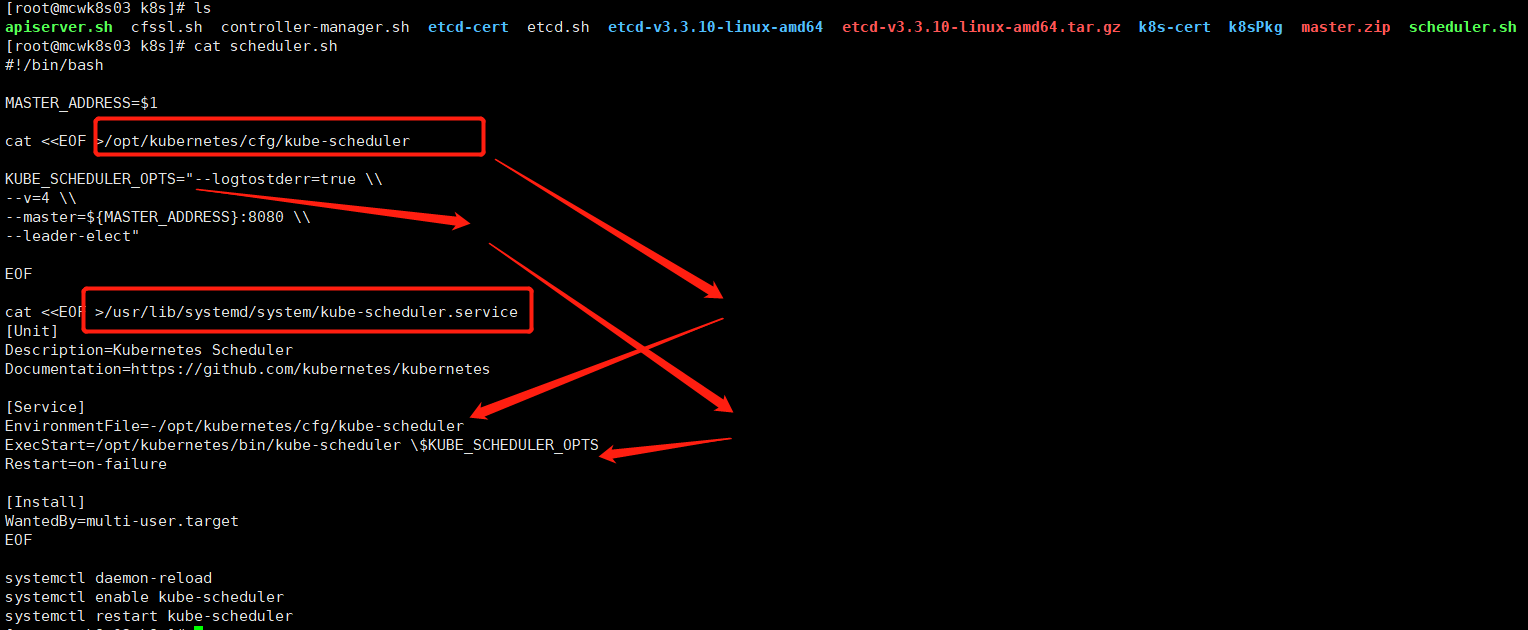

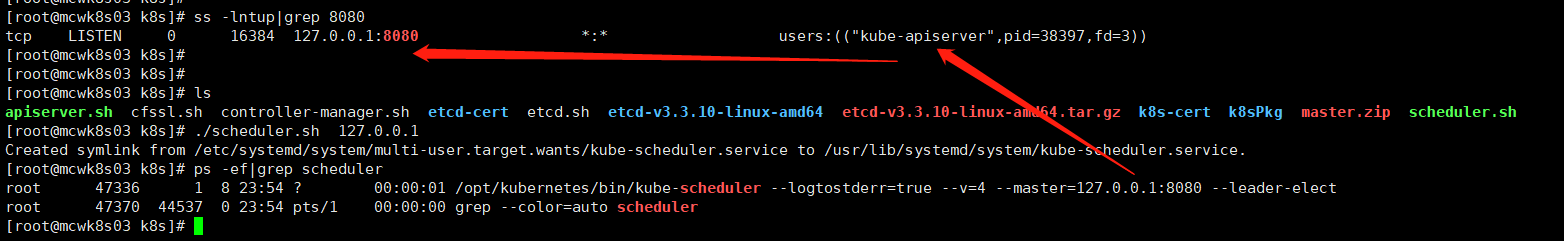

Deploying kube-scheduler

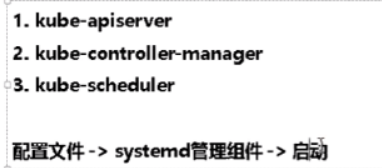

```bash
#!/bin/bash

MASTER_ADDRESS=$1

cat <<EOF >/opt/kubernetes/cfg/kube-scheduler

KUBE_SCHEDULER_OPTS="--logtostderr=true \\
--v=4 \\
--master=${MASTER_ADDRESS}:8080 \\
--leader-elect"

EOF

cat <<EOF >/usr/lib/systemd/system/kube-scheduler.service
[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-/opt/kubernetes/cfg/kube-scheduler
ExecStart=/opt/kubernetes/bin/kube-scheduler \$KUBE_SCHEDULER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload
systemctl enable kube-scheduler
systemctl restart kube-scheduler
```

The deployment script generates the scheduler's configuration file and systemd unit.

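The configuration file holds the scheduler's options: logging to stderr, the log level, the master address, and leader election. We don't need to make this service highly available ourselves; its built-in leader election takes care of that.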

The unit loads that environment (the configuration file), and ExecStart runs the scheduler with the options variable defined there.

The master address in the scheduler config means the apiserver's address. The apiserver listens on port 8080 and on a second port (6443, the secure port). The scheduler uses 8080.

Pass in the master address and start the scheduler.

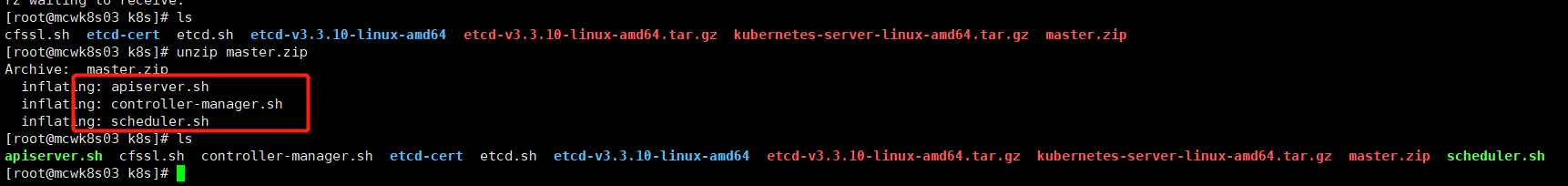

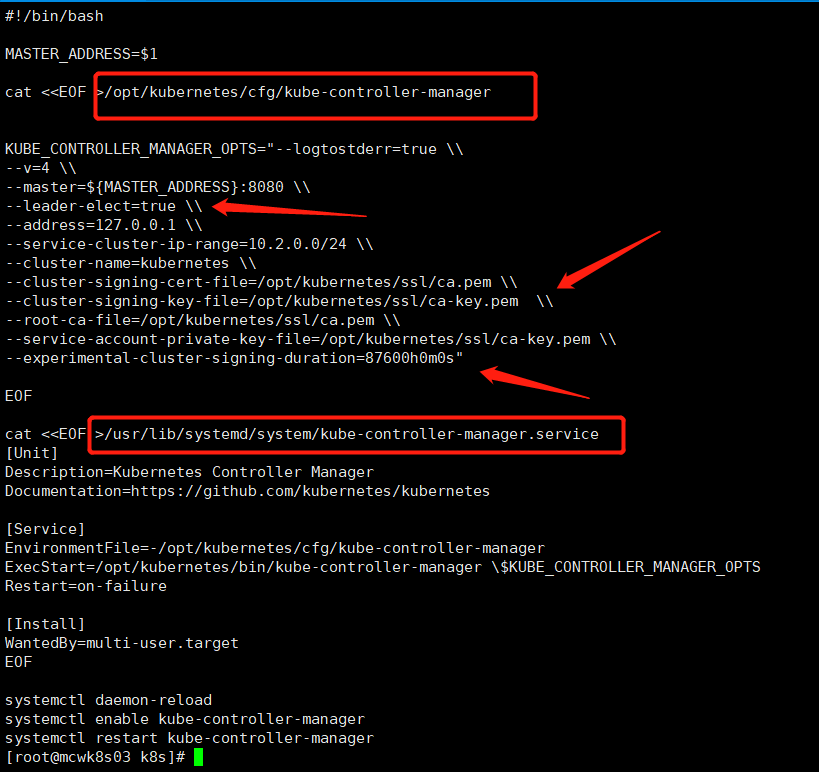

Deploying kube-controller-manager

```bash
#!/bin/bash

MASTER_ADDRESS=$1

cat <<EOF >/opt/kubernetes/cfg/kube-controller-manager

KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=true \\
--v=4 \\
--master=${MASTER_ADDRESS}:8080 \\
--leader-elect=true \\
--address=127.0.0.1 \\
--service-cluster-ip-range=10.2.0.0/24 \\
--cluster-name=kubernetes \\
--cluster-signing-cert-file=/opt/kubernetes/ssl/ca.pem \\
--cluster-signing-key-file=/opt/kubernetes/ssl/ca-key.pem \\
--root-ca-file=/opt/kubernetes/ssl/ca.pem \\
--service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem \\
--experimental-cluster-signing-duration=87600h0m0s"

EOF

cat <<EOF >/usr/lib/systemd/system/kube-controller-manager.service
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-/opt/kubernetes/cfg/kube-controller-manager
ExecStart=/opt/kubernetes/bin/kube-controller-manager \$KUBE_CONTROLLER_MANAGER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload
systemctl enable kube-controller-manager
systemctl restart kube-controller-manager
```

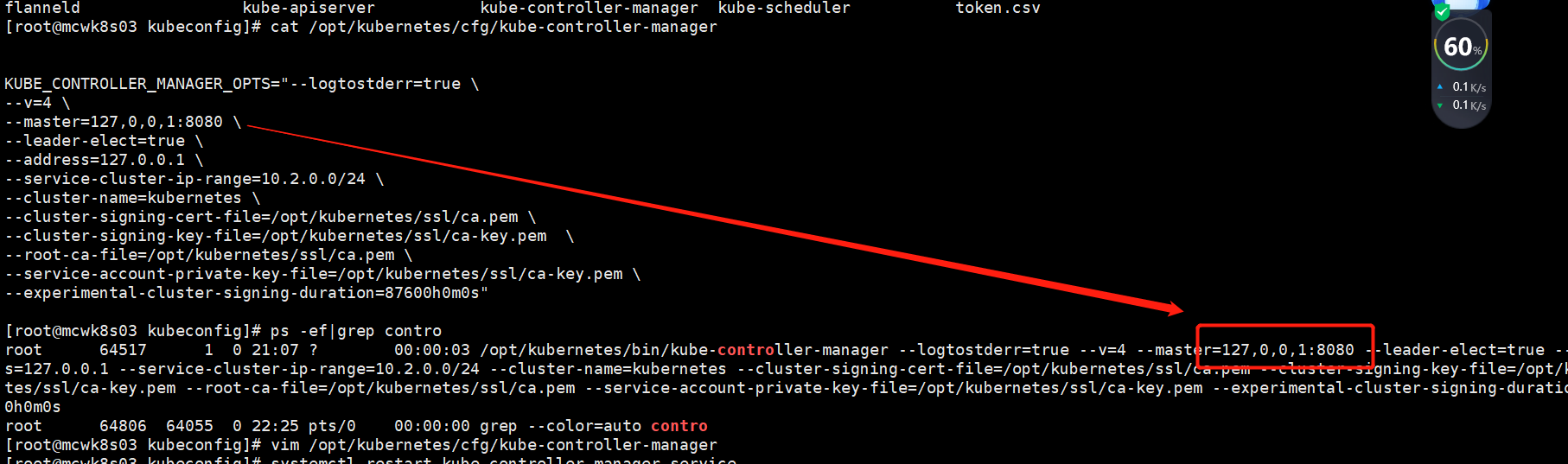

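The script takes the master address, which again means the apiserver's address, and generates the controller-manager's configuration. The controller-manager signs certificates, so it needs a CA: the config sets the CA it uses to sign certificates for the cluster. Signed certificates are valid for ten years (87600h). Like the scheduler, it uses leader election.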

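Then the script creates the systemd unit, which loads the configuration as its environment and runs the command with the options variable. The service cluster IP range is the one we changed.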

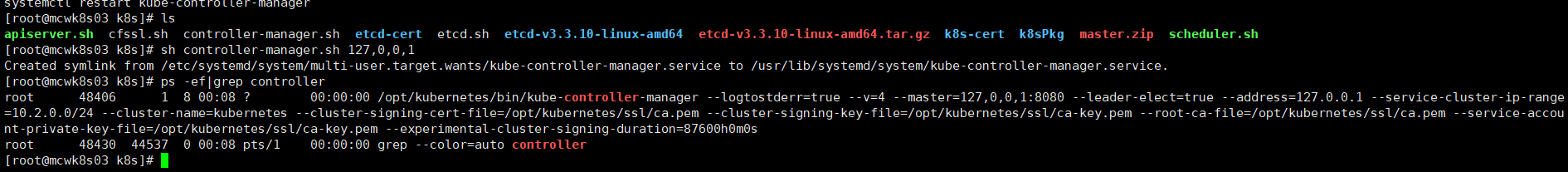

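Run the deployment script to start controller-manager. The IP passed below is wrong: I typed 127.0.0.1, which later caused certificate authentication problems when nodes tried to join. I kept looking for errors in the certificates and on the nodes and forgot to check the master's logs. Finding the right log is the key to solving problems like this.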

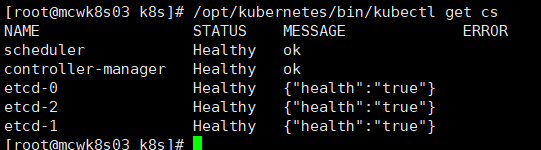

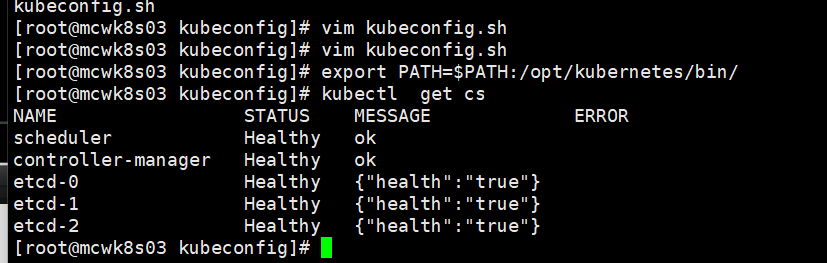

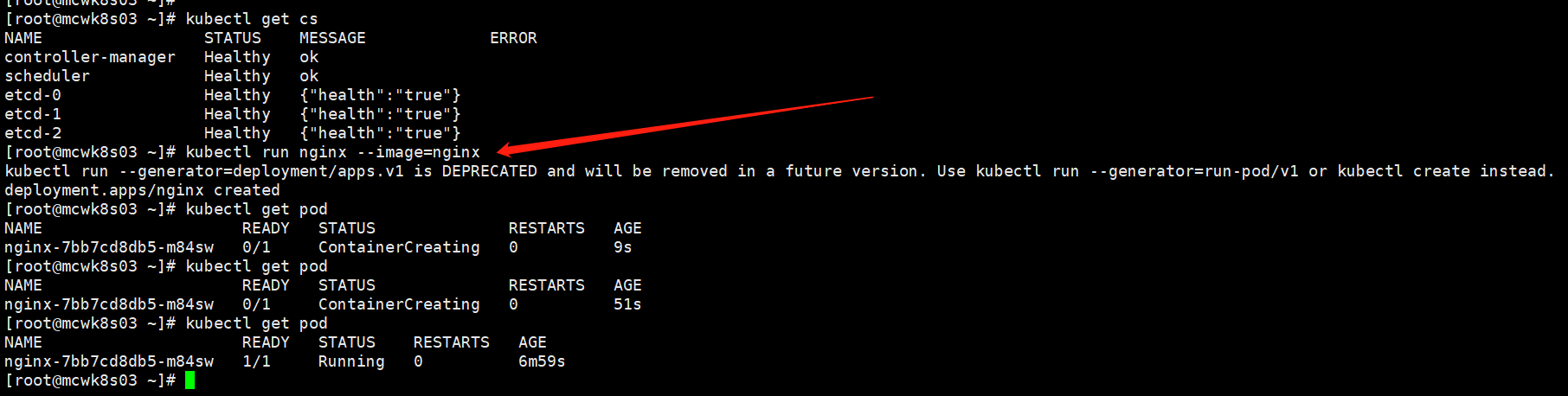

Checking the master deployment: kubectl get cs shows the status of the master components.


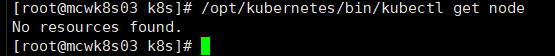

There are no nodes yet.

Deploying the node components

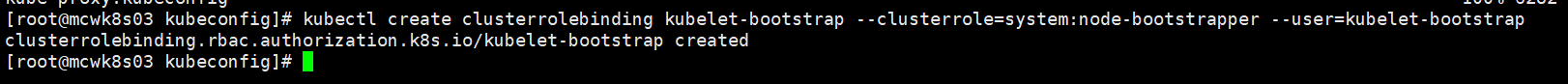

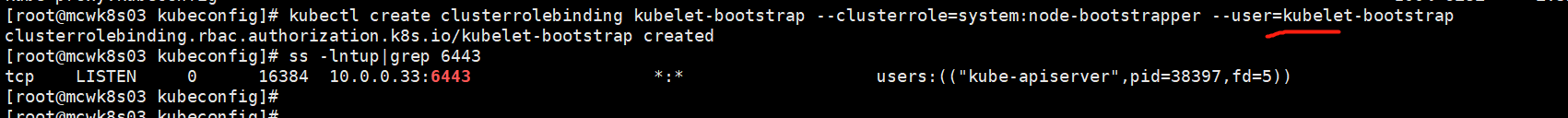

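Earlier we created a token file. Now bind the kubelet-bootstrap user to the system cluster role by creating a cluster role binding. The command specifies the binding name, the cluster role, and the user (the one just created).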
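The binding itself is a single kubectl command. The sketch below shows the form commonly used in TLS-bootstrapping guides: it binds the user kubelet-bootstrap to the built-in system:node-bootstrapper cluster role, which allows submitting certificate signing requests. The binding name is our own choice, as is the hypothetical function name bind_kubelet_bootstrap. Set KUBECTL to echo to print the command instead of running it.

```bash
#!/usr/bin/env bash
# Sketch: bind the bootstrap user to the node-bootstrapper cluster role so it
# may submit certificate signing requests. The binding name is our choice.
set -euo pipefail

bind_kubelet_bootstrap() {
  # KUBECTL defaults to kubectl; set KUBECTL=echo to only print the command
  "${KUBECTL:-kubectl}" create clusterrolebinding kubelet-bootstrap \
    --clusterrole=system:node-bootstrapper \
    --user=kubelet-bootstrap
}

# Usage on the master: bind_kubelet_bootstrap
```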

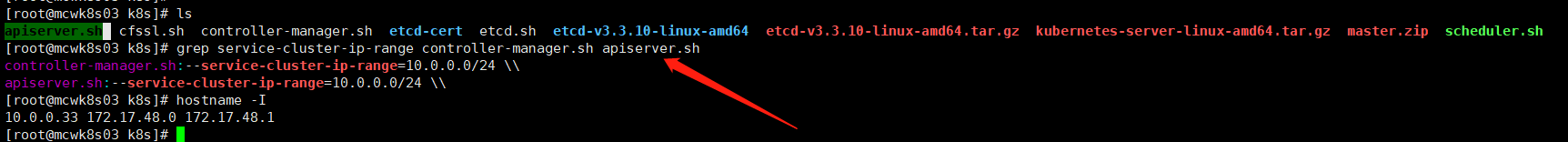

```
[root@mcwk8s03 ~]# ls
anaconda-ks.cfg  flannel.sh  flannel-v0.10.0-linux-amd64.tar.gz  k8s  README.md
[root@mcwk8s03 ~]# cd k8s/
[root@mcwk8s03 k8s]# ls
apiserver.sh  cfssl.sh  controller-manager.sh  etcd-cert  etcd.sh  etcd-v3.3.10-linux-amd64  etcd-v3.3.10-linux-amd64.tar.gz  k8s-cert  k8sPkg  master.zip  scheduler.sh
[root@mcwk8s03 k8s]# cd k8sPkg/
[root@mcwk8s03 k8sPkg]# ls
kubernetes  kubernetes-server-linux-amd64.tar.gz
[root@mcwk8s03 k8sPkg]# cd kubernetes/
[root@mcwk8s03 kubernetes]# ls
addons  kubernetes-src.tar.gz  LICENSES  server
[root@mcwk8s03 kubernetes]# cd server/
[root@mcwk8s03 server]# ls
bin
[root@mcwk8s03 server]# cd bin/
[root@mcwk8s03 bin]# ls
apiextensions-apiserver              cloud-controller-manager.tar  kube-apiserver             kube-controller-manager             kubectl     kube-proxy.docker_tag  kube-scheduler.docker_tag
cloud-controller-manager             hyperkube                     kube-apiserver.docker_tag  kube-controller-manager.docker_tag  kubelet     kube-proxy.tar         kube-scheduler.tar
cloud-controller-manager.docker_tag  kubeadm                       kube-apiserver.tar         kube-controller-manager.tar         kube-proxy  kube-scheduler         mounter
[root@mcwk8s03 bin]# pwd
/root/k8s/k8sPkg/kubernetes/server/bin
[root@mcwk8s03 bin]# scp -rp kubelet kube-proxy 10.0.0.35:/opt/kubernetes/bin/
root@10.0.0.35's password:
kubelet                     100%  114MB  11.3MB/s   00:10
kube-proxy                  100%   35MB   6.6MB/s   00:05
[root@mcwk8s03 bin]# scp -rp kubelet kube-proxy 10.0.0.36:/opt/kubernetes/bin/
root@10.0.0.36's password:
kubelet                     100%  114MB  19.0MB/s   00:06
kube-proxy                  100%   35MB  13.6MB/s   00:02
[root@mcwk8s03 bin]#
```

Upload and extract the node deployment scripts (two files).

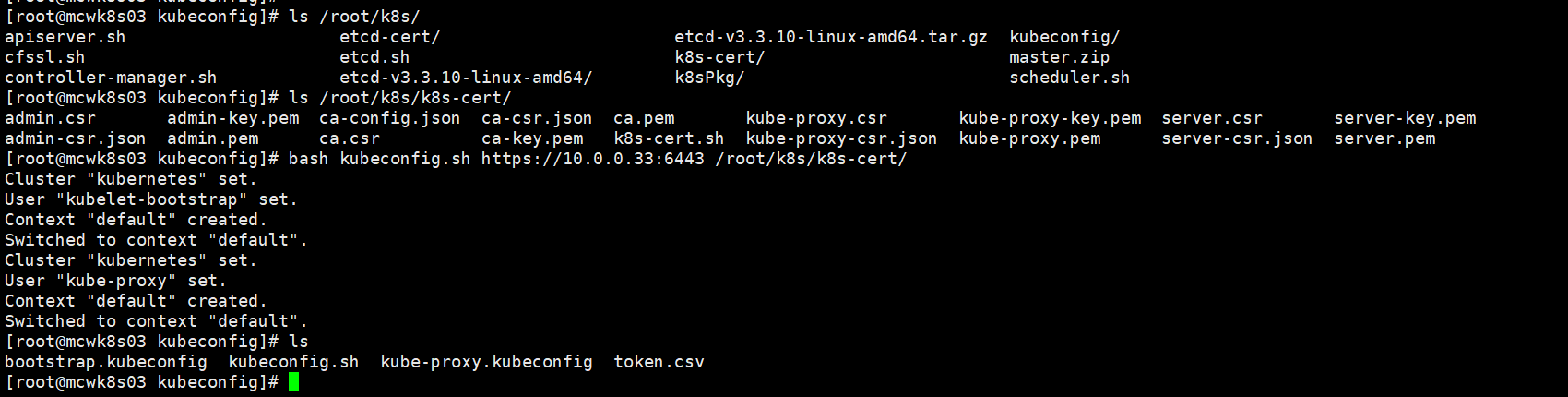

Here the IP address is used as the hostname. First, /opt/kubernetes/cfg/kubelet.kubeconfig and /opt/kubernetes/cfg/bootstrap.kubeconfig are generated.

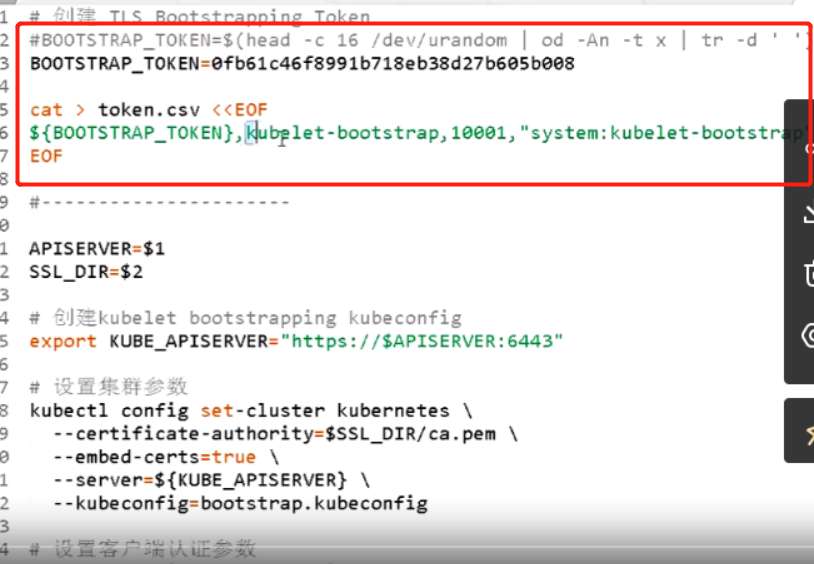

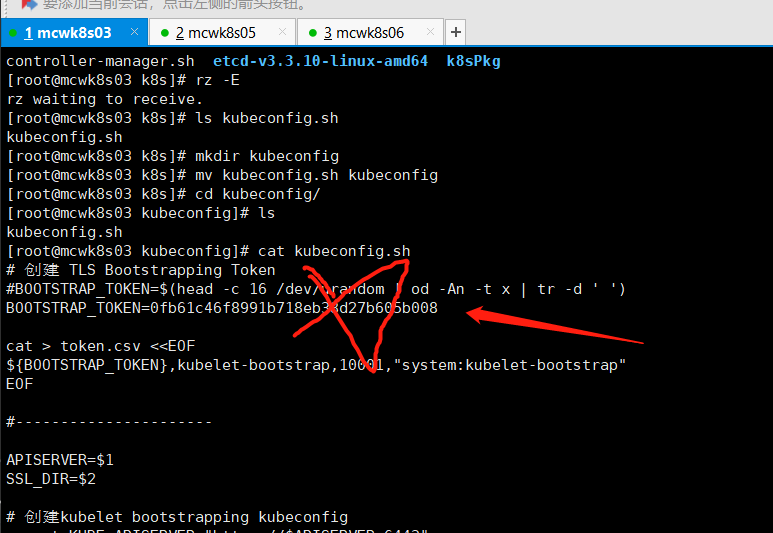

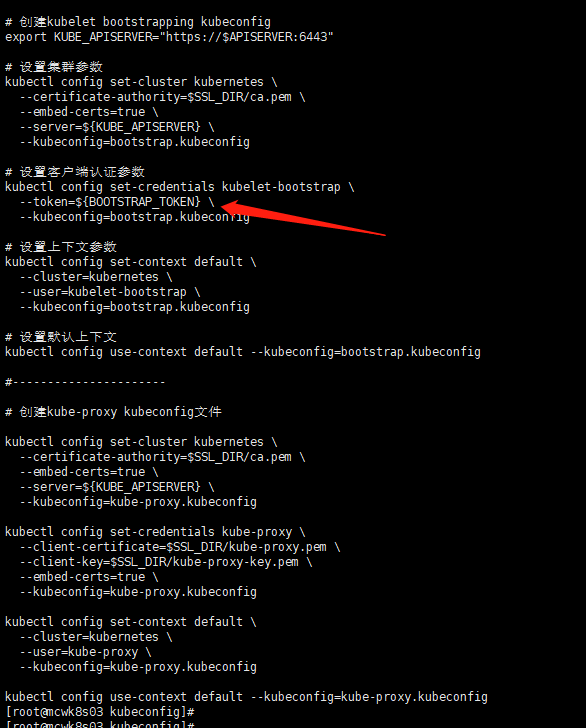

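Next we generate the node configuration files, keeping the generation script on the master. The token has already been created, so delete those two lines. The script takes the apiserver address and the SSL directory as arguments.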

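Replace the token with the one we generated manually earlier. The script mainly generates the configuration files for the two node components. kubelet's bootstrap config is used to request a certificate through the apiserver. kube-proxy's config lets it pull network rules from the apiserver and apply them locally.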

```
[root@mcwk8s03 kubeconfig]# cat kubeconfig.sh
# Create the TLS Bootstrapping token
#BOOTSTRAP_TOKEN=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' ')
BOOTSTRAP_TOKEN=0fb61c46f8991b718eb38d27b605b008

cat > token.csv <<EOF
${BOOTSTRAP_TOKEN},kubelet-bootstrap,10001,"system:kubelet-bootstrap"
EOF

#----------------------

APISERVER=$1
SSL_DIR=$2

# Create the kubelet bootstrapping kubeconfig
export KUBE_APISERVER="https://$APISERVER:6443"

# Set the cluster parameters
kubectl config set-cluster kubernetes \
  --certificate-authority=$SSL_DIR/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=bootstrap.kubeconfig

# Set the client authentication parameters
kubectl config set-credentials kubelet-bootstrap \
  --token=${BOOTSTRAP_TOKEN} \
  --kubeconfig=bootstrap.kubeconfig

# Set the context parameters
kubectl config set-context default \
  --cluster=kubernetes \
  --user=kubelet-bootstrap \
  --kubeconfig=bootstrap.kubeconfig

# Use the default context
kubectl config use-context default --kubeconfig=bootstrap.kubeconfig

#----------------------

# Create the kube-proxy kubeconfig file

kubectl config set-cluster kubernetes \
  --certificate-authority=$SSL_DIR/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=kube-proxy.kubeconfig

kubectl config set-credentials kube-proxy \
  --client-certificate=$SSL_DIR/kube-proxy.pem \
  --client-key=$SSL_DIR/kube-proxy-key.pem \
  --embed-certs=true \
  --kubeconfig=kube-proxy.kubeconfig

kubectl config set-context default \
  --cluster=kubernetes \
  --user=kube-proxy \
  --kubeconfig=kube-proxy.kubeconfig

kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig
```

```
[root@mcwk8s03 kubeconfig]# vim /etc/profile
[root@mcwk8s03 kubeconfig]# source /etc/profile
[root@mcwk8s03 kubeconfig]#
```

Run the script to generate the configs.

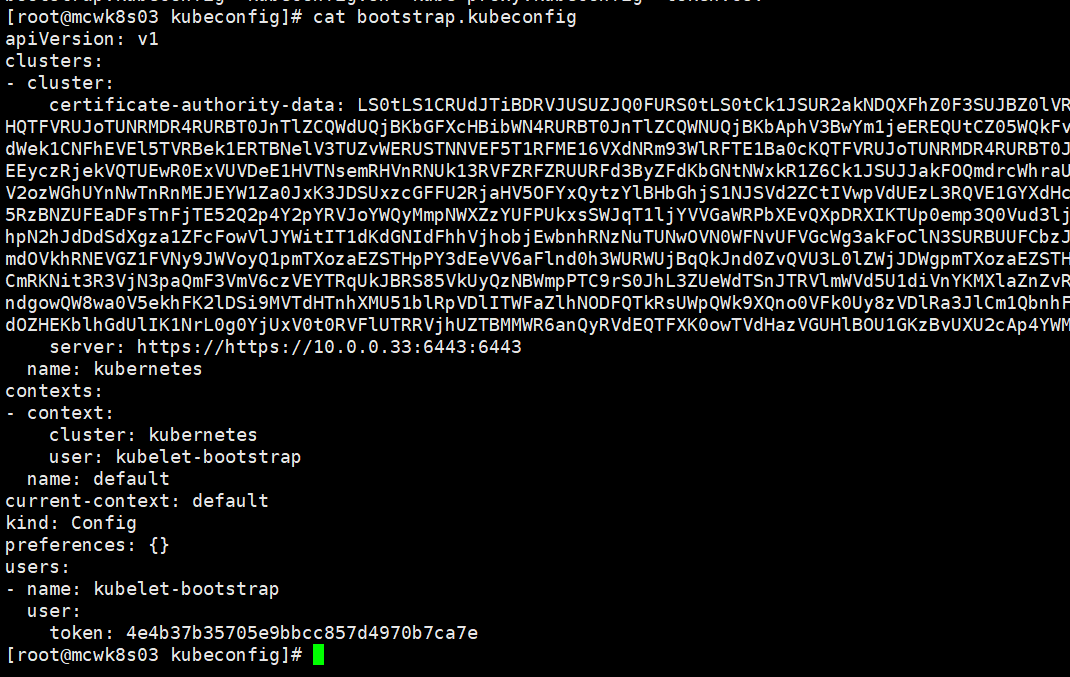

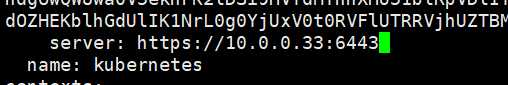

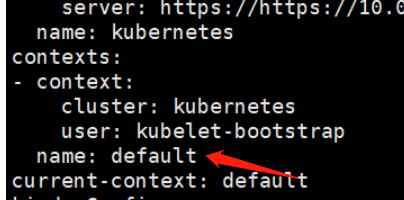

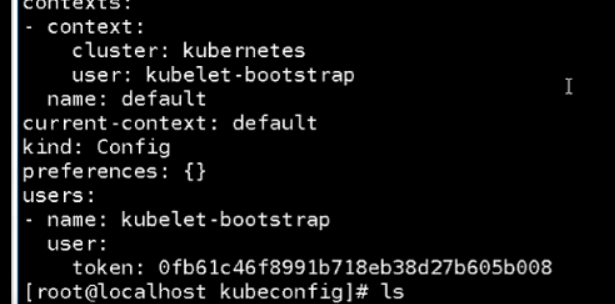

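Look at the kubelet config: the CA certificate is written into the file. A kubeconfig is simply these pieces (token, CA and so on) filled into one file. Too much was passed as the argument below; only the IP address should be passed.

A cluster entry identifies the cluster by its name and server address. The top-level key is clusters (plural), so several clusters can be configured.

Correct both config files.

A context is defined next. It specifies which cluster to use (the one named kubernetes defined above) and which user. This is the kubelet's config, and current-context selects which context is used.

Then the user is defined.

Copy the configs to the nodes.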
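Put together, the clusters, users and contexts described above form a file like the skeleton below. This is a simplified sketch. write_kubeconfig_skeleton and kubeconfig_server are hypothetical helper names, and the token is a placeholder. The real bootstrap.kubeconfig produced by kubectl config also embeds certificate-authority-data. Reading back the server field is a quick way to catch the wrong-argument mistake mentioned above.

```shell
#!/usr/bin/env bash
# Write a minimal kubeconfig skeleton with placeholder values.
write_kubeconfig_skeleton() {
  local out=$1 server=$2
  cat > "$out" <<EOF
apiVersion: v1
kind: Config
clusters:
- name: kubernetes
  cluster:
    server: ${server}
users:
- name: kubelet-bootstrap
  user:
    token: PLACEHOLDER_TOKEN
contexts:
- name: default
  context:
    cluster: kubernetes
    user: kubelet-bootstrap
current-context: default
EOF
}

# Print the server URL(s) in a kubeconfig (plain text match, not a YAML parser).
kubeconfig_server() {
  awk '$1 == "server:" {print $2}' "$1"
}
```

For example, `kubeconfig_server /opt/kubernetes/cfg/bootstrap.kubeconfig` should print https://<apiserver-ip>:6443. `kubectl config view --kubeconfig=bootstrap.kubeconfig -o jsonpath='{.clusters[0].cluster.server}'` gives the same answer through kubectl.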

[root@mcwk8s03 kubeconfig]# ls bootstrap.kubeconfig kubeconfig.sh kube-proxy.kubeconfig token.csv [root@mcwk8s03 kubeconfig]# scp -rp bootstrap.kubeconfig kube-proxy.kubeconfig 10.0.0.35:/opt/kubernetes/cfg/ root@10.0.0.35's password: bootstrap.kubeconfig 100% 2163 149.6KB/s 00:00 kube-proxy.kubeconfig 100% 6282 2.0MB/s 00:00 [root@mcwk8s03 kubeconfig]# scp -rp bootstrap.kubeconfig kube-proxy.kubeconfig 10.0.0.36:/opt/kubernetes/cfg/ root@10.0.0.36's password: bootstrap.kubeconfig 100% 2163 103.3KB/s 00:00 kube-proxy.kubeconfig 100% 6282 2.5MB/s 00:00 [root@mcwk8s03 kubeconfig]#

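The upper file was generated automatically. The lower one is the manually generated config we just copied over. Note also the certificate directory and the pause image pulled from a reachable registry.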

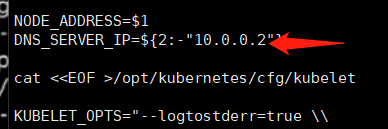

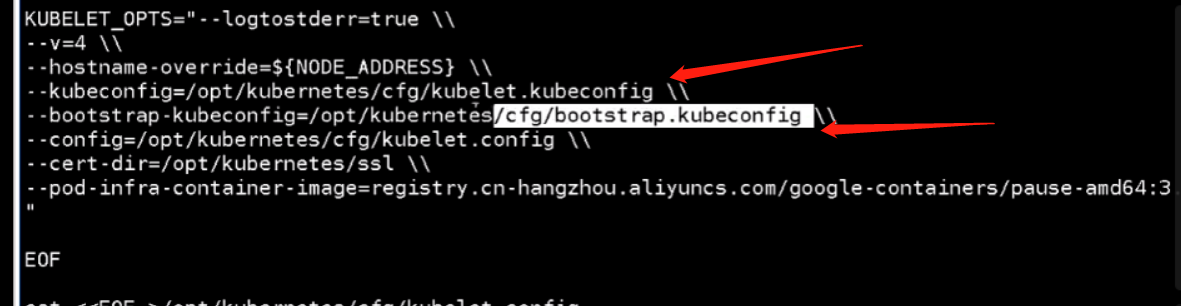

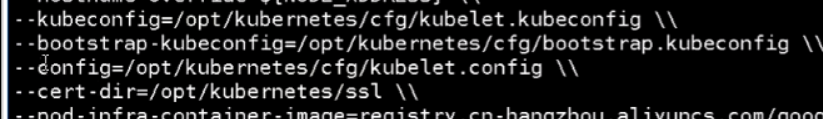

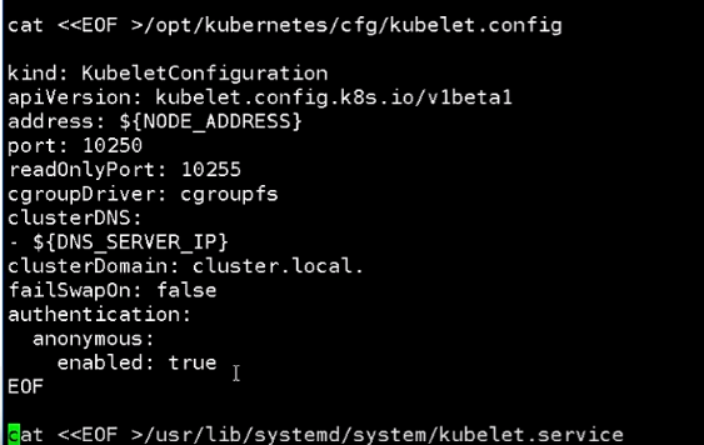

The kubelet uses two config files: one for connecting to the apiserver, and one holding the kubelet's own options.

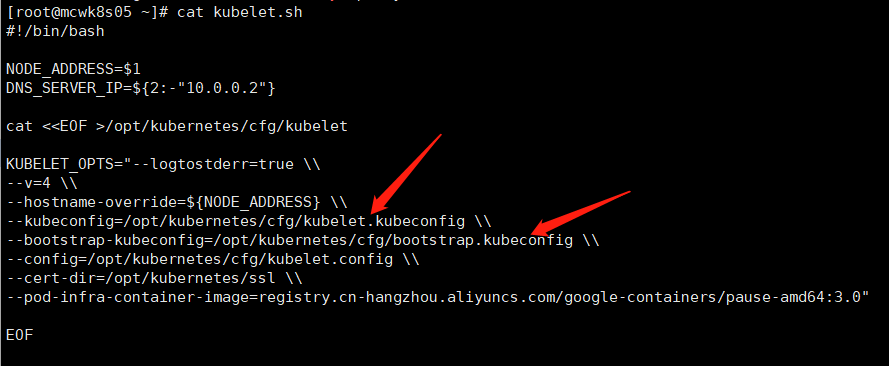

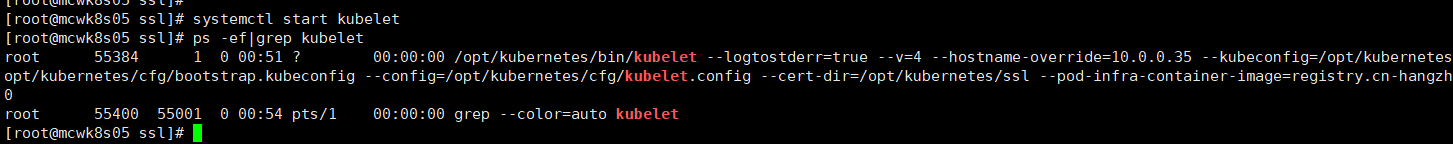

[root@mcwk8s05 ~]# cat kubelet.sh
#!/bin/bash

NODE_ADDRESS=$1
DNS_SERVER_IP=${2:-"10.0.0.2"}

cat <<EOF >/opt/kubernetes/cfg/kubelet
KUBELET_OPTS="--logtostderr=true \\
--v=4 \\
--hostname-override=${NODE_ADDRESS} \\
--kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig \\
--bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig \\
--config=/opt/kubernetes/cfg/kubelet.config \\
--cert-dir=/opt/kubernetes/ssl \\
--pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0"
EOF

cat <<EOF >/opt/kubernetes/cfg/kubelet.config
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
address: ${NODE_ADDRESS}
port: 10250
readOnlyPort: 10255
cgroupDriver: cgroupfs
clusterDNS:
- ${DNS_SERVER_IP}
clusterDomain: cluster.local.
failSwapOn: false
authentication:
  anonymous:
    enabled: true
EOF

cat <<EOF >/usr/lib/systemd/system/kubelet.service
[Unit]
Description=Kubernetes Kubelet
After=docker.service
Requires=docker.service

[Service]
EnvironmentFile=/opt/kubernetes/cfg/kubelet
ExecStart=/opt/kubernetes/bin/kubelet \$KUBELET_OPTS
Restart=on-failure
KillMode=process

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload
systemctl enable kubelet
systemctl restart kubelet
[root@mcwk8s05 ~]#

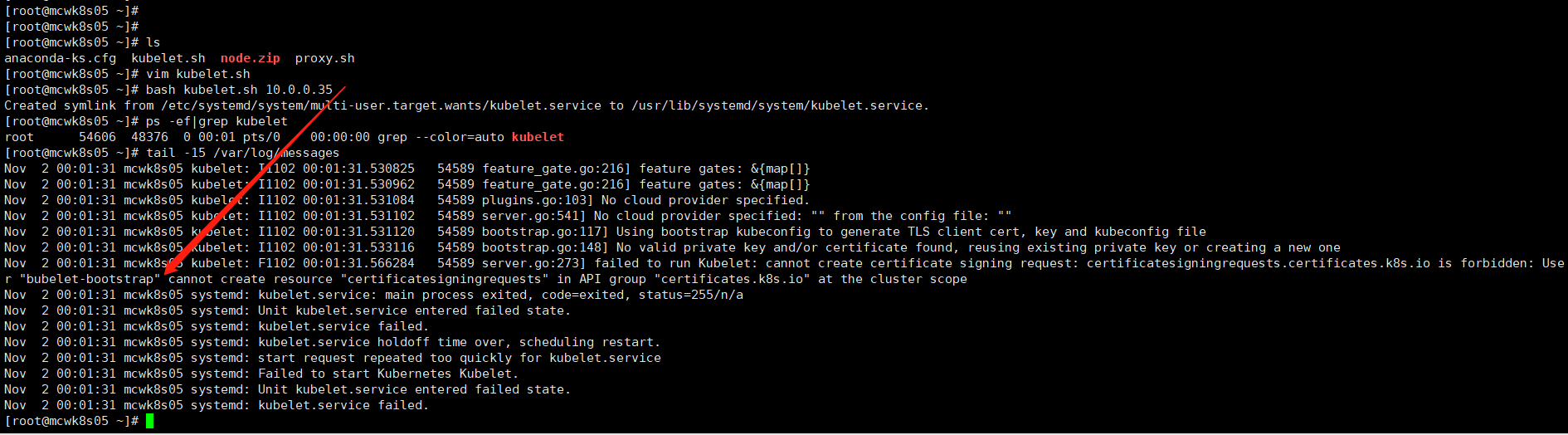

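Run the deployment script, passing the node IP. The kubelet fails to start. The user is already in the token file, but no role has been bound to it yet.

On the master, create the role binding for that user: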

kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap

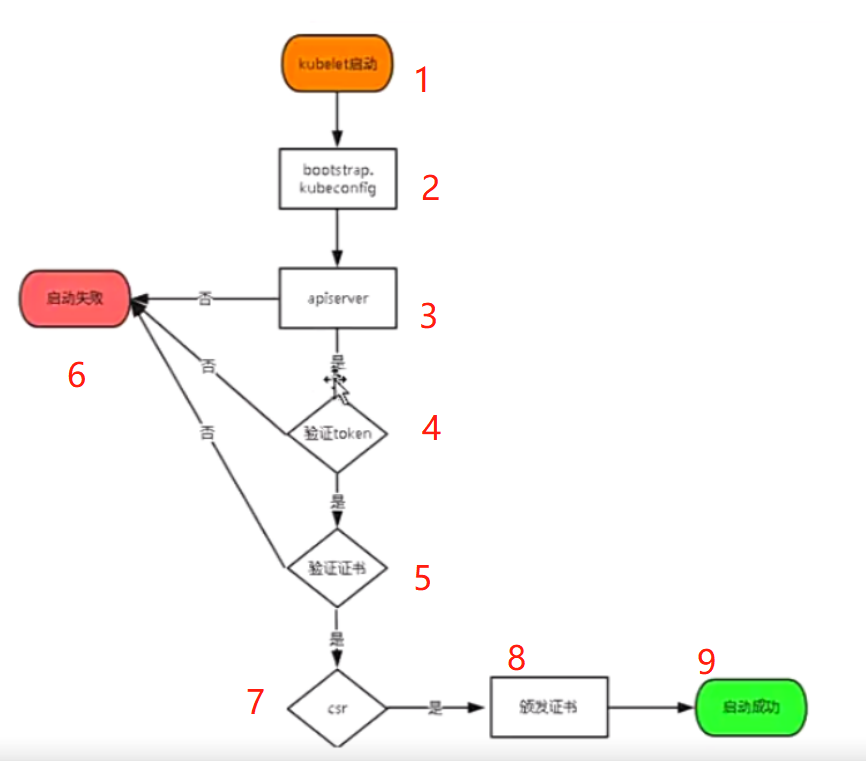

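When the kubelet starts, it reads the apiserver address and the token from this config and authenticates to the apiserver with the token. If authentication fails, startup fails. If it succeeds, the certificate is checked next. If that check fails, startup fails, and if it succeeds, …

As shown below, the username in token.csv in the master's cfg directory was wrong. It was not the user the role binding was created for.

The role had already been bound before this.

On the master, correct the username in token.csv and restart kube-apiserver.service. Then start the kubelet on the node, and it starts successfully.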
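The startup failure came down to token.csv naming a different user than the one in the clusterrolebinding. Below is a small hypothetical check that compares the second field of each token.csv line with the expected user. check_token_user is not part of any tool.

```shell
#!/usr/bin/env bash
# Return 0 if every line of a static token file names the expected user.
# token.csv format: token,user,uid,"groups"
check_token_user() {
  local file=$1 expected=${2:-kubelet-bootstrap}
  awk -F',' -v want="$expected" '
    NF >= 2 && $2 != want {
      printf "line %d: user \"%s\" != \"%s\"\n", NR, $2, want
      bad = 1
    }
    END { exit bad }
  ' "$file"
}
```

On the master, compare the output of `check_token_user /opt/kubernetes/cfg/token.csv` with `kubectl get clusterrolebinding kubelet-bootstrap -o jsonpath='{.subjects[*].name}'`, which prints the user the role is bound to. As above, kube-apiserver must be restarted after token.csv is edited.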

systemctl restart kube-apiserver.service

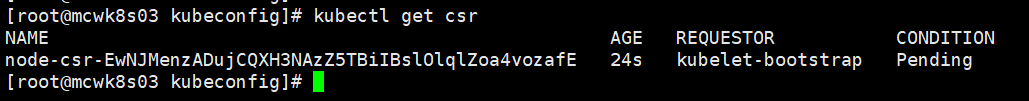

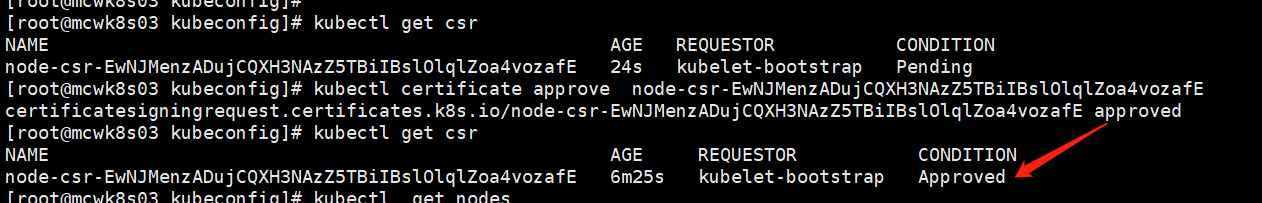

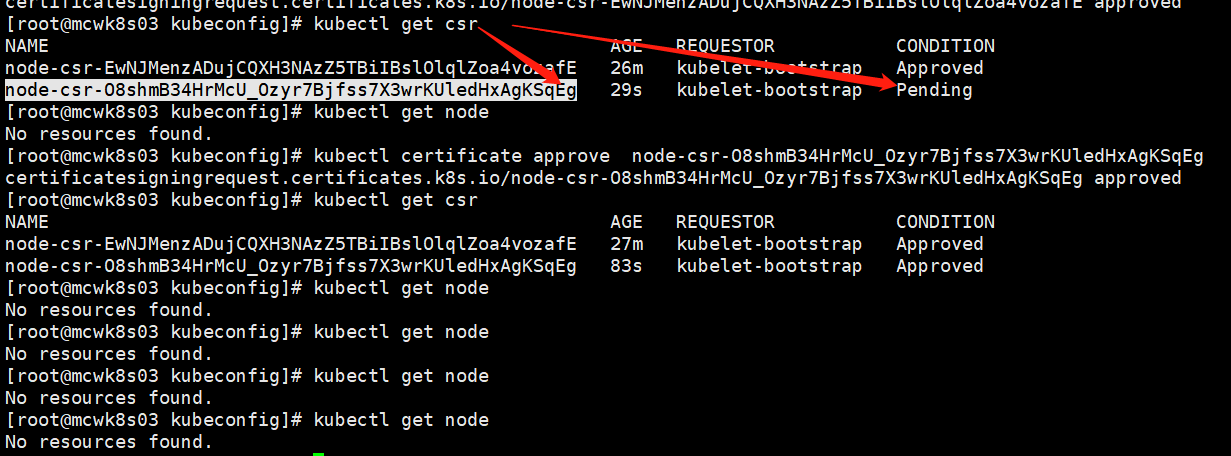

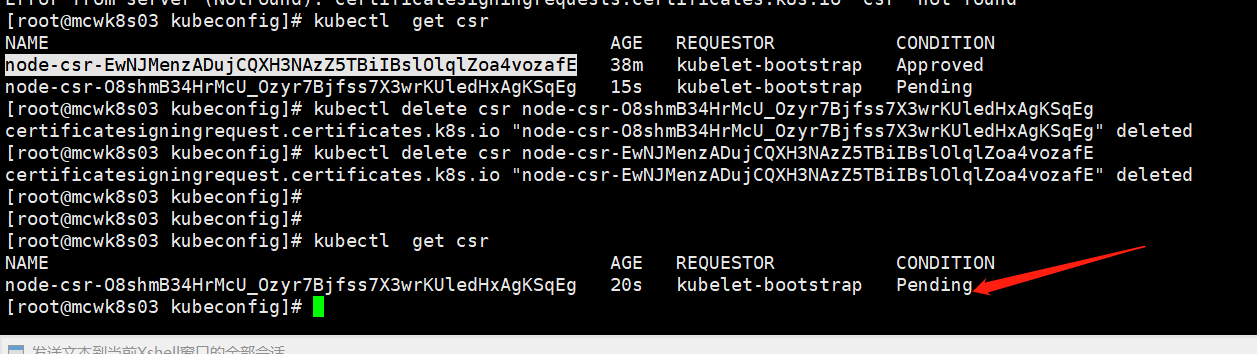

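Checking from the master shows that the node just started is waiting for the apiserver to issue its certificate. Once the certificate is issued, the node joins the cluster.

Run the following command, followed by the NAME of the request awaiting signing. Checking again shows the condition has changed, but something is wrong. Normally it reads Approved,Issued (two words).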

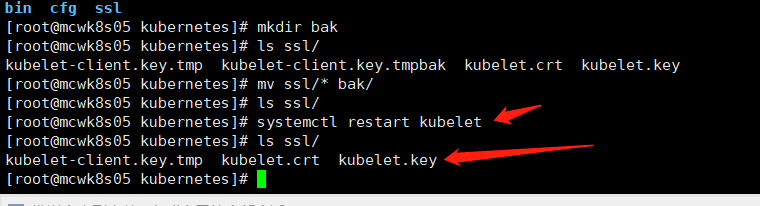

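Restart the kubelet to regenerate the certificate.

The certificate had to be signed again, but the node still did not join the cluster.

After the kubelet starts on a node, it submits a CSR to the master. The request must be approved on the master.

On the master, list the CSRs. Their status is Pending.

On the master, approve the certificate:

After approval, the status changes to Approved,Issued.

Verify on the node.

The node's ssl directory now contains 4 additional kubelet certificate files.

After the node's CSR is deleted, the node immediately submits a new certificate request on its own.

**CSR** stands for CertificateSigningRequest, i.e. a request to have a certificate signed.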

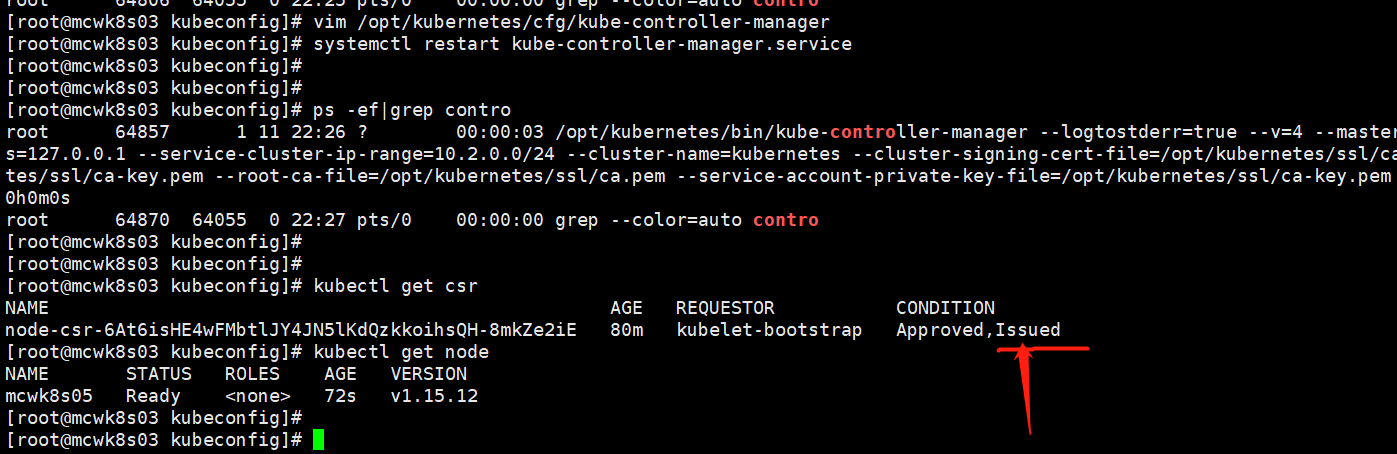

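It still did not work. The cause was that one of the master's components was configured with the wrong IP, so it could not find the apiserver.

After fixing the configuration and restarting the service, everything worked at once, and the node has joined the cluster.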

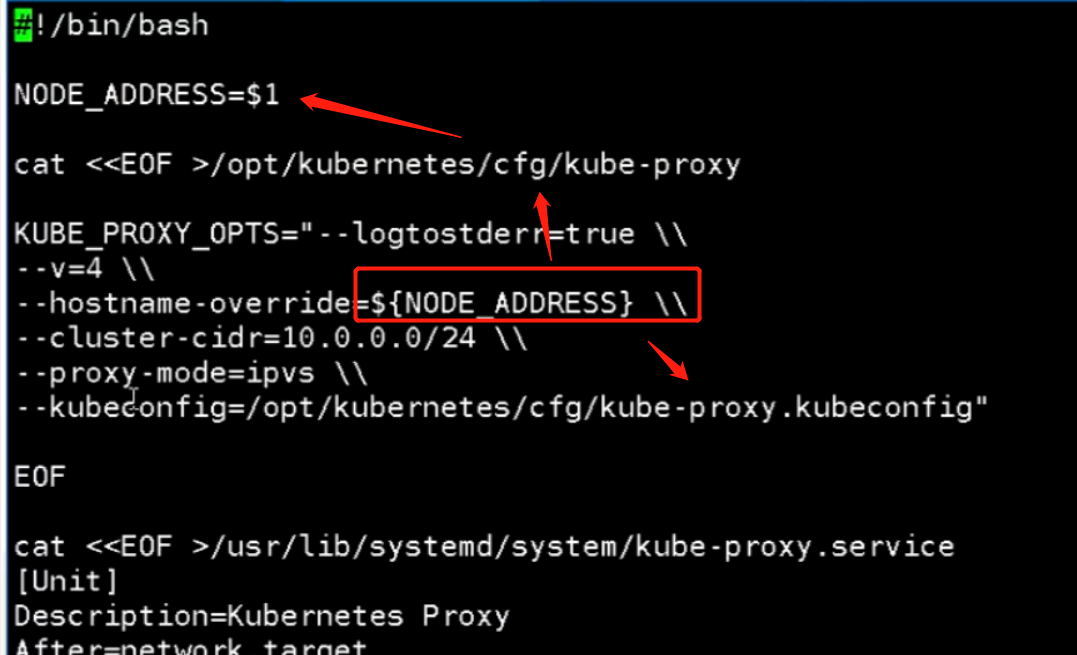

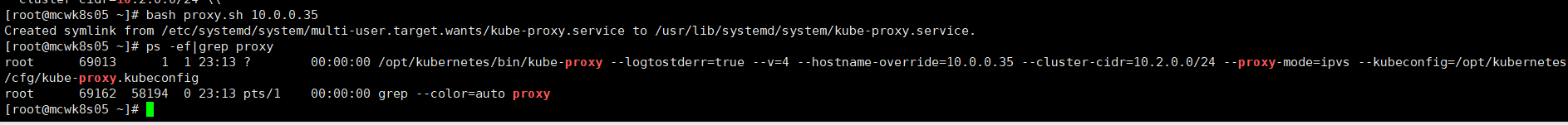

Deploy kube-proxy

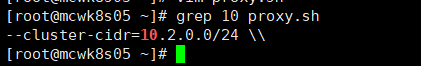

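Generate the kube-proxy config file, passing in the node IP. It references the kubeconfig generated earlier on the master, which contains the certificate information. Change the CIDR so that it matches the cluster CIDR used on the master. This service only needs to be able to reach the apiserver, so its configuration is minimal.

Deployment succeeded.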

[root@mcwk8s05 ~]# cat proxy.sh
#!/bin/bash

NODE_ADDRESS=$1

cat <<EOF >/opt/kubernetes/cfg/kube-proxy
KUBE_PROXY_OPTS="--logtostderr=true \\
--v=4 \\
--hostname-override=${NODE_ADDRESS} \\
--cluster-cidr=10.2.0.0/24 \\
--proxy-mode=ipvs \\
--kubeconfig=/opt/kubernetes/cfg/kube-proxy.kubeconfig"
EOF

cat <<EOF >/usr/lib/systemd/system/kube-proxy.service
[Unit]
Description=Kubernetes Proxy
After=network.target

[Service]
EnvironmentFile=-/opt/kubernetes/cfg/kube-proxy
ExecStart=/opt/kubernetes/bin/kube-proxy \$KUBE_PROXY_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload
systemctl enable kube-proxy
systemctl restart kube-proxy
[root@mcwk8s05 ~]#

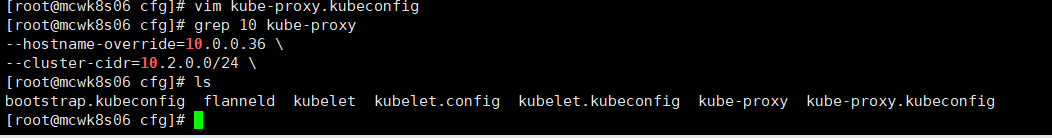

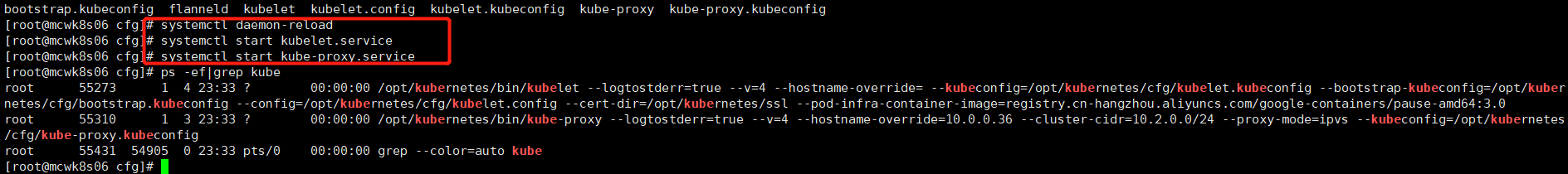

To deploy the second node, copy the files over and adjust them.

Copy them to the second node:

[root@mcwk8s05 ~]# scp -rp /opt/kubernetes/ 10.0.0.36:/opt/ The authenticity of host '10.0.0.36 (10.0.0.36)' can't be established. ECDSA key fingerprint is SHA256:JIUYda60WQpe7TXXwyn8+AbF08vB0aFJ5XLgn5O/UT0. ECDSA key fingerprint is MD5:f9:5a:76:92:6d:ed:41:d6:81:cb:5c:2a:56:ab:88:aa. Are you sure you want to continue connecting (yes/no)? yes Warning: Permanently added '10.0.0.36' (ECDSA) to the list of known hosts. root@10.0.0.36's password: flanneld 100% 223 374.7KB/s 00:00 bootstrap.kubeconfig 100% 2163 2.2MB/s 00:00 kube-proxy.kubeconfig 100% 6269 12.8MB/s 00:00 kubelet 100% 364 376.3KB/s 00:00 kubelet.config 100% 254 545.4KB/s 00:00 kubelet.kubeconfig 100% 2292 4.2MB/s 00:00 kube-proxy 100% 185 350.8KB/s 00:00 flanneld 100% 35MB 10.3MB/s 00:03 mk-docker-opts.sh 100% 2139 1.3MB/s 00:00 kubelet 100% 114MB 55.2MB/s 00:02 kube-proxy 100% 35MB 141.7MB/s 00:00 kubelet.crt 100% 2165 2.5MB/s 00:00 kubelet.key 100% 1679 1.5MB/s 00:00 kubelet-client-2022-11-02-22-26-53.pem 100% 1269 448.1KB/s 00:00 kubelet-client-current.pem 100% 1269 508.0KB/s 00:00 kubelet-client.key.tmp 100% 227 176.7KB/s 00:00 kubelet-client.key.tmpbak 100% 227 314.3KB/s 00:00 kubelet.crt 100% 2165 2.7MB/s 00:00 kubelet.key 100% 1675 963.4KB/s 00:00 [root@mcwk8s05 ~]# ls /usr/lib/systemd/system/kubelet.service /usr/lib/systemd/system/kubelet.service [root@mcwk8s05 ~]# ls /usr/lib/systemd/system/kube-proxy.service /usr/lib/systemd/system/kube-proxy.service [root@mcwk8s05 ~]# [root@mcwk8s05 ~]# [root@mcwk8s05 ~]# scp -rp /usr/lib/systemd/system/{kubelet.service,kube-proxy.service} 10.0.0.36:/usr/lib/systemd/system/ root@10.0.0.36's password: kubelet.service 100% 264 189.5KB/s 00:00 kube-proxy.service 100% 231 375.1KB/s 00:00 [root@mcwk8s05 ~]#

Looking it over, it seems only the kube-proxy config needs to change: set it to the new node's IP.

Then start the services.
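The copied configs can be switched to the new node with sed. This sketch assumes GNU sed (-i). It also assumes the old value appears in the kubelet, kubelet.config and kube-proxy files under /opt/kubernetes/cfg; the value can be an IP or a hostname, whichever was passed to kubelet.sh and proxy.sh. retarget_node_cfg is a hypothetical name. The copy also brought over the first node's kubelet.kubeconfig and the kubelet-* certificates in /opt/kubernetes/ssl. Those identify the first node, so they are normally removed on the new node so that it bootstraps and files its own CSR.

```shell
#!/usr/bin/env bash
# Replace the old node address with the new one in configs copied from
# another node. File names are the ones created by kubelet.sh and proxy.sh.
retarget_node_cfg() {
  local cfg_dir=$1 old=$2 new=$3 re f
  # Escape the dots so sed matches them literally.
  re=$(printf '%s' "$old" | sed 's/\./\\./g')
  for f in kubelet kubelet.config kube-proxy; do
    [ -f "$cfg_dir/$f" ] || continue
    sed -i "s/${re}/${new}/g" "$cfg_dir/$f"
  done
}

# Usage on the new node:
#   retarget_node_cfg /opt/kubernetes/cfg 10.0.0.35 10.0.0.36
```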

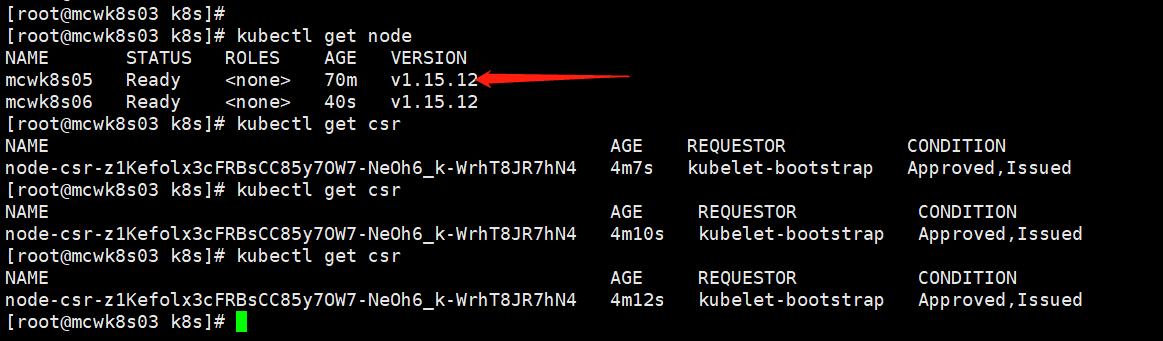

Approve the request on the master:

[root@mcwk8s03 k8s]# kubectl get csr NAME AGE REQUESTOR CONDITION node-csr-z1Kefolx3cFRBsCC85y7OW7-NeOh6_k-WrhT8JR7hN4 60s kubelet-bootstrap Pending [root@mcwk8s03 k8s]# kubectl get node NAME STATUS ROLES AGE VERSION mcwk8s05 Ready <none> 67m v1.15.12 [root@mcwk8s03 k8s]# kubectl certificate approve node-csr-z1Kefolx3cFRBsCC85y7OW7-NeOh6_k-WrhT8JR7hN4 certificatesigningrequest.certificates.k8s.io/node-csr-z1Kefolx3cFRBsCC85y7OW7-NeOh6_k-WrhT8JR7hN4 approved [root@mcwk8s03 k8s]# kubectl get csr NAME AGE REQUESTOR CONDITION node-csr-z1Kefolx3cFRBsCC85y7OW7-NeOh6_k-WrhT8JR7hN4 3m29s kubelet-bootstrap Approved,Issued [root@mcwk8s03 k8s]# kubectl get node NAME STATUS ROLES AGE VERSION mcwk8s05 Ready <none> 69m v1.15.12 mcwk8s06 NotReady <none> 9s v1.15.12 [root@mcwk8s03 k8s]#

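The CSR for host 05 is gone. Presumably CSRs are cleaned up some time after the node joins; kube-controller-manager garbage-collects approved requests after about an hour. The new node joined successfully.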

kubectl get csr
kubectl certificate approve xx
kubectl delete csr xx
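The get/approve cycle can be scripted by filtering on the CONDITION column. This sketch assumes the column layout shown above, with CONDITION as the last column, and GNU xargs for -r. pending_csrs and approve_pending_csrs are hypothetical names. Approving everything that is Pending trusts every requester, so outside a lab it is safer to look at the list first, as done above.

```shell
#!/usr/bin/env bash
# Read `kubectl get csr` output on stdin and print the names whose last
# column (CONDITION) is exactly "Pending". The header line is skipped.
pending_csrs() {
  awk 'NR > 1 && $NF == "Pending" {print $1}'
}

# Approve every pending CSR (-r: do nothing when there is no input).
approve_pending_csrs() {
  kubectl get csr | pending_csrs | xargs -r kubectl certificate approve
}
```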

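The ROLES column shows &lt;none&gt;. To show a role there, the node needs a label of the form node-role.kubernetes.io/&lt;role&gt;.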

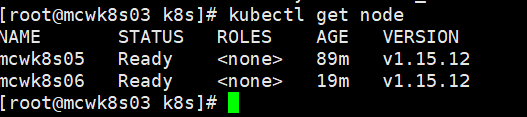

At this point, the single-master cluster architecture shown earlier has been deployed.

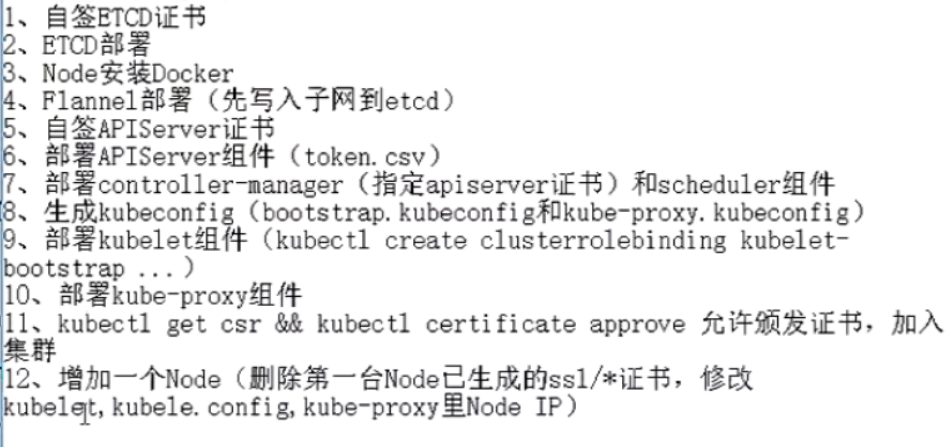

Summary of the single-master deployment process

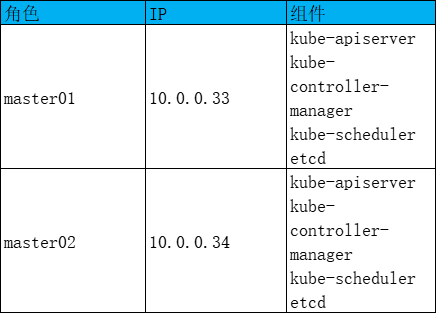

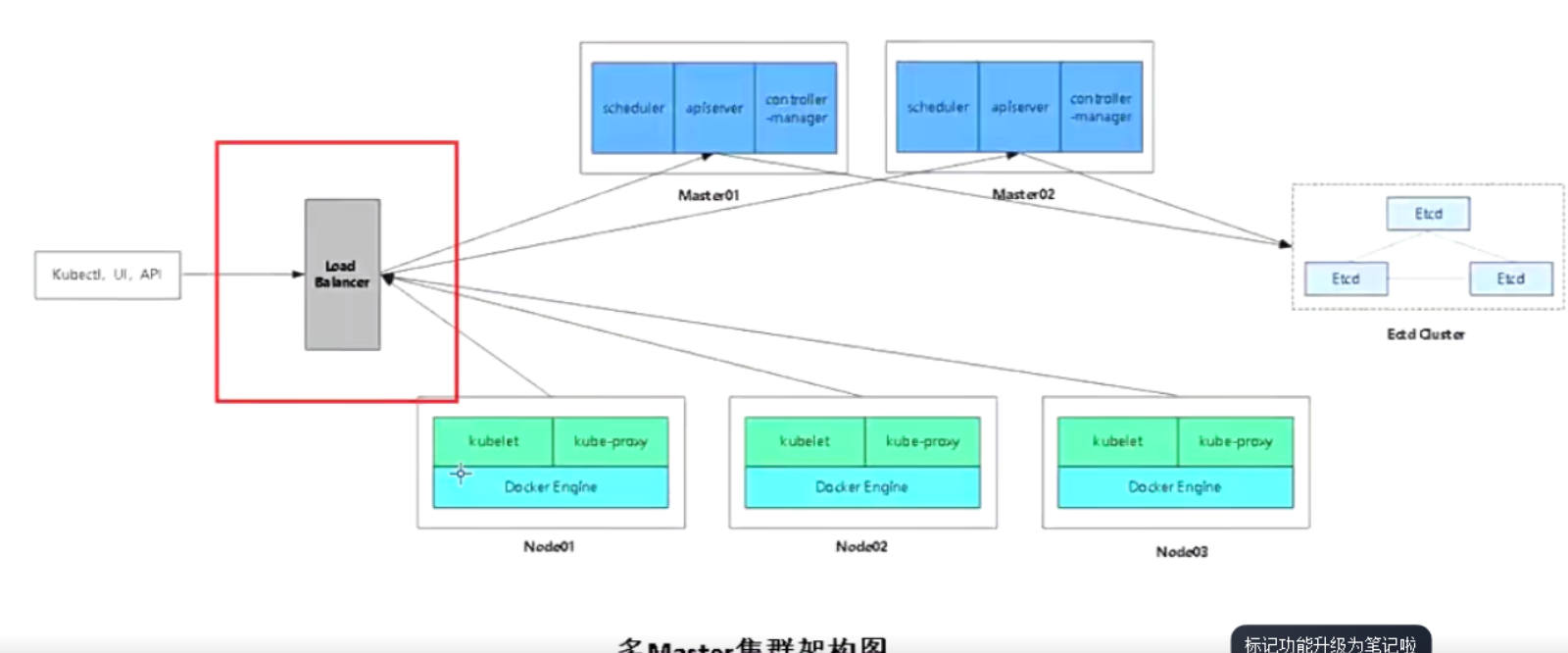

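Adding k8smaster02

Right now all nodes connect to a single apiserver. For Kubernetes high availability, the part that matters is the apiserver. The scheduler and controller-manager elect a leader among themselves, so they need no extra HA setup. We put a pair of load balancers in front of the apiservers, and the nodes and external clients all connect to the load balancer. The pair runs active/standby with a VIP that fails over.

Next, set up the Kubernetes master02 node by copying master01's configuration over in full. master02 is host .34.

Copy the master files: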

[root@mcwk8s03 ~]# scp -rp /opt/kubernetes/ 10.0.0.34:/opt/ The authenticity of host '10.0.0.34 (10.0.0.34)' can't be established. ECDSA key fingerprint is SHA256:Fy/pzLLM8KXlQWRf+FW6cYBWS6OA5cq0YX5DCuEXwKs. ECDSA key fingerprint is MD5:30:aa:2e:65:9a:a4:43:aa:b5:cc:3c:f9:0e:8d:44:8c. Are you sure you want to continue connecting (yes/no)? yes Warning: Permanently added '10.0.0.34' (ECDSA) to the list of known hosts. root@10.0.0.34's password: flanneld 100% 223 7.0KB/s 00:00 kube-apiserver 100% 909 486.7KB/s 00:00 kube-scheduler 100% 94 154.9KB/s 00:00 token.csv 100% 84 201.5KB/s 00:00 kube-controller-manager 100% 483 1.0MB/s 00:00 flanneld 100% 35MB 69.3MB/s 00:00 mk-docker-opts.sh 100% 2139 903.0KB/s 00:00 kube-apiserver 100% 157MB 31.4MB/s 00:05 kube-controller-manager 100% 111MB 13.1MB/s 00:08 kube-scheduler 100% 37MB 19.5MB/s 00:01 kubectl 100% 41MB 28.6MB/s 00:01 ca-key.pem 100% 1675 2.0MB/s 00:00 ca.pem 100% 1359 1.8MB/s 00:00 server-key.pem 100% 1675 1.5MB/s 00:00 server.pem 100% 1643 902.3KB/s 00:00 [root@mcwk8s03 ~]# ls /opt/ containerd etcd kubernetes [root@mcwk8s03 ~]# ls /opt/kubernetes/ bin cfg ssl [root@mcwk8s03 ~]# tree /opt/kubernetes/ /opt/kubernetes/ ├── bin │ ├── flanneld │ ├── kube-apiserver │ ├── kube-controller-manager │ ├── kubectl │ ├── kube-scheduler │ └── mk-docker-opts.sh ├── cfg │ ├── flanneld │ ├── kube-apiserver │ ├── kube-controller-manager │ ├── kube-scheduler │ └── token.csv └── ssl ├── ca-key.pem ├── ca.pem ├── server-key.pem └── server.pem 3 directories, 15 files [root@mcwk8s03 ~]#

[root@mcwk8s03 ~]# scp -rp /usr/lib/systemd/system/{kube-apiserver,kube-controller-manager,kube-scheduler}.service 10.0.0.34:/usr/lib/systemd/system/

root@10.0.0.34's password:

kube-apiserver.service 100% 282 3.5KB/s 00:00

kube-controller-manager.service 100% 317 214.0KB/s 00:00

kube-scheduler.service 100% 281 289.0KB/s 00:00

[root@mcwk8s03 ~]#

The apiserver communicates with etcd and needs the etcd certificates, so it is fine to copy the whole etcd directory over too.

[root@mcwk8s03 ~]# ls /opt/ containerd etcd kubernetes [root@mcwk8s03 ~]# scp -rp /opt/etcd/ 10.0.0.34:/opt/ root@10.0.0.34's password: Permission denied, please try again. root@10.0.0.34's password: etcd 100% 481 320.6KB/s 00:00 etcd 100% 18MB 29.5MB/s 00:00 etcdctl 100% 15MB 12.6MB/s 00:01 ca-key.pem 100% 1675 64.2KB/s 00:00 ca.pem 100% 1265 547.9KB/s 00:00 server-key.pem 100% 1679 2.9MB/s 00:00 server.pem 100% 1338 1.3MB/s 00:00 [root@mcwk8s03 ~]#

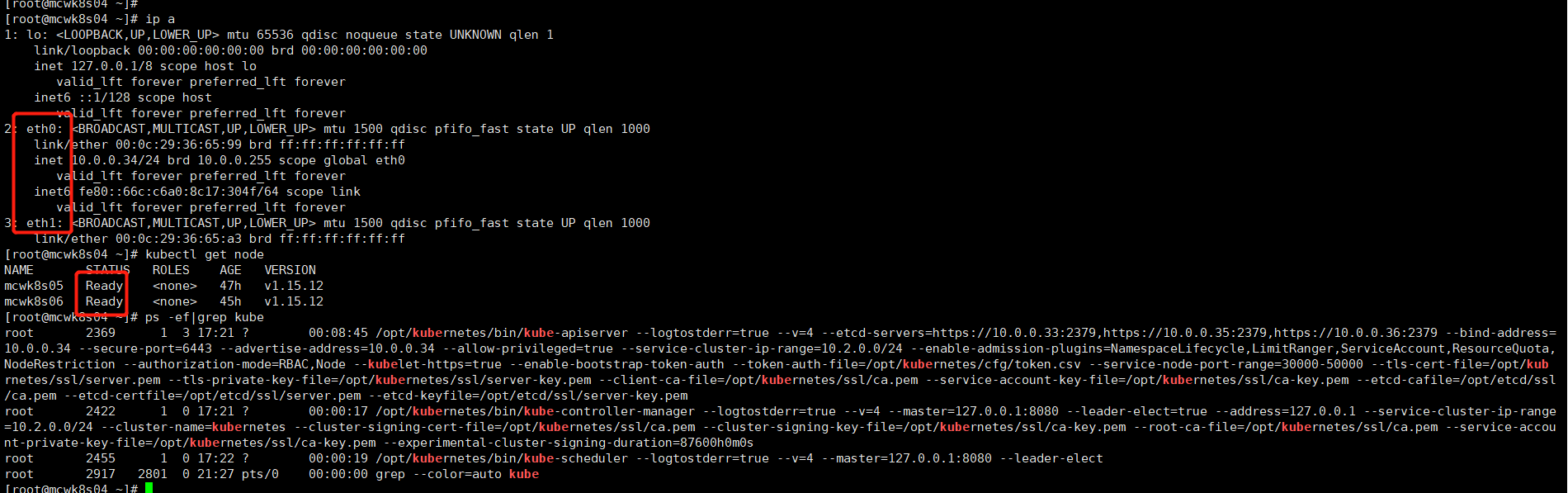

On master2, only the IP needs to be changed:

[root@mcwk8s04 ~]# cd /opt/kubernetes/ [root@mcwk8s04 kubernetes]# cd cfg/ [root@mcwk8s04 cfg]# ls flanneld kube-apiserver kube-controller-manager kube-scheduler token.csv [root@mcwk8s04 cfg]# vim kube-apiserver -bash: vim: command not found [root@mcwk8s04 cfg]# vi kube-apiserver [root@mcwk8s04 cfg]# grep .34 kube-apiserver --bind-address=10.0.0.34 \ --advertise-address=10.0.0.34 \ [root@mcwk8s04 cfg]#

Start the three components and run get node. The result is normal, so master2 was added successfully.

[root@mcwk8s04 cfg]# ls flanneld kube-apiserver kube-controller-manager kube-scheduler token.csv [root@mcwk8s04 cfg]# systemctl daemon-reload [root@mcwk8s04 cfg]# systemctl start kube-apiserver.service [root@mcwk8s04 cfg]# systemctl start kube-controller-manager.service [root@mcwk8s04 cfg]# systemctl start kube-scheduler.service [root@mcwk8s04 cfg]# ps -ef|grep kube root 2369 1 54 21:32 ? 00:00:14 /opt/kubernetes/bin/kube-apiserver --logtostderr=true --v=4 --etcd-servers=https://10.0.0.33:2379,https://10.0.0.35:2379,https://10.0.0.36:2379 --bind-address=10.0.0.34 --secure-port=6443 --advertise-address=10.0.0.34 --allow-privileged=true --service-cluster-ip-range=10.2.0.0/24 --enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction --authorization-mode=RBAC,Node --kubelet-https=true --enable-bootstrap-token-auth --token-auth-file=/opt/kubernetes/cfg/token.csv --service-node-port-range=30000-50000 --tls-cert-file=/opt/kubrnetes/ssl/server.pem --tls-private-key-file=/opt/kubernetes/ssl/server-key.pem --client-ca-file=/opt/kubernetes/ssl/ca.pem --service-account-key-file=/opt/kubernetes/ssl/ca-key.pem --etcd-cafile=/opt/etcd/ssl/ca.pem --etcd-certfile=/opt/etcd/ssl/server.pem --etcd-keyfile=/opt/etcd/ssl/server-key.pem root 2422 1 5 21:32 ? 00:00:00 /opt/kubernetes/bin/kube-controller-manager --logtostderr=true --v=4 --master=127.0.0.1:8080 --leader-elect=true --address=127.0.0.1 --service-cluster-ip-range=10.2.0.0/24 --cluster-name=kubernetes --cluster-signing-cert-file=/opt/kubernetes/ssl/ca.pem --cluster-signing-key-file=/opt/kubernetes/ssl/ca-key.pem --root-ca-file=/opt/kubernetes/ssl/ca.pem --service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem --experimental-cluster-signing-duration=87600h0m0s root 2455 1 15 21:32 ? 
00:00:01 /opt/kubernetes/bin/kube-scheduler --logtostderr=true --v=4 --master=127.0.0.1:8080 --leader-elect root 2469 1734 0 21:32 pts/1 00:00:00 grep --color=auto kube [root@mcwk8s04 cfg]# /opt/kubernetes/bin/kubectl get node NAME STATUS ROLES AGE VERSION mcwk8s05 Ready <none> 23h v1.15.12 mcwk8s06 Ready <none> 21h v1.15.12 [root@mcwk8s04 cfg]#

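master2 is not running docker or the pod network (flannel) process, but it can still query cluster information.

Deploying the load balancer

Next, deploy the load balancer and point the nodes at it. It distributes requests across the apiservers behind it. A layer-4 load balancer is used here.

The load balancer deployment plan is as follows: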

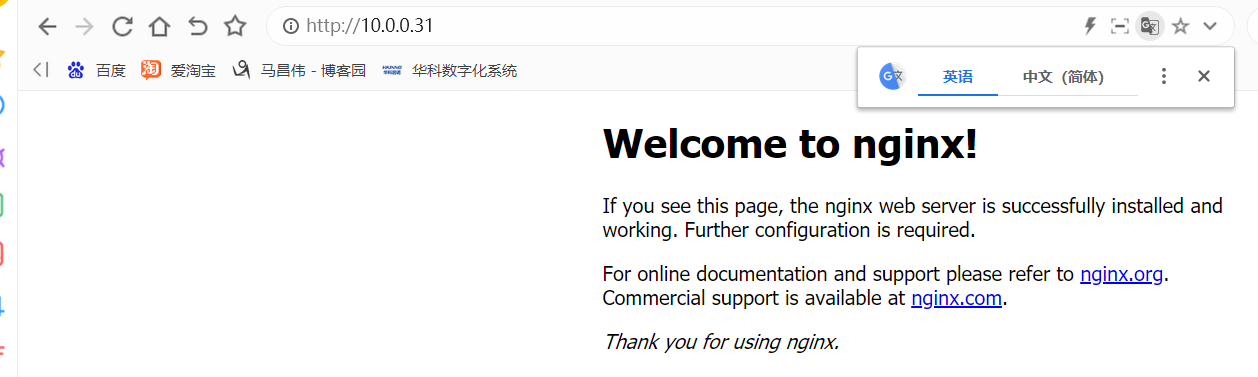

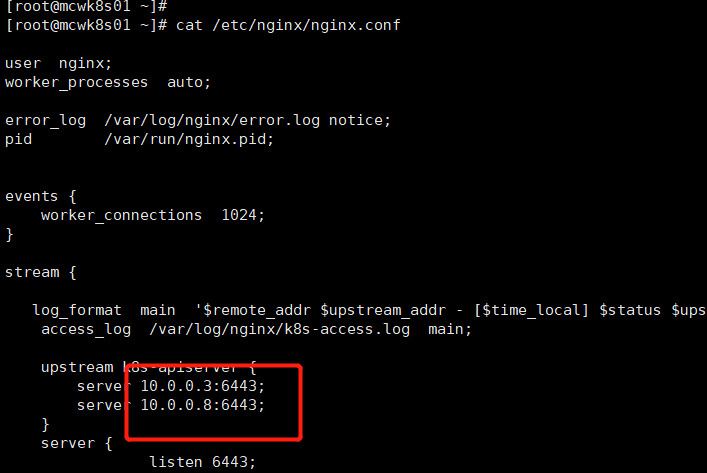

Deploy nginx

Below are the two deployment scripts: one for nginx and one for keepalived.

[root@mcwk8s01 ~]# ls
anaconda-ks.cfg  keepalived.conf  nginx.sh
[root@mcwk8s01 ~]# cat keepalived.conf
! Configuration File for keepalived

global_defs {
   # recipient email addresses
   notification_email {
     acassen@firewall.loc
     failover@firewall.loc
     sysadmin@firewall.loc
   }
   # sender email address
   notification_email_from Alexandre.Cassen@firewall.loc
   smtp_server 127.0.0.1
   smtp_connect_timeout 30
   router_id NGINX_MASTER
}

vrrp_script check_nginx {
    script "/usr/local/nginx/sbin/check_nginx.sh"
}

vrrp_instance VI_1 {
    state MASTER
    interface eth0
    virtual_router_id 51 # VRRP router ID; must be unique per instance
    priority 100    # priority; set 90 on the backup server
    advert_int 1    # VRRP advertisement (heartbeat) interval, default 1 second
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        10.0.0.188/24
    }
    track_script {
        check_nginx
    }
}

mkdir /usr/local/nginx/sbin/ -p
vim /usr/local/nginx/sbin/check_nginx.sh
count=$(ps -ef |grep nginx |egrep -cv "grep|$$")
if [ "$count" -eq 0 ];then
    /etc/init.d/keepalived stop
fi
chmod +x /usr/local/nginx/sbin/check_nginx.sh
[root@mcwk8s01 ~]#
[root@mcwk8s01 ~]# cat nginx.sh
cat > /etc/yum.repos.d/nginx.repo << EOF
[nginx]
name=nginx repo
baseurl=http://nginx.org/packages/centos/7/$basearch/
gpgcheck=0
EOF

stream {
    log_format main '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';
    access_log /var/log/nginx/k8s-access.log main;

    upstream k8s-apiserver {
        server 10.0.0.3:6443;
        server 10.0.0.8:6443;
    }
    server {
        listen 6443;
        proxy_pass k8s-apiserver;
    }
}
[root@mcwk8s01 ~]#

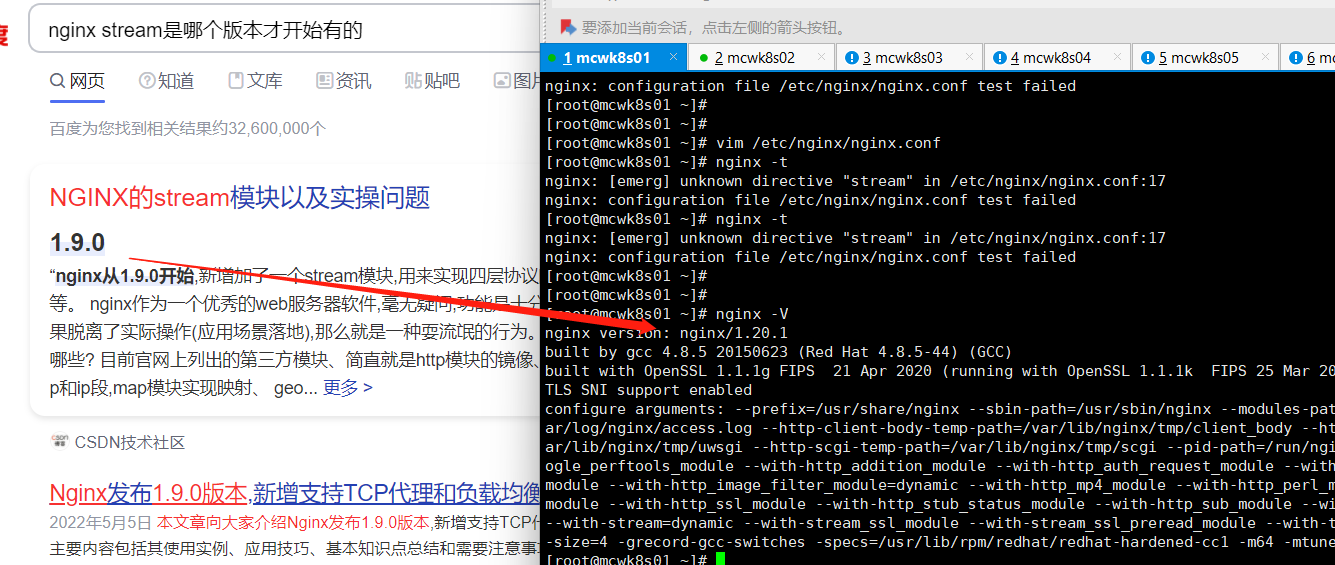

Our VMs use the Aliyun mirror. Installing nginx with yum from it did not work because the version is too old (details below).

[root@mcwk8s02 ~]# yum install -y nginx

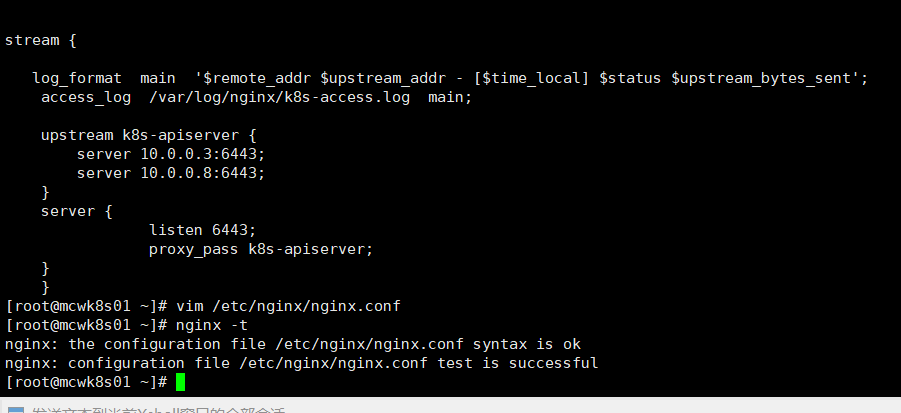

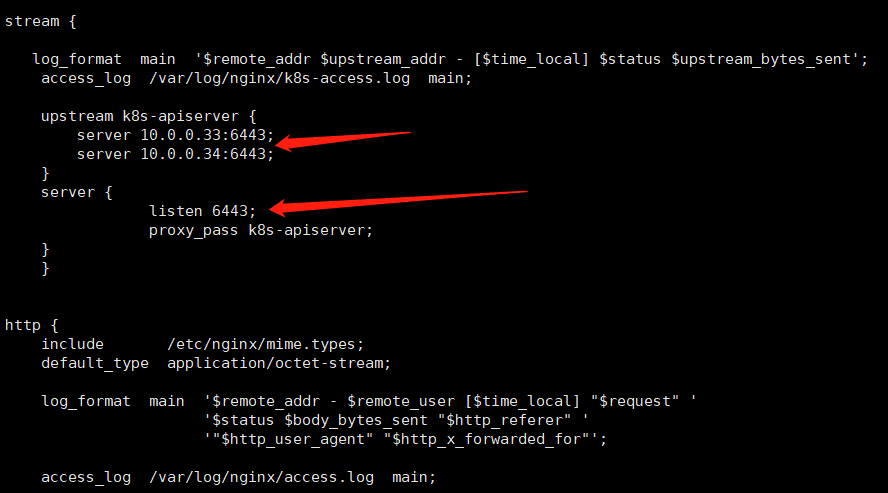

Next, edit the nginx configuration on the primary node.

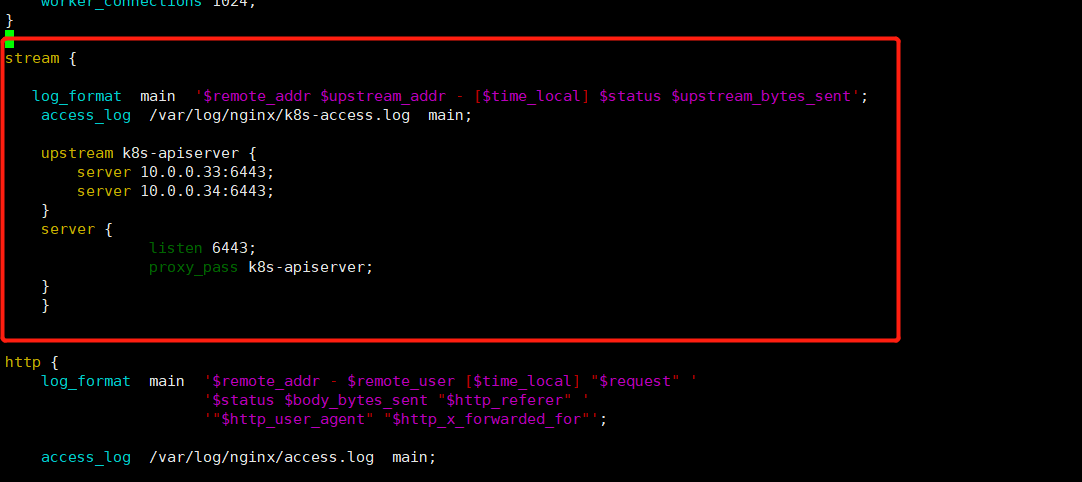

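We use nginx's layer-4 load balancing. The http module is for layer-7 configuration, so we add a stream block above the http block.

For layer 4, we configure the log format, the log path, the upstream and the server. Layer 4 needs no certificates. A layer-7 setup would need certificates configured for the forwarding.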
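The stream block from nginx.sh can be generated from a list of master IPs, so the upstream cannot silently keep stale addresses (which later caused the 502 errors). This is a sketch with a hypothetical function name, gen_apiserver_stream. The log format, log path and port 6443 are taken from nginx.sh. The quoted 'EOF' heredocs keep nginx variables such as $remote_addr literal.

```shell
#!/usr/bin/env bash
# Print an nginx "stream" block that balances TCP port 6443 across the
# given apiserver addresses (layer 4: TLS passes through untouched).
gen_apiserver_stream() {
  local ip
  cat <<'EOF'
stream {
    log_format main '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';
    access_log /var/log/nginx/k8s-access.log main;

    upstream k8s-apiserver {
EOF
  for ip in "$@"; do
    printf '        server %s:6443;\n' "$ip"
  done
  cat <<'EOF'
    }

    server {
        listen 6443;
        proxy_pass k8s-apiserver;
    }
}
EOF
}
```

`gen_apiserver_stream 10.0.0.33 10.0.0.34` prints the block to paste above the http { } block in /etc/nginx/nginx.conf. Check the result with `nginx -t` and apply it with `nginx -s reload`.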

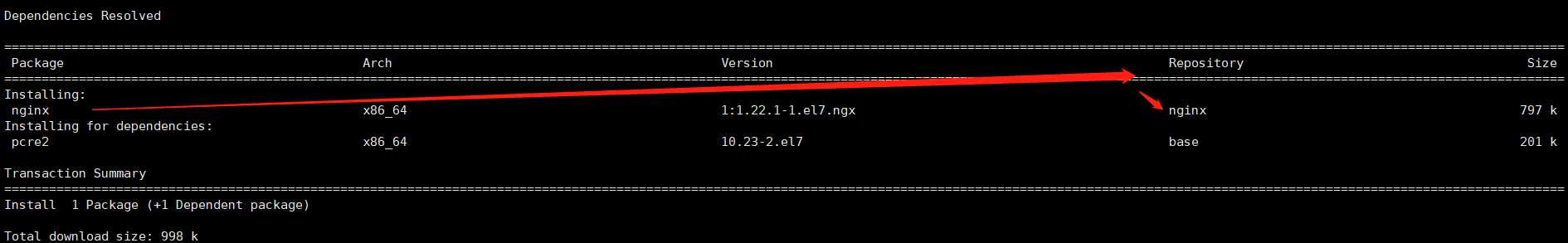

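The version installed from Aliyun did not work, so uninstall it, configure the official nginx repo and reinstall. Judging by the version number it looked new enough, so the exact cause is unclear.

Configure the official repo. When the file is written with cat and an unquoted heredoc, the shell treats $basearch as a variable and it expands to nothing, so it must be escaped.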

[root@mcwk8s01 ~]# cat > /etc/yum.repos.d/nginx.repo << EOF > [nginx] > name=nginx repo > baseurl=http://nginx.org/packages/centos/7/$basearch/ > gpgcheck=0 > EOF [root@mcwk8s01 ~]# cat /etc/yum.repos.d/nginx.repo [nginx] name=nginx repo baseurl=http://nginx.org/packages/centos/7// gpgcheck=0 [root@mcwk8s01 ~]# vim /etc/yum.repos.d/nginx.repo [root@mcwk8s01 ~]# cat /etc/yum.repos.d/nginx.repo [nginx] name=nginx repo baseurl=http://nginx.org/packages/centos/7/$basearch/ gpgcheck=0 [root@mcwk8s01 ~]#

After escaping, the file content is generated correctly:

[root@mcwk8s02 ~]# cat > /etc/yum.repos.d/nginx.repo << EOF > [nginx] > name=nginx repo > baseurl=http://nginx.org/packages/centos/7/\$basearch/ > gpgcheck=0 > EOF [root@mcwk8s02 ~]# cat /etc/yum.repos.d/nginx.repo [nginx] name=nginx repo baseurl=http://nginx.org/packages/centos/7/$basearch/ gpgcheck=0 [root@mcwk8s02 ~]#
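Here are the three heredoc forms side by side, as a small demonstration with made-up function names. An unquoted delimiter expands $basearch, which is unset in the shell, to an empty string. Escaping it as \$basearch, or quoting the delimiter as 'EOF', keeps it literal so that yum can substitute it later.

```shell
#!/usr/bin/env bash
# basearch is a yum variable, not a shell variable, so it is unset here.
unset basearch

# Unquoted delimiter: $basearch is expanded (to nothing).
repo_unquoted() {
  cat <<EOF
baseurl=http://nginx.org/packages/centos/7/$basearch/
EOF
}

# Unquoted delimiter, but the dollar sign is escaped.
repo_escaped() {
  cat <<EOF
baseurl=http://nginx.org/packages/centos/7/\$basearch/
EOF
}

# Quoted delimiter: no expansion happens anywhere in the body.
repo_quoted() {
  cat <<'EOF'
baseurl=http://nginx.org/packages/centos/7/$basearch/
EOF
}
```

Quoting the delimiter is the simpler choice when the body contains no shell variables that should expand.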

As shown, it was installed from the official repo.

As shown, the syntax check passes.

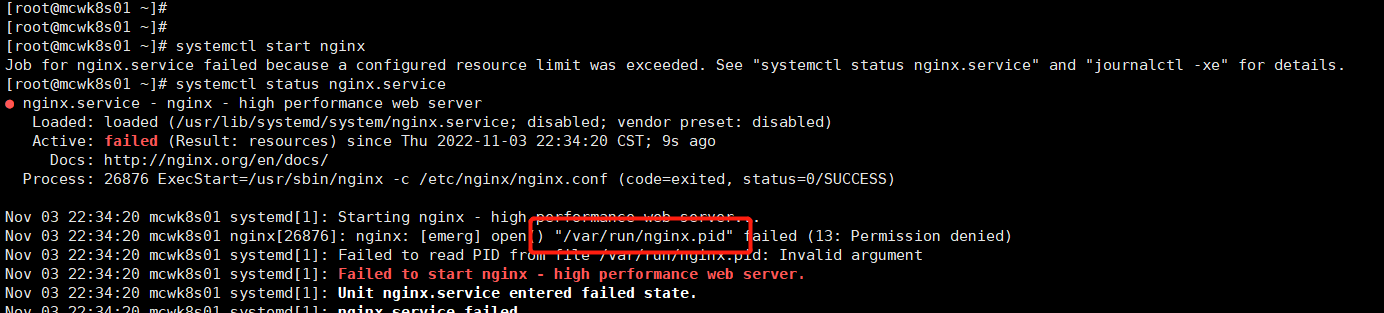

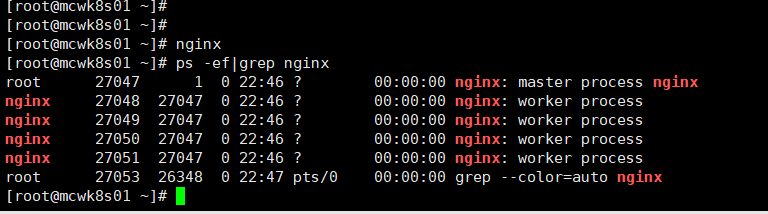

不能用systemd来启动,别人能成功,我的失败了,直接nginx启动

改好配置直接启动,没有start参数

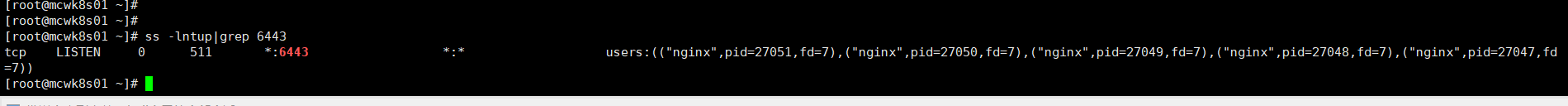

查看四层代理端口已经开启了

同步一下主节点nginx配置到备节点,启动备节点nginx

[root@mcwk8s01 ~]# scp -rp /etc/nginx/nginx.conf 10.0.0.32:/etc/nginx/

root@10.0.0.32's password:

nginx.conf 100% 1001 254.3KB/s 00:00

[root@mcwk8s01 ~]#

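Next, the two nodes must be switched to use the nginx layer-4 proxy address.

Deploy keepalived for high availability

Install it on both the primary and the backup nginx node.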

[root@mcwk8s01 ~]# yum install -y keepalived

Back up the existing config on both nodes, then use our own config instead.

[root@mcwk8s01 ~]# ls /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf [root@mcwk8s01 ~]# cp keepalived.conf /etc/keepalived/ cp: overwrite ‘/etc/keepalived/keepalived.conf’? y [root@mcwk8s01 ~]#

First, test access to the primary (master) node.

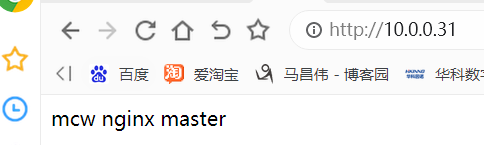

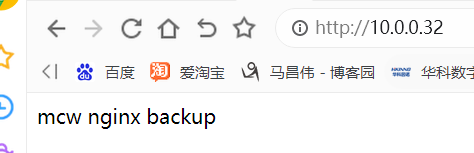

Mark the primary and backup nodes so they can be told apart:

echo "mcw nginx master" >/usr/share/nginx/html/index.html
echo "mcw nginx backup" >/usr/share/nginx/html/index.html

Primary node

Backup node

Change the script location and the VIP:

[root@mcwk8s01 ~]# cat /etc/nginx/check_nginx.sh count=$(ps -ef |grep nginx |egrep -cv "grep|$$") if [ "$count" -eq 0 ];then /etc/init.d/keepalived stop fi [root@mcwk8s01 ~]# chmod +x /etc/nginx/check_nginx.sh [root@mcwk8s01 ~]# vim /etc/nginx/check_nginx.sh [root@mcwk8s01 ~]# vim /etc/keepalived/keepalived.conf [root@mcwk8s01 ~]# egrep "check_nginx|.30" /etc/keepalived/keepalived.conf smtp_connect_timeout 30 vrrp_script check_nginx { script "/etc/nginx/check_nginx.sh" 10.0.0.30/24 check_nginx [root@mcwk8s01 ~]#

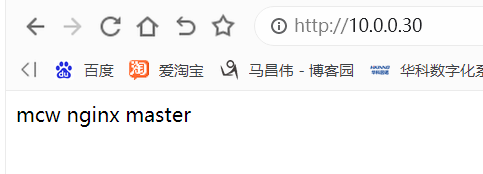

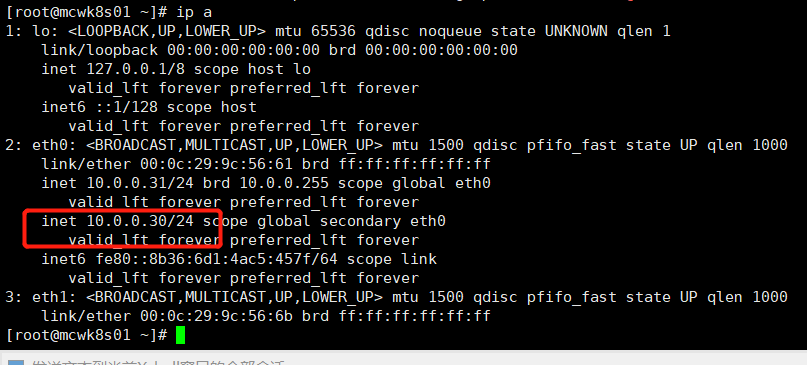

Start keepalived on the primary node and check the VIP:

[root@mcwk8s01 ~]# systemctl start keepalived.service [root@mcwk8s01 ~]# ip a 1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1 link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00 inet 127.0.0.1/8 scope host lo valid_lft forever preferred_lft forever inet6 ::1/128 scope host valid_lft forever preferred_lft forever 2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000 link/ether 00:0c:29:9c:56:61 brd ff:ff:ff:ff:ff:ff inet 10.0.0.31/24 brd 10.0.0.255 scope global eth0 valid_lft forever preferred_lft forever inet 10.0.0.30/24 scope global secondary eth0 valid_lft forever preferred_lft forever inet6 fe80::8b36:6d1:4ac5:457f/64 scope link valid_lft forever preferred_lft forever 3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000 link/ether 00:0c:29:9c:56:6b brd ff:ff:ff:ff:ff:ff [root@mcwk8s01 ~]#

The primary node is reachable through the VIP.

Copy the keepalived config and the check script to the backup:

[root@mcwk8s01 ~]# scp -rp /etc/keepalived/keepalived.conf 10.0.0.32:/etc/keepalived/ root@10.0.0.32's password: keepalived.conf 100% 919 707.1KB/s 00:00 [root@mcwk8s01 ~]#

[root@mcwk8s01 ~]# scp -rp /etc/nginx/check_nginx.sh 10.0.0.32:/etc/nginx/ root@10.0.0.32's password: check_nginx.sh 100% 113 104.6KB/s 00:00 [root@mcwk8s01 ~]#

Change it to a backup node and lower the priority by 10 (to 90):

[root@mcwk8s02 ~]# egrep "state|priority" /etc/keepalived/keepalived.conf
    state BACKUP
    priority 90    # priority; set 90 on the backup server
[root@mcwk8s02 ~]#

Then start the service:

[root@mcwk8s02 ~]# systemctl start keepalived.service

[root@mcwk8s02 ~]#

On this system keepalived is stopped with a different command, so the script has to be changed:

[root@mcwk8s01 ~]# vim /etc/nginx/check_nginx.sh

[root@mcwk8s01 ~]# cat /etc/nginx/check_nginx.sh

count=$(ps -ef |grep nginx |egrep -cv "grep|$$")

if [ "${count}" -eq 0 ];then

systemctl stop keepalived.service

fi

[root@mcwk8s01 ~]#

[root@mcwk8s01 ~]# scp -rp /etc/nginx/check_nginx.sh 10.0.0.32:/etc/nginx/ root@10.0.0.32's password: check_nginx.sh 100% 116 186.4KB/s 00:00 [root@mcwk8s01 ~]#

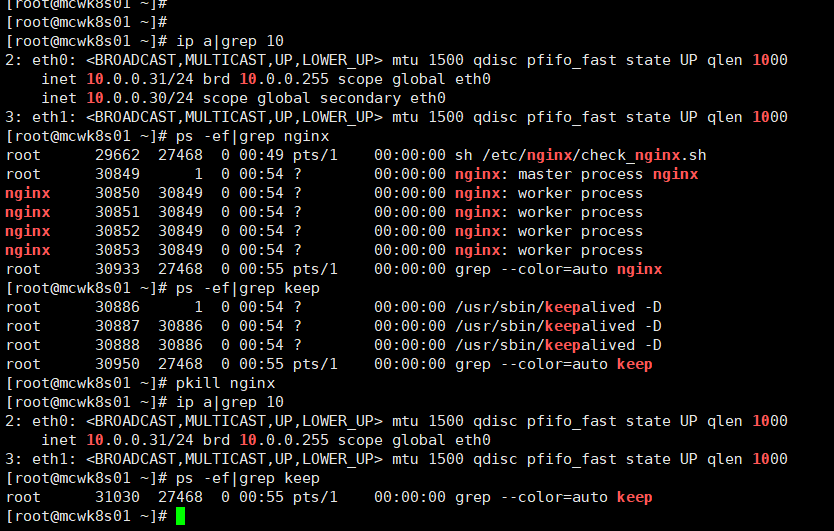

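Next, kill nginx on the primary node. The VIP should then move to the backup node automatically. With this configuration it did not, and the log shows why: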

WARNING - default user 'keepalived_script' for script execution does not exist - please create.

Nov 4 00:38:22 mcwk8s01 Keepalived_vrrp[29436]: Unable to access script `/etc/nginx/check_nginx.sh`

Nov 4 00:38:22 mcwk8s01 Keepalived_vrrp[29436]: Disabling track script check_nginx since not found

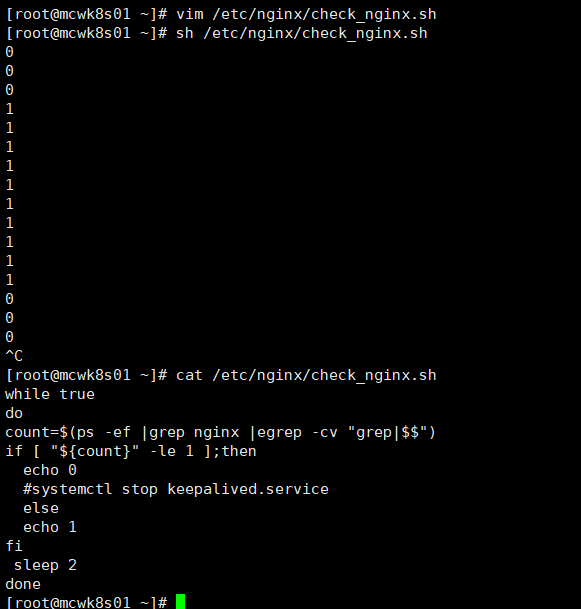

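Another way to make keepalived fail over: run the check script in the background instead of from the config file.

The config-file approach did not work for us, so we use the other one.

The following prints 1 while nginx is running and 0 when the nginx process count drops to 0: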

while true
do
    count=$(ps -ef |grep nginx |egrep -cv "grep|$$")
    if [ "${count}" -le 1 ];then
        echo 0
        #systemctl stop keepalived.service
    else
        echo 1
    fi
    sleep 2
done

Change it so that keepalived is stopped when the nginx process count drops to 0. This is the working script; the check interval is 2 seconds.

while true
do
    count=$(ps -ef |grep nginx |egrep -cv "grep|$$")
    if [ "${count}" -le 1 ];then
        systemctl stop keepalived.service
    fi
    sleep 2
done
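Here is the same loop with the check and the stop action passed in, so it can be tested without touching nginx or keepalived. This is a sketch, not the author's script. proc_running reads /proc/*/comm (Linux only) and compares names exactly, instead of parsing ps output. The watchdog's own process therefore never matches, and no grep -v or -le 1 adjustment is needed. watchdog, nginx_alive and stop_keepalived are hypothetical names.

```shell
#!/usr/bin/env bash
# Return 0 if any process has exactly this command name (Linux /proc).
proc_running() {
  local name=$1 f comm
  for f in /proc/[0-9]*/comm; do
    { read -r comm < "$f"; } 2>/dev/null || continue
    [ "$comm" = "$name" ] && return 0
  done
  return 1
}

nginx_alive() { proc_running nginx; }
stop_keepalived() { systemctl stop keepalived.service; }

# Run CHECK every INTERVAL seconds and run STOP whenever CHECK fails.
# MAX_LOOPS=0 loops forever, like the original while-true script.
watchdog() {
  local check=$1 stop=$2 interval=${3:-2} max_loops=${4:-0} n=0
  while :; do
    if ! "$check"; then
      "$stop"
    fi
    n=$((n + 1))
    if [ "$max_loops" -gt 0 ] && [ "$n" -ge "$max_loops" ]; then
      break
    fi
    sleep "$interval"
  done
}

# Equivalent to the script above, run in the background:
#   watchdog nginx_alive stop_keepalived 2 &
```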

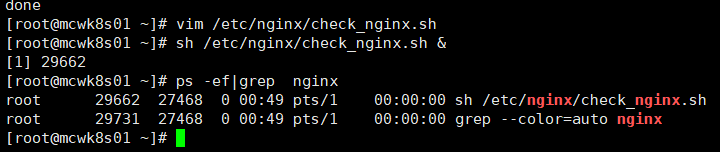

Run the script in the background.

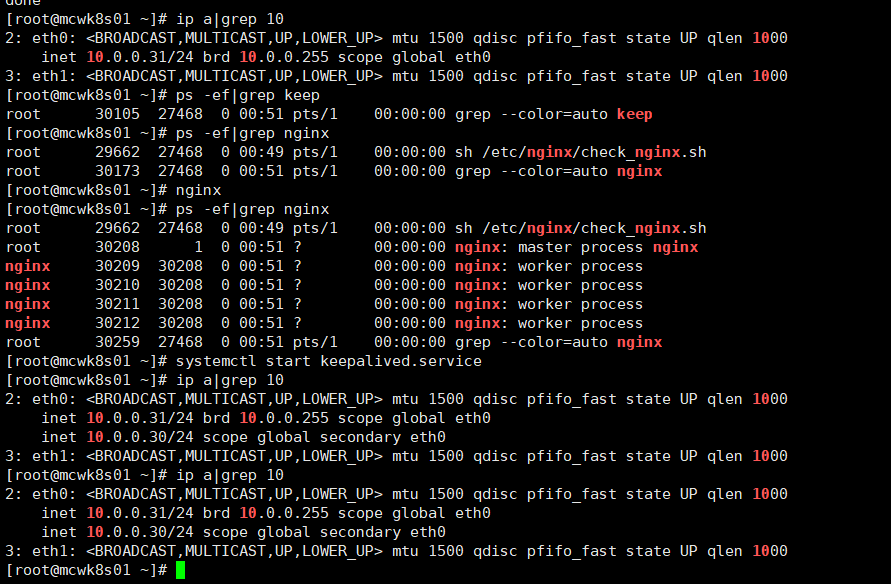

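Result: when nginx and then keepalived are started again on the primary, the VIP moves back to the primary.

As shown, when the nginx process count drops to 0, the VIP moves to the backup node automatically. VIP failover works.

Add the process to startup. On CentOS 7, /etc/rc.d/rc.local must also be made executable (chmod +x) for it to run at boot.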

echo 'sh /etc/nginx/check_nginx.sh &' >>/etc/rc.local

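The configuration verified to work:

When the primary has no nginx process, keepalived is stopped and the VIP moves to the backup. When nginx comes back up on the primary and keepalived is started again, the VIP moves back to the primary automatically. The following has been verified to work.

Primary node: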

[root@mcwk8s01 ~]# cat /etc/keepalived/keepalived.conf
! Configuration File for keepalived

global_defs {
   script_user root
   enable_script_security
   notification_email {
     acassen@firewall.loc
     failover@firewall.loc
     sysadmin@firewall.loc
   }
   notification_email_from Alexandre.Cassen@firewall.loc
   smtp_server 127.0.0.1
   smtp_connect_timeout 30
   router_id NGINX_MASTER
}

vrrp_script check_nginx {
    script /etc/nginx/check_nginx.sh
}

vrrp_instance VI_1 {
    state MASTER
    interface eth0
    virtual_router_id 51
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        10.0.0.30/24
    }
    track_script {
        check_nginx
    }
}
[root@mcwk8s01 ~]#

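The background process runs on the primary only. The backup does not run it; running it on the backup as well seemed to prevent the VIP from failing over.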

[root@mcwk8s01 ~]# cat /etc/nginx/check_nginx.sh
while true
do
    count=$(ps -ef |grep nginx |egrep -cv "grep|$$")
    if [ "${count}" -le 1 ];then
        systemctl stop keepalived.service
    fi
    sleep 2
done
[root@mcwk8s01 ~]# ps -ef|grep check_nginx
root 40820 33038 0 22:27 pts/0 00:00:00 sh /etc/nginx/check_nginx.sh
root 42110 33038 0 22:32 pts/0 00:00:00 grep --color=auto check_nginx
[root@mcwk8s01 ~]#

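Backup node configuration. The script referenced in the config file on either node does not actually do the work here. Failover relies only on the background script running on the primary. The backup's configured script also must not be the same as the primary's background script, which loops forever with a 2-second sleep. When the backup ran that same script, the VIP could not fail over, and the system log showed that VRRP had terminated the script: mcwk8s02 Keepalived_vrrp[65303]: /etc/nginx/check_nginx.sh exited due to signal 15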

[root@mcwk8s02 ~]# cat /etc/nginx/check_nginx.sh
count=$(ps -ef |grep nginx |egrep -cv "grep|$$")
if [ "$count" -eq 0 ];then
    systemctl stop keepalived.service
fi
[root@mcwk8s02 ~]# cat /etc/keepalived/keepalived.conf
! Configuration File for keepalived

global_defs {
   # recipient email addresses
   script_user root
   enable_script_security
   notification_email {
     acassen@firewall.loc
     failover@firewall.loc
     sysadmin@firewall.loc
   }
   # sender email address
   notification_email_from Alexandre.Cassen@firewall.loc
   smtp_server 127.0.0.1
   smtp_connect_timeout 30
   router_id NGINX_MASTER
}

vrrp_script check_nginx {
    script "/etc/nginx/check_nginx.sh"
}

vrrp_instance VI_1 {
    state BACKUP
    interface eth0
    virtual_router_id 51 # VRRP router ID; must be unique per instance
    priority 90    # priority; set 90 on the backup server
    advert_int 1    # VRRP advertisement (heartbeat) interval, default 1 second
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        10.0.0.30/24
    }
    track_script {
        check_nginx
    }
}
[root@mcwk8s02 ~]#
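Here is an alternative to what the author ended up using. keepalived's vrrp_script is designed to run a short check repeatedly and act on its exit status. The check should therefore exit non-zero when nginx is down, rather than stopping keepalived or looping forever. A never-ending loop is most likely why the script was killed with signal 15 above. Below is a sketch of such a check with the matching config fragment in comments. The interval and weight values are assumptions, and check_nginx and NGINX_PROC_NAME are hypothetical names.

```shell
#!/usr/bin/env bash
# Exit-status variant of check_nginx.sh for keepalived's vrrp_script:
# succeed while an nginx process exists, fail otherwise. It neither loops
# nor stops keepalived; keepalived acts on the exit code.
#
# Matching keepalived.conf fragment (values are assumptions):
#   vrrp_script check_nginx {
#       script "/etc/nginx/check_nginx.sh"
#       interval 2    # run the check every 2 seconds
#       weight -20    # on failure: priority 100 -> 80, below the backup's 90
#   }

# Return 0 if any process has exactly this command name (Linux /proc).
proc_running() {
  local name=$1 f comm
  for f in /proc/[0-9]*/comm; do
    { read -r comm < "$f"; } 2>/dev/null || continue
    [ "$comm" = "$name" ] && return 0
  done
  return 1
}

check_nginx() {
  proc_running "${NGINX_PROC_NAME:-nginx}"
}

# As the last line of the real script file:
#   check_nginx; exit $?
```

When the check succeeds again, keepalived restores the original priority. With default preemption, the VIP then moves back to the primary without keepalived being restarted by hand.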

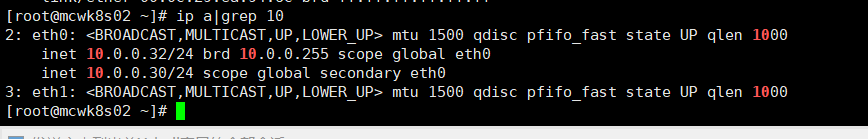

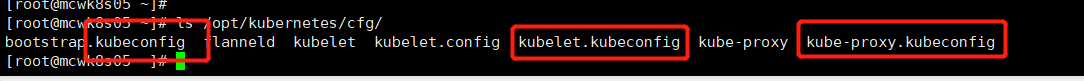

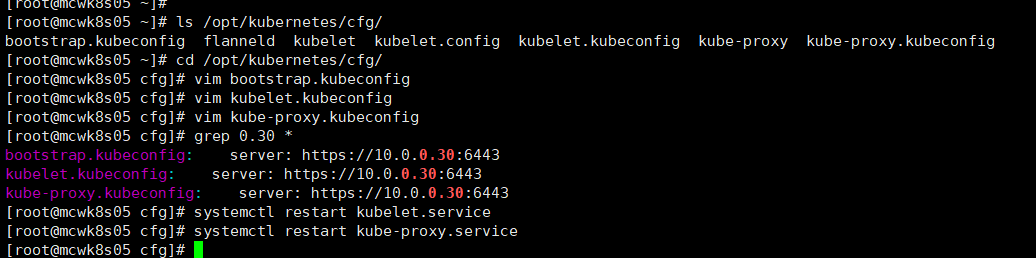

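Change the apiserver address used by the nodes to the load balancer VIP.

The server address in three kubeconfig files needs to change: bootstrap.kubeconfig, kubelet.kubeconfig and kube-proxy.kubeconfig.

After switching to the VIP, restart the services. master2 could list nodes right after it was deployed because it reads the data from etcd; that has nothing to do with this change. The protocol stays the same, still https.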
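The server address in the three node kubeconfigs can be changed with one sed per file. This sketch assumes GNU sed (-i, -E) and the file names in /opt/kubernetes/cfg shown earlier. use_vip is a hypothetical name, and 10.0.0.30 is the VIP configured in keepalived above. Only the server: line changes, so the embedded certificates and the https scheme stay as they are.

```shell
#!/usr/bin/env bash
# Point the node kubeconfigs at the load balancer VIP instead of a single
# apiserver. Only the "server:" line is rewritten.
use_vip() {
  local cfg_dir=$1 vip=$2 port=${3:-6443} f
  for f in bootstrap.kubeconfig kubelet.kubeconfig kube-proxy.kubeconfig; do
    [ -f "$cfg_dir/$f" ] || continue
    sed -i -E "s#^([[:space:]]*server:[[:space:]]*)https://[^[:space:]]+#\1https://${vip}:${port}#" "$cfg_dir/$f"
  done
}

# Usage on each node, then restart the services:
#   use_vip /opt/kubernetes/cfg 10.0.0.30
#   systemctl restart kubelet kube-proxy
```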

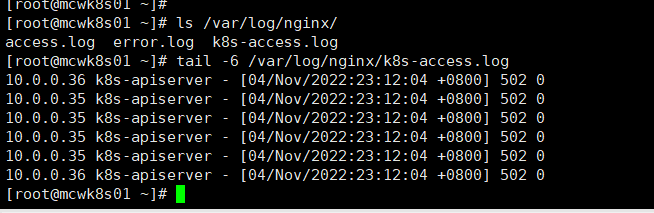

At this point, the k8s access log on the nginx load balancer shows 502 errors.

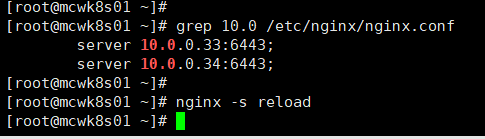

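The nginx config shows the IPs were never changed: the backend IPs it forwards to are not those of the two master nodes.

Fix the IPs and reload, then do the same on the backup nginx node.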

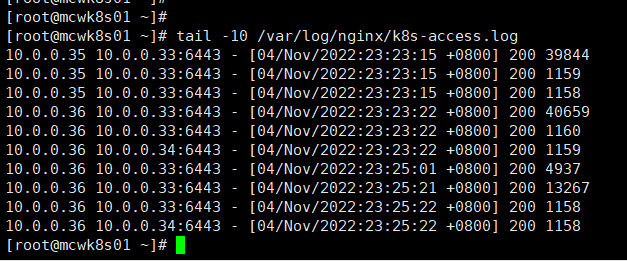

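As shown, when nodes 35 and 36 connect to port 6443 on the nginx load balancer, their requests are forwarded to the apiservers on 33 and 34, i.e. the two master nodes.

With the nodes now pointing at the load balancer VIP, verify the cluster state.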

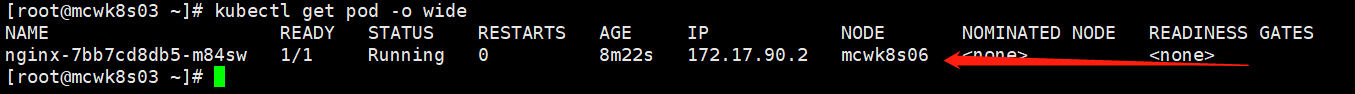

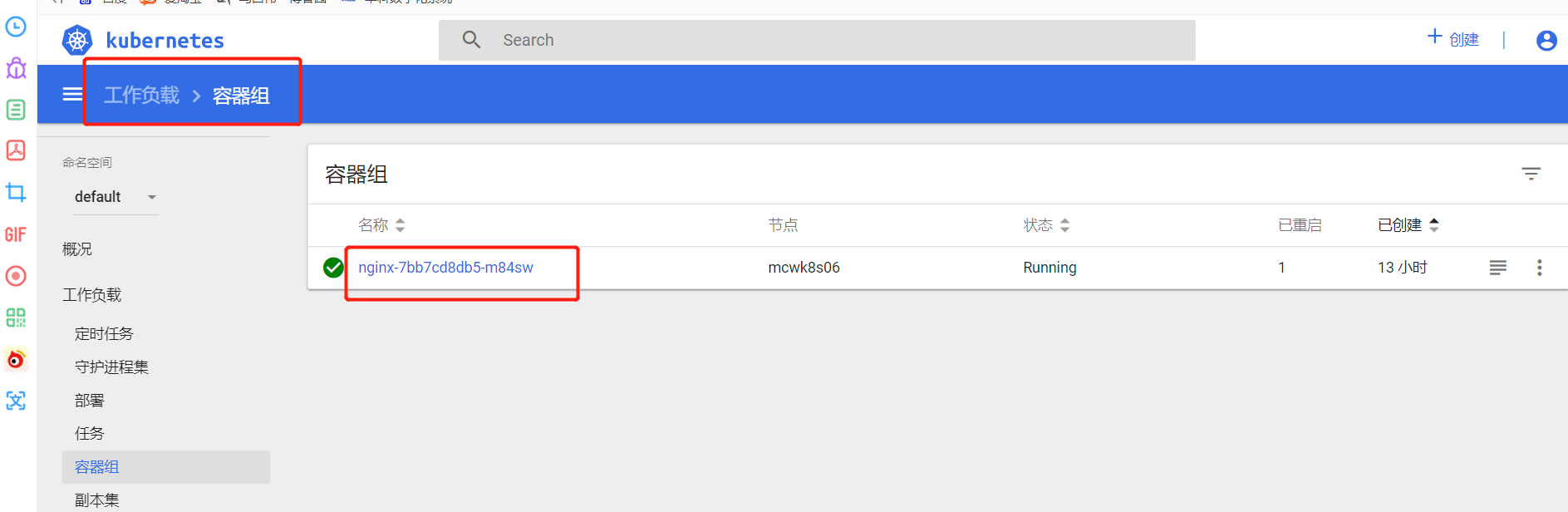

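Create a container as a test. The image pull failed several times but eventually succeeded, and the container was created normally on node2, which shows the environment works.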

kubectl run nginx --image=nginx

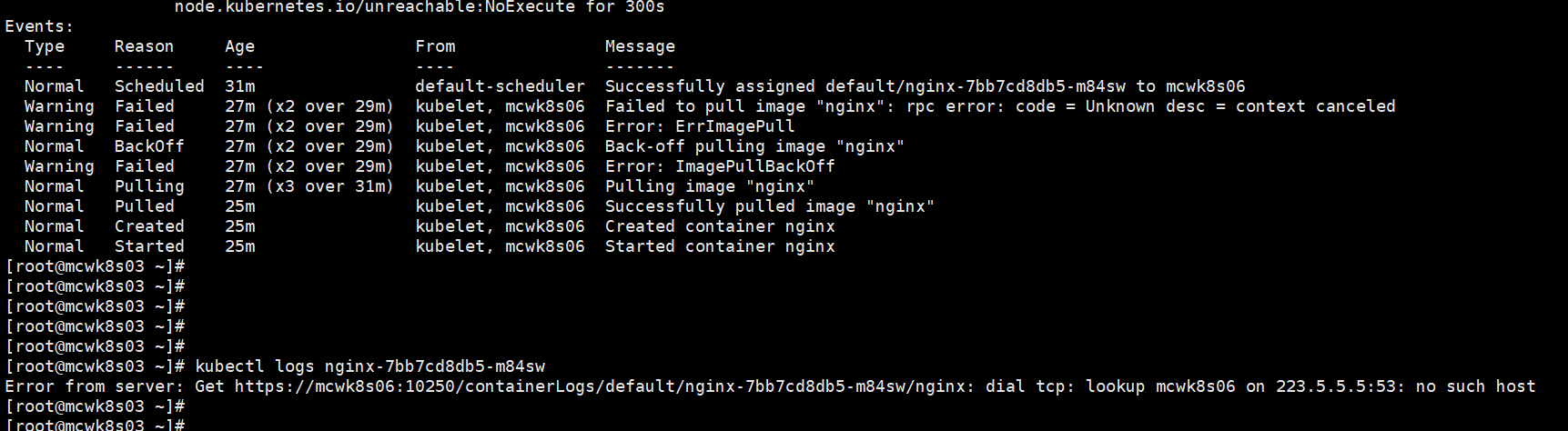

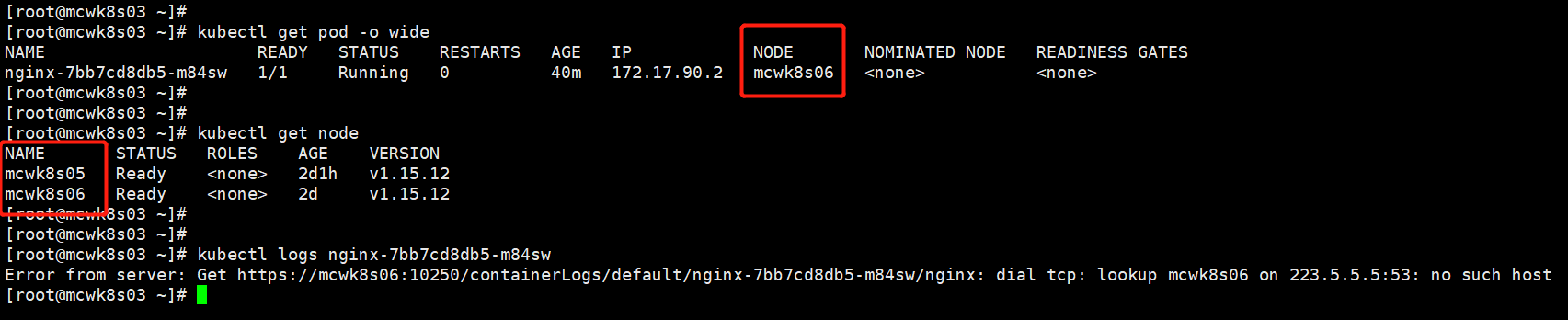

Error: dial tcp: lookup mcwk8s06 on 223.5.5.5:53: no such host

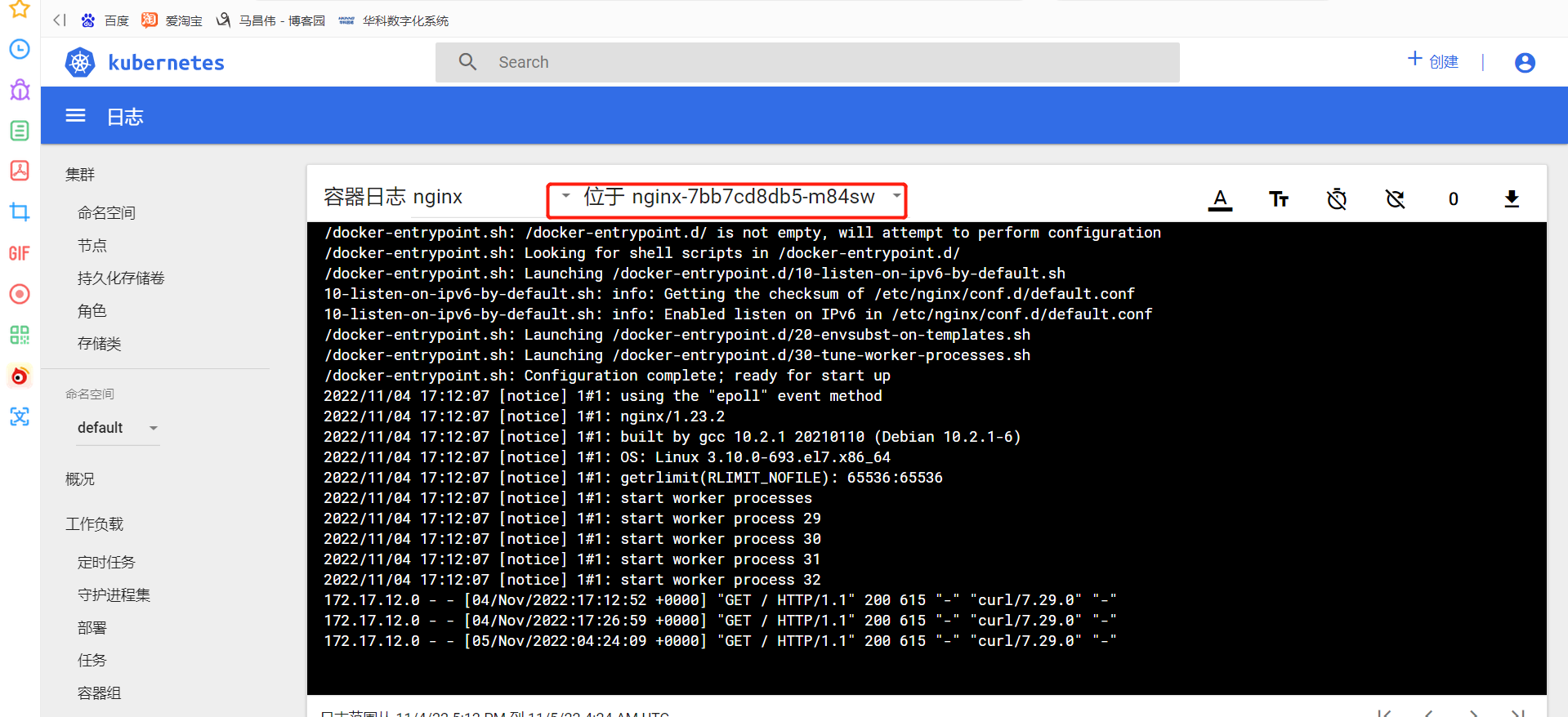

Error from server: Get https://mcwk8s06:10250/containerLogs/default/nginx-7bb7cd8db5-m84sw/nginx: dial tcp: lookup mcwk8s06 on 223.5.5.5:53: no such host

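get pod shows the node by hostname, and that hostname cannot be resolved.

Fetching the logs fails here. Leave that aside for now; it differs from what I expected, which was a certificate problem.

Hostnames are shown here, whereas we wanted IPs.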

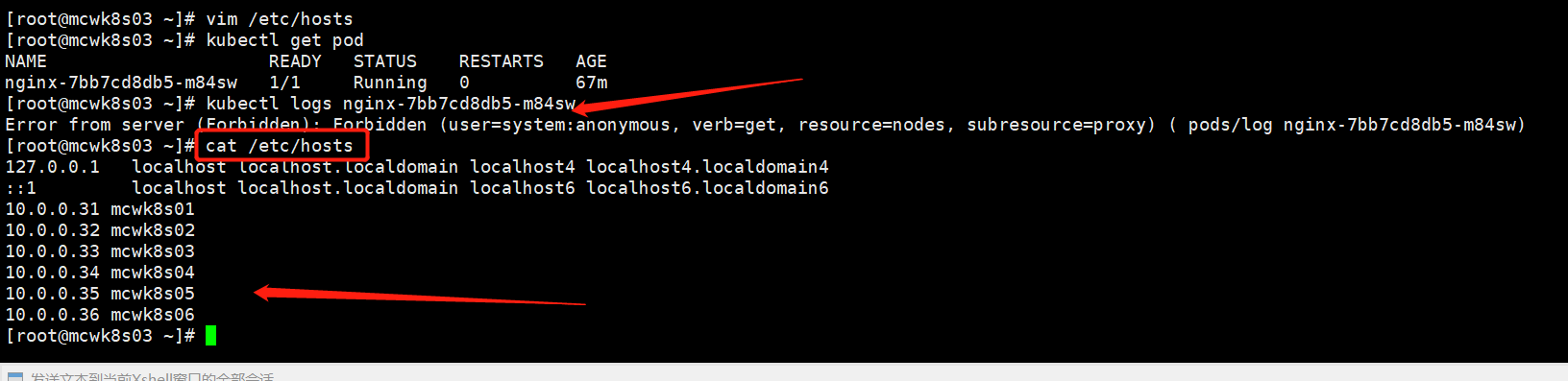

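Adding hosts entries on all nodes fixes this. After that, the error turns into the expected certificate-related problem: the kubelet rejects the request as anonymous.

Error: Error from server (Forbidden): Forbidden (user=system:anonymous, verb=get, resource=nodes, subresource=proxy)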
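The hosts entries can be added on every node in a way that is safe to repeat. This is a sketch with a hypothetical name, add_hosts. The IP-to-hostname pairs in the usage line are inferred from the addresses used in this article, so check them against your own plan.

```shell
#!/usr/bin/env bash
# Append "IP hostname" pairs to a hosts file, skipping hostnames that are
# already present, so the function can be re-run safely.
add_hosts() {
  local hosts_file=$1; shift
  local pair ip name
  for pair in "$@"; do
    ip=${pair%%=*}
    name=${pair#*=}
    if ! awk -v n="$name" '{for (i = 2; i <= NF; i++) if ($i == n) found = 1} END {exit !found}' "$hosts_file"; then
      printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
    fi
  done
}

# Usage (assumed mapping, run on every node):
#   add_hosts /etc/hosts 10.0.0.33=mcwk8s03 10.0.0.34=mcwk8s04 \
#             10.0.0.35=mcwk8s05 10.0.0.36=mcwk8s06
```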

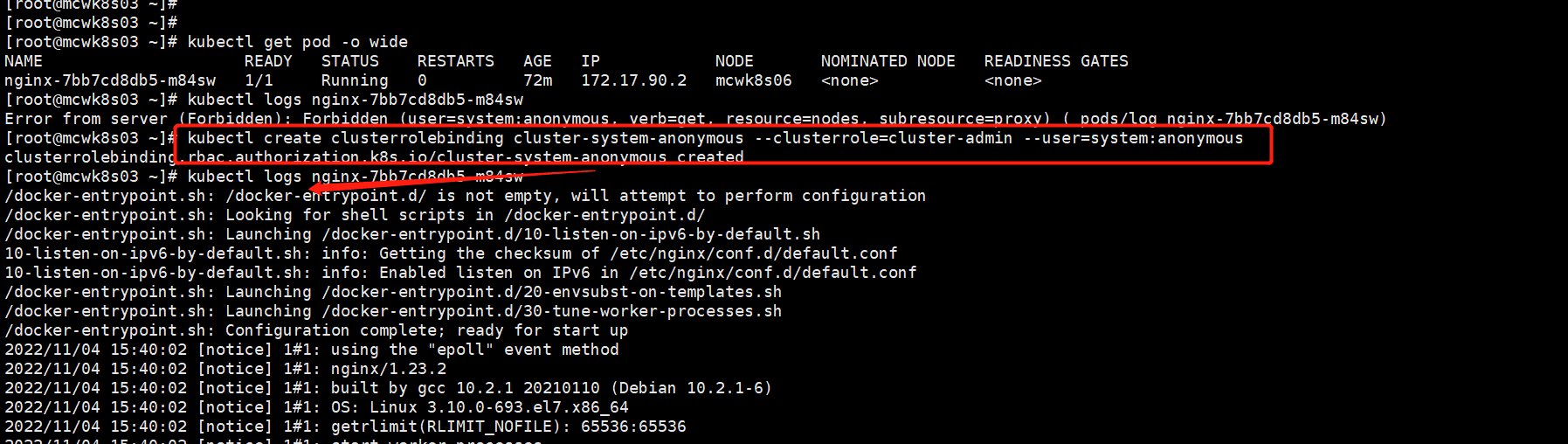

[root@mcwk8s03 ~]# kubectl get pod -o wide NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES nginx-7bb7cd8db5-m84sw 1/1 Running 0 72m 172.17.90.2 mcwk8s06 <none> <none> [root@mcwk8s03 ~]# kubectl logs nginx-7bb7cd8db5-m84sw Error from server (Forbidden): Forbidden (user=system:anonymous, verb=get, resource=nodes, subresource=proxy) ( pods/log nginx-7bb7cd8db5-m84sw) [root@mcwk8s03 ~]#

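Authorization is required. This apiserver has no kubelet client certificate configured, so the kubelet treats its requests as system:anonymous. The binding below grants that user cluster-admin (full access):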

kubectl create clusterrolebinding cluster-system-anonymous --clusterrole=cluster-admin --user=system:anonymous

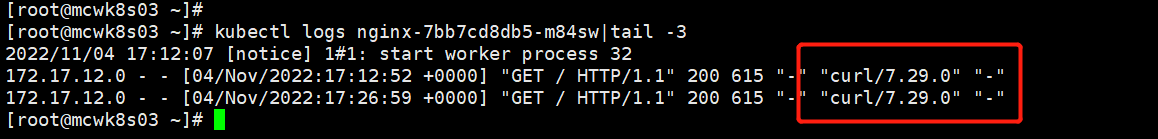

授权之后就可以查看日志了

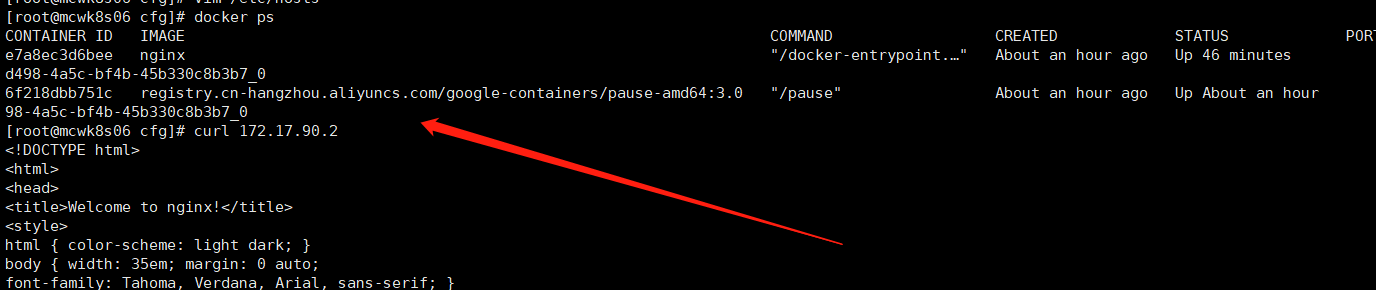

问题:master节点不能访问pod,在pod的宿主机上是可以访问的

这是个网络问题

[root@mcwk8s03 ~]# kubectl get pod -o wide NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES nginx-7bb7cd8db5-m84sw 1/1 Running 0 81m 172.17.90.2 mcwk8s06 <none> <none> [root@mcwk8s03 ~]# curl 172.17.90.2 curl: (7) Failed connect to 172.17.90.2:80; Connection timed out [root@mcwk8s03 ~]#

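Network fix: restart the flannel and docker services on both sides. After that, the master could ping the docker0 IP on the pod's node, and curl to the pod worked. The pod IP also changed to match the new flannel subnet.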

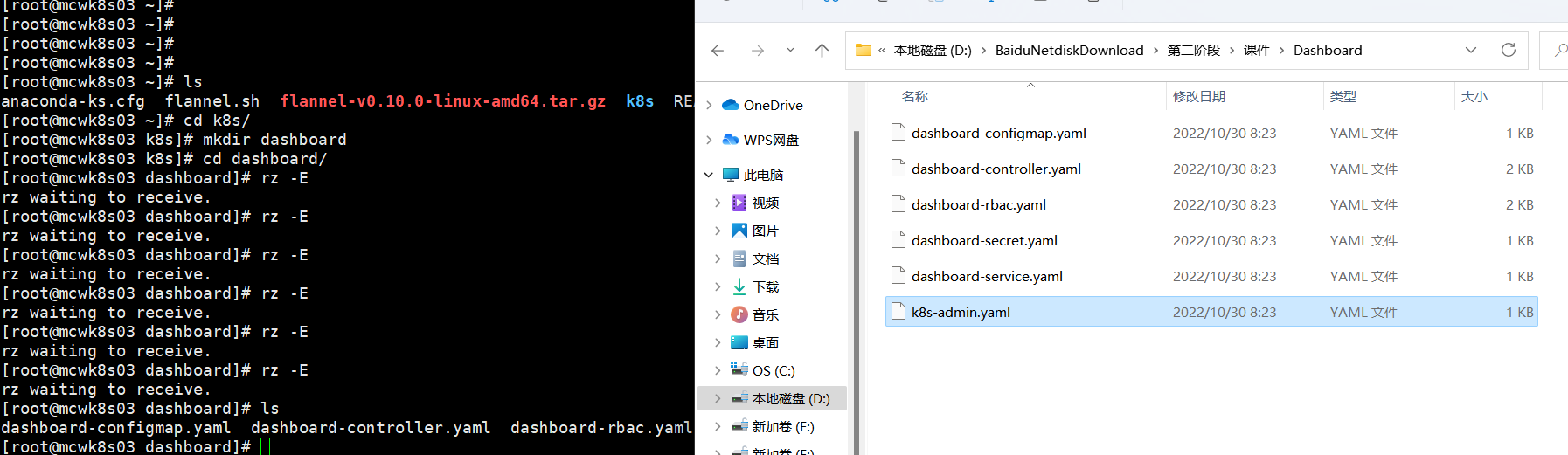

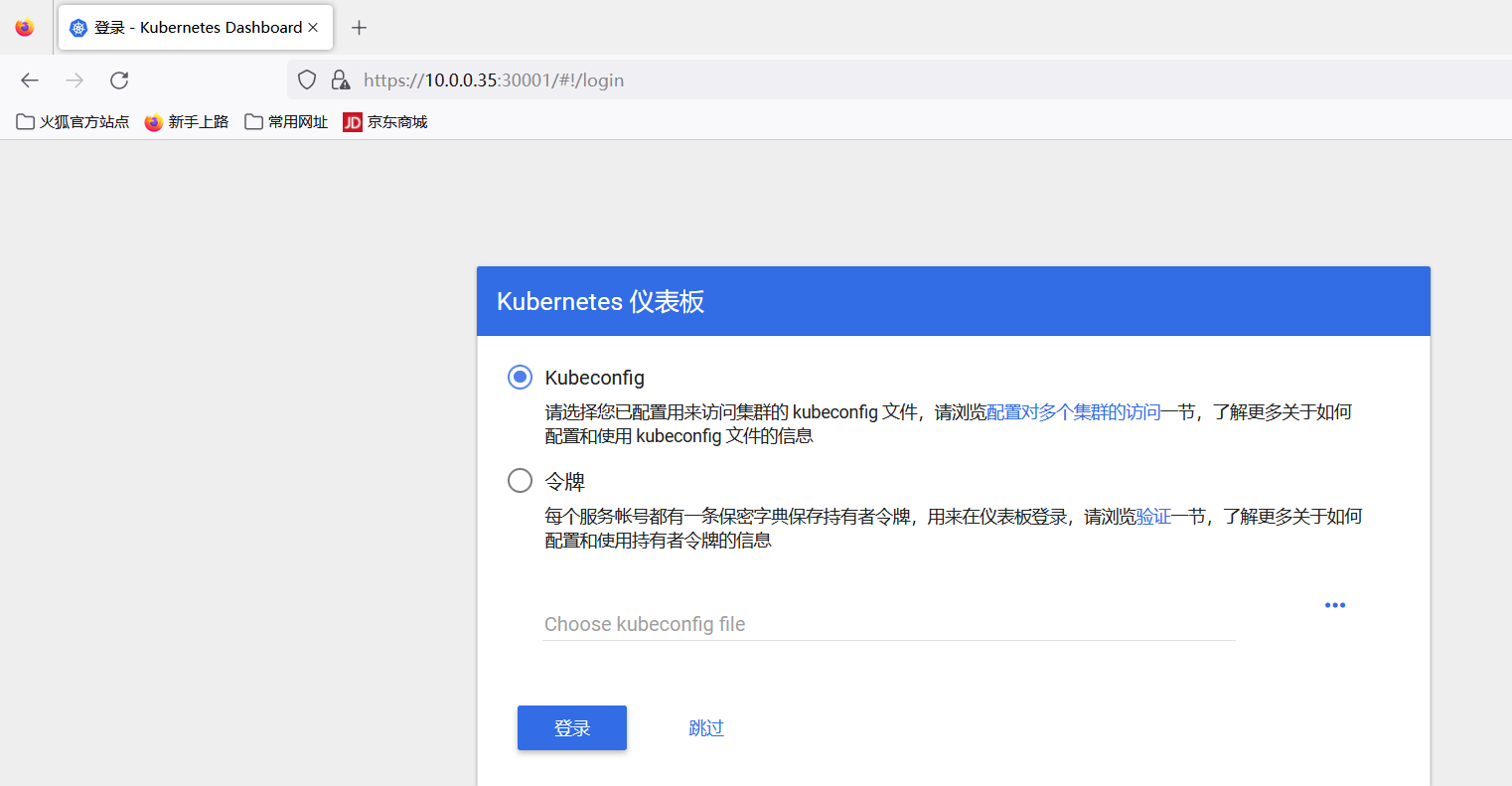

部署Web UI(Dashboard)

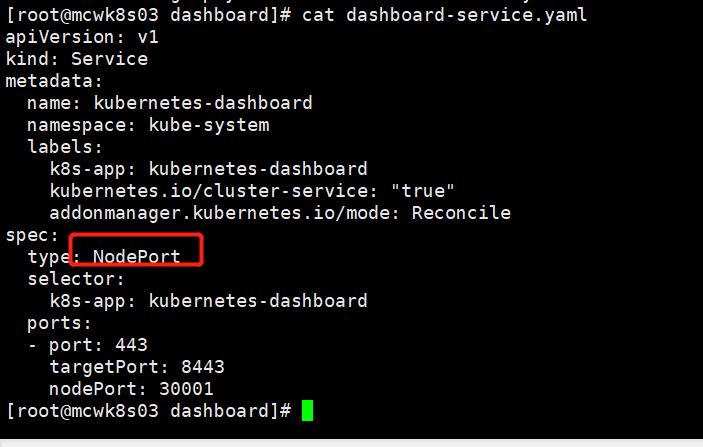

部署

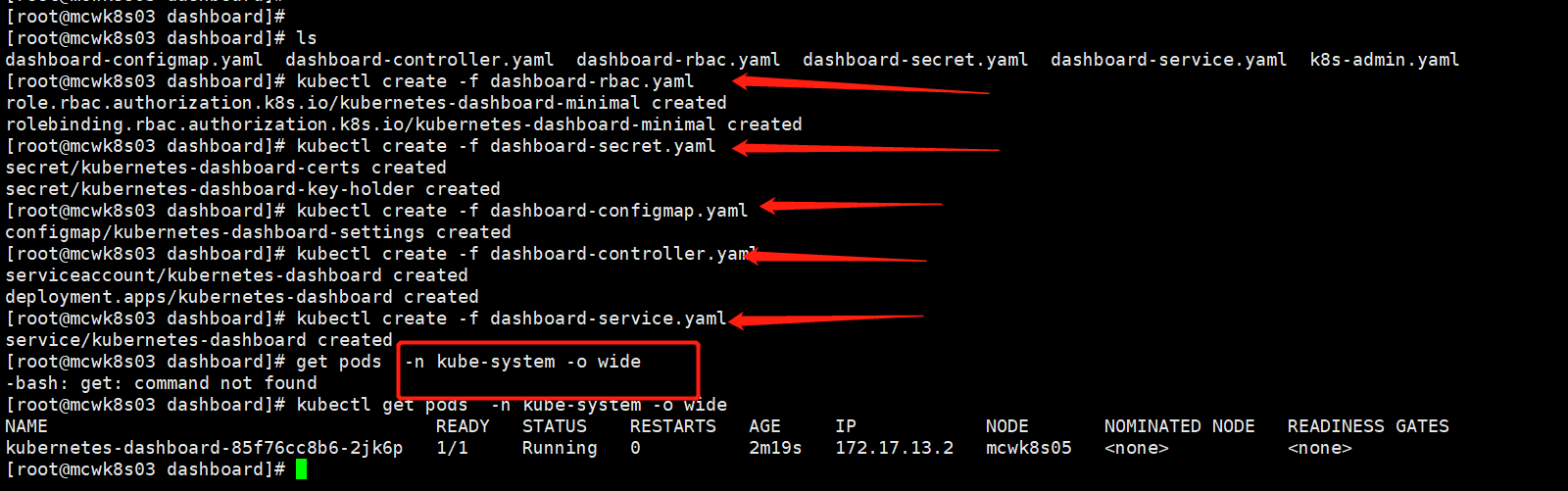

kubectl create -f dashboard-rbac.yaml
kubectl create -f dashboard-secret.yaml
kubectl create -f dashboard-configmap.yaml
kubectl create -f dashboard-controller.yaml
kubectl create -f dashboard-service.yaml
kubectl get pods -n kube-system -o wide
kubectl create -f k8s-admin.yaml
kubectl get secret -n kube-system
kubectl describe secret dashboard-admin-token-r9d4f -n kube-system

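Download: https://github.com/kubernetes/kubernetes/tree/master/cluster/addons/dashboard

Upload the 6 files. They are the ones downloaded from the address above, slightly modified.

Only the address was changed.

Run them in the order below; the deployment order matters.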

[root@mcwk8s03 dashboard]# ls dashboard-configmap.yaml dashboard-controller.yaml dashboard-rbac.yaml dashboard-secret.yaml dashboard-service.yaml k8s-admin.yaml [root@mcwk8s03 dashboard]# kubectl create -f dashboard-rbac.yaml role.rbac.authorization.k8s.io/kubernetes-dashboard-minimal created rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard-minimal created [root@mcwk8s03 dashboard]# kubectl create -f dashboard-secret.yaml secret/kubernetes-dashboard-certs created secret/kubernetes-dashboard-key-holder created [root@mcwk8s03 dashboard]# kubectl create -f dashboard-configmap.yaml configmap/kubernetes-dashboard-settings created [root@mcwk8s03 dashboard]# kubectl create -f dashboard-controller.yaml serviceaccount/kubernetes-dashboard created deployment.apps/kubernetes-dashboard created [root@mcwk8s03 dashboard]# kubectl create -f dashboard-service.yaml service/kubernetes-dashboard created [root@mcwk8s03 dashboard]# get pods -n kube-system -o wide -bash: get: command not found [root@mcwk8s03 dashboard]# kubectl get pods -n kube-system -o wide NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES kubernetes-dashboard-85f76cc8b6-2jk6p 1/1 Running 0 2m19s 172.17.13.2 mcwk8s05 <none> <none> [root@mcwk8s03 dashboard]#

Check the service:

[root@mcwk8s03 dashboard]# kubectl get service -n kube-system NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE kubernetes-dashboard NodePort 10.2.0.147 <none> 443:30001/TCP 4m21s [root@mcwk8s03 dashboard]#

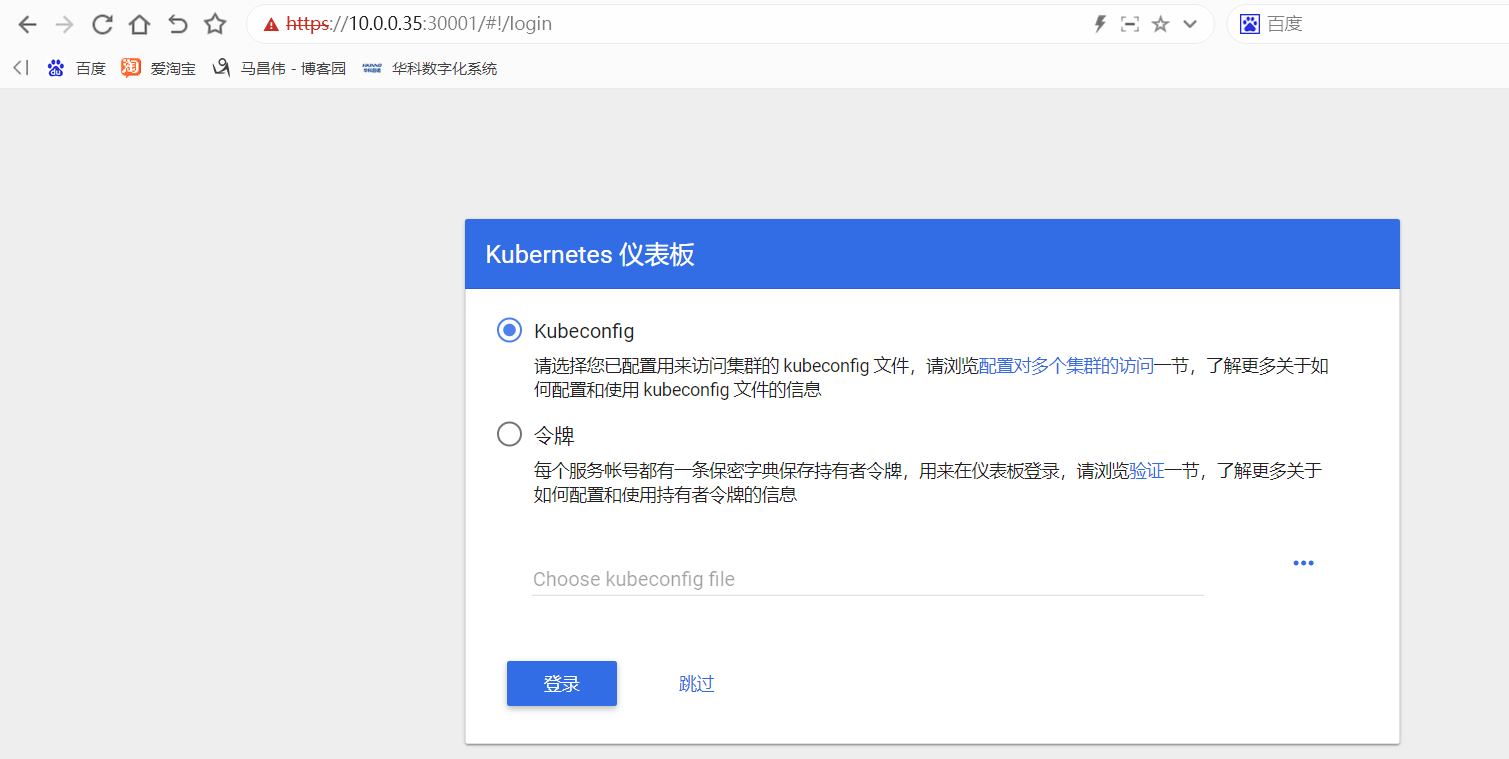

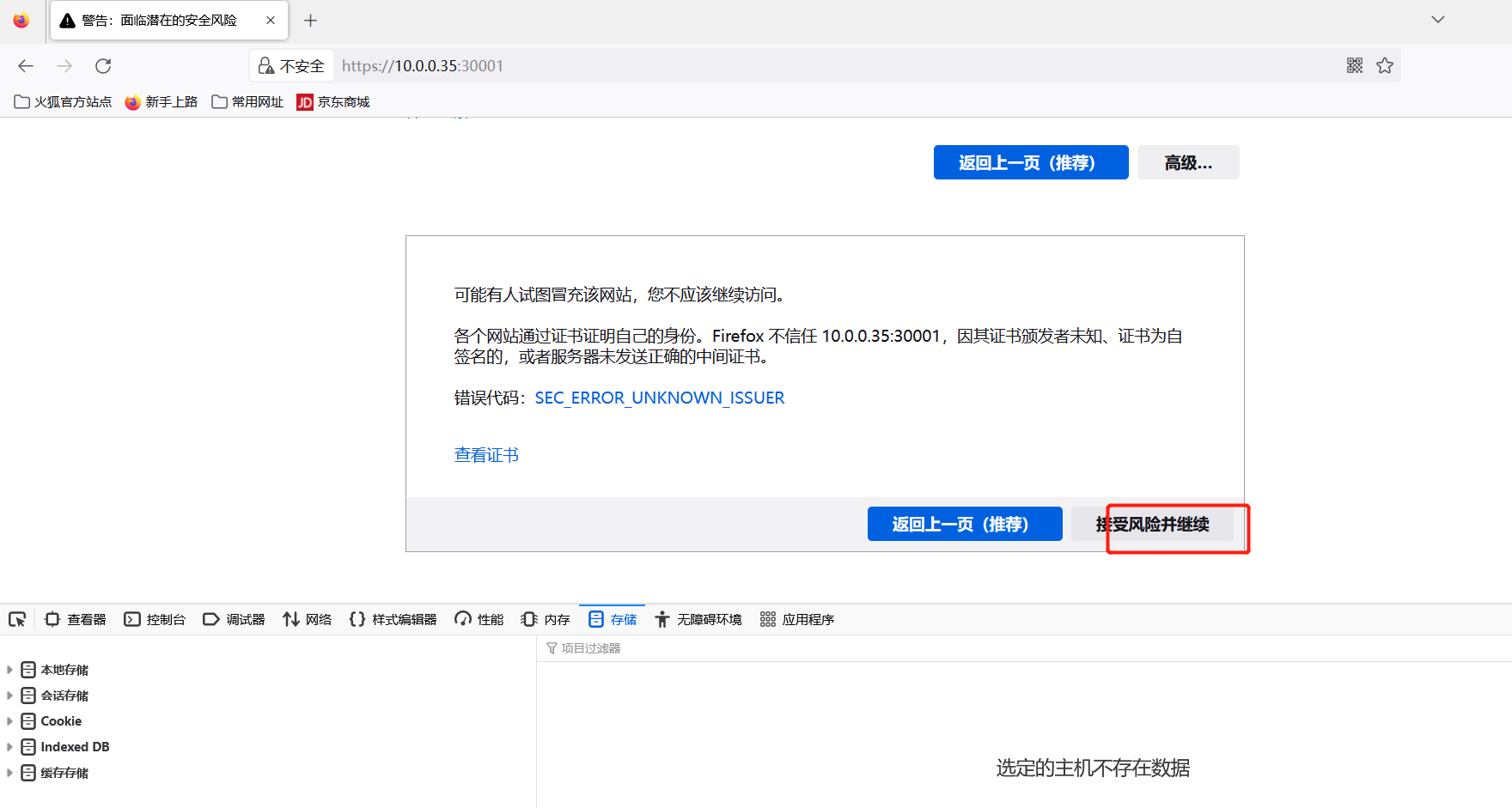

我们访问一下,注意,我们这里做的是使用https的

需要配置登录

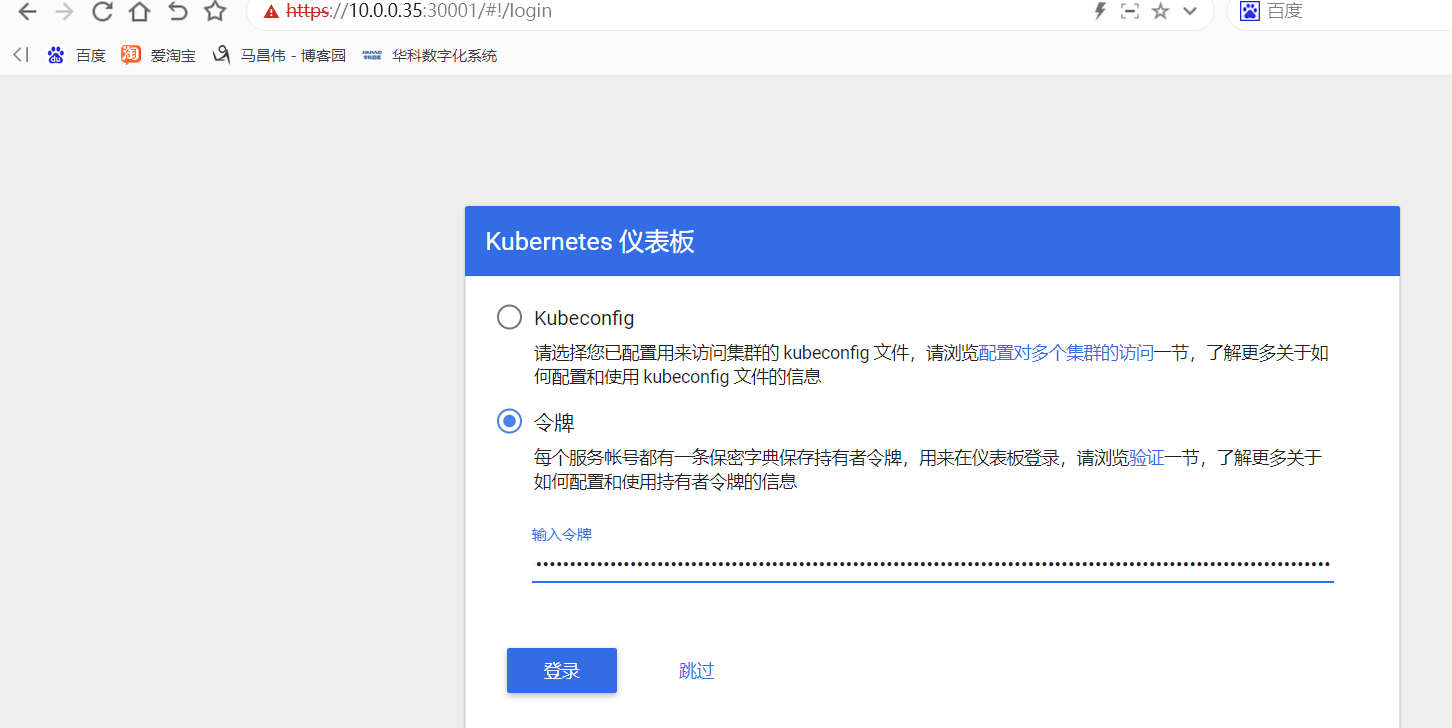

创建token获取并登录

下面我们执行如下操作:

它会创建一个service accouont 来保存我们的token

[root@mcwk8s03 dashboard]# ls dashboard-configmap.yaml dashboard-controller.yaml dashboard-rbac.yaml dashboard-secret.yaml dashboard-service.yaml k8s-admin.yaml [root@mcwk8s03 dashboard]# kubectl create -f k8s-admin.yaml serviceaccount/dashboard-admin created clusterrolebinding.rbac.authorization.k8s.io/dashboard-admin created [root@mcwk8s03 dashboard]#

查看token

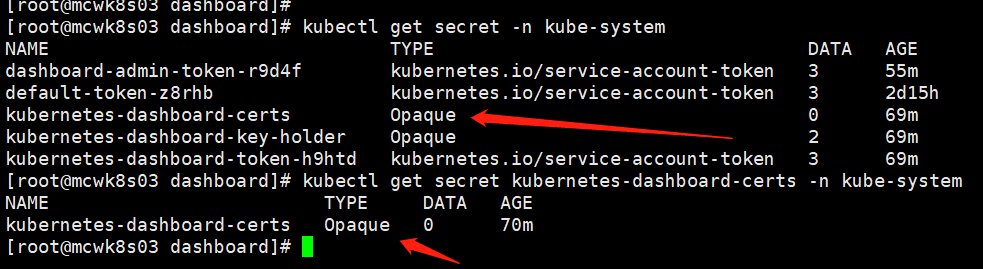

[root@mcwk8s03 dashboard]# kubectl get secret -n kube-system NAME TYPE DATA AGE dashboard-admin-token-r9d4f kubernetes.io/service-account-token 3 3m default-token-z8rhb kubernetes.io/service-account-token 3 2d14h kubernetes-dashboard-certs Opaque 0 16m kubernetes-dashboard-key-holder Opaque 2 16m kubernetes-dashboard-token-h9htd kubernetes.io/service-account-token 3 16m [root@mcwk8s03 dashboard]# kubectl describe secret dashboard-admin-token-r9d4f -n kube-system Name: dashboard-admin-token-r9d4f Namespace: kube-system Labels: <none> Annotations: kubernetes.io/service-account.name: dashboard-admin kubernetes.io/service-account.uid: f3b9bfd7-0ca6-4c04-bb34-664f327582a5 Type: kubernetes.io/service-account-token Data ==== ca.crt: 1359 bytes namespace: 11 bytes token: eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdG9rZW4tcjlkNGYiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkLWFkbWluIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiZjNiOWJmZDctMGNhNi00YzA0LWJiMzQtNjY0ZjMyNzU4MmE1Iiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmUtc3lzdGVtOmRhc2hib2FyZC1hZG1pbiJ9.hdwZuYzMNlywmY4258_bD3dIsyTXCV8PB8wZXCaCjmOg43tcVMuYYXmtsePcVK0_kOKaUN3IC96s8sQ-rF969QEEvWHk2UPrJs29TPfXEStL-5uNWG_H2MLpJ1lHrtJ8twKpmfBrEnmYS09h5-R-JWQ_csmcKSojGNdGkzI82j-5o1r09qzMhsT__a2xzlSl-_gsn6XCylttiZnVQ2HEpYJGIjDumdUt8kYq9vbWuFlXLhE6zTs5UEIv8roZt1WB2lFqb5QOox7ZspVkT9LbyPmJJw_tr0fNOrnCPLpWnVnvV2PJNA18_NdC02aZ2SYAb2z0QVKf-b-WIwMvSUObuQ [root@mcwk8s03 dashboard]#
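The generated secret name differs per cluster (dashboard-admin-token-r9d4f here). Instead of copying it by hand, the name can be looked up and the token read directly. This is a sketch. secret_name and jwt_payload are hypothetical helpers; the kubectl jsonpath and base64 -d calls in the usage comment are standard. jwt_payload decodes the middle section of the token, whose sub claim should name the dashboard-admin service account.

```shell
#!/usr/bin/env bash
# Print the first secret name starting with PREFIX from
# `kubectl get secret` output on stdin (header line skipped).
secret_name() {
  awk -v p="$1" 'NR > 1 && index($1, p) == 1 {print $1; exit}'
}

# Decode the payload (middle part) of a JWT such as a service account token.
# JWT parts are base64url without padding, so translate and re-pad first.
jwt_payload() {
  local p
  p=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  case $(( ${#p} % 4 )) in
    2) p="${p}==" ;;
    3) p="${p}=" ;;
  esac
  printf '%s' "$p" | base64 -d
}

# Usage on the master:
#   name=$(kubectl -n kube-system get secret | secret_name dashboard-admin-token)
#   kubectl -n kube-system get secret "$name" -o jsonpath='{.data.token}' | base64 -d
```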

将上面的token输入并登录

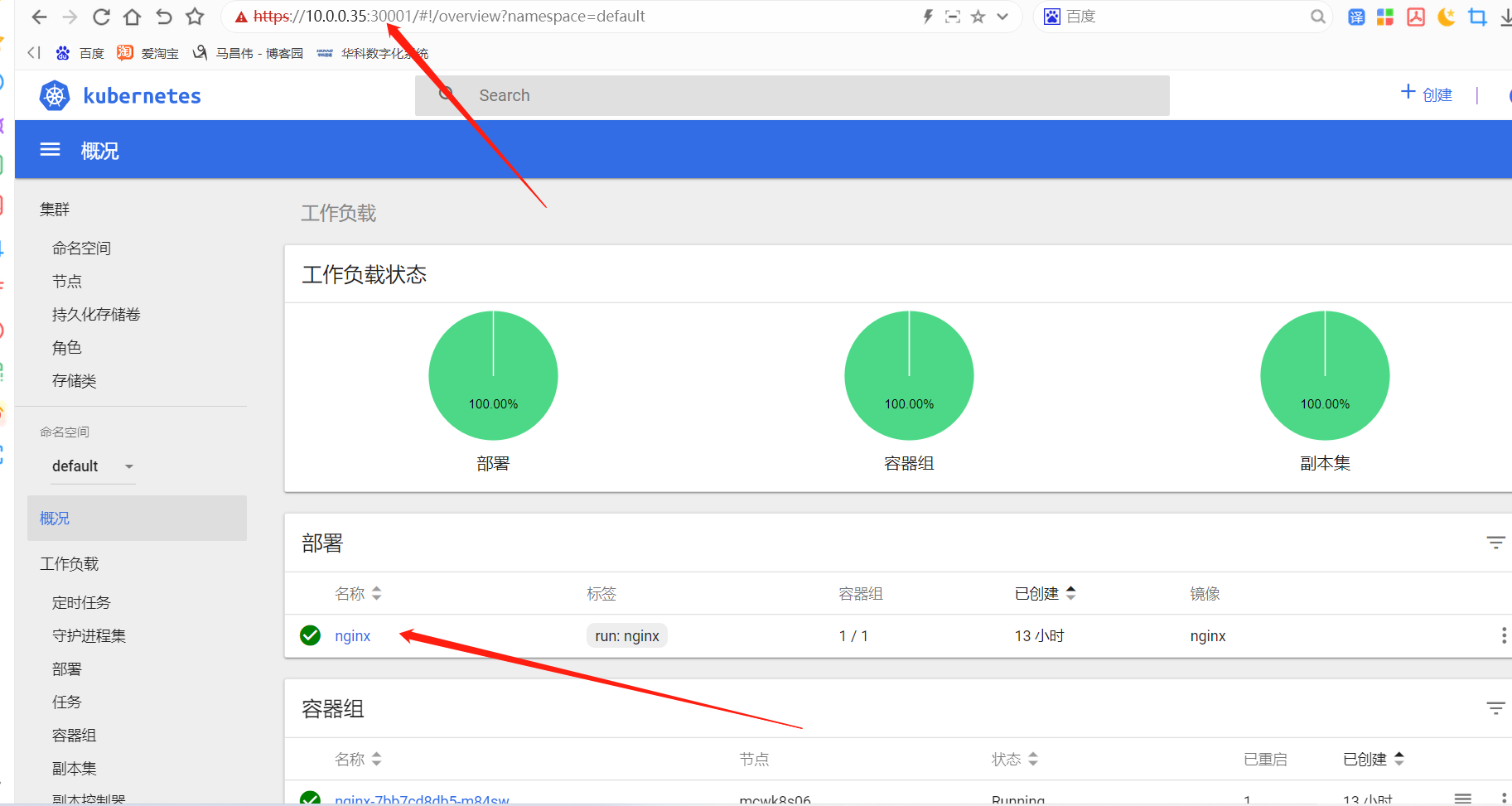

成功登录

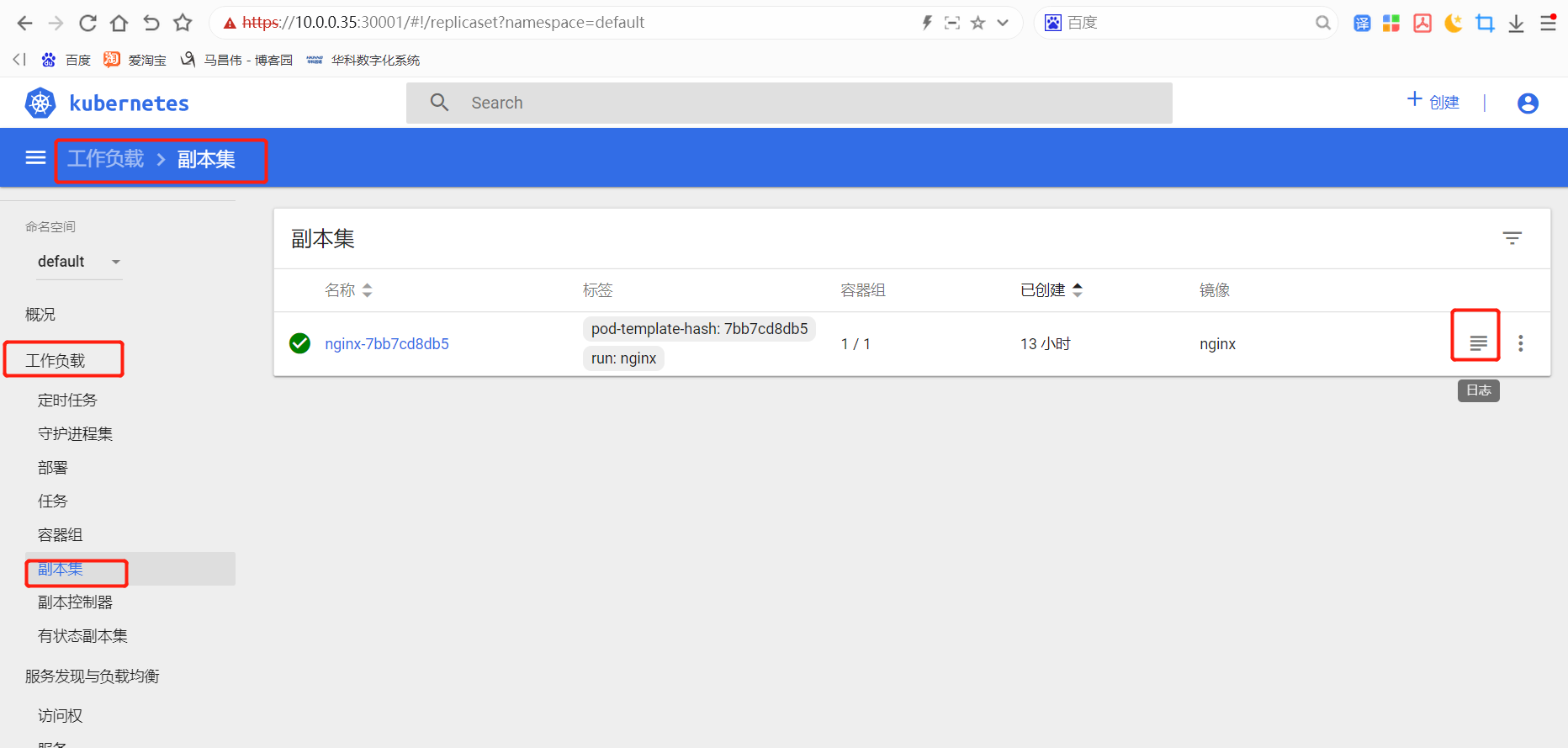

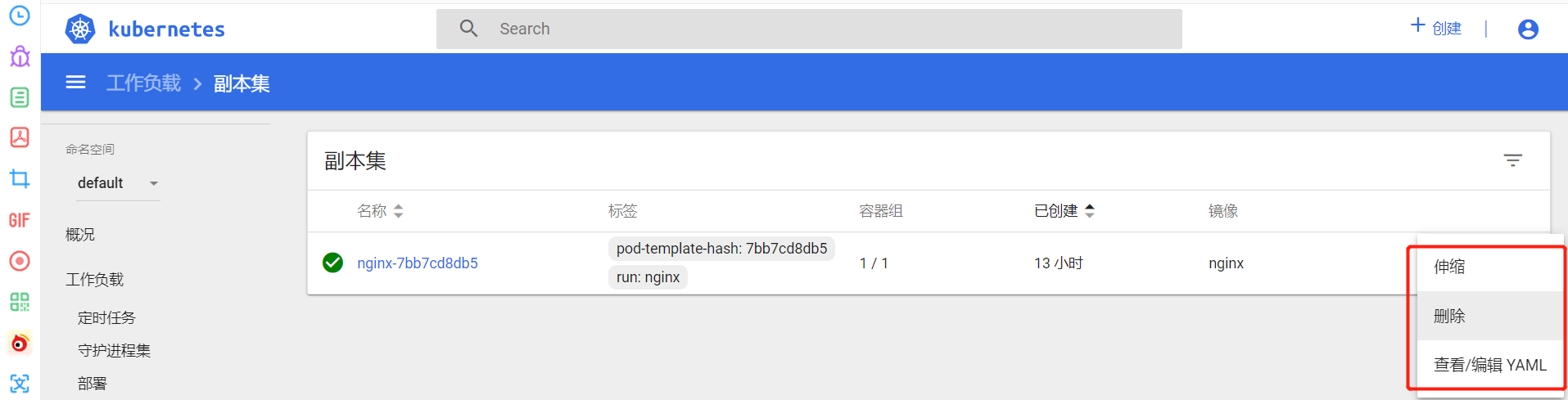

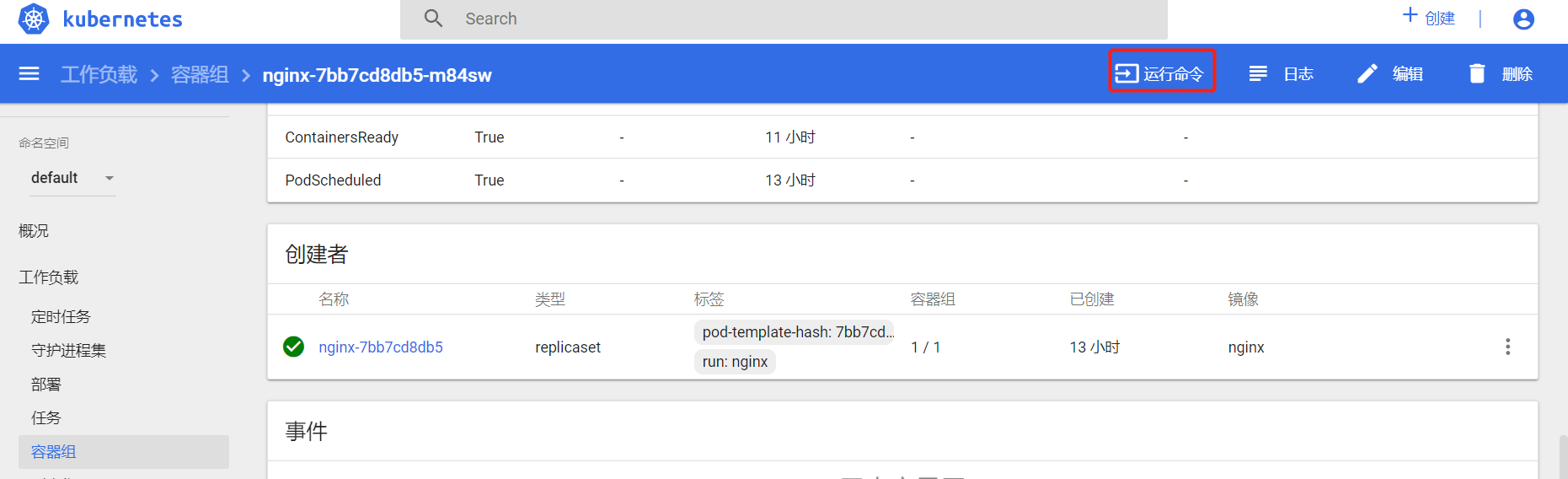

Workloads and replica sets; viewing container logs.

Other features

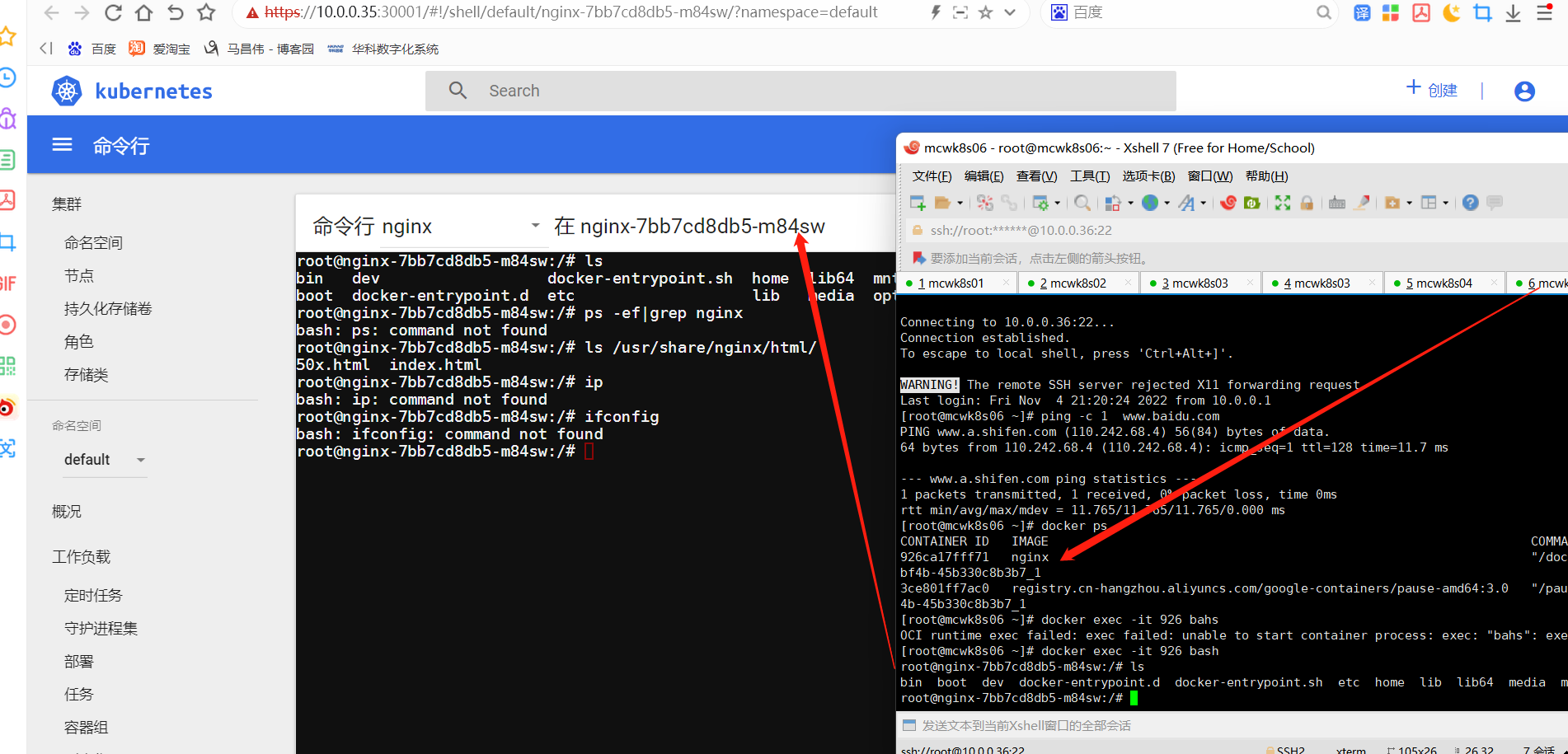

Run commands inside a container

Enter the container

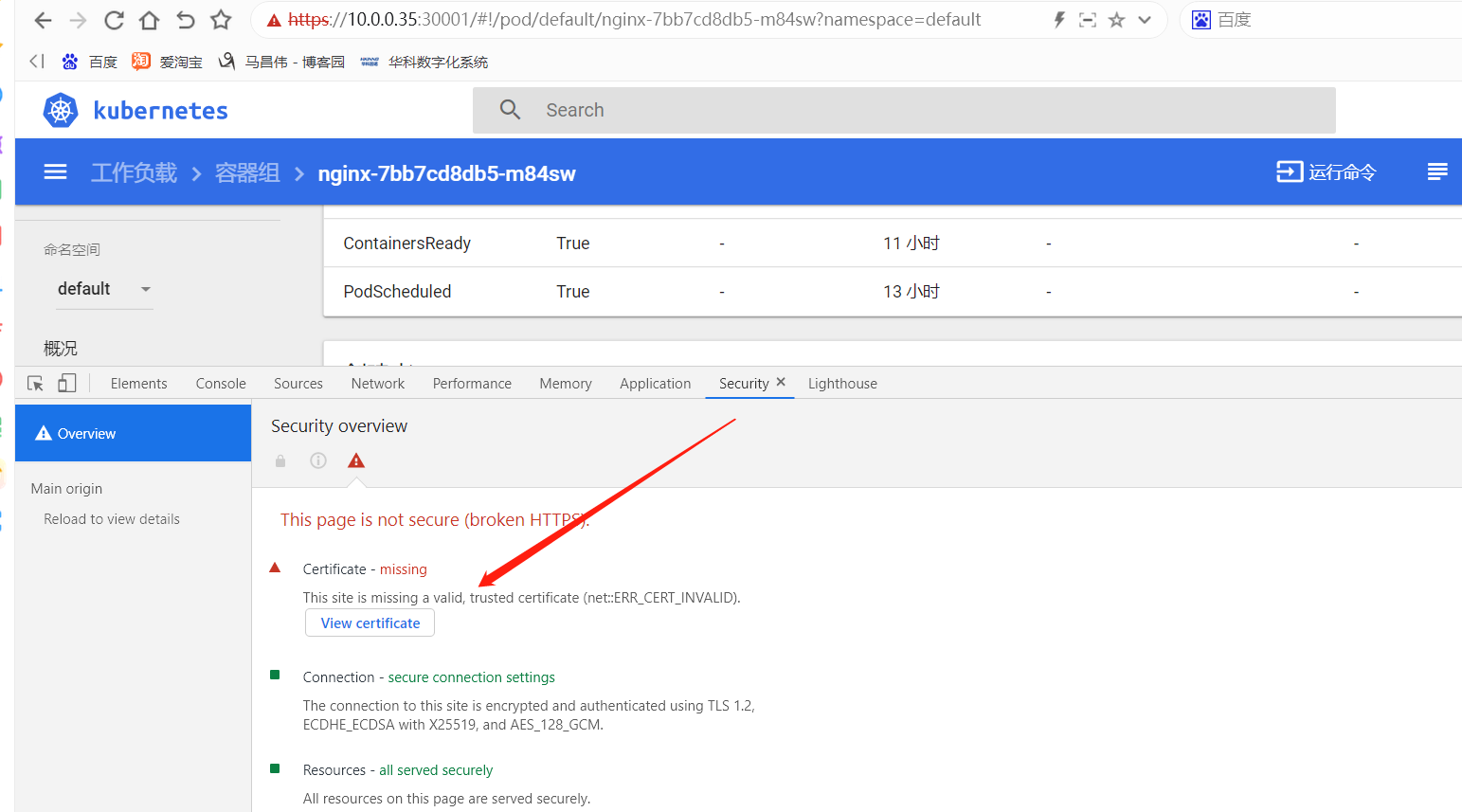

Self-signed certificate

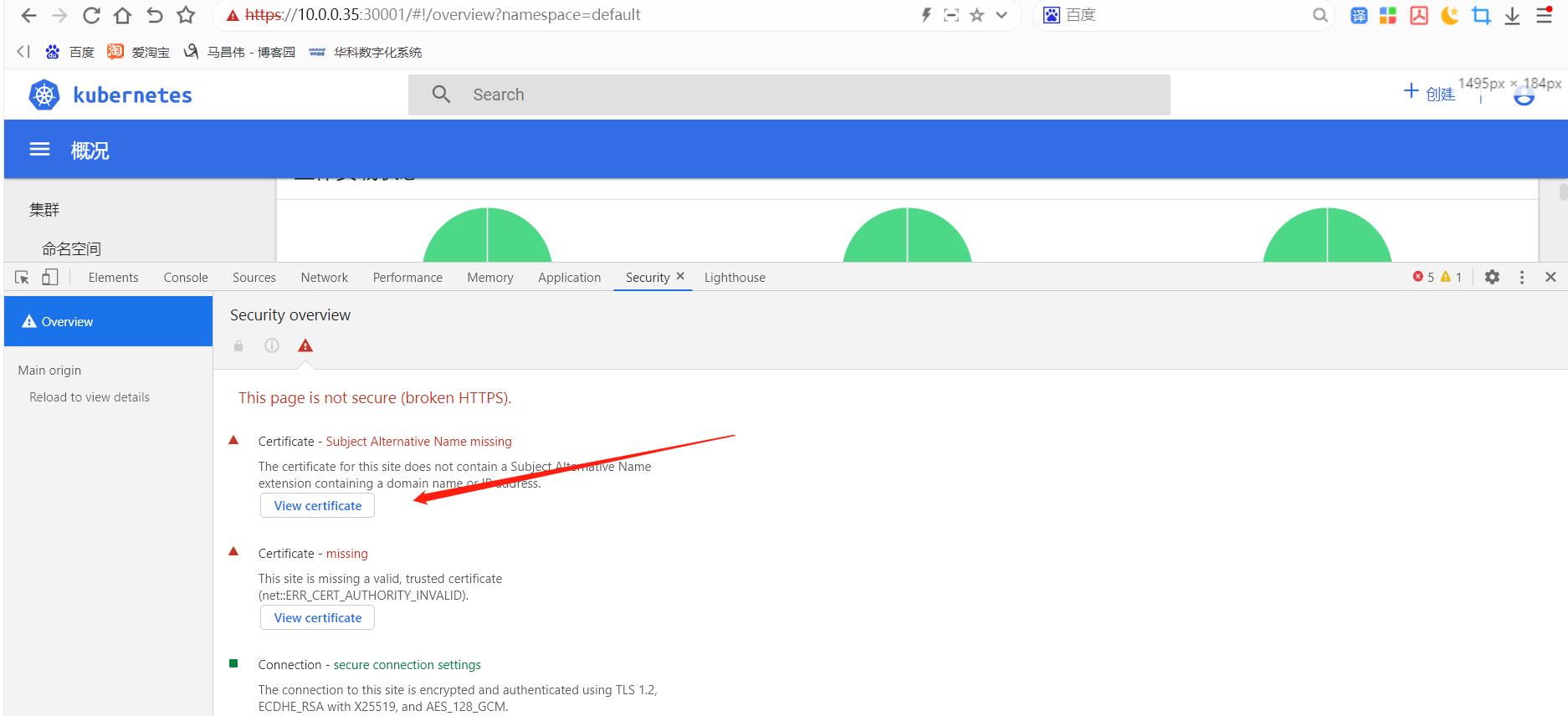

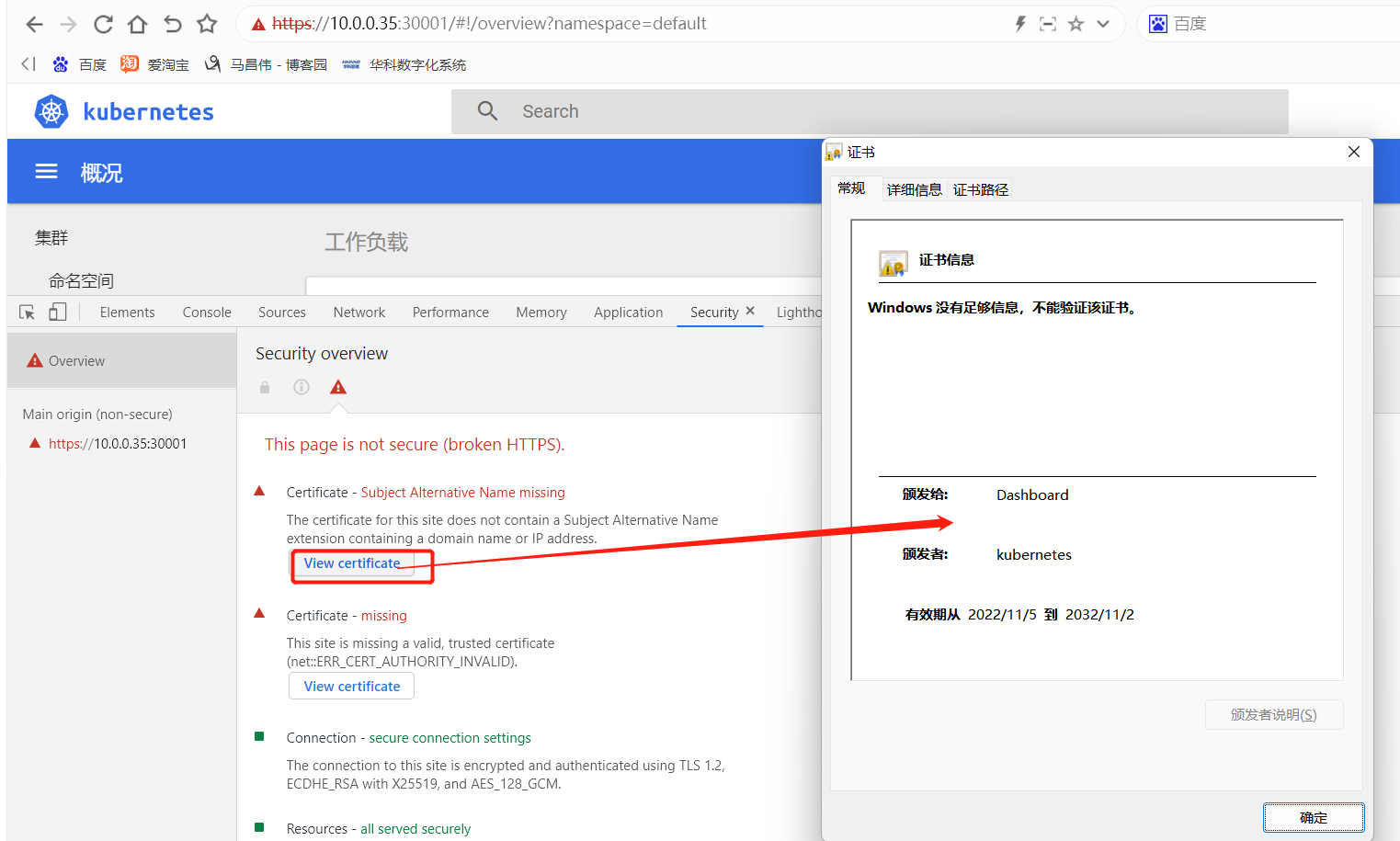

我们部署后的证书显示是这样的。是有问题的,但是我用谷歌浏览器是能正常访问的。有些浏览器可能访问不了

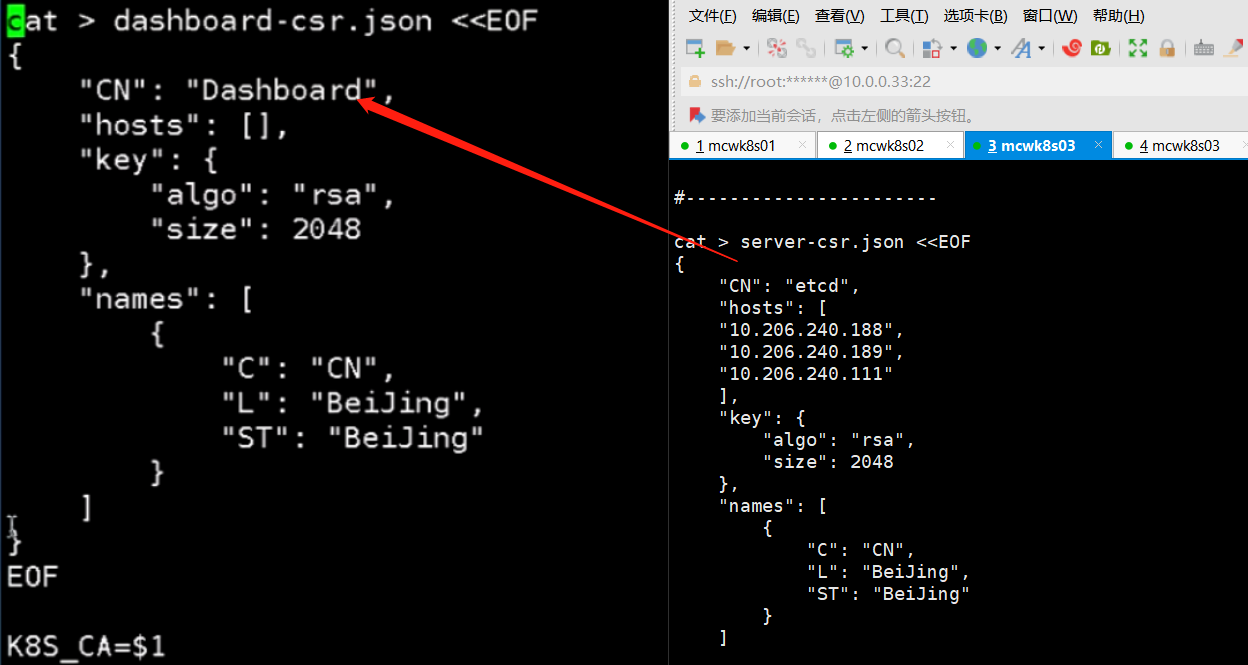

我们拿etcd的证书改下,基本一致

创建证书(指定ca,也就是k8s apiserver证书目录),删除部署的证书,导入自签证书

[root@mcwk8s03 dashboard]# ls

dashboard-cert.sh dashboard-configmap.yaml dashboard-controller.yaml dashboard-rbac.yaml dashboard-secret.yaml dashboard-service.yaml k8s-admin.yaml

[root@mcwk8s03 dashboard]# cat dashboard-cert.sh #this script has bugs; see the corrected version further down

cat > dashboard-csr.json <<EOF

{

"CN": "Dashboard",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing"

}

]

}

EOF

K8S_CA=$1

cfssl gencert -ca=$K8S_CA/ca.pem -ca-key=$K8S_CA/ca-key.pem -config=$K8S_CA/ca-config -profile=kubernetes dashboard-csr.json | cfssljson -bare dashboard

kubectl delete secret kubernetes-dashboard-certs -n kube-system

kuebctl create secret generic kubernetes-dashboard-certs --from-file=./ -n kube-system

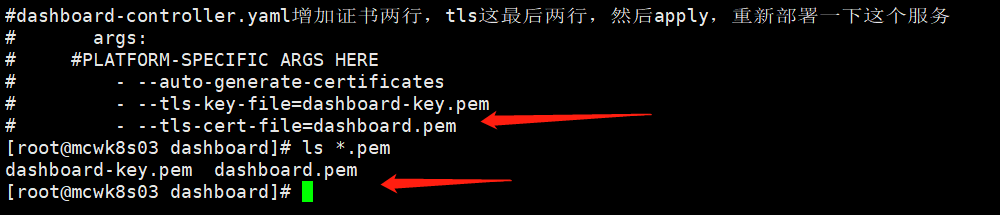

#dashboard-controller.yaml: add the two certificate lines (the last two tls lines), then apply to redeploy the service

# args:

# #PLATFORM-SPECIFIC ARGS HERE

# - --auto-generate-certificates

# - --tls-key-file=dashboard-key.pem

# - --tls-cert-file=dashboard.pem

[root@mcwk8s03 dashboard]#

This is the default.

The script above has bugs, so fix it:

[root@mcwk8s03 dashboard]# ls /root/k8s/k8s-cert/ admin.csr admin-key.pem ca-config.json ca-csr.json ca.pem kube-proxy.csr kube-proxy-key.pem server.csr server-key.pem admin-csr.json admin.pem ca.csr ca-key.pem k8s-cert.sh kube-proxy-csr.json kube-proxy.pem server-csr.json server.pem [root@mcwk8s03 dashboard]# ls /opt/kubernetes/ssl/ ca-key.pem ca.pem server-key.pem server.pem [root@mcwk8s03 dashboard]# [root@mcwk8s03 dashboard]# ls dashboard-cert.sh dashboard-configmap.yaml dashboard-controller.yaml dashboard-rbac.yaml dashboard-secret.yaml dashboard-service.yaml k8s-admin.yaml [root@mcwk8s03 dashboard]# bash dashboard-cert.sh /root/k8s/k8s-cert/ Failed to load config file: {"code":5200,"message":"could not read configuration file"}Failed to parse input: unexpected end of JSON input secret "kubernetes-dashboard-certs" deleted dashboard-cert.sh: line 22: kuebctl: command not found [root@mcwk8s03 dashboard]#
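This run failed because -config pointed to a file that does not exist (ca-config instead of ca-config.json), and the script carried on and deleted the secret anyway. Below is a hypothetical pre-flight check that dashboard-cert.sh could call before touching anything; check_ca_dir is a made-up name. The profile check is a plain text match, not a JSON parse.

```shell
#!/usr/bin/env bash
# Verify a CA directory has what `cfssl gencert -ca ... -config ... -profile ...`
# needs: ca.pem, ca-key.pem, ca-config.json, and the requested profile.
check_ca_dir() {
  local dir=$1 profile=${2:-kubernetes} f missing=0
  for f in ca.pem ca-key.pem ca-config.json; do
    if [ ! -f "$dir/$f" ]; then
      echo "missing: $dir/$f" >&2
      missing=1
    fi
  done
  # Rough text check that the signing profile exists in ca-config.json.
  if [ -f "$dir/ca-config.json" ] && ! grep -q "\"$profile\"" "$dir/ca-config.json"; then
    echo "profile \"$profile\" not found in $dir/ca-config.json" >&2
    missing=1
  fi
  return "$missing"
}

# Possible use near the top of dashboard-cert.sh:
#   check_ca_dir "$K8S_CA" kubernetes || exit 1
```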

The corrected script:

[root@mcwk8s03 dashboard]# cat dashboard-cert.sh
cat > dashboard-csr.json <<EOF
{
    "CN": "Dashboard",
    "hosts": [],
    "key": {
        "algo": "rsa",
        "size": 2048
    },
    "names": [
        {
            "C": "CN",
            "L": "BeiJing",
            "ST": "BeiJing"
        }
    ]
}
EOF

K8S_CA=$1
cfssl gencert -ca=$K8S_CA/ca.pem -ca-key=$K8S_CA/ca-key.pem -config=$K8S_CA/ca-config.json -profile=kubernetes dashboard-csr.json | cfssljson -bare dashboard
kubectl delete secret kubernetes-dashboard-certs -n kube-system
kubectl create secret generic kubernetes-dashboard-certs --from-file=./ -n kube-system

#dashboard-controller.yaml: add the two certificate lines (the last two tls lines), then apply to redeploy the service
# args:
#   # PLATFORM-SPECIFIC ARGS HERE
#   - --auto-generate-certificates
#   - --tls-key-file=dashboard-key.pem
#   - --tls-cert-file=dashboard.pem
[root@mcwk8s03 dashboard]#

Run it to generate the new Dashboard certificate:

```
[root@mcwk8s03 dashboard]# bash dashboard-cert.sh /root/k8s/k8s-cert/
2022/11/05 14:04:15 [INFO] generate received request
2022/11/05 14:04:15 [INFO] received CSR
2022/11/05 14:04:15 [INFO] generating key: rsa-2048
2022/11/05 14:04:15 [INFO] encoded CSR
2022/11/05 14:04:15 [INFO] signed certificate with serial number 127722619621407209279563714455737397962305549241
2022/11/05 14:04:15 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for websites. For more information see the Baseline Requirements for the Issuance and Management of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org); specifically, section 10.2.3 ("Information Requirements").
secret "kubernetes-dashboard-certs" deleted
secret/kubernetes-dashboard-certs created
[root@mcwk8s03 dashboard]# ls
dashboard-cert.sh         dashboard-controller.yaml  dashboard-csr.json  dashboard.pem        dashboard-secret.yaml   k8s-admin.yaml
dashboard-configmap.yaml  dashboard.csr              dashboard-key.pem   dashboard-rbac.yaml  dashboard-service.yaml
[root@mcwk8s03 dashboard]# pwd
/root/k8s/dashboard
[root@mcwk8s03 dashboard]# ls /opt/
containerd  etcd  kubernetes
[root@mcwk8s03 dashboard]# ls /opt/kubernetes/
bin  cfg  ssl
[root@mcwk8s03 dashboard]#
```

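The two files, dashboard.pem and dashboard-key.pem, have now been generated. The cfssl WARNING appears because dashboard-csr.json has an empty hosts list, but the certificate is still issued.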
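Before these files go into the secret, it can help to confirm that both exist and look like PEM data. The sketch below is an assumption and does not come from the original. `check_pem_pair` is a hypothetical helper. It only looks for the PEM header lines and does not check that the key matches the certificate. For that, you could compare the output of `openssl x509 -noout -modulus -in dashboard.pem` with `openssl rsa -noout -modulus -in dashboard-key.pem`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: verify that dashboard.pem and dashboard-key.pem
# exist in the given directory and carry PEM headers.
check_pem_pair() {
  local dir="${1:-.}"
  # The certificate file must contain a PEM certificate block.
  grep -q -- '-----BEGIN CERTIFICATE-----' "$dir/dashboard.pem" 2>/dev/null || {
    echo "dashboard.pem missing or not a PEM certificate" >&2
    return 1
  }
  # Accept both PKCS#1 ("BEGIN RSA PRIVATE KEY") and PKCS#8 ("BEGIN PRIVATE KEY") headers.
  grep -q -- 'PRIVATE KEY-----' "$dir/dashboard-key.pem" 2>/dev/null || {
    echo "dashboard-key.pem missing or not a PEM private key" >&2
    return 1
  }
}

# Usage from /root/k8s/dashboard after running dashboard-cert.sh:
#   check_pem_pair . && echo "certificate files look fine"
```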

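Add two lines to the container args in dashboard-controller.yaml that name these two certificate files. Bare file names are enough here. The script put every file in the current directory into the kubernetes-dashboard-certs secret, and the Deployment mounts that secret at /certs, the Dashboard's certificate directory (see volumeMounts in the YAML listed later).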
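Instead of editing the file by hand, you can insert the two lines with sed. This is a sketch that assumes GNU sed, which supports `-i` and `\n` in the replacement. `add_tls_args` is a hypothetical helper. It copies the indentation of the `- --auto-generate-certificates` line, and it does nothing if the flags are already present, so running it twice is harmless.

```bash
#!/usr/bin/env bash
# Hypothetical helper (assumes GNU sed): add the two --tls-* flags right after
# "- --auto-generate-certificates" in a dashboard-controller.yaml.
add_tls_args() {
  local file="$1"
  # Already patched? Leave the file alone so the edit is idempotent.
  if grep -q -- '--tls-cert-file=' "$file"; then
    return 0
  fi
  # \1 captures the leading indentation of the matched list item so the new
  # items line up with it; & re-emits the matched line itself.
  sed -i 's/^\([[:space:]]*\)- --auto-generate-certificates$/&\n\1- --tls-key-file=dashboard-key.pem\n\1- --tls-cert-file=dashboard.pem/' "$file"
}

# Usage in /root/k8s/dashboard:
#   add_tls_args dashboard-controller.yaml
#   grep -n -- '--tls-' dashboard-controller.yaml
```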

Then redeploy it:

```
[root@mcwk8s03 dashboard]# kubectl apply -f dashboard-controller.yaml
Warning: kubectl apply should be used on resource created by either kubectl create --save-config or kubectl apply
serviceaccount/kubernetes-dashboard configured
Warning: kubectl apply should be used on resource created by either kubectl create --save-config or kubectl apply
deployment.apps/kubernetes-dashboard configured
[root@mcwk8s03 dashboard]#
```
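The container args changed, so `kubectl apply` starts a rolling update of the Deployment. The warnings only mean the objects were not first created with `kubectl apply`; apply then adds the missing last-applied-configuration annotation itself. Below is a minimal sketch that applies the manifest and then waits for the new pod. `redeploy_dashboard` is a hypothetical wrapper, and the 120s timeout is an arbitrary choice.

```bash
#!/usr/bin/env bash
# Hypothetical wrapper: re-apply the Dashboard manifest, then block until the
# Deployment's new pods are rolled out (or the timeout expires).
redeploy_dashboard() {
  local manifest="${1:-dashboard-controller.yaml}"
  kubectl apply -f "$manifest" || return 1
  kubectl -n kube-system rollout status deployment/kubernetes-dashboard --timeout=120s
}

# Usage in /root/k8s/dashboard:
#   redeploy_dashboard dashboard-controller.yaml
#   kubectl -n kube-system get pods -l k8s-app=kubernetes-dashboard
```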

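Looking again, you can now click through to view the certificate. Before this change the Dashboard could not be opened in Firefox at all: the browser stopped at its "not secure" warning page and offered no option to continue to the site.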

Accessing it in Firefox:

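The VIP does not need kube-apiserver certificate authentication of its own. The load balancer behind it forwards traffic at layer 4 (TCP), while HTTPS certificates belong to layer 7. The TLS session therefore passes through the load balancer untouched, between the client and the backend.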

Dashboard deployment configuration files

dashboard-controller.yaml already contains the two lines added for the self-signed certificate above.

```
[root@mcwk8s03 dashboard]# ls
dashboard-cert.sh         dashboard-controller.yaml  dashboard-csr.json  dashboard.pem        dashboard-secret.yaml   k8s-admin.yaml
dashboard-configmap.yaml  dashboard.csr              dashboard-key.pem   dashboard-rbac.yaml  dashboard-service.yaml
[root@mcwk8s03 dashboard]# for i in `ls *.yaml`;do echo "###########"$i && cat $i ;done
###########dashboard-configmap.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  labels:
    k8s-app: kubernetes-dashboard
    # Allows editing resource and makes sure it is created first.
    addonmanager.kubernetes.io/mode: EnsureExists
  name: kubernetes-dashboard-settings
  namespace: kube-system
###########dashboard-controller.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
    addonmanager.kubernetes.io/mode: Reconcile
  name: kubernetes-dashboard
  namespace: kube-system
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ''
        seccomp.security.alpha.kubernetes.io/pod: 'docker/default'
    spec:
      priorityClassName: system-cluster-critical
      containers:
      - name: kubernetes-dashboard
        image: siriuszg/kubernetes-dashboard-amd64:v1.8.3
        resources:
          limits:
            cpu: 100m
            memory: 300Mi
          requests:
            cpu: 50m
            memory: 100Mi
        ports:
        - containerPort: 8443
          protocol: TCP
        args:
          # PLATFORM-SPECIFIC ARGS HERE
          - --auto-generate-certificates
          - --tls-key-file=dashboard-key.pem
          - --tls-cert-file=dashboard.pem
        volumeMounts:
        - name: kubernetes-dashboard-certs
          mountPath: /certs
        - name: tmp-volume
          mountPath: /tmp
        livenessProbe:
          httpGet:
            scheme: HTTPS
            path: /
            port: 8443
          initialDelaySeconds: 30
          timeoutSeconds: 30
      volumes:
      - name: kubernetes-dashboard-certs
        secret:
          secretName: kubernetes-dashboard-certs
      - name: tmp-volume
        emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      tolerations:
      - key: "CriticalAddonsOnly"
        operator: "Exists"
###########dashboard-rbac.yaml
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
    addonmanager.kubernetes.io/mode: Reconcile
  name: kubernetes-dashboard-minimal
  namespace: kube-system
rules:
  # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
- apiGroups: [""]
  resources: ["secrets"]
  resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs"]
  verbs: ["get", "update", "delete"]
  # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
- apiGroups: [""]
  resources: ["configmaps"]
  resourceNames: ["kubernetes-dashboard-settings"]
  verbs: ["get", "update"]
  # Allow Dashboard to get metrics from heapster.
- apiGroups: [""]
  resources: ["services"]
  resourceNames: ["heapster"]
  verbs: ["proxy"]
- apiGroups: [""]
  resources: ["services/proxy"]
  resourceNames: ["heapster", "http:heapster:", "https:heapster:"]
  verbs: ["get"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: kubernetes-dashboard-minimal
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    addonmanager.kubernetes.io/mode: Reconcile
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard-minimal
subjects:
- kind: ServiceAccount
  name: kubernetes-dashboard
  namespace: kube-system
###########dashboard-secret.yaml
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
    # Allows editing resource and makes sure it is created first.
    addonmanager.kubernetes.io/mode: EnsureExists
  name: kubernetes-dashboard-certs
  namespace: kube-system
type: Opaque
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
    # Allows editing resource and makes sure it is created first.
    addonmanager.kubernetes.io/mode: EnsureExists
  name: kubernetes-dashboard-key-holder
  namespace: kube-system
type: Opaque
###########dashboard-service.yaml
apiVersion: v1
kind: Service
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  type: NodePort
  selector:
    k8s-app: kubernetes-dashboard
  ports:
  - port: 443
    targetPort: 8443
    nodePort: 30001
###########k8s-admin.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: dashboard-admin
  namespace: kube-system
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: dashboard-admin
subjects:
  - kind: ServiceAccount
    name: dashboard-admin
    namespace: kube-system
roleRef:
  kind: ClusterRole
  name: cluster-admin
  apiGroup: rbac.authorization.k8s.io
[root@mcwk8s03 dashboard]#
```
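k8s-admin.yaml creates the dashboard-admin ServiceAccount and binds it to cluster-admin. Its token is what you paste into the Dashboard login page when choosing token sign-in. The sketch below fetches that token. It assumes a cluster older than 1.24, where Kubernetes still auto-creates a `dashboard-admin-token-*` Secret for each ServiceAccount. `get_dashboard_token` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the decoded token of the dashboard-admin
# ServiceAccount. Assumes Kubernetes < 1.24, where a token Secret named
# dashboard-admin-token-<suffix> is created automatically.
get_dashboard_token() {
  local secret
  # "-o name" prints entries such as secret/dashboard-admin-token-abcde.
  secret=$(kubectl -n kube-system get secret -o name | grep 'dashboard-admin-token' | head -n 1)
  if [ -z "$secret" ]; then
    echo "no dashboard-admin token secret found in kube-system" >&2
    return 1
  fi
  # Secret data is base64-encoded; decode it to get the bearer token.
  kubectl -n kube-system get "$secret" -o jsonpath='{.data.token}' | base64 -d
}

# Usage (paste the output into the Dashboard's "Token" login field,
# then open https://<node-ip>:30001 as exposed by dashboard-service.yaml):
#   get_dashboard_token; echo
```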