A Python Newbie's Web Scraping Journey

1. Scraping the content of www.haha56.net/main/youmo

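import requests
import re

response = requests.get("http://www.haha56.net/main/youmo/")
response.encoding = "gb2312"  # decode the page as GB2312 so the Chinese text is not garbled
data = response.text
# print(data)

# Non-greedy (.*?) groups: the body of each joke and the text of each link
content_res = re.findall('<dd class="preview">(.*?)</dd>', data)
title_res = re.findall('<a href=".*?" target="_blank">(.*?)</a>', data)

# Keep matches 1 to 10 (the first match is skipped)
title_res = title_res[1:11]
content_res = content_res[1:11]

# zip pairs each title with its content and stops at the shorter list
for title, content in zip(title_res, content_res):
    print(title)
    print(content)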
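
Why the patterns use the non-greedy `(.*?)` is easy to see offline. The sketch below runs the same two regular expressions against a short, made-up HTML snippet (it only imitates the shape of the target markup and is not copied from haha56.net). It also shows that a greedy `(.*)` swallows everything up to the last closing tag:

```python
import re

# Made-up HTML fragment shaped like the markup the scraper targets
html = (
    '<a href="/a/1.html" target="_blank">Joke A</a>'
    '<dd class="preview">Text of joke A</dd>'
    '<a href="/a/2.html" target="_blank">Joke B</a>'
    '<dd class="preview">Text of joke B</dd>'
)

# Non-greedy: each (.*?) stops at the FIRST closing tag after it
titles = re.findall('<a href=".*?" target="_blank">(.*?)</a>', html)
contents = re.findall('<dd class="preview">(.*?)</dd>', html)

# Greedy: (.*) runs to the LAST </dd>, merging both jokes into one match
greedy = re.findall('<dd class="preview">(.*)</dd>', html)

pairs = list(zip(titles, contents))
for title, content in pairs:
    print(title, '->', content)
```

On a real page the text inside a tag may span several lines. `.` does not match a newline by default, so such entries would need `re.S` (`re.DOTALL`) passed as the flags argument of `re.findall`.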

2. Scraping the images on https://www.doutula.com/photo/list/

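import requests
import re

response = requests.get('https://www.doutula.com/photo/list/')
data = response.text

# Collect every image URL stored in a data-original attribute
img_res = re.findall('data-original="(.*?)"', data)

for i in img_res:
    img_response = requests.get(i)
    img_data = img_response.content  # raw bytes of the image
    img_name = i.split('/')[-1]      # last part of the URL becomes the file name
    # 'wb' because images are binary; the with-block closes the file after writing
    with open(img_name, 'wb') as f:
        f.write(img_data)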
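
`i.split('/')[-1]` works for plain URLs. It breaks, though, if a URL carries a query string (`…/abc.jpg?v=2` becomes the file name `abc.jpg?v=2`, which Windows rejects) or ends in `/` (an empty name). A more defensive sketch uses only the standard library. `filename_from_url` and `save_bytes` are hypothetical helper names, and the `images` folder and `image.bin` fallback are arbitrary choices:

```python
import os
import posixpath
from urllib.parse import urlsplit

def filename_from_url(url, fallback='image.bin'):
    """Return the last path segment of url, ignoring any query string or fragment."""
    path = urlsplit(url).path        # drops the '?...' and '#...' parts
    name = posixpath.basename(path)  # last segment of the URL path
    return name or fallback          # a URL ending in '/' has no file name

def save_bytes(data, url, folder='images'):
    """Write raw bytes to folder/<name derived from url>, creating folder if needed."""
    os.makedirs(folder, exist_ok=True)
    target = os.path.join(folder, filename_from_url(url))
    with open(target, 'wb') as f:    # binary mode for image data
        f.write(data)
    return target
```

Inside the loop above, `save_bytes(img_response.content, i)` would take the place of the last four lines.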

3. Word-frequency analysis of the content from www.haha56.net/main/youmo

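import jieba

# Read the saved text as GB2312; the with-block closes the file afterwards
with open(r'E:\实习\编程\实习\day07\2.txt', 'r', encoding='gb2312') as f:
    data = f.read()

data_jieba = jieba.lcut(data)  # segment the Chinese text into a list of words

word_count = {}  # word -> number of occurrences
for word in data_jieba:
    if len(word) == 1:  # skip single characters and punctuation
        continue
    if word in {"一二", "货在", "一家", "猛吃", "时说", "没带", "一顿"}:  # hand-picked noise words
        continue
    if word in word_count:
        word_count[word] += 1
    else:
        word_count[word] = 1

def func(i):
    return i[1]  # sort each (word, count) pair by its count

data_list = list(word_count.items())
data_list.sort(key=func, reverse=True)  # most frequent words first

# Print the top 10: word centered in 6 columns, count centered in 5
for i in data_list[0:10]:
    print(f'{i[0]:^6}{i[1]:^5}')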

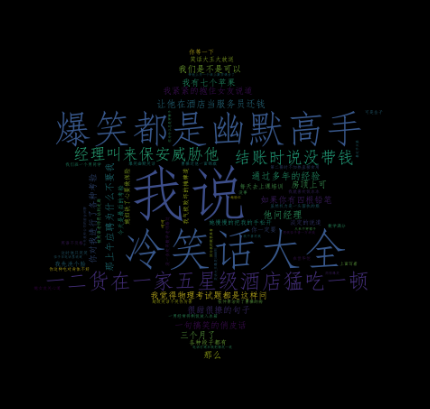
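
The hand-written dictionary plus `sort(key=..., reverse=True)` can be written more compactly with the standard library's `collections.Counter`. Its `most_common(n)` method returns the `n` highest counts in descending order. In the sketch below, `top_words` is a hypothetical helper name, and the token list is a hand-made stand-in for `jieba.lcut` output so the example runs without jieba:

```python
from collections import Counter

def top_words(words, stopwords=frozenset(), n=10):
    """Count multi-character words, skipping stopwords; return the n most common."""
    counts = Counter(w for w in words if len(w) > 1 and w not in stopwords)
    return counts.most_common(n)  # list of (word, count), highest count first

# Stand-in for jieba.lcut(data): an already-segmented token list
tokens = ['笑话', '，', '老王', '笑话', '吃饭', '老王', '笑话', '一顿']
for word, count in top_words(tokens, stopwords={'一顿'}, n=3):
    print(f'{word:^6}{count:^5}')
```

The filtering rules match the loop above: single characters such as `，` are dropped, and so are the listed noise words.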

4. Making a word cloud from the content of www.haha56.net/main/youmo

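# coding=gbk
import wordcloud
from imageio import imread

# Shape image: pure-white areas stay empty, and its size overrides width/height
mask = imread(r'E:\实习\编程\实习\day07\3.png')

# Read the same GB2312 text file used in the word-frequency step
with open(r'E:\实习\编程\实习\day07\2.txt', encoding='gb2312') as f:
    data = f.read()

# font_path must point to a font with Chinese glyphs (here FangSong), otherwise words render as boxes
w = wordcloud.WordCloud(font_path=r'C:\Windows\Fonts\simfang.ttf', mask=mask, width=700, height=700, background_color="black")
w.generate(data)
w.to_file('outfile.png')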
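
WordCloud's default tokenizer is built for space-separated languages. When it is fed raw Chinese text, whole runs of characters between punctuation marks end up as single "words". One common workaround is to segment the text with jieba first. This is an assumption on my part, not what the code above does. The sketch below prepares both inputs WordCloud accepts: a space-joined string for `generate()` and a frequency dict for `generate_from_frequencies()`. `to_wordcloud_input` is a hypothetical helper, and the token list stands in for `jieba.lcut(data)`:

```python
from collections import Counter

def to_wordcloud_input(tokens, stopwords=frozenset()):
    """Turn segmented words into (space-joined text, frequency dict).

    The text suits WordCloud.generate(); the dict suits
    WordCloud.generate_from_frequencies().
    """
    # strip() so whitespace tokens such as '\r\n' are dropped too
    kept = [t for t in tokens if len(t.strip()) > 1 and t not in stopwords]
    return ' '.join(kept), dict(Counter(kept))

# Stand-in for jieba.lcut(data)
tokens = ['老王', '去', '饭店', '吃饭', '，', '老王', '没带', '钱']
text, freqs = to_wordcloud_input(tokens, stopwords={'没带'})
print(text)
print(freqs)
```

With jieba and wordcloud installed, `w.generate(text)` or `w.generate_from_frequencies(freqs)` would replace `w.generate(data)`. If word pairs show up as two-word phrases, the `collocations=False` argument to `WordCloud` turns that off.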

Having chosen the distant road, all that is left is to press on through wind and rain.