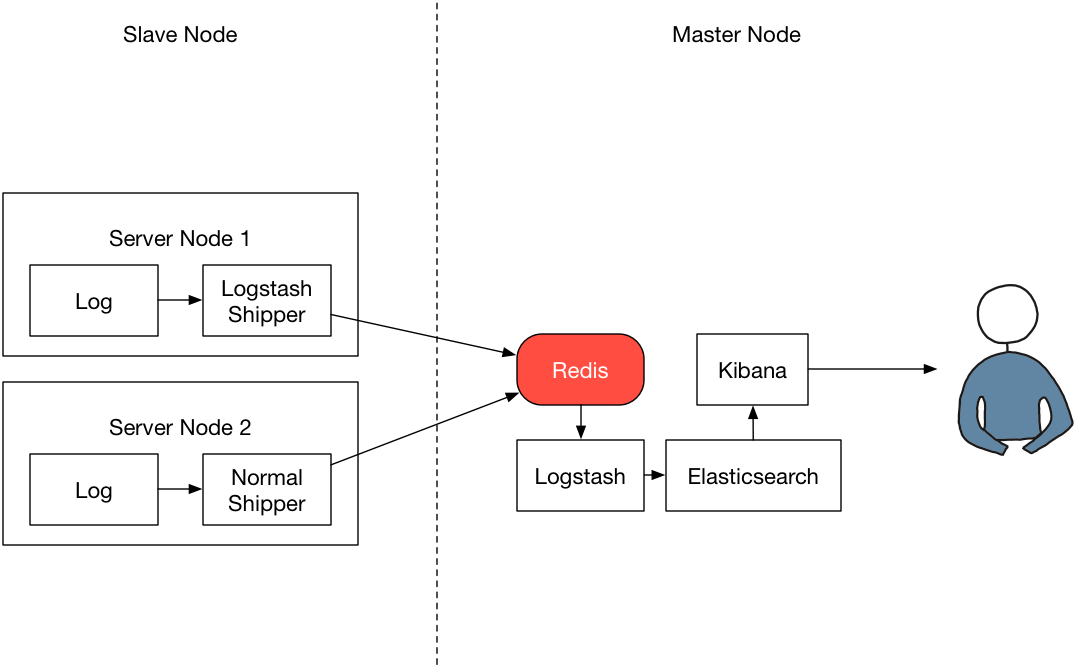

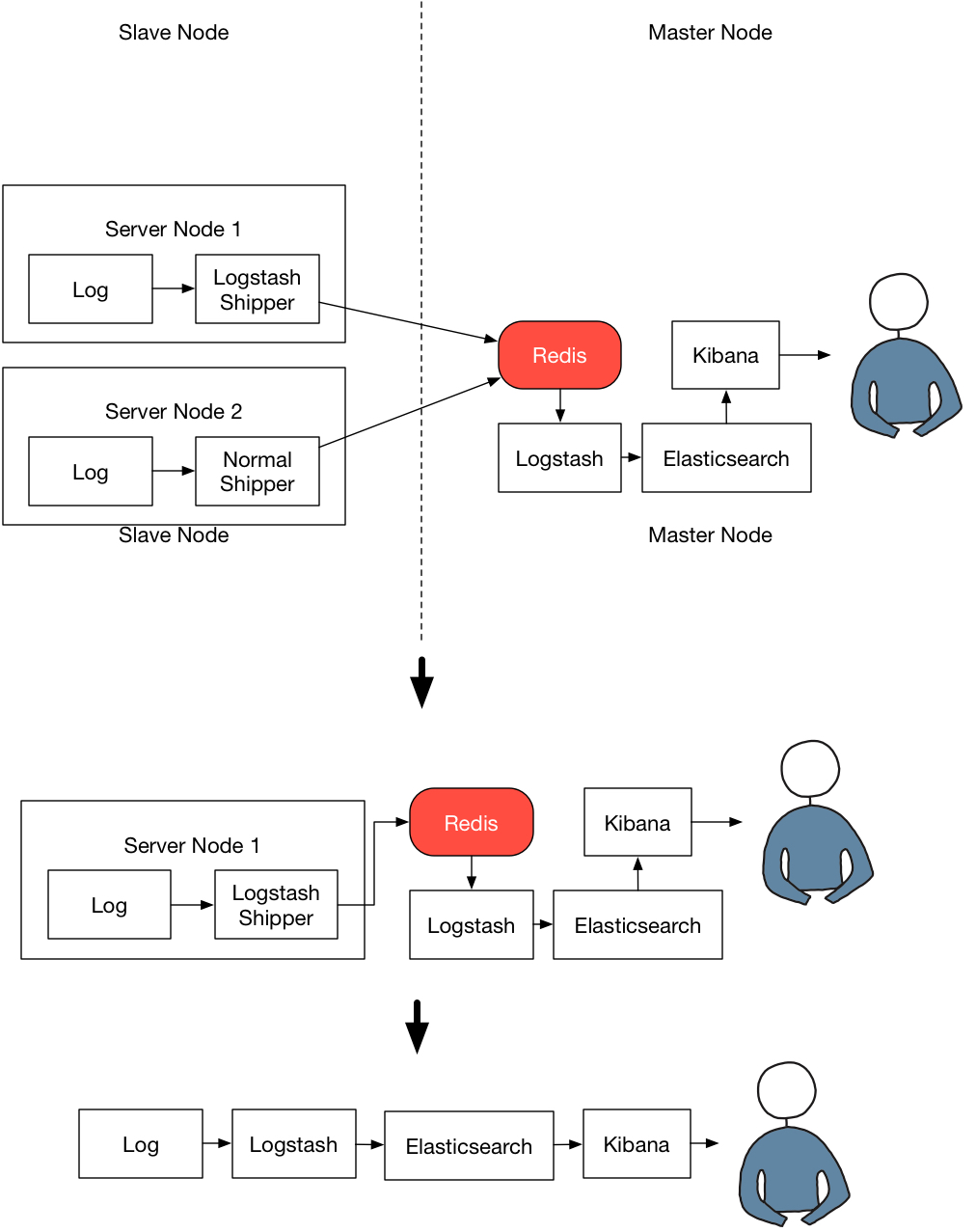

ELKR (Elasticsearch + Logstash + Kibana + Redis): a complete technology stack for Nginx log analysis

- Basic concepts of the ELK Stack

- Installing the ELK components

Elasticsearch handles log search and analysis, Logstash processes and formats the logs, and Kibana is the front end for data visualization.

Install Redis

tom@ubuntu:~$ java -version

java version "1.8.0_171"

Java(TM) SE Runtime Environment (build 1.8.0_171-b11)

Java HotSpot(TM) 64-Bit Server VM (build 25.171-b11, mixed mode)

sudo apt -y update

sudo apt -y install redis-server

tom@ubuntu:~/Desktop/2$ redis-server -v

Redis server v=3.0.6 sha=00000000:0 malloc=jemalloc-3.6.0 bits=64 build=7785291a3d2152db

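tom@ubuntu:~/Desktop/2$ ss -ntlp|grep redis

(No output: without root privileges, ss cannot show the process name of a service running as another user, so filter by port instead.)

tom@ubuntu:~/Desktop/2$ ss -ntlp|grep 6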

LISTEN 0 5 127.0.0.1:631 *:*

LISTEN 0 128 127.0.0.1:6379 *:*

LISTEN 0 5 ::1:631

tom@ubuntu:~/Desktop/2$ sudo service redis-server restart

tom@ubuntu:~/Desktop/2$ ss -ntlp|grep 6379

LISTEN 0 128 127.0.0.1:6379 *:*
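`grep 6` matches any line that merely contains a 6, which is why port 631 shows up as well. A stricter check matches the port column exactly. The Python sketch below assumes the column layout shown above: state, Recv-Q, Send-Q, local address:port, then peer address:port. `listening_addresses` is a hypothetical helper name.

```python
from __future__ import annotations


def listening_addresses(ss_output: str, port: int) -> list[str]:
    """Return the local addresses that `ss -ntl` reports as listening on `port`."""
    addresses = []
    for line in ss_output.splitlines():
        fields = line.split()
        # Expected columns: State Recv-Q Send-Q Local-Address:Port Peer-Address:Port ...
        if len(fields) < 4 or fields[0] != "LISTEN":
            continue
        # rpartition splits on the LAST colon, so IPv6 addresses like ::1:631 still work
        host, _, port_str = fields[3].rpartition(":")
        if port_str == str(port):
            addresses.append(host)
    return addresses
```

For example, pass it the output of `subprocess.run(["ss", "-ntl"], capture_output=True, text=True).stdout`. For the output shown above, port 6379 yields `['127.0.0.1']`, which means Redis only accepts local connections.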

ELK

Installing ELK with apt (easy to manage)

Installing the software with apt-get makes it easy to manage the services' running state. The steps are:

- Import the Elastic PGP key:

wget -qO - https://artifacts.elastic.co/GPG-KEY-elasticsearch | sudo apt-key add -

- Add the official repository:

sudo apt-get install apt-transport-https

echo "deb https://artifacts.elastic.co/packages/7.x/apt stable main" | sudo tee -a /etc/apt/sources.list.d/elastic-7.x.list # add the official apt repository

- Install the ELK stack:

sudo apt-get update

sudo apt-get install elasticsearch # install elasticsearch

sudo apt-get install kibana # install kibana

sudo apt-get install logstash # install logstash

These steps complete the deployment of the ELK Stack environment.

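Installing ELK with dpkg (similar to apt)

Because of network conditions in China, installing the three packages with apt-get can be very slow. Instead, you can download the .deb packages with wget and install them with dpkg.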

Install elasticsearch:

wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-7.4.1-amd64.deb

sudo dpkg -i elasticsearch-7.4.1-amd64.deb

Install kibana:

wget https://artifacts.elastic.co/downloads/kibana/kibana-7.4.1-amd64.deb

sudo dpkg -i kibana-7.4.1-amd64.deb

Install logstash:

wget https://artifacts.elastic.co/downloads/logstash/logstash-7.4.1.deb

sudo dpkg -i logstash-7.4.1.deb

Installing ELK from tar archives (best for beginners)

If downloads are slow, you can also use the mirror we provide:

wget https://downloadfiles.oss-cn-hangzhou.aliyuncs.com/courses/aliyun/elk/elasticsearch-7.4.1-linux-x86_64.tar.gz # elasticsearch

wget https://downloadfiles.oss-cn-hangzhou.aliyuncs.com/courses/aliyun/elk/kibana-7.4.1-linux-x86_64.tar.gz # kibana

wget https://downloadfiles.oss-cn-hangzhou.aliyuncs.com/courses/aliyun/elk/logstash-7.4.1.tar.gz # logstash

Install elasticsearch:

curl -L -O https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-7.4.1-linux-x86_64.tar.gz

tar -xvf elasticsearch-7.4.1-linux-x86_64.tar.gz

Install kibana:

wget https://artifacts.elastic.co/downloads/kibana/kibana-7.4.1-linux-x86_64.tar.gz

shasum -a 512 kibana-7.4.1-linux-x86_64.tar.gz

tar -xzf kibana-7.4.1-linux-x86_64.tar.gz

Install logstash:

curl -L -O https://artifacts.elastic.co/downloads/logstash/logstash-7.4.1.tar.gz

tar -xzf logstash-7.4.1.tar.gz
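The `shasum -a 512` step only prints a digest. You still have to compare it with the checksum Elastic publishes for the archive; for 7.x downloads this is typically a `.sha512` file stored next to the archive. Here is a minimal Python sketch of that comparison. `sha512_of` and `verify` are hypothetical helper names, and the checksum line is assumed to be in `shasum` format (`<hex>  <filename>`).

```python
import hashlib


def sha512_of(path: str, chunk_size: int = 1 << 20) -> str:
    """Hex SHA-512 digest of a file, read in 1 MiB chunks to keep memory use low."""
    digest = hashlib.sha512()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify(path: str, checksum_line: str) -> bool:
    """Compare a file with a line such as '<hex>  kibana-7.4.1-linux-x86_64.tar.gz'."""
    expected = checksum_line.split()[0].lower()
    return sha512_of(path) == expected
```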

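Starting the Elasticsearch service with the default configuration failed with the following errors (the journal lines are truncated):

Feb 14 05:27:38 ubuntu systemd-entrypoint[17977]: ERROR: [1] bootstrap checks fa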

Feb 14 05:27:38 ubuntu systemd-entrypoint[17977]: [1]: the default discovery set

Feb 14 05:27:38 ubuntu systemd-entrypoint[17977]: ERROR: Elasticsearch did not e

Feb 14 05:27:38 ubuntu systemd[1]: elasticsearch.service: Main process exited, c

Feb 14 05:27:38 ubuntu systemd[1]: Failed to start Elasticsearch.

-- Subject: Unit elasticsearch.service has failed

Reference for the fix: https://blog.csdn.net/wd2014610/article/details/89532638

Allowing external access to Elasticsearch

sudo vim /etc/elasticsearch/elasticsearch.yml

network.host: 0.0.0.0

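node.name: node-1

cluster.initial_master_nodes: ["node-1"]

The names in cluster.initial_master_nodes must match node.name values, so on a single machine list only this node. Alternatively, a single-node setup can set discovery.type: single-node instead of cluster.initial_master_nodes.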

sudo vim /etc/sysctl.conf

vm.max_map_count=655360

sudo sysctl -p
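Elasticsearch's bootstrap checks require vm.max_map_count to be at least 262144 on Linux. The common kernel default of 65530 fails this check, while the 655360 set above passes. Here is a small Python sketch that reads the live value after `sysctl -p`; the helper names are hypothetical.

```python
from pathlib import Path

# Minimum value demanded by Elasticsearch's bootstrap check on Linux.
ES_MIN_MAX_MAP_COUNT = 262144


def read_max_map_count(proc_file: str = "/proc/sys/vm/max_map_count") -> int:
    """Read the current kernel setting (the value `sysctl -p` just applied)."""
    return int(Path(proc_file).read_text().strip())


def max_map_count_ok(value: int) -> bool:
    """True if the value satisfies the Elasticsearch bootstrap check."""
    return value >= ES_MIN_MAX_MAP_COUNT
```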

tom@ubuntu:~/Desktop/2$ ps -ef|grep -v grep|grep elasticsearch

elastic+ 18244 1 18 05:30 ? 00:00:28 /usr/share/elasticsearch/jdk/bin/java -Xshare:auto -Des.networkaddress.cache.ttl=60 -Des.networkaddress.cache.negative.ttl=10 -XX:+AlwaysPreTouch -Xss1m -Djava.awt.headless=true -Dfile.encoding=UTF-8 -Djna.nosys=true -XX:-OmitStackTraceInFastThrow -XX:+ShowCodeDetailsInExceptionMessages -Dio.netty.noUnsafe=true -Dio.netty.noKeySetOptimization=true -Dio.netty.recycler.maxCapacityPerThread=0 -Dio.netty.allocator.numDirectArenas=0 -Dlog4j.shutdownHookEnabled=false -Dlog4j2.disable.jmx=true -Djava.locale.providers=SPI,COMPAT -XX:+UseG1GC -Djava.io.tmpdir=/tmp/elasticsearch-1767355033344325245 -XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=/var/lib/elasticsearch -XX:ErrorFile=/var/log/elasticsearch/hs_err_pid%p.log -Xlog:gc*,gc+age=trace,safepoint:file=/var/log/elasticsearch/gc.log:utctime,pid,tags:filecount=32,filesize=64m -Xms3976m -Xmx3976m -XX:MaxDirectMemorySize=2084569088 -XX:G1HeapRegionSize=4m -XX:InitiatingHeapOccupancyPercent=30 -XX:G1ReservePercent=15 -Des.path.home=/usr/share/elasticsearch -Des.path.conf=/etc/elasticsearch -Des.distribution.flavor=default -Des.distribution.type=deb -Des.bundled_jdk=true -cp /usr/share/elasticsearch/lib/* org.elasticsearch.bootstrap.Elasticsearch -p /var/run/elasticsearch/elasticsearch.pid --quiet

elastic+ 18436 18244 0 05:30 ? 00:00:00 /usr/share/elasticsearch/modules/x-pack-ml/platform/linux-x86_64/bin/controller
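A running java process does not yet prove that the node answers HTTP requests. The following Python check assumes Elasticsearch is on the default port 9200 with no security enabled, which is the same assumption the `hosts => ["localhost:9200"]` setting makes later in this article. `cluster_health` is a hypothetical helper that reads the `status` field of the `GET /_cluster/health` API.

```python
import json
import urllib.request


def cluster_health(es_url: str = "http://localhost:9200", timeout: float = 5.0) -> str:
    """Return "green", "yellow" or "red" from Elasticsearch's GET /_cluster/health."""
    with urllib.request.urlopen(f"{es_url}/_cluster/health", timeout=timeout) as resp:
        return json.load(resp)["status"]
```

On a single node, "yellow" is expected once an index with replicas exists, because a replica shard is never allocated on the same node as its primary.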

tom@ubuntu:~/Desktop/2$ sudo service kibana start

tom@ubuntu:~/Desktop/2$ sudo service kibana status

● kibana.service - Kibana

Loaded: loaded (/etc/systemd/system/kibana.service; disabled; vendor preset:

Active: active (running) since Sun 2021-02-14 05:34:01 PST; 5s ago

Docs: https://www.elastic.co

Main PID: 18527 (node)

CGroup: /system.slice/kibana.service

└─18527 /usr/share/kibana/bin/../node/bin/node /usr/share/kibana/bin/

Feb 14 05:34:01 ubuntu systemd[1]: Started Kibana.

tom@ubuntu:~/Desktop/2$ ps -ef|grep -v grep |grep kibana

kibana 18527 1 69 05:34 ? 00:00:11 /usr/share/kibana/bin/../node/bin/node /usr/share/kibana/bin/../src/cli/dist --logging.dest=/var/log/kibana/kibana.log --pid.file=/run/kibana/kibana.pid

tom@ubuntu:~/Desktop/2$ ss -ntlp|grep 5601

LISTEN 0 128 127.0.0.1:5601 *:*

Allowing external access to Kibana

sudo vim /etc/kibana/kibana.yml

# To allow connections from remote users, set this parameter to a non-loopback address.

server.host: "0.0.0.0"

Logstash configuration

Add Logstash to the PATH by appending the following lines to /etc/profile:

# logstash

export LS_HOME=/usr/share/logstash

export PATH=$PATH:$LS_HOME/bin

tom@ubuntu:~/Desktop/2$ logstash -e 'input { stdin { } } output { stdout {} }'

Using JAVA_HOME defined java: /home/tom/bin/jdk1.6.0_45

WARNING, using JAVA_HOME while Logstash distribution comes with a bundled JDK

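To set JAVA_HOME explicitly, edit logstash.lib.sh in /usr/share/logstash/bin:

sudo vim logstash.lib.sh

and add the JAVA_HOME lines after unset CDPATH: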

unset CDPATH

export JAVA_HOME=/home/tom/bin/jdk1.6.0_45

export JRE_HOME=${JAVA_HOME}/jre

export PATH=$PATH:${JAVA_HOME}/bin

tom@ubuntu:/usr/share/logstash/bin$ sudo service logstash stop

tom@ubuntu:/usr/share/logstash/bin$ sudo service logstash start

WARNING, using JAVA_HOME while Logstash distribution comes with a bundled JDK

Reference: https://www.cnblogs.com/sanduzxcvbnm/p/11416138.html

[FATAL] 2021-02-14 08:58:52.670 [main] runner - An unexpected error occurred! {:error=>#<ArgumentError: Path "/usr/share/logstash/data" must be a writable directory. It is not writable.>, :backtrace=>["/usr/share/logstash/logstash-core/lib/logstash/settings.rb:530:in `validate'", "/usr/share/logstash/logstash-core/lib/logstash/settings.rb:290:in `validate_value'", "/usr/share/logstash/logstash-core/lib/logstash/settings.rb:201:in `block in validate_all'", "org/jruby/RubyHash.java:1415:in `each'", "/usr/share/logstash/logstash-core/lib/logstash/settings.rb:200:in `validate_all'", "/usr/share/logstash/logstash-core/lib/logstash/runner.rb:317:in `execute'", "/usr/share/logstash/vendor/bundle/jruby/2.5.0/gems/clamp-0.6.5/lib/clamp/command.rb:67:in `run'", "/usr/share/logstash/logstash-core/lib/logstash/runner.rb:273:in `run'", "/usr/share/logstash/vendor/bundle/jruby/2.5.0/gems/clamp-0.6.5/lib/clamp/command.rb:132:in `run'", "/usr/share/logstash/lib/bootstrap/environment.rb:88:in `<main>'"]}

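The key part of the error is: Path "/usr/share/logstash/data" must be a writable directory. It is not writable. Fix it by giving the current user ownership of the Logstash directory:

sudo chown -R tom /usr/share/logstash # tom is the currently logged-in user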

tom@ubuntu:/usr/share/logstash$ logstash -e 'input { stdin { } } output { stdout {} }'

Using JAVA_HOME defined java: /home/tom/bin/jdk1.6.0_45

WARNING, using JAVA_HOME while Logstash distribution comes with a bundled JDK

WARNING: Could not find logstash.yml which is typically located in $LS_HOME/config or /etc/logstash. You can specify the path using --path.settings. Continuing using the defaults

Could not find log4j2 configuration at path /usr/share/logstash/config/log4j2.properties. Using default config which logs errors to the console

[INFO ] 2021-02-14 09:25:19.960 [main] runner - Starting Logstash {"logstash.version"=>"7.11.0", "jruby.version"=>"jruby 9.2.13.0 (2.5.7) 2020-08-03 9a89c94bcc Java HotSpot(TM) 64-Bit Server VM 25.171-b11 on 1.8.0_171-b11 +indy +jit [linux-x86_64]"}

[WARN ] 2021-02-14 09:25:20.561 [LogStash::Runner] multilocal - Ignoring the 'pipelines.yml' file because modules or command line options are specified

[INFO ] 2021-02-14 09:25:20.615 [LogStash::Runner] agent - No persistent UUID file found. Generating new UUID {:uuid=>"5a02fec5-9d77-4a4c-963c-eede218ac4d0", :path=>"/usr/share/logstash/data/uuid"}

[INFO ] 2021-02-14 09:25:23.399 [Converge PipelineAction::Create<main>] Reflections - Reflections took 65 ms to scan 1 urls, producing 23 keys and 47 values

[INFO ] 2021-02-14 09:25:25.356 [[main]-pipeline-manager] javapipeline - Starting pipeline {:pipeline_id=>"main", "pipeline.workers"=>4, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>50, "pipeline.max_inflight"=>500, "pipeline.sources"=>["config string"], :thread=>"#<Thread:0x48151297 run>"}

[INFO ] 2021-02-14 09:25:26.483 [[main]-pipeline-manager] javapipeline - Pipeline Java execution initialization time {"seconds"=>1.12}

[INFO ] 2021-02-14 09:25:26.579 [[main]-pipeline-manager] javapipeline - Pipeline started {"pipeline.id"=>"main"}

The stdin plugin is now waiting for input:

[INFO ] 2021-02-14 09:25:26.748 [Agent thread] agent - Pipelines running {:count=>1, :running_pipelines=>[:main], :non_running_pipelines=>[]}

[INFO ] 2021-02-14 09:25:27.030 [Api Webserver] agent - Successfully started Logstash API endpoint {:port=>9601}

sdff

{

"host" => "ubuntu",

"@version" => "1",

"@timestamp" => 2021-02-14T17:25:37.361Z,

"message" => "sdff"

}

helo

{

"host" => "ubuntu",

"@version" => "1",

"@timestamp" => 2021-02-14T17:25:41.882Z,

"message" => "helo"

}

Logstash and Elasticsearch

Next we configure Logstash's input and output to connect Logstash to Elasticsearch. Logstash only normalizes the log format; the actual analysis happens in Elasticsearch.

- Basic Logstash configuration

- Configuring Logstash to connect to Elasticsearch

Build Nginx from source (download page: http://nginx.org/en/download.html):

wget http://nginx.org/download/nginx-1.19.6.tar.gz

./configure --prefix=/usr/local/nginx --with-openssl=/home/tom/Desktop/1/openssl-fips-2.0.16

make

sudo make install

OpenSSL source code, which the --with-openssl option above expects as a source directory: https://www.openssl.org/source/

./configure

make

make install

cp conf/nginx.conf '/usr/local/nginx/conf/nginx.conf.default'

test -d '/usr/local/nginx/logs' \

|| mkdir -p '/usr/local/nginx/logs'

test -d '/usr/local/nginx/logs' \

|| mkdir -p '/usr/local/nginx/logs'

test -d '/usr/local/nginx/html' \

|| cp -R html '/usr/local/nginx'

test -d '/usr/local/nginx/logs' \

|| mkdir -p '/usr/local/nginx/logs'

make[1]: Leaving directory `/home/tom/Desktop/1/nginx-1.19.6'

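Edit nginx.conf (in /usr/local/nginx/conf for the prefix used above) and set the access_log path inside the server block:

vim nginx.conf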

server {

listen 80;

server_name localhost;

#charset koi8-r;

#access_log logs/host.access.log main;

access_log /home/tom/Desktop/2/elk/access.log;

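This access_log line sets where Nginx writes its access log. Because no format name is given, Nginx uses its predefined combined log format.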

tom@ubuntu:~/Desktop/2/elk$ cat access.log

192.168.154.1 - - [15/Feb/2021:00:02:50 -0800] "GET / HTTP/1.1" 200 612 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/87.0.4280.88 Safari/537.36"

192.168.154.1 - - [15/Feb/2021:00:02:50 -0800] "GET /favicon.ico HTTP/1.1" 404 555 "http://192.168.154.131/" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/87.0.4280.88 Safari/537.36"
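Each line above follows Nginx's combined format: `$remote_addr - $remote_user [$time_local] "$request" $status $body_bytes_sent "$http_referer" "$http_user_agent"`. The Logstash configs in this article have no filter section, so Elasticsearch only sees the raw `message`. This Python sketch shows roughly which fields a parsing filter such as grok could extract. `parse_combined` and the regex are hypothetical and are not Logstash's own pattern.

```python
import re
from datetime import datetime

# Hypothetical regex for Nginx's predefined "combined" log format.
COMBINED = re.compile(
    r'(?P<remote_addr>\S+) - (?P<remote_user>\S+) \[(?P<time_local>[^\]]+)\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) (?P<body_bytes_sent>\d+) '
    r'"(?P<http_referer>[^"]*)" "(?P<http_user_agent>[^"]*)"'
)


def parse_combined(line: str) -> dict:
    """Split one combined-format access-log line into named fields."""
    m = COMBINED.match(line)
    if m is None:
        raise ValueError(f"not a combined-format line: {line!r}")
    entry = m.groupdict()
    entry["status"] = int(entry["status"])
    entry["body_bytes_sent"] = int(entry["body_bytes_sent"])
    # $time_local looks like 15/Feb/2021:00:02:50 -0800
    entry["time"] = datetime.strptime(entry["time_local"], "%d/%b/%Y:%H:%M:%S %z")
    return entry
```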

A Logstash configuration has three main sections: input, filter, and output.

tom@ubuntu:~/Desktop/2/elk$ logstash -e 'input { stdin { } } output { stdout {codec=>json} }'

Using JAVA_HOME defined java: /home/tom/bin/jdk1.6.0_45

WARNING, using JAVA_HOME while Logstash distribution comes with a bundled JDK

'input { stdin { } } output { stdout {codec=>json} }'

Written out over several lines, the configuration string is:

input {

stdin {}

}

output {

stdout { codec => json }

}

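stdin {} means standard input and stdout {} means standard output. This string defines two Logstash stages, input and output.

In input we define a standard input with no options, so Logstash reads strings from the terminal's standard input. This is why the command waited for input after it started.

In output we define a standard output, so Logstash writes each processed event to standard output (the terminal). The codec field specifies the output format. Here it is json, which is why the events come out as JSON.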
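To make the difference between the default rubydebug output and `codec => json` concrete, here is a hypothetical Python sketch. It builds an event with the same four fields seen earlier (`host`, `@version`, `@timestamp`, `message`) and serializes it as compact JSON. It only imitates the shape of the output; the field order and exact formatting that Logstash emits may differ.

```python
from __future__ import annotations

import json
import socket
from datetime import datetime, timezone


def make_event(message: str, host: str | None = None, now: datetime | None = None) -> dict:
    """Build an event with the four fields the stdin input produced in the run above."""
    ts = (now or datetime.now(timezone.utc)).astimezone(timezone.utc)
    return {
        "host": host or socket.gethostname(),
        "@version": "1",
        # UTC with millisecond precision, like 2021-02-14T17:25:37.361Z above
        "@timestamp": ts.strftime("%Y-%m-%dT%H:%M:%S.") + f"{ts.microsecond // 1000:03d}Z",
        "message": message,
    }


def encode_json(event: dict) -> str:
    """Serialize the event as one compact JSON object (a rough imitation of the json codec)."""
    return json.dumps(event, separators=(",", ":"))
```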

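Configuring Log -> Logstash -> Elasticsearch

In the end, installing Logstash from the tar archive worked better.

Reference: https://blog.csdn.net/weixin_41601114/article/details/106470966

Location of the configuration file: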

tom@ubuntu:~/Desktop/2/elk$ cd /etc/logstash/conf.d/

tom@ubuntu:/etc/logstash/conf.d$ sudo touch logstash-shipper.conf

Write the configuration file:

tom@ubuntu:/etc/logstash/conf.d$ sudo vim logstash-shipper.conf

tom@ubuntu:/etc/logstash$ cat conf.d/logstash-shipper.conf

input {

stdin {}

file {

path => "/home/tom/Desktop/2/elk/access.log"

start_position => "beginning"

codec => multiline {

negate => true

pattern => '^\d'

what => 'previous'

}

}

}

output {

stdout {

codec => rubydebug

}

elasticsearch {

hosts => ["localhost:9200"]

index => "logstash-%{+YYYY.MM.dd}"

}

}
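The multiline codec settings work as follows. A line that does not (`negate => true`) start with a digit (`pattern => '^\d'`) is appended to the previous event (`what => 'previous'`). Every Nginx access-log line starts with the client IP, so each line normally stays a separate event. Here is a simplified Python sketch of that merge rule; `merge_multiline` is a hypothetical name. Unlike the real codec, it emits the last event immediately. Logstash holds that event until the next line arrives or `auto_flush_interval` expires.

```python
from __future__ import annotations

import re
from typing import Iterable, Iterator


def merge_multiline(lines: Iterable[str], pattern: str = r"^\d", negate: bool = True) -> Iterator[str]:
    """Join continuation lines onto the previous event (the what => 'previous' rule)."""
    regex = re.compile(pattern)
    buffer: list[str] = []
    for line in lines:
        matched = regex.search(line) is not None
        # With negate=True, a line that does NOT match the pattern is a continuation.
        continues_previous = matched != negate
        if continues_previous and buffer:
            buffer.append(line)
        else:
            if buffer:
                yield "\n".join(buffer)
            buffer = [line]
    if buffer:  # the real codec would wait for more input before emitting this one
        yield "\n".join(buffer)
```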

Check the configuration file:

tom@ubuntu:/etc/logstash/conf.d$ logstash -f logstash-shipper.conf --config.test_and_exit

tom@ubuntu:~/Desktop/1/logstash-7.4.1/bin$ ./logstash -f ../config/logstash-shipper.conf --config.test_and_exit

Thread.exclusive is deprecated, use Thread::Mutex

Sending Logstash logs to /home/tom/Desktop/1/logstash-7.4.1/logs which is now configured via log4j2.properties

[2021-02-15T08:49:41,721][WARN ][logstash.config.source.multilocal] Ignoring the 'pipelines.yml' file because modules or command line options are specified

[2021-02-15T08:49:43,112][INFO ][org.reflections.Reflections] Reflections took 42 ms to scan 1 urls, producing 20 keys and 40 values

[2021-02-15T08:49:43,522][ERROR][logstash.codecs.multiline] Unknown setting 'wath' for multiline

[2021-02-15T08:49:43,535][FATAL][logstash.runner ] The given configuration is invalid. Reason: Unable to configure plugins: (ConfigurationError) Something is wrong with your configuration.

[2021-02-15T08:49:43,547][ERROR][org.logstash.Logstash ] java.lang.IllegalStateException: Logstash stopped processing because of an error: (SystemExit) exit

The error Unknown setting 'wath' for multiline means the option name was misspelled: wath should be what.

tom@ubuntu:~/Desktop/1/logstash-7.4.1/bin$ ./logstash -f ../config/logstash-shipper.conf --config.test_and_exit

Thread.exclusive is deprecated, use Thread::Mutex

Sending Logstash logs to /home/tom/Desktop/1/logstash-7.4.1/logs which is now configured via log4j2.properties

[2021-02-15T08:52:11,402][WARN ][logstash.config.source.multilocal] Ignoring the 'pipelines.yml' file because modules or command line options are specified

[2021-02-15T08:52:12,802][INFO ][org.reflections.Reflections] Reflections took 48 ms to scan 1 urls, producing 20 keys and 40 values

Configuration OK

[2021-02-15T08:52:15,192][INFO ][logstash.runner ] Using config.test_and_exit mode. Config Validation Result: OK. Exiting Logstash

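In short, Logstash configuration syntax comes down to input, filter, and output.

Logstash is the stage that data passes through before entering Elasticsearch. This also makes it one of the key points for filtering and analyzing data.

Further reading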

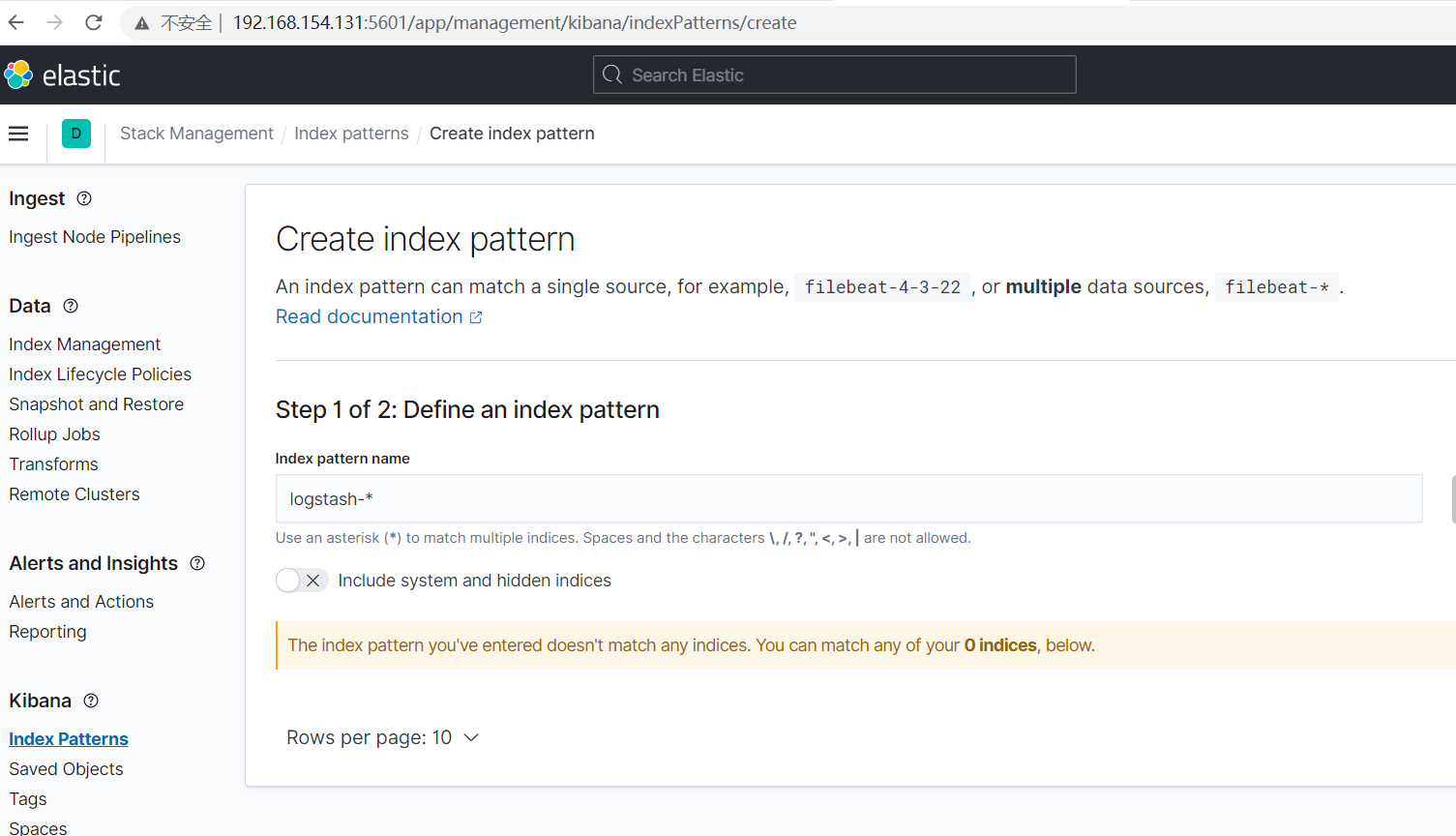

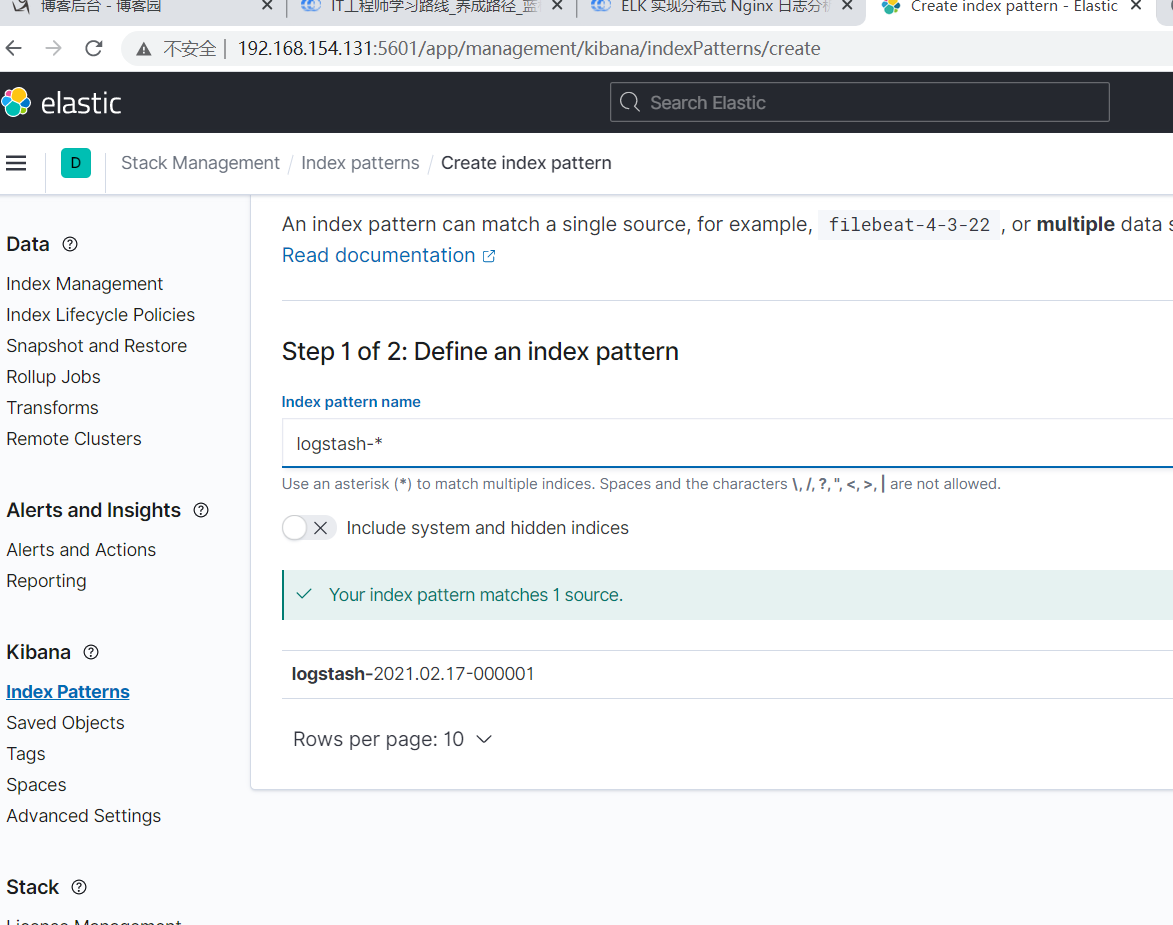

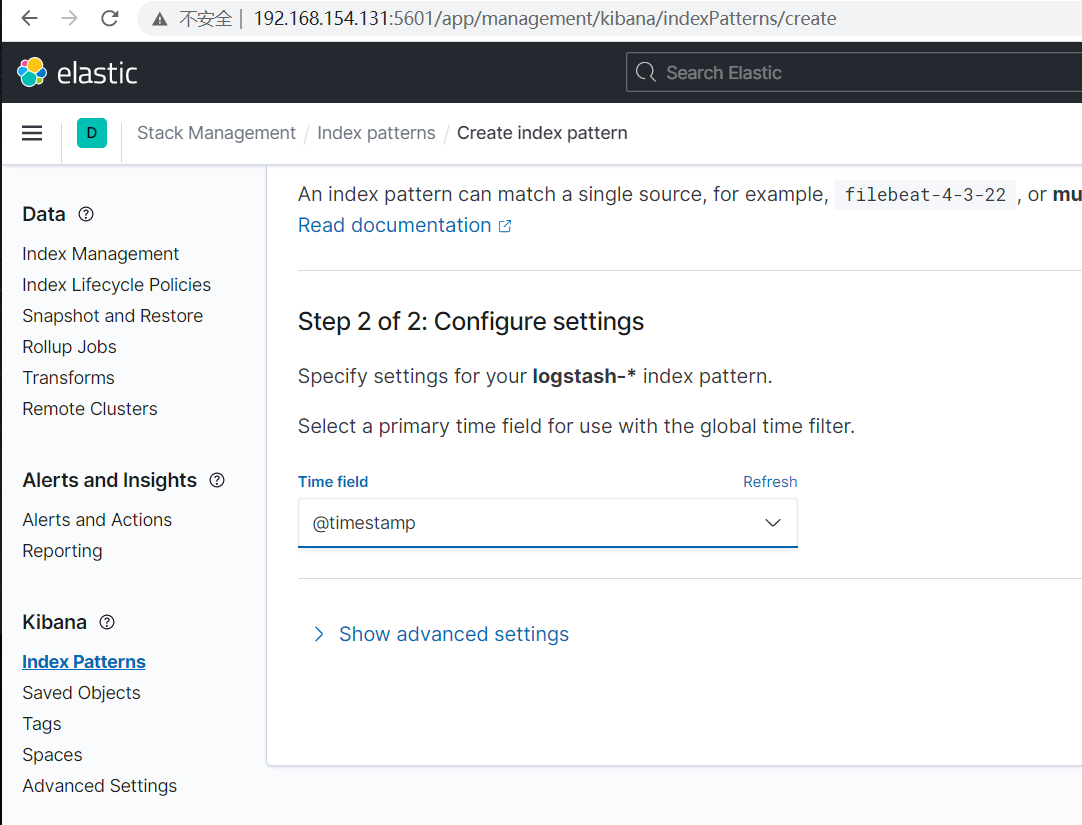

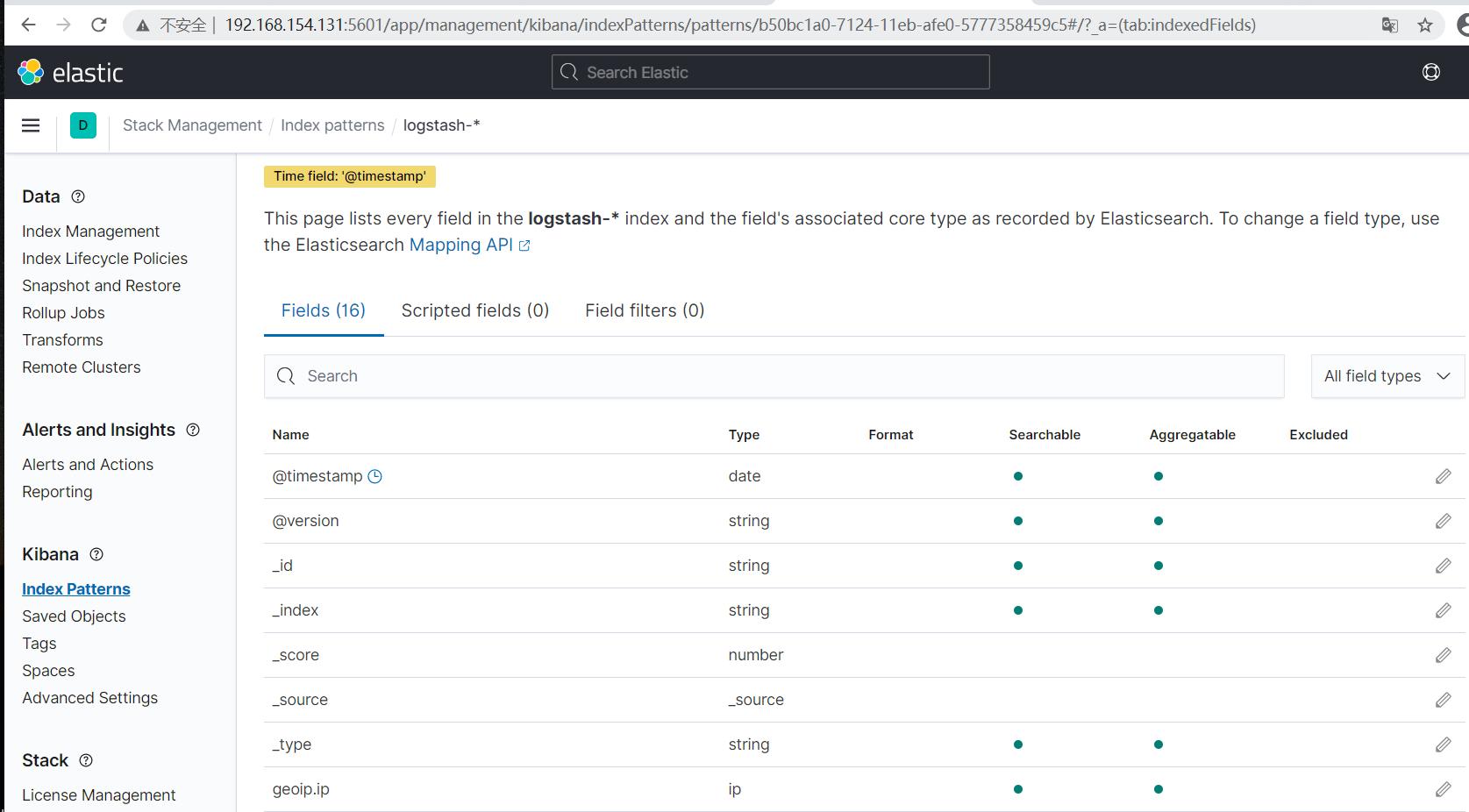

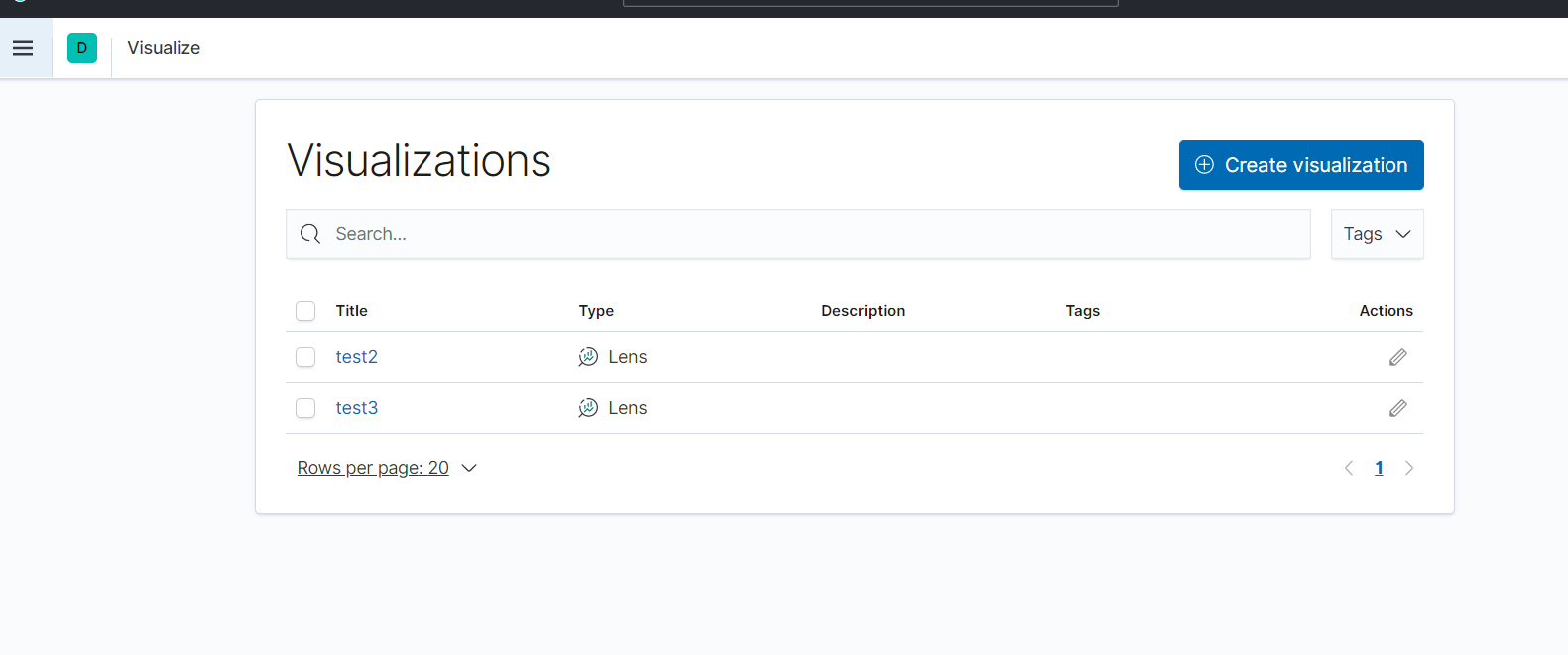

Kibana

1: Create an index pattern

num is deprecated

{

"host" => "ubuntu",

"message" => "192.168.154.1 - - [17/Feb/2021:05:16:58 -0800] \"GET / HTTP/1.1\" 200 612 \"-\" \"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/87.0.4280.88 Safari/537.36\"",

"@version" => "1",

"@timestamp" => 2021-02-17T13:30:58.088Z,

"path" => "/home/tom/Desktop/2/elk/access.log"

}

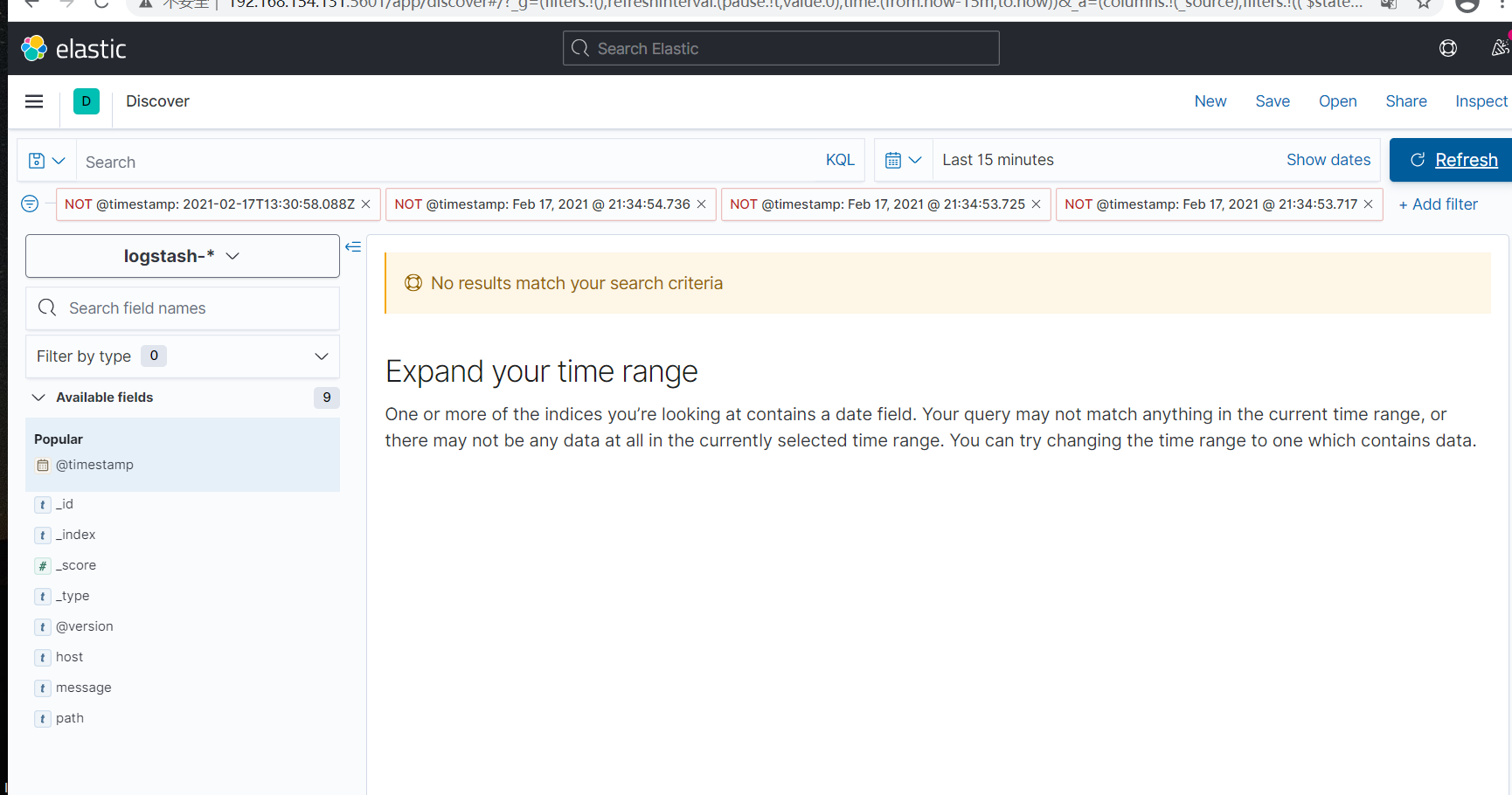

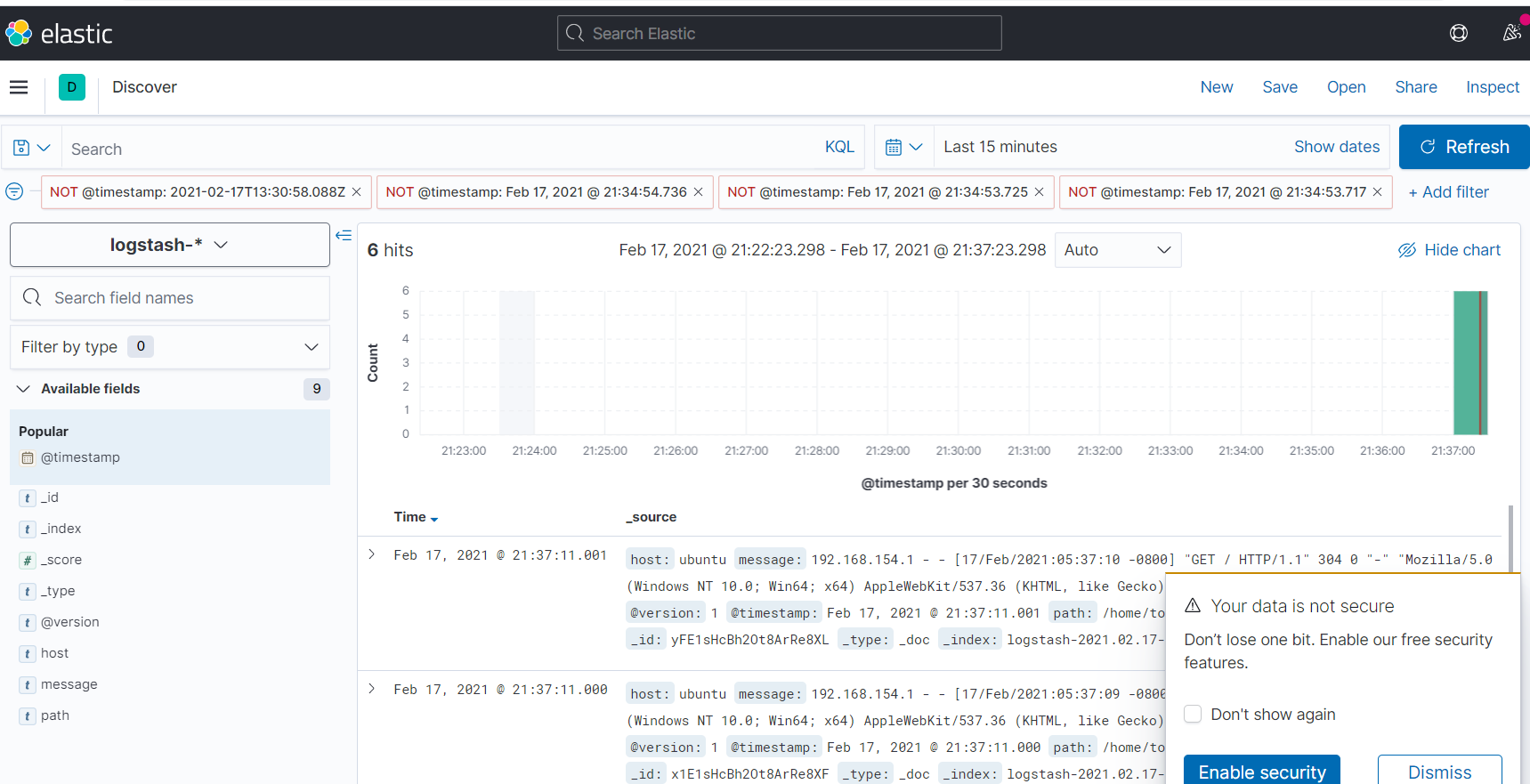

Discover (left-hand menu)

If no data is shown, visit Nginx a few times to generate access-log entries, then refresh the Discover page to see the data.
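Instead of refreshing the browser by hand, a small Python sketch can generate the requests. `hit` is a hypothetical helper, and the usage address is the Nginx host that appears in the access log above; substitute your own.

```python
from __future__ import annotations

import urllib.error
import urllib.request


def hit(url: str, times: int = 5, timeout: float = 5.0) -> list[int]:
    """Request `url` several times and return the HTTP status codes received."""
    statuses = []
    for _ in range(times):
        try:
            with urllib.request.urlopen(url, timeout=timeout) as resp:
                statuses.append(resp.status)
        except urllib.error.HTTPError as err:
            # 4xx/5xx answers are still written to the access log, so keep them
            statuses.append(err.code)
    return statuses
```

For example, `hit("http://192.168.154.131/", times=10)` should add ten lines to access.log, which the shipper then picks up.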

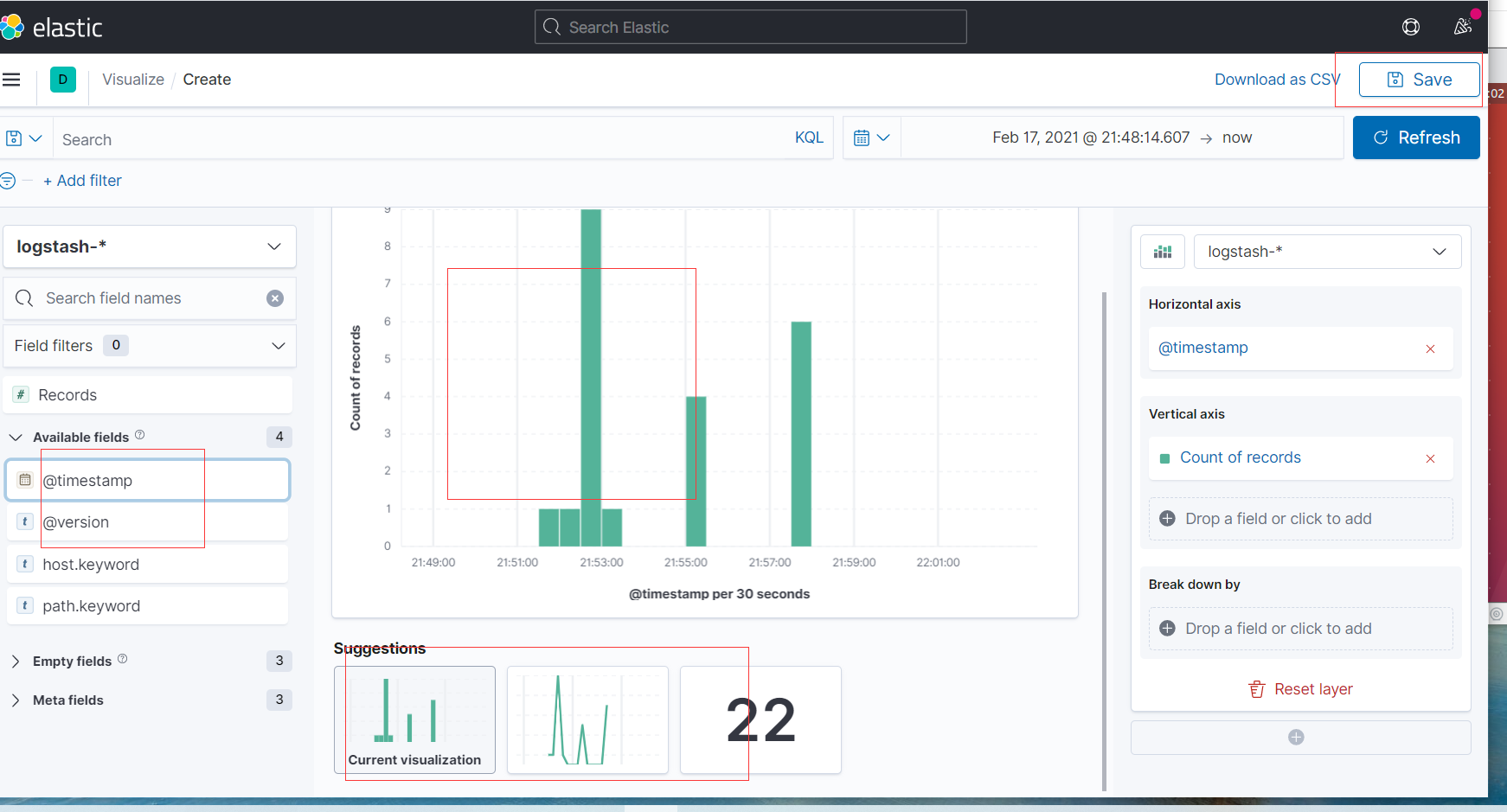

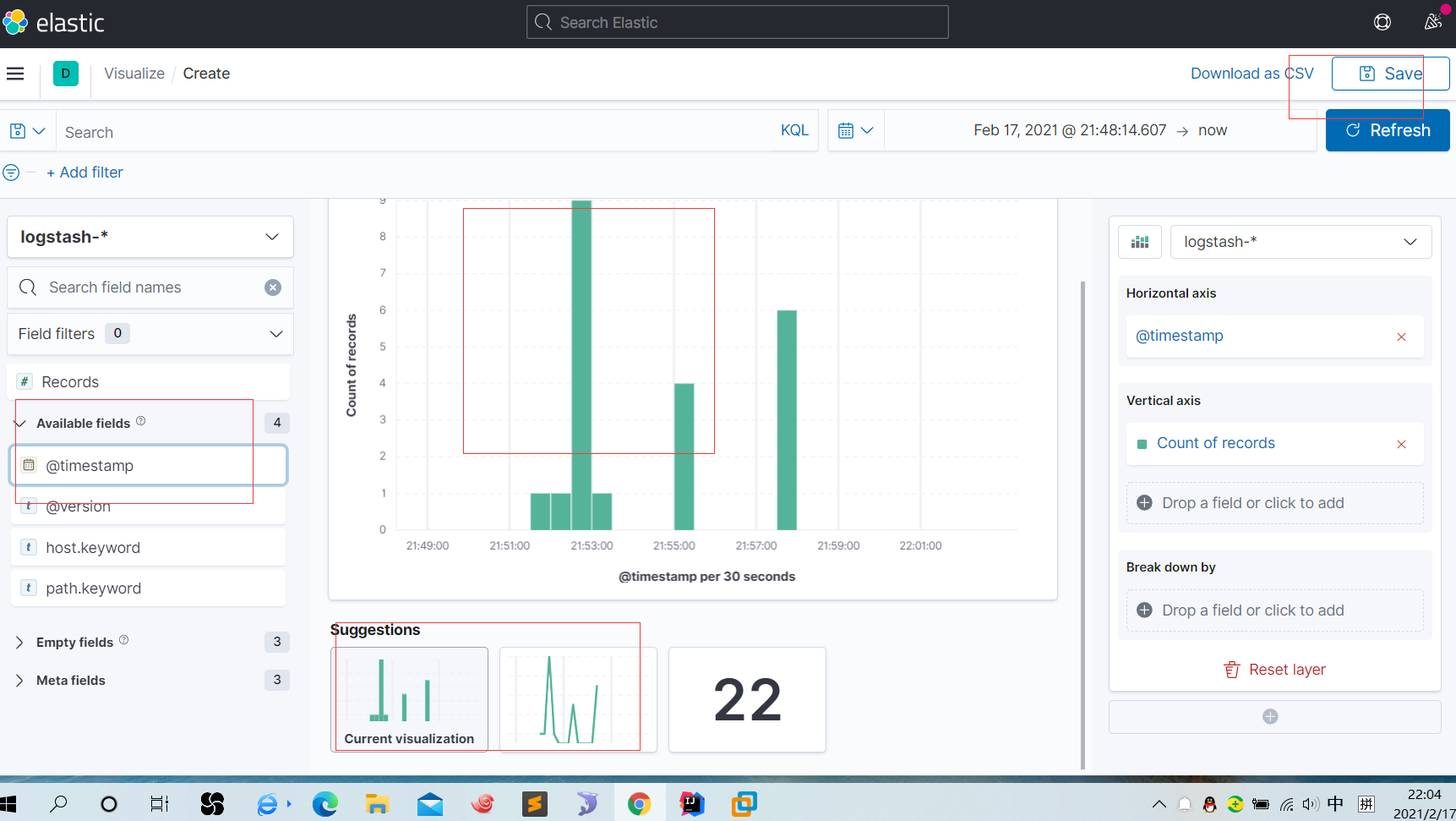

Create a chart in Visualize

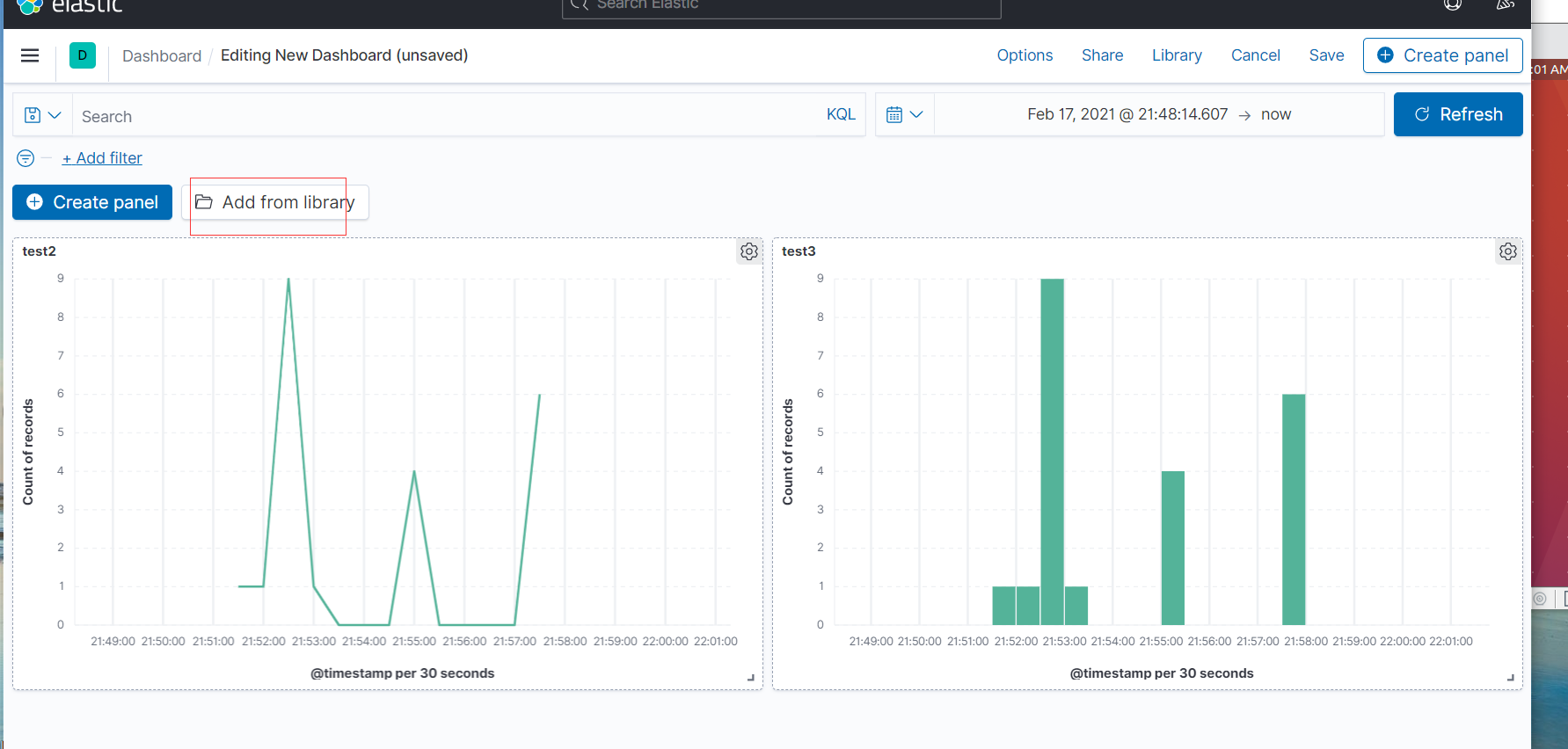

Add the chart to a Dashboard

Basic Lucene queries

Note: Lucene query syntax is not specific to Kibana. Kibana is only a web front end for data visualization; the actual search engine is Elasticsearch.
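Because the search runs in Elasticsearch, you can send the same Lucene-syntax query directly to Elasticsearch's `_search` API as a `query_string` query. The sketch below assumes Elasticsearch on localhost:9200 without authentication and the `logstash-*` indices created by the output configured in this article. `lucene_query_body` and `search` are hypothetical helpers. No filter splits the log line into fields here, so queries must target `message` (for example `message:404`).

```python
import json
import urllib.request


def lucene_query_body(query: str, size: int = 10) -> dict:
    """Request body that runs a Lucene-syntax query through a query_string query."""
    return {"size": size, "query": {"query_string": {"query": query}}}


def search(es_url: str, index: str, query: str, timeout: float = 10.0) -> dict:
    """POST the query to <es_url>/<index>/_search and return the parsed JSON response."""
    request = urllib.request.Request(
        f"{es_url}/{index}/_search",
        data=json.dumps(lucene_query_body(query)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.load(resp)
```

Usage: `search("http://localhost:9200", "logstash-*", "message:404")["hits"]["hits"]`.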

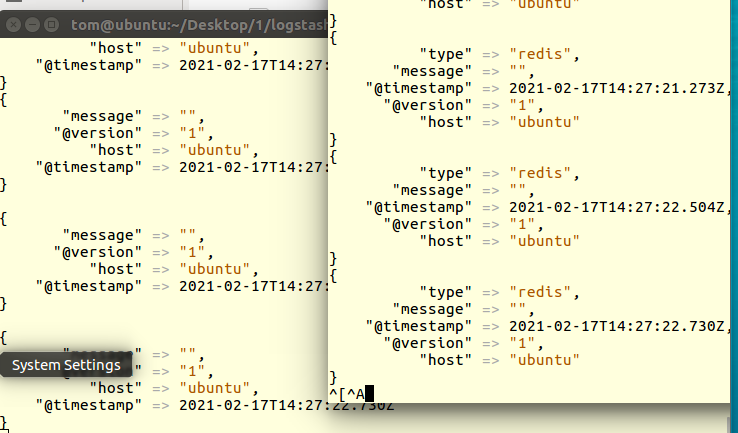

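Redis

Edit the configuration so that Logstash's input is Redis: this Logstash instance reads the log data from Redis and sends it on to Elasticsearch. Create logstash-indexer.conf:

vim logstash-indexer.conf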

input {

redis {

host => "localhost"

type => "redis"

data_type => "list"

key => "logstash"

}

}

output {

stdout {

codec => rubydebug

}

elasticsearch {

hosts=>["localhost:9200"]

index=>"logstash-%{+YYYY.MM.dd}"

}

}
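In `index=>"logstash-%{+YYYY.MM.dd}"`, the date comes from the event's `@timestamp` and is formatted in UTC. The events above show two consequences. First, without a date filter, `@timestamp` is the time Logstash read the line, not the Nginx time (13:30:58Z versus 05:16:58 -0800). Second, activity in the evening in a -0800 timezone lands in the next day's index. Here is a hypothetical Python sketch of the name calculation.

```python
from datetime import datetime, timezone


def daily_index(timestamp: datetime, prefix: str = "logstash-") -> str:
    """Index name for an event, mimicking %{+YYYY.MM.dd} applied to @timestamp in UTC."""
    if timestamp.tzinfo is None:
        raise ValueError("timestamp must be timezone-aware")
    return prefix + timestamp.astimezone(timezone.utc).strftime("%Y.%m.%d")
```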

Set Logstash's output to Redis so that the log data is written to Redis. Edit logstash-shipper.conf:

input {

stdin {}

file {

path => "/home/tom/Desktop/2/elk/access.log"

start_position => "beginning"

codec => multiline {

negate => true

pattern => '^\d'

what => 'previous'

}

}

}

output {

stdout {

codec => rubydebug

}

redis {

host => "localhost"

data_type => "list"

key => "logstash"

}

}
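With this pair of configurations, Redis acts as a buffer between the shipper and the indexer. Assuming the plugins behave as documented, the redis output with `data_type => "list"` appends events with RPUSH and the redis input pops them with BLPOP. Both plugins default to the json codec, so the `logstash` key behaves as a FIFO queue of JSON strings. The Python sketch below imitates this with an in-memory stand-in; `ListKey`, `ship`, and `drain` are hypothetical and not a Redis client. It also explains why `keys *` shows `logstash` while the indexer is not running: events simply accumulate in the list.

```python
import json
from collections import deque


class ListKey:
    """In-memory stand-in for one Redis list key (illustration only, not a Redis client)."""

    def __init__(self):
        self._items = deque()

    def rpush(self, value: str) -> int:
        """Shipper side: append to the tail and return the new length, like RPUSH."""
        self._items.append(value)
        return len(self._items)

    def lpop(self):
        """Indexer side: take from the head; BLPOP would block instead of returning None."""
        return self._items.popleft() if self._items else None

    def llen(self) -> int:
        return len(self._items)


def ship(events, key: ListKey) -> None:
    """Push each event as a JSON string, oldest first."""
    for event in events:
        key.rpush(json.dumps(event))


def drain(key: ListKey) -> list:
    """Pop and decode everything currently queued, preserving FIFO order."""
    events = []
    while (raw := key.lpop()) is not None:
        events.append(json.loads(raw))
    return events
```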

Check the configurations:

tom@ubuntu:~/Desktop/1/logstash-7.4.1/bin$ ./logstash -f ../config/logstash-shipper.conf --config.test_and_exit

Thread.exclusive is deprecated, use Thread::Mutex

Sending Logstash logs to /home/tom/Desktop/1/logstash-7.4.1/logs which is now configured via log4j2.properties

[2021-02-17T06:22:34,444][WARN ][logstash.config.source.multilocal] Ignoring the 'pipelines.yml' file because modules or command line options are specified

[2021-02-17T06:22:35,673][INFO ][org.reflections.Reflections] Reflections took 45 ms to scan 1 urls, producing 20 keys and 40 values

Configuration OK

[2021-02-17T06:22:37,343][INFO ][logstash.runner ] Using config.test_and_exit mode. Config Validation Result: OK. Exiting Logstash

tom@ubuntu:~/Desktop/1/logstash-7.4.1/bin$ ./logstash -f ../config/logstash-indexer.conf --config.test_and_exit

Thread.exclusive is deprecated, use Thread::Mutex

Sending Logstash logs to /home/tom/Desktop/1/logstash-7.4.1/logs which is now configured via log4j2.properties

[2021-02-17T06:23:54,695][WARN ][logstash.config.source.multilocal] Ignoring the 'pipelines.yml' file because modules or command line options are specified

[2021-02-17T06:23:55,964][INFO ][org.reflections.Reflections] Reflections took 37 ms to scan 1 urls, producing 20 keys and 40 values

Configuration OK

[2021-02-17T06:23:57,368][INFO ][logstash.runner ] Using config.test_and_exit mode. Config Validation Result: OK. Exiting Logstash

tom@ubuntu:~/Desktop/1/logstash-7.4.1/bin$ ./logstash -f ../config/logstash-shipper.conf

tom@ubuntu:~/Desktop/1/logstash-7.4.1/bin$ redis-cli

127.0.0.1:6379> keys *

1) "logstash"

Running several Logstash processes on the same host requires separate data directories, so here the --path.data option gives the second process its own directory.

tom@ubuntu:~/Desktop/1/logstash-7.4.1/bin$ ./logstash -f ../config/logstash-indexer.conf --path.data /home/tom/Desktop/1/logstash-7.4.1/data2
