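Elasticsearch X-Pack Authentication

Background

X-Pack for ES (short for Elasticsearch throughout this article) used to be a paid feature. Starting with version 6.8, however, ES made part of the X-Pack security features free, while some advanced features remain paid (such as single sign-on, Active Directory/LDAP authentication, and field- and document-level security).

Overview

This article covers deploying security authentication on ES 6.8.0.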

1. Deploying security authentication on a single ES node

(1) Download the ES 6.8.0 Linux package

(2) Upload it to the server and extract it

# Extract

sudo tar -zxvf elasticsearch-6.8.0.tar.gz

# Give the elasticsearch user ownership of the ES directory, because ES refuses to start as root

sudo chown -R elasticsearch elasticsearch-6.8.0

(3) Edit config/elasticsearch.yml and add the following settings

xpack.security.audit.enabled: true

xpack.security.enabled: true

xpack.security.transport.ssl.enabled: true
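The three settings can also be appended from a script. The following is a minimal sketch, assuming a bash shell; `set_es_setting` is a hypothetical helper name (not an Elasticsearch tool), and it only checks for a top-level `key:` at the start of a line.

```shell
#!/usr/bin/env bash
# Sketch: append "key: value" to a YAML file unless the key is already set.
# set_es_setting is a hypothetical helper, not part of Elasticsearch.
set_es_setting() {
  local file="$1" key="$2" value="$3"
  # index(...) == 1 means the line starts with the literal text "key:".
  if awk -v k="${key}:" 'index($0, k) == 1 { found = 1 } END { exit !found }' "$file" 2>/dev/null; then
    echo "skip: ${key} already present in ${file}"
  else
    printf '%s: %s\n' "$key" "$value" >> "$file"
    echo "added: ${key}"
  fi
}
```

For example, `set_es_setting config/elasticsearch.yml xpack.security.enabled true` adds the line once and leaves the file unchanged on later runs.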

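(4) Start ES

# Switch user

su elasticsearch

# Start ES

./bin/elasticsearch

(5) Verify

Open ${IP}:9200 in a browser; a dialog asking for a username and password appears. ES ships with several built-in users (elastic, kibana, logstash_system, and so on), but you have to set their passwords yourself before you can log in.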

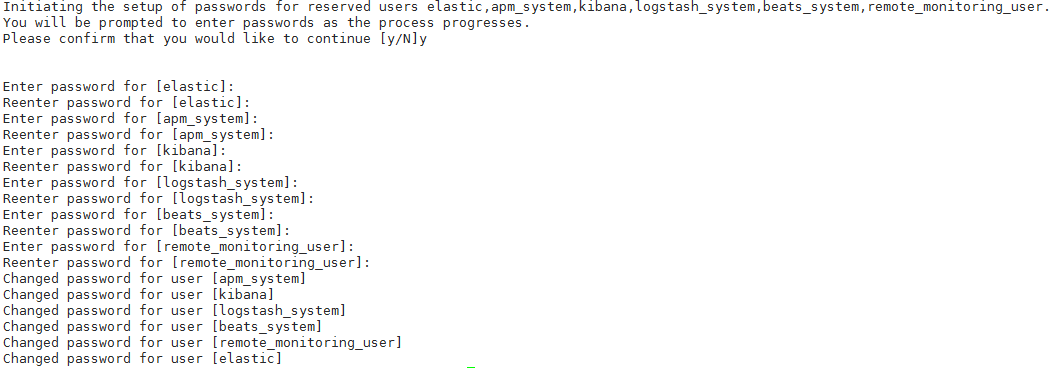

(6) Set passwords for the built-in users

./bin/elasticsearch-setup-passwords interactive

Once the passwords are set, log in as any one of these users to see the ES node information.
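The same check can be made from the command line instead of the browser. This is a minimal sketch, assuming `curl` is installed and the node listens on plain HTTP; `es_http_status` is a hypothetical helper name. An unauthenticated request is expected to return 401 and an authenticated one 200.

```shell
#!/usr/bin/env bash
# Sketch: print the HTTP status code returned by the ES root endpoint.
# es_http_status is a hypothetical helper; user and password are optional.
es_http_status() {
  local url="$1" user="${2:-}" pass="${3:-}"
  if [ -n "$user" ]; then
    # -u sends HTTP basic auth; -w prints only the status code.
    curl -s -o /dev/null -w '%{http_code}' -u "${user}:${pass}" "$url"
  else
    curl -s -o /dev/null -w '%{http_code}' "$url"
  fi
}
```

Usage would look like `es_http_status "http://${IP}:9200" elastic "<password>"`. Note that a password passed as an argument is visible in the process list, so this is only suitable for a quick manual check.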

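2. Deploying security authentication on an ES cluster

(1) Copy the single-node installation twice to create two more ES nodes: one master node and two data nodes (named worker0 and worker1 here)

cp -r elasticsearch-6.8.0 elasticsearch-6.8.0-worker0

cp -r elasticsearch-6.8.0 elasticsearch-6.8.0-worker1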

(2) Configuration files for the three nodes

# Node role

node.name: master

node.master: true

node.data: false

# Data and log directories

path.data: /opt/elasticsearch-6.8.0/data

path.logs: /opt/elasticsearch-6.8.0/logs

# HTTP and TCP ports: HTTP serves API requests, TCP carries traffic inside the cluster

http.port: 9200

transport.tcp.port: 9300

# Cluster nodes

discovery.zen.ping.unicast.hosts: ["xxx:9300","xxx:9301","xxx:9302"]

# X-Pack access authentication

xpack.security.audit.enabled: true

xpack.security.enabled: true

xpack.security.transport.ssl.enabled: true

# X-Pack authentication between cluster nodes

xpack.security.transport.ssl.verification_mode: certificate

xpack.security.transport.ssl.keystore.path: certificates.p12

xpack.security.transport.ssl.truststore.path: certificates.p12

# Node role

node.name: worker0

node.master: false

node.data: true

# Data and log directories

path.data: /opt/elasticsearch-6.8.0-worker0/data

path.logs: /opt/elasticsearch-6.8.0-worker0/logs

# HTTP and TCP ports: HTTP serves API requests, TCP carries traffic inside the cluster

http.port: 9201

transport.tcp.port: 9301

# Cluster nodes

discovery.zen.ping.unicast.hosts: ["xxx:9300","xxx:9301","xxx:9302"]

# X-Pack access authentication

xpack.security.audit.enabled: true

xpack.security.enabled: true

xpack.security.transport.ssl.enabled: true

# X-Pack authentication between cluster nodes

xpack.security.transport.ssl.verification_mode: certificate

xpack.security.transport.ssl.keystore.path: certificates.p12

xpack.security.transport.ssl.truststore.path: certificates.p12

# Node role

node.name: worker1

node.master: false

node.data: true

# Data and log directories

path.data: /opt/elasticsearch-6.8.0-worker1/data

path.logs: /opt/elasticsearch-6.8.0-worker1/logs

# HTTP and TCP ports: HTTP serves API requests, TCP carries traffic inside the cluster

http.port: 9202

transport.tcp.port: 9302

# Cluster nodes

discovery.zen.ping.unicast.hosts: ["xxx:9300","xxx:9301","xxx:9302"]

# X-Pack access authentication

xpack.security.audit.enabled: true

xpack.security.enabled: true

xpack.security.transport.ssl.enabled: true

# X-Pack authentication between cluster nodes

xpack.security.transport.ssl.verification_mode: certificate

xpack.security.transport.ssl.keystore.path: certificates.p12

xpack.security.transport.ssl.truststore.path: certificates.p12
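The three files differ only in node name, roles, ports, and directories, so they can be generated from one template. This is a rough sketch, assuming bash; `render_node_config` is a hypothetical helper, and the `xxx` host placeholders are kept exactly as in the configuration above.

```shell
#!/usr/bin/env bash
# Sketch: print an elasticsearch.yml body for one node of the cluster.
# Arguments: name, master (true/false), data (true/false),
#            HTTP port, transport port, installation directory.
render_node_config() {
  local name="$1" master="$2" data="$3" http_port="$4" tcp_port="$5" home="$6"
  cat <<EOF
node.name: ${name}
node.master: ${master}
node.data: ${data}
path.data: ${home}/data
path.logs: ${home}/logs
http.port: ${http_port}
transport.tcp.port: ${tcp_port}
discovery.zen.ping.unicast.hosts: ["xxx:9300","xxx:9301","xxx:9302"]
xpack.security.audit.enabled: true
xpack.security.enabled: true
xpack.security.transport.ssl.enabled: true
xpack.security.transport.ssl.verification_mode: certificate
xpack.security.transport.ssl.keystore.path: certificates.p12
xpack.security.transport.ssl.truststore.path: certificates.p12
EOF
}
```

For instance, `render_node_config worker0 false true 9201 9301 /opt/elasticsearch-6.8.0-worker0` prints the second configuration; redirecting the output into that node's config/elasticsearch.yml is left to the caller.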

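(3) Set up the certificate files

- Generate the CA file (this can be run from any node's directory)

./bin/elasticsearch-certutil ca

- Generate the node certificate file

./bin/elasticsearch-certutil cert --ca elastic-stack-ca.p12

- Copy the certificate file into the configured directory on each node (here, the config directory). The configuration above refers to certificates.p12, while certutil suggests elastic-certificates.p12 as the default output name, so either choose the matching name when generating the file or adjust the configured path.

- On each node, run the following commands to store the certificate password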

./bin/elasticsearch-keystore add xpack.security.transport.ssl.keystore.secure_password

./bin/elasticsearch-keystore add xpack.security.transport.ssl.truststore.secure_password
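When all three nodes live on the same machine, as in this setup, the copy step can be scripted. The following is a minimal sketch, assuming bash and that each installation directory already contains a config directory; `distribute_cert` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: copy one certificate file into the config directory of each node.
# Arguments: certificate file, then one or more installation directories.
distribute_cert() {
  local cert="$1"
  shift
  local home
  for home in "$@"; do
    # Stop at the first failure so a missing directory is noticed.
    cp "$cert" "${home}/config/" || return 1
    echo "copied $(basename "$cert") to ${home}/config"
  done
}
```

A call such as `distribute_cert certificates.p12 /opt/elasticsearch-6.8.0 /opt/elasticsearch-6.8.0-worker0 /opt/elasticsearch-6.8.0-worker1` covers the three nodes; the copied files still need to be readable by the elasticsearch user.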

(4) Start each node and log in to verify
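One way to confirm that every node joined the cluster is to count the entries returned by the `_cat/nodes` API. This is a sketch only, assuming `curl` is available and HTTP is unencrypted; `cluster_node_count` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: print how many nodes the cluster reports.
# Arguments: base URL, username, password.
cluster_node_count() {
  local url="$1" user="$2" pass="$3"
  # h=name limits the output to one node name per line;
  # grep -c . counts the non-empty lines.
  curl -s -u "${user}:${pass}" "${url}/_cat/nodes?h=name" | grep -c .
}
```

For the cluster described here, `cluster_node_count "http://${IP}:9200" elastic "<password>"` would be expected to print 3 once the master and both workers are up.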

3. Connecting Kibana to ES

(1) Download Kibana

(2) Kibana configuration

server.name: "xxxx"

# Connect to the ES master node

elasticsearch.url: "http://${ip}:9200"

# Username and password used for authentication

elasticsearch.username: "kibana"

elasticsearch.password: "xxxx"

# Kibana's default port

server.port: 5601

server.host: "0.0.0.0"

# Monitoring (when this setting is enabled, CPU usage shows as N/A in monitoring; setting it to false fixes that)

xpack.monitoring.ui.container.elasticsearch.enabled: true

# Enable authentication

xpack.security.enabled: true

(3) Start Kibana

./bin/kibana

(4) Access

${ip}:5601

4. Connecting Logstash to ES

(2) Logstash logstash.yml configuration

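# X-Pack authentication (the user below must exist in ES; the built-in monitoring user is named logstash_system)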

xpack.monitoring.enabled: true

xpack.monitoring.elasticsearch.username: "logstash"

xpack.monitoring.elasticsearch.password: "xxx"

xpack.monitoring.elasticsearch.url: ["${ip}:9200"]

(3) Logstash pipelines.yml pipeline configuration

- pipeline.id: xxx-pipeline

  path.config: "/usr/share/logstash/pipelines/xxx.conf"

  pipeline.workers: 10

  pipeline.batch.size: 500

(4) Connecting the Logstash pipeline to ES

elasticsearch {

  action => "index"

  hosts => ["${ip}:9200"]

  index => "xxx"

  # Authentication

  user => "logstash"

  password => "xxx"

  document_type => "xxx"

  document_id => "xxx"

  template => "/usr/share/logstash/es-template/xxx.json"

  template_name => "xxx"

  template_overwrite => true

}

(5) Run Logstash

# No config file needs to be specified; the configuration in pipelines.yml is used by default

./bin/logstash

5. Error log

(1) Error: with the same id but is a different node instance

Cause: different ES nodes are using the same data directory.
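A quick way to spot this misconfiguration is to compare the `path.data` values across the nodes' configuration files. The following is a minimal sketch, assuming bash and that each file sets `path.data` as a single top-level line; `find_duplicate_data_paths` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: print every path.data value that occurs in more than one file.
# Arguments: one or more elasticsearch.yml files.
find_duplicate_data_paths() {
  # -h suppresses file names so identical values compare equal.
  grep -h '^path\.data:' "$@" 2>/dev/null \
    | sed -e 's/^path\.data:[[:space:]]*//' -e 's/[[:space:]]*$//' \
    | sort \
    | uniq -d
}
```

Running it over the three config/elasticsearch.yml files should print nothing when every node has its own data directory.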

(2)Caused by: java.security.UnrecoverableKeyException: failed to decrypt safe contents entry: javax.crypto.BadPaddingException: Given final block not properly padded. Such issues can arise if a bad key is used during decryp

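Cause: there is a problem with the certificate (for example, the password stored with elasticsearch-keystore may not match the one set when the certificate was generated). Regenerating the certificate resolves it.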

6. References

(1) ES authentication: https://blog.csdn.net/qq_37461349/article/details/103047795

(2) ES authentication: https://blog.csdn.net/shen12138/article/details/107016991/

(3) Connecting Logstash to an authenticated ES: https://www.cnblogs.com/wueryuan/p/13523833.html