RDD Exercise: Word Frequency Count

1. Read the text file to create the RDD lines

lines = sc.textFile('file:///home/hadoop/word.txt')

lines.collect()

2. Split each line of text into individual words (words)

words=lines.flatMap(lambda line:line.split())

words.collect()
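To see why this step uses flatMap rather than map, here is a plain-Python sketch of the two behaviours that runs without Spark. The sample lines are made up for illustration and are not the contents of word.txt.

```python
from itertools import chain

# Hypothetical sample input; it stands in for the lines of word.txt.
sample_lines = ["Hello Spark", "hello RDD world"]

# map-like: one output element per input line, so the result is a list of lists.
mapped = [line.split() for line in sample_lines]

# flatMap-like: the per-line lists are flattened into one list of words.
flat_mapped = list(chain.from_iterable(line.split() for line in sample_lines))

print(mapped)
print(flat_mapped)
```

With map, every later step would have to handle a list per line; flatMap yields one word per element, which is what the filtering and counting steps expect.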

3. Convert everything to lowercase

words=lines.flatMap(lambda line:line.lower().split())

words.collect()

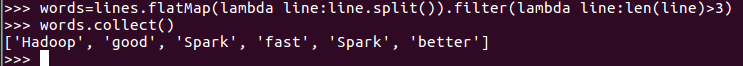

4. Remove words shorter than 3 characters

words=lines.flatMap(lambda line:line.split()).filter(lambda word:len(word)>=3)

words.collect()

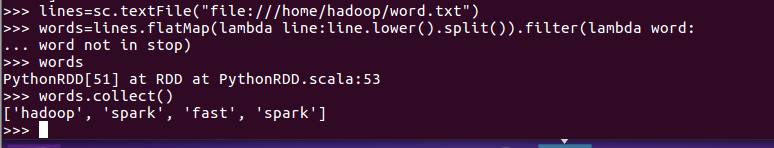

5. Remove stop words

(1) Prepare the stop-word list:

lines = sc.textFile('file:///home/hadoop/stopwords.txt')

stop = lines.flatMap(lambda line : line.split()).collect()

stop

(2) Remove the stop words:

lines=sc.textFile("file:///home/hadoop/word.txt")

words=lines.flatMap(lambda line:line.lower().split()).filter(lambda word:word not in stop)

words

words.collect()
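The filter above checks every word against the list stop. Membership tests on a list scan it element by element, so converting the stop words to a set is a common alternative. The following is a plain-Python sketch of the same filtering logic; the stop words, the text, and the helper name remove_stop_words are hypothetical and chosen only for illustration.

```python
# Hypothetical stop words and text, for illustration only.
stop_words = {"the", "a", "of"}
text_lines = ["The history of a Spark RDD", "A lineage of THE data"]


def remove_stop_words(lines, stop):
    """Lowercase each line, split on whitespace, and drop words found in `stop`."""
    return [w for line in lines for w in line.lower().split() if w not in stop]


kept = remove_stop_words(text_lines, stop_words)
print(kept)
```

In the Spark version the same idea would be to build the set once on the driver (for example `stop = set(stop)`) before using it inside the lambda.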

6. Convert to key-value pairs with map()

wordskv=words.map(lambda word:(word.lower(),1))

wordskv.collect()

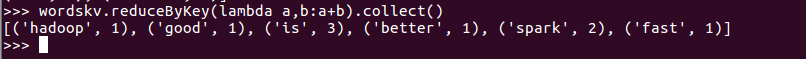

7. Count word frequencies with reduceByKey()

wordskv.reduceByKey(lambda a,b:a+b).collect()
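As a rough illustration of what the map() and reduceByKey() steps compute, here is a plain-Python sketch that runs without Spark. The helper reduce_by_key and the word list are hypothetical; Spark performs the same per-key combination, but distributed across partitions.

```python
def reduce_by_key(pairs, func):
    """Combine the values of each key with `func`, mimicking RDD.reduceByKey."""
    result = {}
    for key, value in pairs:
        # First occurrence stores the value; later ones are merged with func.
        result[key] = func(result[key], value) if key in result else value
    return result


# Hypothetical words, standing in for the filtered RDD `words`.
sample_words = ["spark", "rdd", "spark", "hadoop", "rdd", "spark"]

# Step 6: pair every word with the count 1.
pairs = [(w, 1) for w in sample_words]

# Step 7: add up the counts of identical words.
counts = reduce_by_key(pairs, lambda a, b: a + b)
print(sorted(counts.items()))
```

Because the combining function only ever sees two values for the same key, it should be associative and commutative, which addition is.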

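II. Student course scores: groupByKey()

-- Summarize all students and their scores by course

1. Split each record into fields with map()

2. Build key-value pairs with map()

3. Group by key

4. Output the grouped result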

1. Read the university computer science department's score dataset to create an RDD

lines = sc.textFile('file:///home/hadoop/chapter4-data01.txt')

lines.take(6)

2. Summarize students and scores by course

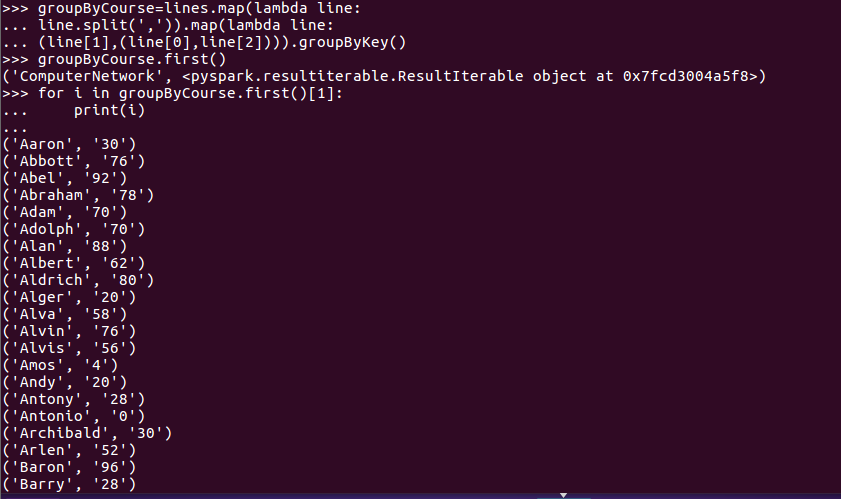

groupByCourse=lines.map(lambda line:line.split(',')).map(lambda line:(line[1],(line[0],line[2]))).groupByKey()

groupByCourse.first()

for i in groupByCourse.first()[1]:
    print(i)
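The grouping above can be mirrored in plain Python to make the shape of the result easier to see. This is only a sketch: the records are made up, and the "name,course,score" layout is inferred from the indices used in the map() call (line[0], line[1], line[2]).

```python
from collections import defaultdict

# Hypothetical records in the assumed "name,course,score" layout.
records = ["Alice,Database,80", "Bob,Database,90", "Alice,Python,75"]


def group_by_course(lines):
    """Return {course: [(name, score), ...]}, like map() followed by groupByKey()."""
    groups = defaultdict(list)
    for line in lines:
        name, course, score = line.split(",")
        groups[course].append((name, score))  # score stays a string, as in the source
    return dict(groups)


by_course = group_by_course(records)
for course, members in by_course.items():
    print(course, members)
```

In Spark, groupByKey() returns an iterable per key rather than a list, which is why the session above loops over groupByCourse.first()[1] to print the members.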

III. Student course scores: reduceByKey()

-- Number of students enrolled in each course

lines=sc.textFile('file:///home/hadoop/chapter4-data01.txt')

reduceByClass=lines.map(lambda line:line.split(',')).map(lambda line:(line[1],1))

reduceByClass.reduceByKey(lambda a,b:a+b).collect()

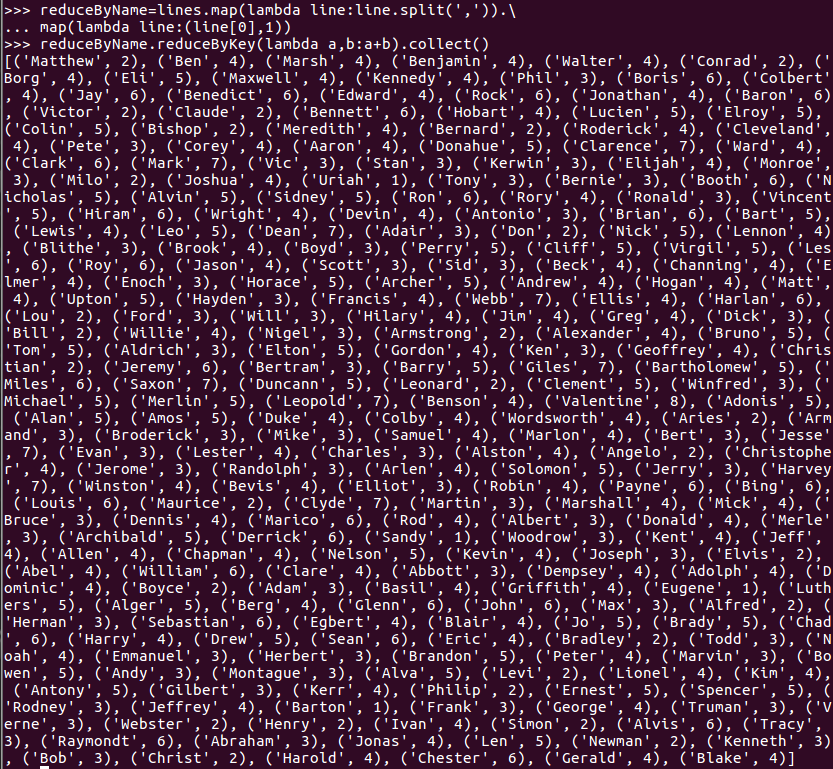

-- Number of courses taken by each student

reduceByName=lines.map(lambda line:line.split(',')).map(lambda line:(line[0],1))

reduceByName.reduceByKey(lambda a,b:a+b).collect()
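Both counts follow the same pattern: pick one field as the key, pair it with 1, and sum per key. A plain-Python sketch with collections.Counter shows the expected shape of the two results; the records are hypothetical and assume the "name,course,score" layout implied by the indices used above.

```python
from collections import Counter

# Hypothetical records in the assumed "name,course,score" layout.
records = ["Alice,Database,80", "Bob,Database,90", "Alice,Python,75"]
fields = [line.split(",") for line in records]

# Key on the course (index 1): number of students enrolled in each course.
students_per_course = Counter(f[1] for f in fields)

# Key on the student (index 0): number of courses taken by each student.
courses_per_student = Counter(f[0] for f in fields)

print(dict(students_per_course))
print(dict(courses_per_student))
```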